Explainable Deep Learning Approach for Multi-Class Brain Magnetic Resonance Imaging Tumor Classification and Localization Using Gradient-Weighted Class Activation Mapping

Abstract

1. Introduction

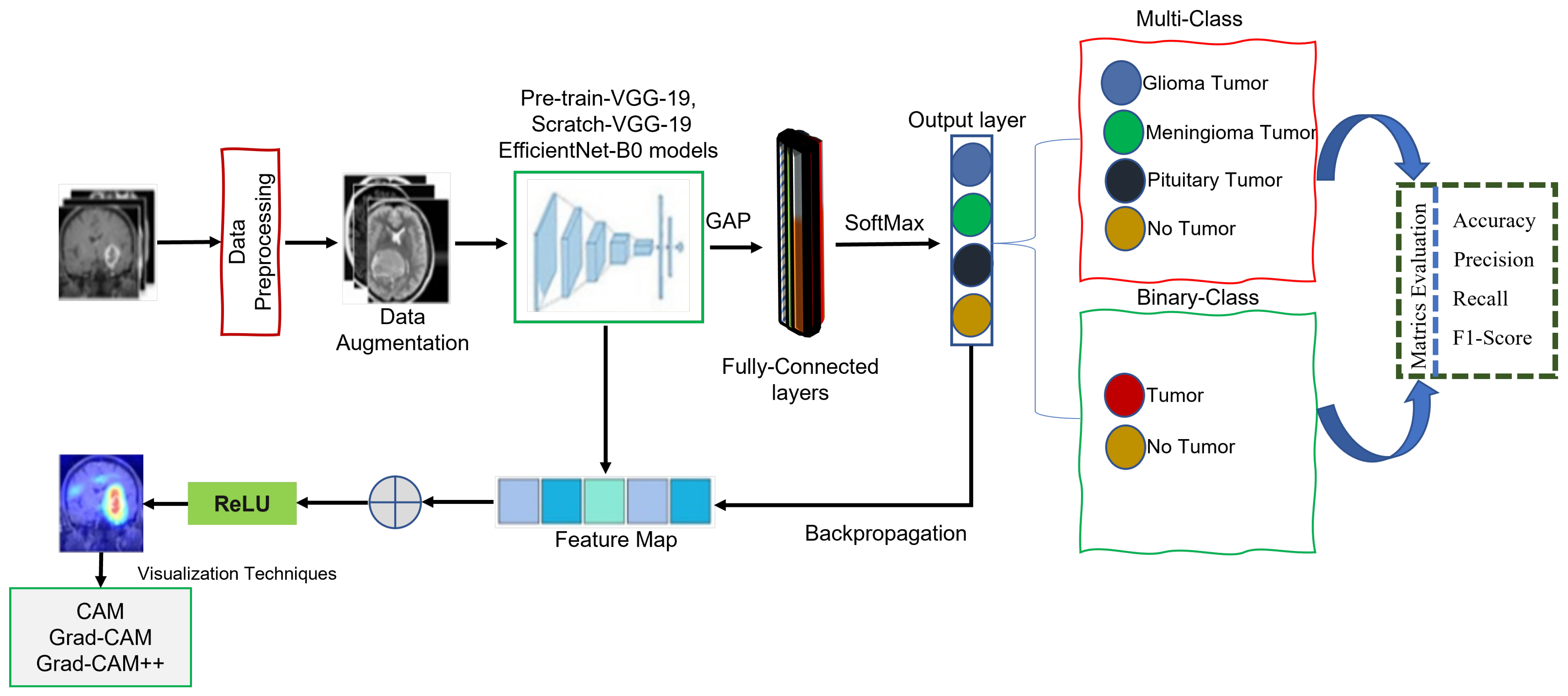

- We present a novel, lightweight, class-discriminative localization approach that applies CAM, Grad-CAM, and Grad-CAM++ to three models: a pre-trained VGG-19, a VGG-19 trained from scratch, and EfficientNet. The approach improves visual interpretability for multi-class and binary-class brain MRI tumor classification without any change to the model architecture. We assess its effectiveness through heatmap localization and model fidelity while maintaining high classification performance.

- We evaluate the models in the proposed framework on precision, recall, F1-score, accuracy, and heatmap results, and we recommend the best model for both classification and localization.

- We run CAM, Grad-CAM, and Grad-CAM++ evaluations to give human readers understandable justifications for predictions on BT-MRI images, for both the multi-class and the binary-class architectures.

- We evaluate the performance and applicability of the proposed method in practical settings using a cross-dataset evaluation.
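
The core Grad-CAM computation named in the contributions above can be illustrated with a small sketch. This is a toy, pure-Python version under stated assumptions, not the implementation used in this work: the function name `grad_cam`, the nested-list layout, and the example numbers are all hypothetical. It follows the standard Grad-CAM recipe, in which each feature map is weighted by the spatial average of the class-score gradients, the weighted maps are summed, and a ReLU keeps only positive evidence. Plain CAM differs in that the weights come from the dense layer that follows global average pooling rather than from gradients.

```python
def grad_cam(activations, gradients):
    """Toy Grad-CAM over K feature maps given as nested H x W lists.

    activations[k][i][j]: value of feature map k at position (i, j).
    gradients[k][i][j]:   d(class score) / d(activations[k][i][j]).
    """
    height, width = len(activations[0]), len(activations[0][0])

    # Channel weights: spatial average of the gradients, one per feature map.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]

    # Weighted sum of the feature maps, followed by ReLU.
    cam = [
        [max(0.0, sum(w * a[i][j] for w, a in zip(weights, activations)))
         for j in range(width)]
        for i in range(height)
    ]

    # Scale to [0, 1] so the map can be rendered as a heatmap.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return weights, cam


# Hypothetical 2-channel, 2 x 2 example (values chosen for illustration only).
activations = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 3.0], [1.0, 0.0]]]
gradients = [[[0.5, 0.5], [0.5, 0.5]], [[-0.25, -0.25], [-0.25, -0.25]]]
weights, heatmap = grad_cam(activations, gradients)
print(weights)   # [0.5, -0.25]
print(heatmap)   # [[0.5, 0.0], [0.0, 1.0]]
```

In this toy case the second channel has a negative weight, so the location where only that channel fires is suppressed by the ReLU. In practice the low-resolution map would be upsampled to the input image size before being overlaid on the MRI slice.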

2. Related Works

3. Materials and Methods

3.1. Dataset

3.2. Data Pre-Processing

3.3. Data Augmentation

3.4. Pre-Trained VGG-19

Global Average Pooling

3.5. Scratch VGG-19

3.6. EfficientNet-B0

3.7. Model Explainability

4. Experimental Results and Analysis

4.1. Hyperparameter Tuning

4.2. Classification Performance on the BT-MRI-4C and BT-MRI-2C Datasets

4.3. Validation of Model Performance on a Cross-Dataset

4.4. Model Explainability Results

4.5. Comparison with State-of-the-Art Deep Learning Models

5. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| XDL | Explainable deep learning |
| MRI | Magnetic resonance imaging |
| VGG | Visual geometry group |
| BT | Brain tumor |
| CAM | Class activation mapping |
| Grad-CAM | Gradient-weighted class activation mapping |
| Grad-CAM++ | Gradient-weighted class activation mapping plus plus |
| CAD | Computer-aided diagnosis |
| DL | Deep learning |
| AI | Artificial intelligence |
| CNN | Convolutional neural network |
| DCNN | Deep convolutional neural network |
| CRM | Class-selective relevance mapping |
| 1D | One-dimensional |
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| XRAI | Improved indicators via regions |
| GAP | Global average pooling |
| GI-T | Glioma tumor |
| Mi-T | Meningioma tumor |
| Pi-T | Pituitary tumor |

References

- Amin, J.; Sharif, M.; Yasmin, M.; Fernandes, S.L. A distinctive approach in brain tumor detection and classification using MRI. Pattern Recognit. Lett. 2020, 139, 118–127. [Google Scholar] [CrossRef]

- Amin, J.; Sharif, M.; Yasmin, M.; Fernandes, S.L. Big data analysis for brain tumor detection: Deep convolutional neural networks. Future Gener. Comput. Syst. 2018, 87, 290–297. [Google Scholar] [CrossRef]

- Nazir, M.; Shakil, S.; Khurshid, K. Role of deep learning in brain tumor detection and classification (2015 to 2020): A review. Comput. Med. Imaging Graph. 2021, 91, 101940. [Google Scholar] [CrossRef] [PubMed]

- Tiwari, A.; Srivastava, S.; Pant, M. Brain tumor segmentation and classification from magnetic resonance images: Review of selected methods from 2014 to 2019. Pattern Recognit. Lett. 2020, 131, 244–260. [Google Scholar] [CrossRef]

- Mohan, G.; Subashini, M.M. MRI based medical image analysis: Survey on brain tumor grade classification. Biomed. Signal Process. Control 2018, 39, 139–161. [Google Scholar] [CrossRef]

- Ayadi, W.; Charfi, I.; Elhamzi, W.; Atri, M. Brain tumor classification based on hybrid approach. Vis. Comput. 2022, 38, 107–117. [Google Scholar] [CrossRef]

- Siegel, R.L.; Miller, K.D.; Wagle, N.S.; Jemal, A. Cancer statistics, 2023. CA Cancer J. Clin. 2023, 73, 17–48. [Google Scholar] [CrossRef] [PubMed]

- Dandıl, E.; Çakıroğlu, M.; Ekşi, Z. Computer-aided diagnosis of malign and benign brain tumors on MR images. In Proceedings of the ICT Innovations 2014: World of Data, Ohrid, Macedonia, 9–12 September 2014; Springer: Cham, Switzerland, 2015; pp. 157–166. [Google Scholar]

- Tu, L.; Luo, Z.; Wu, Y.L.; Huo, S.; Liang, X.J. Gold-based nanomaterials for the treatment of brain cancer. Cancer Biol. Med. 2021, 18, 372. [Google Scholar] [CrossRef]

- Miner, R.C. Image-guided neurosurgery. J. Med. Imaging Radiat. Sci. 2017, 48, 328–335. [Google Scholar] [CrossRef]

- Paul, J.; Sivarani, T. Computer aided diagnosis of brain tumor using novel classification techniques. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 7499–7509. [Google Scholar] [CrossRef]

- Abd El-Wahab, B.S.; Nasr, M.E.; Khamis, S.; Ashour, A.S. BTC-fCNN: Fast Convolution Neural Network for Multi-class Brain Tumor Classification. Health Inf. Sci. Syst. 2023, 11, 3. [Google Scholar] [CrossRef] [PubMed]

- Khan, M.S.I.; Rahman, A.; Debnath, T.; Karim, M.R.; Nasir, M.K.; Band, S.S.; Mosavi, A.; Dehzangi, I. Accurate brain tumor detection using deep convolutional neural network. Comput. Struct. Biotechnol. J. 2022, 20, 4733–4745. [Google Scholar] [CrossRef] [PubMed]

- Wijethilake, N.; Meedeniya, D.; Chitraranjan, C.; Perera, I.; Islam, M.; Ren, H. Glioma survival analysis empowered with data engineering—A survey. IEEE Access 2021, 9, 43168–43191. [Google Scholar] [CrossRef]

- Yang, G.; Ye, Q.; Xia, J. Unbox the black-box for the medical explainable AI via multi-modal and multi-centre data fusion: A mini-review, two showcases and beyond. Inf. Fusion 2022, 77, 29–52. [Google Scholar] [CrossRef] [PubMed]

- O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep learning vs. traditional computer vision. In Advances in Computer Vision, Proceedings of the 2019 Computer Vision Conference (CVC), Las Vegas, NV, USA, 2–3 May 2019; Springer: Cham, Switzerland, 2020; Volume 1, pp. 128–144. [Google Scholar]

- Huang, P.; He, P.; Tian, S.; Ma, M.; Feng, P.; Xiao, H.; Mercaldo, F.; Santone, A.; Qin, J. A ViT-AMC network with adaptive model fusion and multiobjective optimization for interpretable laryngeal tumor grading from histopathological images. IEEE Trans. Med. Imaging 2022, 42, 15–28. [Google Scholar] [CrossRef] [PubMed]

- Huang, P.; Tan, X.; Zhou, X.; Liu, S.; Mercaldo, F.; Santone, A. FABNet: Fusion attention block and transfer learning for laryngeal cancer tumor grading in P63 IHC histopathology images. IEEE J. Biomed. Health Inform. 2021, 26, 1696–1707. [Google Scholar] [CrossRef]

- Gulum, M.A.; Trombley, C.M.; Kantardzic, M. A review of explainable deep learning cancer detection models in medical imaging. Appl. Sci. 2021, 11, 4573. [Google Scholar] [CrossRef]

- Ahmed Salman, S.; Lian, Z.; Saleem, M.; Zhang, Y. Functional Connectivity Based Classification of ADHD Using Different Atlases. In Proceedings of the 2020 IEEE International Conference on Progress in Informatics and Computing (PIC), Shanghai, China, 18–20 December 2020; pp. 62–66. [Google Scholar] [CrossRef]

- Shah, H.A.; Saeed, F.; Yun, S.; Park, J.H.; Paul, A.; Kang, J.M. A robust approach for brain tumor detection in magnetic resonance images using finetuned efficientnet. IEEE Access 2022, 10, 65426–65438. [Google Scholar] [CrossRef]

- Asif, S.; Yi, W.; Ain, Q.U.; Hou, J.; Yi, T.; Si, J. Improving effectiveness of different deep transfer learning-based models for detecting brain tumors from MR images. IEEE Access 2022, 10, 34716–34730. [Google Scholar] [CrossRef]

- Amin, J.; Sharif, M.; Gul, N.; Yasmin, M.; Shad, S.A. Brain tumor classification based on DWT fusion of MRI sequences using convolutional neural network. Pattern Recognit. Lett. 2020, 129, 115–122. [Google Scholar] [CrossRef]

- Rehman, A.; Khan, M.A.; Saba, T.; Mehmood, Z.; Tariq, U.; Ayesha, N. Microscopic brain tumor detection and classification using 3D CNN and feature selection architecture. Microsc. Res. Tech. 2021, 84, 133–149. [Google Scholar] [CrossRef] [PubMed]

- Wijethilake, N.; Islam, M.; Meedeniya, D.; Chitraranjan, C.; Perera, I.; Ren, H. Radiogenomics of glioblastoma: Identification of radiomics associated with molecular subtypes. In Machine Learning in Clinical Neuroimaging and Radiogenomics in Neuro-Oncology, Proceedings of the Third International Workshop, MLCN 2020, and Second International Workshop, RNO-AI 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, 4–8 October 2020; Proceedings 3; Springer: Cham, Switzerland, 2020; pp. 229–239. [Google Scholar]

- Wijethilake, N.; Meedeniya, D.; Chitraranjan, C.; Perera, I. Survival prediction and risk estimation of Glioma patients using mRNA expressions. In Proceedings of the 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), Cincinnati, OH, USA, 26–28 October 2020; pp. 35–42. [Google Scholar]

- Salman, S.A.; Zakir, A.; Takahashi, H. Cascaded deep graphical convolutional neural network for 2D hand pose estimation. In Proceedings of the International Workshop on Advanced Imaging Technology (IWAIT) 2023; Nakajima, M., Kim, J.G., deok Seo, K., Yamasaki, T., Guo, J.M., Lau, P.Y., Kemao, Q., Eds.; International Society for Optics and Photonics, SPIE: San Diego, CA, USA, 2023; Volume 12592, p. 1259215. [Google Scholar] [CrossRef]

- Singh, V.K. Segmentation and Classification of Multimodal Medical Images Based on Generative Adversarial Learning and Convolutional Neural Networks. Ph.D. Thesis, Universitat Rovira i Virgili, Tarragona, Spain, 2020. [Google Scholar]

- Abdelhafiz, D.; Yang, C.; Ammar, R.; Nabavi, S. Deep convolutional neural networks for mammography: Advances, challenges and applications. BMC Bioinform. 2019, 20, 281. [Google Scholar] [CrossRef] [PubMed]

- Song, Y.; Rana, M.N.; Qu, J.; Liu, C. A Survey of Deep Learning Based Methods in Medical Image Processing. Curr. Signal Transduct. Ther. 2021, 16, 101–114. [Google Scholar] [CrossRef]

- Kang, J.; Ullah, Z.; Gwak, J. MRI-based brain tumor classification using ensemble of deep features and machine learning classifiers. Sensors 2021, 21, 2222. [Google Scholar] [CrossRef] [PubMed]

- Deepak, S.; Ameer, P. Brain tumor classification using deep CNN features via transfer learning. Comput. Biol. Med. 2019, 111, 103345. [Google Scholar] [CrossRef] [PubMed]

- Erhan, D.; Manzagol, P.A.; Bengio, Y.; Bengio, S.; Vincent, P. The difficulty of training deep architectures and the effect of unsupervised pre-training. In Proceedings of the Artificial Intelligence and Statistics, Clearwater Beach, FL, USA, 16–18 April 2009; pp. 153–160. [Google Scholar]

- Azizpour, H.; Sharif Razavian, A.; Sullivan, J.; Maki, A.; Carlsson, S. From generic to specific deep representations for visual recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 36–45. [Google Scholar]

- Penatti, O.A.; Nogueira, K.; Dos Santos, J.A. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 44–51. [Google Scholar]

- Salman, S.A.; Zakir, A.; Takahashi, H. SDFPoseGraphNet: Spatial Deep Feature Pose Graph Network for 2D Hand Pose Estimation. Sensors 2023, 23, 9088. [Google Scholar] [CrossRef]

- Badža, M.M.; Barjaktarović, M.Č. Classification of brain tumors from MRI images using a convolutional neural network. Appl. Sci. 2020, 10, 1999. [Google Scholar] [CrossRef]

- Mzoughi, H.; Njeh, I.; Wali, A.; Slima, M.B.; BenHamida, A.; Mhiri, C.; Mahfoudhe, K.B. Deep multi-scale 3D convolutional neural network (CNN) for MRI gliomas brain tumor classification. J. Digit. Imaging 2020, 33, 903–915. [Google Scholar] [CrossRef]

- Ayadi, W.; Elhamzi, W.; Charfi, I.; Atri, M. Deep CNN for brain tumor classification. Neural Process. Lett. 2021, 53, 671–700. [Google Scholar] [CrossRef]

- Abiwinanda, N.; Hanif, M.; Hesaputra, S.T.; Handayani, A.; Mengko, T.R. Brain tumor classification using convolutional neural network. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering 2018, Prague, Czech Republic, 3–8 June 2018; Springer: Cham, Switzerland, 2019; Volume 1, pp. 183–189. [Google Scholar]

- Sultan, H.H.; Salem, N.M.; Al-Atabany, W. Multi-classification of brain tumor images using deep neural network. IEEE Access 2019, 7, 69215–69225. [Google Scholar] [CrossRef]

- Çinar, A.; Yildirim, M. Detection of tumors on brain MRI images using the hybrid convolutional neural network architecture. Med. Hypotheses 2020, 139, 109684. [Google Scholar] [CrossRef]

- Rehman, A.; Naz, S.; Razzak, M.I.; Akram, F.; Imran, M. A deep learning-based framework for automatic brain tumors classification using transfer learning. Circuits Syst. Signal Process. 2020, 39, 757–775. [Google Scholar] [CrossRef]

- Mehrotra, R.; Ansari, M.; Agrawal, R.; Anand, R. A transfer learning approach for AI-based classification of brain tumors. Mach. Learn. Appl. 2020, 2, 100003. [Google Scholar] [CrossRef]

- Rahim, T.; Usman, M.A.; Shin, S.Y. A survey on contemporary computer-aided tumor, polyp, and ulcer detection methods in wireless capsule endoscopy imaging. Comput. Med. Imaging Graph. 2020, 85, 101767. [Google Scholar] [CrossRef] [PubMed]

- Rai, H.M.; Chatterjee, K. 2D MRI image analysis and brain tumor detection using deep learning CNN model LeU-Net. Multimed. Tools Appl. 2021, 80, 36111–36141. [Google Scholar] [CrossRef]

- Intagorn, S.; Pinitkan, S.; Panmuang, M.; Rodmorn, C. Helmet Detection System for Motorcycle Riders with Explainable Artificial Intelligence Using Convolutional Neural Network and Grad-CAM. In Proceedings of the International Conference on Multi-disciplinary Trends in Artificial Intelligence, Hyderabad, India, 17–19 November 2022; Springer: Cham, Switzerland, 2022; pp. 40–51. [Google Scholar]

- Dworak, D.; Baranowski, J. Adaptation of Grad-CAM method to neural network architecture for LiDAR pointcloud object detection. Energies 2022, 15, 4681. [Google Scholar] [CrossRef]

- Lucas, M.; Lerma, M.; Furst, J.; Raicu, D. Visual explanations from deep networks via Riemann-Stieltjes integrated gradient-based localization. arXiv 2022, arXiv:2205.10900. [Google Scholar]

- Chen, H.; Gomez, C.; Huang, C.M.; Unberath, M. Explainable medical imaging AI needs human-centered design: Guidelines and evidence from a systematic review. NPJ Digit. Med. 2022, 5, 156. [Google Scholar] [CrossRef]

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115. [Google Scholar] [CrossRef]

- Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable deep learning models in medical image analysis. J. Imaging 2020, 6, 52. [Google Scholar] [CrossRef]

- Lévy, D.; Jain, A. Breast mass classification from mammograms using deep convolutional neural networks. arXiv 2016, arXiv:1612.00542. [Google Scholar]

- Van Molle, P.; De Strooper, M.; Verbelen, T.; Vankeirsbilck, B.; Simoens, P.; Dhoedt, B. Visualizing convolutional neural networks to improve decision support for skin lesion classification. In Understanding and Interpreting Machine Learning in Medical Image Computing Applications, Proceedings of the First International Workshops, MLCN 2018, DLF 2018, and iMIMIC 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 16–20 September 2018; Proceedings 1; Springer: Cham, Switzerland, 2018; pp. 115–123. [Google Scholar]

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929. [Google Scholar]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar]

- Eitel, F.; Ritter, K.; Alzheimer’s Disease Neuroimaging Initiative (ADNI). Testing the robustness of attribution methods for convolutional neural networks in MRI-based Alzheimer’s disease classification. In Interpretability of Machine Intelligence in Medical Image Computing and Multimodal Learning for Clinical Decision Support, Proceedings of the Second International Workshop, iMIMIC 2019, and 9th International Workshop, ML-CDS 2019, Held in Conjunction with MICCAI 2019, Shenzhen, China, 17 October 2019; Proceedings 9; Springer: Cham, Switzerland, 2019; pp. 3–11. [Google Scholar]

- Young, K.; Booth, G.; Simpson, B.; Dutton, R.; Shrapnel, S. Deep neural network or dermatologist? In Interpretability of Machine Intelligence in Medical Image Computing and Multimodal Learning for Clinical Decision Support, Proceedings of the Second International Workshop, iMIMIC 2019, and 9th International Workshop, ML-CDS 2019, Held in Conjunction with MICCAI 2019, Shenzhen, China, 17 October 2019; Proceedings 9; Springer: Cham, Switzerland, 2019; pp. 48–55. [Google Scholar]

- Aslam, F.; Farooq, F.; Amin, M.N.; Khan, K.; Waheed, A.; Akbar, A.; Javed, M.F.; Alyousef, R.; Alabdulijabbar, H. Applications of gene expression programming for estimating compressive strength of high-strength concrete. Adv. Civ. Eng. 2020, 2020, 8850535. [Google Scholar] [CrossRef]

- Hacıefendioğlu, K.; Demir, G.; Başağa, H.B. Landslide detection using visualization techniques for deep convolutional neural network models. Nat. Hazards 2021, 109, 329–350. [Google Scholar] [CrossRef]

- Jiang, P.T.; Zhang, C.B.; Hou, Q.; Cheng, M.M.; Wei, Y. Layercam: Exploring hierarchical class activation maps for localization. IEEE Trans. Image Process. 2021, 30, 5875–5888. [Google Scholar] [CrossRef] [PubMed]

- Meng, Q.; Wang, H.; He, M.; Gu, J.; Qi, J.; Yang, L. Displacement prediction of water-induced landslides using a recurrent deep learning model. Eur. J. Environ. Civ. Eng. 2023, 27, 2460–2474. [Google Scholar] [CrossRef]

- Vinogradova, K.; Dibrov, A.; Myers, G. Towards interpretable semantic segmentation via gradient-weighted class activation mapping (student abstract). In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13943–13944. [Google Scholar]

- Kim, I.; Rajaraman, S.; Antani, S. Visual interpretation of convolutional neural network predictions in classifying medical image modalities. Diagnostics 2019, 9, 38. [Google Scholar] [CrossRef]

- Yang, C.; Rangarajan, A.; Ranka, S. Visual explanations from deep 3D convolutional neural networks for Alzheimer’s disease classification. In Proceedings of the AMIA Annual Symposium Proceedings, San Francisco, CA, USA, 3–7 November 2018; American Medical Informatics Association: Bethesda, MD, USA, 2018; Volume 2018, p. 1571. [Google Scholar]

- Öztürk, T.; Katar, O. A Deep Learning Model Collaborates with an Expert Radiologist to Classify Brain Tumors from MR Images. Turk. J. Sci. Technol. 2022, 17, 203–210. [Google Scholar] [CrossRef]

- Han, S.S.; Kim, M.S.; Lim, W.; Park, G.H.; Park, I.; Chang, S.E. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. J. Investig. Dermatol. 2018, 138, 1529–1538. [Google Scholar] [CrossRef]

- Holzinger, A.; Carrington, A.; Müller, H. Measuring the quality of explanations: The system causability scale (SCS) comparing human and machine explanations. KI-Künstl. Intell. 2020, 34, 193–198. [Google Scholar] [CrossRef]

- Arun, N.; Gaw, N.; Singh, P.; Chang, K.; Aggarwal, M.; Chen, B.; Hoebel, K.; Gupta, S.; Patel, J.; Gidwani, M.; et al. Assessing the trustworthiness of saliency maps for localizing abnormalities in medical imaging. Radiol. Artif. Intell. 2021, 3, e200267. [Google Scholar] [CrossRef]

- Kapishnikov, A.; Bolukbasi, T.; Viégas, F.; Terry, M. Xrai: Better attributions through regions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4948–4957. [Google Scholar]

- Ullah, Z.; Farooq, M.U.; Lee, S.H.; An, D. A hybrid image enhancement based brain MRI images classification technique. Med. Hypotheses 2020, 143, 109922. [Google Scholar] [CrossRef]

- Bodapati, J.D.; Shaik, N.S.; Naralasetti, V.; Mundukur, N.B. Joint training of two-channel deep neural network for brain tumor classification. Signal Image Video Process. 2021, 15, 753–760. [Google Scholar] [CrossRef]

- Yazdan, S.A.; Ahmad, R.; Iqbal, N.; Rizwan, A.; Khan, A.N.; Kim, D.H. An efficient multi-scale convolutional neural network based multi-class brain MRI classification for SaMD. Tomography 2022, 8, 1905–1927. [Google Scholar] [CrossRef]

- Díaz-Pernas, F.J.; Martínez-Zarzuela, M.; Antón-Rodríguez, M.; González-Ortega, D. A deep learning approach for brain tumor classification and segmentation using a multiscale convolutional neural network. Healthcare 2021, 9, 153. [Google Scholar] [CrossRef]

- Kibriya, H.; Masood, M.; Nawaz, M.; Nazir, T. Multiclass classification of brain tumors using a novel CNN architecture. Multimed. Tools Appl. 2022, 81, 29847–29863. [Google Scholar] [CrossRef]

- Lizzi, F.; Scapicchio, C.; Laruina, F.; Retico, A.; Fantacci, M.E. Convolutional neural networks for breast density classification: Performance and explanation insights. Appl. Sci. 2021, 12, 148. [Google Scholar] [CrossRef]

- Saporta, A.; Gui, X.; Agrawal, A.; Pareek, A.; Truong, S.Q.; Nguyen, C.D.; Ngo, V.D.; Seekins, J.; Blankenberg, F.G.; Ng, A.Y.; et al. Benchmarking saliency methods for chest X-ray interpretation. Nat. Mach. Intell. 2022, 4, 867–878. [Google Scholar] [CrossRef]

- Zhang, Y.; Hong, D.; McClement, D.; Oladosu, O.; Pridham, G.; Slaney, G. Grad-CAM helps interpret the deep learning models trained to classify multiple sclerosis types using clinical brain magnetic resonance imaging. J. Neurosci. Methods 2021, 353, 109098. [Google Scholar] [CrossRef] [PubMed]

- Bhuvaji, S.; Kadam, A.; Bhumkar, P.; Dedge, S.; Kanchan, S. Brain Tumor Classification (MRI) Dataset. 2020. Available online: https://www.kaggle.com/datasets/sartajbhuvaji/brain-tumor-classification-mri/ (accessed on 23 October 2023).

- Hamada, A. Br35h: Brain Tumor Detection 2020. 2020. Available online: https://www.kaggle.com/datasets/ahmedhamada0/brain-tumor-detection (accessed on 23 October 2023).

- Rosebrock, A. Finding Extreme Points in Contours with OpenCV. 2016. Available online: https://pyimagesearch.com/2016/04/11/finding-extreme-points-in-contours-with-opencv/ (accessed on 23 October 2023).

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Thrun, S.; Saul, L.K.; Schölkopf, B. Advances in Neural Information Processing Systems 16: Proceedings of the 2003 Conference; MIT Press: Cambridge, MA, USA, 2004; Volume 16. [Google Scholar]

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. [Google Scholar]

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar]

- Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. Mnasnet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2820–2828. [Google Scholar]

- Nickparvar, M. Brain Tumor MRI Dataset. 2021. Available online: https://www.kaggle.com/datasets/masoudnickparvar/brain-tumor-mri-dataset/ (accessed on 3 March 2021).

- Sekhar, A.; Biswas, S.; Hazra, R.; Sunaniya, A.K.; Mukherjee, A.; Yang, L. Brain tumor classification using fine-tuned GoogLeNet features and machine learning algorithms: IoMT enabled CAD system. IEEE J. Biomed. Health Inform. 2021, 26, 983–991. [Google Scholar] [CrossRef] [PubMed]

- Kakarla, J.; Isunuri, B.V.; Doppalapudi, K.S.; Bylapudi, K.S.R. Three-class classification of brain magnetic resonance images using average-pooling convolutional neural network. Int. J. Imaging Syst. Technol. 2021, 31, 1731–1740. [Google Scholar] [CrossRef]

- Saurav, S.; Sharma, A.; Saini, R.; Singh, S. An attention-guided convolutional neural network for automated classification of brain tumor from MRI. Neural Comput. Appl. 2023, 35, 2541–2560. [Google Scholar] [CrossRef]

- Iytha Sridhar, R.; Kamaleswaran, R. Lung Segment Anything Model (LuSAM): A Prompt-integrated Framework for Automated Lung Segmentation on ICU Chest X-Ray Images. TechRxiv 2023. Available online: https://www.techrxiv.org/articles/preprint/Lung_Segment_Anything_Model_LuSAM_A_Prompt-integrated_Framework_for_Automated_Lung_Segmentation_on_ICU_Chest_X-Ray_Images/22788959 (accessed on 23 October 2023).

- Ramesh, D.B.; Iytha Sridhar, R.; Upadhyaya, P.; Kamaleswaran, R. Lung Grounded-SAM (LuGSAM): A Novel Framework for Integrating Text prompts to Segment Anything Model (SAM) for Segmentation Tasks of ICU Chest X-Rays. TechRxiv 2023. Available online: https://www.techrxiv.org/articles/preprint/Lung_Grounded-SAM_LuGSAM_A_Novel_Framework_for_Integrating_Text_prompts_to_Segment_Anything_Model_SAM_for_Segmentation_Tasks_of_ICU_Chest_X-Rays/24224761 (accessed on 23 October 2023).

- Zhao, C.; Xiang, S.; Wang, Y.; Cai, Z.; Shen, J.; Zhou, S.; Zhao, D.; Su, W.; Guo, S.; Li, S. Context-aware network fusing transformer and V-Net for semi-supervised segmentation of 3D left atrium. Expert Syst. Appl. 2023, 214, 119105. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A. Vision Transformers for Lung Segmentation on CXR Images. SN Comput. Sci. 2023, 4, 414. [Google Scholar] [CrossRef]

- Shelke, A.; Inamdar, M.; Shah, V.; Tiwari, A.; Hussain, A.; Chafekar, T.; Mehendale, N. Chest X-ray classification using deep learning for automated COVID-19 screening. SN Comput. Sci. 2021, 2, 300. [Google Scholar] [CrossRef]

- Hussein, H.I.; Mohammed, A.O.; Hassan, M.M.; Mstafa, R.J. Lightweight deep CNN-based models for early detection of COVID-19 patients from chest X-ray images. Expert Syst. Appl. 2023, 223, 119900. [Google Scholar] [CrossRef]

- Asif, S.; Wenhui, Y.; Amjad, K.; Jin, H.; Tao, Y.; Jinhai, S. Detection of COVID-19 from chest X-ray images: Boosting the performance with convolutional neural network and transfer learning. Expert Syst. 2023, 40, e13099. [Google Scholar] [CrossRef] [PubMed]

- Rizwan, M.; Shabbir, A.; Javed, A.R.; Shabbir, M.; Baker, T.; Obe, D.A.J. Brain tumor and glioma grade classification using Gaussian convolutional neural network. IEEE Access 2022, 10, 29731–29740. [Google Scholar] [CrossRef]

- Chen, L.; Bentley, P.; Mori, K.; Misawa, K.; Fujiwara, M.; Rueckert, D. DRINet for medical image segmentation. IEEE Trans. Med. Imaging 2018, 37, 2453–2462. [Google Scholar] [CrossRef] [PubMed]

- Kermany, D.; Zhang, K.; Goldbaum, M. Large dataset of labeled optical coherence tomography (oct) and chest x-ray images. Mendeley Data 3 2018. [Google Scholar] [CrossRef]

| Refs. | Method | Classification | Mode of Explanation |
|---|---|---|---|
| [71] | Feedforward neural network and DWT | Binary-class classification | Not used |
| [72] | CNN | Three-class BT classification | Not used |
| [73] | Multiscale CNN (MSCNN) | Four-class BT classification | Not used |
| [74] | Multi-pathway CNN | Three-class BT classification | Not used |
| [75] | CNN | Multi-class brain tumor classification | Not used |
| [76] | CNN with Grad-CAM | X-ray breast cancer mammogram image | Heatmap |
| [77] | CNN | Chest X-ray image | Heatmap |
| [78] | CNN | Multiple sclerosis MRI image | Heatmap |

| Tumor Type | No. of MRI Images | MRI Views |
|---|---|---|
| Glioma tumor | 926 | Axial, coronal, sagittal |
| Meningioma tumor | 937 | Axial, coronal, sagittal |
| Pituitary tumor | 901 | Axial, coronal, sagittal |
| No tumor | 501 | |
| Total number of images | 3265 | |

| Tumor Type | No. of MRI Images | MRI Views |
|---|---|---|
| Tumor | 1500 | Axial, coronal, sagittal |
| Normal | 1500 | |
| Total number of images | 3000 | |

| Brain MRI Dataset | Training | Validation | Testing | Total |
|---|---|---|---|---|
| MRI-4C dataset | 2613 | 326 | 326 | 3265 |
| MRI-2C dataset | 2400 | 300 | 300 | 3000 |
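
The split sizes in the table above are consistent with an 80/10/10 partition in which the validation and test sets are rounded down and the remainder goes to training. That reading is an inference from the counts, not a procedure stated in the source, and the helper name `split_counts` is hypothetical. A minimal sketch of the arithmetic:

```python
def split_counts(total, val_percent=10, test_percent=10):
    """Return (train, val, test) sizes for a dataset of `total` images.

    Assumption: validation and test sizes are floored, and training
    receives whatever is left, so the three parts always sum to `total`.
    """
    val = total * val_percent // 100
    test = total * test_percent // 100
    train = total - val - test
    return train, val, test


print(split_counts(3265))  # (2613, 326, 326), the MRI-4C row
print(split_counts(3000))  # (2400, 300, 300), the MRI-2C row
```

Integer arithmetic is used on purpose so that the result does not depend on floating-point rounding.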

| Parameters | Values |
|---|---|
| Horizontal flip | True |
| Vertical flip | True |
| Range scale | True |
| Zoom range | [0.1, 1.0] |
| Width shift range | 0.2 |
| Height shift range | 0.2 |
| Shear range | 0.2 |
| Brightness range | [0.2, 1.0] |
| Random rotation | [0–90] |
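
As an illustration, the augmentation parameters above can be written as a keyword-argument dictionary. The sketch below assumes the settings map onto the argument names of Keras' `ImageDataGenerator`; the source does not say which library was used, so this mapping is an assumption. Two entries are interpretations rather than facts from the table: "Range scale: True" is read as pixel rescaling by 1/255, and "Random rotation [0–90]" is read as `rotation_range=90`.

```python
# Hypothetical mapping of the augmentation table to Keras-style keyword arguments.
augmentation_kwargs = {
    "horizontal_flip": True,
    "vertical_flip": True,
    "rescale": 1.0 / 255,            # assumption: "Range scale: True" means 0-255 -> 0-1
    "zoom_range": [0.1, 1.0],
    "width_shift_range": 0.2,        # fraction of image width
    "height_shift_range": 0.2,       # fraction of image height
    "shear_range": 0.2,
    "brightness_range": [0.2, 1.0],
    "rotation_range": 90,            # assumption: Keras samples angles in [-90, 90] degrees
}

# If TensorFlow is installed, the dictionary could be used as:
#   from tensorflow.keras.preprocessing.image import ImageDataGenerator
#   datagen = ImageDataGenerator(**augmentation_kwargs)

for name, value in augmentation_kwargs.items():
    print(f"{name} = {value}")
```

Note that `rotation_range=90` in Keras draws rotations from both directions, which is slightly broader than the one-sided range listed in the table.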

| Brain MRI Dataset | Without Augmentation | Augmented Data |
|---|---|---|
| MRI-4C dataset | 3265 | 6410 |
| MRI-2C dataset | 3000 | 5600 |

| Sr. No. | Hyperparameters | Pre-Trained-VGG-19 | Scratch-VGG-19 |
|---|---|---|---|
| 1 | Number of epochs | 30 | 30 |
| 2 | Batch size | 32 | 32 |
| 3 | Image size | 224 × 224 | 224 × 224 |
| 4 | Optimizers | Adam, RMSprop | Adam, RMSprop |
| 5 | Activation function | SoftMax, ReLU | SoftMax, ReLU |
| 6 | Learning rate | 0.0001 | 0.0001 |
| 7 | Dropout rate | 0.25 | 0.25 |

| DL Model | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |
|---|---|---|---|---|
| Pre-trained-VGG-19 (BT-MRI-4C) | 99.89 | 99.72 | 99.81 | 99.92 |
| Scratch-VGG-19 (BT-MRI-4C) | 97.69 | 98.95 | 98.39 | 98.94 |
| EfficientNet (BT-MRI-4C) | 99.51 | 98.69 | 99.74 | 99.81 |
| Pre-trained-VGG-19 (BT-MRI-2C) | 98.59 | 99.32 | 98.99 | 99.85 |
| Scratch-VGG-19 (BT-MRI-2C) | 95.71 | 95.19 | 96.09 | 96.86 |
| EfficientNet (BT-MRI-2C) | 98.01 | 99.06 | 98.81 | 98.65 |

| DL Model | Tumor Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Pre-trained-VGG-19 (BT-MRI-4C) | Glioma | 100 | 99.89 | 100 |
| | Meningioma | 96.0 | 99.92 | 98.59 |
| | Pituitary | 99.8 | 100 | 99.91 |
| | No tumor | 100 | 100 | 100 |
| Scratch-VGG-19 (BT-MRI-4C) | Glioma | 96.0 | 94.00 | 93.00 |
| | Meningioma | 79.0 | 96.51 | 92.97 |
| | Pituitary | 88.0 | 92.80 | 89.71 |
| | No tumor | 98.0 | 97.00 | 95.68 |
| EfficientNet (BT-MRI-4C) | Glioma | 100 | 98.88 | 99.78 |
| | Meningioma | 95.00 | 98.10 | 98.63 |
| | Pituitary | 97.59 | 99.35 | 98.89 |
| | No tumor | 99.90 | 100 | 99.73 |
| Pre-trained-VGG-19 (BT-MRI-2C) | Tumor | 99.89 | 99.72 | 98.74 |
| | Normal | 100 | 98.39 | 99.40 |
| EfficientNet (BT-MRI-2C) | Tumor | 99.70 | 98.00 | 97.75 |
| | Normal | 99.79 | 99.10 | 97.38 |

| Tumor Type | Precision | Recall | F1-Score |
|---|---|---|---|
| Glioma tumor | 1.00 | 1.00 | 1.00 |
| Meningioma tumor | 1.00 | 1.00 | 1.00 |
| Pituitary tumor | 1.00 | 1.00 | 1.00 |
| No tumor | 1.00 | 1.00 | 1.00 |
| Average (%) | 100 | 100 | 100 |

| Tumor Type | Precision | Recall | F1-Score |

|---|---|---|---|

| Glioma tumor | 0.97 | 1.00 | 0.98 |

| Meningioma tumor | 0.96 | 0.90 | 0.93 |

| Pituitary tumor | 1.00 | 1.00 | 1.00 |

| No tumor | 0.97 | 0.97 | 0.97 |

| Average (%) | 97.5 | 96.75 | 97.00 |
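
The Average row of the table directly above is consistent with an unweighted (macro) mean of the four per-class scores, expressed as a percentage. The source does not state the averaging rule, so treat that reading as an assumption; the helper name `macro_average_percent` is introduced here for illustration.

```python
# Per-class scores copied from the table above: (precision, recall, F1).
per_class = {
    "Glioma tumor": (0.97, 1.00, 0.98),
    "Meningioma tumor": (0.96, 0.90, 0.93),
    "Pituitary tumor": (1.00, 1.00, 1.00),
    "No tumor": (0.97, 0.97, 0.97),
}


def macro_average_percent(rows):
    """Unweighted mean of each metric column, as a percentage (2 decimals)."""
    n = len(rows)
    column_sums = [sum(column) for column in zip(*rows.values())]
    return tuple(round(100 * total / n, 2) for total in column_sums)


precision, recall, f1 = macro_average_percent(per_class)
print(precision, recall, f1)  # 97.5 96.75 97.0
```

A macro mean weights every class equally regardless of how many test images it has, so a weak class such as meningioma pulls the average down as much as a large class would.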

| Tumor Type | Precision | Recall | F1-Score |

|---|---|---|---|

| Glioma tumor | 0.92 | 0.97 | 0.95 |

| Meningioma tumor | 0.94 | 0.96 | 0.95 |

| Pituitary tumor | 1.00 | 0.94 | 0.97 |

| No tumor | 1.00 | 0.99 | 0.99 |

| Average (%) | 96.5 | 96.5 | 96.5 |

| Tumor Type | Precision | Recall | F1-Score |

|---|---|---|---|

| Glioma tumor | 0.71 | 0.97 | 0.82 |

| Meningioma tumor | 0.95 | 0.66 | 0.78 |

| Pituitary tumor | 1.00 | 0.95 | 0.97 |

| No tumor | 1.00 | 1.00 | 1.00 |

| Average (%) | 91.5 | 89.5 | 89.25 |

| Tumor Type | Precision | Recall | F1-Score |

|---|---|---|---|

| Glioma tumor | 0.98 | 0.98 | 0.98 |

| Meningioma tumor | 0.94 | 0.98 | 0.96 |

| Pituitary tumor | 0.99 | 0.963 | 0.97 |

| No tumor | 1.00 | 0.98 | 0.99 |

| Average (%) | 97.8 | 98.05 | 97.92 |

| Tumor Type | Precision | Recall | F1-Score |

|---|---|---|---|

| Glioma tumor | 0.88 | 1.00 | 0.93 |

| Meningioma tumor | 0.92 | 0.89 | 0.91 |

| Pituitary tumor | 1.00 | 0.85 | 0.91 |

| No tumor | 0.91 | 1.00 | 0.95 |

| Average (%) | 93.13 | 93.66 | 93.09 |

| DL Model | Tumor Class | Precision (%) | Recall (%) | F1-Score (%) |

|---|---|---|---|---|

| Pre-trained-VGG-19 (BT-MRI-2C) | Tumor | 100 | 96.00 | 98.00 |

| Pre-trained-VGG-19 (BT-MRI-2C) | No tumor | 96.00 | 100 | 98.00 |

| Scratch-VGG-19 (BT-MRI-2C) | Tumor | 98.10 | 91.96 | 94.93 |

| Scratch-VGG-19 (BT-MRI-2C) | No tumor | 91.59 | 98.00 | 94.69 |

| EfficientNet (BT-MRI-2C) | Tumor | 99.46 | 98.20 | 97.32 |

| EfficientNet (BT-MRI-2C) | No tumor | 98.02 | 96.12 | 97.06 |
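
The F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). As a worked example, the sketch below applies that standard formula to the Scratch-VGG-19 (BT-MRI-2C) precision and recall values from the table above; `f1_score` is a helper name introduced here, not something taken from the paper.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (result is in the inputs' units)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) for Scratch-VGG-19 (BT-MRI-2C), from the table above.
scratch_tumor = round(f1_score(98.10, 91.96), 2)
scratch_no_tumor = round(f1_score(91.59, 98.00), 2)

print(scratch_tumor, scratch_no_tumor)  # 94.93 94.69
```

Because the harmonic mean is dominated by the smaller of the two inputs, a model cannot reach a high F1 by maximizing precision alone or recall alone.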

| Ref | Method | Parameters | Dataset | Accuracy | Model Explainability |

|---|---|---|---|---|---|

| [91] | CNN | Not mentioned | Three-class | 97.60% | Not used |

| [91] | SVM | Not mentioned | Three-class | 98.30% | Not used |

| [91] | KNN, SoftMax | Not mentioned | Three-class | 94.90% | Not used |

| [92] | CNN, SoftMax | Not mentioned | Three-class | 97.42% | Not used |

| [72] | CNN, SoftMax | Not mentioned | Three-class | 95.23% | Not used |

| [98] | VGG-16 | Not mentioned | Three-class | 95.9% | Not used |

| [98] | DenseNet-161 | Not mentioned | Three-class | 98.9% | Not used |

| [98] | ResNet-18 | Not mentioned | Three-class | 76% | Not used |

| [101] | GCNN | Not mentioned | Two-class | 99.8% | Not used |

| [101] | GCNN | Not mentioned | Three-class | 97.14% | Not used |

| [99] | Lightweight CNN | 0.59 M | Two-class | 98.55% | Not used |

| [99] | Lightweight CNN | 0.59 M | Three-class | 96.83% | Not used |

| [100] | VGG16 | Not mentioned | Three-class | 97.80% | Not used |

| [100] | ResNet50 | Not mentioned | Three-class | 97.40% | Not used |

| [93] | CNN, SoftMax | Not mentioned | Four-class | 95.71% | Not used |

| [93] | CNN, SoftMax | Not mentioned | Three-class | 97.23% | Not used |

| [93] | CNN, SoftMax | Not mentioned | Binary-class | 99.83% | Not used |

| [66] | CNN, SoftMax | Not mentioned | Binary-class | 99.33% | Grad-CAM for binary-class prediction |

| Our proposed model | Pre-trained-VGG-19, SoftMax | 20 M | BT-MRI-4C | 99.92% | CAM, Grad-CAM, and Grad-CAM++ for binary and multi-class prediction |

| Our proposed model | Scratch-VGG-19, SoftMax | 139 M | BT-MRI-4C | 98.94% | CAM, Grad-CAM, and Grad-CAM++ for binary and multi-class prediction |

| Our proposed model | EfficientNet, SoftMax | 10 M | BT-MRI-4C | 99.81% | CAM, Grad-CAM, and Grad-CAM++ for binary and multi-class prediction |

| Our proposed model | Pre-trained-VGG-19, SoftMax | 12 M | BT-MRI-2C | 99.85% | CAM, Grad-CAM, and Grad-CAM++ for binary and multi-class prediction |

| Our proposed model | Scratch-VGG-19, SoftMax | 9 M | BT-MRI-2C | 96.79% | CAM, Grad-CAM, and Grad-CAM++ for binary and multi-class prediction |

| Our proposed model | EfficientNet, SoftMax | 10 M | BT-MRI-2C | 98.65% | CAM, Grad-CAM, and Grad-CAM++ for binary and multi-class prediction |

| Model | Optimizer | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |

|---|---|---|---|---|---|

| Pre-trained-VGG-19 | Adam | 99.89 | 99.72 | 99.81 | 99.92 |

| Pre-trained-VGG-19 | RMSprop | 99.10 | 98.99 | 99.08 | 99.69 |

| Scratch-VGG-19 | Adam | 97.69 | 98.95 | 98.39 | 98.94 |

| Scratch-VGG-19 | RMSprop | 97.57 | 97.09 | 98.99 | 97.09 |

| EfficientNet | Adam | 99.51 | 98.69 | 99.74 | 99.81 |

| EfficientNet | RMSprop | 98.42 | 98.75 | 98.14 | 99.62 |
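
The optimizer comparison in the table above can be read off programmatically by keeping, for each model, the optimizer with the highest accuracy. This is an illustrative sketch only: the accuracy values are copied from the table, while the `rows` layout and the `best_optimizer_per_model` helper are assumptions introduced here.

```python
# (model, optimizer, accuracy %) copied from the table above.
rows = [
    ("Pre-trained-VGG-19", "Adam", 99.92),
    ("Pre-trained-VGG-19", "RMSprop", 99.69),
    ("Scratch-VGG-19", "Adam", 98.94),
    ("Scratch-VGG-19", "RMSprop", 97.09),
    ("EfficientNet", "Adam", 99.81),
    ("EfficientNet", "RMSprop", 99.62),
]


def best_optimizer_per_model(rows):
    """Map each model to the (optimizer, accuracy) pair with the highest accuracy."""
    best = {}
    for model, optimizer, accuracy in rows:
        if model not in best or accuracy > best[model][1]:
            best[model] = (optimizer, accuracy)
    return best


best = best_optimizer_per_model(rows)
for model, (optimizer, accuracy) in best.items():
    print(f"{model}: {optimizer} ({accuracy:.2f}%)")
```

On these rows Adam gives the higher accuracy for all three models, with the largest gap for Scratch-VGG-19.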

| Model | Optimizer | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |

|---|---|---|---|---|---|

| Pre-trained-VGG-19 | Adam | 98.59 | 99.32 | 98.99 | 99.85 |

| Pre-trained-VGG-19 | RMSprop | 98.57 | 98.59 | 97.80 | 98.12 |

| Scratch-VGG-19 | Adam | 96.30 | 95.79 | 96.90 | 96.79 |

| Scratch-VGG-19 | RMSprop | 96.01 | 94.38 | 96.00 | 95.92 |

| EfficientNet | Adam | 98.01 | 99.06 | 98.81 | 98.65 |

| EfficientNet | RMSprop | 98.26 | 97.31 | 97.40 | 98.01 |

| Model | Optimizer | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |

|---|---|---|---|---|---|

| Pre-trained-VGG-19 | Adam | 97.91 | 97.01 | 96.84 | 98.03 |

| Pre-trained-VGG-19 | RMSprop | 97.11 | 96.85 | 96.21 | 97.09 |

| Scratch-VGG-19 | Adam | 95.31 | 94.86 | 95.37 | 96.09 |

| Scratch-VGG-19 | RMSprop | 95.01 | 94.28 | 95.41 | 95.71 |

| EfficientNet | Adam | 97.59 | 96.88 | 96.61 | 97.59 |

| EfficientNet | RMSprop | 97.15 | 96.08 | 95.97 | 97.53 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hussain, T.; Shouno, H. Explainable Deep Learning Approach for Multi-Class Brain Magnetic Resonance Imaging Tumor Classification and Localization Using Gradient-Weighted Class Activation Mapping. Information 2023, 14, 642. https://doi.org/10.3390/info14120642
