Online Professional Development on Educational Neuroscience in Higher Education Based on Design Thinking

Abstract

1. Introduction

2. Materials

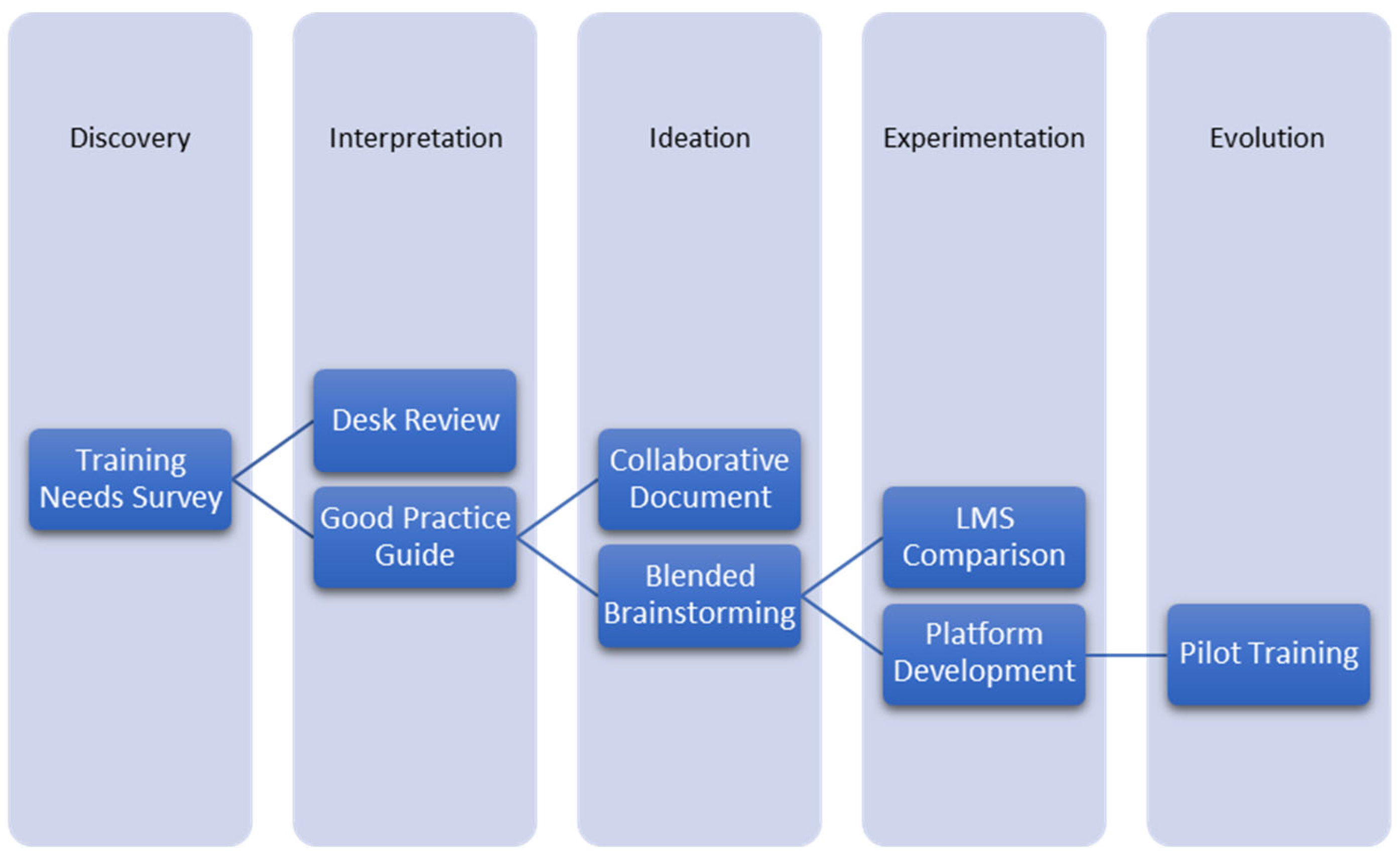

2.1. Platform Design Process

2.2. Neuropedagogy Course Outline

- Introduction to Neuropedagogy: this inaugural module contains five sections:
  - The definition of neuropedagogy and how it was born.
  - What does neuropedagogy bring to teachers?
  - Anatomical organization of the brain.
  - How does the brain learn?
  - Twelve general principles for classroom application.
- Neuromyths in Education: this module explores the problematic proliferation of inaccurate beliefs regarding the brain and its role in learning. It presents the five most common neuromyths and the scientific evidence that debunks them. Its structure is as follows:
  - What is a Neuromyth?
  - Why do Neuromyths persist in schools and colleges?
  - Neuromyth Examples in Higher Education.
  - Other Neuromyths.
  - How to Spot Neuromyths.
- Engagement: the neural mechanisms underlying engagement are explored along with recommended strategies including cooperation and gamification. Specifically, the following topics are reviewed:
  - Introduction to educational neuroscience regarding engagement.
  - Approach responses and avoidance responses.
  - Strategies that trigger the reward system and engagement.
  - Classroom actions.
- Concentration and Attention: this module follows the same structure as the previous one, presenting the definition of attention, the neural processes behind it, and indicative methods for applying them in classroom instruction:
  - Introduction to educational neuroscience regarding concentration and attention.
  - Attention… what’s in a name?
  - How does attention work in the brain?
  - Alerting network.
  - Orienting network.
  - Executive attention network.
  - Classroom actions.
- Emotions: this is the most extensive module, starting with a definition and classification of emotions. It explores the emotional and social aspects of the brain and contains several ideas to improve emotional responses in education. Its structure is the following:
  - Where do emotions come from? The emotional brain.
    - 1.1. The system responsible for the generation of emotions.
    - 1.2. The emotional brain: emotion creation, processing and transmission.
    - 1.3. Neurotransmitters.
  - Classification of emotions.
    - 2.1. Basic Emotions.
    - 2.2. Secondary Emotions.
    - 2.3. Positive and Negative Emotions.
  - Emotions and the social brain.
    - 3.1. Levels of “social”.
    - 3.2. Social Neural Networks.
  - Suggestions and ideas on how to work with emotions and emotional responses in the classroom.
- Associative Memory: the last module explores the function of memory in learning and the different types of associative memory, such as semantic and episodic memory. It features the following sections:
  - Definition of Memory.
  - Types of Memory.
  - What is Associative Memory?
  - Types of Associative Memory.
  - Impact of Associative Memory.
  - Pitfalls of Associative Memory.

3. Method

4. Results

4.1. Descriptive Statistics

4.2. Correlations

4.3. Generalised Additive Model

4.4. Open-Ended Questions

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Items | Measurement | Coding |
|---|---|---|
| Q1: What is your general evaluation of the course/learning material? | Likert scale | 1 = Very poor, 3 = Average, 5 = Very good |
| Q2: Did you find the learning materials relevant and up-to-date? | Likert scale | 1 = Very irrelevant and out-of-date, 3 = Neither relevant nor irrelevant, somewhat up to date, 5 = Very Relevant and Up-to-Date |
| Q3: What is your general impression of the platform? | Likert scale | 1 = Very poor, 3 = Average, 5 = Very good |
| Q4: Were the educational materials sufficient and effective in achieving the learning objectives of the course? | Likert scale | 1 = Not efficient, 3 = Neither efficient nor inefficient, 5 = Very efficient |
| Q5: Which module(s) did you find particularly useful? | Multiple selection | 1 = Introduction to Neuropedagogy, 2 = Engagement in the learning process, 3 = Neuromyths, 4 = Concentration/Attention, 5 = Emotions, 6 = Associative memory, 7 = None of them |
| S5.1: Please explain why you found the module(s) you selected in the previous question as useful. | Open-ended | N/A |
| Q6: Which module(s) do you think need revision or improvement? | Multiple selection | 1 = Introduction to Neuropedagogy, 2 = Engagement in the learning process, 3 = Neuromyths, 4 = Concentration/Attention, 5 = Emotions, 6 = Associative memory, 7 = None of them |
| S6.1: Please explain why you think this/these specific module(s) should be improved. | Open-ended | N/A |
| S7: Is there anything in the learning material that you would like to change or improve? Please provide your comments and suggestions. | Open-ended | N/A |
| Q8.1: Were the assessment questions in the Introduction to Neuropedagogy module based on the respective educational content? | Binary | 0 = No, 1 = Yes |
| Q8.2: Were the assessment questions in the Neuromyths module based on the respective educational content? | Binary | 0 = No, 1 = Yes |
| Q8.3: Were the assessment questions in the Concentration and Attention module based on the respective educational content? | Binary | 0 = No, 1 = Yes |
| Q8.4: Were the assessment questions in the Associative memory module based on the respective educational content? | Binary | 0 = No, 1 = Yes |
| Q8.5: Were the assessment questions in the Engagement module based on the respective educational content? | Binary | 0 = No, 1 = Yes |
| Q8.6: Were the assessment questions in the Emotions module based on the respective educational content? | Binary | 0 = No, 1 = Yes |
| Q9: Were the learning activities appropriate for the content? | Binary | 0 = No, 1 = Yes |
| Q10: Did you encounter any technical difficulties in your progress through the training programme? | Binary | 0 = No, 1 = Yes |
| S11: Comments and Recommendations | Open-ended | N/A |
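
The coding column of the questionnaire can be mirrored in a small lookup when responses are prepared for analysis. The following is a minimal sketch and not the authors' pipeline: the names `MODULES`, `BINARY`, `validate_likert`, `encode_binary`, and `decode_modules` are hypothetical, and only the code values themselves are taken from the questionnaire.

```python
from typing import Iterable, List

# Code values follow the questionnaire's coding column.
# All identifiers below are hypothetical and chosen for illustration only.
MODULES = {
    1: "Introduction to Neuropedagogy",
    2: "Engagement in the learning process",
    3: "Neuromyths",
    4: "Concentration/Attention",
    5: "Emotions",
    6: "Associative memory",
    7: "None of them",
}
BINARY = {"No": 0, "Yes": 1}


def validate_likert(value: int) -> int:
    """Return the value if it is a valid 1-5 Likert code, otherwise raise."""
    if value not in range(1, 6):
        raise ValueError(f"Likert code must be between 1 and 5, got {value!r}")
    return value


def encode_binary(answer: str) -> int:
    """Map a Yes/No answer to 1/0, ignoring case and surrounding spaces."""
    return BINARY[answer.strip().capitalize()]


def decode_modules(codes: Iterable[int]) -> List[str]:
    """Translate multiple-selection codes (Q5, Q6) into module names."""
    return [MODULES[code] for code in sorted(set(codes))]


# Example with made-up answers, not study data.
print(decode_modules([5, 3]), encode_binary("yes"), validate_likert(4))
```

Keeping the codes in one place makes it easier to check that a multiple-selection answer such as Q5 is decoded the same way in every analysis step.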

| Item | Mean | Median | Mode | SD | Variance | Kurtosis | Skewness |
|---|---|---|---|---|---|---|---|
| Q1: Evaluation of the course | 4.56 | 5 | 5 | 0.6 | 0.37 | 0.32 | −1.12 |
| Q2: Relevance of the learning materials | 4.53 | 5 | 5 | 0.55 | 0.31 | −0.51 | −0.69 |
| Q3: Perceived usefulness of the platform | 4.46 | 5 | 5 | 0.74 | 0.56 | −0.37 | −1.05 |
| Q4: Efficiency of the educational materials | 4.5 | 5 | 5 | 0.7 | 0.5 | 3.44 | −1.67 |
| Q10: Technical difficulties (platform) | 0.25 | 0 | 0 | 0.43 | 0.18 | −0.57 | 1.21 |
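
The columns of this table can be reproduced for any list of item scores with a few lines of code. The sketch below uses Python's standard library and the bias-adjusted sample formulas for skewness and excess kurtosis that common statistics packages report. Which formulas the authors used is not stated here, so that choice is an assumption, as are the function name `describe` and the example scores, which are made up and are not the study data.

```python
from math import sqrt
from statistics import mean, median, mode, variance


def describe(scores):
    """Descriptive statistics for one questionnaire item.

    Skewness and kurtosis use the bias-adjusted sample formulas
    (an assumption; the source does not name the estimator).
    Kurtosis is excess kurtosis, so a normal distribution gives 0.
    """
    n = len(scores)
    if n < 4:
        raise ValueError("at least 4 observations are needed for sample kurtosis")
    m = mean(scores)
    var = variance(scores)  # sample variance, n - 1 in the denominator
    sd = sqrt(var)
    if sd == 0:
        raise ValueError("skewness and kurtosis are undefined for constant data")
    z3 = sum(((x - m) / sd) ** 3 for x in scores)
    z4 = sum(((x - m) / sd) ** 4 for x in scores)
    skewness = n / ((n - 1) * (n - 2)) * z3
    kurtosis = (
        n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * z4
        - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3))
    )
    return {
        "mean": m,
        "median": median(scores),
        "mode": mode(scores),
        "sd": sd,
        "variance": var,
        "kurtosis": kurtosis,
        "skewness": skewness,
    }


# Made-up 5-point Likert responses, for illustration only.
example_scores = [5, 5, 4, 5, 3, 4, 5, 5]
print(describe(example_scores))
```

A negative skewness, as reported for Q1 to Q4, indicates that responses cluster at the high end of the scale with a tail toward lower ratings.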

| Module | Q5: Perceived Usefulness of Modules (Freq) | Q5: Perceived Usefulness of Modules (Percent) | Q6: Modules That Should Be Revised (Freq) | Q6: Modules That Should Be Revised (Percent) |
|---|---|---|---|---|
| Introduction to Neuropedagogy | 10 | 31.25% | 3 | 9.4% |
| Engagement in the learning process | 10 | 31.25% | 1 | 3.1% |
| Neuromyths | 12 | 37.5% | 3 | 9.4% |
| Concentration and Attention | 15 | 46.88% | 1 | 3.1% |
| Emotions | 18 | 56.25% | 4 | 12.5% |
| Associative memory | 13 | 40.6% | 1 | 3.1% |
| None of them | 0 | 0% | 26 | 81.25% |
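
Each percentage in this table is a frequency divided by the number of respondents. The sketch below assumes 32 respondents, which is the denominator implied by the reported values (for example, 10 selections correspond to 31.25%). That figure is inferred rather than quoted from the source, and the names `N_RESPONDENTS`, `USEFUL_FREQ`, and `percent` are hypothetical.

```python
# Assumption: 32 respondents, inferred from the reported percentages.
N_RESPONDENTS = 32

# Q5 frequencies as listed in the table.
USEFUL_FREQ = {
    "Introduction to Neuropedagogy": 10,
    "Engagement in the learning process": 10,
    "Neuromyths": 12,
    "Concentration and Attention": 15,
    "Emotions": 18,
    "Associative memory": 13,
    "None of them": 0,
}


def percent(freq: int, n: int = N_RESPONDENTS) -> float:
    """Share of respondents who selected an option, in percent."""
    if n <= 0:
        raise ValueError("number of respondents must be positive")
    return round(100 * freq / n, 2)


for module, freq in USEFUL_FREQ.items():
    print(f"{module}: {freq} ({percent(freq)}%)")
```

Because Q5 and Q6 allow multiple selections, the percentages in a column are computed per option and do not need to sum to 100.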

| | Evaluation of the Course | Relevance and Recency of Learning Materials | Usefulness of the Platform | Effectiveness of Educational Materials |
|---|---|---|---|---|
| Evaluation of the course | 1 | | | |
| Relevance and recency of learning materials | 0.86 | 1 | | |
| Usefulness of the platform | 0.73 | 0.67 | 1 | |
| Effectiveness of educational materials | 0.79 | 0.83 | 0.73 | 1 |
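
A lower-triangular matrix like this one holds one pairwise coefficient per pair of items. The sketch below shows how such a coefficient could be computed as a Spearman rank correlation, which is a common choice for ordinal Likert data. The coefficient actually used for this table is not stated here, so Spearman is an assumption, and the function names and example scores are hypothetical.

```python
from math import sqrt
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y):
        raise ValueError("both items need the same number of responses")
    return pearson(average_ranks(x), average_ranks(y))


# Made-up Likert scores for two items, for illustration only.
course_eval = [5, 4, 5, 3, 4, 5]
relevance = [5, 4, 4, 3, 4, 5]
print(round(spearman(course_eval, relevance), 2))
```

Averaging the ranks of tied values matters for Likert items, where most respondents share a small number of distinct scores.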

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mystakidis, S.; Christopoulos, A.; Fragkaki, M.; Dimitropoulos, K. Online Professional Development on Educational Neuroscience in Higher Education Based on Design Thinking. Information 2023, 14, 382. https://doi.org/10.3390/info14070382
