Federated Edge Intelligence and Edge Caching Mechanisms

Abstract

1. Introduction

- Enhanced Client-Balancing Dirichlet Sampling (ECBDS) (Algorithm 1): We proposed ECBDS, a novel sampling technique that optimizes the label distribution across clients in federated learning scenarios. By improving how labels are represented across clients, ECBDS enhances the overall performance and generalization of federated learning models. This technique is one of the novel aspects of our work, as other state-of-the-art methods do not apply a comparable data distribution measure;

- Peer-to-Peer Federated Learning (Algorithms 2 and 3): We present a client-side method for peer-to-peer (P2P) federated learning in Algorithm 2, together with the overall P2P federated learning approach in Algorithm 3;

- FedAM (Algorithm 4), for Raspberry Pi Devices: We introduced the FedAM algorithm, specifically tailored and tested for resource-constrained Raspberry Pi devices. This algorithm accelerates convergence and mitigates the impact of noisy gradients in federated learning settings. FedAM offers a practical solution to improve model performance on edge devices, enabling efficient and robust federated learning deployments;

- FedAALR (Algorithm 5), for Heterogeneous Data Distributions: Our proposed FedAALR algorithm addresses the challenge of heterogeneous data distributions in federated learning. By adaptively adjusting learning rates for individual clients, FedAALR enhances convergence speed and model performance. This contribution ensures that federated learning systems can effectively handle diverse and unevenly distributed data sources;

- Parameter Optimization in FedAM: We focused on parameter optimizations specifically for the FedAM algorithm. By fine-tuning key parameters, we achieved improved convergence speed and stability in federated learning processes. This contribution enables practitioners to maximize the effectiveness and efficiency of FedAM-based federated learning systems;

- Integration of Edge Caching Techniques: We enhanced the FedAALR algorithm by incorporating edge caching techniques. By strategically caching frequently accessed data at the network edge, we reduced communication costs and latency, making the algorithm more efficient. This integration of edge caching contributes to the scalability and practicality of federated learning deployments in resource-constrained environments.

2. Literature Review

2.1. Evolution of Federated Learning

2.2. Horizontal Federated Learning

2.3. Vertical Federated Learning

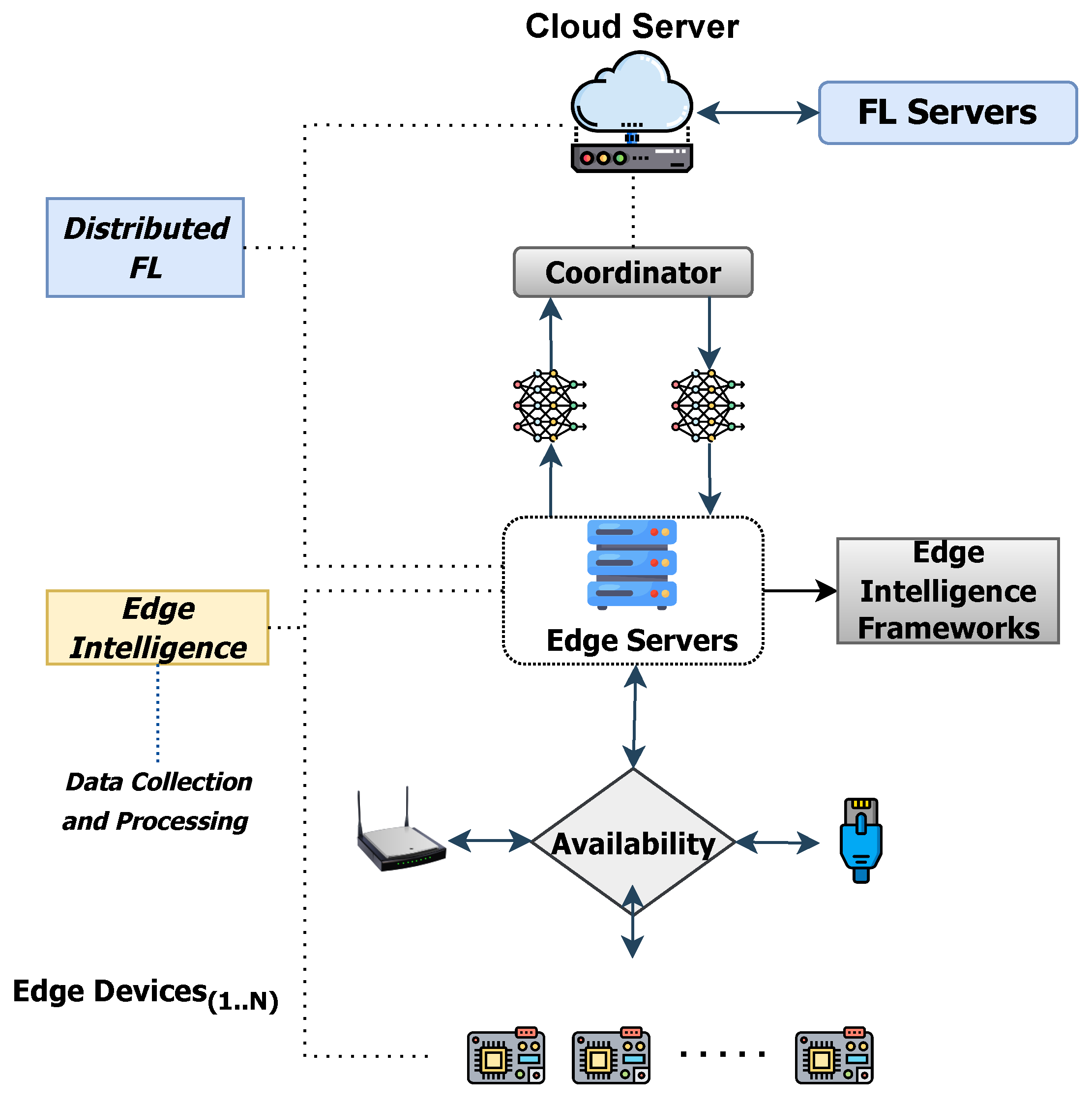

2.4. Incorporating Edge Intelligence with Federated Learning

3. Proposed Methodology

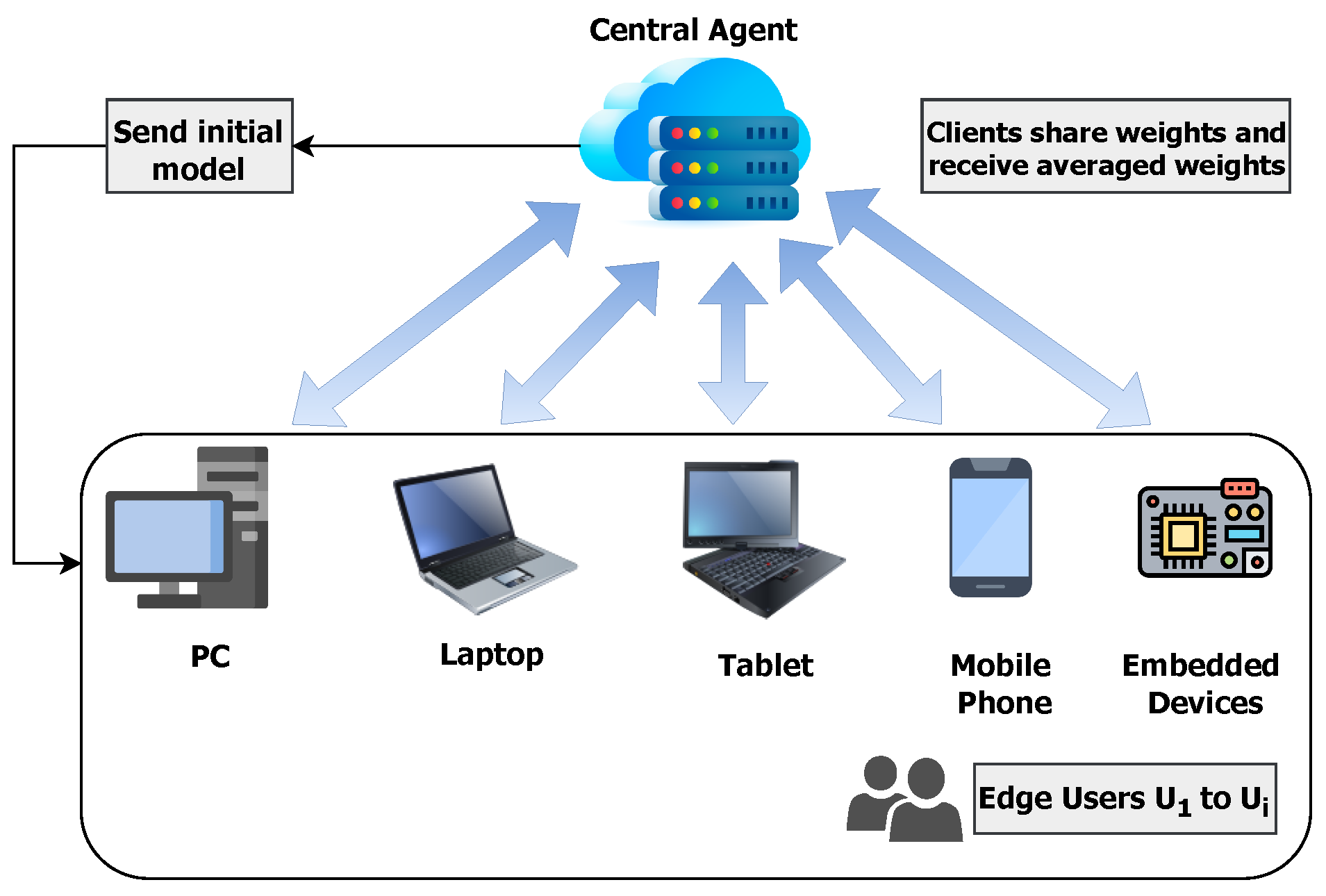

3.1. Understanding Federated Learning Techniques

3.2. Embedded Systems

3.3. Problem Formulation and Dataset

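- The parameter vector $\boldsymbol{\alpha}$ has a length equal to the number of labels (10 in this case), and all of its entries are set to the same positive concentration value $\alpha$;

- Each sample $\mathbf{p} \sim \mathrm{Dir}(\boldsymbol{\alpha})$ represents a probability distribution across labels, and $\alpha$ determines how uniform that distribution is;

- As $\alpha$ approaches 0, a single label dominates, whereas as $\alpha$ approaches infinity, $\mathbf{p}$ becomes increasingly uniform. For $\alpha = 1$, every possible $\mathbf{p}$ has an equal probability of being selected;

- For each client $i$, a distribution $\mathbf{p}_i \sim \mathrm{Dir}(\boldsymbol{\alpha})$ was sampled, so that every client's label distribution follows a Dirichlet distribution.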
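The per-client sampling described above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not the authors' code: it assumes a symmetric Dirichlet (every entry of the parameter vector equal to one scalar `alpha`) and draws each sample by normalizing independent Gamma variates, which is the standard construction of a Dirichlet sample. The helper names `sample_dirichlet` and `client_label_distributions` are hypothetical.

```python
import random


def sample_dirichlet(alpha: float, num_labels: int, rng: random.Random) -> list[float]:
    """Draw one sample from a symmetric Dirichlet(alpha, ..., alpha).

    Standard construction: draw independent Gamma(alpha, 1) variates and
    normalize them so that they sum to 1.
    """
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_labels)]
    total = sum(draws)
    return [d / total for d in draws]


def client_label_distributions(num_clients: int, alpha: float,
                               num_labels: int = 10, seed: int = 0) -> list[list[float]]:
    """Sample an independent label distribution p_i ~ Dir(alpha) for each client i."""
    rng = random.Random(seed)
    return [sample_dirichlet(alpha, num_labels, rng) for _ in range(num_clients)]


if __name__ == "__main__":
    for alpha in (0.1, 1.0, 100.0):
        dists = client_label_distributions(num_clients=3, alpha=alpha)
        # Largest label share per client: tends to be large for small alpha
        # and close to 1/10 for large alpha.
        print(alpha, [round(max(p), 2) for p in dists])
```

Running the demo with increasing `alpha` shows the transition from label-skewed clients to near-uniform ones that the bullets describe.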

3.4. Enhanced Client-Balancing Dirichlet Sampling Algorithm

| Algorithm 1 Enhanced Client-Balancing Dirichlet Sampling (ECBDS) |
|---|
| Require: Number of clients C, concentration parameter $\alpha$ |
| Ensure: Client-balanced distribution |
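The steps of Algorithm 1 are not listed in this text, so the following is only one hypothetical reading of ECBDS, built from the notation in Appendix A.1: a matrix of per-client label distributions, the sums of its columns, a measure u of closeness to equal column sums, and an optional scaling factor for u. Because each row sums to 1, the column sums add up to C, so "equal column sums" means every column sums to C/N for N labels. The sketch assumes u is the scaled sum of absolute deviations from C/N and that balancing keeps the best of several Dirichlet draws; both choices, and all function names, are our assumptions rather than the published algorithm.

```python
import random


def sample_dirichlet(alpha: float, num_labels: int, rng: random.Random) -> list[float]:
    """Symmetric Dirichlet sample via normalized Gamma(alpha, 1) variates."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_labels)]
    total = sum(draws)
    return [d / total for d in draws]


def balance_measure(matrix: list[list[float]], scale: float = 1.0) -> float:
    """Hypothetical measure u: scaled total deviation of column sums from C / N.

    Each row (client) sums to 1, so the column sums add up to C and the
    goal of equal column sums means every column should sum to C / N.
    """
    num_clients, num_labels = len(matrix), len(matrix[0])
    target = num_clients / num_labels
    col_sums = [sum(row[j] for row in matrix) for j in range(num_labels)]
    return scale * sum(abs(s - target) for s in col_sums)


def ecbds_sketch(num_clients: int, alpha: float, num_labels: int = 10,
                 candidates: int = 50, scale: float = 1.0, seed: int = 0):
    """Keep the most balanced of several candidate client-by-label matrices (an assumption)."""
    rng = random.Random(seed)
    best, best_u = None, float("inf")
    for _ in range(candidates):
        matrix = [sample_dirichlet(alpha, num_labels, rng) for _ in range(num_clients)]
        u = balance_measure(matrix, scale)
        if u < best_u:
            best, best_u = matrix, u
    return best, best_u


if __name__ == "__main__":
    _, u_single = ecbds_sketch(num_clients=20, alpha=0.5, candidates=1)
    _, u_best = ecbds_sketch(num_clients=20, alpha=0.5, candidates=50)
    print(f"u with 1 candidate: {u_single:.3f}, best of 50: {u_best:.3f}")
```

With a fixed seed, the first candidate is identical in both runs, so keeping the best of more candidates can only lower (or keep) u.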

3.5. Adapting to Federated Learning in a Peer-to-Peer Context

Peer-to-Peer Approach

- Algorithm 2 details the client-side operations in P2P federated learning, which include computing the local average of the received set of weights, updating the model’s weights, evaluating the model, and training the model for a specified number of local epochs. The algorithm saves both pre-fit and post-fit evaluation results for further analysis;

- Algorithm 3 illustrates the P2P federated learning approach, wherein devices exchange weights with one another, simulating P2P communication in a real-world scenario. The algorithm acts as a coordinator, collecting weights from each device and distributing the complete set of weights to all devices. The algorithm starts by sending the model M to all clients within U. Then, for each round of federated learning, it initiates the training of all clients, as shown in Algorithm 2.
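The client-side and coordinator steps described above can be sketched as follows. This is a toy simulation under stated assumptions, not Algorithms 2 and 3 themselves: models are plain lists of floats, each hypothetical `ToyClient` "trains" by gradient descent on the squared distance to its own `target` vector, and evaluation is that same squared distance. The names `ToyClient`, `average` and `p2p_federated_learning` are illustrative.

```python
from dataclasses import dataclass, field

Weights = list[float]


def average(weight_sets: list[Weights]) -> Weights:
    """Element-wise mean of the received weight vectors."""
    n = len(weight_sets)
    return [sum(col) / n for col in zip(*weight_sets)]


@dataclass
class ToyClient:
    """Hypothetical client whose local objective is ||w - target||^2."""
    target: Weights
    weights: Weights
    history: list = field(default_factory=list)  # (pre_fit, post_fit) per round

    def evaluate(self) -> float:
        return sum((w - t) ** 2 for w, t in zip(self.weights, self.target))

    def fit(self, received: list[Weights], local_epochs: int, lr: float = 0.1) -> None:
        # 1) Local average of the full set of weights received from the peers.
        self.weights = average(received)
        pre_fit = self.evaluate()
        # 2) Local training: gradient descent on the toy objective.
        for _ in range(local_epochs):
            self.weights = [w - lr * 2.0 * (w - t)
                            for w, t in zip(self.weights, self.target)]
        # 3) Store pre-fit and post-fit evaluations for later analysis.
        self.history.append((pre_fit, self.evaluate()))


def p2p_federated_learning(clients: list[ToyClient], rounds: int, local_epochs: int) -> None:
    """Coordinator that simulates P2P exchange: collect every device's weights, send the set to all."""
    for _ in range(rounds):
        collected = [c.weights for c in clients]  # snapshot before anyone trains this round
        for c in clients:
            c.fit(collected, local_epochs)


if __name__ == "__main__":
    model = [0.0, 0.0]  # initial model M, copied to every client
    clients = [ToyClient(target=[1.0, 0.0], weights=list(model)),
               ToyClient(target=[0.0, 1.0], weights=list(model))]
    p2p_federated_learning(clients, rounds=50, local_epochs=1)
    print(average([c.weights for c in clients]))  # approaches [0.5, 0.5]
```

Because `fit` rebinds `self.weights` to new lists, the `collected` snapshot stays fixed within a round, so every device averages the same set of weights, as the coordinator description requires.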

| Algorithm 2 Client-Side Operations in P2P Federated Learning |


| Algorithm 3 P2P Federated Learning Approach |


3.6. Federated Averaging with Momentum

- Local computation: Each edge device (client) in the network computes its own model updates locally using its dataset. This reduces the need for transferring large amounts of raw data to a central server, thereby preserving privacy and reducing communication overhead;

- Momentum: The algorithm incorporates momentum, a popular optimization technique that helps accelerate the convergence of the learning process. By utilizing the momentum term, FedAM can potentially reduce the number of communication rounds needed for convergence, making the algorithm more efficient in terms of both computation and communication;

- Parallel processing: FedAM processes the local updates of multiple clients in parallel, which allows it to take advantage of the distributed nature of the edge devices. This approach can lead to faster convergence times and improved scalability;

- Aggregated updates: Instead of sending the entire local model, each client sends only the model updates to the central server. This reduces communication costs further and helps protect user privacy;

- Adaptability: By modifying its input parameters, such as the number of clients, the number of local epochs, and the learning rate, the FedAM algorithm can be readily adapted to various edge computing scenarios. This versatility makes the algorithm applicable to a vast array of applications.
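The momentum mechanism listed above can be illustrated with server-side momentum in the style of FedAvgM. This is a swapped-in, widely used formulation, not necessarily the exact update rule of Algorithm 4. The sketch assumes local training has already produced each client's weights, aggregates them FedAvg-style (weighted by local dataset size), and applies a momentum buffer to the resulting pseudo-gradient. The names `fedam_server_step`, `beta` and `server_lr` are hypothetical.

```python
def weighted_average(client_weights: list[list[float]], sizes: list[int]) -> list[float]:
    """FedAvg aggregation: mean of client models weighted by local dataset size."""
    total = sum(sizes)
    return [sum(n * w[i] for w, n in zip(client_weights, sizes)) / total
            for i in range(len(client_weights[0]))]


def fedam_server_step(global_w, client_weights, sizes, velocity, beta=0.9, server_lr=1.0):
    """One aggregation step with server-side momentum (FedAvgM-style; an assumption)."""
    avg = weighted_average(client_weights, sizes)
    # Pseudo-gradient: the direction from the aggregated model back to the global model.
    delta = [g - a for g, a in zip(global_w, avg)]
    # Momentum buffer: exponentially weighted sum of past pseudo-gradients.
    velocity = [beta * v + d for v, d in zip(velocity, delta)]
    new_global = [g - server_lr * v for g, v in zip(global_w, velocity)]
    return new_global, velocity


if __name__ == "__main__":
    w, v = [0.0], [0.0]
    for rnd in range(3):
        # Hypothetical local results: both clients trained toward 1.0 in this round.
        w, v = fedam_server_step(w, [[1.0], [1.0]], sizes=[30, 70], velocity=v)
        print(rnd, w)
```

With `beta = 0` and `server_lr = 1` the step reduces to plain FedAvg; with `beta > 0` the global model keeps moving in the direction of recent updates, which is the source of the faster convergence (and of possible overshoot).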

| Algorithm 4 Federated Averaging with Momentum (FedAM) |


3.7. Federated Averaging with Adaptive Learning Rates

| Algorithm 5 Federated Averaging with Adaptive Learning Rates (FedAALR) |


- Local computation: Each edge device (client) in the network computes its own model updates locally using its dataset. This reduces the need for transferring large amounts of raw data to a central server, thereby preserving privacy and reducing communication overhead;

- Adaptive learning rates: The algorithm incorporates adaptive learning rates, which are calculated based on the gradients’ magnitude. This technique helps the learning process adapt to different clients’ data distributions and can lead to faster convergence times and improved performance;

- Parallel processing: FedAALR processes the local updates of multiple clients in parallel, which allows it to take advantage of the distributed nature of edge devices. This approach can lead to faster convergence times and improved scalability;

- Aggregated updates: Instead of sending the entire local model, each client sends only the model updates to the central server. This reduces communication costs further and helps protect user privacy;

- Flexibility: FedAALR can be readily adapted to various edge computing scenarios by modifying its input parameters, such as the number of clients, the number of local epochs, the initial learning rate, and the cache decay factor. This adaptability renders the algorithm suitable for a broad spectrum of applications.
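The adaptive learning rates described above can be sketched as follows, under loudly stated assumptions. The text says only that each client's rate is computed from gradient magnitudes and that there is a cache decay factor. Here that factor is interpreted as the decay of an RMSProp-style running average ("cache") of squared gradient norms, and each client's rate is the initial learning rate divided by the square root of that cache. This interpretation, the toy quadratic objective, and the names `AdaptiveClient` and `fedaalr` are all hypothetical.

```python
import math


class AdaptiveClient:
    """Hypothetical client with a toy objective ||w - target||^2 and an adaptive learning rate."""

    def __init__(self, target, eta0=0.1, gamma=0.9, eps=1e-8):
        self.target = target
        self.eta0 = eta0      # initial (base) learning rate
        self.gamma = gamma    # cache decay factor (assumed RMSProp-style)
        self.eps = eps
        self.cache = 0.0      # running average of squared gradient norms

    def gradient(self, w):
        return [2.0 * (wi - ti) for wi, ti in zip(w, self.target)]

    def local_update(self, w, local_epochs):
        w = list(w)
        for _ in range(local_epochs):
            g = self.gradient(w)
            # Update the cache with the squared gradient magnitude.
            self.cache = self.gamma * self.cache + (1.0 - self.gamma) * sum(gi * gi for gi in g)
            # Client-specific rate: larger recent gradient magnitudes give a smaller rate.
            lr = self.eta0 / (math.sqrt(self.cache) + self.eps)
            w = [wi - lr * gi for wi, gi in zip(w, g)]
        return w


def fedaalr(clients, w0, rounds, local_epochs):
    """Broadcast the global model, run adaptive local updates, then average (unweighted)."""
    w = list(w0)
    for _ in range(rounds):
        local_models = [c.local_update(w, local_epochs) for c in clients]
        w = [sum(col) / len(local_models) for col in zip(*local_models)]
    return w


if __name__ == "__main__":
    clients = [AdaptiveClient([3.0]), AdaptiveClient([1.0], eta0=0.05)]
    print(fedaalr(clients, [0.0], rounds=50, local_epochs=2))
```

Each client keeps its own cache across rounds, so clients whose data produce large gradients automatically take smaller steps; the number of clients, local epochs, `eta0` and `gamma` are the tunable inputs named in the list above.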

3.8. Kullback–Leibler Divergence in the FedDF Algorithm

3.9. Kullback–Leibler Divergence in the FedDF Algorithm for Distributed FL

3.10. Jensen–Shannon Divergence
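The divergences named in Sections 3.8 to 3.10 can be computed as below. The KL and Jensen–Shannon definitions are standard (natural logarithms, so JS is bounded by ln 2). The `distillation_loss` function is a per-sample sketch in the spirit of FedDF, where an ensemble teacher formed by averaging client logits is distilled into a student model through a KL term on softened outputs. It is an illustration, not the exact loss used in this work, and the function names are hypothetical.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) = sum_i p_i * log(p_i / q_i) in nats; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL of p and q to their midpoint m (bounded by ln 2)."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(client_logits, student_logits, temperature=1.0):
    """FedDF-style loss for one sample: KL(ensemble teacher || student) on softened outputs."""
    teacher = [sum(col) / len(client_logits) for col in zip(*client_logits)]  # averaged logits
    return kl_divergence(softmax(teacher, temperature), softmax(student_logits, temperature))


if __name__ == "__main__":
    p, q = [0.2, 0.3, 0.5], [0.6, 0.3, 0.1]
    print(kl_divergence(p, q), kl_divergence(q, p))  # KL is asymmetric
    print(js_divergence(p, q), js_divergence(q, p))  # JS is symmetric
```

Unlike KL, the Jensen–Shannon divergence is symmetric and always finite, which is why it is often preferred when comparing distributions that may assign zero probability to some labels.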

Dealing with Noisy Data

3.11. Evaluation Methodology

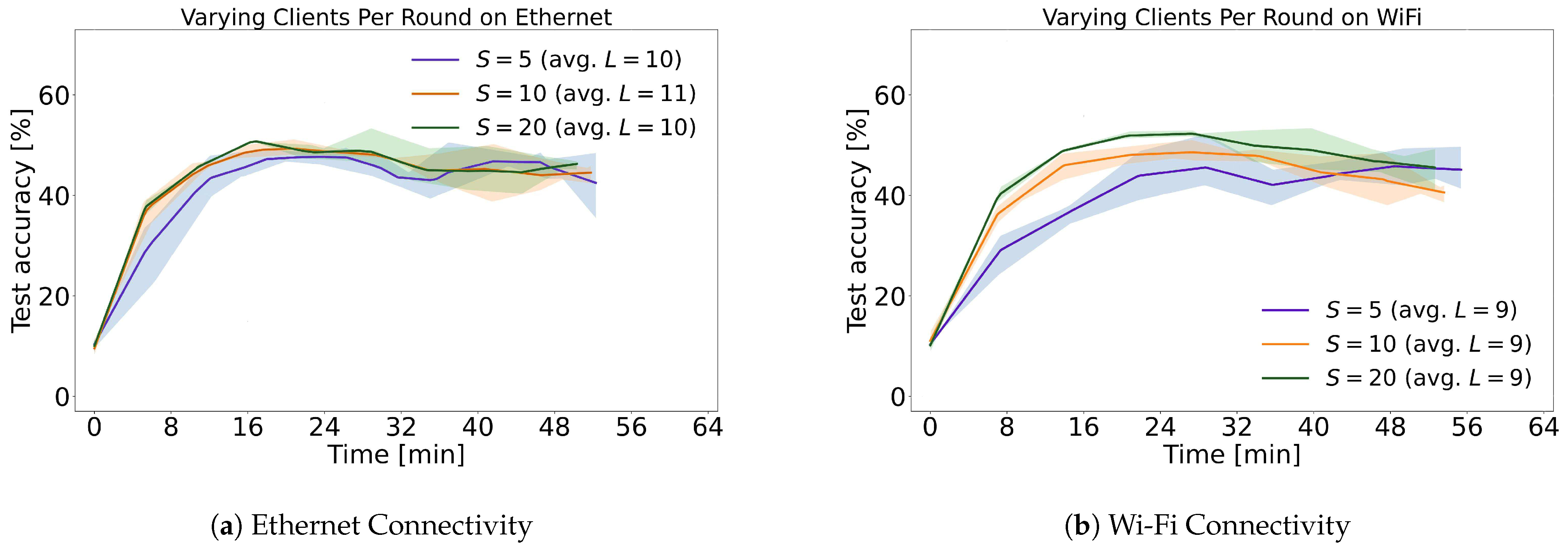

4. Analysis of Experimental Results

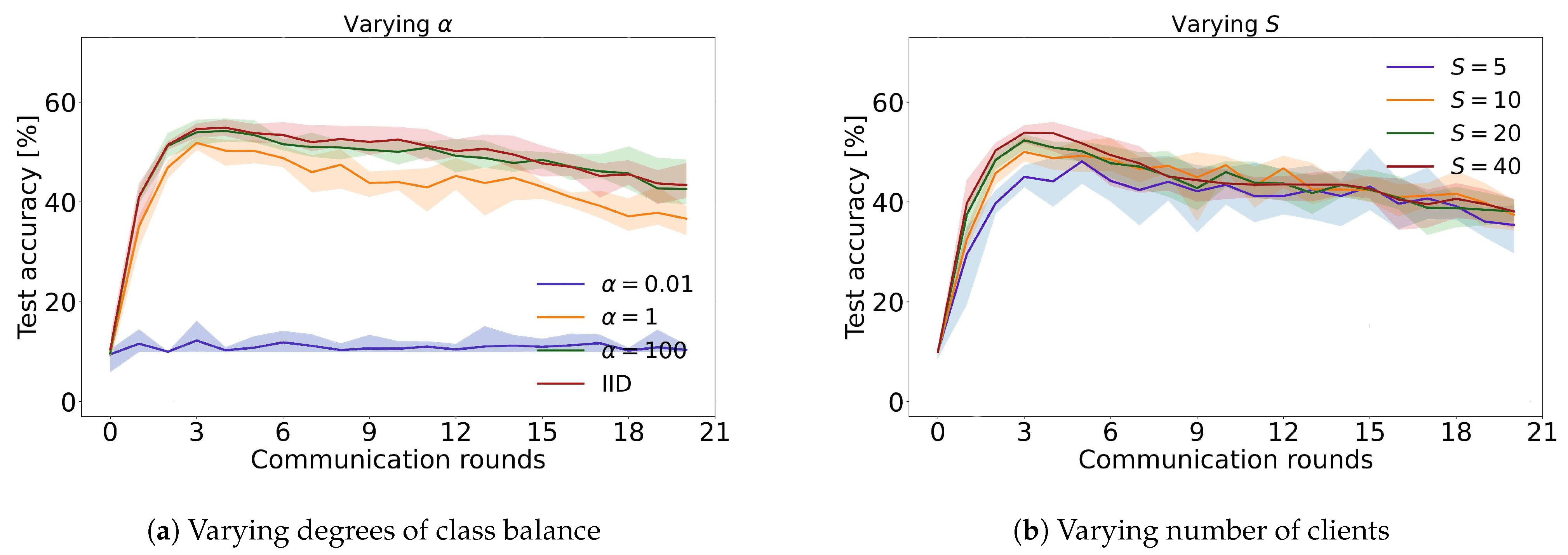

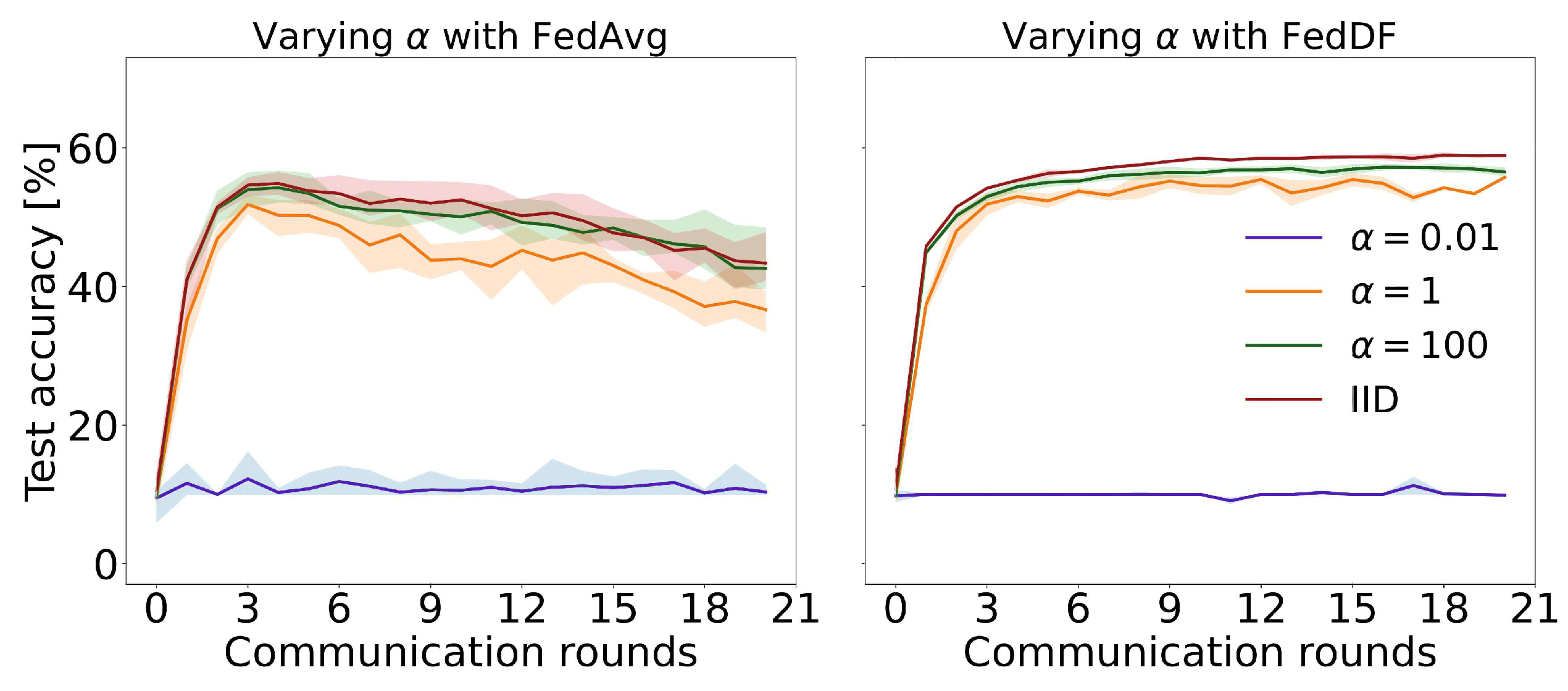

4.1. Previous Outcomes

4.2. Current Results

5. Parameter Optimization in Federated Learning

5.1. Federated Averaging with Momentum

Incorporating Edge Caching and Bayesian Optimizations

5.2. Federated Averaging with Adaptive Learning Rates

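- Optimizing the initial learning rate $\eta_0$: To optimize $\eta_0$, we can minimize the validation loss $\mathcal{L}_{\mathrm{val}}$ with respect to $\eta_0$. Defining a search space $\mathcal{H}_{\eta_0}$, the optimal learning rate is $\eta_0^{*} = \arg\min_{\eta_0 \in \mathcal{H}_{\eta_0}} \mathcal{L}_{\mathrm{val}}(\eta_0)$;

- Optimizing the cache decay rate $\gamma$: To optimize $\gamma$, we can minimize the validation loss with respect to $\gamma$. Defining a search space $\mathcal{H}_{\gamma}$, the optimal cache decay rate is $\gamma^{*} = \arg\min_{\gamma \in \mathcal{H}_{\gamma}} \mathcal{L}_{\mathrm{val}}(\gamma)$;

- Optimizing the number of clients $K$ and the fraction $S$: To optimize $K$ and $S$, we can minimize the validation loss with respect to both. Defining a joint search space $\mathcal{H}_{K,S}$, the optimal combination is $(K^{*}, S^{*}) = \arg\min_{(K, S) \in \mathcal{H}_{K,S}} \mathcal{L}_{\mathrm{val}}(K, S)$;

- Optimizing the number of global communication rounds $L$ and local epochs $E$: To optimize $L$ and $E$, we can minimize the validation loss with respect to both. Defining a joint search space $\mathcal{H}_{L,E}$, the optimal combination is $(L^{*}, E^{*}) = \arg\min_{(L, E) \in \mathcal{H}_{L,E}} \mathcal{L}_{\mathrm{val}}(L, E)$.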
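Each of the searches above selects the configuration with the lowest validation loss over a finite search space. The sketch below does this with exhaustive grid search, a deliberately simple stand-in for the Bayesian optimization mentioned in this section's headings. The function `toy_val_loss` is a hypothetical surrogate for "train FedAALR with these settings, then measure validation loss"; `eta0` and `gamma` are our own labels for the initial learning rate and the cache decay rate.

```python
import itertools
import math


def grid_search(val_loss, search_space):
    """Return the configuration in the search space with the lowest validation loss."""
    names = list(search_space)
    best_cfg, best_loss = None, math.inf
    for values in itertools.product(*(search_space[n] for n in names)):
        cfg = dict(zip(names, values))
        loss = val_loss(**cfg)
        if loss < best_loss:
            best_cfg, best_loss = cfg, loss
    return best_cfg, best_loss


def toy_val_loss(eta0, gamma):
    """Hypothetical stand-in for 'train FedAALR, then measure validation loss'."""
    return (math.log10(eta0) + 2.0) ** 2 + (gamma - 0.9) ** 2


if __name__ == "__main__":
    space = {"eta0": [1e-3, 1e-2, 1e-1], "gamma": [0.5, 0.9, 0.99]}
    print(grid_search(toy_val_loss, space))  # best: eta0 = 0.01, gamma = 0.9 for this toy loss
```

The same `grid_search` call handles the joint searches over (K, S) or (L, E) by passing a two-key search space. Grid search costs one full training run per combination, which is the motivation for sample-efficient alternatives such as Bayesian optimization.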

5.3. Incorporating Edge Caching and Bayesian Optimizations

- Edge Caching: By caching intermediate model updates at the edge devices or edge servers, we can reduce the communication overhead between the clients and the central server. We introduce a caching factor $\rho$, where $0 \le \rho \le 1$, that represents the proportion of local updates to be cached at the edge device or edge server. The communication overhead function can then be modified to account for $\rho$, and $\rho$ itself can be optimized by incorporating it into the optimization problem;

- Reducing Communication Costs: Communication costs can be reduced by adjusting the parameters of the FedAALR algorithm. For example, increasing the number of local epochs E can reduce the number of required communication rounds, thereby reducing communication costs. Additionally, adjusting the fraction of clients S participating in each round can also influence communication costs. To balance communication costs against convergence speed, E and S can be tuned together as a joint optimization problem;

- Other Optimizations: Apart from edge caching and communication cost reduction, other optimization techniques can be applied to the FedAALR algorithm. For instance, using adaptive communication strategies, where the frequency of communication between clients and the central server is adjusted based on the convergence rate, can help improve efficiency. Additionally, employing techniques such as gradient sparsification or quantization can further reduce communication overhead.
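Gradient sparsification, mentioned above, can be illustrated with top-k sparsification, one common variant: each client transmits only the k largest-magnitude entries of its update as (index, value) pairs, and the server rebuilds a dense vector with zeros elsewhere. This is a generic sketch, not a component of FedAALR described in the text. Practical systems often add error feedback (accumulating the dropped residual locally for the next round), which is omitted here.

```python
def top_k_sparsify(update: list[float], k: int) -> tuple[list[int], list[float]]:
    """Keep the k largest-magnitude entries of an update; return (indices, values) to send."""
    if k <= 0:
        return [], []
    keep = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    keep.sort()  # send indices in ascending order
    return keep, [update[i] for i in keep]


def densify(indices: list[int], values: list[float], size: int) -> list[float]:
    """Server side: rebuild a dense update, treating dropped entries as zero."""
    dense = [0.0] * size
    for i, v in zip(indices, values):
        dense[i] = v
    return dense


if __name__ == "__main__":
    update = [0.1, -3.0, 0.5, 2.0, -0.05]
    idx, vals = top_k_sparsify(update, k=2)
    print(idx, vals)                         # [1, 3] [-3.0, 2.0]
    print(densify(idx, vals, len(update)))   # [0.0, -3.0, 0.0, 2.0, 0.0]
```

Sending k index/value pairs instead of all entries cuts the upload roughly by a factor of size/(2k), at the cost of an approximation error that error feedback is designed to correct over rounds.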

6. In-Depth Analysis and Explanation

7. Conclusions and Future Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Basic Notations and Symbols

| Symbol | Description |
|---|---|
| K | Number of clients in the federated learning system |
| | Size of the unique portion of the larger training dataset held by the k-th client |
| | Global model hosted on the central server |
| L | Number of communication rounds |
| S | Number of clients selected for each communication round |
| E | Number of local epochs of learning performed by clients |
| | Local model of the k-th client |
| f | Implicit objective function |
| | Model weights |
| ℓ | Loss function |
| | Updated global model after the l-th communication round |
| | Updated local model of the i-th client after the l-th communication round |
| | Parameter vector for the Dirichlet distribution |
| | Probability distribution across labels |
| | Label distribution for the i-th client |
| C | Number of clients (in the context of the Dirichlet sampling algorithm) |
| | Matrix representing the label distributions for all clients |
| | Sum of the elements of the j-th column of the label-distribution matrix |
| u | Measure of how close the column sums of the label-distribution matrix are to the goal of equal column sums |
| | Optional scaling factor for the measure u |

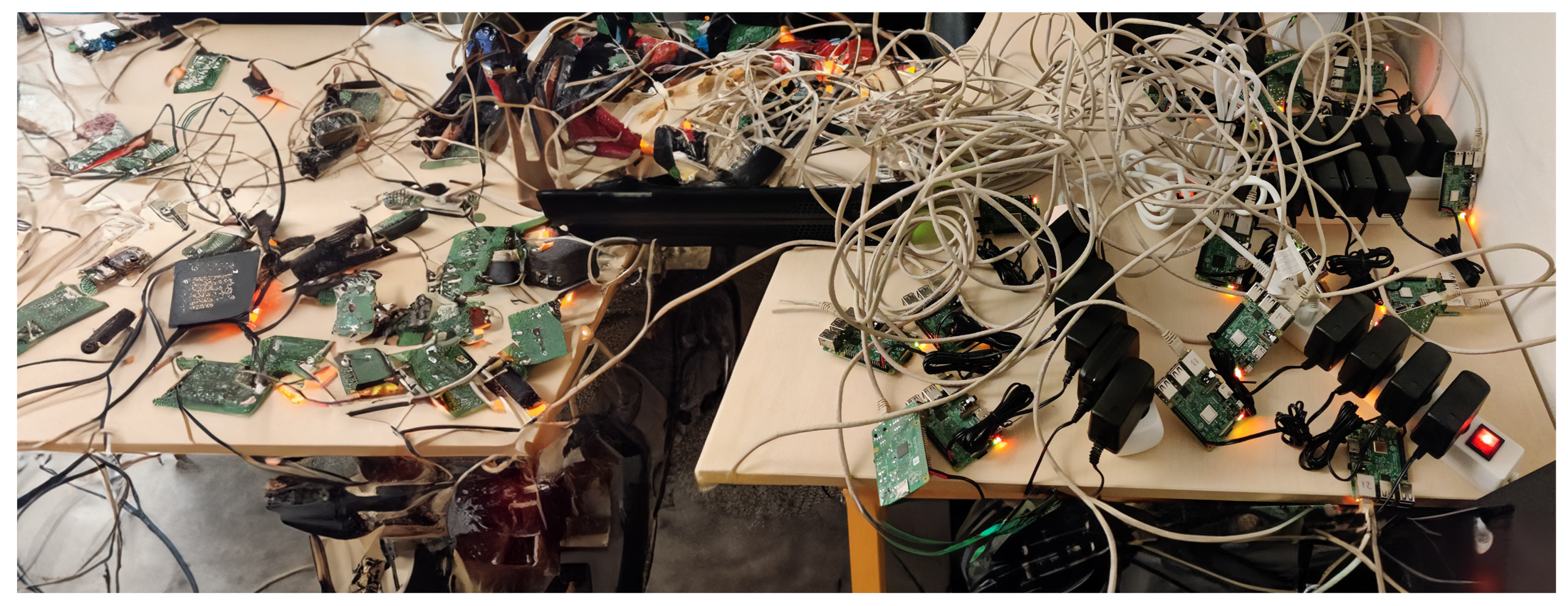

Appendix A.2. Hardware Setup

References

- Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and open problems in federated learning. Found. Trends Mach. Learn. 2021, 14, 1–210.

- Blanco-Justicia, A.; Domingo-Ferrer, J.; Martínez, S.; Sánchez, D.; Flanagan, A.; Tan, K.E. Achieving security and privacy in federated learning systems: Survey, research challenges and future directions. Eng. Appl. Artif. Intell. 2021, 106, 104468.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–19.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics (PMLR 2017), Sydney, NSW, Australia, 6–11 August 2017; pp. 1273–1282.

- Lin, T.; Kong, L.; Stich, S.U.; Jaggi, M. Ensemble distillation for robust model fusion in federated learning. Adv. Neural Inf. Process. Syst. 2020, 33, 2351–2363.

- Zhang, T.; Gao, L.; He, C.; Zhang, M.; Krishnamachari, B.; Avestimehr, A.S. Federated learning for the internet of things: Applications, challenges, and opportunities. IEEE Internet Things Mag. 2022, 5, 24–29.

- Shaheen, M.; Farooq, M.S.; Umer, T.; Kim, B.S. Applications of federated learning; Taxonomy, challenges, and research trends. Electronics 2022, 11, 670.

- Rodríguez-Barroso, N.; Jiménez-López, D.; Luzón, M.V.; Herrera, F.; Martínez-Cámara, E. Survey on federated learning threats: Concepts, taxonomy on attacks and defences, experimental study and challenges. Inf. Fusion 2023, 90, 148–173.

- Rausch, T.; Dustdar, S. Edge intelligence: The convergence of humans, things, and ai. In Proceedings of the 2019 IEEE International Conference on Cloud Engineering (IC2E), Prague, Czech Republic, 24–27 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 86–96.

- Wang, T.; Sun, B.; Wang, L.; Zheng, X.; Jia, W. EIDLS: An Edge-Intelligence-Based Distributed Learning System Over Internet of Things. IEEE Trans. Syst. Man Cybern. Syst. 2023, 1–13.

- Britto Corthis, P.; Ramesh, G. A Journey of Artificial Intelligence and Its Evolution to Edge Intelligence. In Micro-Electronics and Telecommunication Engineering: Proceedings of 5th ICMETE 2021, Ghaziabad, India, 24–25 September 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 817–826.

- Feng, H.; Mu, G.; Zhong, S.; Zhang, P.; Yuan, T. Benchmark analysis of yolo performance on edge intelligence devices. Cryptography 2022, 6, 16.

- Wang, X.; Han, Y.; Leung, V.C.; Niyato, D.; Yan, X.; Chen, X. Edge AI: Convergence of Edge Computing and Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2020.

- Liu, D.; Kong, H.; Luo, X.; Liu, W.; Subramaniam, R. Bringing AI to edge: From deep learning’s perspective. Neurocomputing 2022, 485, 297–320.

- Du, Y.; Wang, Z.; Leung, V.C. Blockchain-enabled edge intelligence for IoT: Background, emerging trends and open issues. Future Internet 2021, 13, 48.

- Molokomme, D.N.; Onumanyi, A.J.; Abu-Mahfouz, A.M. Edge intelligence in Smart Grids: A survey on architectures, offloading models, cyber security measures, and challenges. J. Sens. Actuator Netw. 2022, 11, 47.

- Liu, J.; Xiang, J.; Jin, Y.; Liu, R.; Yan, J.; Wang, L. Boost precision agriculture with unmanned aerial vehicle remote sensing and edge intelligence: A survey. Remote Sens. 2021, 13, 4387.

- Schizas, N.; Karras, A.; Karras, C.; Sioutas, S. TinyML for Ultra-Low Power AI and Large Scale IoT Deployments: A Systematic Review. Future Internet 2022, 14, 363.

- Mills, J.; Hu, J.; Min, G. Communication-efficient federated learning for wireless edge intelligence in IoT. IEEE Internet Things J. 2019, 7, 5986–5994.

- Ye, Y.; Li, S.; Liu, F.; Tang, Y.; Hu, W. EdgeFed: Optimized federated learning based on edge computing. IEEE Access 2020, 8, 209191–209198.

- Mwase, C.; Jin, Y.; Westerlund, T.; Tenhunen, H.; Zou, Z. Communication-efficient distributed AI strategies for the IoT edge. Future Gener. Comput. Syst. 2022, 131, 292–308.

- Zhang, T.; Wang, S.; Li, G.; Liu, F.; Zhu, G.; Wang, R. Accelerating edge intelligence via integrated sensing and communication. In Proceedings of the ICC 2022-IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1586–1592.

- Tang, S.; Chen, L.; He, K.; Xia, J.; Fan, L.; Nallanathan, A. Computational intelligence and deep learning for next-generation edge-enabled industrial IoT. IEEE Trans. Netw. Sci. Eng. 2022.

- Shan, N.; Cui, X.; Gao, Z. “DRL+ FL”: An intelligent resource allocation model based on deep reinforcement learning for Mobile Edge Computing. Comput. Commun. 2020, 160, 14–24.

- Abreha, H.G.; Hayajneh, M.; Serhani, M.A. Federated learning in edge computing: A systematic survey. Sensors 2022, 22, 450.

- Dean, J.; Corrado, G.; Monga, R.; Chen, K.; Devin, M.; Mao, M.; Ranzato, M.; Senior, A.; Tucker, P.; Yang, K.; et al. Large scale distributed deep networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1223–1231.

- McMahan, H.B.; Ramage, D.; Talwar, K.; Zhang, L. Learning differentially private recurrent language models. arXiv 2017, arXiv:1710.06963.

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

- Karras, A.; Karras, C.; Giotopoulos, K.C.; Tsolis, D.; Oikonomou, K.; Sioutas, S. Peer to Peer Federated Learning: Towards Decentralized Machine Learning on Edge Devices. In Proceedings of the 2022 7th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Ioannina, Greece, 23–25 September 2022; pp. 1–9.

- Wang, X.; Chen, W.; Xia, J.; Wen, Z.; Zhu, R.; Schreck, T. HetVis: A Visual Analysis Approach for Identifying Data Heterogeneity in Horizontal Federated Learning. IEEE Trans. Vis. Comput. Graph. 2023, 29, 310–319.

- Wang, J.; Zhang, L.; Li, A.; You, X.; Cheng, H. Efficient Participant Contribution Evaluation for Horizontal and Vertical Federated Learning. In Proceedings of the 2022 IEEE 38th International Conference on Data Engineering (ICDE), Kuala Lumpur, Malaysia, 9–12 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 911–923.

- Zhang, X.; Mavromatis, A.; Vafeas, A.; Nejabati, R.; Simeonidou, D. Federated Feature Selection for Horizontal Federated Learning in IoT Networks. IEEE Internet Things J. 2023, 10, 10095–10112.

- Aono, Y.; Hayashi, T.; Wang, L.; Moriai, S. Privacy-preserving deep learning via additively homomorphic encryption. IEEE Trans. Inf. Forensics Secur. 2017, 13, 1333–1345.

- Chen, Y.R.; Rezapour, A.; Tzeng, W.G. Privacy-preserving ridge regression on distributed data. Inf. Sci. 2018, 451, 34–49.

- Kim, H.; Park, J.; Bennis, M.; Kim, S.L. On-device federated learning via blockchain and its latency analysis. arXiv 2018, arXiv:1808.03949.

- Islam, A.; Al Amin, A.; Shin, S.Y. FBI: A Federated Learning-Based Blockchain-Embedded Data Accumulation Scheme Using Drones for Internet of Things. IEEE Wirel. Commun. Lett. 2022, 11, 972–976.

- Smith, V.; Chiang, C.K.; Sanjabi, M.; Talwalkar, A.S. Federated multi-task learning. Adv. Neural Inf. Process. Syst. 2017, 30, 4424–4434.

- Du, W.; Han, Y.S.; Chen, S. Privacy-preserving multivariate statistical analysis: Linear regression and classification. In Proceedings of the 2004 SIAM International Conference on Data Mining, Lake Buena Vista, FL, USA, 22–24 April 2004; pp. 222–233.

- Du, W.; Atallah, M.J. Privacy-preserving cooperative statistical analysis. In Proceedings of the Seventeenth Annual Computer Security Applications Conference, New Orleans, LA, USA, 10–14 December 2001; IEEE: Piscataway, NJ, USA, 2001; pp. 102–110.

- Wan, L.; Ng, W.K.; Han, S.; Lee, V.C. Privacy-preservation for gradient descent methods. In Proceedings of the 13th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Jose, CA, USA, 12–15 August 2007; pp. 775–783.

- Karr, A.F.; Lin, X.; Sanil, A.P.; Reiter, J.P. Privacy-preserving analysis of vertically partitioned data using secure matrix products. J. Off. Stat. 2009, 25, 125.

- Vaidya, J.; Clifton, C. Privacy preserving association rule mining in vertically partitioned data. In Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Edmonton, AB, Canada, 23–26 July 2002; pp. 639–644.

- Cheng, K.; Fan, T.; Jin, Y.; Liu, Y.; Chen, T.; Papadopoulos, D.; Yang, Q. Secureboost: A lossless federated learning framework. IEEE Intell. Syst. 2021, 36, 87–98.

- Hardy, S.; Henecka, W.; Ivey-Law, H.; Nock, R.; Patrini, G.; Smith, G.; Thorne, B. Private federated learning on vertically partitioned data via entity resolution and additively homomorphic encryption. arXiv 2017, arXiv:1711.10677.

- Schoenmakers, B.; Tuyls, P. Efficient binary conversion for Paillier encrypted values. In Advances in Cryptology-EUROCRYPT 2006: Proceedings of the 24th Annual International Conference on the Theory and Applications of Cryptographic Techniques, St. Petersburg, Russia, 28 May–1 June 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 522–537.

- Xu, D.; Li, T.; Li, Y.; Su, X.; Tarkoma, S.; Jiang, T.; Crowcroft, J.; Hui, P. Edge Intelligence: Empowering Intelligence to the Edge of Network. Proc. IEEE 2021, 109, 1778–1837.

- Liu, T.; Di, B.; Song, L. Privacy-Preserving Federated Edge Learning: Modeling and Optimization. IEEE Commun. Lett. 2022, 26, 1489–1493.

- Mora, A.; Fantini, D.; Bellavista, P. Federated Learning Algorithms with Heterogeneous Data Distributions: An Empirical Evaluation. In Proceedings of the 2022 IEEE/ACM 7th Symposium on Edge Computing (SEC), Seattle, WA, USA, 5–8 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 336–341.

- Jin, C.; Chen, X.; Gu, Y.; Li, Q. FedDyn: A dynamic and efficient federated distillation approach on Recommender System. In Proceedings of the 2022 IEEE 28th International Conference on Parallel and Distributed Systems (ICPADS), Nanjing, China, 10–12 January 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 786–793.

- Cui, J.; Wu, Q.; Zhou, Z.; Chen, X. FedBranch: Heterogeneous Federated Learning via Multi-Branch Neural Network. In Proceedings of the 2022 IEEE/CIC International Conference on Communications in China (ICCC), Sanshui, Foshan, China, 11–13 August 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1101–1106.

- Li, N.; Wang, N.; Ou, W.; Han, W. FedTD: Efficiently Share Telemedicine Data with Federated Distillation Learning. In International Conference on Machine Learning for Cyber Security; Springer: Berlin/Heidelberg, Germany, 2023; pp. 501–515.

- Yang, Y.; Yang, R.; Peng, H.; Li, Y.; Li, T.; Liao, Y.; Zhou, P. FedACK: Federated Adversarial Contrastive Knowledge Distillation for Cross-Lingual and Cross-Model Social Bot Detection. arXiv 2023, arXiv:2303.07113.

- Musa, S.S.; Zennaro, M.; Libsie, M.; Pietrosemoli, E. Mobility-aware proactive edge caching optimization scheme in information-centric iov networks. Sensors 2022, 22, 1387.

- Li, C.; Qianqian, C.; Luo, Y. Low-latency edge cooperation caching based on base station cooperation in SDN based MEC. Expert Syst. Appl. 2022, 191, 116252.

- Qian, L.; Qu, Z.; Cai, M.; Ye, B.; Wang, X.; Wu, J.; Duan, W.; Zhao, M.; Lin, Q. FastCache: A write-optimized edge storage system via concurrent merging cache for IoT applications. J. Syst. Archit. 2022, 131, 102718.

- Sharma, S.; Peddoju, S.K. IoT-Cache: Caching Transient Data at the IoT Edge. In Proceedings of the 2022 IEEE 47th Conference on Local Computer Networks (LCN), Edmonton, AB, Canada, 26–29 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 307–310.

- Zhang, Z.; Wei, X.; Lung, C.H.; Zhao, Y. iCache: An Intelligent Caching Scheme for Dynamic Network Environments in ICN-based IoT Networks. IEEE Internet Things J. 2022, 10, 1787–1799.

- Reiss-Mirzaei, M.; Ghobaei-Arani, M.; Esmaeili, L. A Review on the Edge Caching Mechanisms in the Mobile Edge Computing: A Social-aware Perspective. Internet Things 2023, 22, 100690.

- Karras, C.; Karras, A.; Avlonitis, M.; Sioutas, S. An Overview of MCMC Methods: From Theory to Applications. In Artificial Intelligence Applications and Innovations, Proceedings of the AIAI 2022 IFIP WG 12.5 International Workshops: MHDW 2022, 5G-PINE 2022, AIBMG 2022, ML@HC 2022, and AIBEI 2022, Hersonissos, Crete, Greece, 17–20 June 2022; Maglogiannis, I., Iliadis, L., Macintyre, J., Cortez, P., Eds.; Springer: Cham, Switzerland, 2022; pp. 319–332.

- Karras, C.; Karras, A.; Avlonitis, M.; Giannoukou, I.; Sioutas, S. Maximum Likelihood Estimators on MCMC Sampling Algorithms for Decision Making. In Artificial Intelligence Applications and Innovations, Proceedings of the AIAI 2022 IFIP WG 12.5 International Workshops: MHDW 2022, 5G-PINE 2022, AIBMG 2022, ML@HC 2022, and AIBEI 2022, Hersonissos, Crete, Greece, 17–20 June 2022; Maglogiannis, I., Iliadis, L., Macintyre, J., Cortez, P., Eds.; Springer: Cham, Switzerland, 2022; pp. 345–356.

- Coullon, J.; South, L.; Nemeth, C. Efficient and generalizable tuning strategies for stochastic gradient MCMC. Stat. Comput. 2023, 33, 66.

- Ding, S.; Li, C.; Xu, X.; Ding, L.; Zhang, J.; Guo, L.; Shi, T. A Sampling-Based Density Peaks Clustering Algorithm for Large-Scale Data. Pattern Recognit. 2023, 136, 109238.

- Karras, A.; Karras, C.; Giotopoulos, K.C.; Giannoukou, I.; Tsolis, D.; Sioutas, S. Download Speed Optimization in P2P Networks Using Decision Making and Adaptive Learning. In Proceedings of the ICR’22 International Conference on Innovations in Computing Research, Athens, Greece, 29–31 August 2022; Daimi, K., Al Sadoon, A., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 225–238.

| Method | Advantages | Limitations |
|---|---|---|
| Horizontal FL | Privacy preservation, accuracy enhancement | Information leak risk |
| Vertical FL | FL performance improvement, privacy preservation | Needs novel solutions |
| Edge Intelligence with FL | Low latency, bandwidth economy, privacy/security | Extensive research required |

| K | S | E | L | B | | |
|---|---|---|---|---|---|---|
| 40 | 10–40 | 1 | 20 | 20 | 0 | 16 |

| Algorithm | Steps | Accuracy, 10 Devices (%) | Accuracy, 20 Devices (%) | Accuracy, 40 Devices (%) |
|---|---|---|---|---|
| FedAvg | 200 comm. rounds | 62.1 | 65.7 | 69.2 |
| Centralized | 10 full epochs | 65.3 | 67.3 | 70.3 |
| P2P side processing | 10 full epochs | 73.4 | 75.7 | 77.9 |
| P2P Decentralized FL | 200 comm. rounds | 79.2 | 81.3 | 83.1 |
| FedAM | 150 comm. rounds | 79.6 | 80.1 | 81.3 |
| FedAALR | 130 comm. rounds | 80.0 | 81.5 | 82.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karras, A.; Karras, C.; Giotopoulos, K.C.; Tsolis, D.; Oikonomou, K.; Sioutas, S. Federated Edge Intelligence and Edge Caching Mechanisms. Information 2023, 14, 414. https://doi.org/10.3390/info14070414
