Radar-Based Invisible Biometric Authentication

Abstract

1. Introduction

- Study the stability over time, with signal acquisition on sessions separated by days;

- Understand the impact of emotional states on the system’s performance;

- Assess the system’s sensitivity to the number of classes (subjects).

2. Background

2.1. Biometrics and the Importance of the Bio-Radar

2.2. The Bio-Radar System

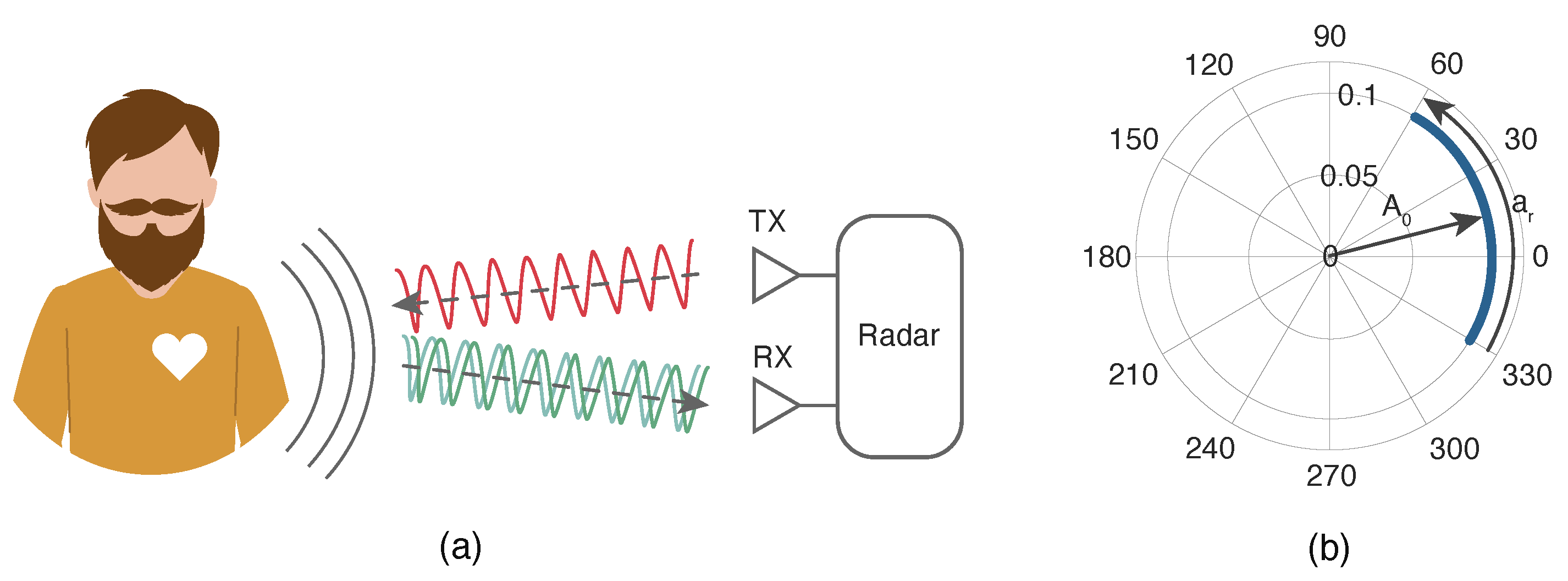

2.2.1. Doppler Radar

2.2.2. Implications of Real-World Applications

3. Related Work

3.1. ECG-Based Biometric Recognition

3.2. BR-Based Biometric Recognition

4. Proposed Approach

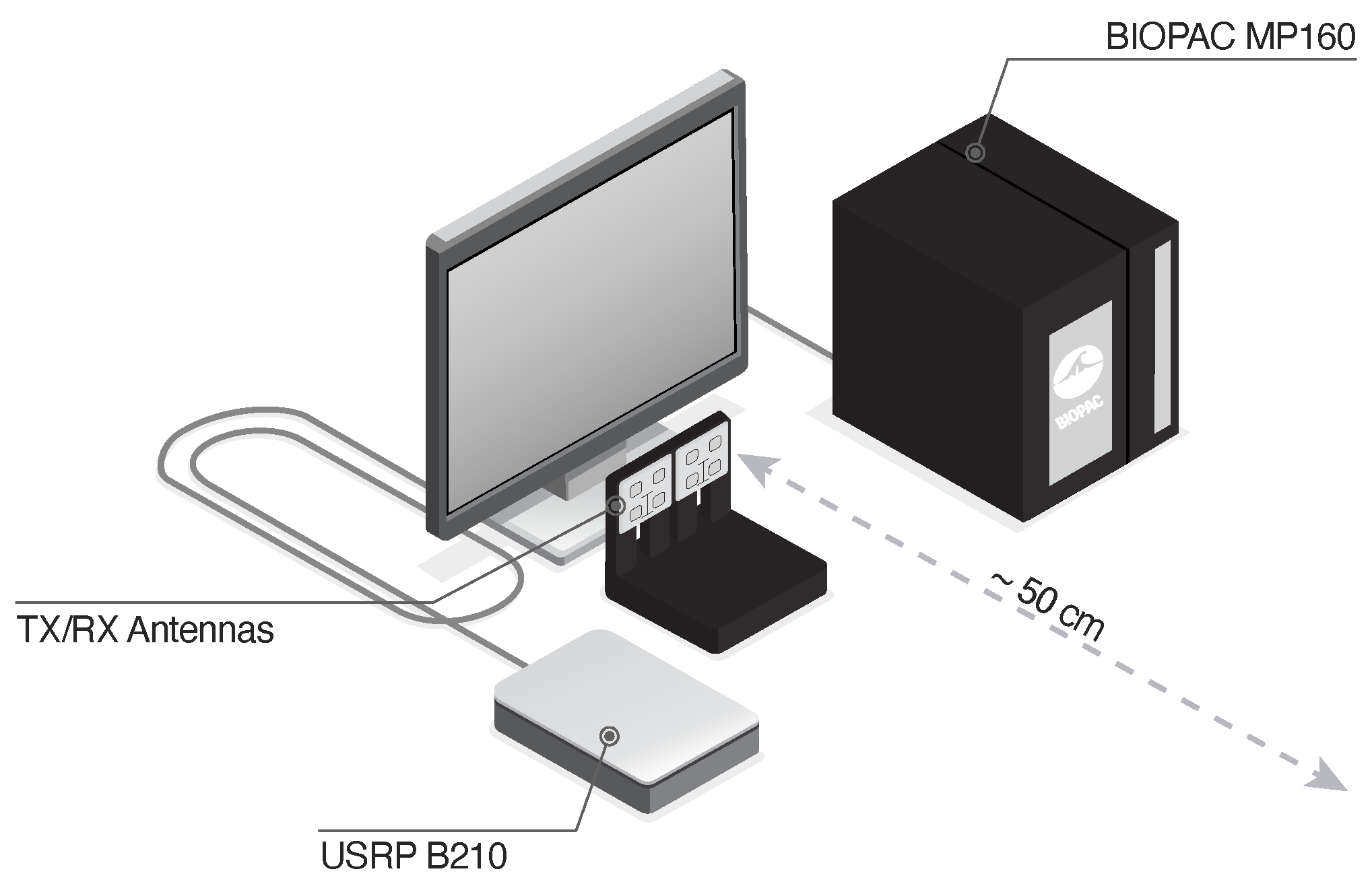

4.1. Dataset Description

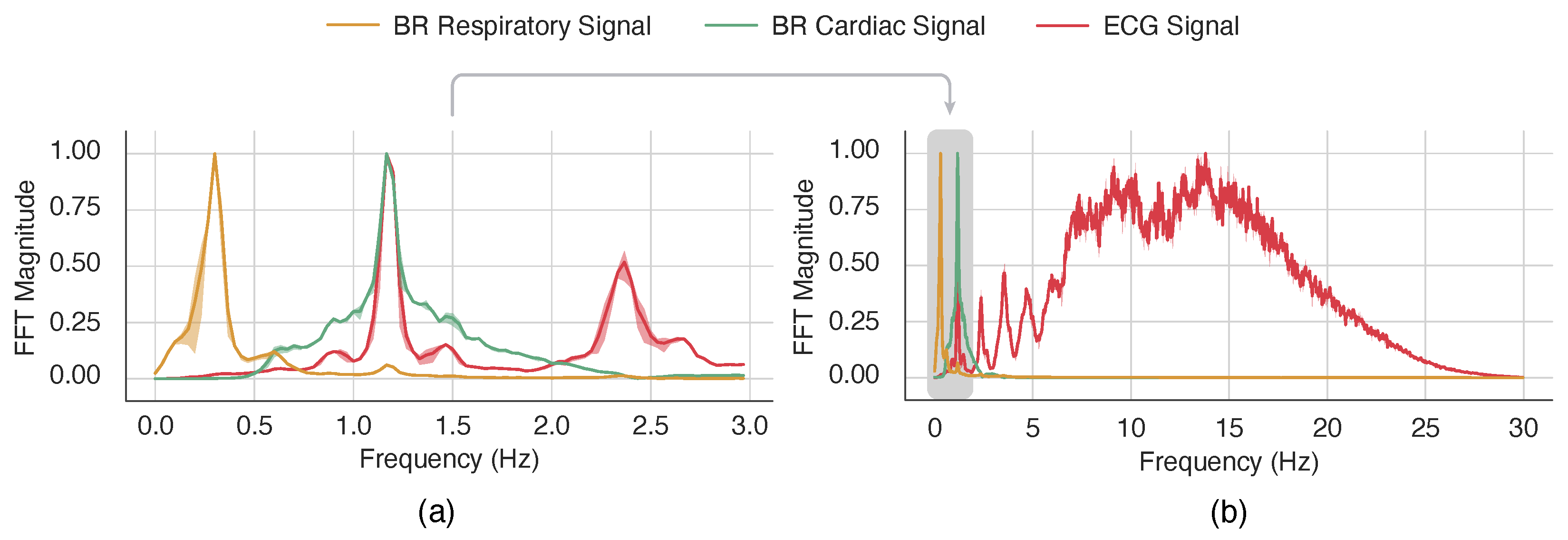

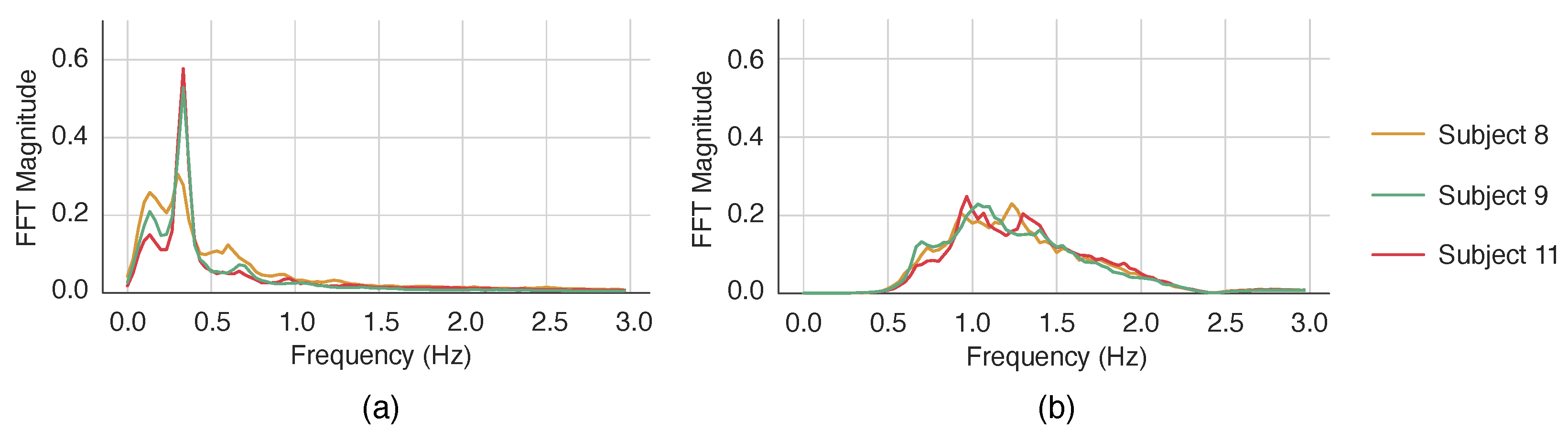

4.2. Physiological Signals Extraction

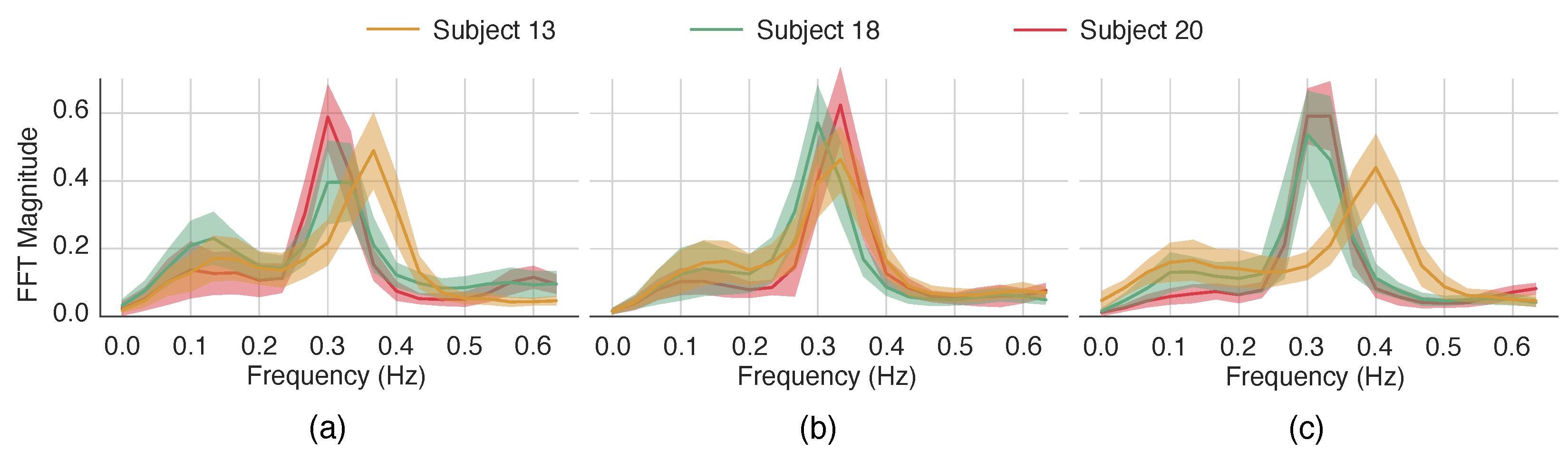

4.3. Feature Selection

4.4. Classification

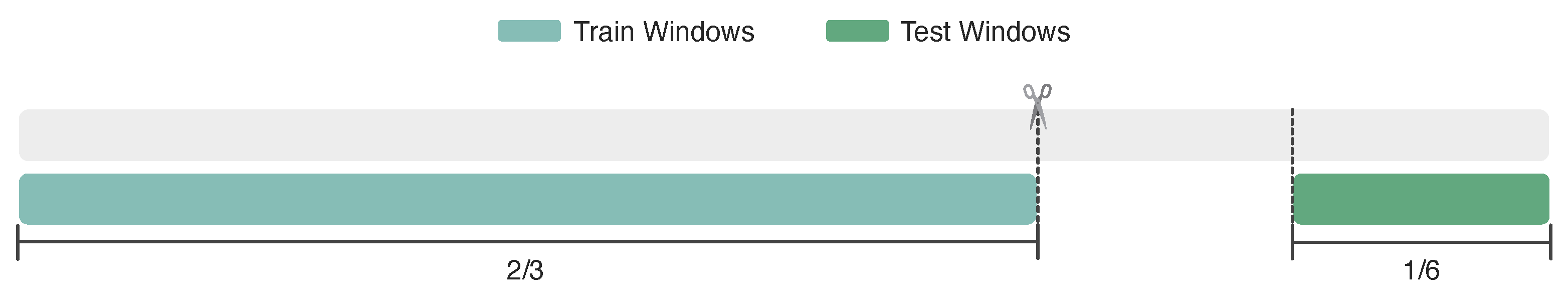

- Scenario S1–Within each session: The BR and ECG recordings from each session, for each subject, were divided into two parts: a training part and a testing part. The training and testing windows comprise subsets of the recordings, using the first portion of the recordings for training and the last portion for testing, as shown in Figure 9. The rationale for this partition is to ensure that the training and testing segments are not contiguous, avoiding a temporal relationship between them that could bias the result;

- Scenario S2–Between sessions: The training and testing windows come from different sessions, e.g., using session H (where happiness was induced) for training and session F (fear being the intended emotion) for testing. This scenario makes it possible to infer the effects of time and emotion variability;

- Scenario S3–Across sessions: Two different sessions are used to train the classifier and the remaining one is used for testing, e.g., using sessions H and F for training and session N (where there were no particular stimuli) for testing. By doing so, the classifier is trained on windows from multiple days, which may improve the performance.
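As an illustration of the S1 partition, the following minimal sketch takes the first part of a session's windows for training and the last part for testing, leaving a gap between them. The function name `split_within_session` and the parameters `train_frac` and `test_frac` are hypothetical, and the fractions used in the example are placeholders rather than the values used in this work.

```python
def split_within_session(windows, train_frac, test_frac):
    """Split one session's windows into non-contiguous train and test parts.

    The first `train_frac` of the windows go to training and the last
    `test_frac` go to testing; anything in between is discarded as a gap.
    Both fractions are hypothetical parameters, not values from the paper.
    """
    if train_frac <= 0 or test_frac <= 0 or train_frac + test_frac > 1:
        raise ValueError("fractions must be positive and sum to at most 1")
    n = len(windows)
    n_train = int(n * train_frac)
    n_test = int(n * test_frac)
    return windows[:n_train], windows[n - n_test:]


# Placeholder data: 20 consecutive window indices from a single session.
windows = list(range(20))
train, test = split_within_session(windows, train_frac=0.5, test_frac=0.25)
print(train)  # windows 0..9
print(test)   # windows 15..19, separated from training by windows 10..14
```

Whenever the two fractions sum to less than one, the discarded middle segment keeps the training and testing windows from being adjacent in time.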

5. Results and Discussion

5.1. Scenario S1–Within Session

5.2. Scenario S2–Between Sessions Evaluation: Single Training Session

- Using the entirety of session N for training and session H or F for testing, separately;

- Session H for training and each of the others for testing, separately;

- Finally, session F for training and each of the remaining sessions for testing, separately.
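The combinations listed above are all ordered pairs of distinct sessions. A minimal sketch of how they could be enumerated is given below; the session labels N, H and F follow the text, while the helper name `between_session_pairs` is hypothetical.

```python
from itertools import permutations

SESSIONS = ("N", "H", "F")  # neutral, happiness, fear


def between_session_pairs(sessions=SESSIONS):
    """Return every ordered (train, test) pair of distinct sessions."""
    return list(permutations(sessions, 2))


for train, test in between_session_pairs():
    print(f"{train}-{test}")  # N-H, N-F, H-N, H-F, F-N, F-H
```

With three sessions this yields six train-test pairs, one for each way of choosing a single training session and a different testing session.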

5.3. Scenario S3–Across Sessions Evaluation: Multiple Training Sessions

- The data from the sessions where neutral and happy emotions were stimulated were used for training the classifier, and the fear session was used for testing;

- The data from the sessions where the intended emotions were neutral and fear were used for training the classifier, and the happy session was used for testing;

- Finally, the happiness and fear sessions were used as the training set, and the neutral session was used for testing.
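The pooling of two sessions for training, with the third held out for testing, can be sketched as follows. This is only an illustration under stated assumptions: the function name `across_session_splits`, the dictionary layout and the dummy window identifiers are hypothetical, and the sketch assumes exactly three sessions.

```python
from itertools import combinations


def across_session_splits(windows_by_session):
    """Yield (label, train, test) with two sessions pooled for training.

    Assumes exactly three sessions; the one not in the training pair
    is held out for testing.
    """
    sessions = list(windows_by_session)
    for pair in combinations(sessions, 2):
        (held_out,) = [s for s in sessions if s not in pair]
        train = [w for s in pair for w in windows_by_session[s]]
        test = list(windows_by_session[held_out])
        yield f"{''.join(pair)}-{held_out}", train, test


# Dummy window identifiers standing in for feature vectors.
data = {"N": ["n1", "n2"], "H": ["h1", "h2"], "F": ["f1", "f2"]}
for label, train, test in across_session_splits(data):
    print(label, train, test)
```

The labels produced for three sessions N, H and F are NH-F, NF-H and HF-N, in that order.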

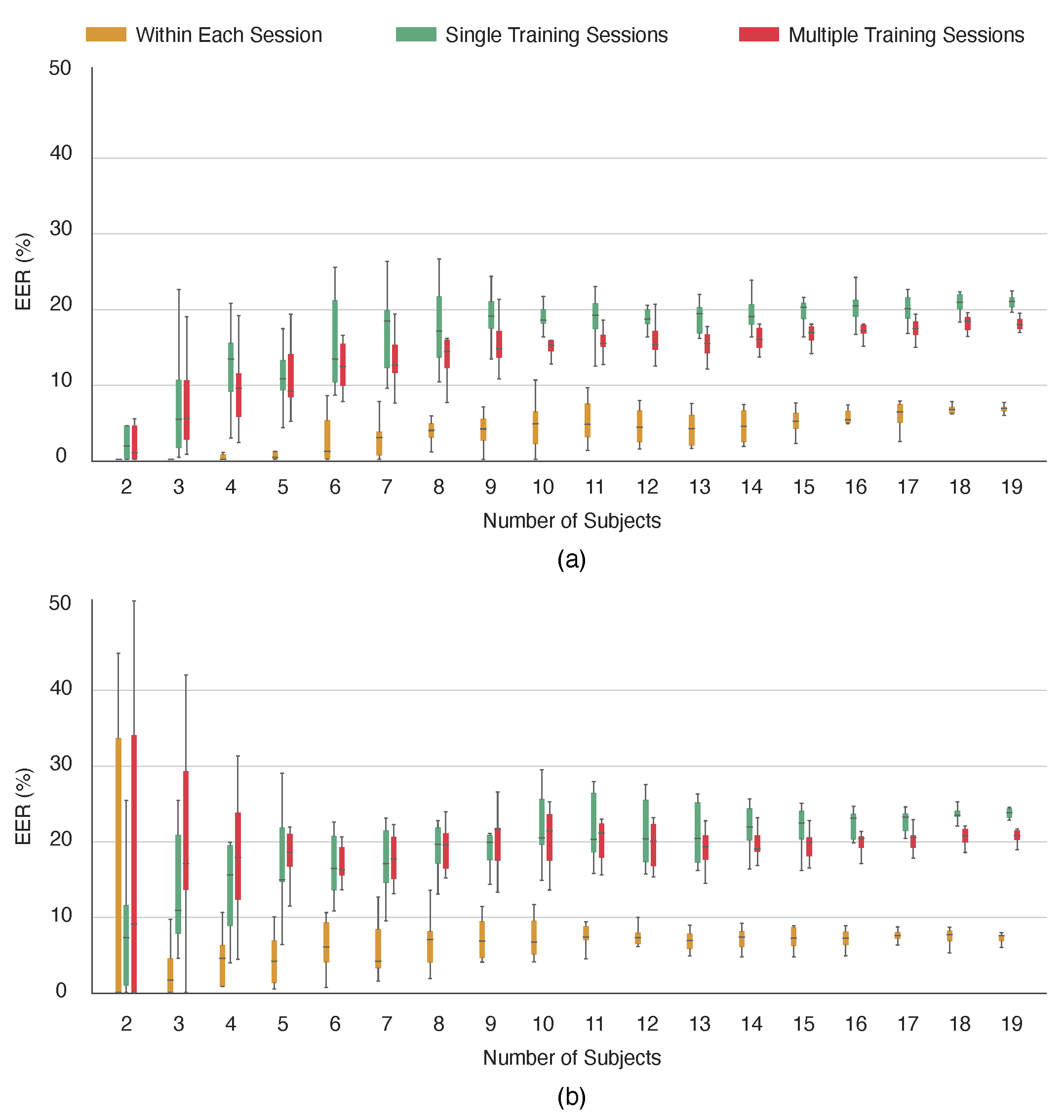

5.4. Sensitivity to the Number of Subjects

5.5. Final Remarks

6. Conclusions

- The study of long-term permanence, possibly by conducting a trial lasting a few weeks or even months, with no emotion induced;

- Further study of emotion variability, to better understand the impact of this variable on the biometric template obtained, one option being the use of a broader set of emotions;

- The analysis of the impact that distance has on the biometric recognition accuracy, possibly by collecting data from the same subject at different distances, since in real-world conditions the subjects may not be confined to a single 3D volume;

- The consideration of motion artefacts, since BR sensors are vulnerable to movement, and the evaluation of how these impact the biometric template and, consequently, the classifier’s performance;

- The understanding of the feature space, in particular determining which features are specific to emotion variation or possess temporal locality characteristics, which may be crucial from an authentication standpoint.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BR | Bio-Radar |
| CW | Continuous Wave |
| CDC | Complex Direct Current |
| CNN | Convolutional Neural Network |
| DWT | Discrete Wavelet Transform |
| ECG | Electrocardiogram |
| EER | Equal Error Rate |
| FAR | False Acceptance Rate |
| FRR | False Rejection Rate |
| FFT | Fast Fourier Transform |
| FIR | Finite Impulse Response |
| NN | Nearest Neighbour |
| ROC | Receiver Operating Characteristic |
| SVM | Support Vector Machine |

References

1. Prabhakar, S.; Pankanti, S.; Jain, A. Biometric recognition: Security and privacy concerns. IEEE Secur. Priv. 2003, 1, 33–42.

2. Jain, A.K.; Bolle, R.; Pankanti, S. Biometrics: Personal Identification in Networked Society; Kluwer Academic Publishers: Norwell, MA, USA, 2002; ISBN 978-0-7923-8345-1.

3. Hadid, A. Face Biometrics under Spoofing Attacks: Vulnerabilities, Countermeasures, Open Issues, and Research Directions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Columbus, OH, USA, 23–28 June 2014.

4. Noureddine, B.; Naït-ali, A.; Fournier, R.; Bereksi Reguig, F. ECG Based Human Authentication using Wavelets and Random Forests. Int. J. Cryptogr. Inf. Secur. 2012, 2, 1–11.

5. Matsumoto, T.; Matsumoto, H.; Yamada, K.; Hoshino, S. Impact of artificial “gummy” fingers on fingerprint systems. In Proceedings of the Optical Security and Counterfeit Deterrence Techniques IV, San Jose, CA, USA, 23–25 January 2002; Volume 4677, pp. 275–289.

6. Phillips, P.; Martin, A.; Wilson, C.; Przybocki, M. An introduction to evaluating biometric systems. Computer 2000, 33, 56–63.

7. Singh, Y.N.; Gupta, P. ECG to Individual Identification. In Proceedings of the IEEE Second International Conference on Biometrics: Theory, Applications and Systems, Washington, DC, USA, 29 September–1 October 2008; pp. 1–8.

8. Ribeiro Pinto, J.; Cardoso, J.S.; Lourenço, A. Evolution, Current Challenges, and Future Possibilities in ECG Biometrics. IEEE Access 2018, 6, 34746–34776.

9. Gouveia, C.; Albuquerque, D.; Vieira, J.; Pinho, P. Dynamic digital signal processing algorithm for vital sign extraction in continuous-wave radars. Remote Sens. 2021, 13, 4079.

10. Rissacher, D.; Galy, D. Cardiac radar for biometric identification using nearest neighbour of continuous wavelet transform peaks. In Proceedings of the IEEE International Conference on Identity, Security and Behavior Analysis (ISBA), Hong Kong, China, 23–25 March 2015; pp. 1–6.

11. Lin, F.; Song, C.; Zhuang, Y.; Xu, W.; Li, C.; Ren, K. Cardiac Scan: A Non-Contact and Continuous Heart-Based User Authentication System. In Proceedings of the Annual International Conference on Mobile Computing and Networking, San Jose, CA, USA, 23–25 January 2017; pp. 315–328.

12. Shi, K.; Will, C.; Weigel, R.; Koelpin, A. Contactless person identification using cardiac radar signals. In Proceedings of the IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, TX, USA, 14–17 May 2018; pp. 1–6.

13. Cao, P.; Xia, W.; Li, Y. Heart ID: Human Identification Based on Radar Micro-Doppler Signatures of the Heart Using Deep Learning. Remote Sens. 2019, 11, 1220.

14. Rahman, A.; Yavari, E.; Lubecke, V.M.; Lubecke, O.B. Noncontact Doppler radar unique identification system using neural network classifier on life signs. In Proceedings of the IEEE Topical Conference on Biomedical Wireless Technologies, Networks, and Sensing Systems (BioWireleSS), Austin, TX, USA, 24–27 January 2016; pp. 46–48.

15. Rahman, A.; Lubecke, V.M.; Boric-Lubecke, O.; Prins, J.H.; Sakamoto, T. Doppler Radar Techniques for Accurate Respiration Characterization and Subject Identification. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 350–359.

16. Islam, S.M.M.; Sylvester, A.; Orpilla, G.; Lubecke, V.M. Respiratory Feature Extraction for Radar-Based Continuous Identity Authentication. In Proceedings of the IEEE Radio and Wireless Symposium (RWS), San Antonio, TX, USA, 26–29 January 2020; pp. 119–122.

17. Islam, S.M.M.; Rahman, A.; Yavari, E.; Baboli, M.; Boric-Lubecke, O.; Lubecke, V.M. Identity Authentication of OSA Patients Using Microwave Doppler radar and Machine Learning Classifiers. In Proceedings of the IEEE Radio and Wireless Symposium (RWS), San Antonio, TX, USA, 26–29 January 2020; pp. 251–254.

18. Islam, S.M.M.; Rahman, A.; Prasad, N.; Boric-Lubecke, O.; Lubecke, V.M. Identity Authentication System using a Support Vector Machine (SVM) on Radar Respiration Measurements. In Proceedings of the ARFTG Microwave Measurement Conference (ARFTG), Boston, MA, USA, 7 June 2019; pp. 1–5.

19. Komeili, M.; Armanfard, N.; Hatzinakos, D. Liveness Detection and Automatic Template Updating Using Fusion of ECG and Fingerprint. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1810–1822.

20. da Silva, H.P. Biomedical Sensors as Invisible Doctors. In Regenerative Design in Digital Practice: A Handbook for the Built Environment; Eurac Research: Bolzano, Italy, 2019; pp. 322–329.

21. Hu, W.; Zhao, Z.; Wang, Y.; Zhang, H.; Lin, F. Noncontact Accurate Measurement of Cardiopulmonary Activity Using a Compact Quadrature Doppler Radar Sensor. IEEE Trans. Biomed. Eng. 2014, 61, 725–735.

22. Silva, A.S.; Correia, M.V.; de Melo, F.; da Silva, H.P. Identity Recognition in Sanitary Facilities Using Invisible Electrocardiography. Sensors 2022, 22, 4201.

23. Schires, E.; Georgiou, P.; Lande, T.S. Vital Sign Monitoring Through the Back Using an UWB Impulse Radar With Body Coupled Antennas. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 292–302.

24. Hui, X.; Kan, E.C. Seat Integration of RF Vital-Sign Monitoring. In Proceedings of the IEEE MTT-S International Microwave Biomedical Conference (IMBioC), Nanjing, China, 6–8 May 2019; Volume 1, pp. 1–3.

25. Lourenço, A.; Alves, A.P.; Carreiras, C.; Duarte, R.P.; Fred, A. CardioWheel: ECG biometrics on the steering wheel. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Porto, Portugal, 7–11 September 2015; pp. 267–270.

26. Onoja, A.E.; Oluwadamilola, A.M.; Ajao, L.A. Embedded system based radio detection and ranging (RADAR) system using Arduino and ultra-sonic sensor. Am. J. Embed. Syst. Appl. 2017, 5, 7–12.

27. Boric-Lubecke, O.; Lubecke, V.; Droitcour, A.; Park, B.K.; Singh, A. Doppler Radar Physiological Sensing; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2016; pp. 1–288.

28. Chen, V. The Micro-Doppler Effect in Radar; Artech House Radar Library, Artech House: Boston, MA, USA, 2019.

29. Banks, D. Continuous wave (CW) radar. In Proceedings of the Electronics and Aerospace Systems Convention, Washington, DC, USA, 29 September–1 October 1975.

30. Lin, J.C. Microwave sensing of physiological movement and volume change: A review. Bioelectromagnetics 1992, 13, 557–565.

31. Gouveia, C.; Albuquerque, D.; Pinho, P.; Vieira, J. Evaluation of Heartbeat Signal Extraction Methods Using a 5.8 GHz Doppler Radar System in a Real Application Scenario. IEEE Sensors J. 2022, 22, 7979–7989.

32. Biel, L.; Pettersson, O.; Philipson, L.; Wide, P. ECG analysis: A new approach in human identification. IEEE Trans. Instrum. Meas. 2001, 50, 808–812.

33. Israel, S.; Irvine, J.; Cheng, A.; Wiederhold, M.; Wiederhold, B. ECG to identify individuals. Pattern Recognit. 2005, 38, 133–142.

34. da Silva, H.P.; Fred, A.; Lourenço, A.; Jain, A.K. Finger ECG signal for user authentication: Usability and performance. In Proceedings of the IEEE International Conference on Biometrics: Theory, Applications and Systems (BTAS), Arlington, VA, USA, 23 September–2 October 2013; pp. 1–8.

35. Hassan, Z.; Gilani, S.; Jamil, M. Review of Fiducial and Non-Fiducial Techniques of Feature Extraction in ECG based Biometric Systems. Indian J. Sci. Technol. 2016, 9, 94841.

36. Odinaka, I.; Lai, P.H.; Kaplan, A.D.; O’Sullivan, J.A.; Sirevaag, E.J.; Kristjansson, S.D.; Sheffield, A.K.; Rohrbaugh, J.W. ECG biometrics: A robust short-time frequency analysis. In Proceedings of the IEEE International Workshop on Information Forensics and Security, Seattle, WA, USA, 12–15 December 2010; pp. 1–6.

37. Coutinho, D.P.; Fred, A.L.; Figueiredo, M.A. One-lead ECG-based personal identification using Ziv-Merhav cross parsing. In Proceedings of the International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 3858–3861.

38. Hu, F.; Singh, A.; Boric-Lubecke, O.; Lubecke, V. Medical Sensing Using Doppler Radar. In Telehealthcare Computing and Engineering Principles and Design; CRC Press LLC: Boca Raton, FL, USA, 2013; Chapter 10; pp. 281–301.

39. Petkie, D.T.; Bryan, E.; Benton, C.; Phelps, C.; Yoakum, J.; Rogers, M.; Reed, A. Remote respiration and heart rate monitoring with millimeter-wave/terahertz radars. In Millimetre Wave and Terahertz Sensors and Technology; Krapels, K.A., Salmon, N.A., Eds.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2008; Volume 7117.

40. Zhou, Q.; Liu, J.; Host-Madsen, A.; Boric-Lubecke, O.; Lubecke, V. Detection of Multiple Heartbeats Using Doppler Radar. In Proceedings of the IEEE International Conference on Acoustics Speech and Signal Processing, Toulouse, France, 14–19 May 2006; Volume 2.

41. Droitcour, A.D. Non-Contact Measurement of Heart and Respiration Rates with a Single-Chip Microwave Doppler Radar. Ph.D. Thesis, Stanford University, Stanford, CA, USA, 2006.

42. Jain, A.K.; Ross, A.; Prabhakar, S. An Introduction to Biometric Recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20.

43. Pinto, G.; Carvalho, J.M.; Barros, F.; Soares, S.C.; Pinho, A.J.; Brás, S. Multimodal Emotion Evaluation: A Physiological Model for Cost-Effective Emotion Classification. Sensors 2020, 20, 3510.

44. Barros, F.; Figueiredo, C.; Brás, S.; Carvalho, J.M.; Soares, S.C. Multidimensional assessment of anxiety through the State-Trait Inventory for Cognitive and Somatic Anxiety (STICSA): From dimensionality to response prediction across emotional contexts. PLoS ONE 2022, 17, 1–26.

45. Jang, Y.I.; Sim, J.Y.; Yang, J.R.; Kwon, N.K. The Optimal Selection of Mother Wavelet Function and Decomposition Level for Denoising of DCG Signal. Sensors 2021, 21, 1851.

46. Kathirvel, P.; Sabarimalai Manikandan, M.; Prasanna, S.R.M.; Soman, K.P. An Efficient R-peak Detection Based on New Nonlinear Transformation and First-Order Gaussian Differentiator. Cardiovasc. Eng. Technol. 2011, 2, 408–425.

47. Wang, Y.; Agrafioti, F.; Hatzinakos, D.; Plataniotis, K.N. Analysis of Human Electrocardiogram for Biometric Recognition. EURASIP J. Adv. Signal Process. 2007, 2008, 148658.

48. Hejazi, M.; Al-Haddad, S.A.R.; Singh, Y.; Hashim, S.; Aziz, A. ECG biometric authentication based on non-fiducial approach using kernel methods. Digit. Signal Process. 2016, 52, 8.

49. Chamatidis, I.; Katsika, A.; Spathoulas, G. Using deep learning neural networks for ECG based authentication. In Proceedings of the International Carnahan Conference on Security Technology (ICCST), Madrid, Spain, 23–26 October 2017; pp. 1–6.

In the following table, # shows the number of subjects, and the columns Acc % and EER % display the accuracy and the equal error rate in percentage.


| Year | Feature | Classifier | # | Acc % | EER % | Refs. |
|---|---|---|---|---|---|---|
| 2015 | DWT | k-NN 2 | 26 | 19.0 | – | [10] |
| 2016 | Breathing energy, frequency and patterns | Neural Network | 3 | 92.13 | – | [14] |
| 2017 | Geometric Features | SVM | 78 | 98.61 | 4.42 | [11] |
| 2018 | Local Heartbeat | SVM | 4 | 94.6 | – | [12] |
| 2018 | Spectrogram | CNN 3 | 4 | 98.5 | – | [13] |
| 2018 | Various 1 | k-NN | 6 | 95.0 | – | [15] |
| 2019 | FFT | SVM | 6 | 100 | – | [18] |
| 2020 | Various 1 | SVM | 10 | 92 | – | [16] |
| 2020 | Breathing energy, frequency and patterns | k-NN | 5 | 93.75 | – | [17] |
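Since the table reports the EER alongside accuracy, a minimal sketch of how an EER can be estimated from match scores may help interpret that column. This is a generic threshold sweep, not necessarily the procedure used in this work or in the cited works; the function name `equal_error_rate` and the example scores are hypothetical.

```python
def equal_error_rate(genuine, impostor):
    """Estimate the EER from genuine and impostor similarity scores.

    A claim is accepted when its score is >= the threshold. Every observed
    score is tried as a threshold, and the EER is taken as the mean of FAR
    and FRR at the threshold where the two rates are closest.
    Both score lists are assumed to be non-empty.
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)  # false acceptances
        frr = sum(s < t for s in genuine) / len(genuine)     # false rejections
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2)
    return best[1]


# Hypothetical scores: higher means more similar to the claimed identity.
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.6, 0.3, 0.2, 0.1]
print(equal_error_rate(genuine, impostor))  # 0.25
```

In this example the threshold 0.6 accepts one of the four impostor scores and rejects one of the four genuine scores, so FAR and FRR are both 25%.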

| | BR R | BR C | BR RC | ECG |
|---|---|---|---|---|
| N-N | | | | |
| H-H | | | | |
| F-F | | | | |

| | BR R | BR C | BR RC | ECG |
|---|---|---|---|---|
| N-H | | | | |
| N-F | | | | |
| H-N | | | | |
| H-F | | | | |
| F-N | | | | |
| F-H | | | | |

| | BR R | BR C | BR RC | ECG |
|---|---|---|---|---|
| NH-F | | | | |
| NF-H | | | | |
| HF-N | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Louro da Silva, M.; Gouveia, C.; Albuquerque, D.F.; Plácido da Silva, H. Radar-Based Invisible Biometric Authentication. Information 2024, 15, 44. https://doi.org/10.3390/info15010044
