Identifying Smartphone Users Based on Activities in Daily Living Using Deep Neural Networks

Abstract

1. Introduction

- Creating a new smartphone-based user identification framework called DeepUserIden, which utilizes a DL model to fully automate all steps of identifying individuals based on assessing their activities;

- Putting forward a novel deep residual network named DeepResNeXt that models temporal relationships within attribute representations extracted using convolutional operations;

- Performing experiments demonstrating the superior accuracy and F1-score of the proposed DL approach over existing baseline DL methods using publicly available benchmark datasets.
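The aggregation idea behind a ResNeXt-style residual block can be sketched in a few lines. The example below is a single-channel toy under stated assumptions: the helper names (`conv1d_same`, `resnext_block`), the kernels, and the choice of ReLU after each branch are illustrative and are not the layer configuration of DeepResNeXt, which is not given in this text. It only shows the general pattern of summing several parallel convolutional branches and adding the result to an identity shortcut.

```python
def conv1d_same(x, kernel):
    """Slide an odd-length kernel over a 1D sequence with zero padding.

    As in most deep learning frameworks, the kernel is not flipped.
    """
    half = len(kernel) // 2
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t + k - half
            if 0 <= idx < len(x):
                acc += w * x[idx]
        out.append(acc)
    return out


def relu(seq):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in seq]


def resnext_block(x, branch_kernels):
    """Compute relu(x + sum_i T_i(x)), where each T_i is conv followed by ReLU.

    branch_kernels holds one hypothetical kernel per parallel branch;
    the number of branches plays the role of the block's cardinality.
    """
    total = [0.0] * len(x)
    for kernel in branch_kernels:
        branch = relu(conv1d_same(x, kernel))
        total = [a + b for a, b in zip(total, branch)]
    # Identity shortcut: add the unmodified input back before the final ReLU.
    return relu([a + b for a, b in zip(x, total)])
```

With no branches the block reduces to the shortcut alone, which is the property that lets residual networks be stacked deeply without losing the input signal.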

2. Related Works

2.1. Sensor-Based User Identification

2.2. DL Approaches in User Identification

3. The DeepUserIden Framework

3.1. Data Collection

3.2. Pre-Processing Process

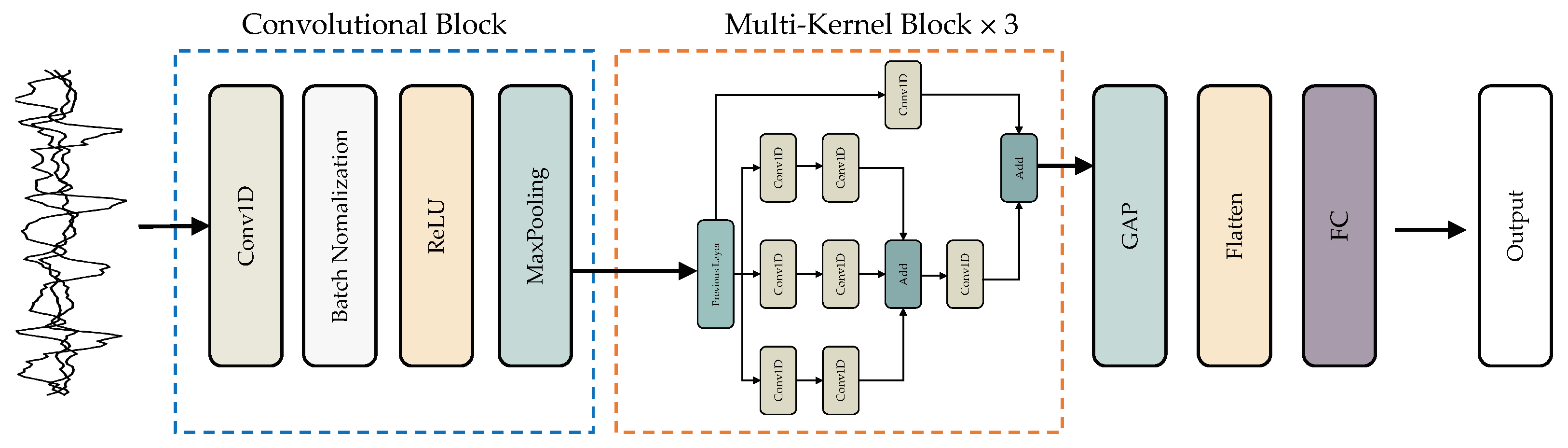

3.3. The Proposed DeepResNeXt Architecture

4. Experiments and Results

4.1. Environmental Configuration

4.2. Experiment Setting

- Experiment I: DL models were trained using accelerometer and gyroscope data from the wrist location;

- Experiment II: accelerometer and gyroscope data from the arm location were used to train DL models;

- Experiment III: DL models were trained using accelerometer and gyroscope data from the belt location;

- Experiment IV: accelerometer and gyroscope data from the left pocket location were utilized for training DL models;

- Experiment V: DL models were trained using accelerometer and gyroscope data from the right pocket location.
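The five experiment settings differ only in which placement's accelerometer and gyroscope channels feed the models. A minimal sketch of that selection follows, assuming tri-axial sensors and a hypothetical column naming scheme of `<location>_<sensor>_<axis>`; neither the naming nor the helper functions come from the source.

```python
# Hypothetical channel naming: "<location>_<sensor>_<axis>".
LOCATIONS = ["wrist", "arm", "belt", "left_pocket", "right_pocket"]
SENSORS = ["acc", "gyro"]   # accelerometer and gyroscope
AXES = ["x", "y", "z"]      # assumes tri-axial sensors

# Experiments I-V map onto the five placements in the order listed above.
EXPERIMENTS = dict(zip(["I", "II", "III", "IV", "V"], LOCATIONS))


def experiment_channels(location):
    """Return the six channel names used for one placement experiment."""
    if location not in LOCATIONS:
        raise ValueError(f"unknown location: {location}")
    return [f"{location}_{s}_{a}" for s in SENSORS for a in AXES]


def select(sample, channels):
    """Pick the listed channels from one sample (a dict of name -> reading)."""
    return [sample[name] for name in channels]
```

For example, `experiment_channels(EXPERIMENTS["III"])` yields the six belt channels, and `select` extracts them from a sample that contains readings for all placements.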

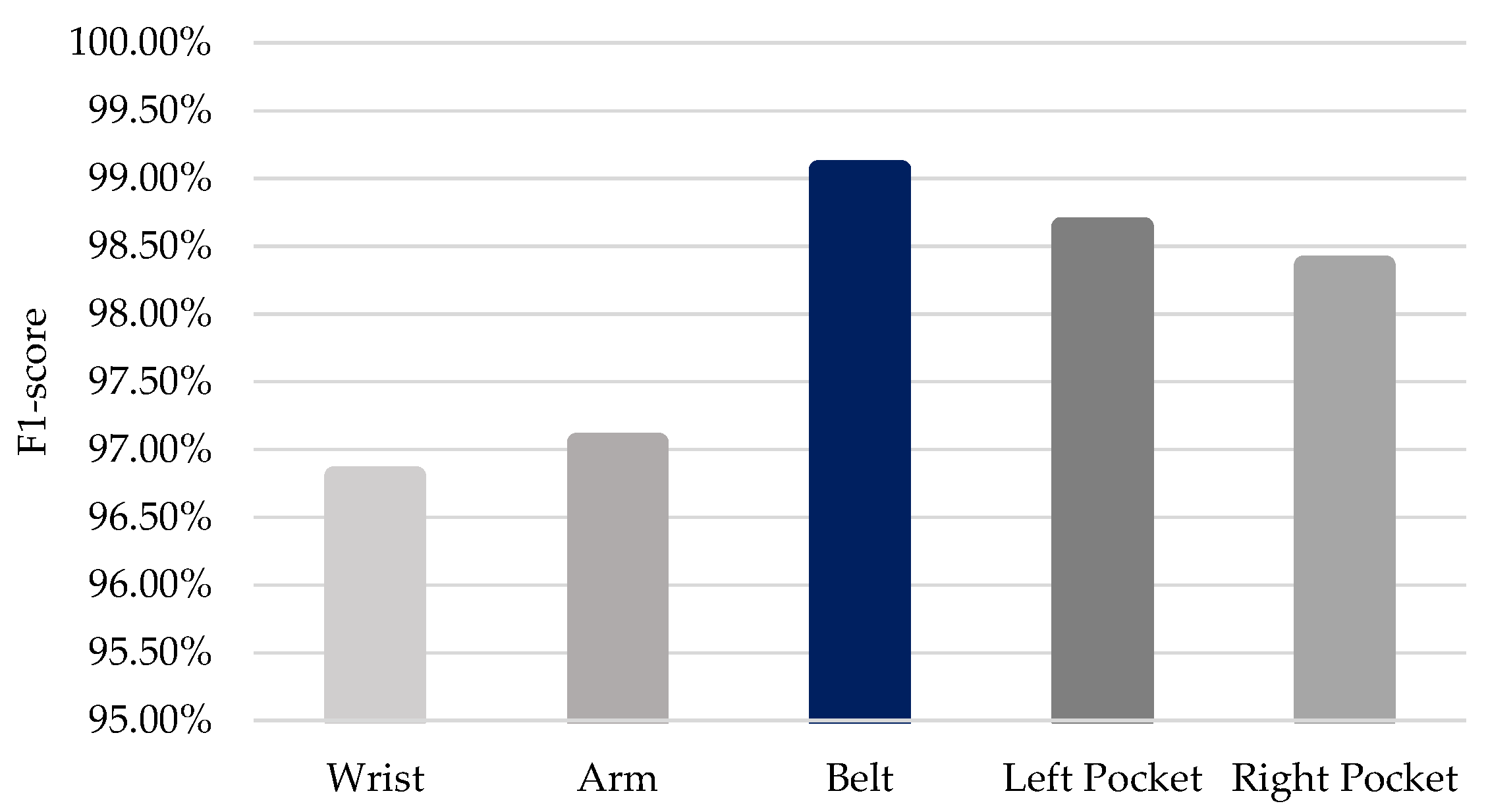

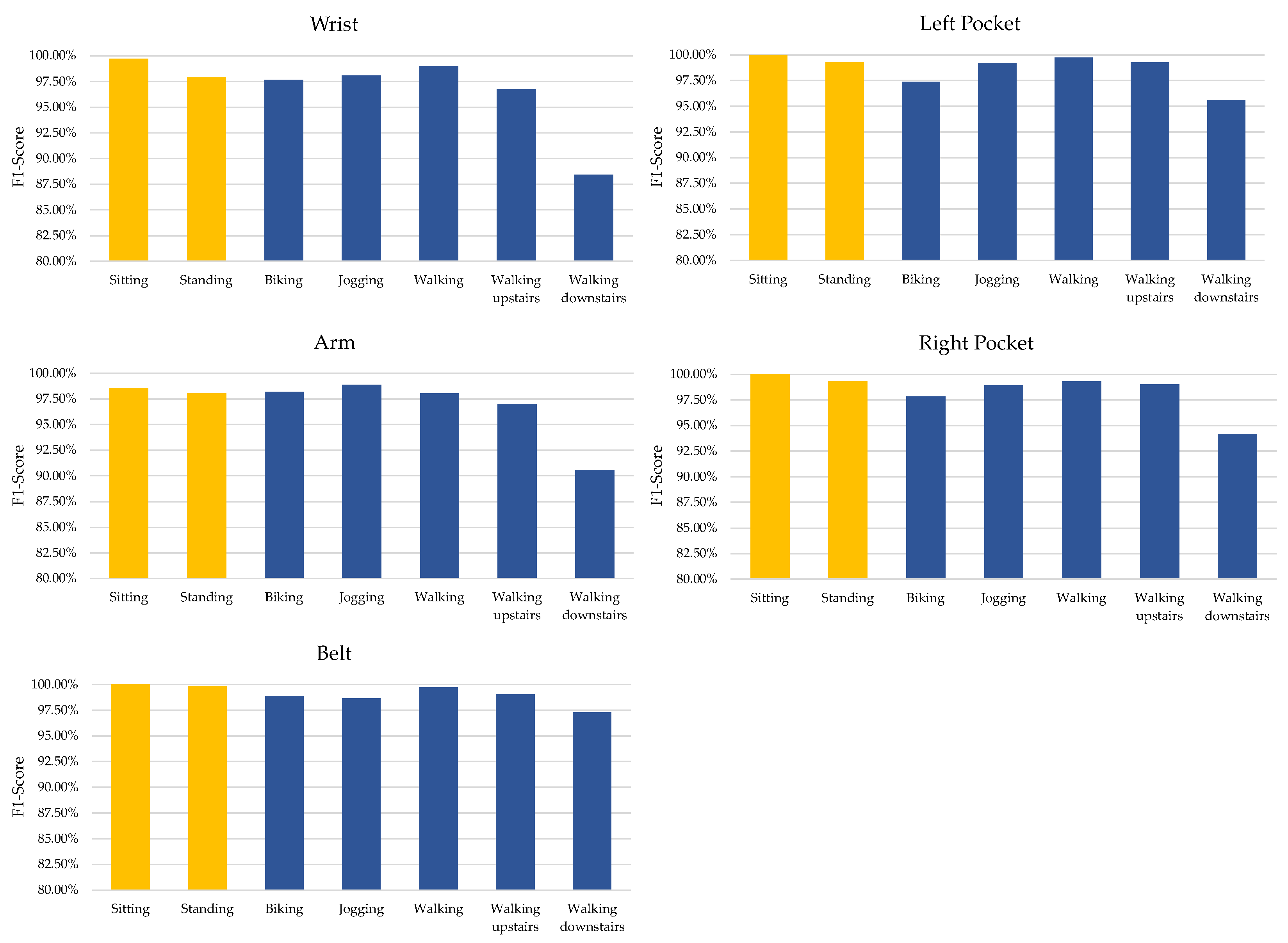

4.3. Experimental Results

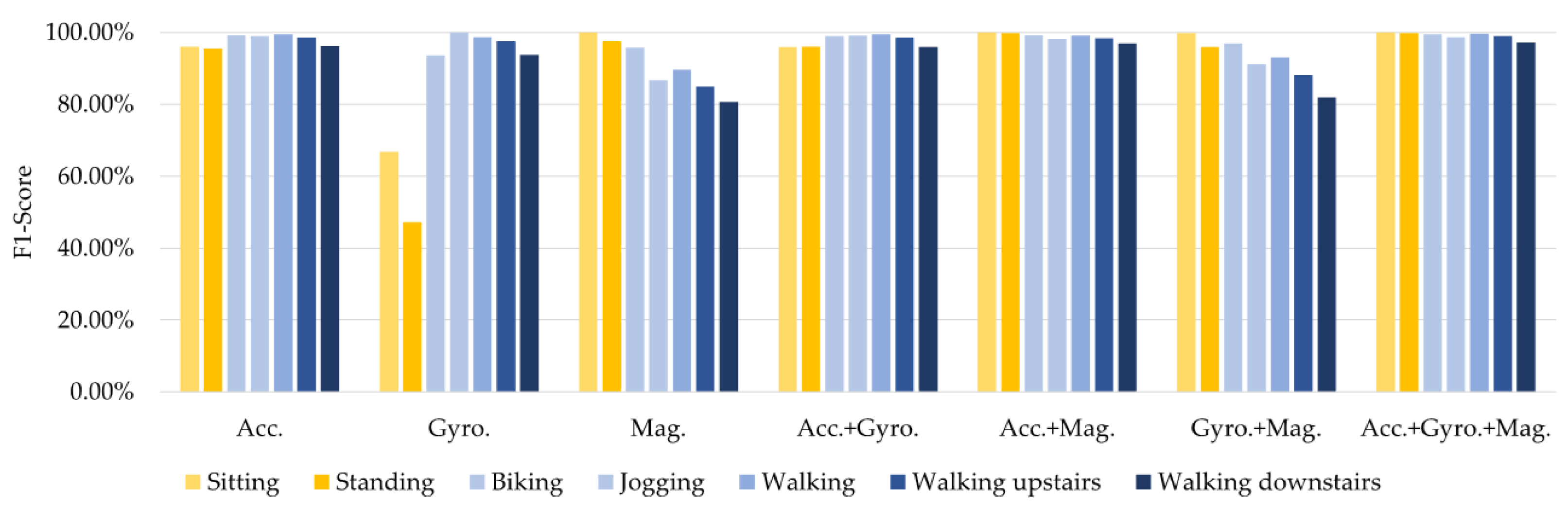

4.4. Additional Experiments

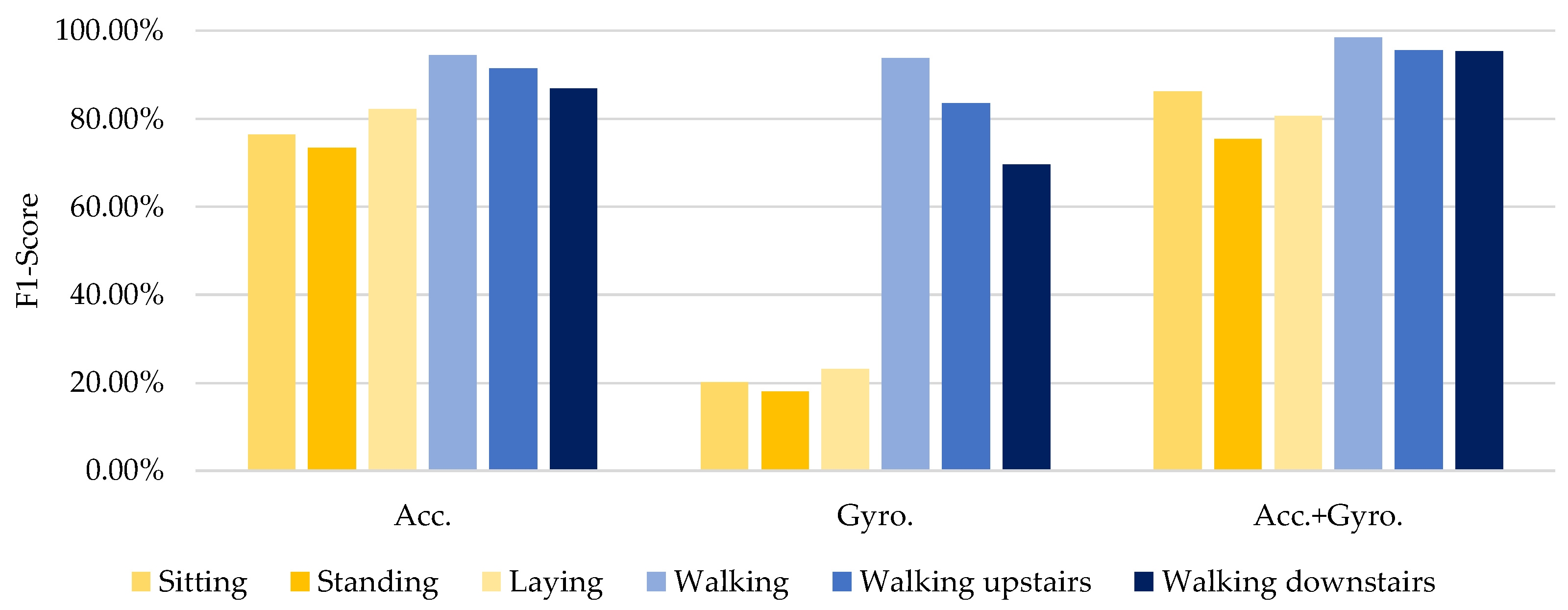

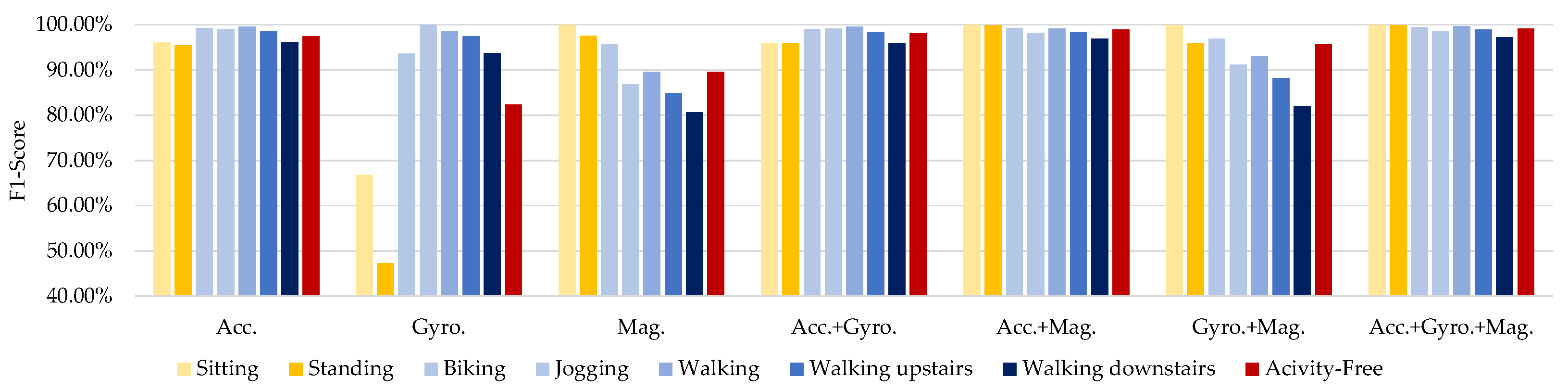

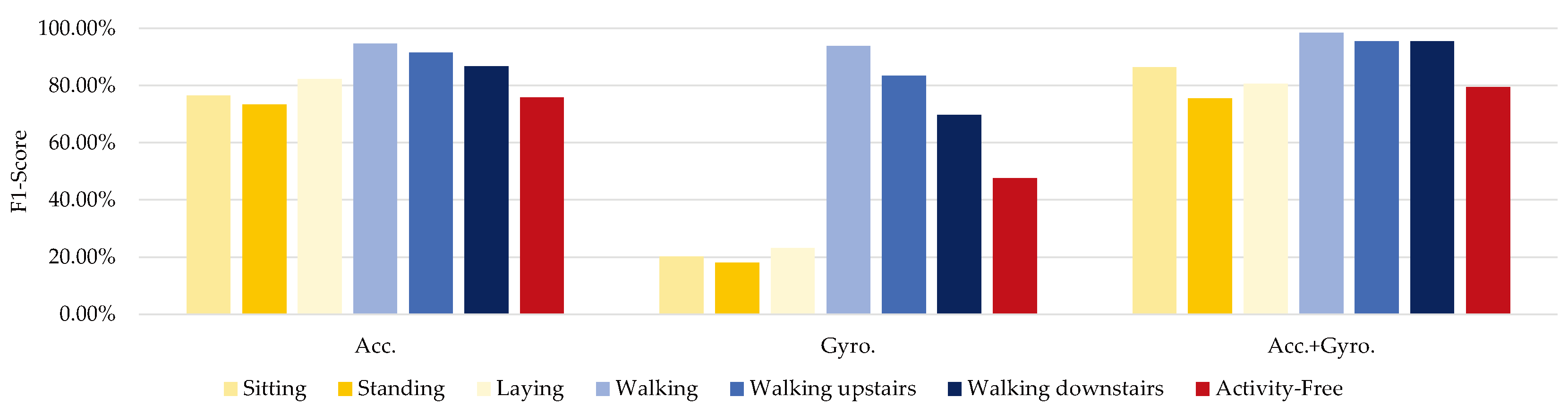

- The first experiment scenario utilized accelerometer sensor data exclusively. This single motion modality input trained and tested the model using dynamic measurements of bodily movements and actions;

- The second scenario relied exclusively on gyroscope data to capture rotational motions. Translational accelerations from the accelerometer were omitted, so only orientation changes served as input;

- The third scenario integrated both accelerometer and gyroscope streams as inputs. This multimodal fusion provided multivariate time-series data capturing rotational orientations, bodily movements, and dynamics. The richer input enabled a more comprehensive analysis of the system’s capability to extract distinctive traits from diverse signal types during unrestrained motions.
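The three scenarios amount to choosing a subset of input channels from each windowed sample. The sketch below illustrates this under an assumed channel layout (accelerometer x, y, z followed by gyroscope x, y, z); the layout and the `scenario_window` helper are illustrative and not taken from the source.

```python
# Assumed channel order within one time step; illustrative only.
CHANNEL_INDEX = {"acc": (0, 1, 2), "gyro": (3, 4, 5)}

# Sensors used in each of the three scenarios described above.
SCENARIOS = {
    1: ("acc",),
    2: ("gyro",),
    3: ("acc", "gyro"),
}


def scenario_window(window, scenario):
    """Keep only the channels used in the given scenario.

    window: list of time steps, each a list of six readings.
    """
    keep = [i for sensor in SCENARIOS[scenario] for i in CHANNEL_INDEX[sensor]]
    return [[step[i] for i in keep] for step in window]
```

Keeping the selection separate from the model means the same training code can be reused across scenarios, with only the input width changing from three to six channels.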

- Using only accelerometer data (Scenario 1), the model achieves 82–95% accuracy for walking, climbing stairs, and lying down. Accuracy drops to 75–78% for sitting and standing, which involve little motion. Static postures likely offer fewer distinguishing accelerometer features;

- With only gyroscope inputs (Scenario 2), identification accuracy varies dramatically across activity types. Walking reaches 94% accuracy, given plentiful distinguishing motion cues. Static postures fare far worse, at 23% accuracy for sitting. Stationary positions likely produce too little rotational signal for a gyroscope alone to characterize users uniquely;

- Fusing accelerometer and gyroscope data (Scenario 3) raises accuracy to 95–98% for the three walking activities, above either sensor alone. Accuracy for static postures remains lower, at 77–87%. The complementary orientation and motion measurements provide richer input for characterizing user traits, likely capturing distinguishing features that are difficult to identify from a single sensor stream;

- The performance differences across sensor and activity types highlight each modality’s strengths. Gyroscopes better capture rotational motions, while accelerometers excel at overall body dynamics. Combining these complementary rotational and translational measurements creates a more comprehensive movement signature. The enriched characterization of user biomechanics and behaviors during daily tasks enables more reliable identification from sensor readings. Fusing distinct signals captures subtle distinguishing traits that individual streams miss in isolation.
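The accuracy (ACC) and F1-score (F1) figures discussed above can be computed from predicted and true user labels as sketched below. The source does not state how F1 is averaged across users, so macro-averaging is an assumption here, and the function names are illustrative.

```python
def accuracy(y_true, y_pred):
    """Percentage of samples whose predicted user matches the true user."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-user F1 scores, as a percentage.

    Macro-averaging is an assumption; the source does not specify it.
    """
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the label never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return 100.0 * sum(scores) / len(scores)
```

Reporting both metrics is useful because accuracy can look high when a few users dominate the test set, whereas macro F1 weights every user equally.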

5. Discussion

5.1. Effects of Smartphone Sensor Placement

5.2. Effects of Different Activity Types

5.3. Effects of Sensor Types

5.4. Activity-Free User Identification

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Acc. | Accelerometer |
| ACC | Accuracy (%) |
| BiGRU | Bidirectional gated recurrent unit |
| BiLSTM | Bidirectional long short-term memory |
| BiRNN | Bidirectional recurrent neural network |
| BN | Batch normalization |
| CNN | Convolutional neural network |
| Conv | Convolutional layer |
| Conv1D | One-dimensional convolutional layer |
| DL | Deep learning |
| F1 | F1-score (%) |
| GAP | Global average pooling |
| Gyro. | Gyroscope |
| GRU | Gated recurrent unit |
| HAR | Human activity recognition |
| LSTM | Long short-term memory |
| Mag. | Magnetometer |
| ML | Machine learning |
| MK | Multi-kernel |
| MP | Max-pooling |
| ReLU | Rectified linear unit layer |
| RNN | Recurrent neural network |
| UCI | University of California, Irvine |

Identification performance by activity (ACC and F1 in %), wrist placement.

| Sensor | Model | Biking ACC | Biking F1 | Jogging ACC | Jogging F1 | Sitting ACC | Sitting F1 | Standing ACC | Standing F1 | Walking ACC | Walking F1 | Walking Upstairs ACC | Walking Upstairs F1 | Walking Downstairs ACC | Walking Downstairs F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Wrist | CNN | 90.14 | 90.01 | 93.29 | 93.30 | 98.45 | 98.46 | 95.97 | 95.95 | 91.83 | 91.83 | 69.15 | 68.83 | 46.06 | 45.91 |
| Wrist | LSTM | 93.84 | 93.87 | 96.99 | 96.98 | 99.30 | 99.30 | 95.28 | 95.21 | 97.32 | 97.32 | 90.56 | 90.49 | 65.77 | 65.32 |
| Wrist | BiLSTM | 94.38 | 94.33 | 96.85 | 96.81 | 99.30 | 99.30 | 94.86 | 94.86 | 97.46 | 97.46 | 84.08 | 83.78 | 60.42 | 59.66 |
| Wrist | GRU | 97.40 | 97.38 | 97.67 | 97.66 | 99.30 | 99.30 | 97.50 | 97.47 | 98.59 | 98.58 | 93.94 | 93.85 | 80.85 | 80.81 |
| Wrist | BiGRU | 96.30 | 96.28 | 98.08 | 98.08 | 99.01 | 99.03 | 97.64 | 97.63 | 98.45 | 98.44 | 90.14 | 89.98 | 76.06 | 75.75 |
| Wrist | DeepResNeXt | 97.12 | 97.66 | 98.08 | 98.09 | 99.72 | 99.72 | 97.67 | 97.92 | 99.02 | 99.01 | 96.76 | 96.77 | 87.61 | 88.43 |

Identification performance by activity (ACC and F1 in %), arm placement.

| Sensor | Model | Biking ACC | Biking F1 | Jogging ACC | Jogging F1 | Sitting ACC | Sitting F1 | Standing ACC | Standing F1 | Walking ACC | Walking F1 | Walking Upstairs ACC | Walking Upstairs F1 | Walking Downstairs ACC | Walking Downstairs F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Arm | CNN | 82.33 | 82.20 | 91.51 | 91.43 | 98.31 | 98.30 | 96.39 | 96.38 | 86.06 | 85.99 | 74.23 | 74.10 | 58.59 | 58.20 |
| Arm | LSTM | 93.01 | 92.94 | 96.30 | 96.26 | 97.04 | 97.02 | 98.06 | 98.00 | 94.51 | 94.51 | 88.59 | 88.48 | 82.68 | 82.57 |
| Arm | BiLSTM | 92.47 | 92.37 | 96.85 | 96.82 | 97.75 | 97.69 | 96.81 | 96.81 | 94.51 | 94.55 | 84.79 | 84.78 | 77.32 | 77.21 |
| Arm | GRU | 96.99 | 96.94 | 98.36 | 98.09 | 98.59 | 98.58 | 98.06 | 97.91 | 97.89 | 97.88 | 95.63 | 95.59 | 88.03 | 87.84 |
| Arm | BiGRU | 96.71 | 96.64 | 98.08 | 98.08 | 97.75 | 97.70 | 97.36 | 97.39 | 96.62 | 96.66 | 94.79 | 94.78 | 85.07 | 85.08 |
| Arm | DeepResNeXt | 97.12 | 98.19 | 98.81 | 98.86 | 98.59 | 98.58 | 98.92 | 98.04 | 98.03 | 98.037 | 97.93 | 97.02 | 89.15 | 90.59 |

Identification performance by activity (ACC and F1 in %), belt placement.

| Sensor | Model | Biking ACC | Biking F1 | Jogging ACC | Jogging F1 | Sitting ACC | Sitting F1 | Standing ACC | Standing F1 | Walking ACC | Walking F1 | Walking Upstairs ACC | Walking Upstairs F1 | Walking Downstairs ACC | Walking Downstairs F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Belt | CNN | 95.48 | 95.41 | 92.88 | 92.85 | 99.72 | 99.72 | 98.89 | 98.87 | 98.87 | 98.87 | 93.80 | 93.80 | 88.17 | 88.09 |
| Belt | LSTM | 98.22 | 98.23 | 96.99 | 97.00 | 100.00 | 100.00 | 99.72 | 99.71 | 98.45 | 98.41 | 96.34 | 96.29 | 90.14 | 90.03 |
| Belt | BiLSTM | 97.81 | 97.76 | 97.12 | 97.14 | 100.00 | 100.00 | 99.31 | 99.30 | 99.01 | 99.00 | 94.51 | 94.45 | 87.75 | 87.58 |
| Belt | GRU | 98.36 | 98.35 | 98.49 | 98.49 | 100.00 | 100.00 | 99.86 | 99.72 | 99.01 | 99.00 | 97.75 | 97.73 | 95.49 | 95.46 |
| Belt | BiGRU | 98.77 | 98.75 | 97.81 | 97.80 | 100.00 | 100.00 | 99.72 | 99.72 | 99.15 | 99.13 | 95.35 | 95.29 | 90.85 | 90.78 |
| Belt | DeepResNeXt | 98.90 | 98.91 | 98.22 | 98.64 | 100.00 | 100.00 | 99.17 | 99.86 | 99.72 | 99.72 | 98.87 | 99.01 | 97.32 | 97.30 |

Identification performance by activity (ACC and F1 in %), left pocket placement.

| Sensor | Model | Biking ACC | Biking F1 | Jogging ACC | Jogging F1 | Sitting ACC | Sitting F1 | Standing ACC | Standing F1 | Walking ACC | Walking F1 | Walking Upstairs ACC | Walking Upstairs F1 | Walking Downstairs ACC | Walking Downstairs F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Left pocket | CNN | 86.71 | 86.62 | 91.64 | 91.55 | 100.00 | 100.00 | 98.06 | 98.06 | 89.01 | 88.90 | 65.77 | 65.85 | 65.77 | 65.46 |
| Left pocket | LSTM | 94.38 | 94.41 | 94.79 | 94.78 | 100.00 | 100.00 | 98.89 | 98.88 | 97.46 | 97.43 | 92.11 | 92.09 | 92.39 | 92.22 |
| Left pocket | BiLSTM | 94.11 | 94.10 | 98.08 | 98.08 | 100.00 | 100.00 | 98.75 | 98.745 | 98.45 | 98.43 | 86.90 | 86.76 | 88.17 | 88.10 |
| Left pocket | GRU | 97.26 | 97.25 | 98.77 | 98.78 | 100.00 | 100.00 | 99.86 | 99.86 | 99.72 | 99.71 | 97.32 | 97.29 | 94.51 | 94.44 |
| Left pocket | BiGRU | 96.16 | 96.15 | 98.49 | 98.49 | 100.00 | 100.00 | 98.75 | 98.75 | 99.44 | 99.43 | 94.37 | 94.35 | 92.68 | 92.67 |
| Left pocket | DeepResNeXt | 97.40 | 97.40 | 99.18 | 99.18 | 100.00 | 100.00 | 99.17 | 99.30 | 99.72 | 99.72 | 99.30 | 99.29 | 95.63 | 95.60 |

Identification performance by activity (ACC and F1 in %), right pocket placement.

| Sensor | Model | Biking ACC | Biking F1 | Jogging ACC | Jogging F1 | Sitting ACC | Sitting F1 | Standing ACC | Standing F1 | Walking ACC | Walking F1 | Walking Upstairs ACC | Walking Upstairs F1 | Walking Downstairs ACC | Walking Downstairs F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Right pocket | CNN | 91.23 | 91.13 | 90.41 | 90.38 | 100.00 | 100.00 | 98.19 | 98.20 | 80.85 | 80.79 | 68.59 | 68.64 | 56.06 | 55.68 |
| Right pocket | LSTM | 96.44 | 96.41 | 98.08 | 98.12 | 100.00 | 100.00 | 99.31 | 99.18 | 97.18 | 97.18 | 91.27 | 91.20 | 89.01 | 88.87 |
| Right pocket | BiLSTM | 96.58 | 96.57 | 98.90 | 98.89 | 100.00 | 100.00 | 99.17 | 99.15 | 97.61 | 97.59 | 89.44 | 89.32 | 78.87 | 78.61 |
| Right pocket | GRU | 97.81 | 97.80 | 98.22 | 98.23 | 100.00 | 100.00 | 99.58 | 99.58 | 99.30 | 98.04 | 96.62 | 96.53 | 92.54 | 92.43 |
| Right pocket | BiGRU | 97.81 | 97.23 | 98.90 | 98.10 | 100.00 | 100.00 | 99.17 | 99.16 | 98.73 | 98.74 | 95.92 | 95.90 | 89.30 | 89.32 |
| Right pocket | DeepResNeXt | 97.83 | 97.81 | 98.93 | 98.91 | 100.00 | 100.00 | 99.27 | 99.29 | 99.32 | 99.30 | 97.89 | 99.01 | 94.23 | 94.18 |

Identification performance by sensor type and activity (ACC and F1 in %).

| Sensor Type | Walking ACC | Walking F1 | Walking Upstairs ACC | Walking Upstairs F1 | Walking Downstairs ACC | Walking Downstairs F1 | Sitting ACC | Sitting F1 | Standing ACC | Standing F1 | Laying ACC | Laying F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Accelerometer | 94.83 | 94.55 | 91.84 | 91.49 | 87.41 | 86.82 | 77.99 | 76.53 | 74.87 | 73.38 | 82.87 | 82.22 |
| Gyroscope | 94.02 | 93.82 | 84.00 | 83.49 | 70.34 | 69.65 | 23.41 | 20.17 | 19.94 | 18.04 | 26.75 | 23.26 |
| Accelerometer+Gyroscope | 98.43 | 98.43 | 95.60 | 95.50 | 95.67 | 95.40 | 87.38 | 86.25 | 77.02 | 75.39 | 81.89 | 80.64 |

Identification performance by sensor type and activity (ACC and F1 in %).

| Sensor Type | Biking ACC | Biking F1 | Jogging ACC | Jogging F1 | Sitting ACC | Sitting F1 | Standing ACC | Standing F1 | Walking ACC | Walking F1 | Walking Upstairs ACC | Walking Upstairs F1 | Walking Downstairs ACC | Walking Downstairs F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Accelerometer | 99.31 | 99.31 | 99.03 | 99.02 | 96.11 | 96.10 | 95.69 | 95.51 | 99.58 | 99.58 | 98.61 | 98.59 | 96.29 | 96.23 |
| Gyroscope | 93.61 | 93.60 | 100.00 | 100.00 | 68.06 | 66.81 | 49.86 | 47.27 | 98.61 | 98.62 | 97.50 | 97.51 | 93.86 | 93.78 |
| Magnetometer | 95.83 | 95.80 | 87.08 | 86.78 | 100.00 | 100.00 | 97.64 | 97.58 | 89.86 | 89.59 | 85.14 | 84.96 | 81.14 | 80.70 |
| Accelerometer+Gyroscope | 99.03 | 99.03 | 99.17 | 99.17 | 95.97 | 95.98 | 96.11 | 96.02 | 99.58 | 99.59 | 98.47 | 98.49 | 96.00 | 95.95 |
| Accelerometer+Magnetometer | 99.31 | 99.31 | 98.19 | 98.19 | 100.00 | 100.00 | 99.86 | 99.86 | 99.17 | 99.16 | 98.47 | 98.39 | 97.00 | 96.95 |
| Gyroscope+Magnetometer | 96.94 | 96.91 | 91.11 | 91.15 | 99.86 | 99.86 | 96.11 | 95.95 | 93.06 | 93.04 | 88.33 | 88.21 | 83.00 | 82.00 |
| Accelerometer+Gyroscope+Magnetometer | 99.50 | 99.51 | 98.22 | 98.64 | 100.00 | 100.00 | 99.17 | 99.86 | 99.72 | 99.72 | 98.87 | 99.01 | 97.32 | 97.30 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mekruksavanich, S.; Jitpattanakul, A. Identifying Smartphone Users Based on Activities in Daily Living Using Deep Neural Networks. Information 2024, 15, 47. https://doi.org/10.3390/info15010047