Responsible Automation: Exploring Potentials and Losses through Automation in Human–Computer Interaction from a Psychological Perspective

Abstract

1. Introduction

1.1. Responsible Automation

1.2. Research Focus and Contributions

2. Related Work

2.1. Trends in Automation Research

2.2. Research on Human–Robot Interaction (HRI)

2.3. Preliminary Framework on Losses through Automation

3. Study 1: Explorative Investigation of Perceived Gains and Losses through Automation

3.1. Research Questions

- To what degree do people perceive specific gains or losses through automation? Do they differentiate between gains and losses in the different dimensions (i.e., do these dimensions provide a helpful framework for reflection)?

- Do their perceptions of gains and losses through automation differ between tasks?

- What is their general attitude towards automation, and how do they justify attitudes for or against it? Are there specific dimensions that seem most relevant for a global judgment for or against automation?

3.2. Method

3.2.1. Study Design and Procedure

3.2.2. Participants

3.2.3. Measures

- Introduction: Brief information about the study and research background, and declaration of consent to participate.

- Ratings of gains and losses through automation: Ratings of the imagined consequences of automating five tasks in the seven dimensions listed above. For each task, participants rated imagined losses and gains in each of the seven dimensions (1 = losing, 7 = gaining; one item per dimension) and gave a global rating indicating whether, in their view, automating this task was desirable (1 = not desirable, 7 = desirable).

- Wishes for automation and non-automation: In two open text fields, participants named one task that, in their view, should definitely be automated and one task that should definitely not be automated.

- Demographic data: Basic demographic data such as gender, age, household size, and occupation.

3.2.4. Data Analysis

3.3. Results

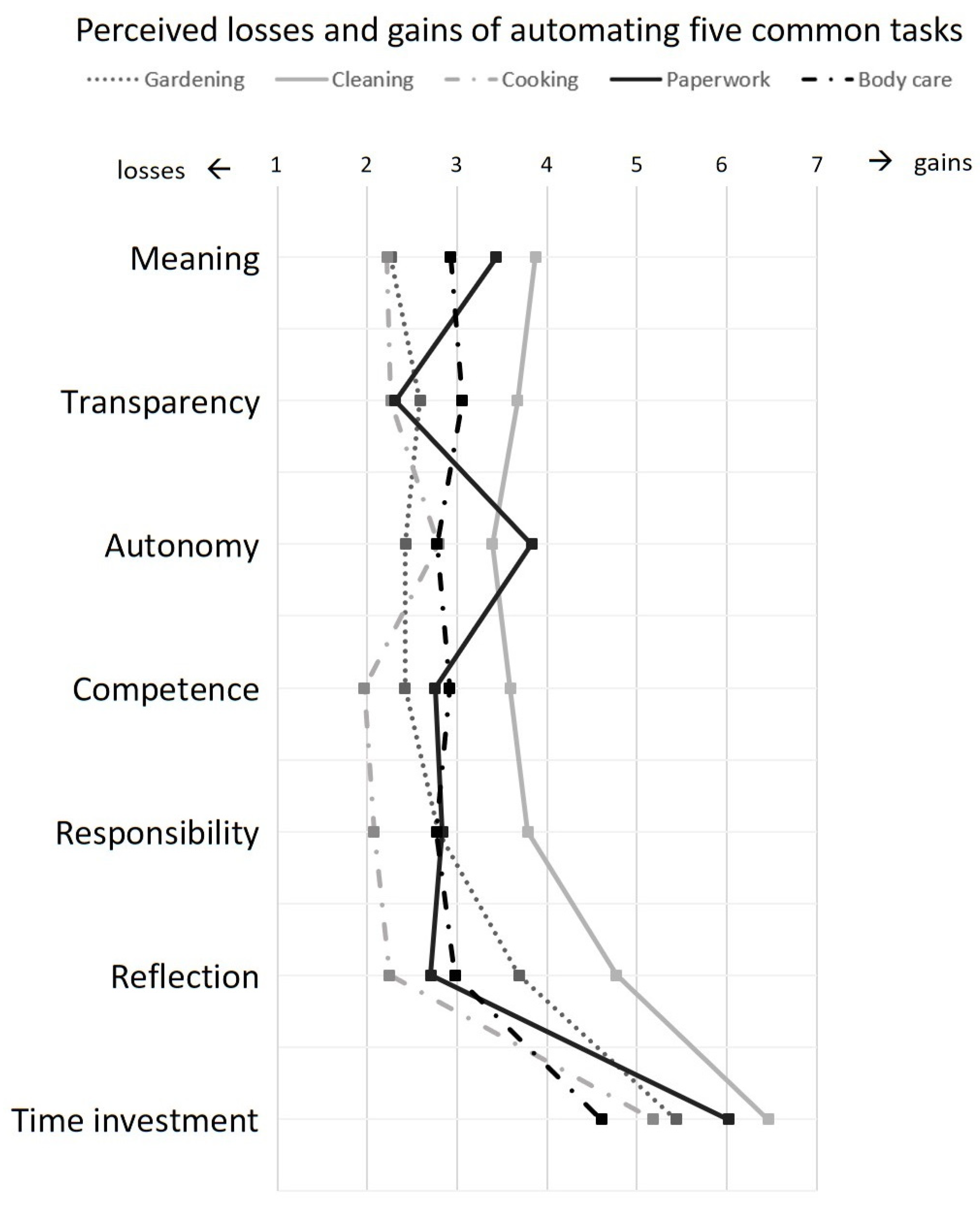

3.3.1. Perceived Gains and Losses through Automation in Different Dimensions

3.3.2. Global Judgments Pro or Contra Automating Different Tasks

3.3.3. Relevance of Gains and Losses in Different Dimensions for Global Judgment Pro or Contra Automating a Task

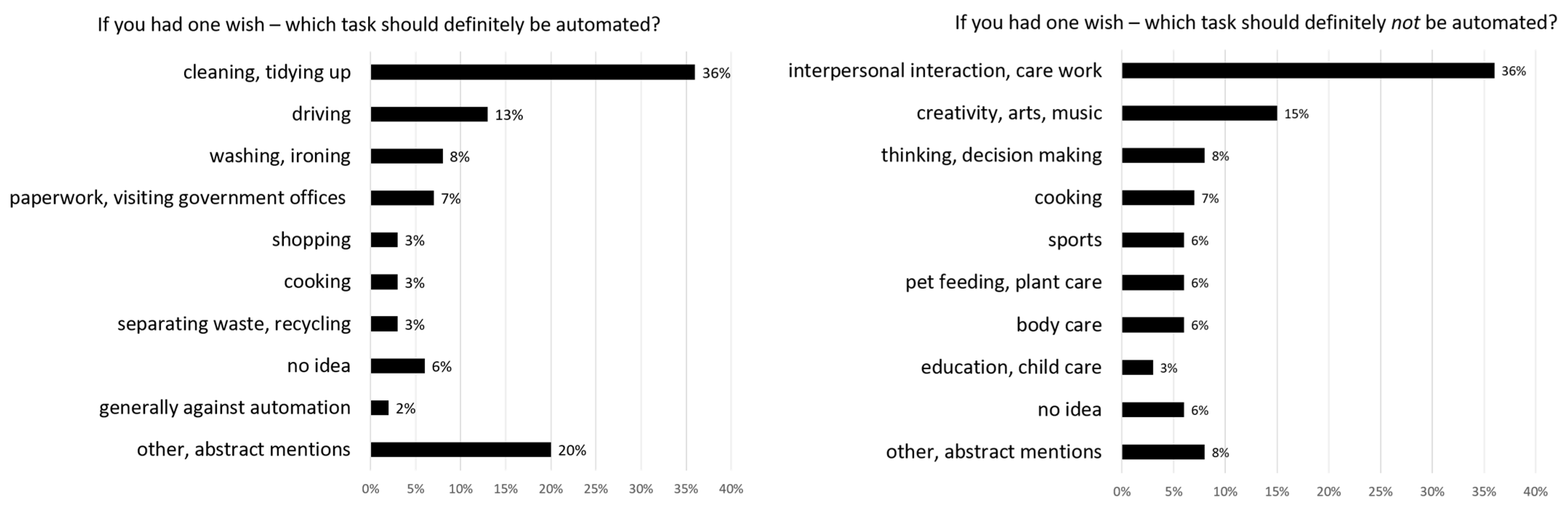

3.3.4. Wishes for Automation and Non-Automation

3.4. Discussion

4. Study 2: Experimental Exploration of User Experience and Responsibility Attributions for Manual vs. Robot Devices

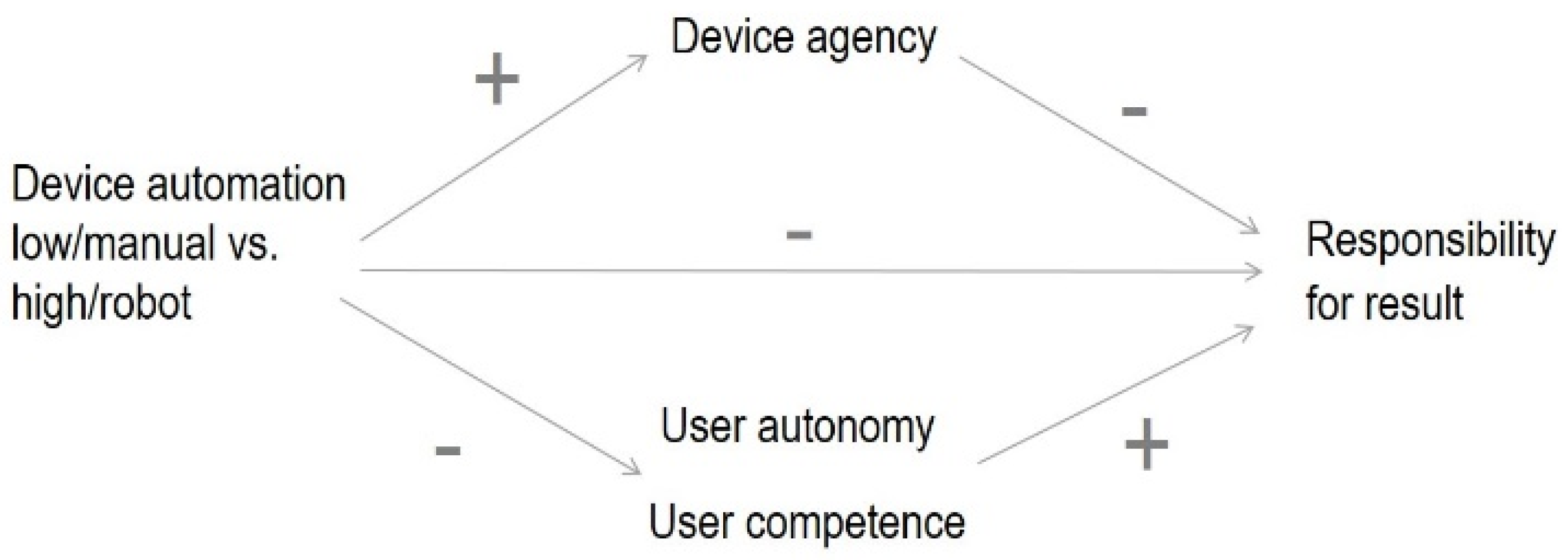

4.1. Mediating Factors of Responsibility Attributions for Manual vs. Robot Devices

4.1.1. Device Agency

4.1.2. Autonomy

4.1.3. Competence

4.2. Hypotheses and Research Questions

4.3. Method

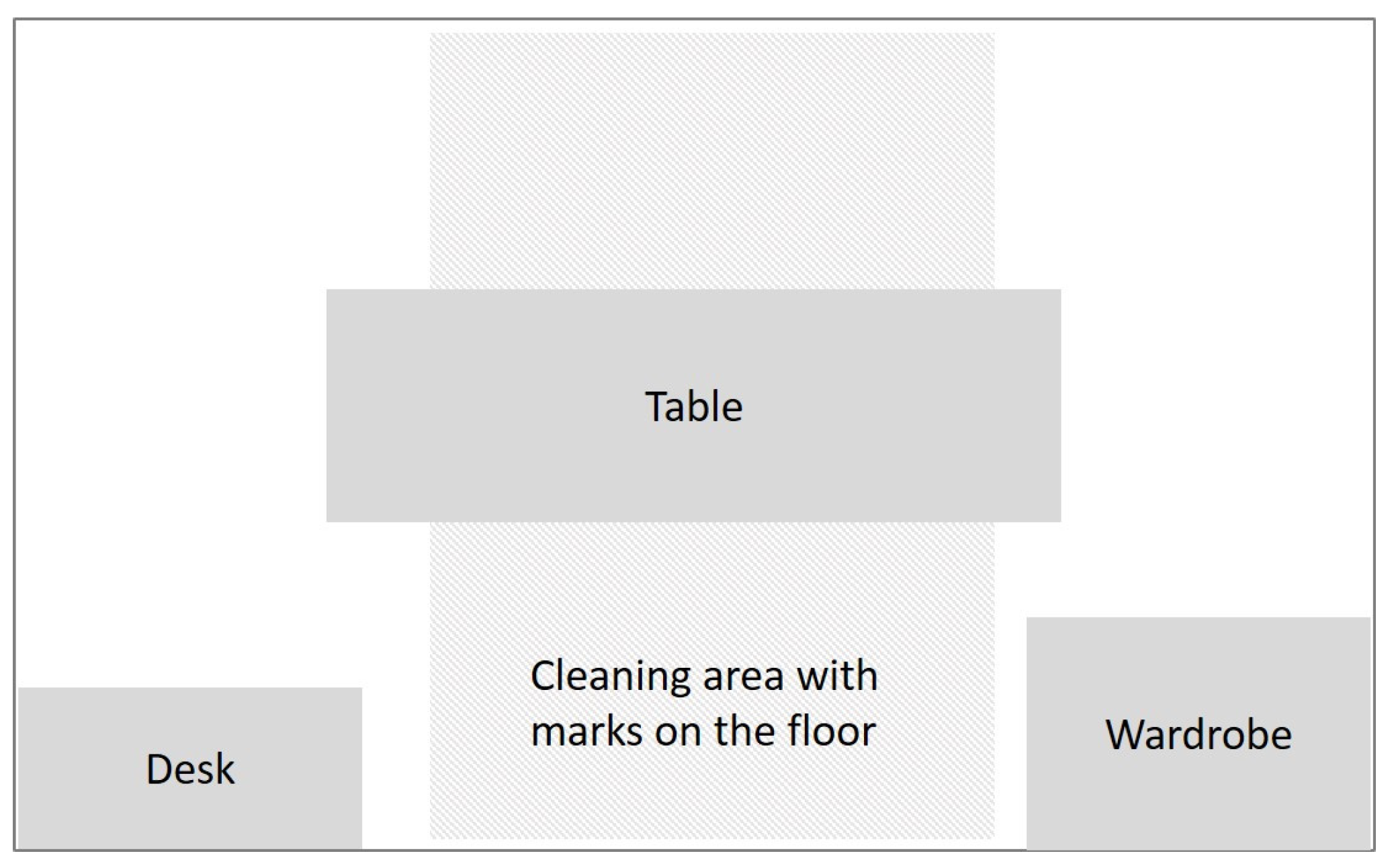

4.3.1. Study Design and Procedure

4.3.2. Participants

4.3.3. Measures

4.3.4. Data Analysis

4.4. Results

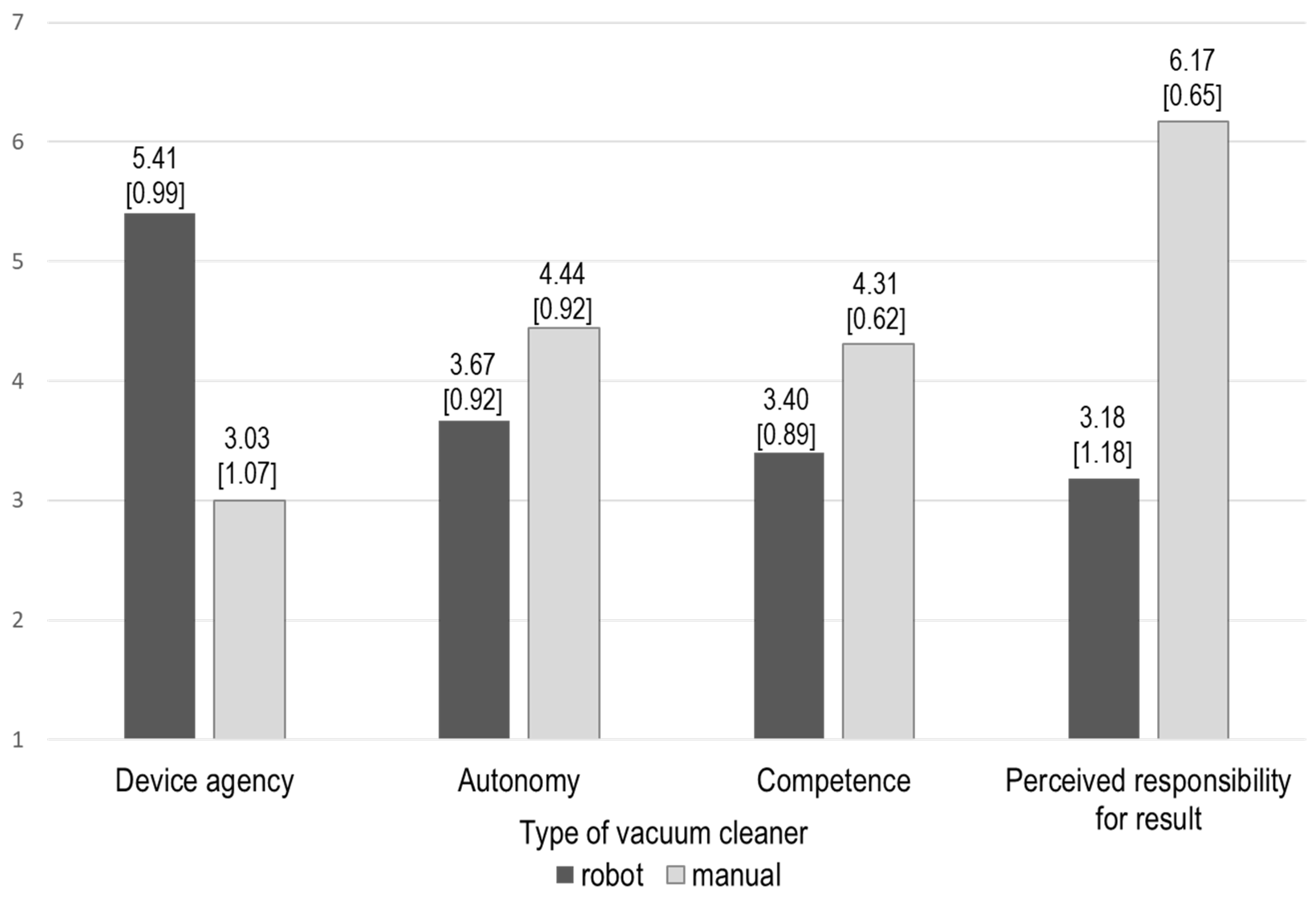

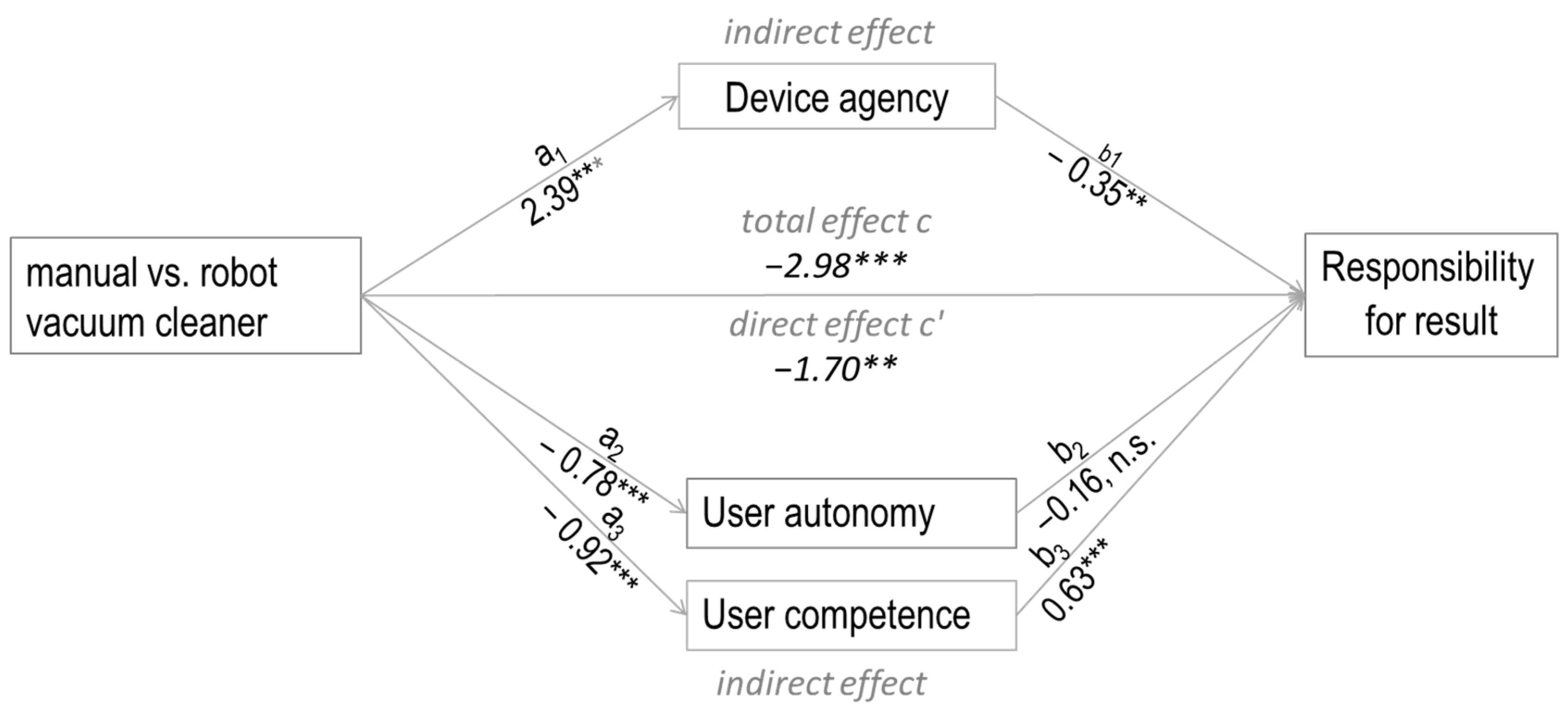

4.4.1. Hypotheses Tests

4.4.2. Exploratory Quantitative Analyses

4.4.3. Qualitative Analyses

4.5. Discussion

5. General Discussion

5.1. Implications for Design

5.2. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Task | Meaning | Responsibility | Reflection | Transparency | Autonomy | Competence | Time Invest |
|---|---|---|---|---|---|---|---|
| Gardening (GJ) | 0.457 | 0.444 | 0.344 | 0.443 | 0.383 | 0.464 | 0.512 |
| Toilet cleaning (GJ) | 0.289 | 0.366 | 0.197 | 0.257 | 0.355 | 0.271 | 0.538 |
| Cooking (GJ) | 0.565 | 0.531 | 0.462 | 0.427 | 0.586 | 0.576 | 0.571 |
| Paperwork (GJ) | 0.453 | 0.426 | 0.419 | 0.497 | 0.452 | 0.394 | 0.472 |
| Body care (GJ) | 0.562 | 0.557 | 0.528 | 0.413 | 0.546 | 0.380 | 0.651 |
| All tasks (GJ) | 0.543 | 0.497 | 0.441 | 0.435 | 0.548 | 0.471 | 0.625 |

| Question | Answer Category | Frequency | % |
|---|---|---|---|
| (1) To what degree did you feel autonomous during the interaction and why? | | | |
| | not/little autonomous | 7 | 12% |
| | medium level of autonomy | 15 | 26% |
| | very autonomous | 34 | 60% |
| | other/missing | 1 | 2% |
| (2) To what degree did you feel competent during the interaction and why? | | | |
| | not/little competent | 5 | 9% |
| | medium level of competence | 15 | 26% |
| | very competent | 34 | 60% |
| | other/missing | 3 | 5% |
| (3) To what degree do you feel that the programming or adjusting of settings of a technology could be a source of competence? | | | |
| | programming as a competence/frustration source | 21 | 37% |
| | programming no good competence source | 10 | 18% |
| | partly/another type of competence | 24 | 42% |
| | meta-reflections | 2 | 4% |
| (4) Are there any specific features which are deciding for you to still feel sufficiently autonomous, competent, and responsible for the result? | | | |
| | opportunity for manual control, haptic experience | 14 | 25% |
| | define the basic rules | 13 | 23% |
| | meta-reflections | 13 | 23% |
| | mapping the apartment, defining the robot’s way | 8 | 14% |
| | dialogue with the technology, transparency, hints | 5 | 9% |
| | defining the power | 3 | 5% |
| | defining the time of operation | 1 | 2% |
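Within each question block, the frequencies sum to 57, so the percentages appear to be shares of 57 coded responses, rounded to whole numbers. N = 57 is inferred from the column sums rather than stated in this excerpt, and the helper `percentages` is hypothetical. The sketch below reproduces that arithmetic:

```python
# Frequencies copied from the table; N is inferred from their sum, not stated.
FREQUENCIES = {
    "(1) autonomy": [7, 15, 34, 1],
    "(2) competence": [5, 15, 34, 3],
    "(3) programming as a competence source": [21, 10, 24, 2],
    "(4) features supporting autonomy, competence, responsibility": [14, 13, 13, 8, 5, 3, 1],
}


def percentages(freqs):
    """Return (N, whole-number percentages) for one block of answer counts."""
    n = sum(freqs)
    return n, [round(100 * f / n) for f in freqs]


for question, freqs in FREQUENCIES.items():
    n, pcts = percentages(freqs)
    print(f"{question}: N = {n}, % = {pcts}")
```

Rounding also explains why the shares in blocks (3) and (4) add up to 101% rather than 100%.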

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Diefenbach, S.; Ullrich, D.; Lindermayer, T.; Isaksen, K.-L. Responsible Automation: Exploring Potentials and Losses through Automation in Human–Computer Interaction from a Psychological Perspective. Information 2024, 15, 460. https://doi.org/10.3390/info15080460

Diefenbach S, Ullrich D, Lindermayer T, Isaksen K-L. Responsible Automation: Exploring Potentials and Losses through Automation in Human–Computer Interaction from a Psychological Perspective. Information. 2024; 15(8):460. https://doi.org/10.3390/info15080460

Chicago/Turabian Style: Diefenbach, Sarah, Daniel Ullrich, Tim Lindermayer, and Kaja-Lena Isaksen. 2024. "Responsible Automation: Exploring Potentials and Losses through Automation in Human–Computer Interaction from a Psychological Perspective" Information 15, no. 8: 460. https://doi.org/10.3390/info15080460

APA Style: Diefenbach, S., Ullrich, D., Lindermayer, T., & Isaksen, K.-L. (2024). Responsible Automation: Exploring Potentials and Losses through Automation in Human–Computer Interaction from a Psychological Perspective. Information, 15(8), 460. https://doi.org/10.3390/info15080460