Deep Learning to Authenticate Traditional Handloom Textile

Abstract

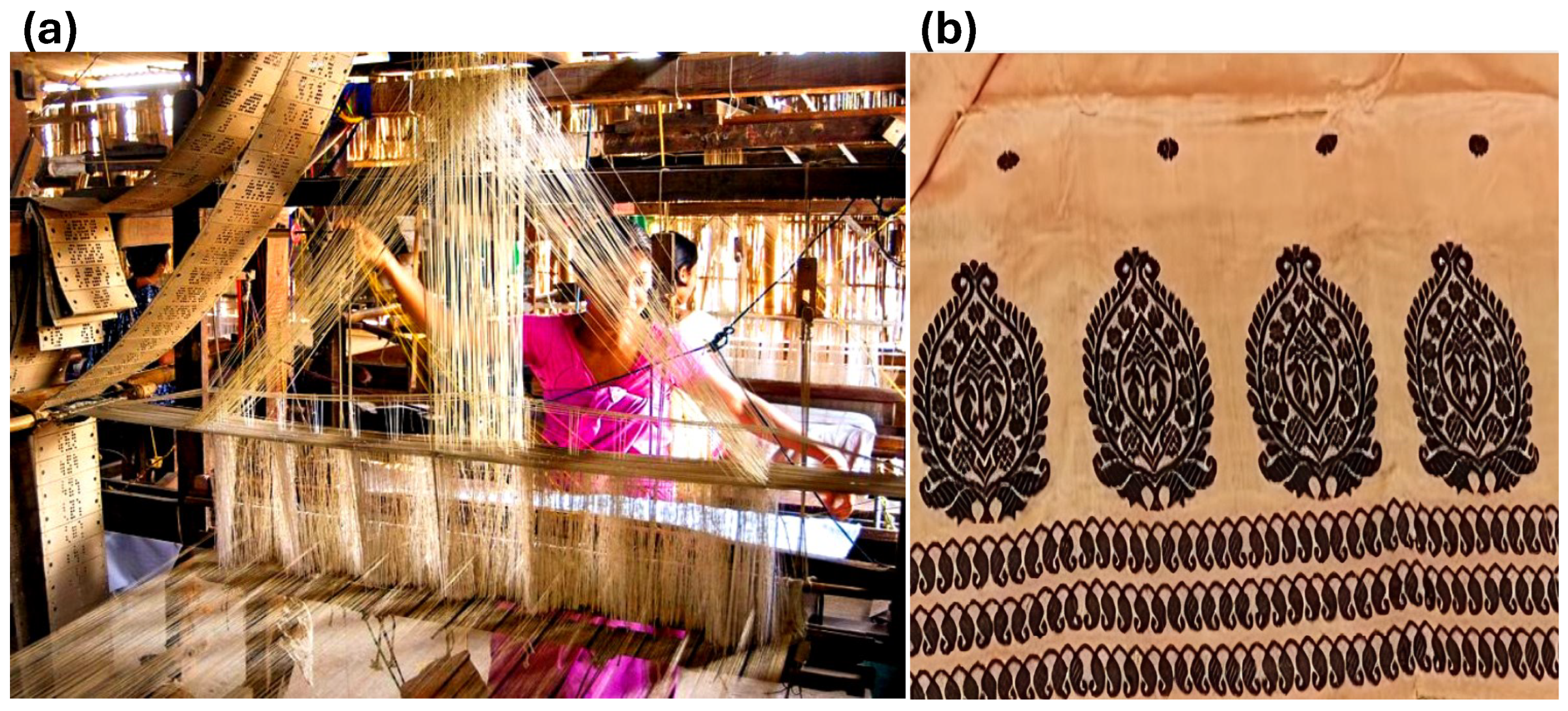

1. Introduction

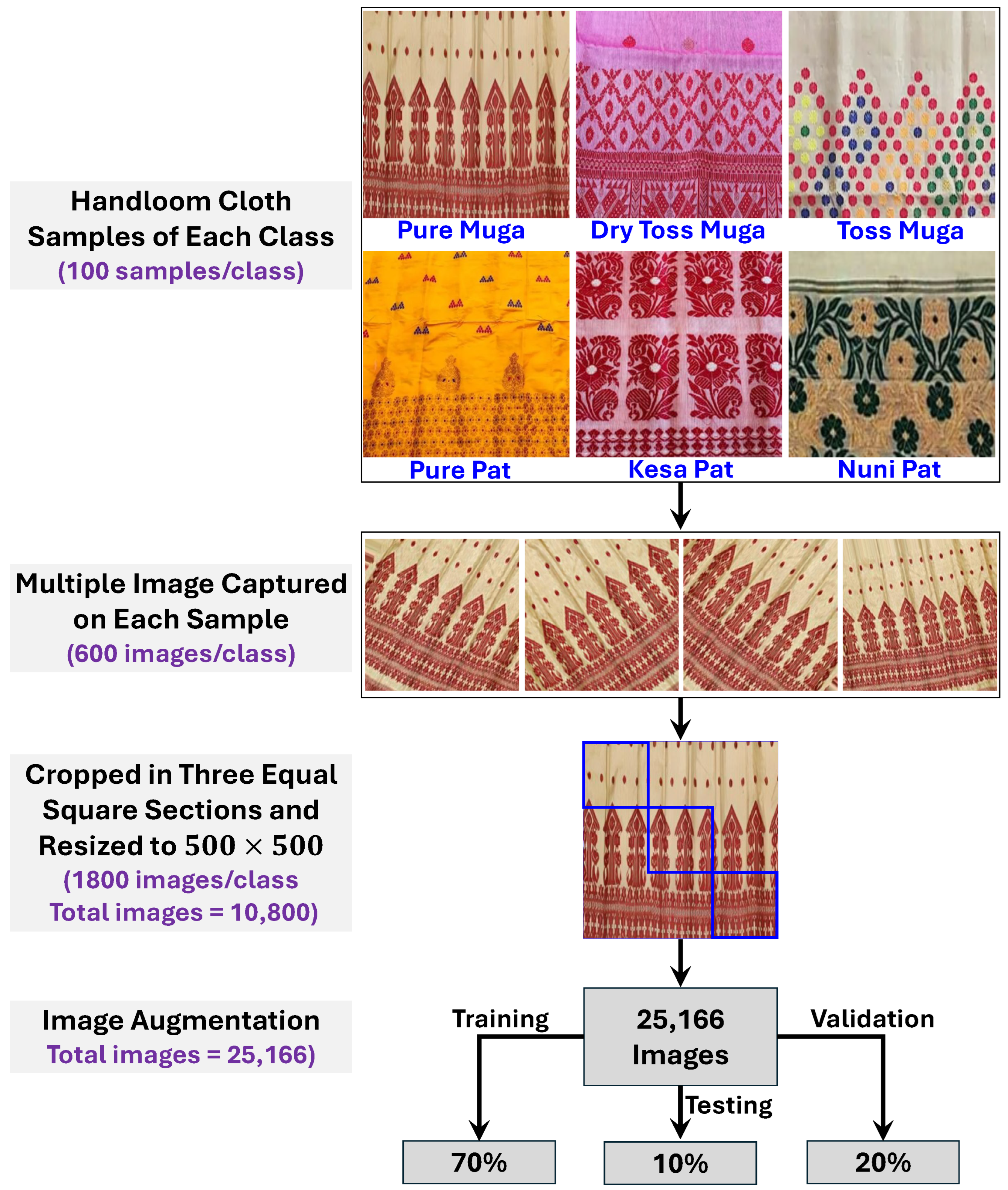

- We created a labeled handloom textile image dataset, the first of its kind, comprising six classes: Pure Pat, Kesa Pat, Nuni Pat, Pure Muga, Toss Muga, and Dry Toss Muga.

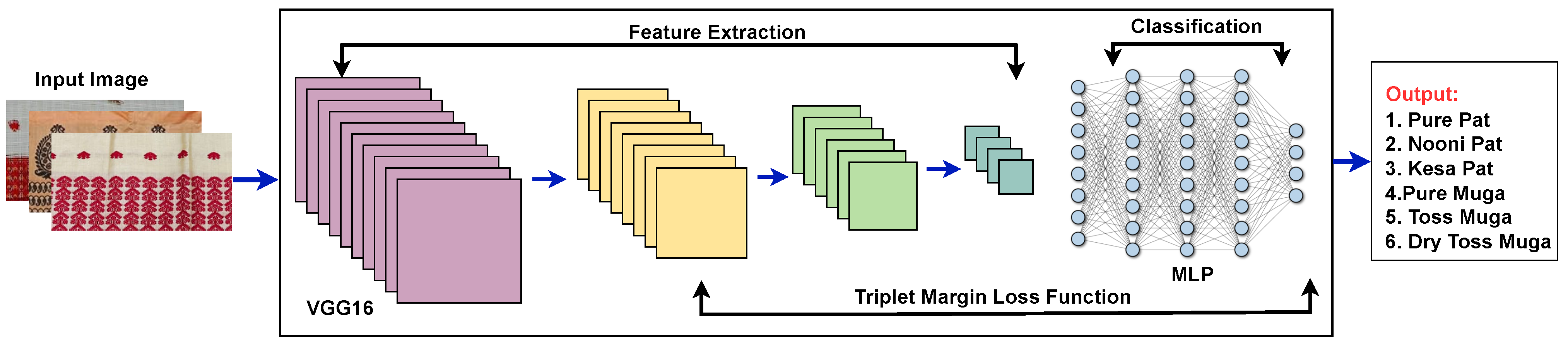

- We developed a modified deep metric learning model that jointly extracts features from each input sample, capturing subtle variations in handloom textures and patterns and classifying each sample into its labeled class.

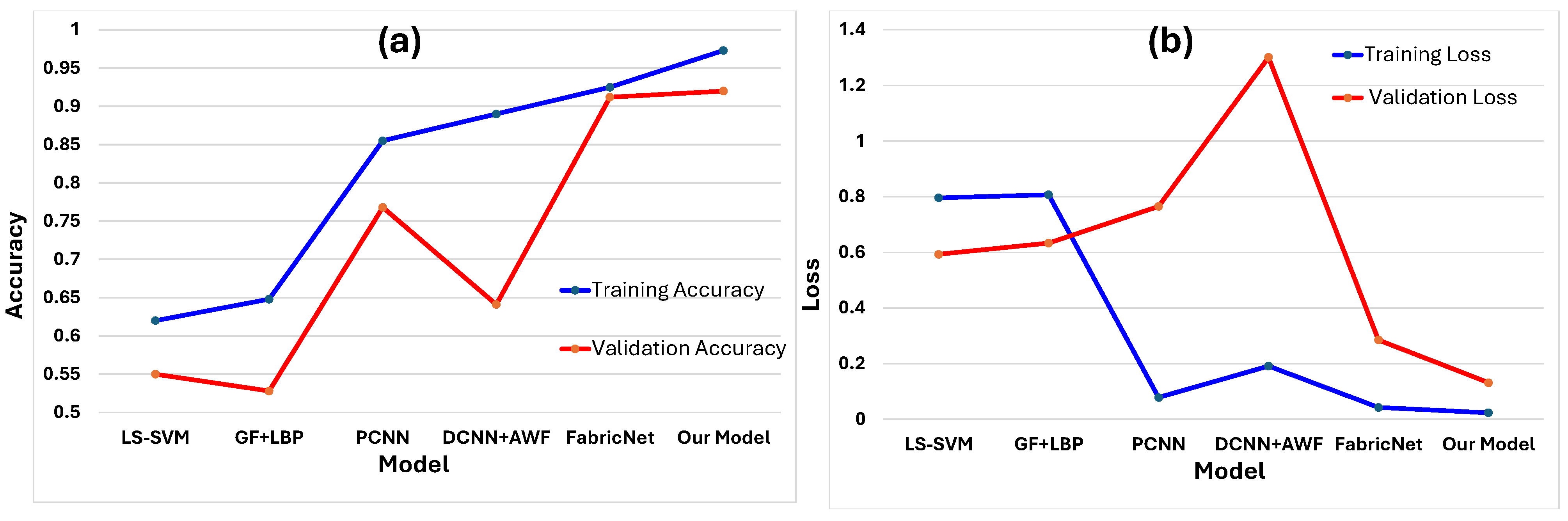

- We compared the proposed method with state-of-the-art techniques in terms of precision, recall, F1-score, and accuracy.
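The deep metric learning idea named in the contributions above can be illustrated with a margin-based triplet loss, a widely used metric learning objective. This is a minimal sketch only: the text here does not specify the authors' loss or their modification, so the function names, the Euclidean distance, and the `margin` value are all assumptions made for illustration.

```python
import math
from typing import Sequence


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between two embedding vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor: Sequence[float],
                 positive: Sequence[float],
                 negative: Sequence[float],
                 margin: float = 1.0) -> float:
    """Generic margin-based triplet loss (illustrative, not the paper's loss).

    The loss is zero once the anchor is closer to the positive (same class)
    than to the negative (different class) by at least `margin`.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Hypothetical 2-D embeddings: two samples of one class and one of another.
well_separated = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
poorly_separated = triplet_loss([0.0, 0.0], [0.0, 1.0], [1.0, 0.0])
```

In the first call the negative is far from the anchor, so the loss vanishes; in the second the positive and negative are equally distant, so the loss equals the margin.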

2. Literature Survey

3. Methodology

3.1. Dataset Development

3.2. Proposed Network

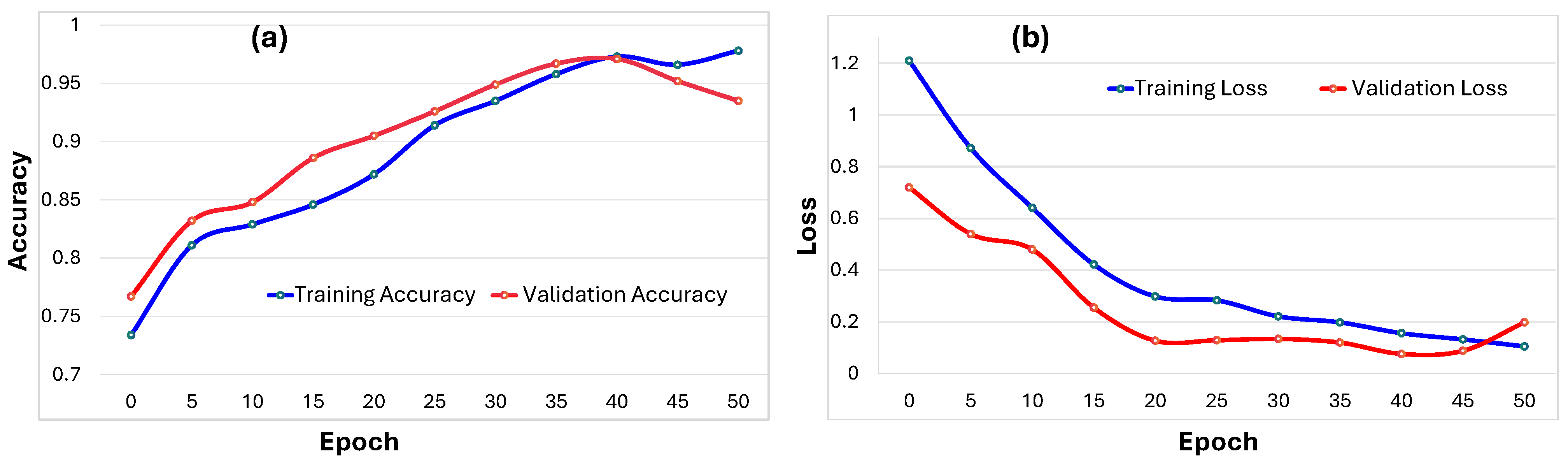

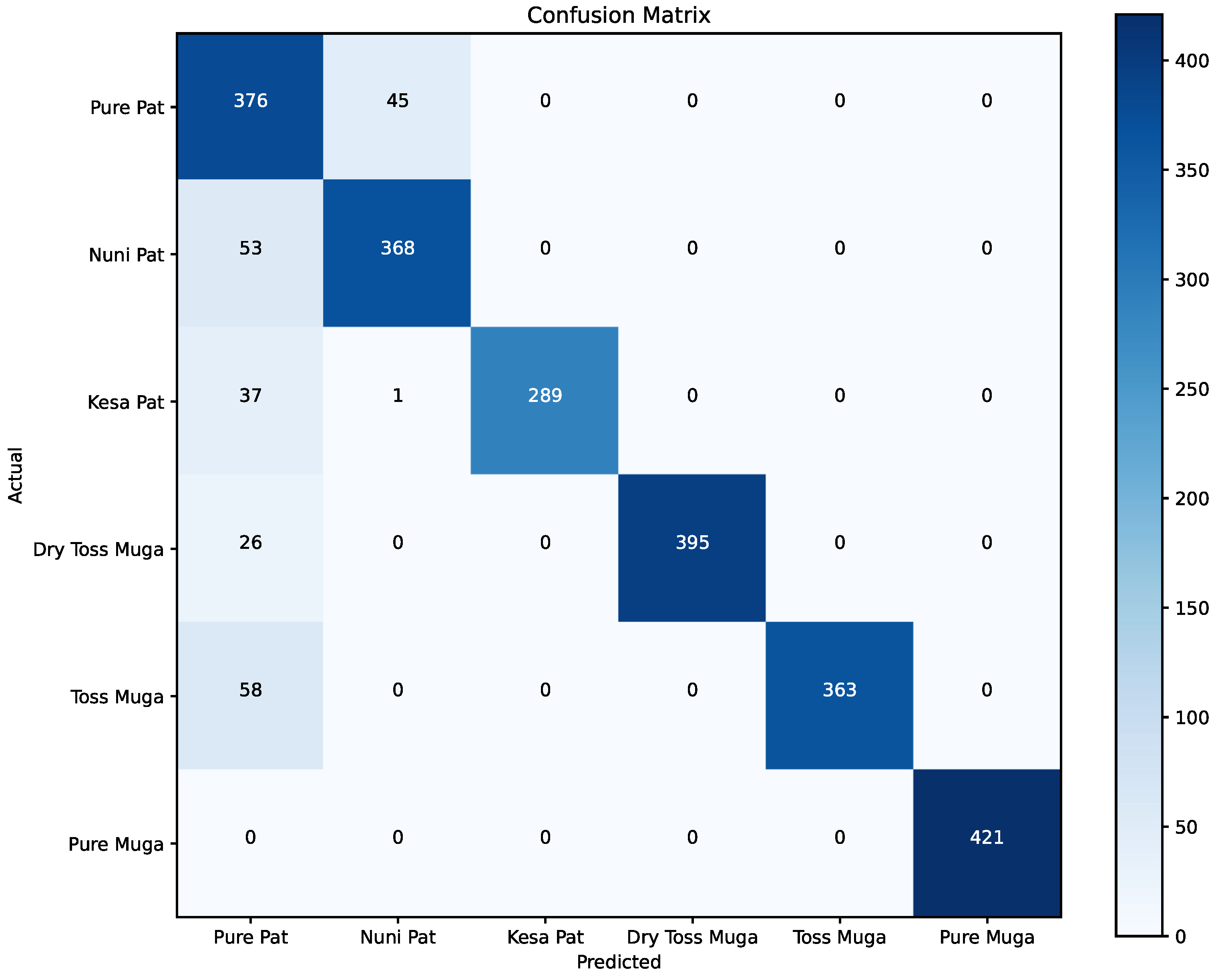

4. Experimental Results

4.1. Experimental Parameter Setup

4.2. Peer Competitors

4.3. Comparison Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Khatoon, S. Make in India: A platform to Indian handloom market. IOSR J. Bus. Manag. 2016, 18, 36–40.

- Office of The Development Commissioner for Handlooms Ministry of Textiles Government of India. Fourth All India Handloom Census Report 2019–2020; Office of The Development Commissioner for Handlooms Ministry of Textiles Government of India: Delhi, India, 2019.

- Government of Assam. Directorate of Handloom & Textile. Available online: https://dht.assam.gov.in/ (accessed on 11 May 2024).

- Bajpeyi, C.M.; Padaki, N.V.; Mishra, S.N. Review of silk handloom weaving in Assam. Text. Rev. 2010, 2010, 29–35.

- Jain, D.C.; Miss, R.G. An analytical study of handloom industry of India. In Proceedings of the International Conference on Innovative Research in Science, Technology and Management, Singapore, 16–17 September 2017; Volume 6.

- Chakravartty, P.; Keshab, B. Sualkuchi Village of Assam: The Country of Golden Thread. IOSR J. Bus. Manag. 2018, 20, 12–16.

- Muthan, P.; Sabeenian, R.S. Handloom silk fabric defect detection using first order statistical features on a NIOS II processor. In Proceedings of the Information and Communication Technologies: International Conference, ICT 2010, Kochi, India, 7–9 September 2010; Springer: Berlin/Heidelberg, Germany, 2010.

- Thanikodi, S.; Meena, M.; Dwivedi, Y.D.; Aravind, T.; Giri, J.; Samdani, M.S.; Kansal, L.; Shahazad, M.; Ilyas, M. Optimizing the selection of natural fibre reinforcement and polymer matrix for plastic composite using LS-SVM technique. Chemosphere 2024, 349, 140971.

- Frei, M.; Kruis, F.E. FibeR-CNN: Expanding Mask R-CNN to improve image-based fiber analysis. Powder Technol. 2021, 377, 974–991.

- Shuyuti, N.A.S.A.; Salami, E.; Dahari, M.; Arof, H.; Ramiah, H. Application of Artificial Intelligence in Particle and Impurity Detection and Removal: A Survey. IEEE Access 2024, 12, 31498–31514.

- Mahanta, L.B.; Mahanta, D.R.; Rahman, T.; Chakraborty, C. Handloomed fabrics recognition with deep learning. Sci. Rep. 2024, 14, 7974.

- Cay, A.; Vassiliadis, S.; Rangoussi, M. On the use of image processing techniques for the estimation of the porosity of textile fabrics. Int. J. Mater. Text. Eng. 2007, 1, 421–424.

- Wang, X.; Georganas, N.D.; Petriu, E.M. Fabric texture analysis using computer vision techniques. IEEE Trans. Instrum. Meas. 2010, 60, 44–56.

- Jamali, N.; Sammut, C. Majority voting: Material classification by tactile sensing using surface texture. IEEE Trans. Robot. 2011, 27, 508–521.

- Zheng, D.; Han, Y.; Hu, J.L. A new method for classification of woven structure for yarn-dyed fabric. Text. Res. J. 2014, 84, 78–95.

- Pawening, R.E.; Dijaya, R.; Brian, T.; Suciati, N. Classification of textile image using support vector machine with textural feature. In Proceedings of the 2015 International Conference on Information & Communication Technology and Systems (ICTS), Surabaya, Indonesia, 16 September 2015; IEEE: Piscataway, NJ, USA, 2015.

- Jing, J.; Fan, X.; Li, P. Automated fabric defect detection based on multiple Gabor filters and KPCA. Int. J. Multimed. Ubiquitous Eng. 2016, 11, 93–106.

- Khan, B.; Wang, Z.-J.; Han, F.; Hussain, M.A.I. Fabric weave pattern and yarn color recognition and classification using a deep ELM network. Algorithms 2017, 10, 117.

- Huang, M.-L.; Fu, C.-C. Applying image processing to the textile grading of fleece based on pilling assessment. Fibers 2018, 6, 73.

- Sabeenian, R.S.; Palanisamy, V. Texture Image Classification using Multi Resolution Combined Statistical and Spatial Frequency Method. Int. J. Technol. Eng. Syst. (IJTES) 2011, 2, 167–171.

- Kaplan, K.; Kaya, Y.; Kuncan, M.; Ertunç, H.M. Brain tumor classification using modified local binary patterns (LBP) feature extraction methods. Med. Hypotheses 2020, 139, 109696.

- Yildirim, P.; Birant, D.; Alpyildiz, T. Data mining and machine learning in textile industry. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1228.

- Bora, K.; Mahanta, L.B.; Chakraborty, C.; Borah, P.; Rangpi, K.; Barua, B.; Sharma, B.; Mala, R. Computer-Aided Identification of Loom Type of Ethnic Textile, the Gamusa, Using Texture Features and Random Forest Classifier. In Proceedings of the International Conference on Data, Electronics and Computing, Shillong, India, 7–9 September 2022; Springer Nature Singapore: Singapore, 2022.

- Ghosh, A.; Guha, T.; Bhar, R.B. Identification of handloom and powerloom fabrics using proximal support vector machines. Indian J. Fibre Text. 2015, 40, 87–93.

- Ohi, A.Q.; Mridha, M.F.; Hamid, M.A.; Monowar, M.M.; Kateb, F.A. Fabricnet: A fiber recognition architecture using ensemble convnets. IEEE Access 2021, 9, 13224–13236.

- Bhattacharjee, R.K.; Nandi, M.; Jha, A.; Kalita, G. Handloom design generation using generative networks. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; IEEE: Piscataway, NJ, USA, 2020.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer International Publishing: Berlin/Heidelberg, Germany, 2014.

- Çam, K.; Aydın, C.; Tarhan, C. Classification of fabric defects using deep learning algorithms. In Proceedings of the 2022 Innovations in Intelligent Systems and Applications Conference (ASYU), Antalya, Turkey, 7–9 September 2022; IEEE: Piscataway, NJ, USA, 2022.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009.

- Sabeenian, R.S.; Paul, E.; Prakash, C. Fabric defect detection and classification using modified VGG network. J. Text. Inst. 2023, 114, 1032–1040.

- Shah, S.R.; Qadri, S.; Bibi, H.; Shah, S.M.W.; Sharif, M.I.; Marinello, F. Comparing inception V3, VGG 16, VGG 19, CNN, and ResNet 50: A case study on early detection of a rice disease. Agronomy 2023, 13, 1633.

- Popescu, M.-C.; Balas, V.E.; Perescu-Popescu, L.; Mastorakis, N. Multilayer perceptron and neural networks. WSEAS Trans. Circuits Syst. 2009, 8, 579–588.

- Hoffer, E.; Ailon, N. Deep metric learning using triplet network. In Proceedings of the Similarity-Based Pattern Recognition: Third International Workshop, SIMBAD 2015, Copenhagen, Denmark, 12–14 October 2015; Proceedings 3. Springer International Publishing: Berlin/Heidelberg, Germany, 2015.

- Rhu, M.; Gimelshein, N.; Clemons, J.; Zulfiqar, A.; Keckler, S.W. vDNN: Virtualized deep neural networks for scalable, memory-efficient neural network design. In Proceedings of the 2016 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Taipei, Taiwan, 15–19 October 2016; IEEE: Piscataway, NJ, USA, 2016.

- Yu, H.; Li, H.; Shi, H.; Huang, T.S.; Hua, G. Any-precision deep neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35.

- Buckland, M.; Gey, F. The relationship between recall and precision. J. Am. Soc. Inf. Sci. 1994, 45, 12–19.

- Lipton, Z.C.; Elkan, C.; Narayanaswamy, B. Thresholding classifiers to maximize F1 score. arXiv 2014, arXiv:1402.1892.

| Methodology | Dataset | Limitation |
|---|---|---|
| Discrete Wavelet Transform [7] | Silk textile dataset (3501 Images) | Spatial information is lost as the decomposition progresses to finer scales |
| LS-SVM [8] | Plain weaving fibers dataset (245 Images) | Optimal hyperparameter tuning is computationally expensive |
| HMAX model [18] | Fabric weave pattern dataset (5640 Images) | Structural dependencies exhibited in intricate patterns |
| Gabor filters and LBP [21] | Textile fabric dataset (40,000 Images) | Limited variations inherent in extracting texture at various orientations and scales |
| DCNN + AWF [9] | ImageNet dataset (51,300 Images) | Lack of explicit control over feature weights in the network |
| PCNN [10] | Warp knitted fabric dataset (1000 Images) | Generalization issue occurs in the training samples |
| Faster-RCNN [11] | Own created dataset (3000 Images) | Computational inefficiencies of the designed network |
| FabricNet [25] | Normal fabric dataset (2000 Images) | Key features selection is difficult in the network |
| PSVM [24] | Woven fabric dataset (130 Images) | Limited to noisy data in training phase |
| KPCA [17] | TILDA dataset (3200 Images) | Complex parameter selection causes bias in the network |

| Category | Handloom Fabric Samples | Captured Images | Cropped Images | Augmented Images |
|---|---|---|---|---|
| Pure Pat | 100 | 600 | 1800 | 4210 |
| Kesa Pat | 100 | 600 | 1800 | 4166 |
| Nuni Pat | 100 | 600 | 1800 | 4210 |
| Pure Muga | 100 | 600 | 1800 | 4210 |
| Toss Muga | 100 | 600 | 1800 | 4210 |
| Dry Toss Muga | 100 | 600 | 1800 | 4210 |

| Layer | Kernel & Units | Activation | Stride | Pool Size |
|---|---|---|---|---|
| Conv1_1 | 3 × 3 × 64 | ReLU | 2 | - |
| Conv1_2 | 3 × 3 × 64 | ReLU | 2 | - |
| MaxPool1 | - | - | 2 | 2 × 2 |
| Conv2_1 | 3 × 3 × 128 | ReLU | 2 | - |
| Conv2_2 | 3 × 3 × 128 | ReLU | 2 | - |
| MaxPool2 | - | - | 2 | 2 × 2 |
| Conv3_1 | 3 × 3 × 256 | ReLU | 2 | - |
| Conv3_2 | 3 × 3 × 256 | ReLU | 2 | - |
| Conv3_3 | 3 × 3 × 256 | ReLU | 2 | - |
| MaxPool3 | - | - | 2 | 2 × 2 |
| Conv4_1 | 3 × 3 × 512 | ReLU | 2 | - |
| Conv4_2 | 3 × 3 × 512 | ReLU | 2 | - |
| Conv4_3 | 3 × 3 × 512 | ReLU | 2 | - |
| MaxPool4 | - | - | 2 | 2 × 2 |
| Flatten | - | - | - | - |
| Dense1 | Units: 4096 | ReLU | - | - |
| Dropout1 | 0.5 | - | - | - |
| Dense2 | Units: 4096 | ReLU | - | - |
| Dropout2 | 0.5 | - | - | - |
| Output | 6 | Softmax | - | - |
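To give a sense of the size of the network in the layer table above, the following sketch counts the trainable parameters of its convolutional layers and of the two dense layers whose input width is fixed by the table. It assumes a 3-channel (RGB) input and one bias per output channel or unit; neither is stated in the table. Dense1 is left out because its input width depends on the image resolution, which is not given here.

```python
# (name, kernel_size, out_channels) for each convolutional row of the layer table.
CONV_LAYERS = [
    ("Conv1_1", 3, 64), ("Conv1_2", 3, 64),
    ("Conv2_1", 3, 128), ("Conv2_2", 3, 128),
    ("Conv3_1", 3, 256), ("Conv3_2", 3, 256), ("Conv3_3", 3, 256),
    ("Conv4_1", 3, 512), ("Conv4_2", 3, 512), ("Conv4_3", 3, 512),
]


def conv_param_counts(layers, in_channels=3):
    """Return {layer_name: parameter_count} for a chain of 2-D convolutions.

    Each layer has kernel*kernel*in_channels*out_channels weights plus
    out_channels biases. in_channels=3 is an assumption (RGB input).
    """
    counts = {}
    for name, kernel, out_channels in layers:
        counts[name] = kernel * kernel * in_channels * out_channels + out_channels
        in_channels = out_channels  # the next layer consumes this layer's output
    return counts


def dense_params(in_units, out_units):
    """Weights plus biases of a fully connected layer."""
    return in_units * out_units + out_units


conv_counts = conv_param_counts(CONV_LAYERS)
total_conv_params = sum(conv_counts.values())
dense2_params = dense_params(4096, 4096)  # Dense1 (4096) -> Dense2 (4096)
output_params = dense_params(4096, 6)     # Dense2 (4096) -> 6-class output

print(f"convolutional parameters: {total_conv_params:,}")
print(f"Dense2 parameters:        {dense2_params:,}")
print(f"Output parameters:        {output_params:,}")
```

Pooling, flatten, and dropout layers contribute no trainable parameters, so they do not appear in the count.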

| Method | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|
| LS-SVM | 0.121 | 0.344 | 0.230 | 0.620 |
| GF and LBP | 0.754 | 0.671 | 0.754 | 0.648 |
| PCNN | 0.628 | 0.385 | 0.758 | 0.855 |
| DCNN + AWF | 0.970 | 0.987 | 0.775 | 0.890 |
| FabricNet | 0.844 | 0.784 | 0.921 | 0.925 |
| Proposed Method | 0.895 | 0.883 | 0.943 | 0.978 |

| k Value | Accuracy (%) |
|---|---|
| 2 | 88.04 |
| 3 | 90.24 |
| 4 | 93.84 |
| 5 | 96.44 |
| 6 | 97.34 |
| 7 | 98.64 |
| 8 | 97.66 |
| 9 | 96.75 |
| 10 | 98.14 |
| Avg. | 95.23 |
| Std. | 3.56 |
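The summary rows of the table above can be recomputed from the per-k accuracies with the Python standard library, as sketched below. The standard deviation convention (population or sample) is not stated here, so both are computed; the variable names are illustrative.

```python
import statistics

# Accuracy (%) for each k value, copied from the table above.
accuracy_by_k = {
    2: 88.04, 3: 90.24, 4: 93.84, 5: 96.44, 6: 97.34,
    7: 98.64, 8: 97.66, 9: 96.75, 10: 98.14,
}

values = list(accuracy_by_k.values())

mean_accuracy = statistics.mean(values)     # arithmetic mean, about 95.23
population_std = statistics.pstdev(values)  # divides by n
sample_std = statistics.stdev(values)       # divides by n - 1

best_k = max(accuracy_by_k, key=accuracy_by_k.get)  # k with the highest accuracy

print(f"mean accuracy:  {mean_accuracy:.2f}")
print(f"population std: {population_std:.2f}")
print(f"sample std:     {sample_std:.2f}")
print(f"best k:         {best_k}")
```

The two conventions give slightly different spreads on nine values, which is worth keeping in mind when comparing against a reported figure.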

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Das, A.; Deka, A.; Medhi, K.; Saikia, M.J. Deep Learning to Authenticate Traditional Handloom Textile. Information 2024, 15, 465. https://doi.org/10.3390/info15080465
