From Information to Knowledge: A Role for Knowledge Networks in Decision Making and Action Selection

Abstract

Simple Summary

1. Introduction

1.1. Motivation

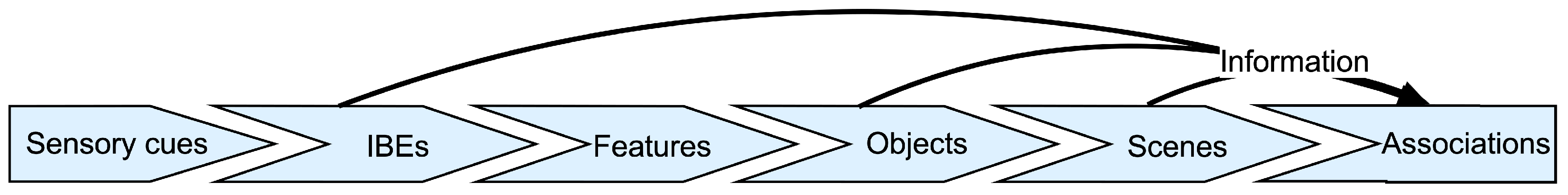

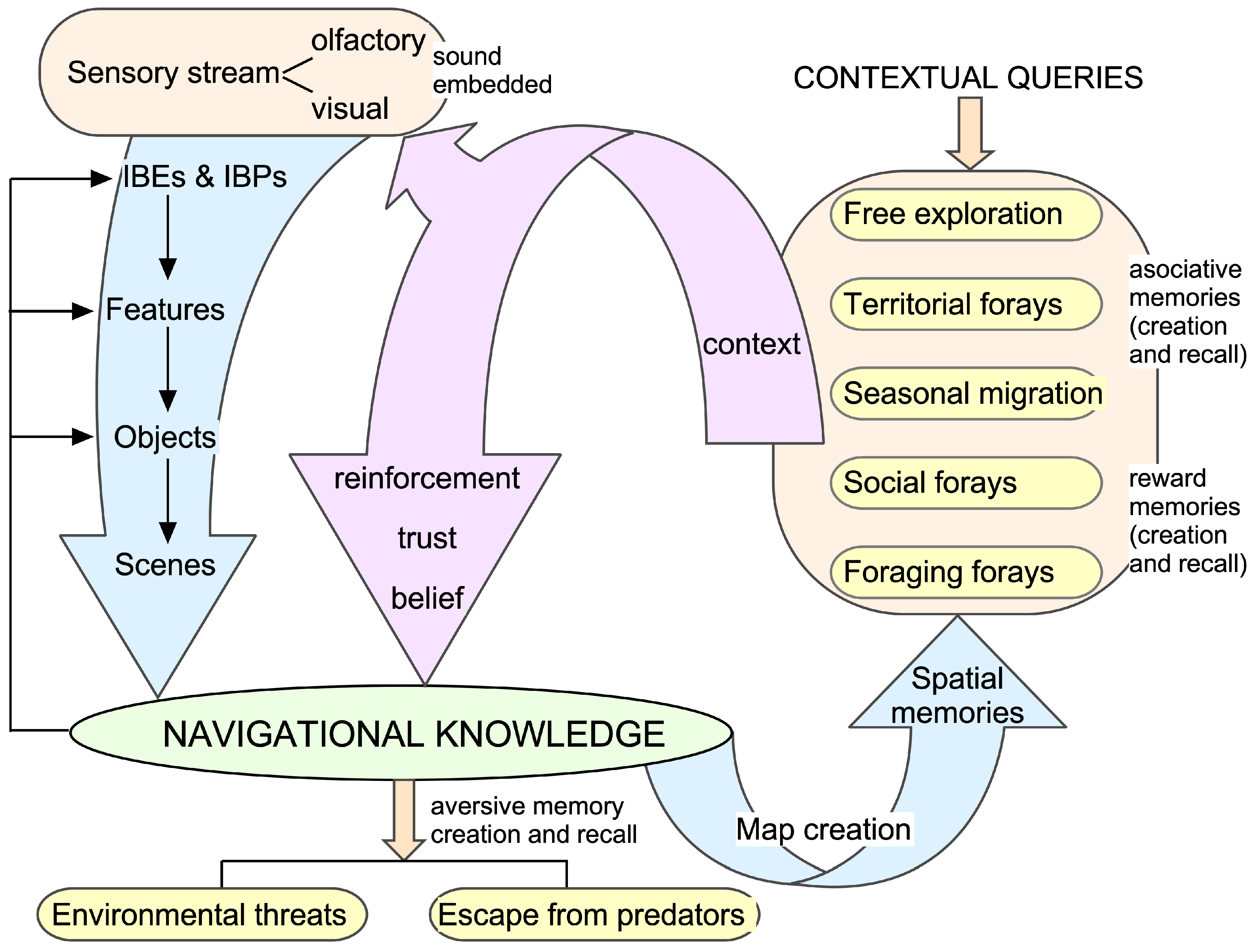

1.2. From Information to Knowledge

1.2.1. Feature Extraction

1.2.2. What Is Knowledge?

1.2.3. Memory vs. Knowledge

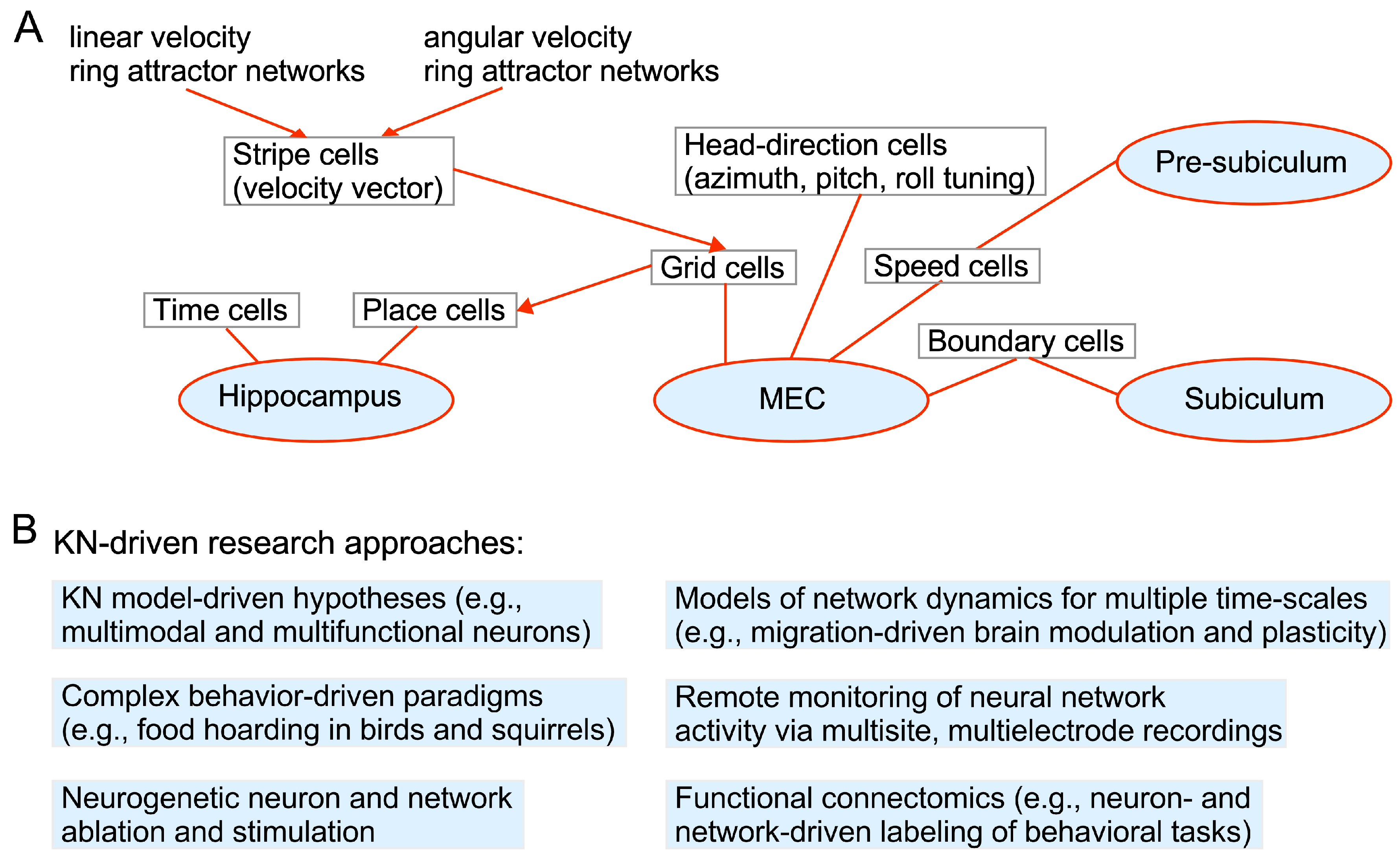

1.3. Brain Structures Involved in Memory Formation and Recall

2. Proposition

2.1. Knowledge Equation

- K(t): the cumulative knowledge at time “t”;

- G(t): The rate of incoming new information at time “t”. G(t) is modeled as a function of time whose form depends on how information is received. For instance, it could be constant, exponentially growing, or modulated by factors such as attention or exposure;

- L(t): The rate of loss, or forgetting, of old information at time “t”. This loss can be transient, as when triggered by distraction, or permanent, as when caused by neuronal or synaptic degradation in Alzheimer’s disease;

- α: the integration rate constant, which determines how efficiently new information is integrated into the current knowledge state;

- β: the forgetting rate constant, which determines how quickly old information is forgotten.

- The term αG0/β represents the steady-state knowledge level when the rate of integrating new information and the rate of forgetting are balanced.

- The term (K0 − αG0/β) represents the transient behavior of knowledge over time, showing how it approaches the steady-state level. The rate at which K(t) approaches the steady-state is governed by β.
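The two terms described above are consistent with a first-order balance model. As a minimal sketch, assume a constant input rate G(t) = G0 and a loss rate proportional to current knowledge, L(t) = βK(t), so that dK/dt = αG0 − βK. The closed-form solution is then K(t) = αG0/β + (K0 − αG0/β)·exp(−βt). The proportional-loss assumption, the function names and the parameter values below are illustrative and are not taken from the paper.

```python
import math


def knowledge_closed_form(t, k0, g0, alpha, beta):
    """Closed-form K(t), assuming dK/dt = alpha*G0 - beta*K with constant G0."""
    steady_state = alpha * g0 / beta          # the alpha*G0/beta term
    transient = k0 - steady_state             # the (K0 - alpha*G0/beta) term
    return steady_state + transient * math.exp(-beta * t)


def knowledge_euler(t_end, k0, g0, alpha, beta, dt=1e-3):
    """Forward-Euler integration of the same assumed equation, as a cross-check."""
    k = k0
    steps = int(round(t_end / dt))
    for _ in range(steps):
        k += dt * (alpha * g0 - beta * k)     # gain minus proportional loss
    return k


# Hypothetical parameter values chosen only for illustration.
K0, G0, ALPHA, BETA = 0.0, 2.0, 0.5, 0.1

if __name__ == "__main__":
    for t in (0, 10, 50, 100):
        exact = knowledge_closed_form(t, K0, G0, ALPHA, BETA)
        approx = knowledge_euler(t, K0, G0, ALPHA, BETA)
        print(f"t={t:>3}  closed form={exact:.4f}  Euler={approx:.4f}")
```

With these values the steady-state level is αG0/β = 10, and a larger β makes K(t) approach that level faster, which matches the role the text assigns to β.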

2.2. Knowledge Networks

2.3. Mathematical Formulation of KNs

- The activity of neural networks, including memory modules, can be described by continuous functions of time.

- The connection weights between neural networks and memory modules are constant over time.

- The contribution weights of neural networks and memory modules to the knowledge network are dynamic and vary with time.

- Neural Networks and Modules:

- Ni(t): the activity level of the i-th NN at time t;

- Mj(t): the activity level of the j-th memory module at time t;

- wij: the connection weight between the i-th NN and the j-th memory module.

- Knowledge Networks (KNs):

- K(t): total knowledge at time t;

- αi(t): the weight of the i-th NN’s contribution to the KN at time t;

- βj(t): the weight of the j-th memory module’s contribution to the KN at time t.

- Dynamics and Interactions:

- G(t): the rate of gain of new information at time t;

- F(t): the rate of forgetting old information at time t.

- Neural Network Dynamics: The activity level of each NN, Ni(t), represents the dynamic activity of a neural circuit that contributes to knowledge. It can be influenced by incoming information and by interaction with memory modules.

- Memory Module Dynamics: The activity level of each memory module, Mj(t), represents the activity of a memory storage system that interacts with neural networks and can be influenced by their activity.

- Connection Weights: The weights wij represent the strength of interaction between neural networks and memory modules.

- Contribution Weights Dynamics: The weights αi(t) and βj(t) represent the dynamic importance of each neural network and memory module to the KN.

- Total Knowledge Dynamics: The total knowledge at time t, after adjusting weights, is a function of the contributions from neural networks and memory modules. The total knowledge within a KN at time t can thus be modeled as a combination of these weighted contributions.
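The expression itself is not reproduced in this text. As a hedged sketch of what the definitions above allow, one simple possibility is an additive form in which total knowledge is the weighted sum of neural-network and memory-module activity, K(t) = Σi αi(t)·Ni(t) + Σj βj(t)·Mj(t). The additive form, the example activity and weight functions, and all names below are assumptions made for illustration; the connection weights wij and the rates G(t) and F(t) are left out to keep the example short.

```python
import math


def total_knowledge(t, nn_activity, nn_weights, mem_activity, mem_weights):
    """Assumed additive form: sum_i alpha_i(t)*N_i(t) + sum_j beta_j(t)*M_j(t).

    Each argument after t is a sequence of functions of time.
    """
    if len(nn_activity) != len(nn_weights):
        raise ValueError("need one contribution weight per neural network")
    if len(mem_activity) != len(mem_weights):
        raise ValueError("need one contribution weight per memory module")
    nn_term = sum(a(t) * n(t) for a, n in zip(nn_weights, nn_activity))
    mem_term = sum(b(t) * m(t) for b, m in zip(mem_weights, mem_activity))
    return nn_term + mem_term


# Hypothetical example: two neural networks and one memory module.
NN_ACTIVITY = [
    lambda t: 1.0 - math.exp(-t),   # N_1(t): activity that ramps up and saturates
    lambda t: 0.5,                  # N_2(t): constant activity
]
NN_WEIGHTS = [
    lambda t: 1.0,                  # alpha_1(t): fixed contribution
    lambda t: t / (1.0 + t),        # alpha_2(t): contribution that grows with time
]
MEM_ACTIVITY = [lambda t: math.exp(-0.1 * t)]   # M_1(t): slowly decaying activity
MEM_WEIGHTS = [lambda t: 2.0]                   # beta_1(t): fixed contribution

if __name__ == "__main__":
    for t in (0.0, 1.0, 10.0):
        k = total_knowledge(t, NN_ACTIVITY, NN_WEIGHTS, MEM_ACTIVITY, MEM_WEIGHTS)
        print(f"t={t:>4}  K(t)={k:.4f}")
```

Passing the weights as functions of time reflects the stated assumption that contribution weights are dynamic, while constant weights are simply functions that ignore t.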

2.4. Evolution of Knowledge Networks

3. Discussion

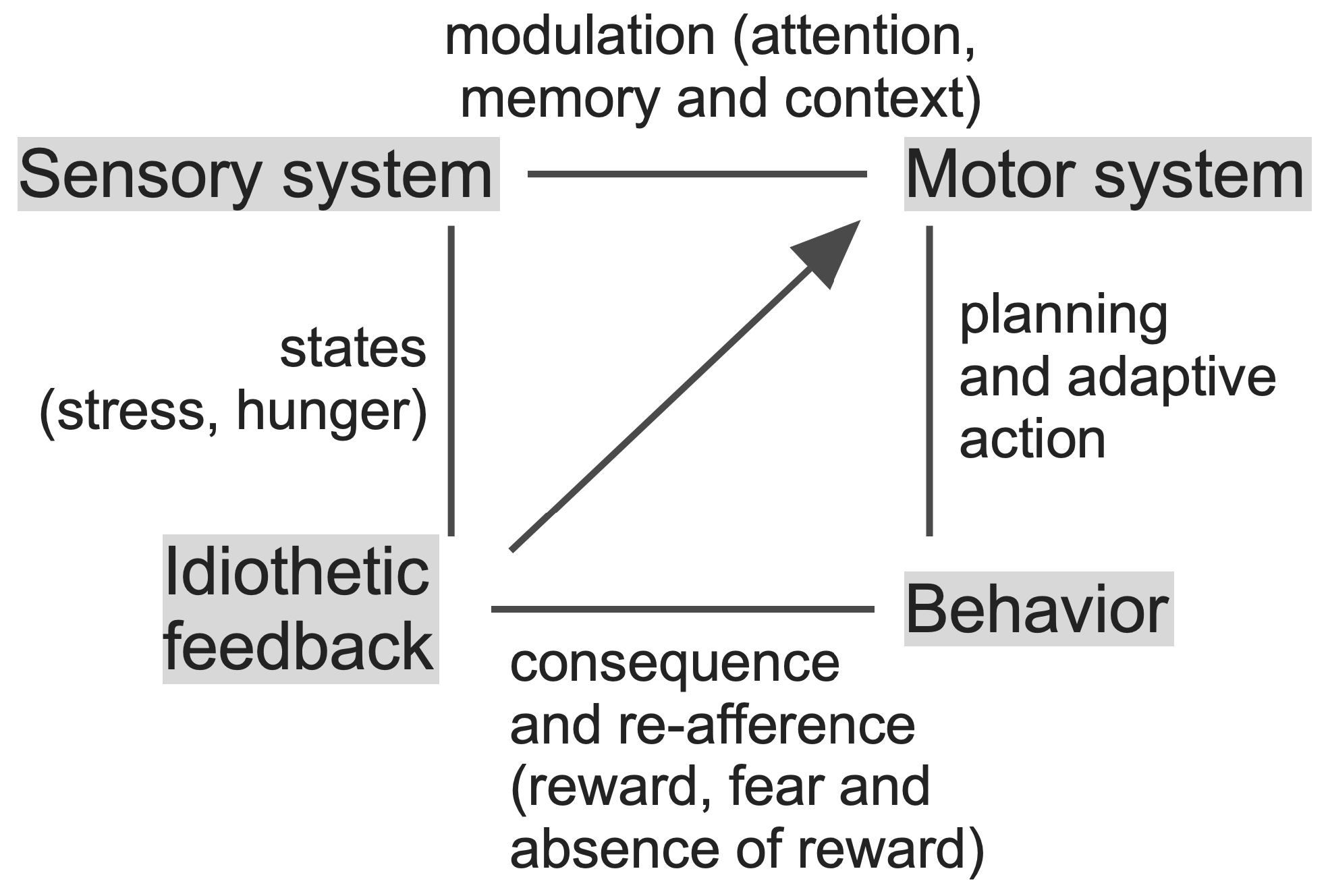

3.1. Knowledge Acquisition and Action Selection

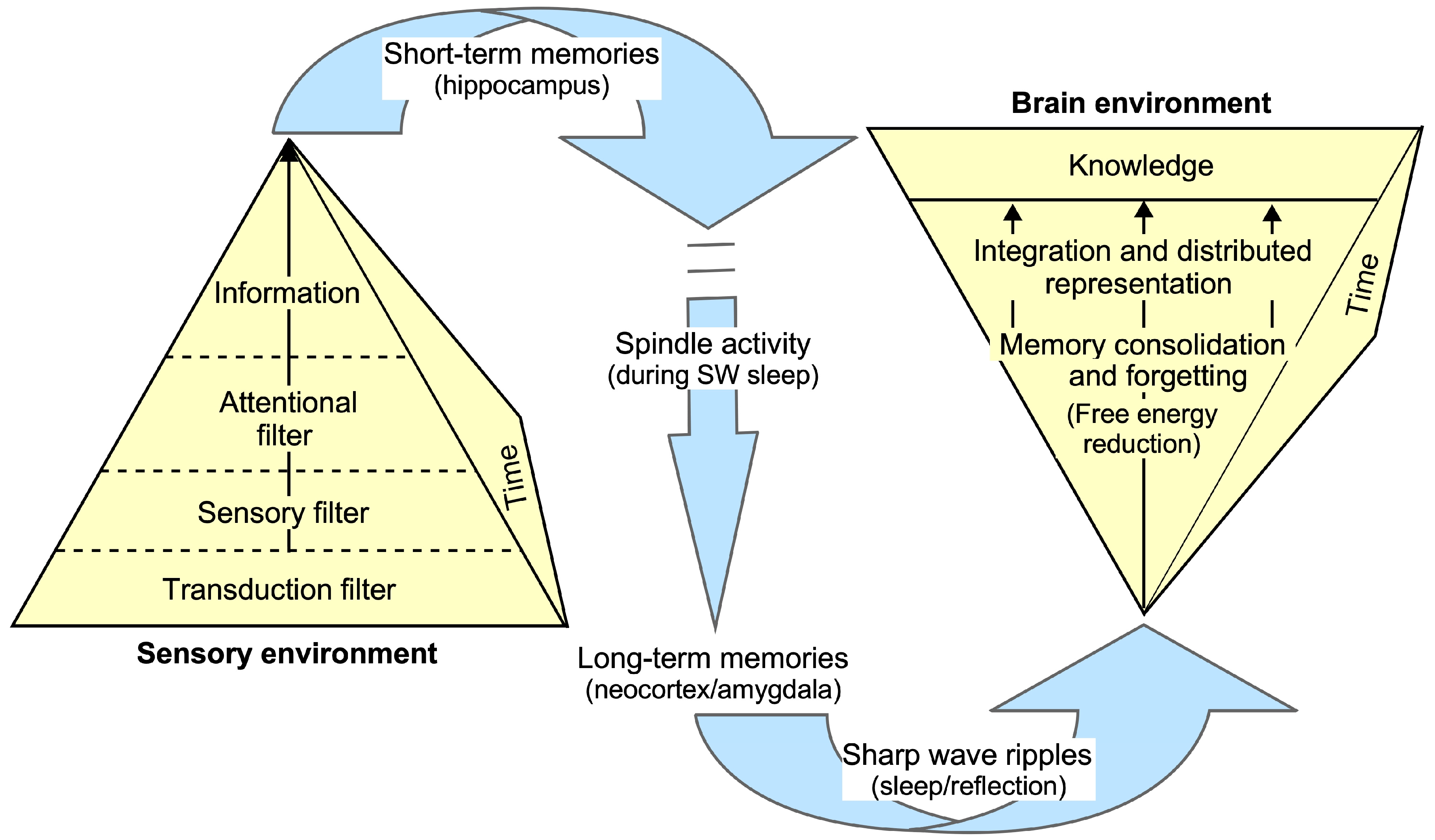

3.2. Establishing and Retrieving Knowledge: Attention, Sleep and Oscillations

3.3. Future Directions

4. Conclusions

Funding

Institutional Review Board Statement

Acknowledgments

Conflicts of Interest

References

- Ge, J.; Peng, G.; Lyu, B.; Wang, Y.; Zhuo, Y.; Niu, Z.; Tan, L.H.; Leff, A.P.; Gao, J.-H. Cross-language differences in the brain network subserving intelligible speech. Proc. Natl. Acad. Sci. USA 2015, 112, 2972–2977. [Google Scholar] [CrossRef] [PubMed]

- Poeppel, D. The maps problem and the mapping problem: Two challenges for a cognitive neuroscience of speech and language. Cogn. Neuropsychol. 2012, 29, 34–55. [Google Scholar] [CrossRef] [PubMed]

- Si, X.; Zhou, W.; Hong, B. Cooperative cortical network for categorical processing of Chinese lexical tone. Proc. Natl. Acad. Sci. USA 2017, 114, 12303–12308. [Google Scholar] [CrossRef] [PubMed]

- Hickok, G.; Poeppel, D. Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition 2004, 92, 67–99. [Google Scholar] [CrossRef] [PubMed]

- Wahl, M.; Marzinzik, F.; Friederici, A.D.; Hahne, A.; Kupsch, A.; Schneider, G.-H.; Saddy, D.; Curio, G.; Klostermann, F. The human thalamus processes syntactic and semantic language violations. Neuron 2008, 59, 695–707. [Google Scholar] [CrossRef] [PubMed]

- Cohen, L.; Billard, A. Social babbling: The emergence of symbolic gestures and words. Neural Netw. 2018, 106, 194–204. [Google Scholar] [CrossRef] [PubMed]

- Moser, E.I.; Kropff, E.; Moser, M.-B. Place cells, grid cells, and the brain’s spatial representation system. Annu. Rev. Neurosci. 2008, 31, 69–89. [Google Scholar] [CrossRef] [PubMed]

- Solstad, T.; Boccara, C.N.; Kropff, E.; Moser, M.-B.; Moser, E.I. Representation of geometric borders in the entorhinal cortex. Science 2008, 322, 1865–1868. [Google Scholar] [CrossRef] [PubMed]

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–656. [Google Scholar] [CrossRef]

- Nakamura, K.; Komatsu, M. Information seeking mechanism of neural populations in the lateral prefrontal cortex. Brain Res. 2019, 1707, 79–89. [Google Scholar] [CrossRef]

- Nelken, I.; Chechik, G.; Mrsic-Flogel, T.D.; King, A.J.; Schnupp, J.W.H. Encoding stimulus information by spike numbers and mean response time in primary auditory cortex. J. Comput. Neurosci. 2005, 19, 199–221. [Google Scholar] [CrossRef] [PubMed]

- Kayser, C.; Montemurro, M.A.; Logothetis, N.K.; Panzeri, S. Spike-phase coding boosts and stabilizes information carried by spatial and temporal spike patterns. Neuron 2009, 61, 597–608. [Google Scholar] [CrossRef] [PubMed]

- Furukawa, S.; Middlebrooks, J.C. Cortical representation of auditory space: Information-bearing features of spike patterns. J. Neurophysiol. 2002, 87, 1749–1762. [Google Scholar] [CrossRef] [PubMed]

- Averbeck, B.B.; Lee, D. Coding and transmission of information by neural ensembles. Trends Neurosci. 2004, 27, 225–230. [Google Scholar] [CrossRef] [PubMed]

- Lynn, C.W.; Papadopoulos, L.; Kahn, A.E.; Bassett, D.S. Human information processing in complex networks. Nat. Phys. 2020, 16, 965–973. [Google Scholar] [CrossRef]

- Lynn, C.W.; Kahn, A.E.; Nyema, N.; Bassett, D.S. Abstract representations of events arise from mental errors in learning and memory. Nat. Commun. 2020, 11, 2313. [Google Scholar] [CrossRef] [PubMed]

- Steinberg, J.; Sompolinsky, H. Associative memory of structured knowledge. Sci. Rep. 2022, 12, 21808. [Google Scholar] [CrossRef] [PubMed]

- Miller, G.A. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1956, 63, 81–97. [Google Scholar] [CrossRef] [PubMed]

- Shannon, C.E.; Weaver, W. The Mathematical Theory of Communication; The University of Illinois Press: Champaign, IL, USA, 1963. [Google Scholar]

- Doeller, C.F.; Barry, C.; Burgess, N. Evidence for grid cells in a human memory network. Nature 2010, 463, 657–661. [Google Scholar] [CrossRef]

- Ferreira, T.L.; Shammah-Lagnado, S.J.; Bueno, O.F.A.; Moreira, K.M.; Fornari, R.V.; Oliveira, M.G.M. The indirect amygdala-dorsal striatum pathway mediates conditioned freezing: Insights on emotional memory networks. Neuroscience 2008, 153, 84–94. [Google Scholar] [CrossRef]

- Krauzlis, R.J.; Bogadhi, A.R.; Herman, J.P.; Bollimunta, A. Selective attention without a neocortex. Cortex 2018, 102, 161–175. [Google Scholar] [CrossRef] [PubMed]

- Lai, C.S.W.; Franke, T.F.; Gan, W.-B. Opposite effects of fear conditioning and extinction on dendritic spine remodelling. Nature 2012, 483, 87–91. [Google Scholar] [CrossRef] [PubMed]

- Nader, K. Memory traces unbound. Trends Neurosci. 2003, 26, 65–72. [Google Scholar] [CrossRef] [PubMed]

- Gottfried, J.A.; Dolan, R.J. Human orbitofrontal cortex mediates extinction learning while accessing conditioned representations of value. Nat. Neurosci. 2004, 7, 1144–1152. [Google Scholar] [CrossRef] [PubMed]

- Suga, N. Principles of auditory information-processing derived from neuroethology. J. Exp. Biol. 1989, 146, 277–286. [Google Scholar] [CrossRef] [PubMed]

- Fujita, I.; Tanaka, K.; Ito, M.; Cheng, K. Columns for visual features of objects in monkey inferotemporal cortex. Nature 1992, 360, 343–346. [Google Scholar] [CrossRef] [PubMed]

- von der Emde, G.; Fetz, S. Distance, shape and more: Recognition of object features during active electrolocation in a weakly electric fish. J. Exp. Biol. 2007, 210, 3082–3095. [Google Scholar] [CrossRef] [PubMed]

- Ehret, G.; Haack, B. Ultrasound recognition in house mice: Key-Stimulus configuration and recognition mechanism. J. Comp. Physiol. 1982, 148, 245–251. [Google Scholar] [CrossRef]

- Kanwal, J.S.; Fitzpatrick, D.C.; Suga, N. Facilitatory and inhibitory frequency tuning of combination-sensitive neurons in the primary auditory cortex of mustached bats. J. Neurophysiol. 1999, 82, 2327–2345. [Google Scholar] [CrossRef]

- Esser, K.H.; Condon, C.J.; Suga, N.; Kanwal, J.S. Syntax processing by auditory cortical neurons in the FM-FM area of the mustached bat Pteronotus parnellii. Proc. Natl. Acad. Sci. USA 1997, 94, 14019–14024. [Google Scholar] [CrossRef] [PubMed]

- Xiao, Z.; Suga, N. Reorganization of the auditory cortex specialized for echo-delay processing in the mustached bat. Proc. Natl. Acad. Sci. USA 2004, 101, 1769–1774. [Google Scholar] [CrossRef] [PubMed]

- Fujita, K.; Kashimori, Y. Neural mechanism of corticofugal modulation of tuning property in frequency domain of bat’s auditory system. Neural Process. Lett. 2016, 43, 537–551. [Google Scholar] [CrossRef]

- Grossberg, S. On the development of feature detectors in the visual cortex with applications to learning and reaction-diffusion systems. Biol. Cybern. 1976, 21, 145–159. [Google Scholar] [CrossRef]

- Nelken, I.; Fishbach, A.; Las, L.; Ulanovsky, N.; Farkas, D. Primary auditory cortex of cats: Feature detection or something else? Biol. Cybern. 2003, 89, 397–406. [Google Scholar] [CrossRef]

- Chang, T.R.; Chiu, T.W.; Sun, X.; Poon, P.W.F. Modeling frequency modulated responses of midbrain auditory neurons based on trigger features and artificial neural networks. Brain Res. 2012, 1434, 90–101. [Google Scholar] [CrossRef] [PubMed]

- Goldshtein, A.; Akrish, S.; Giryes, R.; Yovel, Y. An artificial neural network explains how bats might use vision for navigation. Commun. Biol. 2022, 5, 1325. [Google Scholar] [CrossRef]

- Yang, L.; Zhan, X.; Chen, D.; Yan, J.; Loy, C.C.; Lin, D. Learning to cluster faces on an affinity graph. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2293–2301. [Google Scholar]

- Mahadevkar, S.; Patil, S.; Kotecha, K. Enhancement of handwritten text recognition using AI-based hybrid approach. MethodsX 2024, 12, 102654. [Google Scholar] [CrossRef]

- Diep, Q.B.; Phan, H.Y.; Truong, T.-C. Crossmixed convolutional neural network for digital speech recognition. PLoS ONE 2024, 19, e0302394. [Google Scholar] [CrossRef]

- Suga, N.; O’Neill, W.E. Neural axis representing target range in the auditory cortex of the mustache bat. Science 1979, 206, 351–353. [Google Scholar] [CrossRef]

- Ehret, G.; Bernecker, C. Low-frequency sound communication by mouse pups (Mus musculus): Wriggling calls release maternal behaviour. Anim. Behav. 1986, 34, 821–830. [Google Scholar] [CrossRef]

- Hubel, D.H.; Wiesel, T.N. Receptive fields and functional architecture of monkey striate cortex. J. Physiol. 1968, 195, 215–243. [Google Scholar] [CrossRef]

- Suga, N. Philosophy and stimulus design for neuroethology of complex-sound processing. Philos. Trans. R. Soc. Lond. B Biol. Sci. 1992, 336, 423–428. [Google Scholar] [CrossRef]

- Suga, N. Analysis of information-bearing elements in complex sounds by auditory neurons of bats. Audiology 1972, 11, 58–72. [Google Scholar] [CrossRef] [PubMed]

- Suga, N.; Niwa, H.; Taniguchi, I.; Margoliash, D. The personalized auditory cortex of the mustached bat: Adaptation for echolocation. J. Neurophysiol. 1987, 58, 643–654. [Google Scholar] [CrossRef]

- Mendoza Nava, H.; Holderied, M.W.; Pirrera, A.; Groh, R.M.J. Buckling-induced sound production in the aeroelastic tymbals of Yponomeuta. Proc. Natl. Acad. Sci. USA 2024, 121, e2313549121. [Google Scholar] [CrossRef] [PubMed]

- Baier, A.L.; Stelzer, K.-J.; Wiegrebe, L. Flutter sensitivity in FM bats. Part II: Amplitude modulation. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 2018, 204, 941–951. [Google Scholar] [CrossRef] [PubMed]

- Kuwabara, N.; Suga, N. Delay lines and amplitude selectivity are created in subthalamic auditory nuclei: The brachium of the inferior colliculus of the mustached bat. J. Neurophysiol. 1993, 69, 1713–1724. [Google Scholar] [CrossRef]

- Washington, S.D.; Kanwal, J.S. DSCF neurons within the primary auditory cortex of the mustached bat process frequency modulations present within social calls. J. Neurophysiol. 2008, 100, 3285–3304. [Google Scholar] [CrossRef]

- Ma, J.; Naumann, R.T.; Kanwal, J.S. Fear conditioned discrimination of frequency modulated sweeps within species-specific calls of mustached bats. PLoS ONE 2010, 5, e10579. [Google Scholar] [CrossRef]

- Andoni, S.; Pollak, G.D. Selectivity for spectral motion as a neural computation for encoding natural communication signals in bat inferior colliculus. J. Neurosci. 2011, 31, 16529–16540. [Google Scholar] [CrossRef]

- Giraudet, P.; Berthommier, F.; Chaput, M. Mitral cell temporal response patterns evoked by odor mixtures in the rat olfactory bulb. J. Neurophysiol. 2002, 88, 829–838. [Google Scholar] [CrossRef] [PubMed]

- Lindsay, S.M.; Vogt, R.G. Behavioral responses of newly hatched zebrafish (Danio rerio) to amino acid chemostimulants. Chem. Senses 2004, 29, 93–100. [Google Scholar] [CrossRef] [PubMed]

- Sigala, N.; Logothetis, N.K. Visual categorization shapes feature selectivity in the primate temporal cortex. Nature 2002, 415, 318–320. [Google Scholar] [CrossRef] [PubMed]

- Ramkumar, P.; Jas, M.; Pannasch, S.; Hari, R.; Parkkonen, L. Feature-specific information processing precedes concerted activation in human visual cortex. J. Neurosci. 2013, 33, 7691–7699. [Google Scholar] [CrossRef]

- Romanski, L.M.; Averbeck, B.B.; Diltz, M. Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J. Neurophysiol. 2005, 93, 734–747. [Google Scholar] [CrossRef] [PubMed]

- Romanski, L.M.; Averbeck, B.B. The primate cortical auditory system and neural representation of conspecific vocalizations. Annu. Rev. Neurosci. 2009, 32, 315–346. [Google Scholar] [CrossRef] [PubMed]

- Washington, S.D.; Kanwal, J.S. Excitatory tuning to upward and downward directions of frequency-modulated sweeps in the primary auditory cortex. In Proceedings of the Society for Neuroscience, Washington, DC, USA, 12–16 November 2005; Volume 35. [Google Scholar]

- Kanwal, J.S.; Gordon, M.; Peng, J.P.; Heinz-Esser, K. Auditory responses from the frontal cortex in the mustached bat, Pteronotus parnellii. NeuroReport 2000, 11, 367–372. [Google Scholar] [CrossRef] [PubMed]

- Fitzpatrick, D.C.; Kanwal, J.S.; Butman, J.A.; Suga, N. Combination-sensitive neurons in the primary auditory cortex of the mustached bat. J. Neurosci. 1993, 13, 931–940. [Google Scholar] [CrossRef]

- Averbeck, B.B.; Romanski, L.M. Probabilistic encoding of vocalizations in macaque ventral lateral prefrontal cortex. J. Neurosci. 2006, 26, 11023–11033. [Google Scholar] [CrossRef]

- Wagatsuma, N.; Hidaka, A.; Tamura, H. Correspondence between Monkey Visual Cortices and Layers of a Saliency Map Model Based on a Deep Convolutional Neural Network for Representations of Natural Images. eNeuro 2021, 8, 1–19. [Google Scholar] [CrossRef]

- Gallant, J.L.; Braun, J.; Van Essen, D.C. Selectivity for polar, hyperbolic, and Cartesian gratings in macaque visual cortex. Science 1993, 259, 100–103. [Google Scholar] [CrossRef]

- Oliva, A.; Torralba, A. The role of context in object recognition. Trends Cogn. Sci. 2007, 11, 520–527. [Google Scholar] [CrossRef]

- Stoll, J.; Thrun, M.; Nuthmann, A.; Einhäuser, W. Overt attention in natural scenes: Objects dominate features. Vision. Res. 2015, 107, 36–48. [Google Scholar] [CrossRef]

- Suga, N.; Gao, E.; Zhang, Y.; Ma, X.; Olsen, J.F. The corticofugal system for hearing: Recent progress. Proc. Natl. Acad. Sci. USA 2000, 97, 11807–11814. [Google Scholar] [CrossRef]

- Messinger, A.; Squire, L.R.; Zola, S.M.; Albright, T.D. Neural correlates of knowledge: Stable representation of stimulus associations across variations in behavioral performance. Neuron 2005, 48, 359–371. [Google Scholar] [CrossRef]

- Patterson, K.; Nestor, P.J.; Rogers, T.T. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat. Rev. Neurosci. 2007, 8, 976–987. [Google Scholar] [CrossRef] [PubMed]

- Knowledge. Available online: https://en.wikipedia.org/wiki/Knowledge (accessed on 15 January 2024).

- Kump, B.; Moskaliuk, J.; Cress, U.; Kimmerle, J. Cognitive foundations of organizational learning: Re-introducing the distinction between declarative and non-declarative knowledge. Front. Psychol. 2015, 6, 1489. [Google Scholar] [CrossRef]

- Hansson, I.; Buratti, S.; Allwood, C.M. Experts’ and novices’ perception of ignorance and knowledge in different research disciplines and its relation to belief in certainty of knowledge. Front. Psychol. 2017, 8, 377. [Google Scholar] [CrossRef] [PubMed]

- Howlett, J.R.; Paulus, M.P. The neural basis of testable and non-testable beliefs. PLoS ONE 2015, 10, e0124596. [Google Scholar] [CrossRef] [PubMed]

- Sainburg, T.; Gentner, T.Q. Toward a computational neuroethology of vocal communication: From bioacoustics to neurophysiology, emerging tools and future directions. Front. Behav. Neurosci. 2021, 15, 811737. [Google Scholar] [CrossRef]

- Wagner, H.; Egelhaaf, M.; Carr, C. Model organisms and systems in neuroethology: One hundred years of history and a look into the future. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 2024, 210, 227–242. [Google Scholar] [CrossRef]

- Lambert, K. Wild brains: The value of neuroethological approaches in preclinical behavioral neuroscience animal models. Neurosci. Biobehav. Rev. 2023, 146, 105044. [Google Scholar] [CrossRef] [PubMed]

- Roth, R.H.; Ding, J.B. From neurons to cognition: Technologies for precise recording of neural activity underlying behavior. BME Front. 2020, 2020, 7190517. [Google Scholar] [CrossRef] [PubMed]

- Du, J.; Riedel-Kruse, I.H.; Nawroth, J.C.; Roukes, M.L.; Laurent, G.; Masmanidis, S.C. High-resolution three-dimensional extracellular recording of neuronal activity with microfabricated electrode arrays. J. Neurophysiol. 2009, 101, 1671–1678. [Google Scholar] [CrossRef]

- O’Keefe, J. A computational theory of the hippocampal cognitive map. Prog. Brain Res. 1990, 83, 301–312. [Google Scholar] [PubMed]

- Lever, C.; Wills, T.; Cacucci, F.; Burgess, N.; O’Keefe, J. Long-term plasticity in hippocampal place-cell representation of environmental geometry. Nature 2002, 416, 90–94. [Google Scholar] [CrossRef]

- Moser, E.I.; Moser, M.-B.; McNaughton, B.L. Spatial representation in the hippocampal formation: A history. Nat. Neurosci. 2017, 20, 1448–1464. [Google Scholar] [CrossRef]

- Finkelstein, A.; Derdikman, D.; Rubin, A.; Foerster, J.N.; Las, L.; Ulanovsky, N. Three-dimensional head-direction coding in the bat brain. Nature 2015, 517, 159–164. [Google Scholar] [CrossRef]

- Geva-Sagiv, M.; Las, L.; Yovel, Y.; Ulanovsky, N. Spatial cognition in bats and rats: From sensory acquisition to multiscale maps and navigation. Nat. Rev. Neurosci. 2015, 16, 94–108. [Google Scholar] [CrossRef]

- Mello, C.V.; Ribeiro, S. ZENK protein regulation by song in the brain of songbirds. J. Comp. Neurol. 1998, 393, 426–438. [Google Scholar] [CrossRef]

- Jarvis, E.D.; Mello, C.V. Molecular mapping of brain areas involved in parrot vocal communication. J. Comp. Neurol. 2000, 419, 1–31. [Google Scholar] [CrossRef]

- Chatterjee, D.; Tran, S.; Shams, S.; Gerlai, R. A Simple Method for Immunohistochemical Staining of Zebrafish Brain Sections for c-fos Protein Expression. Zebrafish 2015, 12, 414–420. [Google Scholar] [CrossRef]

- Guthrie, K.M.; Anderson, A.J.; Leon, M.; Gall, C. Odor-induced increases in c-fos mRNA expression reveal an anatomical “unit” for odor processing in olfactory bulb. Proc. Natl. Acad. Sci. USA 1993, 90, 3329–3333. [Google Scholar] [CrossRef] [PubMed]

- Fosque, B.F.; Sun, Y.; Dana, H.; Yang, C.-T.; Ohyama, T.; Tadross, M.R.; Patel, R.; Zlatic, M.; Kim, D.S.; Ahrens, M.B.; et al. Neural circuits. Labeling of active neural circuits in vivo with designed calcium integrators. Science 2015, 347, 755–760. [Google Scholar] [CrossRef]

- Christophel, T.B. Distributed Visual Working Memory Stores Revealed by Multivariate Pattern Analyses. J. Vis. 2015, 15, 1407. [Google Scholar] [CrossRef]

- Linden, D.E.J. The working memory networks of the human brain. Neuroscientist 2007, 13, 257–267. [Google Scholar] [CrossRef]

- Sauseng, P.; Klimesch, W.; Heise, K.F.; Gruber, W.R.; Holz, E.; Karim, A.A.; Glennon, M.; Gerloff, C.; Birbaumer, N.; Hummel, F.C. Brain oscillatory substrates of visual short-term memory capacity. Curr. Biol. 2009, 19, 1846–1852. [Google Scholar] [CrossRef]

- Fiebig, F.; Lansner, A. Memory consolidation from seconds to weeks: A three-stage neural network model with autonomous reinstatement dynamics. Front. Comput. Neurosci. 2014, 8, 64. [Google Scholar] [CrossRef] [PubMed]

- Schafe, G.E.; LeDoux, J.E. Memory consolidation of auditory pavlovian fear conditioning requires protein synthesis and protein kinase A in the amygdala. J. Neurosci. 2000, 20, RC96. [Google Scholar] [CrossRef]

- Gal-Ben-Ari, S.; Rosenblum, K. Molecular mechanisms underlying memory consolidation of taste information in the cortex. Front. Behav. Neurosci. 2011, 5, 87. [Google Scholar] [CrossRef]

- Izquierdo, I.; Medina, J.H. Role of the amygdala, hippocampus and entorhinal cortex in memory consolidation and expression. Braz. J. Med. Biol. Res. 1993, 26, 573–589. [Google Scholar]

- McEwen, B.S. Mood disorders and allostatic load. Biol. Psychiatry 2003, 54, 200–207. [Google Scholar] [CrossRef]

- Toledo-Rodriguez, M.; Sandi, C. Stress during Adolescence Increases Novelty Seeking and Risk-Taking Behavior in Male and Female Rats. Front. Behav. Neurosci. 2011, 5, 17. [Google Scholar] [CrossRef]

- Shekhar, A.; Truitt, W.; Rainnie, D.; Sajdyk, T. Role of stress, corticotrophin releasing factor (CRF) and amygdala plasticity in chronic anxiety. Stress 2005, 8, 209–219. [Google Scholar] [CrossRef]

- Andersen, S.L.; Teicher, M.H. Stress, sensitive periods and maturational events in adolescent depression. Trends Neurosci. 2008, 31, 183–191. [Google Scholar] [CrossRef]

- Krugers, H.J.; Lucassen, P.J.; Karst, H.; Joëls, M. Chronic stress effects on hippocampal structure and synaptic function: Relevance for depression and normalization by anti-glucocorticoid treatment. Front. Synaptic Neurosci. 2010, 2, 24. [Google Scholar] [CrossRef]

- McEwen, B.S. Early life influences on life-long patterns of behavior and health. Ment. Retard. Dev. Disabil. Res. Rev. 2003, 9, 149–154. [Google Scholar] [CrossRef]

- Evans, J.R.; Torres-Pérez, J.V.; Miletto Petrazzini, M.E.; Riley, R.; Brennan, C.H. Stress reactivity elicits a tissue-specific reduction in telomere length in aging zebrafish (Danio rerio). Sci. Rep. 2021, 11, 339. [Google Scholar] [CrossRef]

- Cleber Gama de Barcellos Filho, P.; Campos Zanelatto, L.; Amélia Aparecida Santana, B.; Calado, R.T.; Rodrigues Franci, C. Effects chronic administration of corticosterone and estrogen on HPA axis activity and telomere length in brain areas of female rats. Brain Res. 2021, 1750, 147152. [Google Scholar] [CrossRef]

- Maguire, E.A.; Frith, C.D. The brain network associated with acquiring semantic knowledge. Neuroimage 2004, 22, 171–178. [Google Scholar] [CrossRef]

- Kotkat, A.H.; Katzner, S.; Busse, L. Neural networks: Explaining animal behavior with prior knowledge of the world. Curr. Biol. 2023, 33, R138–R140. [Google Scholar] [CrossRef]

- Livingstone, M.; Hubel, D. Segregation of form, color, movement, and depth: Anatomy, physiology, and perception. Science 1988, 240, 740–749. [Google Scholar] [CrossRef]

- Baumgärtel, K.; Genoux, D.; Welzl, H.; Tweedie-Cullen, R.Y.; Koshibu, K.; Livingstone-Zatchej, M.; Mamie, C.; Mansuy, I.M. Control of the establishment of aversive memory by calcineurin and Zif268. Nat. Neurosci. 2008, 11, 572–578. [Google Scholar] [CrossRef]

- Moser, M.B.; Moser, E.I. Functional differentiation in the hippocampus. Hippocampus 1998, 8, 608–619. [Google Scholar] [CrossRef]

- Fanselow, M.S.; Dong, H.-W. Are the dorsal and ventral hippocampus functionally distinct structures? Neuron 2010, 65, 7–19. [Google Scholar] [CrossRef]

- White, N.M.; McDonald, R.J. Acquisition of a spatial conditioned place preference is impaired by amygdala lesions and improved by fornix lesions. Behav. Brain Res. 1993, 55, 269–281. [Google Scholar] [CrossRef]

- Pikkarainen, M.; Rönkkö, S.; Savander, V.; Insausti, R.; Pitkänen, A. Projections from the lateral, basal, and accessory basal nuclei of the amygdala to the hippocampal formation in rat. J. Comp. Neurol. 1999, 403, 229–260. [Google Scholar] [CrossRef]

- Ghashghaei, H.T.; Hilgetag, C.C.; Barbas, H. Sequence of information processing for emotions based on the anatomic dialogue between prefrontal cortex and amygdala. Neuroimage 2007, 34, 905–923. [Google Scholar] [CrossRef]

- Fernández-Ruiz, A.; Oliva, A.; Nagy, G.A.; Maurer, A.P.; Berényi, A.; Buzsáki, G. Entorhinal-CA3 Dual-Input Control of Spike Timing in the Hippocampus by Theta-Gamma Coupling. Neuron 2017, 93, 1213–1226. [Google Scholar] [CrossRef]

- Aoi, M.C.; Mante, V.; Pillow, J.W. Prefrontal cortex exhibits multidimensional dynamic encoding during decision-making. Nat. Neurosci. 2020, 23, 1410–1420. [Google Scholar] [CrossRef]

- Knight, R.T.; Stuss, D.T. Prefrontal cortex: The present and the future. In Principles of Frontal Lobe Function; Stuss, D.T., Knight, R.T., Eds.; Oxford University Press: New York, NY, USA, 2002; pp. 573–598. ISBN 9780195134971. [Google Scholar]

- Grossberg, S. A neural model of intrinsic and extrinsic hippocampal theta rhythms: Anatomy, neurophysiology, and function. Front. Syst. Neurosci. 2021, 15, 665052. [Google Scholar] [CrossRef] [PubMed]

- Carpenter, G.A.; Grossberg, S.; Mehanian, C. Invariant recognition of cluttered scenes by a self-organizing ART architecture: CORT-X boundary segmentation. Neural Netw. 1989, 2, 169–181. [Google Scholar] [CrossRef]

- Freeman, W.J. Mass Action in the Nervous System; Academic Press: New York, NY, USA, 1975; ISBN 9780122671500. [Google Scholar]

- Buzsáki, G.; Moser, E.I. Memory, navigation and theta rhythm in the hippocampal-entorhinal system. Nat. Neurosci. 2013, 16, 130–138. [Google Scholar] [CrossRef] [PubMed]

- Bermudez-Contreras, E.; Clark, B.J.; Wilber, A. The neuroscience of spatial navigation and the relationship to artificial intelligence. Front. Comput. Neurosci. 2020, 14, 63. [Google Scholar] [CrossRef] [PubMed]

- Rolls, E.T.; Treves, A. A theory of hippocampal function: New developments. Prog. Neurobiol. 2024, 238, 102636. [Google Scholar] [CrossRef] [PubMed]

- Treves, A.; Rolls, E.T. Computational analysis of the role of the hippocampus in memory. Hippocampus 1994, 4, 374–391. [Google Scholar] [CrossRef]

- Rolls, E.T. Neurons including hippocampal spatial view cells, and navigation in primates including humans. Hippocampus 2021, 31, 593–611. [Google Scholar] [CrossRef] [PubMed]

- Kohler, E.; Keysers, C.; Umiltà, M.A.; Fogassi, L.; Gallese, V.; Rizzolatti, G. Hearing sounds, understanding actions: Action representation in mirror neurons. Science 2002, 297, 846–848. [Google Scholar] [CrossRef]

- Heyes, C. Where do mirror neurons come from? Neurosci. Biobehav. Rev. 2010, 34, 575–583. [Google Scholar] [CrossRef]

- Keysers, C.; Gazzola, V. Hebbian learning and predictive mirror neurons for actions, sensations and emotions. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2014, 369, 20130175. [Google Scholar] [CrossRef] [PubMed]

- Briggman, K.L.; Kristan, W.B. Multifunctional pattern-generating circuits. Annu. Rev. Neurosci. 2008, 31, 271–294. [Google Scholar] [CrossRef] [PubMed]

- Queenan, B.N.; Zhang, Z.; Ma, J.; Naumann, R.T.; Mazhar, S.; Kanwal, J.S. Multifunctional cortical neurons exhibit response enhancement during rapid switching from echolocation to communication sound processing. In Proceedings of the Society for Neuroscience, Abstract #275.21. San Diego, CA, USA, 13–17 November 2010. [Google Scholar]

- Suga, N. Multi-function theory for cortical processing of auditory information: Implications of single-unit and lesion data for future research. J. Comp. Physiol. A 1994, 175, 135–144. [Google Scholar] [CrossRef] [PubMed]

- Parker, J.; Khwaja, R.; Cymbalyuk, G. Asymmetric control of coexisting slow and fast rhythms in a multifunctional central pattern generator: A model study. Neurophysiology 2019, 51, 390–399. [Google Scholar] [CrossRef]

- Mahon, B.Z.; Caramazza, A. What drives the organization of object knowledge in the brain? Trends Cogn. Sci. 2011, 15, 97–103. [Google Scholar] [CrossRef] [PubMed]

- Martin, A.; Wiggs, C.L.; Ungerleider, L.G.; Haxby, J.V. Neural correlates of category-specific knowledge. Nature 1996, 379, 649–652. [Google Scholar] [CrossRef] [PubMed]

- Eiermann, A.; Esser, K.H. Auditory responses from the frontal cortex in the short-tailed fruit bat Carollia perspicillata. NeuroReport 2000, 11, 421–425. [Google Scholar] [CrossRef] [PubMed]

- Hage, S.R. Auditory and audio-vocal responses of single neurons in the monkey ventral premotor cortex. Hear. Res. 2018, 366, 82–89. [Google Scholar] [CrossRef] [PubMed]

- Nicolelis, M.A.L. Computing with thalamocortical ensembles during different behavioural states. J. Physiol. 2005, 566, 37–47. [Google Scholar] [CrossRef]

- Pugh, K.; Prusak, L. Designing Effective Knowledge Networks; MIT Press: Cambridge, MA, USA, 2013. [Google Scholar]

- García-Rosales, F.; López-Jury, L.; González-Palomares, E.; Wetekam, J.; Cabral-Calderín, Y.; Kiai, A.; Kössl, M.; Hechavarría, J.C. Echolocation-related reversal of information flow in a cortical vocalization network. Nat. Commun. 2022, 13, 3642. [Google Scholar] [CrossRef] [PubMed]

- Hackett, T.A. Information flow in the auditory cortical network. Hear. Res. 2011, 271, 133–146. [Google Scholar] [CrossRef]

- Bowers, J.S.; Vankov, I.I.; Damian, M.F.; Davis, C.J. Why do some neurons in cortex respond to information in a selective manner? Insights from artificial neural networks. Cognition 2016, 148, 47–63. [Google Scholar] [CrossRef] [PubMed]

- Bullmore, E.; Sporns, O. Complex brain networks: Graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 2009, 10, 186–198. [Google Scholar] [CrossRef] [PubMed]

- Feldt, S.; Bonifazi, P.; Cossart, R. Dissecting functional connectivity of neuronal microcircuits: Experimental and theoretical insights. Trends Neurosci. 2011, 34, 225–236. [Google Scholar] [CrossRef] [PubMed]

- Izhikevich, E.M. Dynamical Systems in Neuroscience: The Geometry of Excitability and Bursting; The MIT Press: Cambridge, MA, USA, 2006; ISBN 9780262276078. [Google Scholar]

- Evans, L.C. Partial Differential Equations (The Graduate Studies in Mathematics, 19), 2nd ed.; American Mathematical Society: Providence, RI, USA, 2022; p. 662. ISBN 978-1-4704-6942-9. [Google Scholar]

- Sacramento, J.; Wichert, A.; van Rossum, M.C.W. Energy Efficient Sparse Connectivity from Imbalanced Synaptic Plasticity Rules. PLoS Comput. Biol. 2015, 11, e1004265. [Google Scholar] [CrossRef] [PubMed]

- Kanwal, J.S.; Peng, J.P.; Esser, K.H. Auditory communication and echolocation in the mustached bat: Computing for dual functions within single neurons. In Echolocation in Bats and Dolphins; Thomas, J.A., Moss, C.J., Vater, M., Eds.; University of Chicago Press: Chicago, IL, USA, 2004; pp. 201–208. [Google Scholar]

- Santiago, A.F. Plasticity in the Prairie Vole: Contextual Factors and Molecular Mechanisms Modulating Bond Plasticity in the Prairie Vole (Microtus ochrogaster). Doctoral Dissertation, Cornell University, Ithaca, NY, USA, 2024. [Google Scholar]

- Cho, J.Y.; Sternberg, P.W. Multilevel modulation of a sensory motor circuit during C. elegans sleep and arousal. Cell 2014, 156, 249–260. [Google Scholar] [CrossRef] [PubMed]

- Takeishi, A.; Yeon, J.; Harris, N.; Yang, W.; Sengupta, P. Feeding state functionally reconfigures a sensory circuit to drive thermosensory behavioral plasticity. eLife 2020, 9, e61167. [Google Scholar] [CrossRef] [PubMed]

- Voigt, K.; Razi, A.; Harding, I.H.; Andrews, Z.B.; Verdejo-Garcia, A. Neural network modelling reveals changes in directional connectivity between cortical and hypothalamic regions with increased BMI. Int. J. Obes. 2021, 45, 2447–2454. [Google Scholar] [CrossRef] [PubMed]

- Dupre, C.; Yuste, R. Non-overlapping Neural Networks in Hydra vulgaris. Curr. Biol. 2017, 27, 1085–1097. [Google Scholar] [CrossRef] [PubMed]

- Keramidioti, A.; Schneid, S.; Busse, C.; von Laue, C.C.; Bertulat, B.; Salvenmoser, W.; Heß, M.; Alexandrova, O.; Glauber, K.M.; Steele, R.E.; et al. A new look at the architecture and dynamics of the Hydra nerve net. eLife 2024, 12, RP87330. [Google Scholar] [CrossRef]

- Musser, J.M.; Schippers, K.J.; Nickel, M.; Mizzon, G.; Kohn, A.B.; Pape, C.; Ronchi, P.; Papadopoulos, N.; Tarashansky, A.J.; Hammel, J.U.; et al. Profiling cellular diversity in sponges informs animal cell type and nervous system evolution. Science 2021, 374, 717–723. [Google Scholar] [CrossRef]

- Schnell, A.K.; Amodio, P.; Boeckle, M.; Clayton, N.S. How intelligent is a cephalopod? Lessons from comparative cognition. Biol. Rev. Camb. Philos. Soc. 2021, 96, 162–178. [Google Scholar] [CrossRef] [PubMed]

- Parent, A.; Hazrati, L.N. Functional anatomy of the basal ganglia. I. The cortico-basal ganglia-thalamo-cortical loop. Brain Res. Brain Res. Rev. 1995, 20, 91–127. [Google Scholar] [CrossRef] [PubMed]

- Braine, A.; Georges, F. Emotion in action: When emotions meet motor circuits. Neurosci. Biobehav. Rev. 2023, 155, 105475. [Google Scholar] [CrossRef] [PubMed]

- Lettvin, J.; Maturana, H.; McCulloch, W.; Pitts, W. What the Frog’s Eye Tells the Frog’s Brain. Proc. IRE 1959, 47, 1940–1951. [Google Scholar] [CrossRef]

- Maisak, M.S.; Haag, J.; Ammer, G.; Serbe, E.; Meier, M.; Leonhardt, A.; Schilling, T.; Bahl, A.; Rubin, G.M.; Nern, A.; et al. A directional tuning map of Drosophila elementary motion detectors. Nature 2013, 500, 212–216. [Google Scholar] [CrossRef] [PubMed]

- Edens, B.M.; Stundl, J.; Urrutia, H.A.; Bronner, M.E. Neural crest origin of sympathetic neurons at the dawn of vertebrates. Nature 2024, 629, 121–126. [Google Scholar] [CrossRef] [PubMed]

- Bedois, A.M.H.; Parker, H.J.; Price, A.J.; Morrison, J.A.; Bronner, M.E.; Krumlauf, R. Sea lamprey enlightens the origin of the coupling of retinoic acid signaling to vertebrate hindbrain segmentation. Nat. Commun. 2024, 15, 1538. [Google Scholar] [CrossRef] [PubMed]

- Corominas-Murtra, B.; Goñi, J.; Solé, R.V.; Rodríguez-Caso, C. On the origins of hierarchy in complex networks. Proc. Natl. Acad. Sci. USA 2013, 110, 13316–13321. [Google Scholar] [CrossRef] [PubMed]

- Watabe-Uchida, M.; Eshel, N.; Uchida, N. Neural circuitry of reward prediction error. Annu. Rev. Neurosci. 2017, 40, 373–394. [Google Scholar] [CrossRef]

- Riceberg, J.S.; Shapiro, M.L. Orbitofrontal Cortex Signals Expected Outcomes with Predictive Codes When Stable Contingencies Promote the Integration of Reward History. J. Neurosci. 2017, 37, 2010–2021. [Google Scholar] [CrossRef]

- Jordan, R. The locus coeruleus as a global model failure system. Trends Neurosci. 2024, 47, 92–105. [Google Scholar] [CrossRef]

- Korzyukov, O.; Lee, Y.; Bronder, A.; Wagner, M.; Gumenyuk, V.; Larson, C.R.; Hammer, M.J. Auditory-vocal control system is object for predictive processing within seconds time range. Brain Res. 2020, 1732, 146703. [Google Scholar] [CrossRef]

- Mikulasch, F.A.; Rudelt, L.; Wibral, M.; Priesemann, V. Where is the error? Hierarchical predictive coding through dendritic error computation. Trends Neurosci. 2023, 46, 45–59. [Google Scholar] [CrossRef]

- Goldberg, J.M.; Fernandez, C. Physiology of peripheral neurons innervating semicircular canals of the squirrel monkey. I. Resting discharge and response to constant angular accelerations. J. Neurophysiol. 1971, 34, 635–660. [Google Scholar] [CrossRef]

- Knafo, S.; Wyart, C. Active mechanosensory feedback during locomotion in the zebrafish spinal cord. Curr. Opin. Neurobiol. 2018, 52, 48–53. [Google Scholar] [CrossRef]

- Henderson, K.W.; Menelaou, E.; Hale, M.E. Sensory neurons in the spinal cord of zebrafish and their local connectivity. Curr. Opin. Physiol. 2019, 8, 136–140. [Google Scholar] [CrossRef]

- Bottjer, S.W. Silent synapses in a thalamo-cortical circuit necessary for song learning in zebra finches. J. Neurophysiol. 2005, 94, 3698–3707. [Google Scholar] [CrossRef]

- Xu, W.; Löwel, S.; Schlüter, O.M. Silent Synapse-Based Mechanisms of Critical Period Plasticity. Front. Cell. Neurosci. 2020, 14, 213. [Google Scholar] [CrossRef] [PubMed]

- Buhusi, C.V. The across-fiber pattern theory and fuzzy logic: A matter of taste. Physiol. Behav. 2000, 69, 97–106. [Google Scholar] [CrossRef] [PubMed]

- Kanwal, J.S.; Ehret, G. Behavior and Neurodynamics for Auditory Communication; Cambridge University Press: Cambridge, UK, 2006. [Google Scholar]

- Vlamou, E.; Papadopoulos, B. Fuzzy logic systems and medical applications. AIMS Neurosci. 2019, 6, 266–272. [Google Scholar] [CrossRef] [PubMed]

- Cacciatore, S.; Luchinat, C.; Tenori, L. Knowledge discovery by accuracy maximization. Proc. Natl. Acad. Sci. USA 2014, 111, 5117–5122. [Google Scholar] [CrossRef] [PubMed]

- Brede, M.; Stella, M.; Kalloniatis, A.C. Competitive influence maximization and enhancement of synchronization in populations of non-identical Kuramoto oscillators. Sci. Rep. 2018, 8, 702. [Google Scholar] [CrossRef] [PubMed]

- Nikonov, A.A.; Finger, T.E.; Caprio, J. Beyond the olfactory bulb: An odotopic map in the forebrain. Proc. Natl. Acad. Sci. USA 2005, 102, 18688–18693. [Google Scholar] [CrossRef] [PubMed]

- Fuss, S.H.; Korsching, S.I. Odorant feature detection: Activity mapping of structure response relationships in the zebrafish olfactory bulb. J. Neurosci. 2001, 21, 8396–8407. [Google Scholar] [CrossRef]

- Stettler, D.D.; Axel, R. Representations of odor in the piriform cortex. Neuron 2009, 63, 854–864. [Google Scholar] [CrossRef] [PubMed]

- Wang, F.; Nemes, A.; Mendelsohn, M.; Axel, R. Odorant receptors govern the formation of a precise topographic map. Cell 1998, 93, 47–60. [Google Scholar] [CrossRef] [PubMed]

- Ohlemiller, K.K.; Kanwal, J.S.; Suga, N. Facilitative responses to species-specific calls in cortical FM-FM neurons of the mustached bat. NeuroReport 1996, 7, 1749–1755. [Google Scholar] [CrossRef] [PubMed]

- García-Rosales, F.; López-Jury, L.; González-Palomares, E.; Cabral-Calderín, Y.; Hechavarría, J.C. Fronto-Temporal Coupling Dynamics During Spontaneous Activity and Auditory Processing in the Bat Carollia perspicillata. Front. Syst. Neurosci. 2020, 14, 14. [Google Scholar] [CrossRef] [PubMed]

- Martin, L.M.; García-Rosales, F.; Beetz, M.J.; Hechavarría, J.C. Processing of temporally patterned sounds in the auditory cortex of Seba’s short-tailed bat, Carollia perspicillata. Eur. J. Neurosci. 2017, 46, 2365–2379. [Google Scholar] [CrossRef] [PubMed]

- Tseng, Y.-L.; Liu, H.-H.; Liou, M.; Tsai, A.C.; Chien, V.S.C.; Shyu, S.-T.; Yang, Z.-S. Lingering Sound: Event-Related Phase-Amplitude Coupling and Phase-Locking in Fronto-Temporo-Parietal Functional Networks During Memory Retrieval of Music Melodies. Front. Hum. Neurosci. 2019, 13, 150. [Google Scholar] [CrossRef]

- Yang, L.; Chen, X.; Yang, L.; Li, M.; Shang, Z. Phase-Amplitude Coupling between Theta Rhythm and High-Frequency Oscillations in the Hippocampus of Pigeons during Navigation. Animals 2024, 14, 439. [Google Scholar] [CrossRef] [PubMed]

- Vivekananda, U.; Bush, D.; Bisby, J.A.; Baxendale, S.; Rodionov, R.; Diehl, B.; Chowdhury, F.A.; McEvoy, A.W.; Miserocchi, A.; Walker, M.C.; et al. Theta power and theta-gamma coupling support long-term spatial memory retrieval. Hippocampus 2021, 31, 213–220. [Google Scholar] [CrossRef]

- Daume, J.; Kamiński, J.; Schjetnan, A.G.P.; Salimpour, Y.; Khan, U.; Kyzar, M.; Reed, C.M.; Anderson, W.S.; Valiante, T.A.; Mamelak, A.N.; et al. Control of working memory by phase-amplitude coupling of human hippocampal neurons. Nature 2024, 629, 393–401. [Google Scholar] [CrossRef]

- Mohan, U.R.; Zhang, H.; Ermentrout, B.; Jacobs, J. The direction of theta and alpha travelling waves modulates human memory processing. Nat. Hum. Behav. 2024, 8, 1124–1135. [Google Scholar] [CrossRef] [PubMed]

- Aggarwal, A.; Brennan, C.; Luo, J.; Chung, H.; Contreras, D.; Kelz, M.B.; Proekt, A. Visual evoked feedforward-feedback traveling waves organize neural activity across the cortical hierarchy in mice. Nat. Commun. 2022, 13, 4754. [Google Scholar] [CrossRef] [PubMed]

- Wu, Y.; Chen, Z.S. Computational models for state-dependent traveling waves in hippocampal formation. BioRxiv 2023. [Google Scholar] [CrossRef]

- Wu, J.Y.; Guan, L.; Bai, L.; Yang, Q. Spatiotemporal properties of an evoked population activity in rat sensory cortical slices. J. Neurophysiol. 2001, 86, 2461–2474. [Google Scholar] [CrossRef]

- Erkol, Ş.; Mazzilli, D.; Radicchi, F. Influence maximization on temporal networks. Phys. Rev. E 2020, 102, 042307. [Google Scholar] [CrossRef]

- Medvedev, A.V.; Chiao, F.; Kanwal, J.S. Modeling complex tone perception: Grouping harmonics with combination-sensitive neurons. Biol. Cybern. 2002, 86, 497–505. [Google Scholar] [CrossRef] [PubMed]

- Aharon, G.; Sadot, M.; Yovel, Y. Bats Use Path Integration Rather Than Acoustic Flow to Assess Flight Distance along Flyways. Curr. Biol. 2017, 27, 3650–3657.e3. [Google Scholar] [CrossRef]

- Merlin, C.; Gegear, R.J.; Reppert, S.M. Antennal circadian clocks coordinate sun compass orientation in migratory monarch butterflies. Science 2009, 325, 1700–1704. [Google Scholar] [CrossRef] [PubMed]

- Shukla, V.; Rani, S.; Malik, S.; Kumar, V.; Sadananda, M. Neuromorphometric changes associated with photostimulated migratory phenotype in the Palaearctic-Indian male redheaded bunting. Exp. Brain Res. 2020, 238, 2245–2256. [Google Scholar] [CrossRef] [PubMed]

- Irachi, S.; Hall, D.J.; Fleming, M.S.; Maugars, G.; Björnsson, B.T.; Dufour, S.; Uchida, K.; McCormick, S.D. Photoperiodic regulation of pituitary thyroid-stimulating hormone and brain deiodinase in Atlantic salmon. Mol. Cell. Endocrinol. 2021, 519, 111056. [Google Scholar] [CrossRef] [PubMed]

- Glimcher, P.W.; Rustichini, A. Neuroeconomics: The consilience of brain and decision. Science 2004, 306, 447–452. [Google Scholar] [CrossRef] [PubMed]

- Tversky, A.; Kahneman, D. Judgment under Uncertainty: Heuristics and Biases. Science 1974, 185, 1124–1131. [Google Scholar] [CrossRef] [PubMed]

- Luo, J.; Yu, R. Follow the heart or the head? The interactive influence model of emotion and cognition. Front. Psychol. 2015, 6, 573. [Google Scholar] [CrossRef] [PubMed]

- Fellows, L.K. The cognitive neuroscience of human decision making: A review and conceptual framework. Behav. Cogn. Neurosci. Rev. 2004, 3, 159–172. [Google Scholar] [CrossRef] [PubMed]

- Pearson, J.M.; Watson, K.K.; Platt, M.L. Decision making: The neuroethological turn. Neuron 2014, 82, 950–965. [Google Scholar] [CrossRef] [PubMed]

- Basten, U.; Biele, G.; Heekeren, H.R.; Fiebach, C.J. How the brain integrates costs and benefits during decision making. Proc. Natl. Acad. Sci. USA 2010, 107, 21767–21772. [Google Scholar] [CrossRef]

- Floresco, S.B.; Ghods-Sharifi, S. Amygdala-prefrontal cortical circuitry regulates effort-based decision making. Cereb. Cortex 2007, 17, 251–260. [Google Scholar] [CrossRef] [PubMed]

- Hikosaka, O.; Takikawa, Y.; Kawagoe, R. Role of the basal ganglia in the control of purposive saccadic eye movements. Physiol. Rev. 2000, 80, 953–978. [Google Scholar] [CrossRef] [PubMed]

- Grillner, S.; Robertson, B. The basal ganglia over 500 million years. Curr. Biol. 2016, 26, R1088–R1100. [Google Scholar] [CrossRef] [PubMed]

- Cregg, J.M.; Leiras, R.; Montalant, A.; Wanken, P.; Wickersham, I.R.; Kiehn, O. Brainstem neurons that command mammalian locomotor asymmetries. Nat. Neurosci. 2020, 23, 730–740. [Google Scholar] [CrossRef] [PubMed]

- DiDomenico, R.; Nissanov, J.; Eaton, R.C. Lateralization and adaptation of a continuously variable behavior following lesions of a reticulospinal command neuron. Brain Res. 1988, 473, 15–28. [Google Scholar] [CrossRef] [PubMed]

- Schemann, M.; Grundy, D. Electrophysiological identification of vagally innervated enteric neurons in guinea pig stomach. Am. J. Physiol. 1992, 263, G709–G718. [Google Scholar] [CrossRef] [PubMed]

- Jing, J.; Vilim, F.S.; Horn, C.C.; Alexeeva, V.; Hatcher, N.G.; Sasaki, K.; Yashina, I.; Zhurov, Y.; Kupfermann, I.; Sweedler, J.V.; et al. From hunger to satiety: Reconfiguration of a feeding network by Aplysia neuropeptide Y. J. Neurosci. 2007, 27, 3490–3502. [Google Scholar] [CrossRef] [PubMed]

- Nugent, M.; St Pierre, M.; Brown, A.; Nassar, S.; Parmar, P.; Kitase, Y.; Duck, S.A.; Pinto, C.; Jantzie, L.; Fung, C.; et al. Sexual Dimorphism in the Closure of the Hippocampal Postnatal Critical Period of Synaptic Plasticity after Intrauterine Growth Restriction: Link to Oligodendrocyte and Glial Dysregulation. Dev. Neurosci. 2023, 45, 234–254. [Google Scholar] [CrossRef] [PubMed]

- Schreurs, B.G.; O’Dell, D.E.; Wang, D. The role of cerebellar intrinsic neuronal excitability, synaptic plasticity, and perineuronal nets in eyeblink conditioning. Biology 2024, 13, 200. [Google Scholar] [CrossRef] [PubMed]

- Christensen, A.C.; Lensjø, K.K.; Lepperød, M.E.; Dragly, S.-A.; Sutterud, H.; Blackstad, J.S.; Fyhn, M.; Hafting, T. Perineuronal nets stabilize the grid cell network. Nat. Commun. 2021, 12, 253. [Google Scholar] [CrossRef]

- Karetko, M.; Skangiel-Kramska, J. Diverse functions of perineuronal nets. Acta Neurobiol. Exp. 2009, 69, 564–577. [Google Scholar] [CrossRef]

- Sorvari, H.; Miettinen, R.; Soininen, H.; Pitkänen, A. Parvalbumin-immunoreactive neurons make inhibitory synapses on pyramidal cells in the human amygdala: A light and electron microscopic study. Neurosci. Lett. 1996, 217, 93–96. [Google Scholar] [CrossRef] [PubMed]

- Deco, G.; Rolls, E.T. Attention, short-term memory, and action selection: A unifying theory. Prog. Neurobiol. 2005, 76, 236–256. [Google Scholar] [CrossRef] [PubMed]

- Jensen, O.; Kaiser, J.; Lachaux, J.-P. Human gamma-frequency oscillations associated with attention and memory. Trends Neurosci. 2007, 30, 317–324. [Google Scholar] [CrossRef] [PubMed]

- Flechsenhar, A.; Larson, O.; End, A.; Gamer, M. Investigating overt and covert shifts of attention within social naturalistic scenes. J. Vis. 2018, 18, 11. [Google Scholar] [CrossRef] [PubMed]

- Belardinelli, A.; Herbort, O.; Butz, M.V. Goal-oriented gaze strategies afforded by object interaction. Vision Res. 2015, 106, 47–57. [Google Scholar] [CrossRef] [PubMed]

- Okamoto, H.; Cherng, B.-W.; Nakajo, H.; Chou, M.-Y.; Kinoshita, M. Habenula as the experience-dependent controlling switchboard of behavior and attention in social conflict and learning. Curr. Opin. Neurobiol. 2021, 68, 36–43. [Google Scholar] [CrossRef] [PubMed]

- Mohanty, A.; Gitelman, D.R.; Small, D.M.; Mesulam, M.M. The spatial attention network interacts with limbic and monoaminergic systems to modulate motivation-induced attention shifts. Cereb. Cortex 2008, 18, 2604–2613. [Google Scholar] [CrossRef] [PubMed]

- Salmi, J.; Rinne, T.; Koistinen, S.; Salonen, O.; Alho, K. Brain networks of bottom-up triggered and top-down controlled shifting of auditory attention. Brain Res. 2009, 1286, 155–164. [Google Scholar] [CrossRef] [PubMed]

- Tamber-Rosenau, B.J.; Esterman, M.; Chiu, Y.-C.; Yantis, S. Cortical mechanisms of cognitive control for shifting attention in vision and working memory. J. Cogn. Neurosci. 2011, 23, 2905–2919. [Google Scholar] [CrossRef]

- Parker, M.O.; Gaviria, J.; Haigh, A.; Millington, M.E.; Brown, V.J.; Combe, F.J.; Brennan, C.H. Discrimination reversal and attentional sets in zebrafish (Danio rerio). Behav. Brain Res. 2012, 232, 264–268. [Google Scholar] [CrossRef]

- Fodoulian, L.; Gschwend, O.; Huber, C.; Mutel, S.; Salazar, R.; Leone, R.; Renfer, J.-R.; Ekundayo, K.; Rodriguez, I.; Carleton, A. The claustrum-medial prefrontal cortex network controls attentional set-shifting. BioRxiv 2020. [Google Scholar] [CrossRef]

- Buschman, T.J.; Miller, E.K. Shifting the spotlight of attention: Evidence for discrete computations in cognition. Front. Hum. Neurosci. 2010, 4, 194. [Google Scholar] [CrossRef]

- Goldberg, M.E.; Bisley, J.; Powell, K.D.; Gottlieb, J.; Kusunoki, M. The role of the lateral intraparietal area of the monkey in the generation of saccades and visuospatial attention. Ann. N. Y. Acad. Sci. 2002, 956, 205–215. [Google Scholar] [CrossRef]

- Amo, R.; Aizawa, H.; Takahashi, R.; Kobayashi, M.; Takahoko, M.; Aoki, T.; Okamoto, H. Identification of the zebrafish ventral habenula as a homologue of the mammalian lateral habenula. Neurosci. Res. 2009, 65, S227. [Google Scholar] [CrossRef]

- Puentes-Mestril, C.; Roach, J.; Niethard, N.; Zochowski, M.; Aton, S.J. How rhythms of the sleeping brain tune memory and synaptic plasticity. Sleep 2019, 42, 1–14. [Google Scholar] [CrossRef] [PubMed]

- Geva-Sagiv, M.; Mankin, E.A.; Eliashiv, D.; Epstein, S.; Cherry, N.; Kalender, G.; Tchemodanov, N.; Nir, Y.; Fried, I. Augmenting hippocampal-prefrontal neuronal synchrony during sleep enhances memory consolidation in humans. Nat. Neurosci. 2023, 26, 1100–1110. [Google Scholar] [CrossRef]

- Capellini, I.; McNamara, P.; Preston, B.T.; Nunn, C.L.; Barton, R.A. Does sleep play a role in memory consolidation? A comparative test. PLoS ONE 2009, 4, e4609. [Google Scholar] [CrossRef]

- Oyanedel, C.N.; Binder, S.; Kelemen, E.; Petersen, K.; Born, J.; Inostroza, M. Role of slow oscillatory activity and slow wave sleep in consolidation of episodic-like memory in rats. Behav. Brain Res. 2014, 275, 126–130. [Google Scholar] [CrossRef] [PubMed]

- Yeganegi, H.; Ondracek, J.M. Multi-channel EEG recordings reveal age-related differences in the sleep of juvenile and adult zebra finches. Sci. Rep. 2023. [Google Scholar] [CrossRef]

- Buchert, S.N.; Murakami, P.; Kalavadia, A.H.; Reyes, M.T.; Sitaraman, D. Sleep correlates with behavioral decision making critical for reproductive output in Drosophila melanogaster. Comp. Biochem. Physiol. Part A Mol. Integr. Physiol. 2022, 264, 111114. [Google Scholar] [CrossRef]

- Sauseng, P.; Klimesch, W.; Gruber, W.R.; Birbaumer, N. Cross-frequency phase synchronization: A brain mechanism of memory matching and attention. Neuroimage 2008, 40, 308–317. [Google Scholar] [CrossRef]

- Engel, A.K.; Fries, P.; Singer, W. Dynamic predictions: Oscillations and synchrony in top-down processing. Nat. Rev. Neurosci. 2001, 2, 704–716. [Google Scholar] [CrossRef] [PubMed]

- Drebitz, E.; Haag, M.; Grothe, I.; Mandon, S.; Kreiter, A.K. Attention configures synchronization within local neuronal networks for processing of the behaviorally relevant stimulus. Front. Neural Circuits 2018, 12, 71. [Google Scholar] [CrossRef]

- Klimesch, W. EEG alpha and theta oscillations reflect cognitive and memory performance: A review and analysis. Brain Res. Rev. 1999, 29, 169–195. [Google Scholar] [CrossRef] [PubMed]

- Fernandez, L.M.J.; Lüthi, A. Sleep spindles: Mechanisms and functions. Physiol. Rev. 2020, 100, 805–868. [Google Scholar] [CrossRef]

- Macdonald, K.D.; Fifkova, E.; Jones, M.S.; Barth, D.S. Focal stimulation of the thalamic reticular nucleus induces focal gamma waves in cortex. J. Neurophysiol. 1998, 79, 474–477. [Google Scholar] [CrossRef] [PubMed]

- Ngo, H.-V.V.; Born, J. Sleep and the Balance between Memory and Forgetting. Cell 2019, 179, 289–291. [Google Scholar] [CrossRef]

- Kim, J.; Gulati, T.; Ganguly, K. Competing Roles of Slow Oscillations and Delta Waves in Memory Consolidation versus Forgetting. Cell 2019, 179, 514–526.e13. [Google Scholar] [CrossRef]

- Nir, Y.; Tononi, G. Dreaming and the brain: From phenomenology to neurophysiology. Trends Cogn. Sci. 2010, 14, 88–100. [Google Scholar] [CrossRef]

- Tamaki, M.; Berard, A.V.; Barnes-Diana, T.; Siegel, J.; Watanabe, T.; Sasaki, Y. Reward does not facilitate visual perceptual learning until sleep occurs. Proc. Natl. Acad. Sci. USA 2020, 117, 959–968. [Google Scholar] [CrossRef]

- Schredl, M.; Doll, E. Emotions in diary dreams. Conscious. Cogn. 1998, 7, 634–646. [Google Scholar] [CrossRef]

- Marzano, C.; Ferrara, M.; Mauro, F.; Moroni, F.; Gorgoni, M.; Tempesta, D.; Cipolli, C.; De Gennaro, L. Recalling and forgetting dreams: Theta and alpha oscillations during sleep predict subsequent dream recall. J. Neurosci. 2011, 31, 6674–6683. [Google Scholar] [CrossRef] [PubMed]

- Wiswede, D.; Koranyi, N.; Müller, F.; Langner, O.; Rothermund, K. Validating the truth of propositions: Behavioral and ERP indicators of truth evaluation processes. Soc. Cogn. Affect. Neurosci. 2013, 8, 647–653. [Google Scholar] [CrossRef]

- Joo, H.R.; Frank, L.M. The hippocampal sharp wave-ripple in memory retrieval for immediate use and consolidation. Nat. Rev. Neurosci. 2018, 19, 744–757. [Google Scholar] [CrossRef]

- Roumis, D.K.; Frank, L.M. Hippocampal sharp-wave ripples in waking and sleeping states. Curr. Opin. Neurobiol. 2015, 35, 6–12. [Google Scholar] [CrossRef]

- Remondes, M.; Wilson, M.A. Slow-γ Rhythms Coordinate Cingulate Cortical Responses to Hippocampal Sharp-Wave Ripples during Wakefulness. Cell Rep. 2015, 13, 1327–1335. [Google Scholar] [CrossRef]

- Moser, M.-B.; Rowland, D.C.; Moser, E.I. Place cells, grid cells, and memory. Cold Spring Harb. Perspect. Biol. 2015, 7, a021808. [Google Scholar] [CrossRef]

- Friston, K.; Kilner, J.; Harrison, L. A free energy principle for the brain. J. Physiol. Paris 2006, 100, 70–87. [Google Scholar] [CrossRef] [PubMed]

- Friston, K. The free-energy principle: A rough guide to the brain? Trends Cogn. Sci. 2009, 13, 293–301. [Google Scholar] [CrossRef] [PubMed]

- Krupnik, V. I like therefore I can, and I can therefore I like: The role of self-efficacy and affect in active inference of allostasis. Front. Neural Circuits 2024, 18, 1283372. [Google Scholar] [CrossRef]

- Friston, K. The free-energy principle: A unified brain theory? Nat. Rev. Neurosci. 2010, 11, 127–138. [Google Scholar] [CrossRef] [PubMed]

- Kammer, T.; Spitzer, M. Brain stimulation in psychiatry: Methods and magnets, patients and parameters. Curr. Opin. Psychiatry 2012, 25, 535–541. [Google Scholar] [CrossRef] [PubMed]

- Wagle Shukla, A.; Vaillancourt, D.E. Treatment and physiology in Parkinson’s disease and dystonia: Using transcranial magnetic stimulation to uncover the mechanisms of action. Curr. Neurol. Neurosci. Rep. 2014, 14, 449. [Google Scholar] [CrossRef]

- Magsood, H.; Syeda, F.; Holloway, K.; Carmona, I.C.; Hadimani, R.L. Safety study of combination treatment: Deep brain stimulation and transcranial magnetic stimulation. Front. Hum. Neurosci. 2020, 14, 123. [Google Scholar] [CrossRef] [PubMed]

- Holtzheimer, P.E.; Mayberg, H.S. Neuromodulation for treatment-resistant depression. F1000 Med. Rep. 2012, 4, 22. [Google Scholar] [CrossRef] [PubMed]

- Bluhm, R.; Castillo, E.; Achtyes, E.D.; McCright, A.M.; Cabrera, L.Y. They affect the person, but for better or worse? Perceptions of electroceutical interventions for depression among psychiatrists, patients, and the public. Qual. Health Res. 2021, 31, 2542–2553. [Google Scholar] [CrossRef]

- Farries, M.A.; Fairhall, A.L. Reinforcement learning with modulated spike timing dependent synaptic plasticity. J. Neurophysiol. 2007, 98, 3648–3665. [Google Scholar] [CrossRef]

- Detorakis, G.; Sheik, S.; Augustine, C.; Paul, S.; Pedroni, B.U.; Dutt, N.; Krichmar, J.; Cauwenberghs, G.; Neftci, E. Neural and Synaptic Array Transceiver: A Brain-Inspired Computing Framework for Embedded Learning. Front. Neurosci. 2018, 12, 583. [Google Scholar] [CrossRef]

- Florian, R.V. Reinforcement learning through modulation of spike-timing-dependent synaptic plasticity. Neural Comput. 2007, 19, 1468–1502. [Google Scholar] [CrossRef]

- Teng, T.-H.; Tan, A.-H.; Zurada, J.M. Self-organizing neural networks integrating domain knowledge and reinforcement learning. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 889–902. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kanwal, J.S. From Information to Knowledge: A Role for Knowledge Networks in Decision Making and Action Selection. Information 2024, 15, 487. https://doi.org/10.3390/info15080487