Abstract

In this paper, we focus on weighting methods within multi-attribute utility/value theory (MAUT/MAVT). In these methods, the decision maker (DM) provides ordinal information about the relative importance of criteria, but also additional information concerning the strength of the differences between the ranked criteria, which can be expressed in different forms, including precise/imprecise cardinal information, ratio-based methods, a ranking of differences, a semantic scale, or preference statements. Although many comparison analyses of weighting methods based on ordinal information have been carried out in the literature, these analyses do not cover all of the available methods, and it is not possible to identify the best one depending on the information provided by the DM. We review the analyses comparing the performance of these weighting methods based on empirical and simulated data using different quality measures. The aim is to identify weighting methods that could be recommended for use in each situation (depending on the available information) or the missing comparison analyses that should be carried out to arrive at a recommendation. We conclude that in the case of additional information in the form of a semantic scale, the cardinal sum reciprocal method can definitively be recommended. However, when only ordinal information is provided by the DM and in cases where additional information is provided in the form of precise/imprecise cardinal information or a ranking of differences, although there are some outstanding methods, further comparison analysis should be carried out to recommend a weighting method.

1. Introduction

The WEB-MAUT-DSS is a web decision support system (DSS) based on the multi-attribute utility theory (MAUT), which consists of an adaptation and improvement of the generic multi-attribute analysis (GMAA) system [,]. It is based on the decision analysis (DA) methodology [], which has been widely used to address complex real-world decision-making problems on the basis of an additive multi-attribute utility model.

DA consists of the following steps: structuring the problem (building an objective hierarchy and establishing attributes to indicate the extent to which the lowest-level objectives are achieved); identifying the feasible alternatives and their performances in terms of the attributes and uncertainty (if necessary); quantifying preferences, which involves assessing single attribute utility/value functions, as well as the relative importance of criteria; evaluating alternatives by means of a multi-attribute utility/value function; and conducting sensitivity analyses to check the robustness of the results.

Regarding alternative evaluation, the additive multi-attribute utility/value function is considered a valid approach in most real decision-making problems for the reasons described in [,].

However, most complex decision-making problems involve imprecise information, mainly because it is impossible to exactly predict the performances of the alternatives under consideration, which may be derived from statistical methods. Additionally, it is often not easy to elicit precise weights, since decision makers (DMs) may find it difficult to compare criteria (some weighting methods are too cognitively demanding) or may not want to reveal their preferences in public. Alternatively, the imprecision of weights may be the result of a negotiation process. This situation is usually referred to as decision-making with imprecise information, with incomplete information or with partial information [], which has been widely addressed in the literature focusing mainly on the weight elicitation process [,,,,].

WEB-MAUT-DSS accounts for uncertainty about the alternative performance and admits imprecise information about the DMs’ preferences in both the assessment of component utilities and weight elicitation, which leads to classes of utility functions and weight intervals, respectively.

Regarding weight elicitation, the GMAA originally provided two methods to hierarchically elicit the relative importance of criteria. Local weights were first elicited at the different levels and branches of the objective hierarchy by means of a direct assignment or a method based on trade-offs, accounting for imprecision concerning DM responses by means of ranges of responses to the probability question that the DM is asked. Then, the attribute weights, $w_i$, were computed by multiplying the local weights in the paths from the corresponding attribute to the overall objective.

One of the improvements provided by WEB-MAUT-DSS over the GMAA system is the incorporation of new weighting methods apart from direct assignment or methods based on trade-offs. Specifically, weight elicitation methods based on ordinal information can be considered, where the DM has to provide ordinal information about the relative importance of attributes/criteria, i.e., a ranking of importance of the criteria. Moreover, the DM is allowed to provide additional information about the strength of the differences between the ranked criteria if they are able or consider it appropriate to do so. This additional information could be provided in different forms, including a ranking of differences, a semantic scale, precise/imprecise cardinal information, ratio-based methods, and preference statements. The DM can decide which information they are able/willing to provide (or with which they feel more comfortable), and then the respective weighting method can be used.

However, different weighting methods are available in the literature to deal with only ordinal information within MAVT/MAUT, as well as with additional information about the strength of the differences between the ranked criteria. In addition, different comparative analyses have been carried out to analyze their performance based on empirical and simulated data using different quality measures. The question is, then, is it possible to recommend weighting methods for incorporation into the WEB-MAUT-DSS on the basis of the comparison analyses in the literature? Or should further comparison analyses be carried out to arrive at recommendations for different situations depending on the available information?

In this paper, we try to answer these questions. To achieve this, we first review the different weighting methods reported in the literature dealing with ordinal information on weights or which also employ additional available information. We then look at papers including comparison analyses of weighting method performance, analyzing the conclusions drawn by the different authors in order to arrive at a recommendation or at least identify missing comparison analyses.

The paper is structured as follows: in Section 2, weighting methods accounting for ordinal information on weights are reviewed, including surrogate weighting methods and methods based on the notions of pairwise and absolute dominance, as well as other techniques like stochastic multi-criteria acceptability analysis (SMAA2) or simulation. Section 3 deals with weighting methods in which additional information is also available. A review of comparison analyses in the literature involving the weighting methods under consideration is reported in Section 4. The results of the different comparison analyses are aggregated and discussed in Section 5. Finally, some conclusions and future research lines are provided in Section 6.

2. Weighting Methods Accounting for Ordinal Information

We consider an MAUT/MAVT context in which an additive model is used to evaluate the alternatives under consideration:

$$u(A_j) = \sum_{i=1}^{n} w_i \, u_i(x_{ij}),$$

where n is the number of attributes/criteria, $x_{ij}$ is the impact/performance of the i-th attribute for alternative $A_j$, $u_i(x_{ij})$ represents the single utility/value assigned to the respective attribute $X_i$, and $w_i$ is the weight of the i-th attribute.

Most authors agree with the classification of weighting methods into subjective and objective approaches [,]. Subjective approaches reflect the DM’s subjective opinion and intuition, which have a direct influence on the outcome of the decision-making process. Objective approaches determine the criteria weights on the basis of the information contained in a decision-making matrix by means of mathematical models, neglecting the DM’s opinion. Although both approaches are recognized as efficient in dealing with real-life multicriteria decision-making problems, most of the weighting methods in the literature are based on the DM’s cognitive preferences. The hybridization of both concepts has been discussed at length in [,], and other classifications are available in [].

In this paper, we consider subjective MAUT/MAVT methods with partial/imprecise/incomplete information where ordinal information is available on weights, i.e., the DM is able to provide a ranking of importance of criteria/attributes, arranged in descending order from the most to the least important ones:

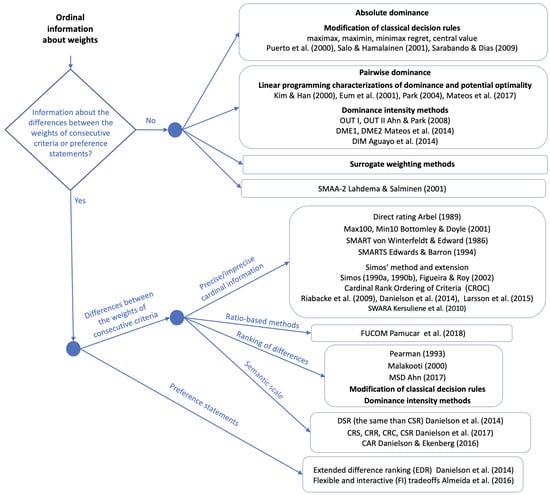

Different approaches can be found in the literature to deal with such a situation, particularly surrogate weighting methods and methods based on the notions of pairwise and absolute dominance, as well as other techniques like SMAA2 or simulation (see Figure 1).

2.1. Surrogate Weighting Methods

In surrogate weighting methods (SWMs), a weight vector is selected from a set of admissible weights to represent the set, which is then used to evaluate the alternatives on the basis of MAVT/MAUT.

The original SWMs were proposed in [] (equal weights (EW)), [] (rank sum (RS), rank exponent (RE) and rank reciprocal (RR) weights), and [] (rank-order centroid (ROC) weights). Table 1 shows how weights are computed for SWMs. The ROC and RR methods attach more importance to the best rankings, whereas all the attributes are equally important in the EW method, and the RS method places equal emphasis on all the weight rankings (i.e., ). The RE method is a generalization of the RS method, which is reduced to the EW and the RS methods if p is 0 or 1, respectively. Note that the SMART exploiting ranks (SMARTER) method [] incorporates a component to elicit ordinal information on weights, from which ROC weights are derived.
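Since the entries of Table 1 are not reproduced in the text, the following minimal sketch may help fix ideas. It assumes the formulas for the five original SWMs as they are commonly stated in the literature, with criteria indexed from the most important (rank 1) to the least important (rank n). The function names are ours and purely illustrative.

```python
# Sketch of the original surrogate weighting methods, assuming the commonly
# stated formulas; rank i = 1 is the most important criterion.

def equal_weights(n):
    """EW: every criterion receives the same weight 1/n."""
    return [1.0 / n] * n

def rank_sum(n):
    """RS: weights proportional to (n + 1 - i)."""
    total = n * (n + 1) / 2
    return [(n + 1 - i) / total for i in range(1, n + 1)]

def rank_reciprocal(n):
    """RR: weights proportional to 1/i."""
    total = sum(1.0 / j for j in range(1, n + 1))
    return [(1.0 / i) / total for i in range(1, n + 1)]

def rank_exponent(n, p):
    """RE: weights proportional to (n + 1 - i)**p; p = 0 gives EW, p = 1 gives RS."""
    raw = [(n + 1 - i) ** p for i in range(1, n + 1)]
    total = sum(raw)
    return [r / total for r in raw]

def rank_order_centroid(n):
    """ROC: centroid of the weight space w_1 >= ... >= w_n >= 0, sum = 1."""
    return [sum(1.0 / j for j in range(i, n + 1)) / n for i in range(1, n + 1)]

if __name__ == "__main__":
    for method in (equal_weights, rank_sum, rank_reciprocal, rank_order_centroid):
        print(method.__name__, [round(w, 4) for w in method(4)])
```

For four criteria, the sketch shows that ROC and RR assign the top-ranked criterion a noticeably larger weight than RS does, in line with the remark above on the emphasis each method places on the best rankings.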

Table 1.

Surrogate weighting methods (SWMs).

Different SWMs have been proposed since then. They are summarized in the order in which they were published, as follows.

In the equal ratio fixed (ERF) method [], each weight is given a ratio to the next largest weight, and is determined as . Alternatively, it is suggested that be elicited, thus making in []. The geometric weights (GW) method was also proposed in [], where weights decrease exponentially by a factor of .

In the rank order distribution (ROD) method [], the relative through are uniformly distributed at the interval , and then the surrogate weights are obtained by computing their means and through normalization. The authors provided formulas for to 5, whereas the corresponding surrogate weights up to were provided in [].

Figure 1.

Weighting methods based on ordinal information and accounting for additional information.

In [], the variable-slope linear (VSL) method was proposed, where the criteria weights are assumed to be linear with a variable slope, and least-squares regression is applied to identify the best of three different (quadratic, inverse, and exponential) models to estimate the slope.

Maximum entropy ordered weighted averaging (MEOWA) weights were proposed in [,]. These are a special class of the ordered weighted averaging (OWA) method [] that has the maximal entropy for a specified value of attitudinal character , which, like Hurwicz’s , denotes the DM’s degree of optimism. The underlying notion of the least-squared ordered weighted averaging (LSOWA) method [] is to compute the weights that minimize the sum of deviations from EW, since entropy is maximized when all weights are equal. It also extends the OWA method, deriving the OWA weights that are, as far as possible, evenly spread out around EW. The LSOWA method ensures that weights lie within the weight space if and (see Table 1).

Combinations of SWMs have also been proposed by different authors, such as the sum reciprocal (SR) method [], an additive combination of RS and RR weight functions, and the rank order total (ROT) method [], a combination of the RS method and the ROC method. In the geometric sum (GS) method [], the resulting weights multiplicatively reflect the rank order, including the parameter s (see Table 1).
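As an illustration of two of these combined methods, the sketch below assumes the forms in which the SR and GS weights are usually reported: SR weights proportional to 1/i + (n + 1 - i)/n, and GS weights proportional to s^(i-1) with 0 < s < 1. Both formulas are assumptions on our part rather than a transcription of Table 1, and the function names are hypothetical.

```python
# Sketch of the SR and GS surrogate weights under the assumed formulas;
# rank i = 1 is the most important criterion.

def sum_reciprocal(n):
    """SR: additive combination of the RR term 1/i and the RS term (n + 1 - i)/n."""
    raw = [1.0 / i + (n + 1 - i) / n for i in range(1, n + 1)]
    total = sum(raw)
    return [r / total for r in raw]

def geometric_sum(n, s):
    """GS: weights decrease multiplicatively with the rank by the factor s."""
    if not 0 < s < 1:
        raise ValueError("the parameter s is assumed to lie strictly between 0 and 1")
    raw = [s ** (i - 1) for i in range(1, n + 1)]
    total = sum(raw)
    return [r / total for r in raw]

if __name__ == "__main__":
    print([round(w, 4) for w in sum_reciprocal(3)])
    print([round(w, 4) for w in geometric_sum(3, 0.5)])
```

Smaller values of s concentrate more weight on the top-ranked criteria, which is why the choice of s has to be made before GS can be applied.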

The aim of the generalized rank sum (GRS) method [] is to maximize the distance from the closest inequality constraint of the weight space, which is tantamount to finding the middlemost solution to the constraints. The result is actually a simple extraction from the finite geometric series, and (see Table 1) has to be more than 1 to ensure the order relations of the weight space. Note that the GRS method reduces to the EW method when is close to 1.

Finally, the most recent proposals regarding SWMs consist of revised versions of the ROC method. The improved ROC (IROC) method [] claims, contrary to the ROC method, that DM preferences at the corners of the weight space may be similar rather than equal and establishes the corresponding different coefficients by means of simulation techniques. On the other hand, the generalized ROC (GROC) method [] incorporates an upper bound for the ratio , which implies that a given weight needs to be multiplied by to be equal to the next highest weight, leading to the following weight space:

Note that in the ROC method. Meanwhile, another revised version of the ROC method, the rank order logarithm (ROL) method, was proposed in [] (see Table 1).

Note that one or more parameters have to be set in some of the above SWMs, which may imply the application of simulation techniques (IROC). Alternatively, the DM may be required to provide additional information, such as in the ERF method or an upper bound for the ratio in the GROC method. The , , and s parameters also have to be set in the LSOWA, GRS, and GS methods, respectively.

2.2. Methods Based on Pairwise and Absolute Dominance Notions

Pairwise and absolute dominance notions can be used to directly recommend the best alternative and fully rank alternatives rather than selecting a weight vector from a set of admissible weights. Absolute dominance considers the following linear optimization problems:

and alternative absolutely dominates if , i.e., the lower bound of exceeds the upper bound of .
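To make the notion concrete, the following sketch computes the value bounds of each alternative for the special case in which only a ranking of weights is available and the component values are precise (the general setting above also admits imprecise component values, which this sketch does not cover). It relies on the fact that a linear function over the weight space w_1 >= ... >= w_n >= 0, sum = 1, attains its extremes at the vertices (1/k, ..., 1/k, 0, ..., 0), so no linear programming solver is needed here. The function names, the weak inequality in the dominance check, and the example data are our own assumptions.

```python
# Sketch: value bounds and absolute dominance under a pure ranking of weights,
# assuming precise component values listed in the rank order of the criteria.

def ordered_simplex_vertices(n):
    """Extreme points of {w : w_1 >= ... >= w_n >= 0, sum(w) = 1}."""
    return [[1.0 / k] * k + [0.0] * (n - k) for k in range(1, n + 1)]

def value_bounds(values):
    """Return (lower, upper) bounds of sum_i w_i * values[i] over the weight space."""
    vertices = ordered_simplex_vertices(len(values))
    scores = [sum(w * v for w, v in zip(vertex, values)) for vertex in vertices]
    return min(scores), max(scores)

def absolutely_dominates(values_k, values_j):
    """True if the lower bound of alternative k is at least the upper bound of j."""
    lower_k, _ = value_bounds(values_k)
    _, upper_j = value_bounds(values_j)
    return lower_k >= upper_j

if __name__ == "__main__":
    a = [0.9, 0.8, 0.7]  # hypothetical component values of one alternative
    b = [0.2, 0.6, 0.5]  # hypothetical component values of another
    print(value_bounds(a), value_bounds(b), absolutely_dominates(a, b))
```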

The use of absolute dominance values is exemplified by the modification of four classical decision rules to encompass an imprecise decision context concerning weights and component values/utilities [,]:

- The maximax or optimist, based on their maximum guaranteed value, i.e., .

- The maximin or pessimist, based on their minimum guaranteed value, i.e., .

- The minimax regret rule, based on the maximum loss of value with respect to a better alternative, i.e., , where represents the maximum regret incurred when choosing alternative j, i.e., .

- The central value rule, based on the midpoint of the range of possible performances, i.e., .
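The four rules listed above can be sketched as follows for the simplified case of ranked weights and precise component values. As in any linear setting, the extremes of each alternative's value, and of the difference between two alternatives' values, are attained at the vertices of the weight space, so the sketch enumerates those vertices instead of solving optimization problems. The data, function names, and dictionary layout are hypothetical.

```python
# Sketch of the maximax, maximin, minimax regret, and central value rules,
# assuming a ranking of weights and precise component values.

def ordered_simplex_vertices(n):
    """Extreme points of {w : w_1 >= ... >= w_n >= 0, sum(w) = 1}."""
    return [[1.0 / k] * k + [0.0] * (n - k) for k in range(1, n + 1)]

def decision_rules(alternatives):
    """alternatives maps a name to its component values in criteria rank order."""
    n = len(next(iter(alternatives.values())))
    vertices = ordered_simplex_vertices(n)
    # Value of every alternative at every vertex of the weight space.
    scores = {
        name: [sum(w * v for w, v in zip(vertex, values)) for vertex in vertices]
        for name, values in alternatives.items()
    }
    lower = {name: min(s) for name, s in scores.items()}
    upper = {name: max(s) for name, s in scores.items()}
    # Maximum regret: largest value advantage any rival can reach over the alternative.
    regret = {}
    for name in scores:
        regret[name] = max(
            (max(sk - sa for sk, sa in zip(scores[rival], scores[name]))
             for rival in scores if rival != name),
            default=0.0,
        )
    return {
        "maximax": max(upper, key=upper.get),
        "maximin": max(lower, key=lower.get),
        "minimax_regret": min(regret, key=regret.get),
        "central_value": max(scores, key=lambda a: (lower[a] + upper[a]) / 2),
    }

if __name__ == "__main__":
    example = {"A": [0.9, 0.1, 0.1], "B": [0.5, 0.6, 0.5], "C": [0.3, 0.8, 0.9]}
    print(decision_rules(example))
```

In this hypothetical example, alternative A is selected by the maximax, minimax regret, and central value rules, whereas the maximin rule selects B, which illustrates that the rules need not agree.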

Two additional rules were proposed in []: the quasi-optimality rule and the quasi-dominance rule.

Regarding pairwise dominance, linear programming has been used in the literature to establish dominance and potential optimality of alternatives on the basis of the available information about weights, utilities, and alternative performances [,,,,], whereas the concepts of weak potential optimality and strong potential optimality were proposed in [].

In the rank inclusion in criteria hierarchies (RICH) method [], the DM is allowed to provide a ranking of weights or to specify subsets containing the most important attributes, leading to decision recommendations on the basis of the computation of dominance relations and decision rules. It was implemented in a user-friendly decision support system, RICH Decisions (http://decisionarium.aalto.fi (accessed on 26 August 2024), []).

Another approach accounting for pairwise dominance consists of considering information about each alternative’s intensity of dominance, known as dominance measuring methods (DMMs) [,,,]. DMMs are based on the computation of a dominance matrix including pairwise dominance values, which are exploited in different ways to derive measures of dominance to rank the alternatives under consideration.

The first DMMs, OUT I and OUT II, were proposed in [], where dominating (OUT I) and dominated measures are computed for each alternative, and then a net dominance accounting for the difference between them is used to rank the alternatives (OUT II).
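A rough sketch in the spirit of these first DMMs is given below. It assumes that the pairwise dominance value of alternative k over l is the minimum of u(A_k) - u(A_l) over the weight space, that the dominating and dominated measures are the row and column sums of the dominance matrix (excluding the diagonal), and that only a ranking of weights with precise component values is available. These are our simplifying assumptions, and the names are illustrative only.

```python
# Sketch of a dominance matrix and a net dominance ranking, assuming ranked
# weights, precise component values, and row/column sums as dominance measures.

def ordered_simplex_vertices(n):
    """Extreme points of {w : w_1 >= ... >= w_n >= 0, sum(w) = 1}."""
    return [[1.0 / k] * k + [0.0] * (n - k) for k in range(1, n + 1)]

def dominance_matrix(alternatives):
    """D[k][l] = minimum over the weight space of u(A_k) - u(A_l), for k != l."""
    n = len(next(iter(alternatives.values())))
    vertices = ordered_simplex_vertices(n)
    scores = {
        name: [sum(w * v for w, v in zip(vertex, values)) for vertex in vertices]
        for name, values in alternatives.items()
    }
    return {
        k: {l: min(sk - sl for sk, sl in zip(scores[k], scores[l]))
            for l in scores if l != k}
        for k in scores
    }

def net_dominance_ranking(alternatives):
    """Rank alternatives by dominating measure minus dominated measure."""
    d = dominance_matrix(alternatives)
    net = {}
    for k in d:
        dominating = sum(d[k].values())               # row sum
        dominated = sum(d[l][k] for l in d if l != k)  # column sum
        net[k] = dominating - dominated
    return sorted(net, key=net.get, reverse=True), net

if __name__ == "__main__":
    example = {"A": [0.9, 0.1, 0.1], "B": [0.5, 0.6, 0.5], "C": [0.3, 0.8, 0.9]}
    ranking, net = net_dominance_ranking(example)
    print(ranking, {k: round(v, 4) for k, v in net.items()})
```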

The methods in [] were extended in [], where dominating and dominated measures are combined into a dominance intensity reducing the duplicate information involved in the computations (dominance measuring extension 1 (DME1) method). The dominance measuring extension 2 (DME2) method was also proposed in [], which derives a global dominance intensity index to rank alternatives on the basis that

where is the pairwise dominance value between alternatives and , and W and define the feasible region for weights and values for each attribute, respectively.

A new dominance intensity method based on triangular fuzzy numbers and a distance notion was proposed in []. This method, known as dominance intensity method (DIM), incorporates the DM’s attitude toward risk into the analysis. Instead of pairwise dominance (), the DIM method uses the following values:

where is the centroid of the polytope representing the weight space and are the centroids of the polytopes in the n attributes delimited by the constraints accounting for alternatives and .

2.3. Other Weighting Methods Based on Ordinal Information

Probability distributions and partial preference information are used to represent inaccurate or uncertain criteria values and weights in the stochastic multi-criteria acceptability analysis (SMAA) method [] in order to explore the weight space and identify the scores that each alternative would need to achieve if it were to be the preferred option or ranked in a specific position. The SMAA-2 method [] extends SMAA by incorporating various types of preference information, including a priority order for the criteria.

In addition, Monte Carlo simulation techniques could also be used to deal with ordinal information on weights. For instance, the GMAA system [,], an MAUT decision support system (DSS) accounting for imprecision concerning DM preferences and uncertainty about alternative performances, includes a sensitivity analysis tool that randomly generates weights while preserving a total or partial attribute rank order. The GMAA system computes several statistics about the rankings of each alternative and provides multiple boxplots for the alternatives that can be useful for discarding and/or identifying the most preferred available alternatives.
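The idea of generating random weights that preserve an attribute rank order can be sketched as follows. This is not the GMAA implementation: it is a minimal illustration that assumes a total rank order, precise component values, and weights drawn uniformly from the simplex (by normalizing exponential variates) and then sorted in descending order. It reports how often each alternative occupies each ranking position, a simple stand-in for the ranking statistics mentioned above. All names and data are hypothetical.

```python
import random

# Sketch of a Monte Carlo analysis with rank-preserving random weights.

def sample_ranked_weights(n, rng):
    """Draw weights uniformly from the simplex, then sort so that w_1 >= ... >= w_n."""
    raw = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(raw)
    return sorted((r / total for r in raw), reverse=True)

def rank_frequencies(alternatives, n_samples=10000, seed=0):
    """Share of simulations in which each alternative takes each ranking position."""
    rng = random.Random(seed)
    names = list(alternatives)
    n = len(alternatives[names[0]])
    counts = {name: [0] * len(names) for name in names}
    for _ in range(n_samples):
        weights = sample_ranked_weights(n, rng)
        scores = {
            name: sum(w * v for w, v in zip(weights, alternatives[name]))
            for name in names
        }
        ordered = sorted(names, key=scores.get, reverse=True)
        for position, name in enumerate(ordered):
            counts[name][position] += 1
    return {name: [c / n_samples for c in counts[name]] for name in names}

if __name__ == "__main__":
    example = {"A": [0.9, 0.1, 0.1], "B": [0.5, 0.6, 0.5], "C": [0.3, 0.8, 0.9]}
    for name, shares in rank_frequencies(example).items():
        print(name, [round(s, 3) for s in shares])
```

Because the sorted samples are uniformly distributed over the ordered weight space, their average approximates the centroid of that space, which provides a simple sanity check on the sampler.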

3. Weighting Methods Accounting for Additional Information

In many real decision-making problems, the DM is unable or unwilling to provide information beyond a ranking of the importance of attributes, as pointed out in Section 1, and the above methods are applicable in such cases. However, the DM might provide information about the differences between the weights of consecutive criteria in different ways (see Figure 1).

One possibility is the provision of precise/imprecise cardinal information, or ratios. Alternatively, the DM could provide a ranking of the differences between the weights of consecutive criteria, although a semantic scale could also be used. Further information on the basis of preference statements could also be available. These possibilities are described in detail below.

3.1. Precise/Imprecise Cardinal Information

Different methods using scores to derive the strength of the differences between the weights of criteria can be found in the literature, including the direct rating, SWING, and simple multi-attribute rating technique using SWING (SMARTS) methods.

In direct rating [], the DM first ranks all the criteria according to their importance and then rates each criterion on a scale of 0–100, where a higher score means that the criterion is more important. Then, some form of normalization can be applied to the resulting scores.

The SWING method [] explicitly incorporates the attribute ranges in the elicitation questions. The DMs are asked to consider the worst consequence for each criterion and to identify which criterion they would most prefer to change from its worst to its best outcome. This criterion is assigned the highest number of points—for example, 100. The procedure is then repeated with the remaining criteria. The criterion with the next most important swing is assigned a number relative to the most important criterion (thus the points scored by criteria denote their relative importance), and so on. Finally, the scored points are normalized to a sum of one.
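The final normalization step can be sketched in a few lines. The criteria names and the points below are hypothetical, and the same normalization would apply to the scores obtained with direct rating.

```python
# Sketch of the normalization step in SWING: points are scaled to sum to one.

def normalize_points(points):
    """Map each criterion's points to a weight so that the weights sum to one."""
    total = sum(points.values())
    if total <= 0:
        raise ValueError("at least one criterion must receive a positive number of points")
    return {criterion: value / total for criterion, value in points.items()}

if __name__ == "__main__":
    # The criterion with the most preferred swing receives 100 points; the others
    # are scored relative to it (hypothetical values).
    swing_points = {"cost": 100, "quality": 70, "delivery": 30}
    print(normalize_points(swing_points))
```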

The simple multi-attribute rating technique (SMART) and the SWING method were later combined into the SMARTS method [], where SWING was used to set up the order of importance of the criteria.

Simos’ method proposes that the DM should rank criteria by placing cards containing the names of the criteria under consideration in order of the least to the most important (where cards containing equally important criteria are grouped together) [,]. Then, the DM is asked about the importance of successive criteria in the ranking by introducing white cards between two successive cards (or subset of cards). Finally, the collected information is processed to derive the normalized weights, . Simos’ method was extended in [] to overcome some objections to how the normalized weights are derived.

The cardinal and rank ordering of criteria (CROC) method was proposed in [,]. CROC is a two-stage distance-based method where the DM provides the magnitude of the differences between the ranked criteria.

In the first stage, the DM is asked to provide information about their preferences. This stage consists of three steps. First, ordinal information is collected in a criteria ranking with a SWING weighting style, producing a Hasse diagram. Then, the difference between the weights for the most and the least important criteria is assessed. Note that it is easier for DMs to provide information on the most and least important criteria than those in between. Finally, the criteria are equally distributed along a slider (using a graphical user interface), i.e., all magnitudes of the differences are initially equal, and each criterion is associated with a clouded region with a length equal to the default distance. Then, the DM is asked to adjust the distances between criteria to represent information of cardinal importance between them, leading in some cases to overlaps between the respective clouded regions.

In the second stage, the information provided by the DM is translated into a constraint set for weight variables, enabling the use of decision analysis methods to model imprecision by means of linear constraints. This process is explained in detail in [].

This method is capable of handling imprecision in two ways: by means of intervals (where the DM statement in the slider is interpreted as an interval such that , with representing the degree of confidence in the weights) and/or by means of weight comparison.

Although the step-wise weight assessment ratio analysis (SWARA) method [,] was originally proposed as a dispute resolution method in a group decision-making context, it is a score-based method which has also been adapted to deal with a single DM.

In SWARA, after scoring (, ), the differences in the consecutive criteria are determined for incorporation into the weight elicitation as follows:

with and where is the score provided by the DM for the i-th criterion.

Once the values of are computed, a normalization process is performed to derive the weights: .

3.2. Ratio-Based Methods

In the simple multi-attribute rating technique (SMART) [], the DM is asked to rank criteria according to their importance from worst to best. Then, 10 points are assigned to the least important criterion, and an increasing number of points are assigned to the other criteria to address their importance relative to the least important criterion. The derived weights are normalized.

The full consistency method (FUCOM) based on pairwise comparisons of criteria was proposed in []. FUCOM consists of three steps. In the first step, the criteria are ranked according to their significance from the highest to lowest weight. In the second step, the ranked criteria are compared, leading to comparative priorities

$$\varphi_{i/(i+1)}, \quad i = 1, \ldots, n-1,$$

where $\varphi_{i/(i+1)}$ represents the significance (priority) that the i-th criterion has compared to the $(i+1)$-th criterion of the ranking. Note that only $n-1$ comparisons are necessary. Finally, in the third step, the weights are computed, taking into account the fact that they should satisfy the following two conditions:

- The weight ratios should be equal to the comparative priorities, i.e., $w_i / w_{i+1} = \varphi_{i/(i+1)}$.

- The weight should satisfy the condition of mathematical transitivity, i.e., $\varphi_{i/(i+1)} \otimes \varphi_{(i+1)/(i+2)} = \varphi_{i/(i+2)}$ and then $w_i / w_{i+2} = \varphi_{i/(i+1)} \otimes \varphi_{(i+1)/(i+2)}$.

Full consistency is achieved if transitivity is fully respected. In order to meet such conditions, the following constraints are established on weights, $\left| w_i / w_{i+1} - \varphi_{i/(i+1)} \right| \le \chi$ and $\left| w_i / w_{i+2} - \varphi_{i/(i+1)} \otimes \varphi_{(i+1)/(i+2)} \right| \le \chi$, with the minimization of $\chi$ ($\chi = 0$ implies full consistency). Then, weights are obtained by solving the minimization optimization problem (where $\sum_{i=1}^{n} w_i = 1$ is also incorporated as a constraint) with the corresponding degree of consistency, $\chi$.
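For the fully consistent case, the weights follow directly from the comparative priorities without solving the optimization problem. The sketch below covers only that special case: it assumes that the comparative priority of the i-th ranked criterion over the next one, written here as phi[i], equals the weight ratio w_i / w_{i+1}, so that the weights can be built backwards from the least important criterion and then normalized. The notation and the function name are ours.

```python
# Sketch of FUCOM weights under full consistency (deviation equal to zero),
# assuming phi[i] = w_i / w_{i+1} for the criteria in rank order.

def fucom_consistent_weights(priorities):
    """priorities holds the n - 1 comparative priorities of consecutive ranked criteria."""
    raw = [1.0]  # unnormalized weight of the least important criterion
    for phi in reversed(priorities):
        if phi < 1:
            raise ValueError("a higher-ranked criterion cannot be less important")
        raw.append(raw[-1] * phi)
    raw.reverse()  # back to descending order of importance
    total = sum(raw)
    return [r / total for r in raw]

if __name__ == "__main__":
    # Hypothetical priorities: criterion 1 is twice as important as criterion 2,
    # which is 1.5 times as important as criterion 3.
    weights = fucom_consistent_weights([2.0, 1.5])
    print([round(w, 4) for w in weights])
```

In this example the ratio of the first to the third weight equals 2.0 × 1.5 = 3, so the transitivity condition holds exactly.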

3.3. Ranking of Differences between the Weights of Consecutive Criteria

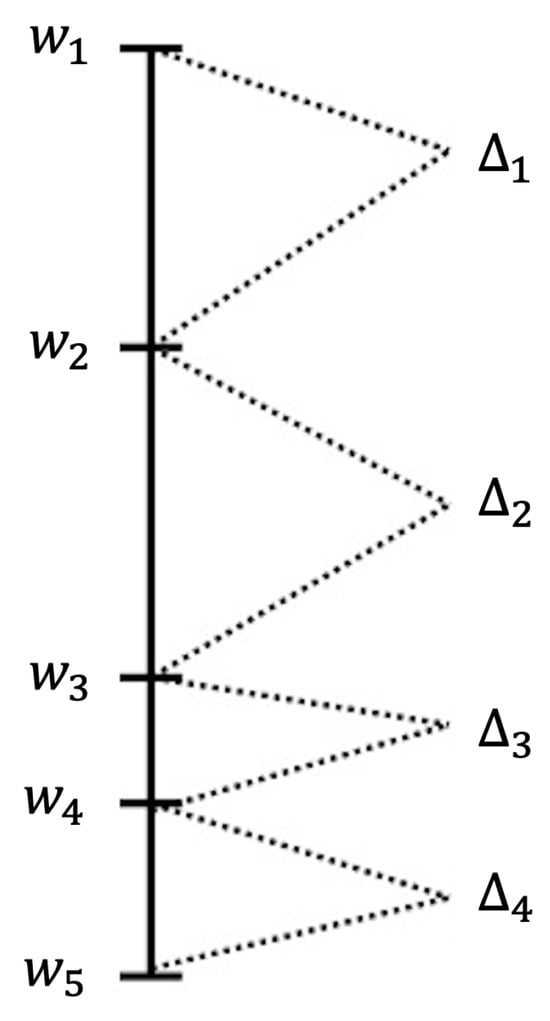

The first possibility that we consider consists of providing a ranking of the differences between the weights of consecutive criteria. Given that the DM has established that , we define , , and a ranking on elements has to be provided by the DM. For instance, the ranking would correspond to the example shown in Figure 2.

Figure 2.

Ranking of weights and differences between consecutive weights.

In [], this kind of information is considered in the computation of dominance in additive weighting models, whereas an effective algorithm for an additive utility function and the partial information represented by a set of linear constraints was proposed in [], including ranking of weights or alternatives, paired comparison of weights or alternatives, and strengths of preferences of weights or alternatives. Ref. [] extended the work in [].

The rule deals with ordinal information about the position of alternatives and about the difference in values between consecutive alternatives with respect to the different criteria under consideration and ordinal information about weights []. The performance of is compared with that of DMMs in []. Note, however, that the ranking of differences refers to consecutive alternatives rather than weights in . Thus, it cannot ultimately be classified as a weighting method measuring differences between the weights of consecutive criteria. Therefore, it is not considered further in this study.

More recently, the minimizing squared deviations from extreme points (MSD) method was proposed in [], which extends the ROC weighting method by seeking out surrogate weights adjusted to the barycenter of the weight set. MSD accounts for the strict ranking of weights, ranking with multiples, the ranking of differences in weights, or a mixture of these methods, and it was proven, using simulation techniques, to outperform a linear programming-based weighting method.

The ranking of differences between the weights of consecutive criteria could also be addressed using approaches based on pairwise and absolute dominance, affecting the computation of and in the modification of classical decision rules and of the pairwise dominance matrix in DMMs. Note that linear constraints would be added to the corresponding optimization problems, and a partial, rather than a complete, ranking of differences could be provided, which is less stressful for DMs.

3.4. Semantic Scales to Account for Differences between the Weights of Consecutive Criteria

Another possibility for representing the strength of the differences between the weights of consecutive criteria is to use a semantic scale.

For instance, the following scale was proposed in [,]:

- , equally important;

- , slightly more important;

- , more important (clearly more important), and

- , much more important.

This scale is used in [] to extend the SR method, leading to the DSR method, whereas the SWMs RS, RR, ROC, and SR are adapted in [] to account for this scale, leading to the so-called cardinal rank sum (CRS), cardinal rank reciprocal (CRR), cardinal rank-order centroid (CRC), and cardinal sum reciprocal (CSR) methods, respectively. All of these extended methods derive a weight vector in the same way as the original SWMs. Note that the DSR method in [] is the same as the CSR method in []. In [], the GS method was extended in the same way, leading to the CGS method. In addition, the CAR method proposed in [] uses the semantic scale to elicit the values of the alternatives under each criterion in a similar way to the weights.
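To illustrate how a semantic scale can be turned into weights, the sketch below follows one formulation of the CSR method that we assume here: each statement on the scale is mapped to a number of steps (0 for equally important up to 3 for much more important), the criteria are placed at positions p(i) on an importance scale with Q positions in total, and the SR formula is applied to those positions instead of the ranks. The mapping of the scale to steps and the function names are assumptions on our part.

```python
# Sketch of cardinal sum reciprocal (CSR) weights, assuming that the weight of a
# criterion at position p on a scale with Q positions is proportional to
# 1/p + (Q + 1 - p)/Q.

def cardinal_positions(steps):
    """steps[i] is the number of scale steps between ranked criteria i and i + 1."""
    positions = [1]  # the most important criterion sits at position 1
    for step in steps:
        if step not in (0, 1, 2, 3):
            raise ValueError("steps are assumed to lie on the four-level scale 0..3")
        positions.append(positions[-1] + step)
    return positions

def cardinal_sum_reciprocal(steps):
    """CSR weights for ranked criteria separated by the given scale steps."""
    positions = cardinal_positions(steps)
    q = positions[-1]  # total number of scale positions Q
    raw = [1.0 / p + (q + 1 - p) / q for p in positions]
    total = sum(raw)
    return [r / total for r in raw]

if __name__ == "__main__":
    # Hypothetical statements: criterion 1 is much more important than criterion 2,
    # which is slightly more important than criterion 3.
    print([round(w, 4) for w in cardinal_sum_reciprocal([3, 1])])
```

When every pair of consecutive criteria is separated by exactly one step, the positions coincide with the ranks and the sketch returns the ordinal SR weights.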

The same scale was used in [] to propose the partial SWING (P-SWING) method, a refined version of the SWING method [] that allows for intermediate comparisons, avoiding synthetic constructs to improve understanding. However, these intermediate comparisons refer to the differences in alternative values for different criteria rather than differences between the weights of consecutive criteria. Thus, we will discard this method from further analysis.

3.5. Additional Information on the Basis of Preference Statements

A systematic elicitation process based on an extended difference ranking (EDR) method was proposed in [], including the DM’s strength of preference order over weights. Specifically, it is stated that the set of attributes can be partitioned as , where I defines an arbitrary subset of such that . If the decision maker asserts for a specific I, the constraint can be added to the set of constraints. The authors propose using these new constraints to refine the weights derived from the DSR method, also proposed in [], where a semantic scale is used to represent the strength of the differences between the weights of consecutive criteria (see Section 3.4). Unlike the above methods, EDR outputs an interval weight vector.

In the flexible and interactive tradeoff (FITradeoff) method, the DM is required to provide less information than in the standard tradeoff procedure []. FITradeoff systematically evaluates the possibility of finding a solution to the problem while the elicitation process is ongoing, using partial information elicited from the DM at any point in the process to solve a linear programming problem in order to reduce the subset of potentially optimal alternatives.

In the first step, the order of the weights is obtained , whereas the subsequent steps yield indifference relations in order to find the value of . In step i, the DM is asked to provide the value such that they are indifferent regarding the consequences and , with and being the worst and best value in attribute , respectively, and thus, and . Then, as , we have . Taking into account the fact that , we would need similar equations to find the values of the weights (). If the DM is unable to specify , they can alternatively provide an interval , so that and .

Note that, in each iteration, the FITradeoff method solves the corresponding optimization problems with the available weight space to identify non-dominated and potentially optimal alternatives. Dominated alternatives are removed from the analysis, and the method ends if a unique non-dominated or potentially optimal alternative is found. Otherwise, the values for and are elicited in the next step. Thus, the weight space is progressively reduced, and more alternatives can be identified as dominated, thereby possibly reducing the number of DM preference statements.

The use of data visualization in a new method based on FITradeoff is proposed in [], whereas its implementation in a decision support system (www.fitradeoff.org, accessed on 26 August 2024) is described in [], accounting for a neuroscience-based decision-making approach in order to modulate changes in the decision-making process and in the design of the DSS.

4. Comparison Analyses of Weight Elicitation Methods

When a new weighting method is proposed, it is usual practice to carry out a performance comparison analysis against other methods in the literature. In addition, papers focused on comparison analyses of weighting methods can also be found in the literature. In this section, we conduct a review of the comparison studies existing in the literature, where different information on weights is provided by the DM, ranging from a simple ranking of the weights to additional information as described above.

We used the Citation Gecko search tool to carry out the review process, taking as seeds the two most recent papers we found in which a weighting method based on ordinal information was proposed or a comparison analysis of such methods was performed. We completed the search directly using combinations of keywords such as weight, ordinal information, comparison, MAUT, and surrogate weights in ScienceDirect, Scopus, and PubMed.

Table 2 and Table 3 show a summary of the comparison studies considered, including information about the corresponding authors, the data source (empirical and/or simulation-based), the methods under comparison, and the quality metrics used.

Table 2. Comparison analyses in the literature (SWMs) I.

Table 3. Comparison analyses in the literature (SWMs) II.

The first paper dates back to 1981, and 27 comparison analyses have been carried out since then, most of which (14) were published in the last decade (2010–2019). In addition, SWMs are clearly the methods that have been most analyzed in these comparisons, either with respect to each other or to other methods, mainly decision rules and dominance measuring methods. However, other weighting methods have sporadically been included in the comparisons, such as the analytic hierarchy process (AHP), tradeoffs, discrete choice experiments, the PAPRIKA methodology, the best worst method, conjoint analysis, and the Slack method (see Table 2 and Table 3).

Regarding the data source, there are two possibilities for empirical-based analyses. The common opinion of a group of DMs is sometimes taken as a sample, whereas other comparison analyses are based on data from previous real-life cases.

On the other hand, Monte Carlo simulation techniques can be used to analyze the performance of weighting methods in different scenarios with different numbers of attributes and alternatives. For this, weight vectors are randomly generated using a probability distribution (uniform, normal, or exponential), and TRUE rankings of alternatives are derived from them using the additive multi-attribute utility function. The ordinal information contained in these randomly generated weight vectors is then used in the weighting methods under comparison to derive the corresponding rankings, which are compared against the TRUE rankings using the quality measures. The uniform distribution is used as the general case in which the derived weight vectors may have similar or different weight values in magnitude, whereas the normal and exponential distributions function as particular cases in which weights of similar or very different magnitudes will be generated, respectively. Moreover, filtering processes can be incorporated into the generation to discard random vectors that are highly unlikely in real life.
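The simulation scheme described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the experimental design of any of the reviewed papers: TRUE weights are drawn uniformly from the weight simplex, alternative values are independent uniform draws, and only four classical SWMs (EW, RS, RR, and ROC, in their standard formulations) are included. All function names and default parameters are hypothetical.

```python
import random

def ew(n):
    """Equal weights."""
    return [1 / n] * n

def rs(n):
    """Rank sum: w_i proportional to n + 1 - i."""
    return [2 * (n + 1 - i) / (n * (n + 1)) for i in range(1, n + 1)]

def rr(n):
    """Rank reciprocal: w_i proportional to 1 / i."""
    total = sum(1 / j for j in range(1, n + 1))
    return [(1 / i) / total for i in range(1, n + 1)]

def roc(n):
    """Rank order centroid: w_i = (1 / n) * sum_{k=i}^{n} 1 / k."""
    return [sum(1 / k for k in range(i, n + 1)) / n for i in range(1, n + 1)]

def true_weights(n, rng):
    """Uniform draw from the simplex, sorted so that criterion 1 is the most important."""
    cuts = sorted(rng.random() for _ in range(n - 1))
    pts = [0.0] + cuts + [1.0]
    return sorted((b - a for a, b in zip(pts, pts[1:])), reverse=True)

def best(weights, values):
    """Index of the alternative with the highest additive overall value."""
    scores = [sum(w * v for w, v in zip(weights, row)) for row in values]
    return max(range(len(values)), key=scores.__getitem__)

def hit_ratio(method, n_criteria=5, n_alternatives=10, trials=2000, seed=0):
    """Share of simulated problems where the surrogate and TRUE weights pick the same best alternative."""
    rng = random.Random(seed)
    surrogate = method(n_criteria)  # depends only on the ranking, so it is fixed across trials
    hits = 0
    for _ in range(trials):
        w = true_weights(n_criteria, rng)
        values = [[rng.random() for _ in range(n_criteria)] for _ in range(n_alternatives)]
        hits += best(w, values) == best(surrogate, values)
    return hits / trials

for method in (ew, rs, rr, roc):
    print(method.__name__, hit_ratio(method))
```

Replacing `true_weights` with a different generator (for instance, a normal or exponential one) changes the scenario under which the methods are compared, which is precisely the sensitivity discussed in the analyses reviewed below.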

There are significantly fewer papers using empirical than simulated data. This could be explained by the fact that it is hard to assemble enough real-life cases and/or experts. Note that some papers use both empirical and simulation-based information [,,]. Moreover, DMs may be asked their opinion at several stages of an experiment and evaluate methods accordingly [,,,].

The hit ratio and rank-order correlation are the most used metrics in comparison processes. The hit ratio is the proportion of cases where the method under consideration outputs the same best alternative as in the TRUE ranking, whereas the rank-order correlation (Kendall’s τ, []) measures how similar the TRUE ranking and the ranking output by the method under consideration are over the whole set of alternatives. However, other metrics, such as value loss, mean absolute percentage error, Euclidean distance, Kullback–Leibler divergence, the mean absolute deviation, and the maximum absolute deviation, have also been used (see Table 2 and Table 3).
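For a single simulated problem, the two most common measures can be computed as in the following minimal sketch. It assumes rankings without ties, given as lists of positions (1 is the best), and uses the tau-a variant of Kendall’s coefficient. The function names are hypothetical.

```python
from itertools import combinations

def kendall_tau(rank_a, rank_b):
    """Kendall's tau-a between two rankings of the same alternatives (no ties assumed)."""
    concordant = discordant = 0
    for i, j in combinations(range(len(rank_a)), 2):
        s = (rank_a[i] - rank_a[j]) * (rank_b[i] - rank_b[j])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    pairs = len(rank_a) * (len(rank_a) - 1) // 2
    return (concordant - discordant) / pairs

def is_hit(rank_true, rank_method):
    """True if both rankings place the same alternative in the first position."""
    return rank_true.index(1) == rank_method.index(1)

rank_true = [1, 2, 3, 4]    # positions of alternatives a1..a4 in the TRUE ranking
rank_method = [1, 3, 2, 4]  # positions output by the method under consideration
print(is_hit(rank_true, rank_method))                 # True
print(round(kendall_tau(rank_true, rank_method), 4))  # 0.6667
```

The hit ratio is then the average of `is_hit` over all simulated problems. The example shows why both measures are reported: the best alternative is correctly identified even though the two rankings differ in one pair.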

We first review in Section 4.1 the comparison analyses focusing on the situation where no additional information apart from the ranking of importance of attributes is provided, where SWMs, methods based on absolute and pairwise dominance, the SMAA-2 method, and Monte Carlo simulation techniques can be applied. We then look in Section 4.2 at the comparison analyses focusing on methods where additional information is provided by the DM.

4.1. Comparison Analyses When Only a Ranking of Criteria Is Available

Different analyses have been carried out to compare SWMs [,,,,,,,,,,] and different dominance intensity measuring methods [] in order to identify the best one in each category. On the other hand, comparison analyses accounting for methods in the different categories have also been carried out [,,,].

The first comparison analysis on SWMs was conducted by Stillwell based on empirical data from three decision problems, where only the EW, RS, RR, and RM methods were considered []. The conclusion was that EW is outperformed by the other methods. Barron and Barrett incorporated the ROC method into the comparison analysis, concluding, on the basis of simulation techniques using EMAR software, that the best method is ROC, followed by RR, RS, and EW [,]. The same conclusion was reached by de Almeida et al. many years later, taking into account the hit ratio and the frequency of cases with perfect consistency []. The EW, RS, and ROC methods were compared against a ratio-based method in [] based on unbiased judgments of attribute weights but subject to random error, concluding that the comparison favored ROC over the RS and EW methods.

ROC was compared with AHP and a fuzzy method in [], and it is concluded that the simplicity and ease of use of the ROC method make it a practical method for eliciting weights. In addition, ROC weights have an appealing theoretical rationale and outperform EW, RS, and RR in terms of choice accuracy []. Moreover, the ROC method’s performance is very good when applied to real-life cases [].

The ROD method was proposed in [] and compared with RR, RS, and ROC using simulation techniques on the basis of the hit ratio and the average value loss. The conclusion was that RS and ROD are the best, followed by ROC and RR. The use of the RS method was recommended since the formulae for ROD weights become more complex as the number of attributes increases.

The comparison analysis performed in [] found that MEOWA weights are perfectly compatible with the ROC weights, which outperform RR, RS, and EW.

The SR and GS methods were proposed in [], and were also compared to the ROC, RS, and RR methods. An important concept introduced in this comparative analysis is the degree of freedom (DoF) when randomly generating weight data. If the DM stores the criteria preferences in a way similar to a given point sum, then there are n − 1 DoF for n criteria. On the other hand, if the DM stores criteria preferences in a way that places no limit on the total number of allocated points (or mass), then there are n DoF. These two models of DM behavior yield very different results in assessing surrogate weights. Moreover, weak and strong filtering processes were incorporated into the analysis to discard random vectors that are highly unlikely in real life. It was concluded that the GS and SR methods are the most efficient and robust SWMs, with a very good average performance, which was consistent irrespective of differences in DM behavior. Moreover, GS slightly outperforms SR, but is more complex since it incorporates a parameter.
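The two models of DM behavior can be illustrated with the following minimal sketch of the corresponding random generators. It assumes a common construction (a uniform draw on the simplex for the point-sum model, and independent uniform draws that are normalized afterwards for the unbounded model). The exact generators and filters used in the cited study are not reproduced here, and the function names are hypothetical.

```python
import random

def weights_n_minus_1_dof(n, rng):
    """Point-sum model: uniform on the simplex, so only n - 1 values are free."""
    cuts = sorted(rng.random() for _ in range(n - 1))
    pts = [0.0] + cuts + [1.0]
    return sorted((b - a for a, b in zip(pts, pts[1:])), reverse=True)

def weights_n_dof(n, rng):
    """Unbounded-mass model: n independent uniform draws, normalized afterwards."""
    raw = [rng.random() for _ in range(n)]
    total = sum(raw)
    return sorted((r / total for r in raw), reverse=True)

rng = random.Random(42)
print([round(w, 3) for w in weights_n_minus_1_dof(5, rng)])
print([round(w, 3) for w in weights_n_dof(5, rng)])
```

Both functions return normalized weights in decreasing order, but the vectors follow different distributions over the weight space, which is why a surrogate method can look strong under one generator and weaker under the other.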

The EW, RE, RR, ROC, and SR methods, along with Simos’ method, were compared in [] for both n − 1 and n DoF on the basis of simulation techniques taking into account the hit ratio, the mean square spread, and the mean square variation, concluding that SR is clearly the preferred method, followed by ROC, RE, and Simos’ method.

The GW and VLS methods were incorporated into comparison analyses for the first time in [], together with RS, RR, ROC, and LSD. Simulation techniques varying the number of criteria and weight probability distributions allowed the relative performance of the rank-weighting methods to be compared, accounting for different decision-making situations. Note that in the above comparison analyses based on simulation techniques, the use of a unique distribution favored some weighting methods over others. Specifically, uniform, normal, and exponential distributions defining different priority structures of DMs and two weight normalization rules were used in this analysis. The uniform distribution distributes weights evenly across the whole range of values, whereas the normal distribution implies a greater likelihood of medium weights, avoiding extreme values, and the exponential distribution assigns most weight to a few top-ranked criteria.

It was concluded that the performance of the SWMs under consideration depends on the number of criteria and their weight distribution. The mean absolute percentage error and the least significance distance were used as quality measures. The RS method was best for uniform weights, whereas the VLS and the ROC methods were best for normal and exponential weights, respectively. Thus, if the criteria weight distribution is known in any specific MCDM situation, then the respective best method can be applied; otherwise, the weights can be computed using all three methods and a secondary rule should be applied. Note that the statistical distribution of weights can be elicited from the DM using just a few statements.

Ref. [] agreed with Ref. [] that the performance of SWMs is entirely dependent on how the weight simplex is defined, i.e., the probability distribution used to generate the TRUE weights. The EW, RE, RR, and ROC methods were analyzed on the basis of the hit ratio, Kendall’s τ, and the value loss, concluding that ROC outperforms the other methods when a uniform distribution is considered. Note, however, that this is a special case among other elicitation possibilities, such as other non-uniform distributions.

More recently, a comparison analysis was carried out in [] including many more SWMs (RS, RE(), RR, SR, ERF(), ROC, VLS, and ROD). It was stated that it is very difficult to establish what kind of distribution should be used to randomly generate weights and what affects the simulation results. Therefore, a large number of elicited weights from real-world applications (209 sets of weights with the number of attributes ranging from 2 to 12) were collected.

In the comparison analysis, four alternative measures were used to analyze the quality of the SWMs, and the results derived by each measure were different in some cases. Regarding the average Euclidean distance from elicited weights, ERF > SR > RS > ROD > RE > RR > VLS > ROC, whereas the positions of RE and ROD were inverted using the mean absolute deviation, and the same order was derived from the maximum absolute deviation. Finally, the results using the Kullback–Leibler divergence were clearly different: SR > ROD > RE > RS > RR > ERF > ROC > VLS. However, if the four measures are taken into account, SR was always the best or was statistically indistinguishable from the best SWM, whereas VLS and ROC were always the worst SWMs.
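The four measures compare an elicited weight vector with the surrogate vector derived from its ranking. The following minimal sketch shows one way to compute them. The direction of the Kullback–Leibler divergence (elicited weights as the reference distribution) and the use of the natural logarithm are assumptions, since the source does not specify them, and the function names and example vectors are hypothetical.

```python
import math

def euclidean_distance(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def mean_absolute_deviation(p, q):
    return sum(abs(a - b) for a, b in zip(p, q)) / len(p)

def maximum_absolute_deviation(p, q):
    return max(abs(a - b) for a, b in zip(p, q))

def kl_divergence(p, q):
    """KL(p || q) with the natural logarithm; requires q_i > 0 wherever p_i > 0."""
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0)

elicited = [0.50, 0.30, 0.20]    # hypothetical weights elicited from a DM
surrogate = [0.5, 1 / 3, 1 / 6]  # RS weights for three ranked criteria

for measure in (euclidean_distance, mean_absolute_deviation,
                maximum_absolute_deviation, kl_divergence):
    print(measure.__name__, round(measure(elicited, surrogate), 4))
```

Because the measures penalize deviations differently (for example, the divergence is sensitive to relative rather than absolute differences), it is not surprising that they can order the SWMs differently.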

In one of the most recent comparisons, carried out in [], the LSOWA, GRS, ROT, IROC, and GROC methods were incorporated into the analysis. A theoretical analysis was performed on the basis of several evaluation measures: steepness (steeper patterns of the weight curves are higher ranked), nonlinearity of the weight curves, non-compensatoriness (number of comparisons in which the weight of a criterion is greater than the sum of weights of the less important criteria), paretoness (80% of outcomes of most phenomena are due to only 20% of relevant causes), optimism (degree of aggregation []), utilization of information (making use of weight entropy [,]), symmetry, and consistency (accounting for possible rank/weight reversal).

No recommendation about the best SWM was given, since the results differ when different measures are applied, but a five-step selection procedure was proposed. The optimism and utilization of information measures are first used to discard some SWMs by computing the DM’s coefficient of optimism and the degree of certainty about the information, which are compared with the optimism and the utilization levels of the SWMs, respectively. Then, the consistency is analyzed, discarding additional SWMs on the basis of a threshold. Finally, the remaining measures are computed, and the highest-scoring SWM is selected.

The RR, RS, ROC, SR, and GS methods, the latter with different values for the s parameter, were compared in [], where both n − 1 and n DoF and a filtering process were again used. It was confirmed that the performance of ROC and SR was stronger than that of the classical methods from [,], whereas the results for the even newer GS method were mixed. These inconclusive results leave the research field open.

The ROL method was proposed for the first time in []. Three types of analysis (theoretical, simulation-based, and empirical) were used to compare ROL with EW, RR, RS, ROC, SR, ROT, RE, VLS, LSOWA, and GRS. All three analysis types demonstrated that ROL is as strong as the ROC method.

Finally, RR, RS, ROC, SR, and GS were compared again in [], using both n − 1 and n DoF, as well as a filtering process. This comparison is novel in that it considers three different probability distributions (uniform, beta, and log-normal). The results again show that RS and ROC perform better for n − 1 and n DoF, respectively, whereas SR is ideal for an equal mixture of DoFs.

Looking at other weighting methods based on the absolute or pairwise dominance notions, different comparison analyses can also be found in the literature.

The first was in [], comparing the CENT decision rule with SWMs (EW, RS, RR, and ROC), together with the dominance measuring methods (OUT I and OUT II) proposed in that paper. The analysis was performed on the basis of simulation techniques using the hit ratio and Kendall’s τ. It was concluded that ROC was the best method, followed by RR and RS, the dominance measuring methods, and the CENT decision rule (identified as the best decision rule).

A similar conclusion was reached in [], where decision rules were compared with the ROC method using the hit ratio, the mean position of the supposedly best alternative in each rule’s ranking, and the proportion of cases where the position was 1, 2, 3, 4, or higher. The ROC method slightly outperformed the decision rules. Note that dominance measuring methods were not considered in this analysis.

In [,], a comparison analysis was carried out accounting for decision rules, the OUT I, OUT II, DME1, and DME2 dominance measuring methods, and the SMAA and SMAA-2 methods. However, weight intervals rather than ordinal information on weights were considered. Later, the SWMs (RS, RR, ROC, and EW), the decision rules, and the DMMs (OUT I, OUT II, DME1, and DME2) were compared in []. To achieve this, simulation techniques were used together with the hit ratio and Kendall’s τ as quality measures. The conclusion reached was that DME2 and ROC outperform the other methods.

The DIM method was proposed in [], which was compared with previous dominance measuring methods (OUT I, DME1, and DME2) and Sarabando and Dias’ method []. Ordinal information about the position of alternatives, the difference in values between consecutive alternatives for the different criteria under consideration, and weights were considered, concluding that the DIM and Sarabando and Dias’ methods perform very similarly for a neutral, risk-prone, and risk-averse DM. Both outperform the other dominance-measuring methods.

Finally, the SWMs (RR, RS, SR, and ROC) and dominance-measuring methods were compared in []. The effect of the generator on dominance intensity methods was studied for the very first time, since the different dimensions of generators were not differentiated in either [] or [], affecting both efficiency and robustness analyses. In this paper, criteria weights for DME2 were sampled using n − 1 DoF, and m attribute values were sampled using m DoF, but normalizing the values. The conclusion was that SR outperforms the other methods in terms of robustness, and DME2’s performance is nowhere near as good as that of more classical SWMs. Thus, the added complexity of DIMs is not offset by additional performance. Another important conclusion was that the difference in hit ratios for the two weight generation scenarios (n − 1 and n DoF) does not decrease when the number of criteria is increased.

A similar comparison analysis was performed by the same authors in [], where SWMs (RR, RS, SR, GS, and ROC) were compared to linear programming, concluding that linear programming performs worse than any of the other analyzed methods by a wide margin.

4.2. Comparison Analyses When Additional Information Is Provided by the DM

Methods using precise/imprecise cardinal information or based on ratios have been widely compared in the literature addressing methods that either do (direct rating, Max100, Min10, SMART, and interval SMART/SWING) or do not (direct point allocation, tradeoff method, SWING, AHP, discrete choice experiments, the PAPRIKA methodology, and conjoint analysis) establish a ranking of criteria beforehand. We focus on analyses that include at least one method where a ranking of criteria is established beforehand.

The convergent validity of SMART was compared with that of AHP, direct point allocation, SWING, and tradeoff weighting [] on the basis of an Internet experiment [], where weight ratios were used, albeit based on different questions, concluding that the derived weights differ because the DMs are asked to choose their responses from a limited set of numbers. In addition, DMs could select any of the studied methods depending on their personal preferences.

The direct rating (DR) method was compared with the Max100 and Min10 methods on the basis of both empirical data and simulations in []. The weights derived from the Max100 method were somewhat more reliable than those derived from DR, followed by the Min10 method. SWARA was also compared to Max100 and pairwise weight elicitation methods in [], where SWARA is recommended when the DM wants criteria weights to have neither very different nor very close values in relation to each other.

More recently, direct rating, SMARTS, AHP, discrete choice experiments [], the PAPRIKA methodology [], and conjoint analysis [] were compared in []. The authors concluded that SMARTS and AHP can reach trade-offs between complexity and the potential for bias.

In addition, two comparison analyses were carried out in [,], comparing the CROC method with SMART, SMARTS, and direct rating, using empirical data elicited from several DMs and taking cognitive effort, practical usefulness, and consistency as quality measures. In both papers, five DMs were engaged in decision making on purchasing a car according to seven criteria on two occasions (one week apart).

In [], all participants preferred the SMARTS method over direct rating, but considered that it was difficult to explicitly score each criterion using both methods, and preferences between the SMARTS and CROC methods varied. In addition, the CROC method achieved the most consistent results across the two occasions in both comparison analyses, being the more prescriptively useful method. However, CROC has not been compared with SWARA.

Finally, the FUCOM method yields better results than AHP and the best worst method [], but it has not been compared with CROC and SWARA.

In the case of ranking of differences, MSD was proven to outperform a linear programming-based weighting method (the Slack method) which attempts to minimize the slack of constraints via simulation analysis under different forms of incomplete attribute weights in []. However, no comparison analyses have been carried out with the modification of classical decision rules and dominance-measuring methods, which could also have been applied in this situation.

Regarding the use of a semantic scale to express the strength of the differences between the weights of consecutive criteria, a comparison analysis of the CRS, CRR, CRC, and CSR methods together with Simos’ family weighting methods [,,] and SR and ROC SWMs was performed in [] on the basis of simulation techniques, concluding that CSR outperforms the other methods.

The CRR, CRS, CSR, and CRC methods were again compared to SWMs (RR, RS, SR, and ROC), but also to the DME2 dominance-measuring method in []. The conclusion was the same as in []. CSR clearly outperforms the other methods with regard to robustness. A similar comparison analysis was performed in [], incorporating GS and its extension CGS into the analysis. They again concluded that CSR is generally the best-performing method.

The CAR method was proposed in [] and compared with SMART and AHP using an equal combination of n − 1 and n DoF, concluding that CAR outperforms the other methods in terms of the hit ratio and Kendall’s τ on the basis of simulated data. An empirical experiment suggests that it also excels in terms of quality measures such as ease of use, amount of time and effort required, and perceived correctness and transparency.

Finally, regarding preference statements, no comparison analysis has been performed accounting for either the extended difference ranking (EDR) method or the flexible and interactive tradeoff (FITradeoff) method.

5. Discussion

The aim of this section is to identify which of the weighting methods described in Section 2 and Section 3 could be recommended depending on the information that the DM is able/willing to provide on the basis of the comparison analyses described in Section 4, or what further comparison analyses should be carried out to arrive at such a recommendation. First, we analyze the situation where the DM provides only a ranking of the criteria under consideration. We then look at the situation where additional information is provided in different ways.

More weighting methods are available for situations where DMs provide only a criteria ranking. These include SWMs, methods based on the notions of absolute and pairwise dominance, and the SMAA-2 method (see Section 2).

As pointed out in Section 4, many comparison analyses are available in the literature concerning these weighting methods. Some focus on comparing SWMs with each other, with decision rules, or with DMMs, whereas other papers compare all of the methods.

Looking at papers focused on the comparison of SWMs, we find that only the EW, RR, RS, and ROC methods were compared in the early comparison analyses, since they were the only SWMs to have been proposed when the analyses were carried out [,,,,,,]. The conclusion was that ROC outperforms the other SWMs. The EW, RR, and RS methods were also outperformed by other SWMs in subsequent analyses (in [] or [] for instance), so they can be discarded for further analysis.

In the comparison analysis performed in [], the SR method outperformed the VLS, ERF, ROD, ROC, and RE methods. The SR method was also the best weighting method in the comparison analysis in [] (compared to ROC, RE, RR, EW, and Simos’ methods).

However, although it is more complex since it incorporates a parameter, GS slightly outperforms SR in [], irrespective of differences in DM behavior, whereas the most recent comparison analysis in [] is inconclusive, since ROC, GS, and SR outperform each other depending on the DoF of the random number generator and the number of attributes/alternatives under consideration.

What about the remaining SWMs (LSOWA, ROT, GRS, IROC, and GROC)? They were analyzed comparatively in [], but no recommendation about the best was given since the results differed when different measures were applied. Note that this analysis considered neither the SR nor the GS methods.

So, is it possible to arrive at a recommendation about the best SWM when DMs only provide a criteria ranking? The answer is no, because a comparison of ROC, GS, and SR with LSOWA, ROT, GRS, IROC, and GROC is still missing and should be carried out.

Now, what is the best decision rule or the best dominance measuring method, and how do they fare against SWMs and the SMAA-2 method?

In this respect, different comparison analyses were summarized in Section 4.1, concluding that CENT is the best decision rule []. However, CENT is outperformed by the ROC method [,] and by dominance-measuring methods [,]. Thus, decision rules can be safely discarded. In addition, the best dominance measuring method is DIM [], but it is clearly outperformed by the SR method [], which also outperforms linear programming.

Thus, we conclude that SWMs (SR) outperform decision rules and dominance measuring methods. However, the SMAA-2 has not been compared with SWMs. This is a missing comparative analysis that should be performed to arrive at a final recommendation.

Let us now discuss weighting methods when different forms of additional information are available.

First, with respect to methods where precise/imprecise cardinal information is used to represent the strength of the differences between the weights of consecutive criteria, the CROC method is more consistent and a more prescriptively useful method than SMARTS and direct rating [,].

In addition, although the CROC method itself has not been compared with Simos’ methods, it was demonstrated in [] that the ROC method outperforms Simos’ methods when only a ranking of weights is available. Meanwhile, the CRC method also outperforms Simos’ methods when the differences between the weights of consecutive criteria are represented by a semantic scale []. Therefore, the ROC method and its derivatives designed to account for additional information appear to outperform Simos’ methods. However, the CROC method has not been compared to SWARA. Such a comparison analysis would be very useful to identify which method should be recommended in this category.

In the case of ranking of differences, MSD was confirmed to perform better than the Slack method in []. However, no comparisons have been made with the modification of classical decision rules and dominance measuring methods, which could also be applied in this situation. Such a comparison analysis would be very useful to identify which method outperforms the others.

Finally, regarding the use of a semantic scale to express the strength of the differences between the weights of consecutive criteria, CSR outperforms CRR, CRC, and Simos’ family of methods [], but also the SWMs (RR, RS, SR and ROC), and the DME2 dominance-measuring method []. Thus, the CSR method is a good recommendation in this case.

6. Conclusions

In this paper, we focused on weighting methods in MAUT/MAVT when ordinal information on criteria is available and the DM can also provide different forms of additional information to express the strength of the differences between the weights of consecutive criteria of such a ranking, including a ranking of differences, a semantic scale, precise/imprecise cardinal information, or ratios.

The aim was to single out the best weighting methods for different situations depending on the available information, or otherwise identify the missing comparison analyses that should be carried out to reach a recommendation. To achieve this, we reviewed the comparative analyses in the literature based on empirical and simulation data and using different quality measures.

After summarizing the weighting methods reported in the literature to address the different types of available information on weights and analyses comparing their performances, we deliberated on the findings of the different authors and arrived at the following conclusions.

First, to be able to provide a recommendation on which method to use when only ordinal information is available, ROC, SR, and GS need to be compared with LSOWA, ROT, GRS, IROC, and GROC. Additionally, a comparison with the SMAA-2 method is also missing. Note that several SWMs outperform decision rules and dominance measuring methods.

If additional information on the ranking of criteria is available in the form of a ranking of differences, a comparison of MSD with the modification of classical decision rules and dominance measuring methods is also missing. If DMs provide additional information in the form of a semantic scale, then the CSR method is recommended. If DMs are able to provide additional precise/imprecise cardinal information, the comparison of CROC against Simos’ methods and SWARA is missing.

Note that methods where the additional information on the ranking of criteria differs (ranking of differences vs. semantic scale vs. imprecise cardinal information) have not been compared as yet, although a broader range of information should provide better results. Moreover, in real decision-making problems, DMs will select which type of information they are able or willing to provide. Thus, the corresponding recommended method would be directly applied. As a future research line, we propose performing the above-mentioned missing comparison analyses aimed at reaching more robust recommendations.

Other future work includes the incorporation of the recommended weighting methods into WEB-MAUT-DSS, a web-based adaptation and improvement of the GMAA decision support system [,] that is being implemented. One of the improvements provided by WEB-MAUT-DSS will be the incorporation of new weighting methods on top of the existing direct assignment methods or methods based on trade-offs. These new methods will account not only for ordinal information on criteria but also for additional information in terms of the ranking of differences or a semantic scale. It will be up to DMs to select the type of information that they wish to provide. Other weighting methods will be included in future versions of the system.

Author Contributions

Conceptualization, Z.C. and A.J.-M.; methodology, Z.C. and A.J.-M.; formal analysis, Z.C. and A.J.-M.; writing—original draft preparation, Z.C.; writing—review and editing, Z.C. and A.J.-M.; supervision, A.J.-M.; project administration, A.J.-M.; funding acquisition, A.J.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was supported by the Grants PID2021-122209OB-C31 and RED2022-134540-T funded by MICIU/AEI/10.13039/501100011033 and the Regional Government of Aragón and FEDER project LMP35-21.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jiménez, A.; Rios-Insua, S.; Mateos, A. A decision support system for multiattribute utility evaluation based on imprecise assignments. Decis. Support Syst. 2003, 36, 65–79.

- Jiménez, A.; Rios-Insua, S.; Mateos, A. A generic multi-attribute analysis system. Comput. Oper. Res. 2006, 33, 1081–1101.

- Belton, V. Multiple Criteria Decision Analysis-Practically the Only Way to Choose. In Operational Research Tutorial Papers; Hendry, L.C., Englese, R.W., Eds.; Operational Research Society: Birmingham, UK, 1990; pp. 53–101.

- Raiffa, H. The Art and Science of Negotiation; Harvard University Press: Cambridge, MA, USA, 1982.

- Stewart, T.J. Robustness of additive value function method in MCDM. J. Multi-Criteria Decis. Anal. 1996, 5, 301–309.

- Ríos, D.; French, S. A framework for sensitivity analysis in discrete multi-objective decision-making. Eur. J. Oper. Res. 1991, 5, 176–190.

- Aguayo, E.A.; Mateos, A.; Jiménez-Martín, A. A new dominance intensity method to deal with ordinal information about a DM’s preferences within MAVT. Knowl. Based Syst. 2014, 69, 159–169.

- Lakmayer, S.; Danielson, M.; Ekenberg, L. Automatically Generated Weight Methods for Human and Machine Decision-Making. In Advances and Trends in Artificial Intelligence. Theory and Applications; Lecture Notes in Computer Science, 13925; Fujita, H., Wang, Y., Xiao, Y., Moonis, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2023; pp. 195–206.

- Riabacke, M.; Danielson, M.; Ekenberg, L. State-of-the-art prescriptive criteria weight elicitation. Adv. Decis. Sci. 2012, 2012, 276584.

- Şahin, M.A. A comprehensive analysis of weighting and multicriteria methods in the context of sustainable energy. Int. J. Environ. Sci. Technol. 2021, 18, 1591–1616.

- Sarabando, P.; Dias, L.C. Multi-attribute choice with ordinal information: A comparison of different decision rules. IEEE Trans. Syst. Man Cybern. 2009, 39, 545–554.

- Silva, F.; Souza, C.; Silva, F.F.; Costa, H.; da Hora, H.; Erthal, M. Elicitation of criteria weights for multicriteria models: Bibliometrics, typologies, characteristics and applications. Braz. J. Oper. Prod. Manag. 2021, 18, 1–28.

- Tzeng, G.H.; Chen, T.Y.; Wang, J.C. A weight assessing method with habitual domains. Eur. J. Oper. Res. 1998, 110, 342–367.

- Dong, Y.; Liu, Y.; Liang, H. Strategic weight manipulation in multiple attribute decision making. Omega Int. J. Manag. Sci. 2018, 75, 154–164.

- Wang, Y.M.; Luo, Y. Integration of correlations with standard deviations for determining attribute weights in multiple attribute decision making. Math. Comput. Model. 2010, 5, 1–12.

- Dawes, R.M.; Corrigan, B. Linear models in decision making. Psychol. Bull. 1974, 81, 95–106.

- Stillwell, W.G.; Seaver, D.A.; Edwards, W. A comparison of weight approximation techniques in multiattribute utility decision making. Organ. Behav. Hum. Perform. 1981, 28, 62–77.

- Barron, F.H.; Barrett, B.E. Decision quality using ranked attribute weights. Manag. Sci. 1996, 42, 1515–1523.

- Edwards, W.; Barron, F.H. SMARTS and SMARTER: Improved simple methods for multi-attribute utility measurement. Organ. Behav. Hum. Decis. Process. 1994, 60, 306–325.

- Lootsma, F.A. Multi-Criteria Decision Analysis via Ratio and Difference Judgements; Kluwer: Alphen aan den Rijn, The Netherlands, 1999.

- Lootsma, F.A.; Bots, P.W.G. The assignment of scores for output-based research funding. J. Multi-Criteria Decis. Anal. 1999, 8, 44–50.

- Roberts, R.; Goodwin, P. Weights approximations in multi-attribute decision models. J. Multi-Criteria Decis. Anal. 2002, 11, 291–303.

- Burk, N.B.; Nehring, R.M. An empirical comparison of rank-based surrogate weights in additive multiattribute. Decis. Anal. 2023, 20, 1–84.

- Jiménez, A.; Mateos, A.; Fernández del Pozo, J.A. Dominance measuring methods for the selection of cleaning services in a European underground transportation company. RAIRO Oper. Res. 2023, 50, 809–824.

- Ahn, B.S.; Park, K.S. Least-squared ordered weighted averaging operator weights. Int. J. Intell. Syst. 2008, 23, 33–49.

- Danielson, M.; Ekenberg, L. Rank Ordering Methods for Multicriteria Decisions. In Proceedings of the 14th Group Decision and Negotiation-GDN 2014. Lecture Note in Business Information Processing, Toulouse, France, 10–13 June 2014; Springer: Berlin/Heidelberg, Germany, 2014; Volume 180, pp. 128–135.

- Puerto, J.; Mármol, A.M.; Monroy, L.; Fernández, F.R. Decision criteria with partial information. Int. Trans. Oper. Res. 2000, 7, 51–65.

- Salo, A.A.; Hämäläinen, R.P. Preference ratios in multiattribute evaluation (PRIME)-elicitation and decision procedures under incomplete information. IEEE Trans. Syst. Man Cybern. 2001, 31, 533–545.

- Eum, Y.; Park, K.; Kim, H. Establishing dominance and potential optimality in multi-criteria analysis with imprecise weights and value. Comput. Oper. Res. 2001, 28, 397–409.

- Kim, S.H.; Han, C.H. Establishing dominance between alternatives with incomplete information in a hierarchically structured attribute tree. Eur. J. Oper. Res. 2000, 122, 79–90.

- Park, K. Mathematical programming models for characterizing dominance and potential optimality when multicriteria alternative values and weights are simultaneously incomplete. IEEE Trans. Syst. Man Cybern. 2004, 34, 601–614.

- Mateos, A.; Jiménez-Martín, A.; Aguayo, E.A.; Sabio, P. Dominance intensity measuring methods in MCDM with ordinal relations regarding weights. Knowl. Based Syst. 2014, 70, 26–32.

- Lahdelma, R.; Salminen, P. SMAA-2: Stochastic multicriteria acceptability analysis for group decision making. Oper. Res. 2001, 49, 444–454.

- Arbel, A. Approximate articulation of preference and priority derivation. Eur. J. Oper. Res. 1989, 43, 317–326.

- Von Winterfeldt, D.; Edwards, W. Decision Analysis and Behavioral Research; Cambridge University Press: Cambridge, UK, 1986.

- Simos, J. Évaluer L’impact sur L’environnement: Une Approche Originale par L’analyse Multicritere et la Négociation; Presses Polytechniques et Universitaires Romandes: Lausanne, Switzerland, 1990.

- Simos, J. L’évaluation Environnementale: Un Processus Cognitif Négocié. Ph.D. Thesis, DGF-EPFL, Lausanne, Switzerland, 1990.

- Figueira, J.; Roy, B. Determining the weights of criteria in the ELECTRE type methods with a revised Simos’ procedure. Eur. J. Oper. Res. 2002, 139, 317–326.

- Danielson, M.; Ekenberg, L.; Larsson, A.; Riabacke, M. Weighting under ambiguous preferences and imprecise differences in a cardinal rank ordering process. Int. J. Comput. Intell. Syst. 2014, 7, 105–112.

- Riabacke, M.; Danielson, M.; Ekenberg, L.; Larsson, A. A Prescriptive Approach for Eliciting Imprecise Weights Statements in an MCDA Process; Rossi, F., Tsoukis, A., Eds.; Lecture Notes in Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5783, pp. 168–179.

- Larsson, A.; Riabacke, M.; Danielson, M.; Ekenberg, L. Cardinal and rank ordering of criteria-Addressing prescription within weight elicitation. Int. J. Inf. Technol. Decis. Mak. 2015, 14, 1299–1330.

- Kersuliene, V.; Zavadskas, E.K.; Turskis, Z. Selection of rational dispute resolution method by applying new step-wise weight assessment ratio analysis (SWARA). J. Bus. Econ. Manag. 2010, 11, 243–258.

- Pearman, A.D. Establishing dominance in multiattribute decision making using an ordered metric method. J. Oper. Res. Soc. 1993, 44, 461–469.

- Malakooti, B. Ranking and screening multiple criteria alternatives with partial information and use of ordinal and cardinal strength of preference. IEEE Trans. Syst. Man Cybern. 2002, 30, 355–368.

- Ahn, B.S. Approximate weighting method for multiattribute decision problems with imprecise parameters. Omega Int. J. Manag. Sci. 2017, 72, 87–95.

- Danielson, M.; Ekenberg, L. The CAR method for using preference strength in multicriteria decision making. Group Decis. Negot. 2016, 25, 775–797.

- de Almeida, A.T.; de Almeida, J.A.; Costa, A.P.; de Almeida Filho, A.T. A new method for elicitation of criteria weights in additive models: Flexible and interactive tradeoff. Eur. J. Oper. Res. 2016, 250, 179–191.

- Bottomley, P.; Doyle, J.R. A comparison of three weight elicitation methods: Good, better, and best. Omega Int. J. Manag. Sci. 2001, 29, 553–560.

- Alfares, H.K.; Duffuaa, S.O. Assigning cardinal weights in multi-criteria decision making based on ordinal ranking. J. Multi-Criteria Decis. Anal. 2008, 15, 125–133.

- O’Hagan, M. Using Maximum Entropy-Based Weighted Averaging to Construct a Fuzzy Neuron. In Proceedings of the 24th Annual IEEE Asilomar Conference on Signals, Systems, and Computers, Lecture Notes in Computer Science, Pacific Grove, CA, USA, 5 October–7 November 1990; Volume 521, pp. 618–623.

- Fullér, R.; Majlender, P. An Analytic Approach for Obtaining Maximal Entropy Owa Operator Weights. Fuzzy Sets Syst. 2001, 124, 53–57.

- Yager, R.R. On ordered weighted averaging aggregation operators in multi-criteria decision making. IEEE Trans. Syst. Man Cybern. 1988, 18, 183–190.

- Liu, D.; Li, T.; Liang, D. An integrated approach towards modelling ranked weights. Comput. Ind. Eng. 2020, 147, 106629.

- Wang, J.; Zionts, S. Using ordinal data to estimate cardinal values. J. Multi-Criteria Decis. Anal. 2015, 22, 185–196.

- Hatefi, M.A. An improved rank order centroid method (IROC) for criteria weight estimation: An application in the engine/vehicle selection problem. Informatica 2023, 34, 1–22.

- Hatefi, M.A.; Balilehvand, H.R. Risk assessment of oil and gas drilling operation: An empirical case using a hybrid GROC-VIMUN-modified FMEA method. Process Saf. Environ. Prot. 2023, 170, 392–402.

- Hatefi, M.A. A new method for weighting decision making attributes: An application in high-tech selection in oil and gas industry. Soft Comput. 2024, 28, 281–303.

- Athanassopoulos, A.D.; Podinovski, V.V. Dominance and potential optimality in multiple criteria decision analysis with imprecise information. J. Oper. Res. Soc. 1997, 48, 142–150.

- Mateos, A.; Ríos-Insua, S.; Jiménez, A. Dominance, potential optimality and alternative ranking in imprecise decision making. J. Oper. Res. Soc. 2007, 58, 326–336.

- Lee, K.; Park, K.; Kim, S. Dominance, potential optimality, imprecise information and hierarchical structure in multi-criteria analysis. Comput. Oper. Res. 2002, 29, 1267–1281.

- Salo, A.; Punkka, A. Rank inclusion in criteria hierarchies. Eur. J. Oper. Res. 2005, 163, 338–356.

- Liesiö, J. RICH Decisions—A Decision Support Software. Systems Analysis Laboratory, Helsinki University of Technology. Available online: http://www.sal.tkk.fi/Opinnot/Mat-2.108/pdf-files/elie02.pdf (accessed on 26 August 2024).

- Ahn, B.S.; Park, K.S. Comparing methods for multi-attribute decision making with ordinal weights. Comput. Oper. Res. 2008, 35, 1660–1670.

- Jiménez, A.; Mateos, A.; Sabio, P. Dominance intensity measure within fuzzy weight oriented MAUT: An application. Omega Int. J. Manag. Sci. 2013, 41, 397–405.

- Lahdelma, R.; Hokkanen, J.; Salminen, P. SMAA-stochastic multiobjective acceptability analysis. Eur. J. Oper. Res. 1998, 106, 137–143.

- Sivageerthi, T.; Bathrinath, S.; Uthayakumar, M.; Bhalaji, R.K. A SWARA method to analyze the risks in coal supply chain management. Mater. Today Proc. 2022, 50, 935–940.

- Edwards, W. How to use multiattribute utility measurement for social decisionmaking. IEEE Trans. Syst. Man Cybern. 1977, 7, 326–340.

- Pamučar, D.; Stević, Ž.; Sremac, S. A new model for determining weight coefficients of criteria in MCDM models: Full consistency method (FUCOM). Symmetry 2018, 10, 393.

- Ahn, B.S. Extending Malakooti’s model for ranking multicriteria alternatives with preference strength and partial information. IEEE Trans. Syst. Man Cybern. 2003, 33, 281–287.

- Sarabando, P.; Dias, L.C. Simple procedures of choice in multicriteria problems without precise information about the alternatives’ values. Comput. Oper. Res. 2010, 37, 2239–2247.

- Danielson, M.; Ekenberg, L. A robustness study of state-of-the-art surrogate weights for MCDM. Group Decis. Negot. 2017, 26, 677–691.

- Danielson, M.; Ekenberg, L.; He, Y. Augmenting ordinal methods of attribute weight approximation. Decis. Anal. 2014, 11, 21–26.

- Lakmayer, S.; Danielson, M.; Ekenberg, L. Aspects of Ranking Algorithms in Multi-Criteria Decision Support Systems. In New Trends in Intelligent Software Methodologies, Tools and Techniques; Fujita, H., Guizzi, G., Eds.; IOS Press: Clifton, VA, USA, 2023; pp. 63–75.

- Danielson, M.; Ekenberg, L. An improvement to swing techniques for elicitation in MCDM methods. Knowl. Based Syst. 2019, 168, 70–79.

- Frej, E.A.; de Almeida, A.T.; Costa, A.P.C.S. Using data visualization for ranking alternatives with partial information and interactive tradeoff elicitation. Oper. Res. Int. J. 2019, 19, 909–931.

- de Almeida, A.T.; Frej, E.A.; Roselli, L.R.P. DSS for Multicriteria Preference Modeling with Partial Information and Its Modulation with Behavioral Studies. In EURO Working Group on DSS; Integrated Series in Information Systems; Papathanasiou, J., Zaraté, P., de Sousa, J.F., Eds.; Springer: Berlin/Heidelberg, Germany, 2021; pp. 213–238.