User Experience and Usability of Voice User Interfaces: A Systematic Literature Review

Abstract

1. Introduction

- Identification of the top contributing countries, authors, institutions, and sources in the area of user experience, usability, and voice user interfaces;

- Development of a classification framework to classify user experience, usability, and voice user interface research papers on the basis of relevant commonalities;

- Development of a future research agenda in the field.

2. Research Methodology

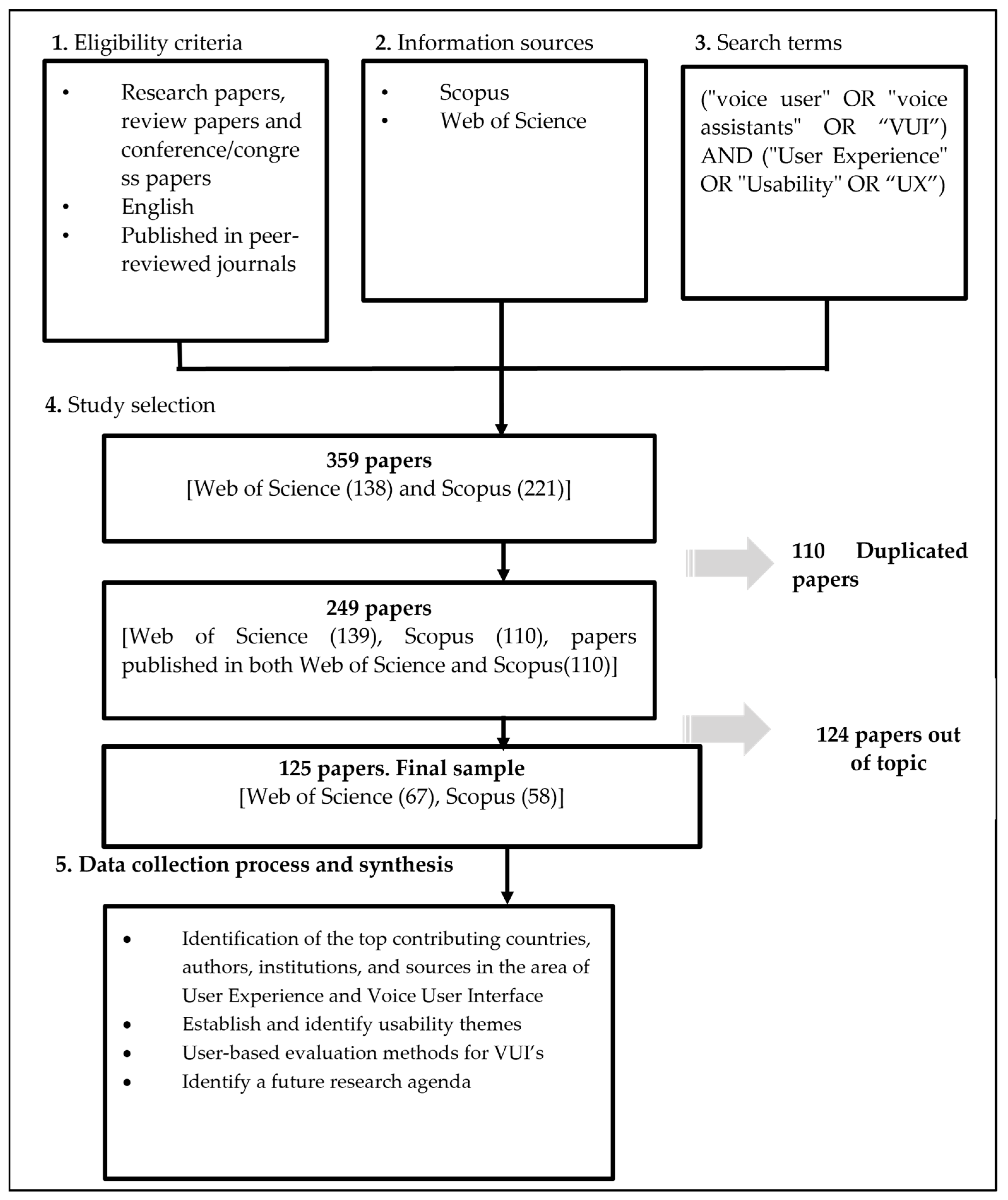

2.1. Eligibility Criteria

2.2. Information Sources

2.3. Search Terms

2.4. Study Selection

2.5. Data Collection Process and Synthesis

3. Bibliometric Analysis

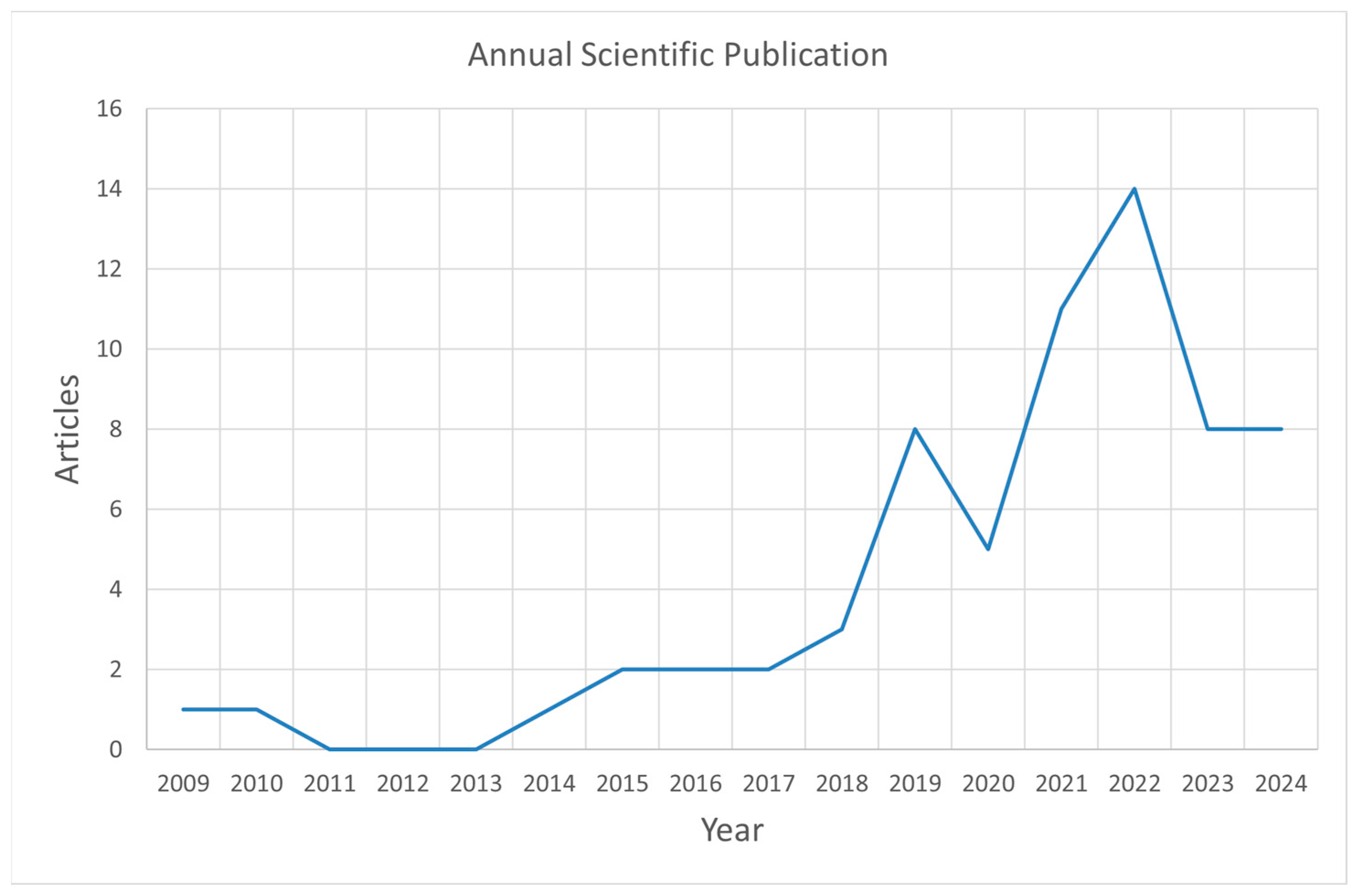

3.1. Trend in Annual Scientific Publications

3.2. Most Influential Authors

3.3. Most Influential Countries

3.4. Top Contributing Institutions

3.5. Most-Cited Papers

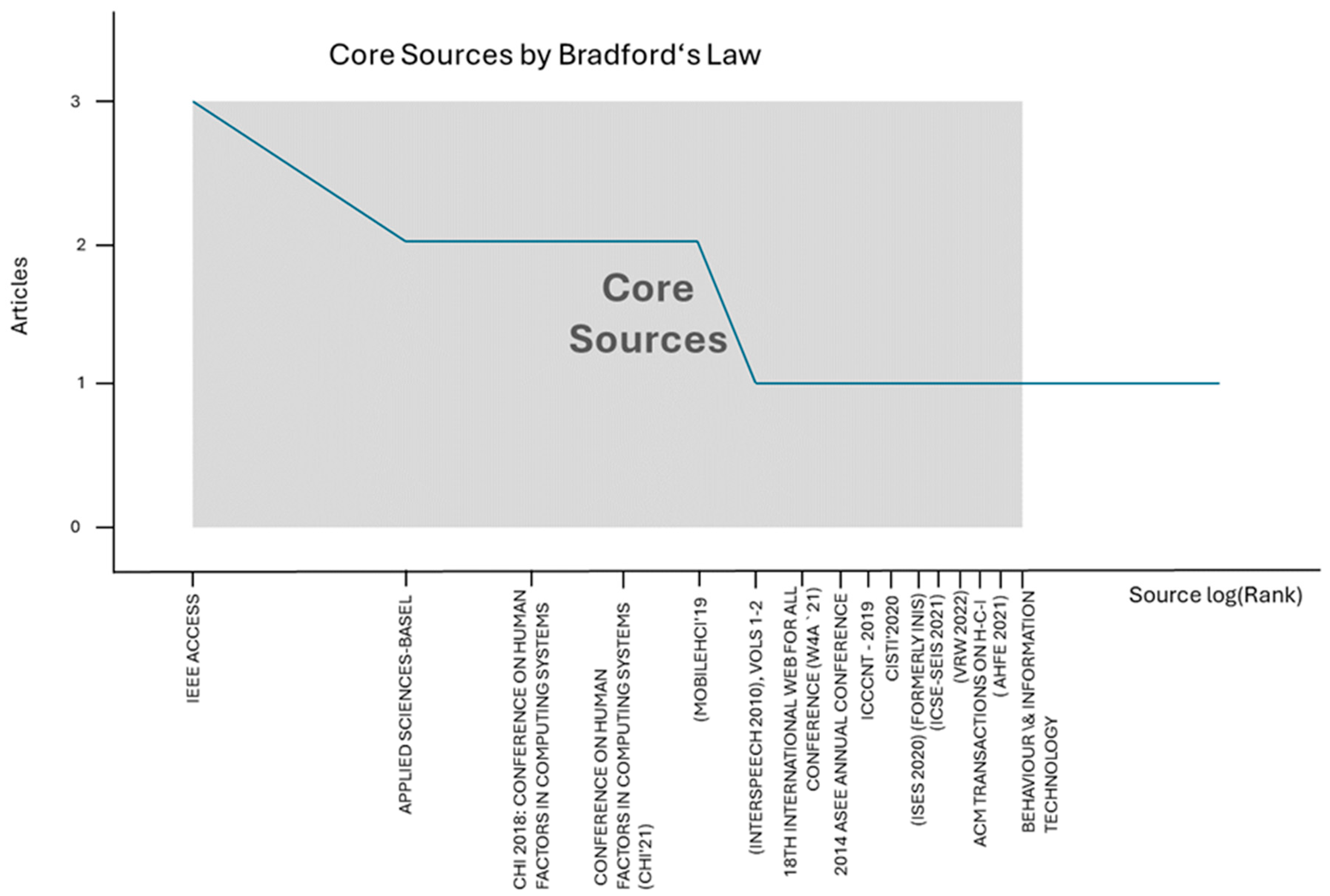

3.6. Source Analysis through Bradford’s Law

4. Classification Framework

5. Future Research Agenda

6. Discussion

- (1) According to [12,84], a systematic literature review should be written when there is a substantial body of work in the domain (at least 40 articles for review) and no systematic literature review has been conducted in the field in recent years (within the last 5 years). This paper therefore fills a gap in the domain of user experience and voice user interfaces, because it is the first systematic review in the field. Other systematic reviews related to user experience and voice user interfaces have focused on different subjects, such as identifying the scales used to measure the UX of voice assistants and assessing the rigor of their operationalization during scale development [44], synthesizing current knowledge on how proactive behavior has been implemented in voice assistants and under what conditions proactivity is more or less suitable [85], or identifying the usability measures currently used for voice assistants [86];

- (2) According to [14], descriptive statistics (e.g., frequency tables) should be used in systematic reviews to summarize the basic information on a topic gathered over time. This paper uses bibliometric statistical analysis techniques to present key information about the user experience and voice user interface domain, such as the top contributing countries, authors, institutions, and sources;

- (3) According to [14,87], to make a theoretical contribution it is not enough to merely report on the previous literature; systematic literature reviews should focus on identifying new frameworks, promoting the objective discovery of knowledge clusters, or identifying major research streams. Through a content analysis, this paper proposes a classification framework composed of six research categories that represent different ways of contributing to the current state of knowledge on the topic: user experience and usability measurement and evaluation; usability engineering and human–computer interaction; voice assistant design and personalization; privacy, security, and ethical issues; cross-cultural usability and demographic studies; and technological challenges and applications;

- (4) According to [12], systematic literature reviews can also make a theoretical contribution by identifying a research agenda; however, this agenda should follow and accompany another form of synthesis, such as a taxonomy or framework. This paper synthesizes the future research challenges in each research category of the proposed classification framework.
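Item (1) states a two-part decision rule. Purely as an illustration, it can be encoded as a small check. The function name, the article counts in the usage lines, and the handling of the five-year boundary are assumptions made for this sketch, not part of [12,84]. The rule concerns prior reviews of the same subject, so reviews on different subjects, such as [44,85,86], would not be passed in as `last_slr_year`.

```python
from typing import Optional

# Thresholds from the criteria cited in item (1).
MIN_ARTICLES = 40
RECENCY_WINDOW_YEARS = 5


def slr_is_warranted(n_articles: int,
                     last_slr_year: Optional[int],
                     current_year: int) -> bool:
    """Return True if a new systematic review of the field is justified.

    n_articles: number of eligible articles in the domain.
    last_slr_year: publication year of the most recent systematic review
        of the same field, or None if no such review exists.
    """
    substantial_body = n_articles >= MIN_ARTICLES
    # Assumption: a review counts as "recent" if it appeared within the
    # last RECENCY_WINDOW_YEARS calendar years, including the current one.
    no_recent_review = (
        last_slr_year is None
        or current_year - last_slr_year >= RECENCY_WINDOW_YEARS
    )
    return substantial_body and no_recent_review


print(slr_is_warranted(80, None, 2024))  # True: large body, no prior review
print(slr_is_warranted(30, None, 2024))  # False: fewer than 40 articles
print(slr_is_warranted(80, 2022, 2024))  # False: a review appeared in 2022
```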

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jain, S.; Basu, S.; Dwivedi, Y.K.; Kaur, S. Interactive voice assistants–Does brand credibility assuage privacy risks? J. Bus. Res. 2022, 139, 701–717. [Google Scholar] [CrossRef]

- Cha, M.C.; Kim, H.C.; Ji, Y.G. The unit and size of information supporting auditory feedback for voice user interface. Int. J. Hum.-Comput. Interact. 2024, 40, 3071–3080. [Google Scholar] [CrossRef]

- Lee, S.; Oh, J.; Moon, W.K. Adopting Voice Assistants in Online Shopping: Examining the Role of Social Presence, Performance Risk, and Machine Heuristic. Int. J. Hum.-Comput. Interact. 2023, 39, 2978–2992. [Google Scholar] [CrossRef]

- Chatterjee, K.; Raju, M.; Selvamuthukumaran, N.; Pramod, M.; Krishna Kumar, B.; Bandyopadhyay, A.; Mallik, S. HaCk: Hand Gesture Classification Using a Convolutional Neural Network and Generative Adversarial Network-Based Data Generation Model. Information 2024, 15, 85. [Google Scholar] [CrossRef]

- Fulfagar, L.; Gupta, A.; Mathur, A.; Shrivastava, A. Development and evaluation of usability heuristics for voice user interfaces. In Design for Tomorrow—Volume 1: Proceedings of ICoRD 2021; Springer: Singapore, 2021; pp. 375–385. [Google Scholar]

- Simor, F.W.; Brum, M.R.; Schmidt, J.D.E.; Rieder, R.; De Marchi, A.C.B. Usability evaluation methods for gesture-based games: A systematic review. JMIR Serious Games 2016, 4, e5860. [Google Scholar] [CrossRef]

- Klein, A.M.; Kölln, K.; Deutschländer, J.; Rauschenberger, M. Design and Evaluation of Voice User Interfaces: What Should One Consider? In International Conference on Human-Computer Interaction; Springer Nature: Cham, Switzerland, 2023; pp. 167–190. [Google Scholar]

- Alrumayh, A.S.; Tan, C.C. VORI: A framework for testing voice user interface interactability. High-Confid. Comput. 2022, 2, 100069. [Google Scholar] [CrossRef]

- Iniguez-Carrillo, A.L.; Gaytan-Lugo, L.S.; Garcia-Ruiz, M.A.; Maciel-Arellano, R. Usability Questionnaires to Evaluate Voice User Interfaces. IEEE Lat. Am. Trans. 2021, 19, 1468–1477. [Google Scholar] [CrossRef]

- Klein, A. Toward a user experience tool selector for voice user interfaces. In Proceedings of the 18th International Web for All Conference (W4A ’21), Ljubljana, Slovenia, 19–20 April 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1–2. [Google Scholar] [CrossRef]

- Deshmukh, A.M.; Chalmeta, R. Validation of System Usability Scale as a usability metric to evaluate voice user interfaces. PeerJ Comput. Sci. 2023, 10, e1918. [Google Scholar] [CrossRef]

- Paul, J.; Criado, A.R. The art of writing literature review: What do we know and what do we need to know? Int. Bus. Rev. 2020, 29, 101717. [Google Scholar] [CrossRef]

- Lytras, M.D.; Visvizi, A.; Daniela, L.; Sarirete, A.; Ordonez De Pablos, P. Social networks research for sustainable smart education. Sustainability 2018, 10, 2974. [Google Scholar] [CrossRef]

- Linnenluecke, M.K.; Marrone, M.; Singh, A.K. Conducting systematic literature reviews and bibliometric analyses. Aust. J. Manag. 2020, 45, 175–194. [Google Scholar] [CrossRef]

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann. Intern. Med. 2009, 151, 65. [Google Scholar] [CrossRef] [PubMed]

- Ramos-Rodríguez, A.R.; Ruíz-Navarro, J. Changes in the intellectual structure of strategic management research: A bibliometric study of the Strategic Management Journal, 1980–2000. Strateg. Manag. J. 2004, 25, 981–1004. [Google Scholar] [CrossRef]

- Martínez-López, F.J.; Merigó, J.M.; Valenzuela-Fernández, L.; Nicolás, C. Fifty years of the European Journal of Marketing: a bibliometric analysis. Eur. J. Mark. 2018, 52, 439–468. [Google Scholar] [CrossRef]

- Donthu, N.; Kumar, S.; Mukherjee, D.; Pandey, N.; Lim, W.M. How to conduct a bibliometric analysis: An overview and guidelines. J. Bus. Res. 2021, 133, 285–296. [Google Scholar] [CrossRef]

- Aria, M.; Cuccurullo, C. bibliometrix: An R-tool for comprehensive science mapping analysis. J. Informetr. 2017, 11, 959–975. [Google Scholar] [CrossRef]

- Derviş, H. Bibliometric analysis using bibliometrix an R package. J. Scientometr. Res. 2019, 8, 156–160. [Google Scholar] [CrossRef]

- Collier, D. Comparative method in the 1990s. CP Newsl. Comp. Politics Organ. Sect. Am. Political Sci. Assoc. 1998, 9, 1–2. [Google Scholar]

- Seuring, S.; Gold, S. Conducting content analysis-based literature reviews in supply chain management. Supply Chain. Manag. Int. J. 2012, 17, 544–555. [Google Scholar] [CrossRef]

- Chalmeta, R.; Barbeito-Caamaño, A.M. Framework for using online social networks for sustainability awareness. Online Inf. Rev. 2024, 48, 334–353. [Google Scholar] [CrossRef]

- Hirsch, J.E. An index to quantify an individual’s scientific research output. Proc. Natl. Acad. Sci. USA 2005, 102, 16569–16572. [Google Scholar] [CrossRef] [PubMed]

- Egghe, L. Theory and Practise of the g-Index; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Batista, P.D.; Campiteli, M.G.; Kinouchi, O. Is it possible to compare researchers with different scientific interests? Scientometrics 2006, 68, 179–189. [Google Scholar] [CrossRef]

- Myers, C.; Furqan, A.; Nebolsky, J.; Caro, K.; Zhu, J. Patterns for how users overcome obstacles in voice user interfaces. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–7. [Google Scholar]

- Alepis, E.; Patsakis, C. Monkey says, monkey does: Security and privacy on voice assistants. IEEE Access 2017, 5, 17841–17851. [Google Scholar] [CrossRef]

- Corbett, E.; Weber, A. What can I say? addressing user experience challenges of a mobile voice user interface for accessibility. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 72–82. [Google Scholar]

- Sayago, S.; Neves, B.B.; Cowan, B.R. Voice assistants and older people: some open issues. In Proceedings of the 1st International Conference on Conversational User Interfaces, Dublin, Ireland, 22–23 August 2019; pp. 1–3. [Google Scholar]

- Myers, C.M.; Furqan, A.; Zhu, J. The impact of user characteristics and preferences on performance with an unfamiliar voice user interface. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–9. [Google Scholar]

- Kim, D.H.; Lee, H. Effects of user experience on user resistance to change to the voice user interface of an in-vehicle infotainment system: Implications for platform and standards competition. Int. J. Inf. Manag. 2016, 36, 653–667. [Google Scholar] [CrossRef]

- Pal, D.; Roy, P.; Arpnikanondt, C.; Thapliyal, H. The effect of trust and its antecedents towards determining users’ behavioral intention with voice-based consumer electronic devices. Heliyon 2022, 8, e09271. [Google Scholar] [CrossRef]

- Reeves, S.; Porcheron, M.; Fischer, J.E.; Candello, H.; McMillan, D.; McGregor, M.; Moore, R.J.; Sikveland, R.; Taylor, A.S.; Velkovska, J.; et al. Voice-based conversational UX studies and design. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–8. [Google Scholar]

- Seaborn, K.; Urakami, J. Measuring voice UX quantitatively: A rapid review. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–8. [Google Scholar]

- Bradford, S.C. Sources of information on specific subjects. Engineering 1934, 137, 85–86. [Google Scholar]

- Klein, A.M.; Kollmorgen, J.; Hinderks, A.; Schrepp, M.; Rauschenberger, M.; Escalona, M.J. Validation of the Ueq+ Scales for Voice Quality. SSRN 2024, preprint. [Google Scholar]

- Cataldo, R.; Friel, M.; Grassia, M.G.; Marino, M.; Zavarrone, E. Importance Performance Matrix Analysis for Assessing User Experience with Intelligent Voice Assistants: A Strategic Evaluation. Soc. Indic. Res. 2024, 1–27. [Google Scholar] [CrossRef]

- Kumar, A.; Bala, P.K.; Chakraborty, S.; Behera, R.K. Exploring antecedents impacting user satisfaction with voice assistant app: A text mining-based analysis on Alexa services. J. Retail. Consum. Serv. 2024, 76, 103586. [Google Scholar] [CrossRef]

- Haas, G. Towards Auditory Interaction: An Analysis of Computer-Based Auditory Interfaces in Three Settings. Ph.D. Thesis, Universität Ulm, Ulm, Germany, 2023. [Google Scholar]

- Mont’Alvão, C.; Maués, M. Personified Virtual Assistants: Evaluating Users’ Perception of Usability and UX. In Handbook of Usability and User-Experience; CRC Press: Boca Raton, FL, USA, 2022; pp. 269–288. [Google Scholar]

- Kang, W.; Shao, B.; Zhang, Y. How Does Interactivity Shape Users’ Continuance Intention of Intelligent Voice Assistants? Evidence from SEM and fsQCA. Psychol. Res. Behav. Manag. 2024, 17, 867–889. [Google Scholar] [CrossRef]

- Klein, A.M.; Deutschländer, J.; Kölln, K.; Rauschenberger, M.; Escalona, M.J. Exploring the context of use for voice user interfaces: Toward context-dependent user experience quality testing. J. Softw. Evol. Process 2024, 36, 2618. [Google Scholar] [CrossRef]

- Faruk, L.I.D.; Babakerkhell, M.D.; Mongkolnam, P.; Chongsuphajaisiddhi, V.; Funilkul, S.; Pal, D. A review of subjective scales measuring the user experience of voice assistants. IEEE Access 2024, 12, 14893–14917. [Google Scholar] [CrossRef]

- Grossman, T.; Fitzmaurice, G.; Attar, R. A survey of software learnability: metrics, methodologies and guidelines. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 649–658. [Google Scholar]

- Zargham, N.; Bonfert, M.; Porzel, R.; Doring, T.; Malaka, R. Multi-agent voice assistants: An investigation of user experience. In Proceedings of the 20th International Conference on Mobile and Ubiquitous Multimedia, Leuven, Belgium, 5–8 December 2021; pp. 98–107. [Google Scholar]

- Faden, R.R.; Beauchamp, T.L.; Kass, N.E. Informed consent, comparative effectiveness, and learning health care. N. Engl. J. Med. 2014, 370, 766–768. [Google Scholar] [CrossRef] [PubMed]

- Wu, Y.; Rough, D.; Bleakley, A.; Edwards, J.; Cooney, O.; Doyle, P.R.; Clark, L.; Cowan, B.R. See what I’m saying? Comparing intelligent personal assistant use for native and non-native language speakers. In Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services, Oldenburg, Germany, 5–8 October 2020; pp. 1–9. [Google Scholar]

- Murad, C.; Tasnim, H.; Munteanu, C. “Voice-First Interfaces in a GUI-First Design World”: Barriers and Opportunities to Supporting VUI Designers On-the-Job. In Proceedings of the 4th Conference on Conversational User Interfaces, Glasgow, UK, 26–28 July 2022; pp. 1–10. [Google Scholar]

- Jannach, D.; Manzoor, A.; Cai, W.; Chen, L. A survey on conversational recommender systems. ACM Comput. Surv. CSUR 2021, 54, 1–36. [Google Scholar] [CrossRef]

- Kim, H.; Ham, S.; Promann, M.; Devarapalli, H.; Kwarteng, V.; Bihani, G.; Ringenberg, T.; Bilionis, I.; Braun, J.E.; Rayz, J.T.; et al. MySmartE-A Cloud-Based Smart Home Energy Application for Energy-Aware Multi-unit Residential Buildings. ASHRAE Trans. 2023, 129, 667–675. [Google Scholar]

- Kim, H.; Ham, S.; Promann, M.; Devarapalli, H.; Bihani, G.; Ringenberg, T.; Kwarteng, V.; Bilionis, I.; Braun, J.E.; Rayz, J.T.; et al. MySmartE–An eco-feedback and gaming platform to promote energy conserving thermostat-adjustment behaviors in multi-unit residential buildings. Build. Environ. 2022, 221, 109252. [Google Scholar] [CrossRef]

- Lowdermilk, T. User-Centered Design: A Developer’s Guide to Building User-Friendly Applications; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2013. [Google Scholar]

- Dirin, A.; Laine, T.H. User experience in mobile augmented reality: emotions, challenges, opportunities and best practices. Computers 2018, 7, 33. [Google Scholar] [CrossRef]

- Gilles, M.; Bevacqua, E. A review of virtual assistants’ characteristics: Recommendations for designing an optimal human–machine cooperation. J. Comput. Inf. Sci. Eng. 2022, 22, 050904. [Google Scholar] [CrossRef]

- Johnson, J.; Finn, K. Designing User Interfaces for an Aging Population: Towards Universal Design; Morgan Kaufmann: Burlington, MA, USA, 2017. [Google Scholar]

- Waytz, A.; Cacioppo, J.; Epley, N. Who sees human? The stability and importance of individual differences in anthropomorphism. Perspect. Psychol. Sci. 2010, 5, 219–232. [Google Scholar] [CrossRef]

- Völkel, S.T.; Schoedel, R.; Kaya, L.; Mayer, S. User perceptions of extraversion in chatbots after repeated use. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; pp. 1–18. [Google Scholar]

- Reinecke, K.; Bernstein, A. Improving performance, perceived usability, and aesthetics with culturally adaptive user interfaces. ACM Trans. Comput.-Hum. Interact. TOCHI 2011, 18, 1–29. [Google Scholar] [CrossRef]

- Schmidt, D.; Nebe, K.; Lallemand, C. Sentence Completion as a User Experience Research Method: Recommendations From an Experimental Study. Interact. Comput. 2024, 36, 48–61. [Google Scholar] [CrossRef]

- Munir, T.; Akbar, M.S.; Ahmed, S.; Sarfraz, A.; Sarfraz, Z.; Sarfraz, M.; Felix, M.; Cherrez-Ojeda, I. A systematic review of internet of things in clinical laboratories: Opportunities, advantages, and challenges. Sensors 2022, 22, 8051. [Google Scholar] [CrossRef] [PubMed]

- Jha, R.; Fahim, M.F.H.; Hassan, M.A.M.; Rai, C.; Islam, M.M.; Sah, R.K. Analyzing the Effectiveness of Voice-Based User Interfaces in Enhancing Accessibility in Human-Computer Interaction. In Proceedings of the 2024 IEEE 13th International Conference on Communication Systems and Network Technologies (CSNT), Jabalpur, India, 6–7 April 2024; pp. 777–781. [Google Scholar]

- Sun, K.; Xia, C.; Xu, S.; Zhang, X. StealthyIMU: Stealing Permission-protected Private Information From Smartphone Voice Assistant Using Zero-Permission Sensors; Network and Distributed System Security (NDSS) Symposium: San Diego, CA, USA, 2023. [Google Scholar]

- Flavián, C.; Guinalíu, M. Consumer trust, perceived security and privacy policy: three basic elements of loyalty to a web site. Ind. Manag. Data Syst. 2006, 106, 601–620. [Google Scholar] [CrossRef]

- Sin, J.; Munteanu, C.; Ramanand, N.; Tan, Y.R. VUI influencers: How the media portrays voice user interfaces for older adults. In Proceedings of the 3rd Conference on Conversational User Interfaces, Bilbao, Spain, 27–29 July 2021; pp. 1–13. [Google Scholar]

- Pyae, A.; Scifleet, P. Investigating differences between native English and non-native English speakers in interacting with a voice user interface: A case of Google Home. In Proceedings of the 30th Australian Conference on Computer-Human Interaction, Melbourne, Australia, 4–7 December 2018; pp. 548–553. [Google Scholar]

- Pearl, C. Designing Voice User Interfaces: Principles of Conversational Experiences; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2016. [Google Scholar]

- Terzopoulos, G.; Satratzemi, M. Voice assistants and smart speakers in everyday life and in education. Inform. Educ. 2020, 19, 473–490. [Google Scholar] [CrossRef]

- Kuriakose, B.; Shrestha, R.; Sandnes, F.E. Exploring the User Experience of an AI-based Smartphone Navigation Assistant for People with Visual Impairments. In Proceedings of the 15th Biannual Conference of the Italian SIGCHI Chapter, Torino, Italy, 20–22 September 2023; pp. 1–8. [Google Scholar]

- Pyae, A. A usability evaluation of the Google Home with non-native English speakers using the system usability scale. Int. J. Netw. Virtual Organ. 2022, 26, 172–194. [Google Scholar] [CrossRef]

- Tuan, Y.L.; Beygi, S.; Fazel-Zarandi, M.; Gao, Q.; Cervone, A.; Wang, W.Y. Towards large-scale interpretable knowledge graph reasoning for dialogue systems. arXiv 2022, arXiv:2203.10610. [Google Scholar]

- Ma, Y. Emotion-Aware Voice Interfaces Based on Speech Signal Processing. Ph.D. Thesis, Universität Ulm, Ulm, Germany, 2022. [Google Scholar]

- Kendall, L.; Chaudhuri, B.; Bhalla, A. Understanding technology as situated practice: everyday use of voice user interfaces among diverse groups of users in urban India. Inf. Syst. Front. 2020, 22, 585–605. [Google Scholar] [CrossRef]

- Ostrowski, A.K.; Fu, J.; Zygouras, V.; Park, H.W.; Breazeal, C. Speed dating with voice user interfaces: understanding how families interact and perceive voice user interfaces in a group setting. Front. Robot. AI 2022, 8, 730992. [Google Scholar] [CrossRef]

- Kaye, J.J.; Fischer, J.; Hong, J.; Bentley, F.R.; Munteanu, C.; Hiniker, A.; Tsai, J.Y.; Ammari, T. Panel: voice assistants, UX design and research. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–5. [Google Scholar]

- Sekkat, C.; Leroy, F.; Mdhaffar, S.; Smith, B.P.; Estève, Y.; Dureau, J.; Coucke, A. Sonos Voice Control Bias Assessment Dataset: A Methodology for Demographic Bias Assessment in Voice Assistants. arXiv 2024, arXiv:2405.19342. [Google Scholar]

- Varsha, P.S.; Akter, S.; Kumar, A.; Gochhait, S.; Patagundi, B. The impact of artificial intelligence on branding: a bibliometric analysis (1982–2019). J. Glob. Inf. Manag. JGIM 2021, 29, 221–246. [Google Scholar] [CrossRef]

- Karat, C.M.; Vergo, J.; Nahamoo, D. Conversational interface technologies. In The Human-Computer Interaction Handbook; Springer: Berlin/Heidelberg, Germany, 2002; pp. 169–186. [Google Scholar]

- Tennant, R. Supporting Caregivers in Complex Home Care: Towards Designing a Voice User Interface. Master’s Thesis, University of Waterloo, Waterloo, Canada, 2021. [Google Scholar]

- Lazar, A.; Thompson, H.; Demiris, G. A systematic review of the use of technology for reminiscence therapy. Health Educ. Behav. 2014, 41 (Suppl. S1), 51S–61S. [Google Scholar] [CrossRef] [PubMed]

- Murray, M.T.; Penney, S.P.; Landman, A.; Elias, J.; Mullen, B.; Sipe, H.; Hartog, B.; Richard, E.; Xu, C.; Solomon, D.H. User experience with a voice-enabled smartphone app to collect patient-reported outcomes in rheumatoid arthritis. Clin. Exp. Rheumatol. 2022, 40, 882–889. [Google Scholar] [CrossRef]

- Diederich, S.; Brendel, A.B.; Morana, S.; Kolbe, L. On the design of and interaction with conversational agents: An organizing and assessing review of human-computer interaction research. J. Assoc. Inf. Syst. 2022, 23, 96–138. [Google Scholar] [CrossRef]

- Tranfield, D.; Denyer, D.; Smart, P. Towards a methodology for developing evidence-informed management knowledge by means of systematic review. Br. J. Manag. 2003, 14, 207–222. [Google Scholar] [CrossRef]

- Paul, J.; Lim, W.M.; O’Cass, A.; Hao, A.W.; Bresciani, S. Scientific procedures and rationales for systematic literature reviews (SPAR-4-SLR). Int. J. Consum. Stud. 2021, 45, O1–O16. [Google Scholar] [CrossRef]

- Bérubé, C.; Nißen, M.; Vinay, R.; Geiger, A.; Budig, T.; Bhandari, A.; Kocaballi, A.B. Proactive behavior in voice assistants: A systematic review and conceptual model. Comput. Hum. Behav. Rep. 2024, 14, 100411. [Google Scholar] [CrossRef]

- Dutsinma, F.L.I.; Pal, D.; Funilkul, S.; Chan, J.H. A systematic review of voice assistant usability: An ISO 9241–11 approach. SN Comput. Sci. 2022, 3, 267. [Google Scholar] [CrossRef]

- Mukherjee, D.; Lim, W.M.; Kumar, S.; Donthu, N. Guidelines for advancing theory and practice through bibliometric research. J. Bus. Res. 2022, 148, 101–115. [Google Scholar] [CrossRef]

| Database | Keywords | Search Field | Period | Document Type | Language |
|---|---|---|---|---|---|
| Scopus and Web of Science | (“voice user” OR “voice assistants” OR “VUI”) AND (“User Experience” OR “Usability” OR “UX”) | Title, abstract, keywords (TITLE-ABS-KEY) | Until July 2024 | Research and review articles; journal and conference papers | English |
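The search string in the table combines two term groups, with OR inside each group and AND between them, applied to the title, abstract, and keywords. A minimal Python sketch of the same logic applied to already-exported records is shown below. The `Record` layout, the document-type labels, and the matching rule (case-insensitive whole words with an optional plural "s") are assumptions. Scopus and Web of Science apply their own phrase and stemming semantics, so this does not reproduce either database's search engine.

```python
import re
from dataclasses import dataclass
from typing import List

# Term groups from the search string: OR within a group, AND across groups.
GROUP_VOICE = ["voice user", "voice assistants", "VUI"]
GROUP_UX = ["User Experience", "Usability", "UX"]

# Hypothetical labels for the document types and cut-off in the table.
ALLOWED_TYPES = {"article", "review", "conference paper"}
CUTOFF = (2024, 7)  # (year, month), inclusive: "until July 2024"


def _compile(terms: List[str]) -> List[re.Pattern]:
    # Case-insensitive whole-word match; the optional trailing "s" lets
    # "VUI" also match "VUIs". Real databases apply their own rules.
    return [re.compile(r"\b" + re.escape(t) + r"s?\b", re.IGNORECASE)
            for t in terms]


VOICE_PATTERNS = _compile(GROUP_VOICE)
UX_PATTERNS = _compile(GROUP_UX)


@dataclass
class Record:
    # Hypothetical record layout; Scopus and WoS exports use other field names.
    title: str
    abstract: str
    keywords: List[str]
    language: str
    doc_type: str
    year: int
    month: int


def _any_field_matches(fields: List[str], patterns: List[re.Pattern]) -> bool:
    return any(p.search(f) for f in fields for p in patterns)


def is_eligible(rec: Record) -> bool:
    # TITLE-ABS-KEY: each term group may be satisfied in the title,
    # the abstract, or any keyword.
    fields = [rec.title, rec.abstract, *rec.keywords]
    topical = (_any_field_matches(fields, VOICE_PATTERNS)
               and _any_field_matches(fields, UX_PATTERNS))
    return (topical
            and rec.language.lower() == "english"
            and rec.doc_type.lower() in ALLOWED_TYPES
            and (rec.year, rec.month) <= CUTOFF)


r = Record("Usability of VUIs for older adults", "", [], "English",
           "Article", 2023, 5)
print(is_eligible(r))  # True
```

Searching each field separately, rather than one concatenated string, avoids accidental phrase matches that span the end of the title and the start of the abstract.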

| Author | No. of Publications | Total Citations | h-Index | g-Index | m-Index | First Publication Year |
|---|---|---|---|---|---|---|
| Klein A. | 4 | 7 | 2 | 2 | 0.400 | 2020 |
| Munteanu C. | 4 | 16 | 2 | 4 | 0.286 | 2018 |
| Myers C. | 3 | 150 | 3 | 3 | 0.429 | 2018 |
| Pal D. | 3 | 30 | 2 | 3 | 0.333 | 2019 |
| Arpnikanondt C. | 2 | 29 | 2 | 2 | 0.333 | 2019 |
| Furqan A. | 2 | 145 | 2 | 2 | 0.286 | 2018 |
| Li J. | 2 | 5 | 2 | 2 | 1.000 | 2023 |
| Moore R. | 2 | 19 | 2 | 2 | 0.286 | 2018 |
| Thomaschewski J. | 2 | 5 | 2 | 2 | 0.400 | 2020 |
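The h-index [24] is the largest h such that h papers each have at least h citations; the g-index [25] is the largest g such that the top g papers together have at least g² citations; the m-index divides h by the length of the author's publishing career. The sketch below assumes that career length is counted inclusively up to 2024, which reproduces the m-index column (e.g., 2/5 = 0.4 for Klein A. and 2/7 ≈ 0.286 for Munteanu C.). It also caps g at the number of papers, which is consistent with Myers C. (150 citations over 3 papers, g = 3). The per-paper citation lists in the usage lines are hypothetical, since per-paper counts are not reported in the table.

```python
from typing import List


def h_index(citations: List[int]) -> int:
    # Largest h such that h papers each have at least h citations.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, c in enumerate(ranked, start=1):
        if c >= rank:
            h = rank
        else:
            break
    return h


def g_index(citations: List[int]) -> int:
    # Largest g such that the top-g papers together have >= g**2 citations.
    # g is capped at the number of papers (assumed, consistent with the table).
    ranked = sorted(citations, reverse=True)
    g, running = 0, 0
    for rank, c in enumerate(ranked, start=1):
        running += c
        if running >= rank * rank:
            g = rank
    return g


def m_index(h: int, first_year: int, current_year: int = 2024) -> float:
    # h divided by the career length, counting both the first and the
    # current year (assumed convention).
    return h / (current_year - first_year + 1)


print(h_index([10, 5, 2, 1]), g_index([10, 5, 2, 1]))  # 2 4
print(round(m_index(2, 2018), 3))  # 0.286, as for Munteanu C.
```

Because g rewards a few highly cited papers, it is always at least as large as h for the same citation list.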

| Country | No. of Publications |
|---|---|
| USA | 55 |
| Germany | 24 |
| Canada | 21 |
| China | 16 |
| India | 13 |
| Spain | 13 |
| South Korea | 12 |
| Australia | 7 |
| Japan | 7 |
| Thailand | 7 |

| Institution | Articles |
|---|---|
| University of Toronto | 10 |
| King Mongkut’s University of Technology Thonburi | 7 |
| Universidad de Sevilla | 7 |
| Drexel University | 6 |
| University of Waterloo | 5 |
| Samsung R&D Institute | 4 |
| Seoul National University | 4 |
| Tokyo Institute of Technology | 4 |
| University of Applied Sciences Emden/Leer | 4 |
| Kookmin University | 3 |

| Reference | Contribution | Total Citations | Total Citations per Year |
|---|---|---|---|
| [27] | The main contribution of the paper is the identification of obstacle categories and user tactics when interacting with the VUI calendar system DiscoverCal. It reveals that NLP errors are common but less frustrating than other obstacles, and that users often resort to “guessing” rather than using visual aids or recalling knowledge. | 113 | 16.1 |
| [28] | The paper demonstrates significant security risks of smart devices with voice assistants, amplified by operating systems and IoT connectivity, and analyzes how these attacks can be launched and what their real-world impacts are. | 95 | 11.9 |
| [29] | This paper reports on research aimed at improving the visibility and learnability of voice commands in a mobile VUI (M-VUI) application on Android. The study confirmed longstanding challenges with voice interactions, explored methods to improve onboarding and learning experiences, and, based on these findings, proposes design implications for M-VUIs. | 89 | 9.9 |
| [30] | This paper encourages research on voice assistants (VAs) for older adults (65+), outlining key issues such as perceptions, barriers to use, conversational user experience, and anthropomorphic design, and raises provocative research questions to stimulate debate and discussion. | 52 | 8.7 |
| [31] | This paper shows that user characteristics, such as assimilation bias and technical confidence, significantly affect users’ performance and approach when interacting with the VUI-based calendar DiscoverCal. | 32 | 5.3 |
| [32] | This study examines the moderating effect of user experience on user resistance to the voice interface of in-vehicle infotainment (IVI) systems, finding that user experience positively moderates the relationship between uncertainty costs and user resistance. | 31 | 3.5 |
| [33] | This paper applies HCI and parasocial relationship theory to study trust and user behavior towards voice-based consumer electronic devices (VCEDs), finding that users treat these devices as social objects, that their trust is shaped by performance, effort expectancy, presence, and cognition, and that privacy concerns have minimal impact on trust. | 26 | 4.3 |
| [34] | This workshop invites contributions on advancing voice interaction research, focusing on methodologies, social implications, and design insights for improving user experience and addressing challenges in voice UI design. | 13 | 1.9 |
| [75] | This panel examines the challenges of studying the daily use of voice assistants (VAs), highlighting privacy concerns, VA personalization, user value, and UX design considerations. | 12 | 1.7 |
| [35] | This paper addresses the challenge of quantifying user experience (UX) in voice-based systems, identifying gaps and proposing frameworks to guide future research and standardize measurement methods. | 8 | 2.0 |
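The last column appears to divide total citations by the number of years since publication. Assuming years are counted inclusively up to 2024 (an assumption; publication years are taken from the reference list), the sketch below reproduces, for example, the values reported for [27]–[30] and [35]. The function name and the dictionary are illustrative only.

```python
def citations_per_year(total_citations: int, pub_year: int,
                       current_year: int = 2024) -> float:
    # Assumed convention: years are counted inclusively, so a paper
    # published in 2024 has an age of one year.
    years = current_year - pub_year + 1
    return round(total_citations / years, 1)


# (total citations, publication year), with years from the reference list.
MOST_CITED = {
    "[27]": (113, 2018),
    "[28]": (95, 2017),
    "[29]": (89, 2016),
    "[30]": (52, 2019),
    "[35]": (8, 2021),
}

for ref, (tc, year) in MOST_CITED.items():
    print(ref, citations_per_year(tc, year))
```

Normalizing by age lets recent papers such as [35] be compared with older, more heavily cited ones such as [27].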

| Research Categories | Description |

|---|---|

| User Experience and usability Measurement and Evaluation (26) | This category focuses on assessing and enhancing interactions with voice-based systems, such as virtual assistants and voice user interfaces (VUIs). Studies emphasize the need for improved evaluation metrics and methods for VUIs, and reveal a diverse array of approaches to evaluating UX. For example, the system usability scale (SUS), to better understand and enhance usability [11]. Another example is the UEQ Plus framework has been extended to incorporate voice communication scales to measure user experience quality, specifically for voice assistants [37]. Additionally, an importance-performance matrix analysis (IPMA) has been utilized to strategically evaluate user experience with intelligent voice assistants, highlighting key factors, such as performance expectancy and barriers that influence user adoption [38]. Research also delves into factors influencing the adoption of AI-based voice assistants, identifying crucial elements, like user satisfaction and the role of AI in enhancing UX [39]. The context of use and context-dependent UX quality testing are also emphasized, addressing the challenges of evaluating non-visual interfaces in varied environments [1]. Moreover, the effects of infotainment auditory–vocal systems’ design on workload and usability have been examined, underscoring the importance of system design elements in influencing user satisfaction [40]. First impressions and the perceived human likeness of voice-activated virtual assistants across different modalities and tasks have been explored, showing how these factors impact user emotions and the overall UX [41]. Additionally, studies have analyzed parameters of the user’s voice as indicators of their experience with intelligent voice assistants, revealing the deep connection between voice control and emotional responses [42]. 
The research also addresses the usability evaluation of voice interfaces through qualitative methods, providing protocols for assessing voice assistants in specific contexts, such as healthcare [43]. In addition, other studies focus on creating reliable scales for evaluating VA usability, such as the voice usability scale developed to measure user experience, specifically with voice assistants [44] Finally, there is a focus on enhancing voice technologies through innovative methodologies and evaluation frameworks, such as examining the role of English language proficiency in using VUIs [43] and developing adaptable utterances to improve learnability [45]. The collective goal across these studies is to create more effective, user-friendly voice technologies by understanding and improving the UX through comprehensive and context-aware research. |

| Usability Engineering and Human–Computer Interaction (28) | This research category delves into the engineering aspects related to usability and human–computer interaction of voice assistants (VAs) and voice user interfaces (VUIs). As these technologies gain popularity, their usability becomes crucial for widespread adoption. Usability with multi-agent versus single-agent systems are examined to understand the complexities involved in managing multiple voice assistants [46]. The engineering implications in developing verbal consent mechanisms are also explored, emphasizing the importance of legal obligations and ethical best practices [47]. The integration of VUIs in virtual environments (VEs) is also explored, comparing the effectiveness of voice interfaces with graphical user interfaces in virtual reality settings [48]. Industry-focused research underscores the barriers and opportunities in supporting VUI designers on the job, pointing to gaps in current design practices and offering insights for improvement [49]. The role of proactive dialogue strategies in enhancing user acceptance is studied through comparisons of explicit and implicit dialogue strategies for conversational recommendation systems [50]. The impact of energy-saving applications incorporating VUIs is also examined, as seen in the development of a cloud-based smart home energy application aimed at multi-unit residential buildings [51,52]. Collectively, these studies contribute to the development of more intuitive, accessible, and user-friendly VUIs, addressing the diverse needs of users across different contexts and environments. |

| Voice Assistant Design and Personalization (31) | This category focuses on the evolving landscape of voice assistants (VAs), particularly how these systems are designed, personalized, and received by users. The field examines aspects such as the user-centered design of VAs, intercultural user studies, the impact of personality and anthropomorphism on user experience, and the challenges of implementing effective voice user interfaces (VUIs). This category can be further divided into three subcategories. User-centered design and personalization: Research in voice assistant design emphasizes creating natural, human-like interactions through user-centered approaches. For example, a user-centered design approach to natural voice assistants for mobile platforms has been highlighted as crucial for enhancing user interaction and satisfaction [53]. Studies also explore how adaptive suggestions can increase the learnability of VUIs, improving user engagement over time [54]. Furthermore, research on implementing emotion recognition in VAs shows the importance of designing emotionally engaging avatars and optimizing dialogue to enhance user experience and acceptance [55]. Cultural and psychological factors: Studies in this subcategory show that cultural and psychological factors must be taken into account in VUI design. For instance, intercultural comparisons, such as those between Germany and Spain, reveal similar usage patterns despite privacy concerns, underscoring the need to consider cultural nuances in VUI design [56]. Additionally, psychological studies of users’ mental models of anthropomorphized voice assistants show how users perceive these technologies, ranging from empathetic, human-like designs to mere machines [57]. Another study on user perceptions of extraversion in chatbot design supports the notion that personality traits significantly affect user experience after repeated use [58]. Moreover, research from Japan shows the importance of cultural sensitivity in VUI design and the need to adapt interactions to different cultural contexts [59]. Improvement in voice user interface design: There is a strong need for tools and guidelines to improve the design of voice interfaces, since UX is crucial for VUIs. Research identifies common user frustrations, including poor response quality and conversational design issues. For example, a survey-based study of German users found that frequent annoyances included the misinterpretation of commands and inadequate responses. These annoyances significantly affected user satisfaction [60]. Meanwhile, meta-analyses of existing VUI guidelines suggest a move towards unified standards. Such standards can help streamline the design process and ensure a consistent user experience across platforms [61]. Tools such as the user experience tool selector for VUIs also provide resources for researchers and practitioners aiming to make VUI design more effective. Additionally, research on addressing VUI design challenges highlights the importance of accessibility and adaptability in these systems [62]. |

| Privacy, Security, and Ethical issues (10) | This research category covers the critical aspects of privacy, security, and ethical design in voice user interfaces (VUIs) and related technologies. The studies in this category explore various facets of these themes. They emphasize that the design and implementation of VUIs must be carefully considered so that these systems meet the diverse needs and expectations of users. This category can be further split into two subcategories. Privacy concerns in voice user interfaces: Privacy is a major concern for VUI users. Studies reveal risks such as unwanted activations and data security vulnerabilities. For instance, the StealthyIMU attack demonstrates how motion sensors can be exploited to covertly steal sensitive information from VUIs, highlighting significant privacy threats that need to be addressed [63]. Additionally, security issues such as those explored in [64] emphasize the importance of securing data to maintain user trust. Ethical design and inclusion: Ethical design and inclusivity are vital for VUIs. Research on older adults reveals specific challenges and opportunities for creating more accessible and effective interfaces [65]. Similarly, studies focusing on non-native English speakers highlight usability issues and propose solutions to make VUIs more user-friendly, inclusive, and equitable for diverse populations [66]. Including ethical considerations in the design process helps ensure that VUIs are not only effective but also respect the diverse needs of their users. |

| Cross-Cultural Usability and Demographic Studies (6) | This category explores how voice assistant technologies and related applications interact with diverse user groups and cultural contexts. Research in this area investigates various aspects of voice assistants, including personalization, naturalness, and human-like traits, with the aim of enhancing user experience. One study defines optimal voice assistant characteristics while considering different cultural issues, such as communication style, personality, dialogue, and appearance. Its goal is to create guidelines for more natural interactions and contribute to more human-like VUIs [67]. Another study compares the efficiency of voice assistants such as Google Home with traditional technologies in educational settings. It reveals that voice assistants can significantly speed up information retrieval and improve user satisfaction, although challenges such as privacy concerns and limited databases remain [68]. Research has also examined the impact of cultural differences on the UX of voice assistants. It shows that localization and cultural adaptation are crucial for ensuring a positive user experience across regions [69]. Other research investigates differences in usability and satisfaction between native and non-native English speakers, shedding light on the challenges non-native speakers face with intelligent personal assistants [70]. Another study analyzes the reasoning capabilities of dialogue systems, focusing on large-scale interpretable knowledge graph reasoning [71]. An investigation into emotional responses to voice user interfaces (VUIs) in India emphasizes the importance of speech modulation in conveying emotions. It demonstrates how users’ affective responses can be influenced by voice interface stimuli [72]. Accessibility in voice interfaces has also become a significant research focus, especially for users with disabilities. The category further explores the challenges of designing VUIs for different user demographics, such as age groups, through usability studies that tailor VUIs accordingly [73]. Other studies investigate how families interact with and perceive VUIs in group settings, highlighting the social dynamics of these interactions [74]. In addition, studies have explored how different demographic groups, including older adults and individuals with disabilities, experience and interact with VUIs. This work highlights the need for inclusive design practices that cater to a diverse user base [75]. The Sonos Voice Control Bias Assessment Dataset offers insights into demographic biases in voice assistant performance. It provides new methods for assessing and improving inclusivity in these technologies [76]. Lastly, a bibliometric analysis of the impact of artificial intelligence on branding reveals how AI, including voice assistants, has shaped brand strategies and consumer perceptions over recent decades [77]. Overall, these studies deepen understanding of how voice assistants perform across different cultural and demographic groups, with the aim of improving user experience and addressing technological challenges. |

| Technological challenges and applications (13) | This category explores various facets of voice-based technologies, including conversational voice assistants (CVAs) and intelligent voice assistants (IVAs). The research covers a wide range of topics, from usability and user experience to the impact of these technologies in specific applications. This category can be further refined into two subcategories. Technical challenges: Research into the technical design of CVAs and IVAs addresses significant challenges, including response accuracy, natural language understanding, and user interaction patterns. These factors are critical for creating usable and effective voice technologies [78]. Additionally, frameworks such as VORI have been developed to test the interactability of VUIs, providing a structured approach to evaluating these systems’ performance [8]. Application contexts: User experience with voice technologies depends on more than task success. It also depends on how well system responses align with user expectations and cultural contexts [67]. For instance, studies of voice technology in caregiving show potential for supporting complex home care tasks. However, they also reveal concerns about the usability of these systems and their impact on caregiver experiences [79]. Similarly, research on voice assistants in reminiscence therapy for older adults demonstrates that these technologies can enhance user engagement, although issues related to system usability and user perceptions remain [80]. Further research examines voice technology in other specific contexts, such as a mobile health app for rheumatoid arthritis patients. This study reports moderate adherence and mixed satisfaction with the voice features, highlighting both the potential and the limitations of voice-enabled health technologies [81]. The mediating effects of IVAs on service quality, satisfaction, and loyalty in various service contexts further highlight the importance of user experience in deploying voice technologies successfully [42]. Additionally, efforts to improve conversational agent responses when the agent lacks sufficient information underline the need for better interaction strategies to maintain user trust and satisfaction [82]. Overall, these studies deepen understanding of how voice assistants perform across different contexts. They aim to improve user experience, address usability challenges, and make these technologies more applicable across diverse user groups. |

| Category Name | Research Challenges |
|---|---|
| User Experience and usability Measurement and Evaluation | |
| Usability Engineering and Human–Computer Interaction (HCI) | |
| Voice Assistant Design and Personalization | |
| Privacy, Security, and Ethical issues | |
| Cross-Cultural Usability and Demographic Studies | |
| Technological challenges and applications | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deshmukh, A.M.; Chalmeta, R. User Experience and Usability of Voice User Interfaces: A Systematic Literature Review. Information 2024, 15, 579. https://doi.org/10.3390/info15090579


Deshmukh, Akshay Madhav, and Ricardo Chalmeta. 2024. "User Experience and Usability of Voice User Interfaces: A Systematic Literature Review" Information 15, no. 9: 579. https://doi.org/10.3390/info15090579
