Human-Centered Artificial Intelligence in Higher Education: A Framework for Systematic Literature Reviews

Abstract

1. Introduction

2. Conceptual Foundation

2.1. AI in Education

2.2. Human-Centered AI in Education

2.3. HCAI-Based Systematic Literature Reviews

3. Research Design

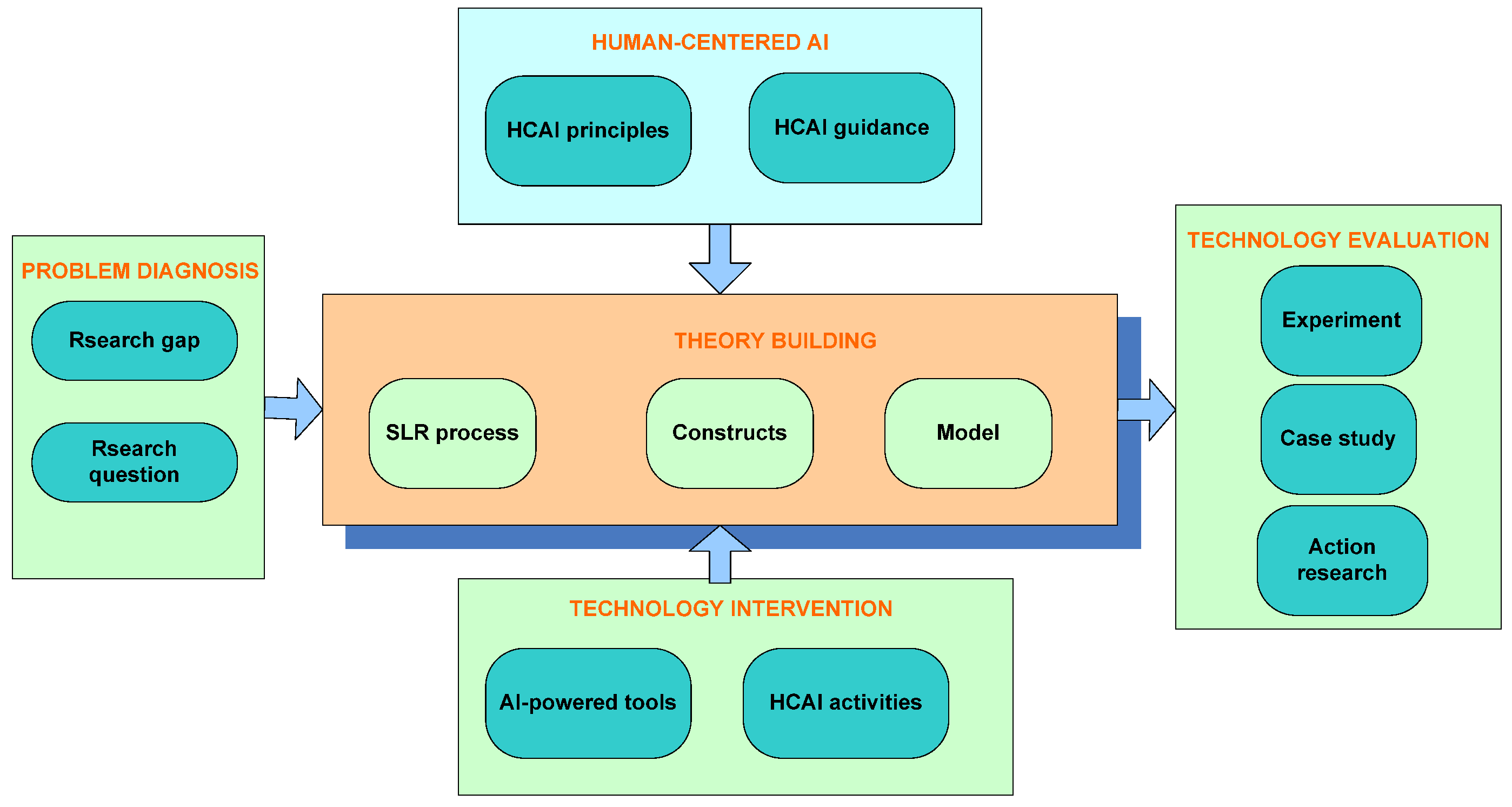

4. HCAI-SLR Framework

4.1. Theory Building

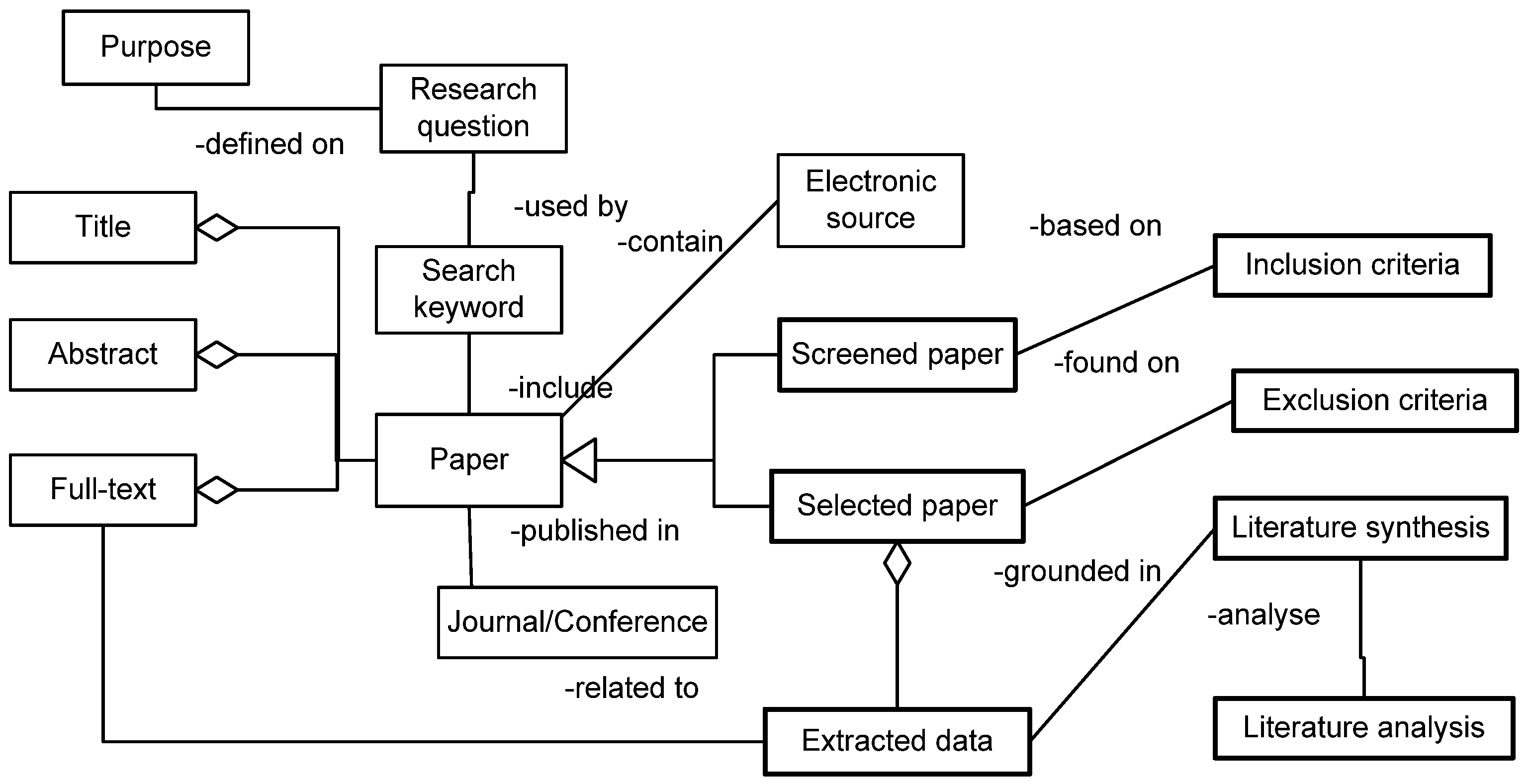

4.1.1. SLR Process

4.1.2. Model and Constructs

4.2. HCAI Elements

4.2.1. HCAI Principles

4.2.2. HCAI Guidance

- First control point (Human-before-the-loop): This control point verifies whether all planning requirements, ethical considerations, and human-centered design principles are met during the initial design phase. For example, when planning a literature review, researchers ensure that the research questions are clearly defined, comprehensive and unbiased search strategies are used, and ethical data collection and analysis considerations are addressed.

- Second control point (Human-in-the-loop): This control point encompasses two crucial checks:

- Data diversity and bias check: Verifies if the collected data objects are sufficiently diverse and representative to minimize bias in the AI system. For example, researchers ensure that the literature search includes a diverse range of sources, including different databases, journals, and publication types, to avoid bias towards a particular perspective or geographical region.

- Model/processing validity check: Ensures that the chosen modeling algorithms or data processing techniques are appropriate, accurate, and aligned with the desired outcomes. For example, when using AI for data extraction, researchers evaluate the accuracy of the extracted data by manually checking a sample of papers. They also ensure the chosen extraction tool is suitable for the type of data being collected and the research questions being addressed.

- Third control point (Human-over-the-loop): This control point confirms that rigorous testing and validation procedures are performed during deployment. It ensures that the AI functions as intended and that any unintended consequences or biases are identified and addressed. For example, if user feedback after deployment reveals that the AI-generated summaries are often too short or lack appropriate citations, the team would adjust the AI’s parameters so that it produces more comprehensive summaries with correct citations.
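The model/processing validity check at the second control point relies on manually checking a sample of AI-extracted data. The Python sketch below shows one way such a spot-check could be organized. The record layout, the `verify` callback (which stands in for the human reviewer), and the 0.9 acceptance threshold are hypothetical choices for illustration, not part of the framework.

```python
import random


def spot_check(ai_records, verify, sample_size, seed=0):
    """Sample AI-extracted records and return (accuracy, sampled IDs).

    ai_records: dict mapping a paper ID to the value an AI tool extracted.
    verify: callable(paper_id, value) -> bool; stands in for the manual check.
    """
    if not ai_records:
        raise ValueError("no AI-extracted records to check")
    rng = random.Random(seed)  # fixed seed so the sample can be reproduced and audited
    ids = sorted(ai_records)
    sample = rng.sample(ids, min(sample_size, len(ids)))
    confirmed = sum(1 for pid in sample if verify(pid, ai_records[pid]))
    return confirmed / len(sample), sample


# Hypothetical data: values an AI tool extracted vs. what a reviewer read in each paper.
ai_extracted = {"P01": "SVM", "P02": "random forest", "P03": "CNN", "P04": "LSTM"}
reviewer_reading = {"P01": "SVM", "P02": "random forest", "P03": "CNN", "P04": "GRU"}

accuracy, sampled = spot_check(
    ai_extracted,
    verify=lambda pid, value: reviewer_reading[pid] == value,
    sample_size=4,
)
ACCEPT_THRESHOLD = 0.9  # hypothetical; the framework does not prescribe a value
if accuracy < ACCEPT_THRESHOLD:
    print(f"Spot-check accuracy {accuracy:.0%} is below threshold: revise the tool or prompts")
```

Fixing the random seed is a deliberate choice: it lets a second reviewer redraw exactly the same sample when auditing the check.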

4.3. Technology Intervention

4.3.1. AI-Powered Tools

- Type 1 AI tools—Prompt-based tools: The first category includes conversational AI systems such as ChatGPT-4o, Claude-3.5-Sonnet, and Google Gemini (formerly Bard; Gemini 2.0 Flash) [54], which support interactive querying through natural language prompts and responses. Their conversational nature makes them well suited to interactive use in the literature review process [23]. These tools are built on large language models (LLMs) [55] trained on massive text datasets, and prompt engineering techniques [56] are crucial for optimizing their performance on specific tasks.

- Type 2 AI tools—Task-oriented tools: The second category comprises platforms with graphical user interfaces that support specific literature review tasks [57]. These tools incorporate AI and machine learning (AI/ML) capabilities, such as natural language processing and machine learning algorithms, but operate through predefined interfaces rather than open-ended prompts. Examples include tools for citation screening, quality assessment, and data extraction [57].
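Because prompt-based tools depend on prompt engineering [56], it can help to keep extraction prompts in a fixed, reviewable template. The Python sketch below is one hypothetical template: it assembles a prompt for a single sub-research question and parses the reply so that unsupported or missing answers are flagged for human review. It does not call any AI service, and the template wording, the `NOT REPORTED` marker, and the `Answer:`/`Evidence:` reply format are all assumptions made for illustration.

```python
NOT_REPORTED = "NOT REPORTED"  # hypothetical marker the model is asked to use


def build_extraction_prompt(sub_rq, paper_title, paper_text):
    """Assemble a structured prompt: role, task, constraints, output format, input."""
    lines = [
        "You are assisting with a systematic literature review.",
        "Task: answer the sub-research question using only the paper text below.",
        f"Sub-research question: {sub_rq}",
        f"Paper title: {paper_title}",
        "Constraints:",
        "- Support the answer with a short quotation and the section it comes from.",
        f"- If the paper does not address the question, answer exactly: {NOT_REPORTED}",
        "Output format: one line starting 'Answer:' and one line starting 'Evidence:'.",
        "Paper text:",
        paper_text,
    ]
    return "\n".join(lines)


def parse_reply(reply):
    """Extract the answer and evidence; flag replies a human should check."""
    fields = {"answer": None, "evidence": None}
    for line in reply.splitlines():
        line = line.strip()
        if line.startswith("Answer:"):
            fields["answer"] = line[len("Answer:"):].strip()
        elif line.startswith("Evidence:"):
            fields["evidence"] = line[len("Evidence:"):].strip()
    # Missing evidence, a missing answer, or NOT REPORTED all go to a reviewer.
    fields["needs_review"] = (
        not fields["evidence"] or fields["answer"] in (None, NOT_REPORTED)
    )
    return fields


prompt = build_extraction_prompt(
    "Which AI/ML techniques are used?",
    "Example paper",
    "We trained a support vector machine (SVM) on the data.",
)
ok = parse_reply("Answer: SVM\nEvidence: 'We trained a support vector machine' (Section 3)")
missing = parse_reply(f"Answer: {NOT_REPORTED}")
```

Requiring an evidence line keeps the human reviewer in the loop: any reply the model cannot ground in the paper is routed to a person rather than written straight into the extraction sheet.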

4.3.2. HCAI Activities

- Identifies inconsistencies across tools: No AI-based tool is perfect, so discrepancies between tools help pinpoint areas that need refinement. For example, if Tool A includes a paper but Tool B excludes it, the screening criteria may need to be clarified or adjusted.

- Reduces systemic biases: Relying on a single AI tool risks inheriting the biases in that tool’s training data or algorithms. Comparing outputs across diverse tools reduces the risk of single-tool blind spots, and, following the “wisdom of crowds” principle [67], aggregating independent judgments tends to produce more robust results.
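The AI triangulation activity can be sketched as a comparison of screening decisions across tools. In the Python example below, which uses hypothetical tool names and decisions, papers on which all tools agree are accepted provisionally, while discrepant papers are routed to a human reviewer together with their vote counts. The optional majority suggestion is one simple reading of the “wisdom of crowds” idea [67] and deliberately returns no suggestion on ties.

```python
from collections import Counter


def triangulate(decisions):
    """decisions: {tool_name: {paper_id: "include" | "exclude"}}.

    Returns (agreed, disputed): unanimous decisions, and vote counts for
    papers on which the tools disagree (to be resolved by a human).
    """
    papers = sorted(set().union(*(d.keys() for d in decisions.values())))
    agreed, disputed = {}, {}
    for pid in papers:
        votes = Counter(d[pid] for d in decisions.values() if pid in d)
        if len(votes) == 1:
            agreed[pid] = next(iter(votes))
        else:
            disputed[pid] = votes
    return agreed, disputed


def majority(votes):
    """Most common label, or None when the top labels are tied."""
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


decisions = {
    "Tool A": {"P1": "include", "P2": "include", "P3": "exclude"},
    "Tool B": {"P1": "include", "P2": "exclude", "P3": "exclude"},
    "Tool C": {"P1": "include", "P2": "include", "P3": "exclude"},
}
agreed, disputed = triangulate(decisions)
for pid, votes in disputed.items():
    print(pid, dict(votes), "suggested:", majority(votes))
```

The majority label is only a suggestion; under the human decision activity, the reviewer makes the final call on every disputed paper.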

5. Technology Evaluation

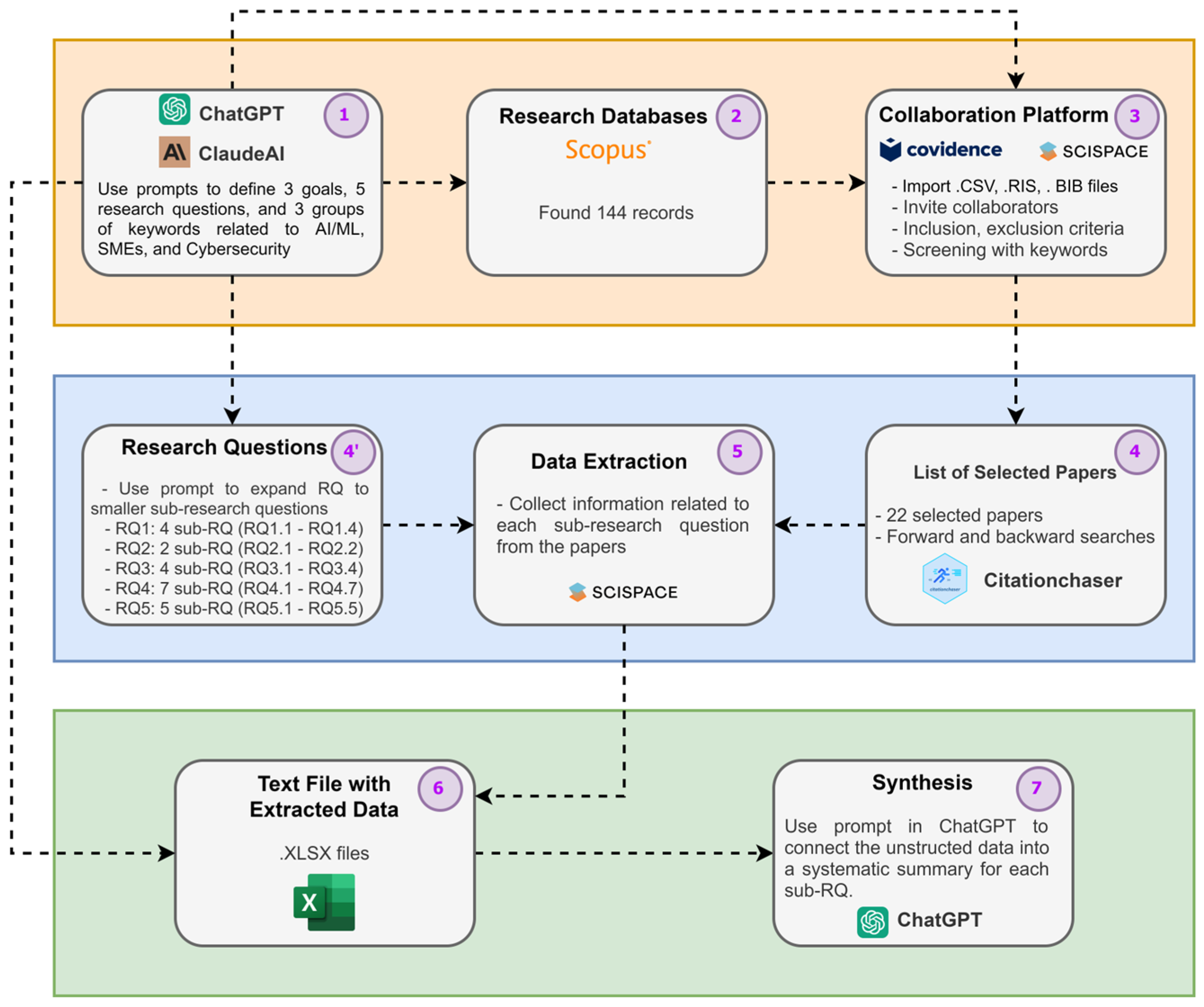

5.1. Illustrative Scenario

- Step 1—Identification phase: ChatGPT (chat.openai.com) and ClaudeAI (claude.ai) were used to establish the scope and parameters guiding the literature search. Three core goals were defined: synthesizing the landscape, the challenges, and the outlook. Five research questions (RQs) were developed, covering the AI/ML tools used, their benefits, adoption barriers, gaps in the literature, and the evolution of the topic. A set of 44 keywords was identified and organized into three groups: 24 cybersecurity terms, 13 AI/ML terms, and 7 SME terms. These keywords were then combined into a Boolean search string linking the three concepts.

- Step 2—Searching phase: the search was run in the Scopus database and filters were applied, yielding 144 English-language papers published between 2013 and 2023 in journals and conference proceedings.

- Step 3—Screening phase: the 144 papers were imported into Covidence (https://www.covidence.org/, accessed on 1 March 2025), an AI-powered platform for managing and streamlining SLRs, which was used to remove duplicates and resolve reviewer conflicts. Inclusion and exclusion criteria were set in line with the review scope. Two reviewers collaboratively performed title/abstract screening, supported by Covidence’s machine learning algorithms and its keyword-highlighting function to speed up the process. A total of 27 papers remained for full-text review.

- Step 4—Practical screen phase: full-text screening was conducted using Typeset (typeset.io), a tool designed to help readers understand research papers. This phase included two quality assessment questions, after which 22 papers were retained. Forward and backward citation searches were then conducted with Citationchaser (estech.shinyapps.io/citationchaser), which retrieves the references of, and the citations to, a set of studies. This process did not add any new papers, so 22 papers were selected in total. Also in Step 4, ChatGPT and ClaudeAI were used again to divide the main research questions into more focused sub-research questions (sub-RQs), designed to guide data extraction with AI tools such as Typeset. In total, there were 4 sub-RQs for RQ1, 2 for RQ2, 4 for RQ3, 7 for RQ4, and 5 for RQ5.

- Step 5—Quality appraisal and Step 6—Data extraction: the 22 papers then underwent data extraction guided by the sub-RQs formulated in Step 4. Typeset was again used to accelerate extraction through its summarization, data extraction, and chat features. The extracted data were compiled into a Microsoft Excel sheet organized by sub-research question.

- Step 7—Synthesis phase: the unstructured data compiled in the Excel sheet in Step 6 were synthesized using ChatGPT-4, which analyzed the spreadsheet to identify key themes, trends, and insights aligned with the research questions and sub-RQs. Finally, sub-RQs with too little supporting data were removed.
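The Boolean search string in Step 1 follows a common pattern: synonyms within a concept group are joined with OR, and the groups are joined with AND. The Python sketch below illustrates that pattern. The handful of keywords shown are hypothetical placeholders, since the scenario’s actual 44 keywords are not listed here, and quoting multi-word terms as exact phrases is an assumption about the target database’s syntax.

```python
def quote(term):
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_boolean_query(groups):
    """OR the terms within each concept group, then AND the groups together."""
    clauses = ["(" + " OR ".join(quote(t) for t in terms) + ")" for terms in groups.values()]
    return " AND ".join(clauses)


# Hypothetical sample of terms; the scenario used 24 + 13 + 7 = 44 keywords.
keyword_groups = {
    "cybersecurity": ["cybersecurity", "cyber attack", "intrusion detection"],
    "ai_ml": ["artificial intelligence", "machine learning"],
    "sme": ["SME", "small and medium enterprises"],
}
query = build_boolean_query(keyword_groups)
print(query)
```

In Scopus advanced search, a string like this would typically be wrapped in the `TITLE-ABS-KEY` field code to restrict matching to titles, abstracts, and keywords. Whatever a prompt-based tool proposes, researchers should still review the final string before running it.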
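The final action in Step 7, removing sub-RQs with too little data, can be made explicit and auditable. The Python sketch below assumes the extraction sheet is represented as a mapping from each sub-RQ to per-paper extracted text, with empty cells as `None` or blank strings. It keeps a sub-RQ only if at least `min_papers` papers contributed data. Both the representation and the threshold are hypothetical, as the scenario does not state the cut-off that was used.

```python
# Sub-RQ counts reported for Step 4: 4 + 2 + 4 + 7 + 5.
SUB_RQS_PER_RQ = {"RQ1": 4, "RQ2": 2, "RQ3": 4, "RQ4": 7, "RQ5": 5}
TOTAL_SUB_RQS = sum(SUB_RQS_PER_RQ.values())  # 22


def filter_sub_rqs(sheet, min_papers):
    """Split sub-RQ IDs into (kept, dropped) by the number of papers with data."""
    kept, dropped = [], []
    for sub_rq, answers in sheet.items():
        n_with_data = sum(1 for text in answers.values() if text and text.strip())
        (kept if n_with_data >= min_papers else dropped).append(sub_rq)
    return kept, dropped


# Hypothetical excerpt of the extraction sheet (sub-RQ -> paper ID -> extracted text).
sheet = {
    "RQ1.1": {"P01": "SVM for intrusion detection", "P02": "random forest", "P03": None},
    "RQ1.2": {"P01": None, "P02": "  ", "P03": "federated learning"},
    "RQ2.1": {"P01": "lower cost", "P02": "faster response", "P03": "fewer false alarms"},
}
kept, dropped = filter_sub_rqs(sheet, min_papers=2)  # threshold is hypothetical
print("kept:", kept, "dropped:", dropped)
```

Recording the dropped sub-RQs, rather than silently deleting them, lets the research team report which questions the literature could not answer.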

5.2. Case Study

- Synthesize and understand circular economy integration across different organizational types.

- Identify challenges, benefits, and key themes in CE implementation.

- Explore sector-specific approaches and determine critical strategies or models.

- Assess factors and enablers for successful CE implementation.

- Provide a comprehensive overview and future directions for CE research.

- First control point: Before initiating the literature search, the research team collaborated with the RRECQ experts to validate the research questions, ensuring their clarity, neutrality, and ethical soundness. This ensured alignment with the RRECQ’s research priorities and ethical considerations. A list of critical keywords was defined by the group of experts.

- Second control point: During screening, the three independent reviewers manually reviewed the selected papers to assess their representation across sectors, organizational types, and research perspectives, which helped identify and address potential geographical or methodological biases. In addition, the accuracy of data extracted with AI tools was verified through manual spot-checking, the results of different AI tools were compared, and expert feedback was incorporated.

- Third control point: A sensitivity analysis was conducted by adjusting search terms and screening criteria to assess the robustness of the findings and to minimize potential biases introduced by the AI tools or by the review process itself. In addition, a group of experts validated the AI-generated syntheses over a series of online meetings, and conclusions were drawn before the review was finalized.
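One way to quantify the sensitivity analysis at the third control point is to rerun the search and screening with altered terms or criteria and compare each resulting set of included papers with the baseline set. The Python sketch below uses Jaccard overlap for that comparison and lists which papers enter or leave. The metric, the variant names, and the paper IDs are assumptions for illustration, not the measure used in the case study.

```python
def jaccard(a, b):
    """Size of the intersection divided by the size of the union (1.0 for two empty sets)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def sensitivity(baseline, variants):
    """For each variant, return its overlap with the baseline plus papers gained and lost."""
    baseline = set(baseline)
    report = {}
    for name, included in variants.items():
        included = set(included)
        report[name] = {
            "jaccard": jaccard(baseline, included),
            "gained": sorted(included - baseline),
            "lost": sorted(baseline - included),
        }
    return report


baseline = {"P01", "P02", "P03", "P04"}
variants = {
    "broader_search_terms": {"P01", "P02", "P03", "P04", "P05"},
    "stricter_inclusion_criteria": {"P01", "P02"},
}
report = sensitivity(baseline, variants)
for name, result in report.items():
    print(name, result)
```

A low overlap, or papers that repeatedly drop out, points the experts to findings that depend heavily on the chosen terms or criteria and therefore deserve closer scrutiny.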

- A comprehensive overview of circular economy practices across organizations, highlighting diverse approaches and the importance of technological, policy, and social factors.

- Identification of drivers for adopting circular economy practices, including environmental stewardship and regulatory compliance, alongside significant barriers such as economic constraints and resistance to change.

- The influence of organizational size on the adoption of circular economy practices, noting distinct challenges faced by large corporations versus SMEs.

5.3. Demonstration

- Enhanced Understanding: Participants reported a notable improvement in their understanding of the HCAI-SLR framework and its practical application. A majority of participants (57.1%) found the framework easy to understand: 9.5% found it very easy, and 47.6% found it easy.

- Improved Performance: A majority (71.4%) confirmed that using AI in research enhanced the performance, effectiveness, efficiency, and impact of their research activities: 38.1% were very satisfied and 33.3% were satisfied with the framework’s performance.

- Convenience and Collaboration: 52.4% found the framework convenient for organizing the SLR process, with 19% rating it as very convenient. Furthermore, 57.1% strongly agreed, and 38.1% agreed that the human–AI collaborative approach provided clear benefits.
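The reported shares combine the top two response levels of each Likert item (for example, 9.5% + 47.6% = 57.1%). Computing such top-two-box shares from raw counts, rather than by adding rounded percentages, avoids rounding drift. In the Python sketch below, the counts are illustrative only: the number of respondents is not stated in this section, and 21 is used because it is consistent with every percentage reported above (e.g., 12/21 ≈ 57.1%).

```python
def share(count, n):
    """Percentage of n respondents, rounded to one decimal place."""
    return round(100 * count / n, 1)


def top_two_box(counts, top_levels, n):
    """Percentage of respondents who chose any of the top response levels."""
    return share(sum(counts[level] for level in top_levels), n)


N = 21  # illustrative respondent count, not stated in this section
ease = {"very easy": 2, "easy": 10}  # illustrative counts
satisfaction = {"very satisfied": 8, "satisfied": 7}  # illustrative counts

ease_share = top_two_box(ease, ["very easy", "easy"], N)
satisfaction_share = top_two_box(satisfaction, ["very satisfied", "satisfied"], N)
print(ease_share, satisfaction_share)
```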

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ozmen Garibay, O.; Winslow, B.; Andolina, S.; Antona, M.; Bodenschatz, A.; Coursaris, C.; Falco, G.; Fiore, S.M.; Garibay, I.; Grieman, K. Six human-centered artificial intelligence grand challenges. Int. J. Hum. Comput. Interact. 2023, 39, 391–437.

2. Riedl, M.O. Human-centered artificial intelligence and machine learning. Hum. Behav. Emerg. Technol. 2019, 1, 33–36.

3. Brożek, B.; Furman, M.; Jakubiec, M.; Kucharzyk, B. The black box problem revisited. Real and imaginary challenges for automated legal decision making. Artif. Intell. Law 2024, 32, 427–440.

4. Wadden, J.J. Defining the undefinable: The black box problem in healthcare artificial intelligence. J. Med. Ethics 2022, 48, 764–768.

5. Zednik, C. Solving the black box problem: A normative framework for explainable artificial intelligence. Philos. Technol. 2021, 34, 265–288.

6. Shneiderman, B. Human-centered artificial intelligence: Reliable, safe & trustworthy. Int. J. Hum. Comput. Interact. 2020, 36, 495–504.

7. Chen, L.; Chen, P.; Lin, Z. Artificial intelligence in education: A review. IEEE Access 2020, 8, 75264–75278.

8. Munir, H.; Vogel, B.; Jacobsson, A. Artificial intelligence and machine learning approaches in digital education: A systematic revision. Information 2022, 13, 203.

9. Yang, S.J.; Ogata, H.; Matsui, T.; Chen, N.-S. Human-centered artificial intelligence in education: Seeing the invisible through the visible. Comput. Educ. Artif. Intell. 2021, 2, 100008.

10. March, S.T.; Smith, G.F. Design and natural science research on information technology. Decis. Support Syst. 1995, 15, 251–266.

11. Qu, J.; Zhao, Y.; Xie, Y. Artificial intelligence leads the reform of education models. Syst. Res. Behav. Sci. 2022, 39, 581–588.

12. Harry, A. Role of AI in Education. Interdiciplinary J. Hummanity 2023, 2, 260–268.

13. Zhang, K.; Aslan, A.B. AI technologies for education: Recent research & future directions. Comput. Educ. Artif. Intell. 2021, 2, 100025.

14. Hien, H.T.; Cuong, P.-N.; Nam, L.N.H.; Nhung, H.L.T.K.; Thang, L.D. Intelligent assistants in higher-education environments: The FIT-EBot, a chatbot for administrative and learning support. In Proceedings of the 9th International Symposium on Information and Communication Technology, Da Nang, Vietnam, 6–7 December 2018; pp. 69–76.

15. Sajja, R.; Sermet, Y.; Cikmaz, M.; Cwiertny, D.; Demir, I. Artificial intelligence-enabled intelligent assistant for personalized and adaptive learning in higher education. Information 2024, 15, 596.

16. Khanna, S.; Kaushik, A.; Barnela, M. Expert systems advances in education. In Proceedings of the National Conference on Computational Instrumentation NCCI-2010, Chandigarh, India, 19–20 March 2010; Central Scientific Instruments Organisation: Chandigarh, India, 2010; pp. 109–112.

17. Le Dinh, T.; Pham Thi, T.T.; Pham-Nguyen, C.; Nam, L.N.H. A knowledge-based model for context-aware smart service systems. J. Inf. Telecommun. 2022, 6, 141–162.

18. Tiwari, R. The integration of AI and machine learning in education and its potential to personalize and improve student learning experiences. Int. J. Sci. Res. Eng. Manag. 2023, 7.

19. Chen, C.-M. Intelligent web-based learning system with personalized learning path guidance. Comput. Educ. 2008, 51, 787–814.

20. Li, K.C.; Wong, B.T.-M. Artificial intelligence in personalised learning: A bibliometric analysis. Interact. Technol. Smart Educ. 2023, 20, 422–445.

21. Holmes, W.; Miao, F. Guidance for Generative AI in Education and Research; UNESCO Publishing: Paris, France, 2023.

22. Batista, J.; Mesquita, A.; Carnaz, G. Generative AI and higher education: Trends, challenges, and future directions from a systematic literature review. Information 2024, 15, 676.

23. Alshami, A.; Elsayed, M.; Ali, E.; Eltoukhy, A.E.; Zayed, T. Harnessing the power of ChatGPT for automating systematic review process: Methodology, case study, limitations, and future directions. Systems 2023, 11, 351.

24. Carter, S.; Nielsen, M. Using artificial intelligence to augment human intelligence. Distill 2017, 2, e9.

25. Fügener, A.; Grahl, J.; Gupta, A.; Ketter, W. Cognitive challenges in human–artificial intelligence collaboration: Investigating the path toward productive delegation. Inf. Syst. Res. 2022, 33, 678–696.

26. Schmidt, A. Interactive human centered artificial intelligence: A definition and research challenges. In Proceedings of the International Conference on Advanced Visual Interfaces, Ischia Island, Italy, 28 September–2 October 2020; pp. 1–4.

27. Usmani, U.A.; Happonen, A.; Watada, J. Human-centered artificial intelligence: Designing for user empowerment and ethical considerations. In Proceedings of the 2023 5th International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Istanbul, Turkey, 8–10 June 2023; pp. 1–7.

28. Li, K.C.; Wong, B.T.-M. Research landscape of smart education: A bibliometric analysis. Interact. Technol. Smart Educ. 2022, 19, 3–19.

29. Gattupalli, S.; Maloy, R.W. On Human-Centered AI in Education; University of Massachusetts Amherst: Amherst, MA, USA, 2024.

30. Hutson, J.; Plate, D. Human-AI collaboration for smart education: Reframing applied learning to support metacognition. In Advanced Virtual Assistants—A Window to the Virtual Future; IntechOpen: London, UK, 2023.

31. Hajdarpasic, A.; Brew, A.; Popenici, S. The contribution of academics’ engagement in research to undergraduate education. Stud. High. Educ. 2015, 40, 644–657.

32. Richardson, A.J. The discovery of cumulative knowledge: Strategies for designing and communicating qualitative research. Account. Audit. Account. J. 2018, 31, 563–585.

33. Correia, A.; Grover, A.; Jameel, S.; Schneider, D.; Antunes, P.; Fonseca, B. A hybrid human–AI tool for scientometric analysis. Artif. Intell. Rev. 2023, 56, 983–1010.

34. Walsh, I.; Renaud, A.; Medina, M.J.; Baudet, C.; Mourmant, G. ARTIREV: An integrated bibliometric tool to efficiently conduct quality literature reviews. Systèmes D’information Manag. 2022, 27, 5–50.

35. Wagner, G.; Lukyanenko, R.; Paré, G. Artificial intelligence and the conduct of literature reviews. J. Inf. Technol. 2022, 37, 209–226.

36. Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education–where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 1–27.

37. Venable, J. The role of theory and theorising in design science research. In Proceedings of the 1st International Conference on Design Science in Information Systems and Technology (DESRIST 2006), Claremont, CA, USA, 24–25 February 2006; pp. 1–18.

38. Vom Brocke, J.; Hevner, A.; Maedche, A. Introduction to design science research. In Design Science Research. Cases; Springer Nature: Berlin, Germany, 2020; pp. 1–13.

39. Muzammul, M. Education System re-engineering with AI (artificial intelligence) for Quality Improvements with proposed model. ADCAIJ Adv. Distrib. Comput. Artif. Intell. J. 2019, 8, 51.

40. Rabiee, F. Focus-group interview and data analysis. Proc. Nutr. Soc. 2004, 63, 655–660.

41. Okoli, C.; Schabram, K. A Guide to Conducting a Systematic Literature Review of Information Systems Research. 2015. Available online: https://ssrn.com/abstract=1954824 (accessed on 1 March 2025).

42. Levy, Y.; Ellis, T.J. A systems approach to conduct an effective literature review in support of information systems research. Informing Sci. 2006, 9, 181–212.

43. Xiao, Y.; Watson, M. Guidance on conducting a systematic literature review. J. Plan. Educ. Res. 2019, 39, 93–112.

44. Frické, M. The knowledge pyramid: The DIKW hierarchy. KO Knowl. Organ. 2019, 46, 33–46.

45. Le Dinh, T.; Van, T.H.; Nomo, T.S. A framework for knowledge management in project management offices. J. Mod. Proj. Manag. 2016, 3, 159.

46. Pilone, D.; Pitman, N. UML 2.0 in a Nutshell; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2005.

47. Müller-Bloch, C.; Kranz, J. A framework for rigorously identifying research gaps in qualitative literature reviews. In Proceedings of the 36th International Conference on Information Systems (ICIS), Fort Worth, TX, USA, 13–16 December 2015.

48. Smuha, N.A. The EU Approach to Ethics Guidelines for Trustworthy Artificial Intelligence. Comput. Law Rev. Int. 2019, 20, 97–106.

49. ISO/IEC TR 24028; Information Technology. Artificial Intelligence. Overview of Trustworthiness in Artificial Intelligence. ISO: Geneva, Switzerland, 2020.

50. Arrieta, A.B.; Rodríguez, N.D.; Ser, J.D.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges toward Responsible AI. Inf. Fusion 2019, 58, 82–115.

51. Berkel, N.V.; Tag, B.; Gonçalves, J.; Hosio, S.J. Human-centred artificial intelligence: A contextual morality perspective. Behav. Inf. Technol. 2020, 41, 502–518.

52. Tahaei, M.; Constantinides, M.; Quercia, D.; Muller, M. A Systematic Literature Review of Human-Centered, Ethical, and Responsible AI. arXiv 2023, arXiv:2302.05284.

53. Xu, W.; Gao, Z. Enabling Human-Centered AI: A Methodological Perspective. In Proceedings of the 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), Toronto, ON, Canada, 15–17 May 2024; pp. 1–6.

54. Lozić, E.; Štular, B. ChatGPT v Bard v Bing v Claude 2 v Aria v human-expert. How good are AI chatbots at scientific writing? arXiv 2023, arXiv:2309.08636.

55. Hadi, M.U.; Tashi, A.; Qureshi, R.; Shah, A.; Irfan, M.; Zafar, A.; Shaikh, M.B.; Akhtar, N.; Wu, J.; Mirjalili, S.; et al. Large Language Models: A Comprehensive Survey of its Applications, Challenges, Limitations, and Future Prospects. TechRxiv 2023.

56. Giray, L.G. Prompt Engineering with ChatGPT: A Guide for Academic Writers. Ann. Biomed. Eng. 2023, 51, 2629–2633.

57. Pinzolits, R. AI in academia: An overview of selected tools and their areas of application. MAP Educ. Humanit. 2023, 4, 37–50.

58. Wang, S.; Scells, H.; Koopman, B.; Zuccon, G. Can ChatGPT Write a Good Boolean Query for Systematic Review Literature Search? In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval 2023, Taipei, Taiwan, 23–27 July 2023.

59. Khalil, H.; Ameen, D.; Zarnegar, A. Tools to support the automation of systematic reviews: A scoping review. J. Clin. Epidemiol. 2021, 144, 22–42.

60. Ramirez-Orta, J.; Xamena, E.; Maguitman, A.G.; Soto, A.J.; Zanoto, F.P.; Milios, E.E. QuOTeS: Query-Oriented Technical Summarization. arXiv 2023, arXiv:2306.11832.

61. D’Amico, S.; Dall’Olio, D.; Sala, C.; Dall’Olio, L.; Sauta, E.; Zampini, M.; Asti, G.; Lanino, L.; Maggioni, G.; Campagna, A.; et al. Synthetic Data Generation by Artificial Intelligence to Accelerate Research and Precision Medicine in Hematology. JCO Clin. Cancer Inform. 2023, 7, e2300021.

62. Wu, J.; Williams, K.; Chen, H.-H.; Khabsa, M.; Caragea, C.; Tuarob, S.; Ororbia, A.; Jordan, D.; Mitra, P.; Giles, C.L. CiteSeerX: AI in a Digital Library Search Engine. AI Mag. 2014, 36, 35–48.

63. Harfield, S.; Davy, C.; McArthur, A.; Munn, Z.; Brown, A.; Brown, N.J. Covidence vs Excel for the title and abstract review stage of a systematic review. Int. J. Evid. Based Healthc. 2016, 14, 200–201.

64. Marshall, I.J.; Kuiper, J.; Wallace, B.C. RobotReviewer: Evaluation of a system for automatically assessing bias in clinical trials. J. Am. Med. Inform. Assoc. JAMIA 2015, 23, 193–201.

65. Baviskar, D.; Ahirrao, S.; Potdar, V.; Kotecha, K.V. Efficient Automated Processing of the Unstructured Documents Using Artificial Intelligence: A Systematic Literature Review and Future Directions. IEEE Access 2021, 9, 72894–72936.

66. Hamilton, L.; Elliott, D.; Quick, A.; Smith, S.; Choplin, V. Exploring the Use of AI in Qualitative Analysis: A Comparative Study of Guaranteed Income Data. Int. J. Qual. Methods 2023, 22, 16094069231201504.

67. Lyon, A.; Pacuit, E. The Wisdom of Crowds: Methods of Human Judgement Aggregation. In Handbook of Human Computation; Springer Nature: Berlin, Germany, 2013.

68. Greene, J.; McClintock, C.C. Triangulation in Evaluation. Eval. Rev. 1985, 9, 523–545.

69. Santana, V.F.d.; Galeno, L.M.D.F.; Brazil, E.V.; Heching, A.R.; Cerqueira, R.F.G. Retrospective End-User Walkthrough: A Method for Assessing How People Combine Multiple AI Models in Decision-Making Systems. arXiv 2023, arXiv:2305.07530.

70. Dobrow, M.J.; Hagens, V.; Chafe, R.; Sullivan, T.J.; Rabeneck, L. Consolidated principles for screening based on a systematic review and consensus process. Can. Med. Assoc. J. 2018, 190, E422–E429.

71. Peffers, K.; Rothenberger, M.; Tuunanen, T.; Vaezi, R. Design science research evaluation. In Proceedings of the Design Science Research in Information Systems. Advances in Theory and Practice: 7th International Conference, DESRIST 2012, Las Vegas, NV, USA, 14–15 May 2012; pp. 398–410.

72. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

| Step | Objective | Constructs |
|---|---|---|
| Identification (S1) | Identify the purpose, goals, title, keywords, and research questions of the review | Purpose, Research question |
| Protocol and training (S2) | Define the protocol for the review process, particularly when more than one reviewer is involved | |
| Searching for the literature (S3) | Find the related papers in different databases | Electronic sources, Search keyword, Title, Paper, Journal/Conference |
| Practical screen (S4) | Identify which studies are considered for review based on criteria such as content, publication language, journals, authors, setting, participants or subjects, program or intervention, research design and sampling methodology, date of publication or of data collection, and source of financial support [41] | Abstract, Inclusion criteria, Exclusion criteria, Screened paper |
| Quality appraisal (S5) | Identify the exclusion criteria for judging which articles are of insufficient quality to be included | Selected paper, Paper full text |
| Data extraction (S6) | Extract the applicable information from the selected research papers | Extracted data |
| Synthesis of studies (S7) | Combine the facts extracted from the selected studies | Literature synthesis |
| Writing the review (S8) | Present the results of the review systematically [42]. This step is based on the DIKW (data–information–knowledge–wisdom) hierarchy [44]: data are for gathering parts, information is for connecting parts, knowledge is for forming a whole, and wisdom is for joining wholes [45] | Literature analysis |

| HCAI Activity | HCAI Guidance | Objective |
|---|---|---|
| Human initiation | Human-before-the-loop | Initiating the research topic and outlining the research objectives and initial research questions |
| AI augmentation | Human-in-the-loop | Augmenting the SLR process using prompt-based and task-oriented AI tools |
| AI triangulation | Human-in-the-loop | Using different AI tools to cross-check against each other under human supervision to identify discrepancies or inconsistencies in the results |
| Human decision | Human-over-the-loop | Ensuring the validity and relevance of the findings |

| Step | Role of AI Tools | Role of Humans |
|---|---|---|
| Identification (S1) | Suggest, refine, and select the research title, keywords, research outline, and initial research questions from input data [58] | |
| Synthesis of studies (S7) | | |
| Writing (S8) | | |

| Step | Role of AI Tools | Role of Humans |
|---|---|---|
| Protocol and training (S2) | AI tools have a limited role | Choose a supported platform and define the review protocol |
| Searching for the literature (S3) | | Ensure comprehensive search across databases and refine search strategy for systematic coverage |
| Practical screen (S4) | Prioritize relevant titles/abstracts with keyword highlights [63] and provide relevance scores; duplicate detection | Validate and decide on the list of screened papers |
| Quality appraisal (S5) | | Manually verify with full-text screening |
| Data extraction (S6) | Automatically extract key information such as study characteristics, outcomes, contributions, and results from papers according to the research questions [65] | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Le Dinh, T.; Le, T.D.; Uwizeyemungu, S.; Pelletier, C. Human-Centered Artificial Intelligence in Higher Education: A Framework for Systematic Literature Reviews. Information 2025, 16, 240. https://doi.org/10.3390/info16030240
