Green MLOps to Green GenOps: An Empirical Study of Energy Consumption in Discriminative and Generative AI Operations

Abstract

1. Introduction

2. Sustainability Goals

- Goal 9: industry, innovation, and infrastructure—build resilient infrastructure, promote inclusive and sustainable industrialisation and foster innovation: our work aims to establish a roadmap for developing future MLOps frameworks, fostering innovation and promoting best practices across the technology stack.

- Goal 10: reduced inequalities—reduce inequality within and among countries: by reducing energy consumption, ML can become more economically viable and sustainable, meeting the 4Cs of requirements: coverage, capacity, cost, and consumption.

- Goal 12: responsible consumption and production—ensure sustainable consumption and production patterns: green ML has the potential to significantly lower reliance on fossil fuels and reduce overall energy consumption.

- Goal 13: climate action—take urgent action to combat climate change and its impacts: optimising energy usage across the entire MLOps pipeline can lead to a substantial reduction in carbon emissions.

3. Related Work

4. From Green MLOps to Green GenOps

4.1. The Transition from MLOps to GenOps

4.2. Energy Consumption in MLOps and GenOps and Sustainability

5. Methodology

5.1. Gathering Software-Based Energy Consumption Data

5.2. Calculating Energy Usage in Machine Learning Processes

5.3. Hardware Stats and Model Characteristics

6. Results

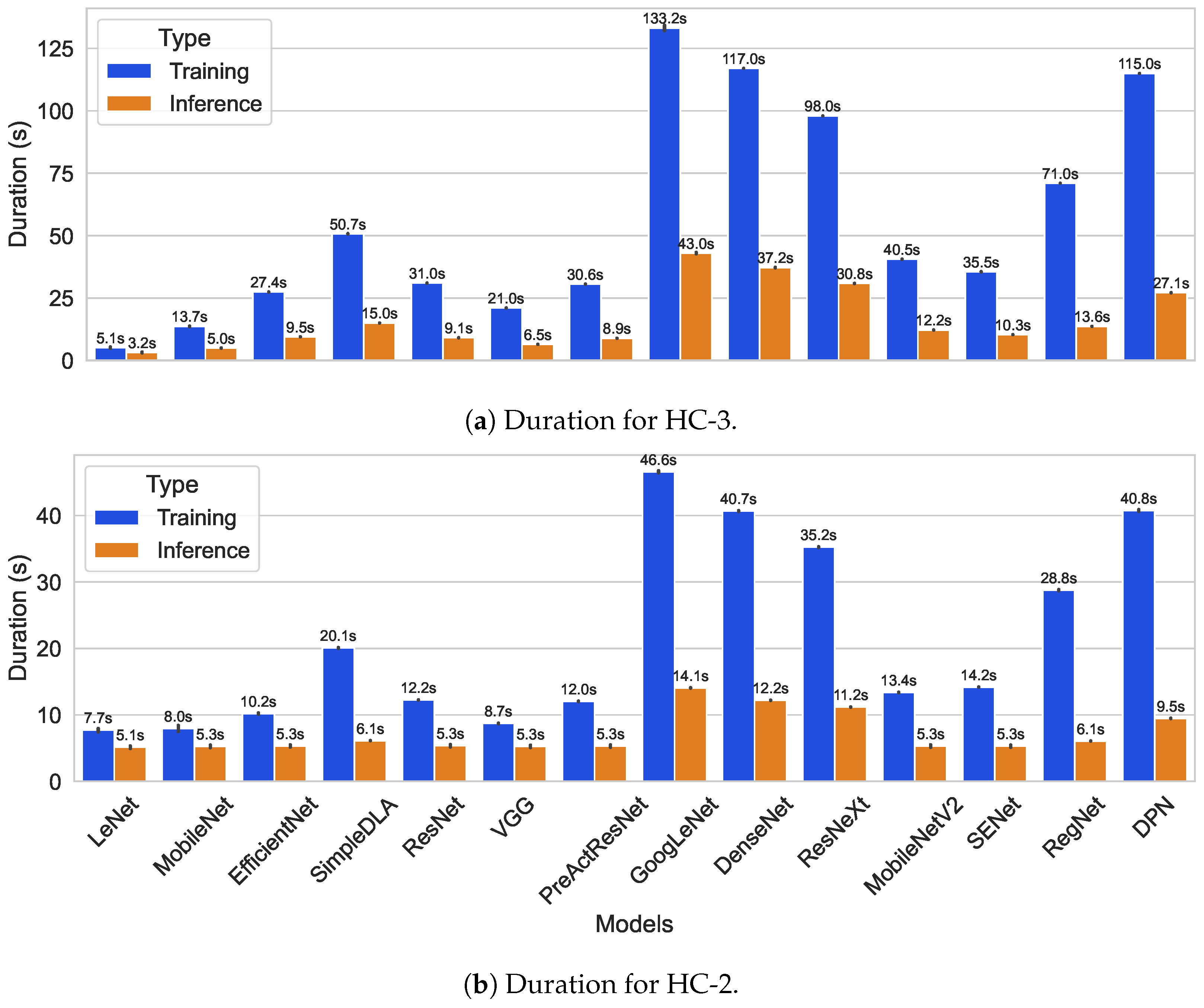

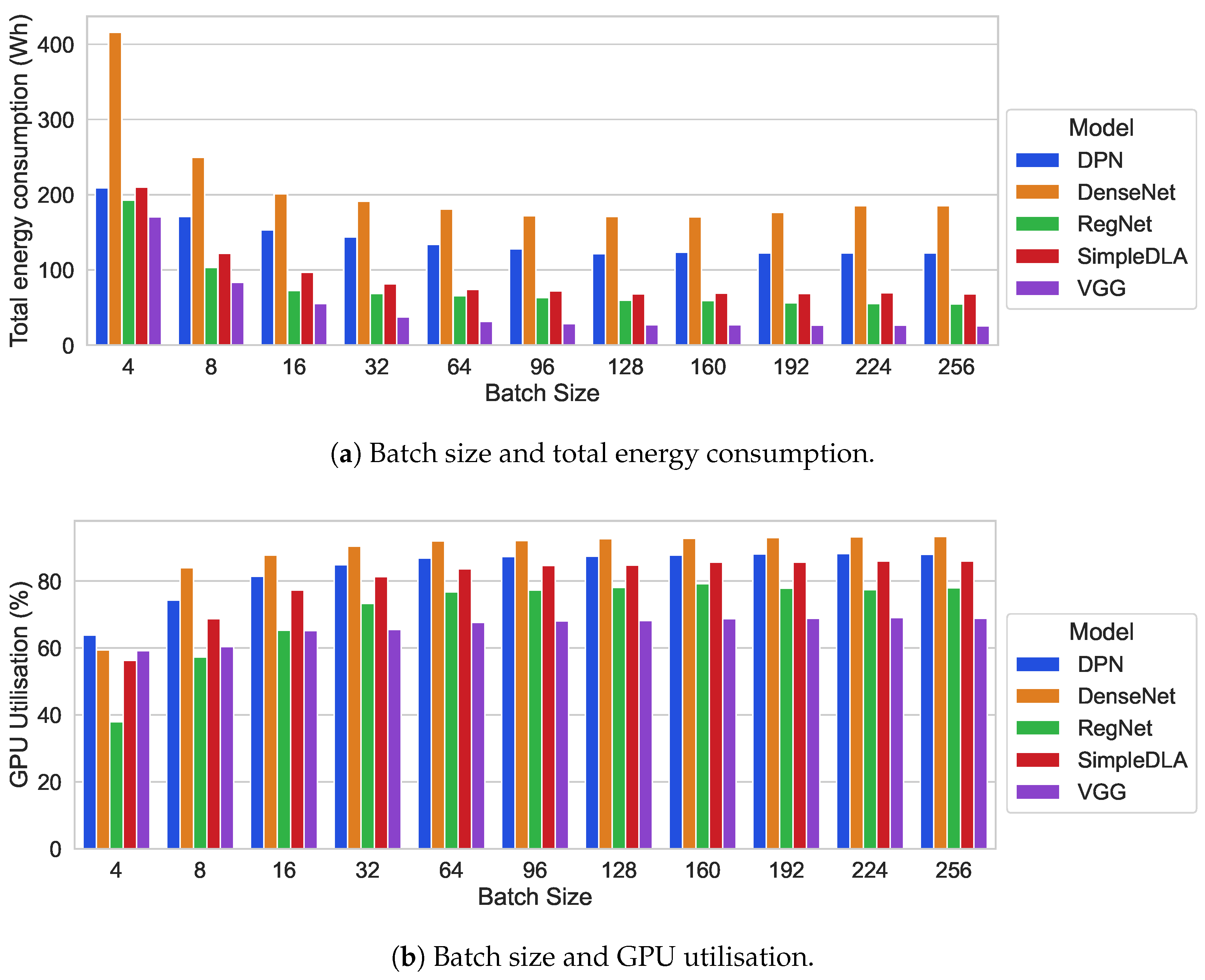

6.1. Discriminative AI Models

6.1.1. Initial Statistics

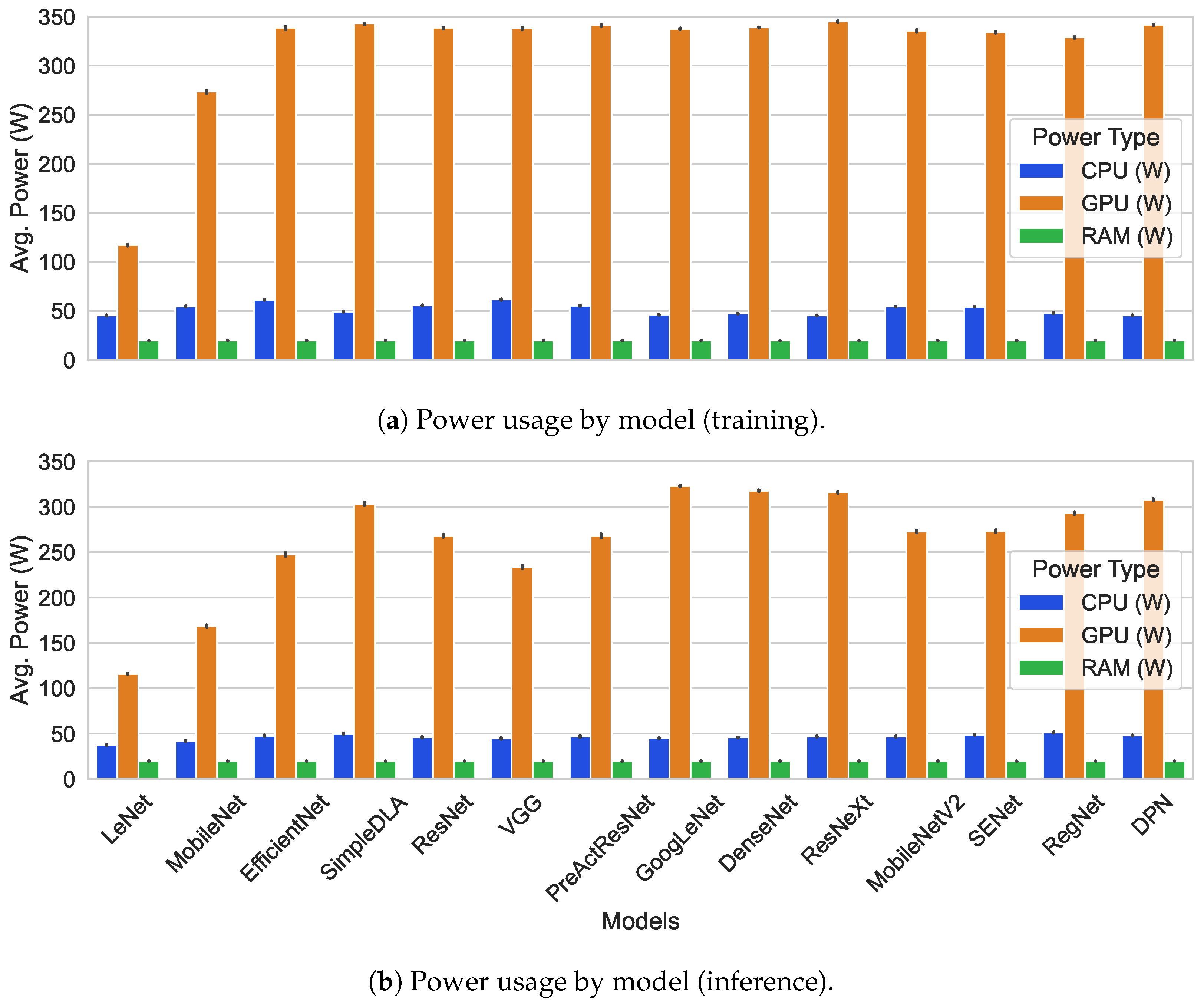

6.1.2. Power Consumption Measurements—Discriminative AI

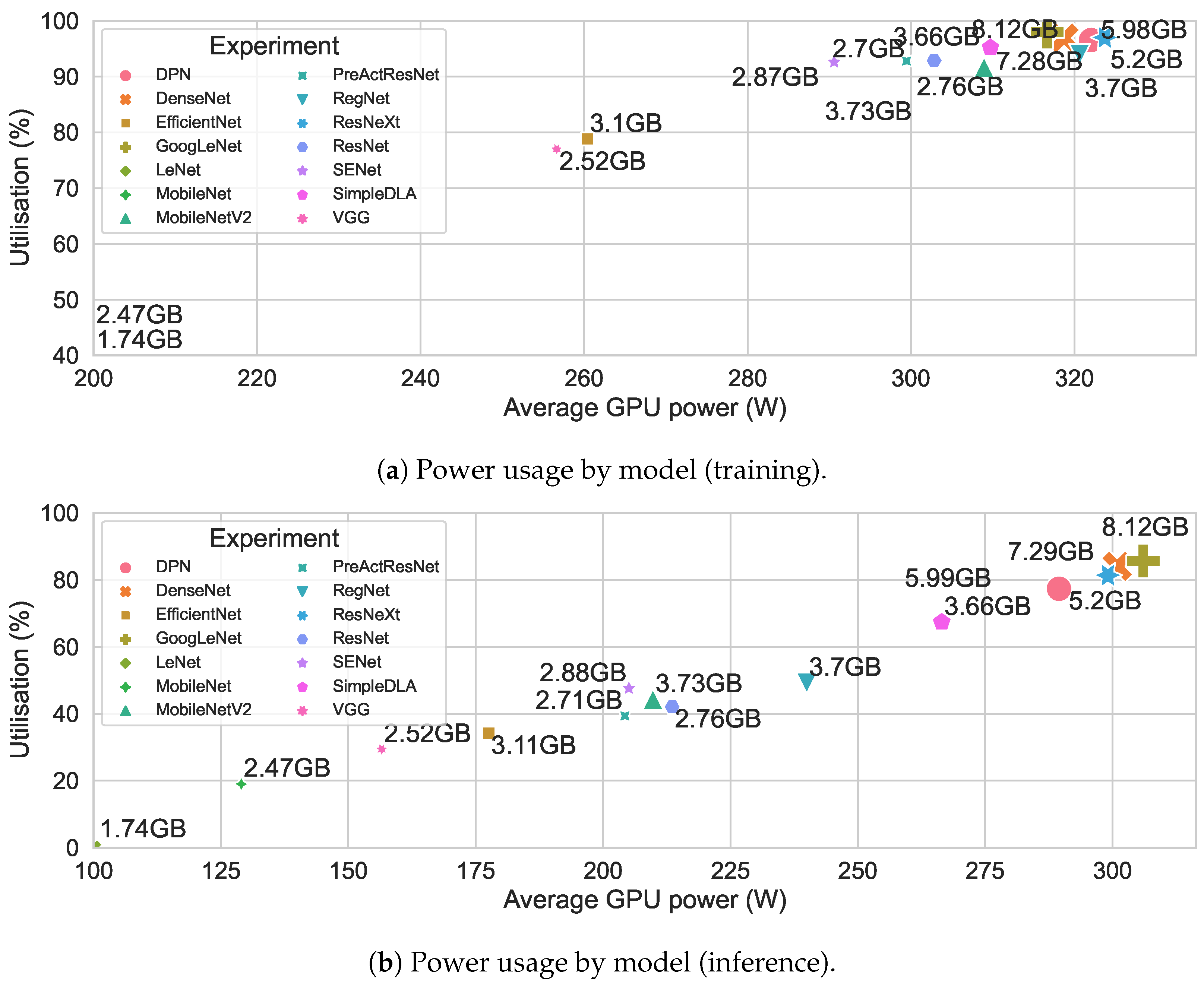

6.1.3. Total and GPU-Only Energy Consumption and Correlation Metrics

- macs_param: calculated as the ratio of MACs to trainable parameters—evaluates the computational efficiency of the model architecture (also seen in Figure 6).

- work_done: defined as the trainable parameters processed per second—assesses computational throughput and resource utilisation.

- overall_efficiency: the accuracy multiplied by work_done, divided by the system’s utilisation.

- energy_per_sample: the average total energy consumed per inference sample.

- parameters: the total trainable parameters, a key indicator of model complexity and context for other metrics.

- work_per_unit_power: calculated as work_done divided by the observed power for a given batch of samples, quantifying energy efficiency.

- energy_scaling_factor: the ratio of the total power (CPU, GPU, and RAM) to model parameters.

- gpu_energy_scaling_factor: similar to energy_scaling_factor but focused on just the GPU’s absolute power consumption—both show how energy consumption scales with model complexity.

- model_size_to_ram: compares model size to memory usage, aiding in optimising memory efficiency for resource-limited systems.
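The metric definitions above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `RunStats` container, its field names, the units (watts, seconds, bytes) and the example values are all hypothetical. The text does not spell out how "parameters processed per second" is measured, so the sketch assumes parameters × samples / elapsed time, and it assumes the "observed power" in work_per_unit_power is the total (CPU, GPU, and RAM) power.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    """Hypothetical per-run measurements; names and units are assumptions."""
    macs: float              # multiply-accumulate operations per forward pass
    parameters: float        # trainable parameters
    accuracy: float          # fraction in [0, 1]
    samples: int             # samples processed during the run
    seconds: float           # wall-clock duration of the run
    total_power_w: float     # mean CPU + GPU + RAM power, in watts
    gpu_power_w: float       # mean GPU-only power, in watts
    utilisation: float       # mean system utilisation, fraction in (0, 1]
    model_size_bytes: float  # size of the model
    ram_used_bytes: float    # memory in use during the run


def derived_metrics(r: RunStats) -> dict[str, float]:
    """Compute the derived metrics from the textual definitions."""
    # Assumption: throughput counts every parameter once per processed sample.
    work_done = r.parameters * r.samples / r.seconds
    return {
        "macs_param": r.macs / r.parameters,
        "work_done": work_done,
        "overall_efficiency": r.accuracy * work_done / r.utilisation,
        # Energy (J) = mean power (W) x time (s), averaged over samples.
        "energy_per_sample": r.total_power_w * r.seconds / r.samples,
        "parameters": r.parameters,
        "work_per_unit_power": work_done / r.total_power_w,
        "energy_scaling_factor": r.total_power_w / r.parameters,
        "gpu_energy_scaling_factor": r.gpu_power_w / r.parameters,
        "model_size_to_ram": r.model_size_bytes / r.ram_used_bytes,
    }


# Illustrative values only; these are not measurements from the study.
example = RunStats(
    macs=2.0e9, parameters=1.0e7, accuracy=0.9, samples=1000, seconds=10.0,
    total_power_w=200.0, gpu_power_w=150.0, utilisation=0.5,
    model_size_bytes=4.0e7, ram_used_bytes=8.0e8,
)
metrics = derived_metrics(example)
```

Keeping the raw measurements in one record and deriving every metric from it in a single function makes it easy to recompute the whole set when a definition is refined.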

6.2. Generative AI Models

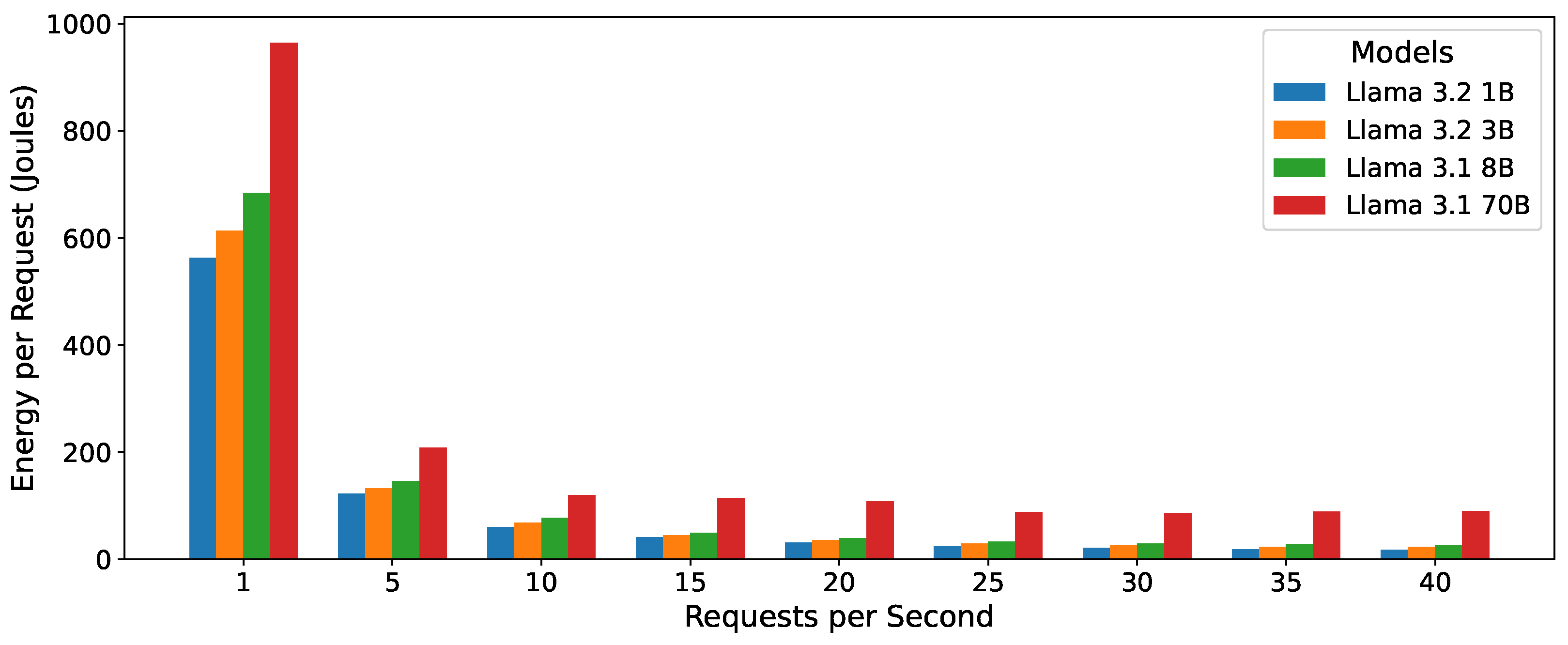

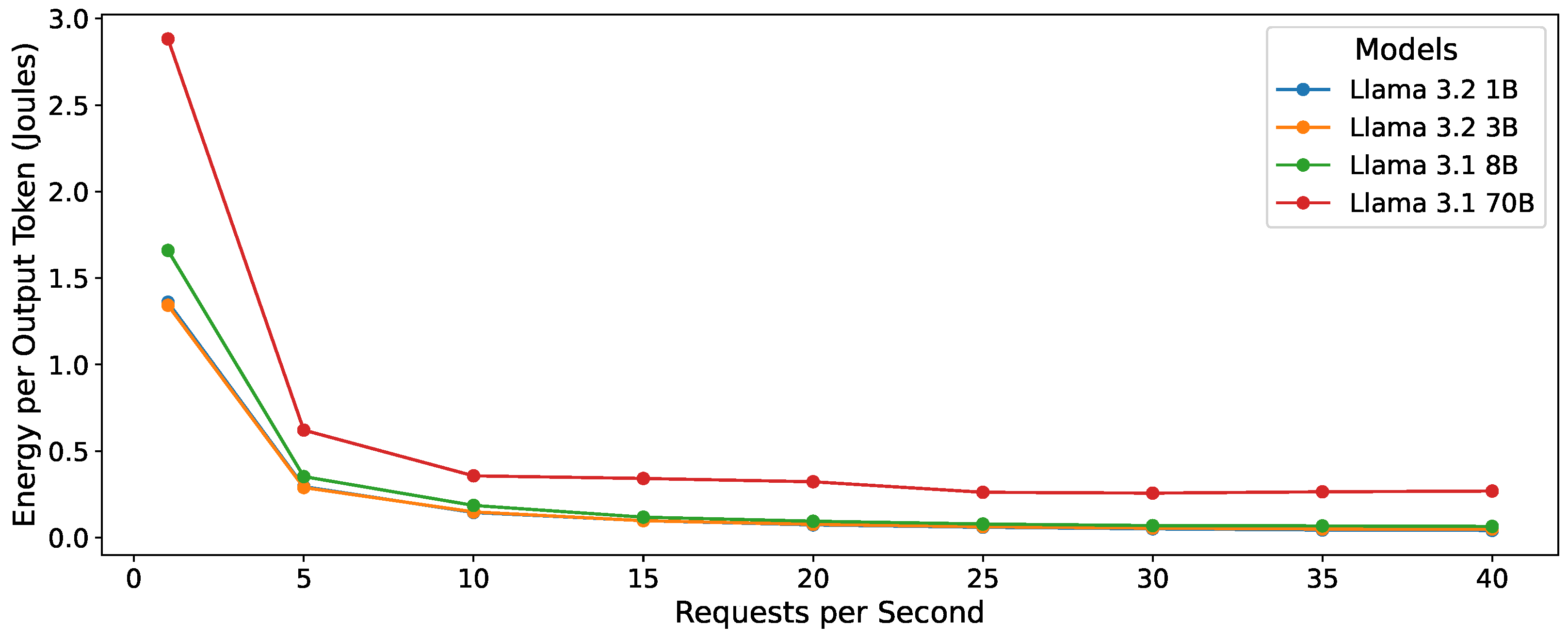

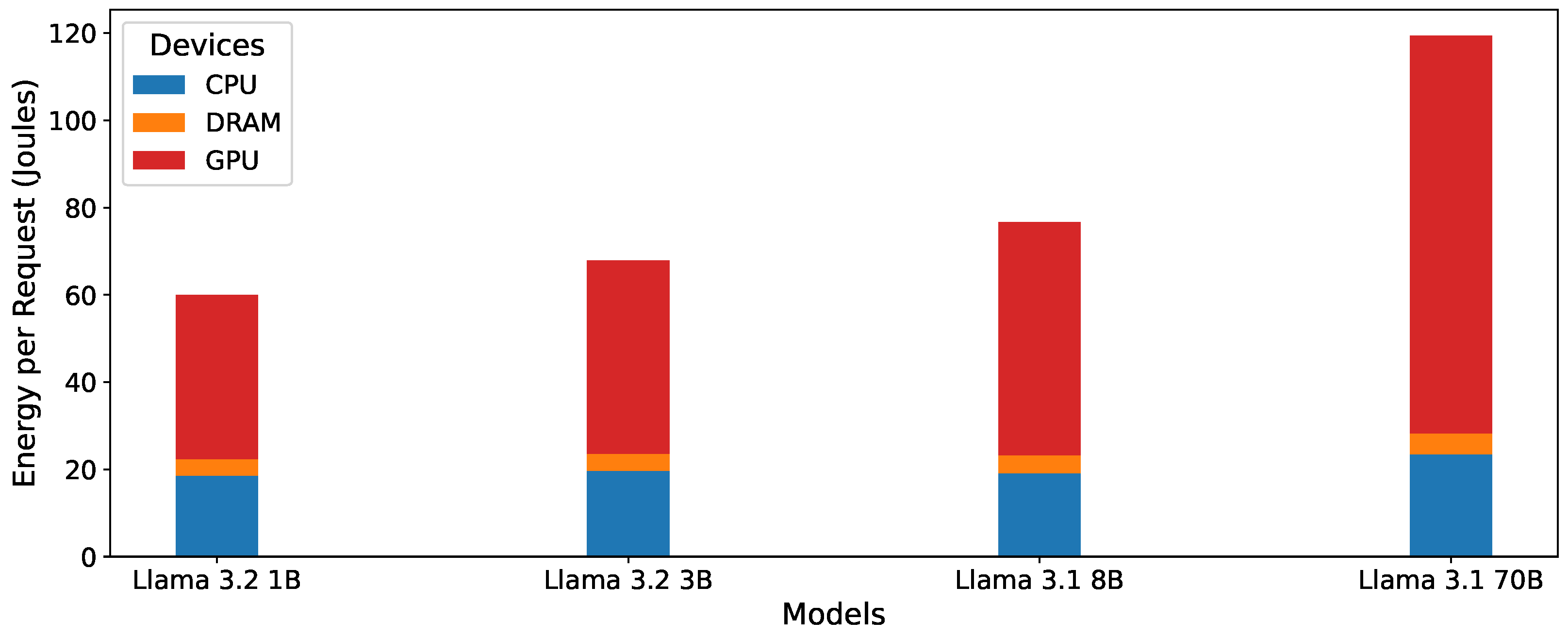

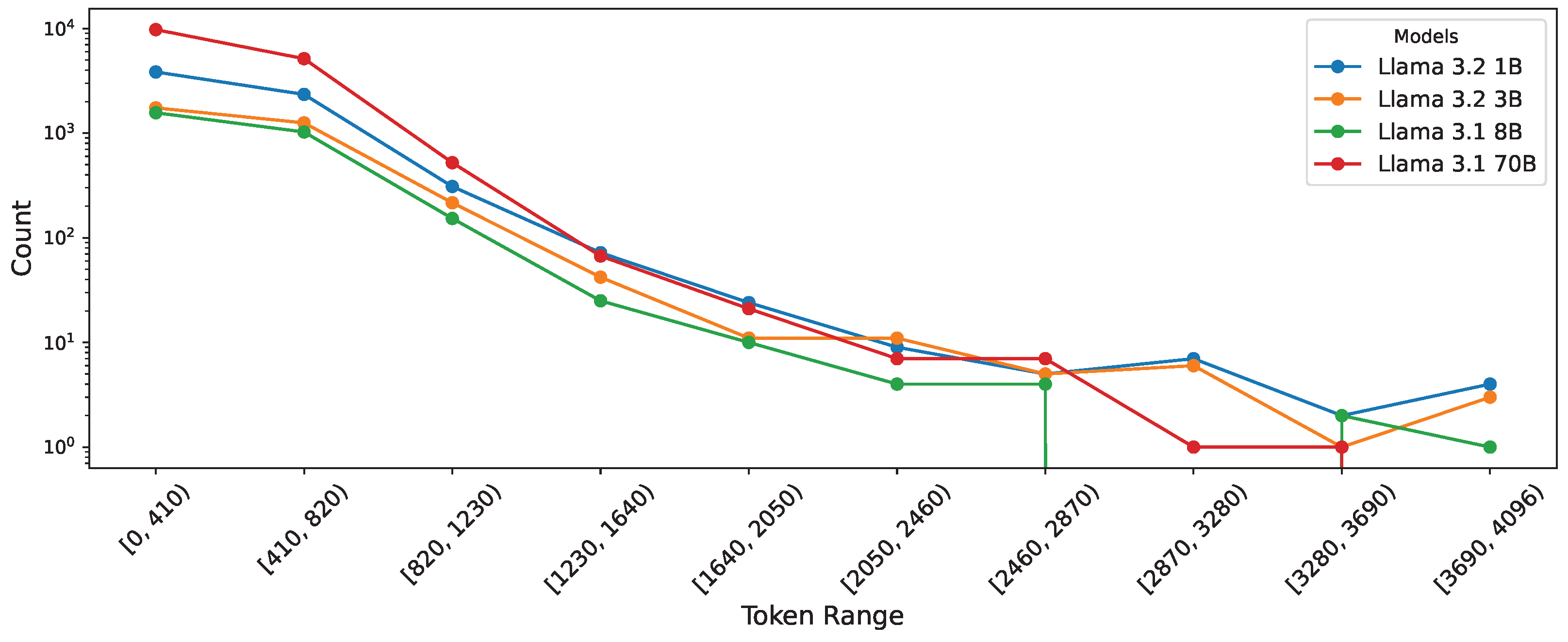

6.2.1. Power-Consumption Measurements—Generative AI

6.2.2. Correlation Metrics for Generative AI

- energy_per_sample: The average total energy consumed for one LLM inference request. Since this serves as our baseline measure of energy usage, its correlation with total energy is, by definition, equal to 1.00.

- flops: The total number of floating-point operations required for the model’s forward pass. This metric reflects the global computational cost of generating an inference output.

- model_size_to_ram: The on-GPU size of the model relative to the total VRAM available, which affects caching efficiency and concurrency.

- parameters: The full parameter count of the LLM, reflecting the overall model scale. Larger models tend to require more compute but can be more expressive.

- request_rate: The number of inference requests per second (RPS). A higher RPS often leads to improved batching on GPUs, thus reducing the per-request energy overhead until resource limits are reached.

- cache_hit_rate: The fraction of queries that leverage cached tokens (e.g., from matching prompt prefixes). Effective caching lowers redundant computation and helps reduce energy usage.

- average_output_token_length: The mean token length of the model’s generated responses. While it does increase inference steps, its effect on the total energy is often secondary to batching or model-scale factors.
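Correlating each of these metrics against the energy_per_sample baseline can be sketched as follows. This is a minimal illustration that assumes a Pearson coefficient, since this section does not name the coefficient used. The `pearson` helper, the `runs` dictionary and all numbers in it are hypothetical placeholders rather than measured data.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-run observations (illustrative values, not measured data).
runs = {
    "energy_per_sample": [40.0, 22.0, 12.0, 7.0, 5.0],  # joules per request
    "request_rate": [1.0, 2.0, 4.0, 8.0, 16.0],         # requests per second
    "cache_hit_rate": [0.10, 0.20, 0.25, 0.40, 0.45],   # fraction of queries
}

# Correlate every metric with the energy_per_sample baseline.
baseline = runs["energy_per_sample"]
correlations = {name: pearson(values, baseline) for name, values in runs.items()}
```

In this toy data the baseline correlates perfectly with itself, while request_rate and cache_hit_rate come out negative because energy per request falls as they rise.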

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | HC-1 | HC-2 | HC-3 | HC-4 |
|---|---|---|---|---|
| CPU * | i7-8700K (95 W) | i9-11900KF (125 W) | i5-12500 (65 W) | Xeon 8480+ (350 W) |
| DRAM | 4 × 16 GB DDR4, 3600 MHz | 4 × 32 GB DDR4, 3200 MHz | 2 × 16 GB DDR5, 3200 MHz | 16 × 64 GB DDR5, 2200 MHz |
| GPU + | RTX 3080 (320 W), 10 GB | RTX 3090 (350 W), 24 GB | RTX A2000 (70 W), 12 GB | 2 × H100 (2 × 300 W), 2 × 80 GB |

| Hyperparameter | Value |
|---|---|
| Batch Size | 128 |
| Learning Rate | 0.001 |
| Optimiser | Stochastic Gradient Descent |
| Loss Function | Categorical Cross-Entropy |
| Weight Decay | |

| Metric | HC-1 | HC-2 | HC-3 |
|---|---|---|---|
| energy_per_sample | 1.000000 | 1.000000 | 1.000000 |
| macs_param | 0.902342 | 0.915271 | 0.852587 |
| model_size_to_ram | 0.521170 | 0.212621 | 0.457989 |
| overall_efficiency | −0.439481 | −0.340809 | −0.592853 |
| work_per_unit_power | −0.311792 | −0.388402 | −0.691502 |
| gpu_energy_scaling_factor | 0.229738 | 0.196945 | 0.390812 |
| energy_scaling_factor | −0.039773 | −0.106112 | −0.109445 |
| parameters | 0.200979 | 0.212926 | 0.142314 |
| work_done | 0.021486 | −0.052404 | −0.387764 |

| Hyperparameter | Value |
|---|---|
| Temperature | 0 |
| Top-p | 1 |
| Top-k | |
| Min-p | 0 |
| Detokenisation | True |

| ID | Question |
|---|---|
| 1 | What is the difference between OpenCL and CUDA? |
| 2 | Why did my parent not invite me to their wedding? |
| 3 | Fuji vs. Nikon, which is better? |

| Metric | HC-4 |
|---|---|
| energy_per_sample | 1.00 |
| flops | 0.32 |
| model_size_to_ram | 0.32 |
| parameters | 0.32 |
| request_rate | −0.95 |
| average_output_token_length | −0.26 |
| cache_hit_rate | −0.32 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sánchez-Mompó, A.; Mavromatis, I.; Li, P.; Katsaros, K.; Khan, A. Green MLOps to Green GenOps: An Empirical Study of Energy Consumption in Discriminative and Generative AI Operations. Information 2025, 16, 281. https://doi.org/10.3390/info16040281
