PIF and ReCiF: Efficient Interest-Packet Forwarding Mechanisms for Named-Data Wireless Mesh Networks

Abstract

1. Introduction

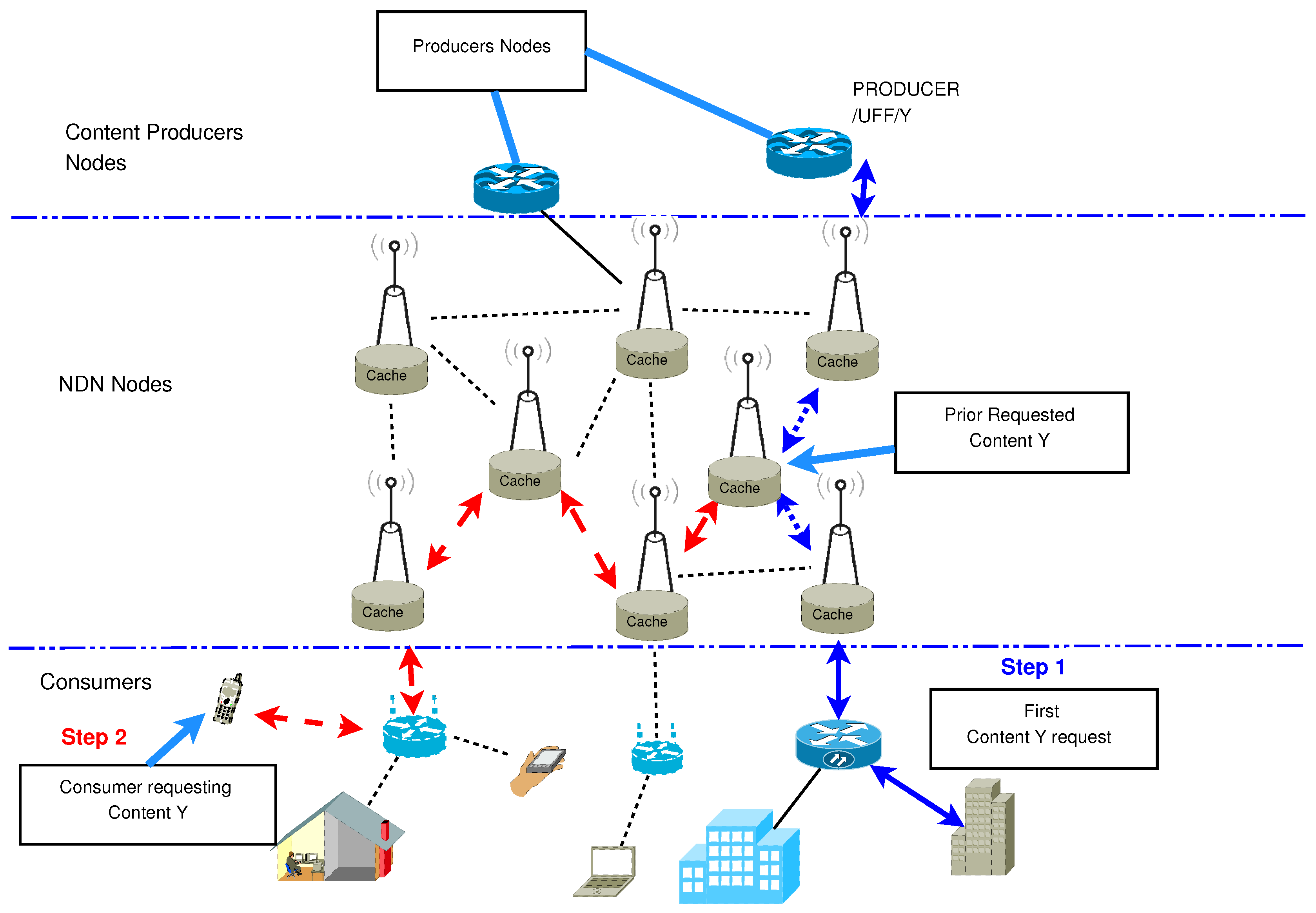

2. NDN-Based Wireless Mesh Networks

3. Related Works

3.1. Caching in Wireless Mesh Networks

3.2. Listen First Broadcast Later

3.3. Summary

4. The Proposed Mechanisms

4.1. The PIF Mechanism

Algorithm 1: Probabilistic Interest Forwarding (PIF) mechanism.

4.2. The ReCIF Mechanism

Algorithm 2: Retransmission-Counter-based Interest Forwarding (ReCIF)-hard mechanism.

Algorithm 3: ReCIF-soft mechanism.

Algorithm 4: ReCIF-soft G = 0 mechanism.

4.3. The ReCIF+PIF Mechanism

Algorithm 5: ReCIF+PIF mechanism.

4.4. Implementation Details

- When an interest packet arrives, the node checks the size of its neighbor list.

- If the neighbor list, called neighbor.LIST, is empty, the node adds the tuple (node_ID, pckt.TIME) to it, where node_ID is read from the interest packet header and pckt.TIME is the packet arrival time. The neighbor list size, neighbor.LIST.Size, is then incremented.

- If the neighbor list is not empty, the node compares the node ID extracted from the packet with the IDs stored in neighbor.LIST. If the ID is not found, the ID and the packet arrival time are added to the list, as described above. If the ID is found, its stored arrival time (pckt.TIME) is updated. A timeout is defined for each entry; when it expires, the tuple is removed from the list and the list size is updated. The timeout removes nodes that may have gone offline because of a failure or an administrative change. The timeout value can be adjusted to match the dynamics of a specific network. In our work, it was set to the simulation time, since our nodes were fixed and the environment was controlled with respect to node failures.

- The last step is to check whether any tuple in the neighbor list has expired, that is, whether the time elapsed since its pckt.TIME exceeds the expiration time. Each expired tuple is removed, and the neighbor list size is decremented accordingly.
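The neighbor-list maintenance steps above can be sketched in Python as follows. This is a minimal, hypothetical sketch, not the authors' implementation: the class name `NeighborList`, the methods `on_interest` and `purge`, the use of a dictionary keyed by node_ID, and seconds as the time unit are all assumptions. Only node_ID, pckt.TIME, neighbor.LIST, its size, and the per-entry timeout come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class NeighborList:
    """Hypothetical neighbor.LIST: maps node_ID -> pckt.TIME (last arrival time)."""

    timeout: float  # per-entry expiration time (seconds assumed)
    entries: dict[str, float] = field(default_factory=dict)

    @property
    def size(self) -> int:
        # neighbor.LIST.Size is the number of stored (node_ID, pckt.TIME) tuples
        return len(self.entries)

    def on_interest(self, node_id: str, arrival_time: float) -> None:
        # A dictionary covers both cases in the text: an unknown node_ID is
        # added (size grows by one), a known node_ID has its pckt.TIME refreshed.
        self.entries[node_id] = arrival_time
        # Last step: drop every tuple whose timeout has expired.
        self.purge(arrival_time)

    def purge(self, now: float) -> None:
        # Remove tuples whose elapsed time since pckt.TIME exceeds the timeout
        expired = [nid for nid, t in self.entries.items() if now - t > self.timeout]
        for nid in expired:
            del self.entries[nid]


# Usage: B is heard at t=1 and expires at t=7 (6 > 5); A was refreshed at t=3.
nl = NeighborList(timeout=5.0)
nl.on_interest("A", 0.0)
nl.on_interest("B", 1.0)
nl.on_interest("A", 3.0)
nl.on_interest("C", 7.0)
print(nl.size, sorted(nl.entries))  # 2 ['A', 'C']
```

Purging on every arrival keeps the sketch event-driven and avoids a separate timer; a real node might instead expire entries on a periodic timer.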

5. Results

5.1. Simulation Parameters

5.2. TCP/IP Stack with OLSR vs NDN

5.3. The Proposed Mechanisms

5.3.1. Parametrization

5.3.2. The Impact of the Content Request Rate

5.3.3. The Impact of the Number of Consumers

5.3.4. The Impact of Cache Size

5.3.5. The Impact of the Number of Nodes

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Interest Packet Field | Description |
|---|---|
| FWD_counter | Incremented each time the packet is forwarded. |
| node_ID | Identifier of the last forwarding node; updated at each hop. |
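The two header fields in the table can be illustrated by how a forwarder might update them before rebroadcasting an interest. This is a hypothetical sketch: the `Interest` class, its `name` field, and the `forward` helper are illustrative assumptions, and how the PIF and ReCIF mechanisms use FWD_counter in their forwarding decisions is not reproduced here.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Interest:
    name: str             # requested content name (assumed field, not in the table)
    fwd_counter: int = 0  # FWD_counter: incremented each time the packet is forwarded
    node_id: str = ""     # node_ID: identifier of the last forwarding node


def forward(interest: Interest, forwarder_id: str) -> Interest:
    """Return the copy of the interest that forwarder_id would rebroadcast."""
    return replace(
        interest,
        fwd_counter=interest.fwd_counter + 1,  # one more forwarding
        node_id=forwarder_id,                  # this node becomes the last forwarder
    )


pkt = Interest(name="/video/seg1")
pkt = forward(pkt, "n1")
pkt = forward(pkt, "n2")
print(pkt.fwd_counter, pkt.node_id)  # 2 n2
```

Because node_ID is rewritten at every hop, a receiver learns only its immediate upstream neighbor, which is the value the neighbor list records.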

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Matos Mascarenhas, D.; Monteiro Moraes, I. PIF and ReCiF: Efficient Interest-Packet Forwarding Mechanisms for Named-Data Wireless Mesh Networks. Information 2018, 9, 243. https://doi.org/10.3390/info9100243
