Exploring the Accuracy and Consistency of a School Readiness Assessment Tool for Preschoolers: Reliability, Validity and Measurement Invariance Analysis

Abstract

1. Introduction

2. Literature Review

2.1. Children’s School Readiness and Assessment

- Fine-tuned coordination of writing movements, a prerequisite for writing instruction (fine motor skills);

- Effective speech perception and auditory skills, a fundamental requirement for successful reading instruction (phoneme perception skills);

- Foundational vocabulary knowledge, essential for proficient verbal communication (reading comprehension);

- Fundamental arithmetic capabilities (pre-mathematics skills);

- Deduction based on experiential learning (deductive reasoning skills);

- Comprehension of relationships based on experiential learning, both pivotal for cognitive advancement (relational reasoning skills);

- Cultivation of social aptitudes, pivotal for school life and personality development (social skills).

2.2. Developmental Change by Age

2.3. Theoretical Perspectives to Assessments

2.4. Measurement Invariance (MI) and Its Assessing Methods

2.4.1. Configural Invariance

2.4.2. Metric Invariance

2.4.3. Scalar Invariance

2.4.4. Residual Invariance

2.5. Latent Mean Differences

2.6. Background Information

2.7. Context of the Current Study

- RQ1: Do students’ abilities align with the ability levels of items in the DIFER test?

- RQ2: How reliable and valid is the DIFER test?

- RQ3: Does performance on the DIFER test vary notably by country, gender, or age?

3. Materials and Methods

3.1. Participants

3.2. Instrument and Procedure

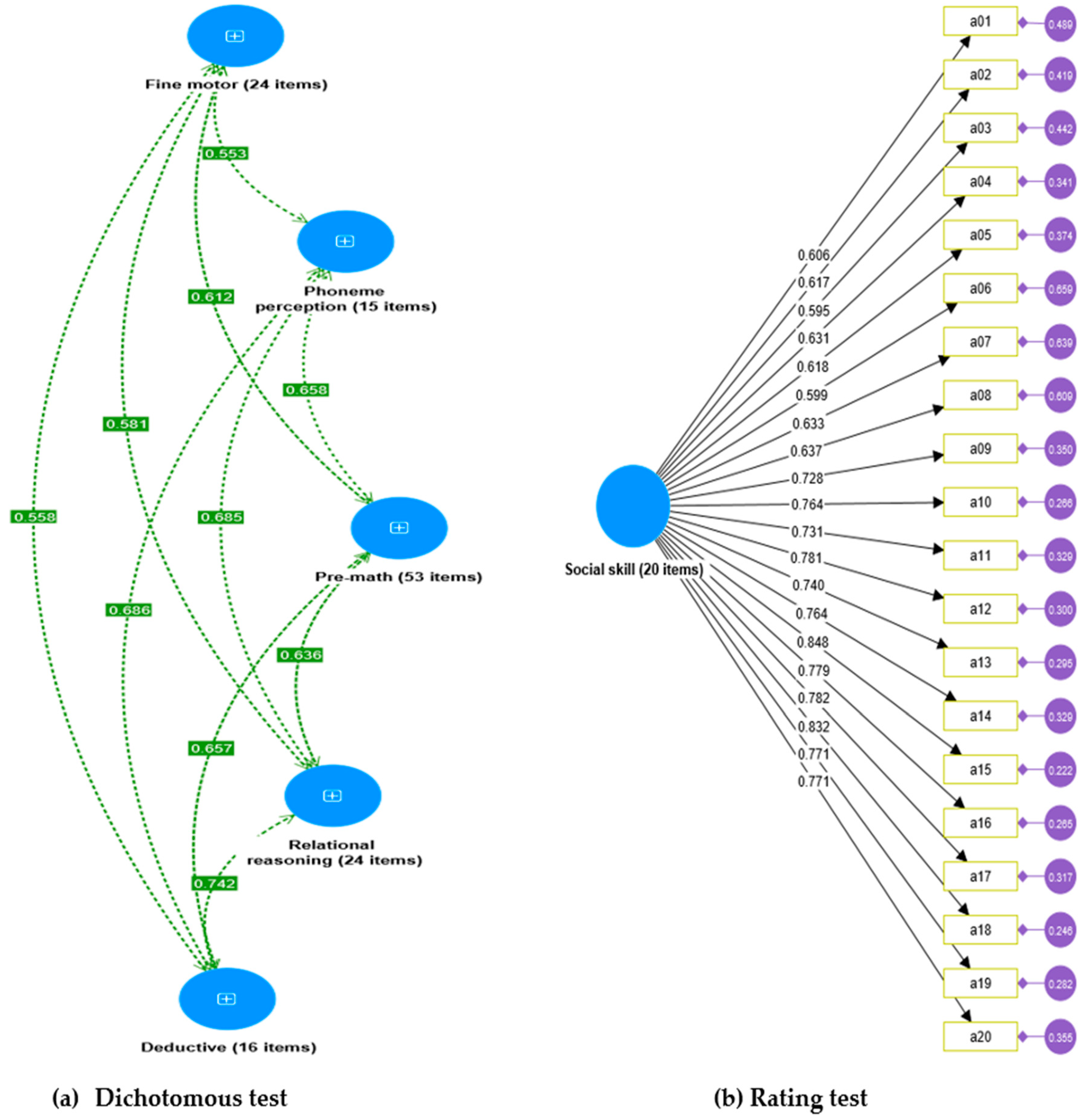

3.2.1. Dichotomous Test of DIFER

3.2.2. Rating Test of DIFER

3.3. Analysis

3.4. Preliminary Analyses

4. Results

4.1. Addressing RQ1

4.1.1. Differential Item Functioning (DIF) for Age Groups

4.1.2. Multidimensional Rasch Analysis

4.2. Addressing RQ2

Correlational Changes among Factors for Different Age Groups

4.3. Addressing RQ3

4.3.1. Measurement Invariance across Countries

4.3.2. Measurement Invariance across Genders

4.3.3. Measurement Invariance across Ages

4.3.4. Latent Mean Differences

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- AL-Dossary, Saeed Abdulla. 2021. Psychometric properties and measurement invariance of the Arabic version of the Flourishing Scale. International Journal of Psychological Studies 13: 20. [Google Scholar] [CrossRef]

- Amukune, Stephen, Gabriella Józsa, and Krisztián Józsa. 2022b. Comparing executive functioning in the school readiness of Hungarian and Kenyan preschoolers. International Journal of Early Childhood 55: 205–22. [Google Scholar] [CrossRef]

- Amukune, Stephen, Karen Caplovitz Barrett, and Krisztián Józsa. 2022a. Game-based assessment of school readiness domains of 3–8-year-old-children: A scoping review. Journal of New Approaches in Educational Research 11: 146–67. [Google Scholar] [CrossRef]

- An, Xiaozhu, Timothy W. Curby, and Qingyuan Xie. 2018. Chinese teachers’ perceptions of early childhood school readiness. School Psychology International 39: 454–69. [Google Scholar] [CrossRef]

- Andrich, David. 2018. Advances in social measurement: A Rasch measurement theory. In Perceived Health and Adaptation in Chronic Disease. London: Routledge, pp. 66–91. [Google Scholar] [CrossRef]

- Anthony, Rebecca, Graham Moore, Nicholas Page, Gillian Hewitt, Simon Murphy, and G. J. Melendez-Torres. 2022. Measurement invariance of the short Warwick-Edinburgh Mental Wellbeing Scale and latent mean differences (SWEMWBS) in young people by current care status. Quality of Life Research 31: 205–13. [Google Scholar] [CrossRef]

- Åström, Elisabeth, Anna E. Sundström, and Per-Erik Lyrén. 2022. Examining the psychometric properties of the Swedish version of Clinical Outcomes in Routine Evaluation–Outcome Measure (CORE-OM) in a clinical sample using classical test theory and item response theory. Clinical Psychology & Psychotherapy 30: 398–409. [Google Scholar] [CrossRef]

- Ayanwale, Musa Adekunle, Julia Chere-Masopha, and Malebohang Catherine Morena. 2022. The classical test or item response measurement theory: The status of the framework at the Examination Council of Lesotho. International Journal of Learning, Teaching and Educational Research 21: 384–406. [Google Scholar] [CrossRef]

- Azizan, Nural Hafizah, Zamalia Mahmud, and Adzhar Rambli. 2020. Rasch rating scale item estimates using maximum likelihood approach: Effects of sample size on the accuracy and bias of the estimates. International Journal of Advanced Science and Technology 29: 2526–31. [Google Scholar]

- Bender, Stacy L., Andy V. Pham, and John S. Carlson. 2011. School readiness. In Encyclopedia of Child Behavior and Development. Edited by Sam Goldstein and Jack A. Naglieri. Boston: Springer. [Google Scholar] [CrossRef]

- Berhenke, Amanda, Alison L. Miller, Eleanor Brown, Ronald Seifer, and Susan Dickstein. 2011. Observed emotional and behavioral indicators of motivation predict school readiness in Head Start graduates. Early Childhood Research Quarterly 26: 430–41. [Google Scholar] [CrossRef]

- Blair, Clancy, and C. Cybele Raver. 2015. School Readiness and self-regulation: A developmental psychobiological approach. Annual Review of Psychology 66: 711–31. [Google Scholar] [CrossRef]

- Blair, Clancy. 2002. School readiness: Integrating cognition and emotion in a neurobiological conceptualization of children’s functioning at school entry. American Psychologist 57: 111–27. [Google Scholar] [CrossRef]

- Blasco, Patricia, Sage Saxton, Lily Marie Gullion, Tun Zaw Oo, Stephen Amukune, and Krisztián Józsa. 2023. Assessment of mastery motivation and neurodevelopment of young children at high risk for developmental delays. Journal of Intelligence 11: 115. [Google Scholar] [CrossRef] [PubMed]

- Bond, Trevor, and Christine M. Fox. 2015. Applying the Rasch Model: Fundamental Measurement in the Human Sciences, 3rd ed. New York: Routledge. [Google Scholar] [CrossRef]

- Boone, William J., John R. Staver, and Melissa S. Yale. 2014. Rasch Analysis in the Human Sciences. Berlin and Heidelberg: Springer. [Google Scholar]

- Bravo, Diana M., Juan C. Suárez-Falcón, Javier M. Bianchi, Miguel A. Segura-Vargas, and Francisco J. Ruiz. 2021. Psychometric properties and measurement invariance of the Maslach Burnout Inventory—General survey in Colombia. International Journal of Environmental Research and Public Health 18: 5118. [Google Scholar] [CrossRef] [PubMed]

- Brock, Laura L., Helyn Kim, and David W. Grissmer. 2018. Longitudinal associations among executive function, visuomotor integration, and achievement in a high-risk sample. Mind, Brain, and Education 12: 23–27. [Google Scholar] [CrossRef]

- Byrne, Barbara M. 2016. Structural Equation Modeling with AMOS: Basic Concepts, Applications, and Programming, 3rd ed. New York: Routledge. [Google Scholar]

- Calchei, Marcela, Tun Zaw Oo, and Krisztián Józsa. 2023. Subject specific mastery motivation in Moldovan Middle school students. Behavioral Sciences 13: 166. [Google Scholar] [CrossRef]

- Chen, Fang Fang, Karen H. Sousa, and Stephen G. West. 2018. Teacher’s corner: A note on reporting practices for measurement invariance tests with mean and covariance structures. Educational and Psychological Measurement 78: 361–80. [Google Scholar]

- Chen, Fang Fang. 2007. Sensitivity of goodness of fit indexes to lack of measurement invariance. Structural Equation Modeling 14: 464–504. [Google Scholar] [CrossRef]

- Chiu, Yen Lin, Chin-Chung Tsai, and J. C. Liang. 2015. Testing measurement invariance and latent mean differences across gender groups in college students’ internet-specific epistemic beliefs. Australasian Journal of Educational Technology 31: 486–99. [Google Scholar] [CrossRef]

- Cieciuch, Jan, and Eldad Davidov. 2015. Establishing measurement invariance across online and offline samples. A tutorial with the software packages AMOS and Mplus. Studia Psychologica 2: 83–99. Available online: https://czasopisma.uksw.edu.pl/index.php/sp/article/view/50/49 (accessed on 15 June 2023). [CrossRef]

- Conant, Lisa L., Einat Liebenthal, Anjili Desai, and Jeffrey R. Binder. 2014. FMRI of phonemic perception and its relationship to reading development in elementary- to middle-school-age children. NeuroImage 89: 192–202. [Google Scholar] [CrossRef] [PubMed]

- Csapó, Benő, Gyöngyvér Molnár, and József Nagy. 2014. Computer-based assessment of school readiness and early reasoning. Journal of Educational Psychology 106: 639–50. [Google Scholar] [CrossRef]

- Curby, Timothy W., Elizabeth Berke, Vincent Alfonso, Jamilia J. Blake, Darlene DeMarie, George J. DuPaul, Roseanne Flores, Robyn S. Hess, Kimberly A. S. Howard, Janice C. C. Lepore, and et al. 2018. Transition practices into kindergarten and the barriers teachers encounter. In Kindergarten Transition and Readiness: Promoting Cognitive, Social-Emotional, and Self-Regulatory Development. Edited by Andrew J. Mashburn, Jennifer LoCasale-Crouch and Katherine Pears. New York: Springer. [Google Scholar]

- De Almeida Maia, Denise, Steffi Pohl, Paola Matiko Martins Okuda, Ting Liu, Marina Leite Puglisi, George Ploubidis, Michael Eid, and Hugo Cogo-Moreira. 2022. Psychometric properties and optimizing of the Bracken School Readiness Assessment. Educational Assessment, Evaluation and Accountability 34: 227–39. [Google Scholar] [CrossRef]

- De Beer, Leon T., Wilmar B. Schaufeli, and Hans De Witte. 2022. The psychometric properties and measurement invariance of the Burnout Assessment Tool (BAT-23) in South Africa. BMC Public Health 22: 1555. [Google Scholar] [CrossRef] [PubMed]

- De Los Reyes, Andres, Fanita A. Tyrell, Ashley L. Watts, and Gordon J. G. Asmundson. 2022. Conceptual, methodological, and measurement factors that disqualify use of measurement invariance techniques to detect informant discrepancies in youth mental health assessments. Frontiers in Psychology 13: 931296. [Google Scholar] [CrossRef] [PubMed]

- Dean, Charlotte E., Shazia Akhtar, Tim M. Gale, Karen Irvine, Richard Wiseman, and Keith R. Laws. 2021. Development of the Paranormal and Supernatural Beliefs Scale using classical and modern test theory. BMC Psychology 9: 98. [Google Scholar] [CrossRef]

- Demetriou, Andreas, Elena Kazali, Smaragda Kazi, and George Spanoudis. 2020. Cognition and cognizance in preschool predict school achievement in primary school. Cognitive Development 54: 100872. [Google Scholar] [CrossRef]

- Demetriou, Andreas, George Spanoudis, Constantinos Christou, Samuel Greiff, Nikolaos Makris, Mari-Pauliina Vainikainen, Hudson Golino, and Eleftheria Gonida. 2023. Cognitive and personality predictors of school performance from preschool to secondary school: An overarching model. Psychological Review 130: 480–512. [Google Scholar] [CrossRef]

- Denham, Susanne Ayers. 2006. Social-emotional competence as support for school readiness: What is it and how do we assess it? Early Education and Development 17: 57–89. [Google Scholar] [CrossRef]

- Diotaiuti, P., G. Valente, S. Mancone, L. Girelli, E. Cavicchiolo, and A. Chirico. 2022. Validation study of the Italian brief version of the multidimensional jealousy scale: Psychometric properties, measurement invariance across gender, and convergent validity. Frontiers in Psychology 13: 1013584. [Google Scholar] [CrossRef]

- Duncan, Greg J., Chantelle J. Dowsett, Amy Claessens, Katherine Magnuson, Aletha C. Huston, Pamela Klebanov, Linda S. Pagani, Leon Feinstein, Mimi Engel, Jeanne Brooks-Gunn, and et al. 2007. School readiness and later achievement. Developmental Psychology 43: 1428. [Google Scholar] [CrossRef] [PubMed]

- European Commission/EACEA/Eurydice. 2020. Slovakia. Early Childhood Education and Care. Available online: https://eacea.ec.europa.eu/national-policies/eurydice/content/early-childhood-education-and-care-72_en (accessed on 10 June 2023).

- Fink, Elina, Wendy V. Browne, Claire Hughes, and Jenny Gibson. 2019. Using a “child’s-eye view” of social success to understand the importance of school readiness at the transition to formal schooling. Social Development 28: 186–99. [Google Scholar] [CrossRef]

- Fischer, Ronald, and Johannes A. Karl. 2019. A primer to (cross-cultural) multi-group invariance testing possibilities in R. Frontiers in Psychology 10: 1–18. [Google Scholar] [CrossRef] [PubMed]

- Fischer, Ursula, Sebastian Paul Suggate, and Heidrun Stoeger. 2022. Fine motor skills and finger gnosia contribute to preschool children’s numerical competencies. Acta Psychologica 226: 103576. [Google Scholar] [CrossRef]

- Fornell, Claes, and David F. Larcker. 1981. Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research 18: 39–50. [Google Scholar] [CrossRef]

- Gliner, Jeffrey A., George A. Morgan, and Nancy L. Leech. 2017. Research Methods in Applied Settings: An Integrated Approach to Design and Analysis, 3rd ed. New York: Routledge. [Google Scholar]

- Guerin, Julia M., Shari L. Wade, and Quintino R. Mano. 2021. Does reasoning training improve fluid reasoning and academic achievement for children and adolescents? A systematic review. Trends in Neuroscience and Education 23: 100153. [Google Scholar] [CrossRef]

- Gygi, Jasmin T., Elodie Fux, Alexander Grob, and Priska Hagmann Von Arx. 2016. Measurement invariance and latent mean differences in the Reynolds Intellectual Assessment Scales (RIAS): Does the German version of the RIAS allow a valid assessment of individuals with a migration background? PLoS ONE 11: e0166533. [Google Scholar] [CrossRef]

- Haw, Law Hui, Sabariah Bte Sharif, and Crispina Gregory K. Han. 2022. Analyzing the science achievement test: Perspective of classical test theory and Rasch analysis. International Journal of Evaluation and Research in Education (IJERE) 11: 1714–24. [Google Scholar] [CrossRef]

- Henseler, Jörg, Christian M. Ringle, and Marko Sarstedt. 2015. A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science 43: 115–35. [Google Scholar] [CrossRef]

- Herlina, Yuke Indrati. 2010. Sejarah Perkembangan Kurikulum TAMAN kanak-Kanak di Indonesia dari masa ke masa [The History of the Kindergarten Curriculum Development in Indonesia from Time to Time]. Jakarta: Kemendikbud. [Google Scholar]

- Józsa, Krisztián, and Karen C. Barrett. 2018. Affective and social mastery motivation in preschool as predictors of early school success: A longitudinal study. Early Childhood Research Quarterly 45: 81–92. [Google Scholar] [CrossRef]

- Józsa, Krisztián, and Margit Fazekasné Fenyvesi. 2006. A DIFER Programcsomag alkalmazási lehetősége tanulásban akadályozott gyermekeknél − I. rész [The adaptation of DIFER tests for children with mild intellectual disabilities—Part 1]. Gyógypedagógiai Szemle 34: 133–41. [Google Scholar]

- Józsa, Krisztián, Balázs Török, and Cerissa Stevenson. 2018. Preschool and kindergarten in Hungary and the United States: A comparison within transnational development policy. International Journal of Educational Development 62: 88–95. [Google Scholar] [CrossRef]

- Józsa, Krisztián, Karen C. Barrett, and George A. Morgan. 2017. Game-like tablet assessment of approaches to learning: Assessing mastery motivation and executive functions. Electronic Journal of Research in Educational Psychology 15: 665–95. [Google Scholar] [CrossRef]

- Józsa, Krisztián, Stephen Amukune, Gabriella Józsa, and Karen C. Barrett. 2022a. Design, Development and Application of Focus App for Assessment of School Readiness. Paper presented at INTED2022 Proceedings, Online, March 7–8; vol. 1, pp. 4903–10. [Google Scholar] [CrossRef]

- Józsa, Krisztián, Stephen Amukune, Gabriella Zentai, and Karen C. Barrett. 2022b. School readiness test and intelligence in preschool as predictors of middle school success: Result of an eight-year longitudinal study. Journal of Intelligence 10: 66. [Google Scholar] [CrossRef]

- Kang, Xia, and Frederick K. S. Leung. 2022. Assessing expectancy and value beliefs in mathematics: Measurement invariance and latent mean differences across two ethnic cultures. International Journal of Science and Mathematics Education 21: 1985–2004. [Google Scholar] [CrossRef]

- Kim, Sun-Hee, Eunkyung Lee, and Jin-Hwa Park. 2022. Measurement invariance and latent mean differences in the Nurses’ Emotional Labour Scale. Journal of Nursing Research 30: 1–8. [Google Scholar] [CrossRef]

- Kline, Rex B. 2015. Principles and Practice of Structural Equation Modeling, 4th ed. London: Guilford Press. [Google Scholar]

- Langer-Buchwald, Judit. 2020. The situation of migrant children in the Hungarian and the Austrian education system: Multilingualism, interculturalism, and integration. Hungarian Educational Research Journal 9: 434–61. [Google Scholar] [CrossRef]

- Lau, Chloe, Francesca Chiesi, and Don Saklofske. 2022. The state-trait model of cheerfulness: Tests of measurement invariance and latent mean differences in European and Chinese Canadian students. Europe’s Journal of Psychology 18: 142–53. [Google Scholar] [CrossRef]

- Lepes, Josip, Szabolcs Halasi, and Ivanovic Josip. 2016. Motor abilities and school readiness of disadvantaged children. Croatian Journal of Education-Hrvatski Casopis Za Odgoj I Obrazovanje, 18 (Conference on Researching Paradigms of Childhood and Education) 7: 87–101. [Google Scholar]

- Li, Eva Yujia, Anthony J. Gambino, Betsy D. McCoach, and Christopher H. Rhoads. 2019. Psychometric Properties of the Motivation for Online Reading Questionnaire (MORQ). Available online: https://www.researchgate.net/publication/330511888_Psychometric_Properties_of_the_Motivation_for_Online_Reading_Questionnaire_MORQ?channel=doi&linkId=5c454e7892851c22a3849cb0&showFulltext=true (accessed on 5 June 2023).

- Liu, Jing, Hua Lin, Bing Hu, Zixiong Zhou, Elizabeth Agyeiwaah, and Ye Xu. 2022. Advancing the understanding of the resident pro-tourism behavior scale: An integration of item response theory and classical test theory. Journal of Business Research 141: 113–25. [Google Scholar] [CrossRef]

- Liu, Yujie, Qian Wang, Min Jou, Baohong Wang, Yang An, and Zifan Li. 2020. Psychometric properties and measurement invariance of the 7-item Game Addiction Scale (GAS) among Chinese college students. BMC Psychiatry 20: 484. [Google Scholar] [CrossRef] [PubMed]

- Macy, Marisa, Juli Pool, Ching-I Chen, Tonni-Ann Rusiana, and Mali Sawyer. 2022. A preliminary examination of a kindergarten school readiness assessment. Early Childhood Education Journal 50: 1035–46. [Google Scholar] [CrossRef]

- McWayne, Christine M., Katherine Cheung, Linnie E. Green Wright, and Debbie L. Hahs-Vaughn. 2012. Patterns of school readiness among head start children: Meaningful within-group variability during the transition to kindergarten. Journal of Educational Psychology 104: 862–78. [Google Scholar] [CrossRef]

- Miller, Michele M., and H. Hill Goldsmith. 2017. Profiles of social-emotional readiness for 4-year-old kindergarten. Frontiers in Psychology 8: 132. [Google Scholar] [CrossRef]

- Molnár, Gyöngyvér, and Zoltán Hermann. 2023. Short- and long-term effects of COVID-related kindergarten and school closures on first- to eighth-grade students’ school readiness skills and mathematics, reading and science learning. Learning and Instruction 83: 1–13. [Google Scholar] [CrossRef]

- Mukkiri, Sujitha, Pretti Kandasamy, Mahadevan Subramanian, Venkatesh Chandrasekaran, and Shivanand Kattimani. 2022. Content validation of school readiness module and school readiness scale for assessing school readiness in children with autism spectrum disorder. Asian Journal of Psychiatry 71: 103073. [Google Scholar] [CrossRef] [PubMed]

- Nagy, Ádám, László Trencsenyi, and Kinga Horvathova. 2018. The Hungarian education system and its school-critical responses. Journal of Educational Sciences 37: 18–26. [Google Scholar] [CrossRef]

- Nagy, József, Krisztián Józsa, Tibor Vidákovich, and Margit Fazekasné Fenyvesi. 2004a. DIFER programcsomag: Diagnosztikus fejlődésvizsgáló és kritériumorientált fejlesztő rendszer 4–8 évesek számára [DIFER: A Diagnostic System of Assessment and Criterion- Referenced Improvement between the Ages of 4–8.]. Szeged: Mozaik Kiadó. [Google Scholar]

- Nagy, József, Krisztián Józsa, Tibor Vidákovich, and Margit Fazekasné Fenyvesi. 2004b. Az elemi alapkészségek fejlődése 4–8 éves életkorban [The Development of Elementary Skills between the Ages of 4–8. A National Overview of the Seven Basic Skills Needed for Academic Success and Their Pedagogic Consequences]. Szeged: Mozaik Kiadó. [Google Scholar]

- Nagy, József. 1976. PREFER, Preventív Fejlettségvizsgáló Rendszer 5–6 éves gyermekek iskolakészültségének mérése [Preventive Development Assessment System for Children Aged 5–6 Years]. Budapest: MTA Pedagógiai Kutatócsoport. [Google Scholar]

- Nagy, József. 1980. 5–6 éves gyermekeink iskolakészültsége [School Readiness of Our 5–6 Year Old Children]. Budapest: Akadémiai Kiadó. [Google Scholar]

- Nair, M. K. C., Rekha Radhakrishnan, and Bolajoko O. Olusanya. 2023. Promoting school readiness in children with developmental disabilities in LMICs. Frontiers in Public Health 11: 993642. [Google Scholar] [CrossRef]

- Neumann, Denise, Elizabeth R. Peterson, Lisa Underwood, Susan M. B. Morton, and Karen E. Waldie. 2021. The development of cognitive functioning indices in early childhood. Cognitive Development 60: 101098. [Google Scholar] [CrossRef]

- OECD. 2016. Education at a Glance 2016. OECD Indicators. Paris: OECD Publishing. [Google Scholar] [CrossRef]

- Oo, Tun Zaw, Andrea Magyar, and Anita Habók. 2021. Effectiveness of the reflection-based reciprocal teaching approach for reading comprehension achievement in upper secondary school in Myanmar. Asia Pacific Education Review 4: 1–24. [Google Scholar] [CrossRef]

- Oo, Tun Zaw, Anita Habók, and Krisztián Józsa. 2023. Empowering educators to sustain reflective teaching practices: The validation of instruments. Sustainability 15: 7640. [Google Scholar] [CrossRef]

- Opozda-Suder, Sylwia, Kinga Karteczka-Świętek, and Malgorzata Piasecka. 2021. Psychometric properties and longitudinal measurement invariance of the drug craving scale: Modification of the Polish version of the Penn Alcohol Craving Scale (PACS). PLoS ONE 16: e0256018. [Google Scholar] [CrossRef] [PubMed]

- Panizzon, Matthew S., Eero Vuoksimaa, Kelly M. Spoon, Kristen C. Jacobson, Michael J. Lyons, Carol E. Franz, Hong Xian, Terrie Vasilopoulos, and William S. Kremen. 2014. Genetic and environmental influences on general cognitive ability: Is g a valid latent construct? Intelligence 43: 65–76. [Google Scholar] [CrossRef] [PubMed]

- Planinic, Maja, William J. Boone, Ana Susac, and Lana Ivanjek. 2019. Rasch analysis in physics education research: Why measurement matters. Physical Review Physics Education Research 15: 20111. [Google Scholar] [CrossRef]

- Polat, Murat, Nihan Sölpük Turhan, and Çetin Toraman. 2022. Comparison of classical test theory vs. multi-facet Rasch theory. Pegem Egitim ve Ogretim Dergisi 12: 213–25. [Google Scholar] [CrossRef]

- Polat, Murat. 2022. Comparison of performance measures obtained from foreign language tests according to item response theory vs classical test theory. International Online Journal of Education and Teaching 9: 471–85. [Google Scholar]

- Pupala, Branislav, Hani Yulindrasari, and Maria Melita Rahardjo. 2022. Diversity and centrism in two contrasting early childhood education and care systems: Slovakia and Indonesia compared. Human Affairs 32: 145–61. [Google Scholar] [CrossRef]

- Putnick, Diane L., and Marc H. Bornstein. 2016. Measurement invariance conventions and reporting: The state of the art and future directions for psychological research. Developmental Review 41: 71–90. [Google Scholar] [CrossRef]

- Russo, Jaclyn M., Amanda P. Williford, Anna J. Markowitz, Virginia E. Vitiello, and Daphna Bassok. 2019. Examining the validity of a widely-used school readiness assessment: Implications for teachers and early childhood programs. Early Childhood Research Quarterly 48: 14–25. [Google Scholar] [CrossRef]

- Samuels, William Ellery, Nelly Tournaki, Sheldon Blackman, and Christopher Zilinski. 2016. Executive functioning predicts academic achievement in middle school: A four-year longitudinal study. Journal of Educational Research 109: 478–90. [Google Scholar] [CrossRef]

- Siregar, Hadijah N. I., and Asmin Panjaitan. 2022. A systematic literature review: The role of classical test theory and item response theory in item analysis to determine the Quality of Mathematics Tests. Jurnal Fibonaci: Jurnal Pendidikan Matematika 2: 29–48. [Google Scholar] [CrossRef]

- Sočan, Gregor, and Gaja Zager Kocjan. 2022. Person-level assessment of measurement invariance. Quality and Quantity 56: 3481–503. [Google Scholar] [CrossRef]

- Soeharto, Soeharto, and Benő Csapó. 2022. Assessing Indonesian student inductive reasoning: Rasch analysis. Thinking Skills and Creativity 46: 1–17. [Google Scholar] [CrossRef]

- Spätgens, Tessa, and Rob Schoonen. 2019. Individual differences in reading comprehension in monolingual and bilingual children: The influence of semantic priming during sentence reading. Learning and Individual Differences 76: 101777. [Google Scholar] [CrossRef]

- Štatistická ročenka–materské školy. 2019. [Statistical yearbook–Kindergartens (2019/2020)]. Bratislava: Centrum vedecko-technických informácií SR. Available online: https://www.cvtisr.sk/cvti-sr-vedecka-kniznica/informacie-o-skolstve/statistiky/statisticka-rocenka-publikacia/statisticka-rocenka-materske-skoly.html?page_id=9602 (accessed on 8 June 2023).

- Teo, Timothy, Fang Huang, and Jinbo He. 2022. Measurement invariance and latent mean differences of the digital native assessment scale across Chinese mainland, Macau, and Taiwan: An exploratory structural equation modeling approach. Interactive Learning Environments 20: 1–13. [Google Scholar] [CrossRef]

- The Jamaica Education Transformation Commission. 2021. The Reform of Education in Jamaica, 2021 Report. (Issue September). Kingston: The Jamaica Education Transformation Commission. [Google Scholar]

- Throuvala, Melina, Halley M. Pontes, Ioannis Tsaousis, Mark D. Griffiths, Mike Rennoldson, and Daria J. Kuss. 2021. Exploring the dimensions of smartphone distraction: Development, validation, measurement invariance, and latent mean differences of the Smartphone Distraction Scale (SDS). Frontiers in Psychiatry 12: 1–22. [Google Scholar] [CrossRef]

- Torregrosa Díez, María Soledad, María Isbel Gómez Núñez, Ricardo Sanmartín López, José Manuel García Fernández, Annette M. La Greca, Xinyue Zhou, Jesús Redondo Pacheco, and Candido J. Ingles Saura. 2022. Measurement invariance and latent mean differences between American, Spanish and Chinese adolescents using the Social Anxiety Scale for Adolescents (SAS-A). Psicothema 34: 126–33. [Google Scholar] [CrossRef]

- Tsaousis, Ioannis, and Mohammed H. Alghamdi. 2022. Examining academic performance across gender differently: Measurement invariance and latent mean differences using bias-corrected bootstrap confidence intervals. Frontiers in Psychology 13: 1–12. [Google Scholar] [CrossRef]

- Valiente, Carlos, Leah D. Doane, Sierra Clifford, Kevin J. Grimm, and Kathryn Lemery-Chalfant. 2021. School readiness and achievement in early elementary school: Moderation by students’ temperament. Journal of Applied Developmental Psychology 74: 101265. [Google Scholar] [CrossRef]

- Veas, Alejandro, Juan-Luis Castejón, Raquel Gilar, and Pablo Miñano. 2016. Validation of the Spanish adaptation of the School Attitude Assessment Survey-Revised using multidimensional Rasch analysis. Anales de Psicología 33: 74. [Google Scholar] [CrossRef]

- Visser, Linda, Carla Vlaskamp, Cornelius Emde, Selma A. J. Ruiter, and Marieke E. Timmerman. 2017. Difference or delay? A comparison of Bayley-III Cognition item scores of young children with and without developmental disabilities. Research in Developmental Disabilities 71: 109–19. [Google Scholar] [CrossRef] [PubMed]

- Yan, Zi, and Magdalena Mo Ching Mok. 2012. Validating the coping scale for Chinese athletes using multidimensional Rasch analysis. Psychology of Sport and Exercise 13: 271–79. [Google Scholar] [CrossRef]

- Zewude, Girum Tareke, and Maria Hercz. 2022. The Teacher Well-Being Scale (TWBS): Construct validity, model comparisons and measurement invariance in an Ethiopian setting. Journal of Psychology in Africa 32: 251–62. [Google Scholar] [CrossRef]

- Zhong, Sirui, Yuxiao Zhou, Wuerken Zhumajiang, Lifen Feng, Jing Gu, Xiao Lin, and Yuantao Hao. 2023. A psychometric evaluation of Chinese chronic hepatitis B virus infection-related stigma scale using classical test theory and item response theory. Frontiers in Psychology 14: 1035071. [Google Scholar] [CrossRef]

- Ziv, Yair. 2013. Social information processing patterns, social skills, and school readiness in preschool children. Journal of Experimental Child Psychology 114: 306–20. [Google Scholar] [CrossRef]

- Zwick, Rebecca, Dorothy T. Thayer, and Charles Lewis. 1999. An empirical Bayes approach to Mantel-Haenszel DIF analysis. Journal of Educational Measurement 36: 1–28. [Google Scholar] [CrossRef]

| Instruments | Authors (Year) | Contents/Factors | Assessor | Students | Reliability | MI | Study | Country |
|---|---|---|---|---|---|---|---|---|
| PREFER | Nagy (1976) | | Teachers/examiners | Children aged 5–6 years | - | - | National survey | Hungary |
| DIFER | Nagy et al. (2004a) | | Teachers/examiners | Children aged 4–8 years | Standardized as national test | - | National survey | Hungary |
| DIFER | Józsa and Fenyvesi (2006) | | Teachers | Students with learning disabilities, aged 7–8 | - | - | Simple survey | Hungary |
| Computer-based DIFER | Csapó et al. (2014) | | Teachers | First-grade students | Cronbach’s alpha | MG-CFA for media effects | Simple survey | Hungary |
| A game-like, computer-based assessment | Józsa et al. (2017) | | Trained examiners | Students aged 3–8 years | - | - | Cross-cultural | Hungary and America |
| DIFER | Józsa and Barrett (2018) | | Trained examiners | Children aged around 5 years | Cronbach’s alpha | - | Longitudinal study | Hungary |
| DIFER | Józsa et al. (2022b) | | Trained examiners | Preschool children | Cronbach’s alpha | - | Longitudinal (8 years) | Hungary |
| FOCUS app (a game-like tablet-based assessment) | Józsa et al. (2022a) | | Trained examiners | Students aged 3–8 years | - | - | Cross-cultural | Hungary and Kenya |
| CHEXI | Amukune et al. (2022b) | | Teachers | Preschool children | Cronbach’s alpha | MG-CFA | Cross-cultural | Hungary and Kenya |
| Computer-based DIFER | Molnár and Hermann (2023) | | Trained examiners | First-grade students | EAP reliability | - | Longitudinal study (before/after COVID) | Hungary |

| Variable | Slovakia | Hungary | Total |
|---|---|---|---|
| Number of participants | 1609 (52.75%) | 1441 (47.25%) | 3050 |
| Gender | | | |
| Male | 779 (47.5%) | 862 (52.5%) | 1641 |
| Female | 830 (58.87%) | 579 (41.13%) | 1409 |
| Age | | | |
| 4th year | 159 (56.38%) | 123 (43.62%) | 282 |
| 5th year | 370 (56.74%) | 282 (43.26%) | 652 |
| 6th year | 429 (51.56%) | 403 (48.44%) | 832 |
| 7th year | 351 (50.87%) | 339 (49.13%) | 690 |
| 8th year | 300 (50.51%) | 294 (49.49%) | 594 |

| DIFER | Fine Motor | Phoneme Perception | Pre-Maths | Relational Reasoning | Deductive Reasoning | Social Skills | Total |
|---|---|---|---|---|---|---|---|
| N of items | 24 | 15 | 58 | 24 | 16 | 20 | 157 |
| Mean | 13.08 | 12.4 | 40.55 | 19.54 | 10.63 | 81.16 | 71.97 |
| SD | 6.6 | 2.59 | 12.7 | 3.86 | 4.12 | 12.77 | 16.04 |
| Skewness | −0.04 | −1.18 | −0.64 | −1.12 | −0.77 | −0.723 | 0.64 |
| Kurtosis | −0.949 | 1.36 | −0.43 | 1.7 | −0.05 | 0.51 | −0.02 |

| Psychometric Properties | Fine Motor Skills | Phoneme Perception | Pre-Maths | Relational Reasoning | Deductive Reasoning | Social Skills |
|---|---|---|---|---|---|---|
| N of items | 24 | 15 | 53 | 24 | 16 | 20 |
| Mean | 0.29 | 2.16 | 1.74 | 1.54 | 79 | 2.14 |
| SD | 1.94 | 1.43 | 2.66 | 0.98 | 1.29 | 1.59 |
| MNSQ (item-infit) | 0.99 | 1 | 0.98 | 1.00 | 1.01 | 0.99 |
| MNSQ (item-outfit) | 1.11 | 0.97 | 1.99 | 1.00 | 0.98 | 1.01 |
| MNSQ (person-infit) | 0.99 | 1.00 | 0.97 | 1.00 | 1.00 | 1.01 |
| MNSQ (person-outfit) | 1.04 | 0.97 | 1.2 | 1.00 | 0.98 | 1.01 |
| Item separation | 32.33 | 10.11 | 35.90 | 11.05 | 14.80 | 14.78 |
| Person separation | 2.79 | 2.72 | 4.26 | 3.44 | 2.65 | 3.07 |
| Unidimensionality | | | | | | |
| Raw variance by measure | 34.50% | 38.2% | 38.3% | 38% | 40.36% | 61.26% |
| Unexplained variance 1st contrast | 1.45 | 1.42 | 1.13 | 1.62 | 1.84 | 1.32 |
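
The person separation values above can be read as reliabilities through the standard Rasch relation R = G² / (1 + G²), where G is the separation index. The sketch below applies that relation to the person separation row of the table. The function name and dictionary are illustrative, and the resulting reliabilities are derived here for orientation only; they are not figures reported by the authors.

```python
def separation_to_reliability(separation: float) -> float:
    """Convert a Rasch separation index G to reliability R = G^2 / (1 + G^2)."""
    g_squared = separation ** 2
    return g_squared / (1.0 + g_squared)


# Person separation values copied from the table above.
PERSON_SEPARATION = {
    "Fine motor skills": 2.79,
    "Phoneme perception": 2.72,
    "Pre-maths": 4.26,
    "Relational reasoning": 3.44,
    "Deductive reasoning": 2.65,
    "Social skills": 3.07,
}

if __name__ == "__main__":
    for scale, g in PERSON_SEPARATION.items():
        r = separation_to_reliability(g)
        print(f"{scale}: G = {g:.2f}, implied reliability = {r:.3f}")
```

Under this relation, every subscale's person separation corresponds to an implied reliability above 0.87.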

| DIFER | Items | χ2/df | p Value | Absolute Index, SRMR (<0.08 *) | Comparative Index, CFI (>0.90 *) | Parsimonious Index, RMSEA (<0.06 *) |
|---|---|---|---|---|---|---|
| Dichotomous test | 132 | 2.85 | 0.052 | 0.08 | 0.90 | 0.057 |
| Rating test | 20 | 2.50 | 0.073 | 0.07 | 0.92 | 0.046 |

| Age 4 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|
| 1. Social skills | 0.284 ** | 0.446 ** | 0.374 ** | 0.432 ** | 0.452 ** |
| 2. Fine motor skills | | 0.256 ** | 0.282 ** | 0.306 ** | 0.357 ** |
| 3. Phoneme perception | | | 0.577 ** | 0.504 ** | 0.526 ** |
| 4. Relational reasoning | | | | 0.489 ** | 0.486 ** |
| 5. Deductive reasoning | | | | | 0.529 ** |
| 6. Pre-maths skills | | | | | |
| Age 5 | 2 | 3 | 4 | 5 | 6 |
| 1. Social skills | 0.282 ** | 0.381 ** | 0.367 ** | 0.413 ** | 0.465 ** |
| 2. Fine motor skills | | 0.324 ** | 0.305 ** | 0.292 ** | 0.429 ** |
| 3. Phoneme perception | | | 0.512 ** | 0.479 ** | 0.473 ** |
| 4. Relational reasoning | | | | 0.500 ** | 0.510 ** |
| 5. Deductive reasoning | | | | | 0.485 ** |
| 6. Pre-maths skills | | | | | |
| Age 6 | 2 | 3 | 4 | 5 | 6 |
| 1. Social skills | 0.301 ** | 0.351 ** | 0.413 ** | 0.425 ** | 0.334 ** |
| 2. Fine motor skills | | 0.334 ** | 0.279 ** | 0.335 ** | 0.430 ** |
| 3. Phoneme perception | | | 0.465 ** | 0.462 ** | 0.504 ** |
| 4. Relational reasoning | | | | 0.515 ** | 0.524 ** |
| 5. Deductive reasoning | | | | | 0.464 ** |
| 6. Pre-maths skills | | | | | |
| Age 7 | 2 | 3 | 4 | 5 | 6 |
| 1. Social skills | 0.237 ** | 0.414 ** | 0.373 ** | 0.417 ** | 0.358 ** |
| 2. Fine motor skills | | 0.274 ** | 0.318 ** | 0.314 ** | 0.457 ** |
| 3. Phoneme perception | | | 0.487 ** | 0.447 ** | 0.485 ** |
| 4. Relational reasoning | | | | 0.540 ** | 0.463 ** |
| 5. Deductive reasoning | | | | | 0.453 ** |
| 6. Pre-maths skills | | | | | |
| Age 8 | 2 | 3 | 4 | 5 | 6 |
| 1. Social skills | 0.273 ** | 0.373 ** | 0.349 ** | 0.393 ** | 0.440 ** |
| 2. Fine motor skills | | 0.289 ** | 0.274 ** | 0.264 ** | 0.330 ** |
| 3. Phoneme perception | | | 0.559 ** | 0.533 ** | 0.441 ** |
| 4. Relational reasoning | | | | 0.505 ** | 0.543 ** |
| 5. Deductive reasoning | | | | | 0.434 ** |
| 6. Pre-maths skills | | | | | |

| Dimensions | N of Items | Mean (SD) | Cronbach’s Alpha (>0.60 *) | CR (>0.70 *) | AVE (>0.50 *) |
|---|---|---|---|---|---|
| Fine motor skills | 24 | 13.08 (6.60) | 0.92 | 0.72 | 0.50 |
| Phoneme perception | 15 | 12.40 (2.59) | 0.74 | 0.92 | 0.63 |
| Pre-mathematics | 53 | 40.55 (12.70) | 0.95 | 0.96 | 0.65 |
| Relational reasoning | 24 | 19.54 (3.86) | 0.80 | 0.86 | 0.55 |
| Deductive reasoning | 16 | 10.68 (4.12) | 0.86 | 0.71 | 0.50 |
| Social skills | 20 | 81.16 (12.77) | 0.95 | 0.94 | 0.51 |
| Total | 152 | 71.97 (16.04) | 0.97 | 0.86 | 0.55 |
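
For readers unfamiliar with the CR and AVE columns, both are conventionally derived from standardized factor loadings. This section does not show the formulas used, so the expressions below are the standard Fornell and Larcker definitions, given here as an assumption. The symbols are introduced for illustration: $\lambda_i$ is the standardized loading of item $i$ on its dimension and $k$ is the number of items in that dimension.

$$
\mathrm{CR} = \frac{\left(\sum_{i=1}^{k} \lambda_i\right)^{2}}{\left(\sum_{i=1}^{k} \lambda_i\right)^{2} + \sum_{i=1}^{k}\left(1 - \lambda_i^{2}\right)},
\qquad
\mathrm{AVE} = \frac{1}{k}\sum_{i=1}^{k} \lambda_i^{2}
$$

Under these definitions, an AVE of 0.50 means that, on average, the latent dimension accounts for half of the variance in its items, which is why 0.50 serves as the lower bound in the table header.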

| Construct | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. Fine motor skills | | 0.69 | 0.76 | 0.68 | 0.73 | 0.41 |
| 2. Phoneme perception | | | 0.74 | 0.66 | 0.77 | 0.54 |
| 3. Relational reasoning | | | | 0.74 | 0.72 | 0.48 |
| 4. Deductive reasoning | | | | | 0.69 | 0.47 |
| 5. Pre-mathematics | | | | | | 0.50 |
| 6. Social skills | | | | | | |

| DIFER | Groups | χ2 (df) | CFI | RMSEA [90% CI] | SRMR |
|---|---|---|---|---|---|
| Dichotomous test | Slovakia | 145,555.9 (17,005) | 0.942 | 0.050 [0.050, 0.050] | 0.060 |
| | Hungary | 145,586.9 (17,005) | 0.943 | 0.050 [0.050, 0.050] | 0.060 |
| | Male | 117,642.8 (8778) | 0.948 | 0.051 [0.049, 0.052] | 0.060 |
| | Female | 114,522.7 (8778) | 0.949 | 0.050 [0.049, 0.052] | 0.059 |
| | 4th year | 117,882.8 (17,002) | 0.912 | 0.060 [0.059, 0.062] | 0.063 |
| | 5th year | 117,892.7 (17,002) | 0.912 | 0.060 [0.059, 0.062] | 0.063 |
| | 6th year | 118,222.8 (17,002) | 0.911 | 0.058 [0.058, 0.058] | 0.060 |
| | 7th year | 117,892.7 (17,002) | 0.932 | 0.058 [0.058, 0.058] | 0.061 |
| | 8th year | 118,222.8 (17,002) | 0.921 | 0.057 [0.055, 0.060] | 0.065 |
| Rating test | Slovakia | 72,774.0 (210) | 0.931 | 0.065 [0.063, 0.066] | 0.063 |
| | Hungary | 69,876.9 (210) | 0.931 | 0.065 [0.065, 0.065] | 0.061 |
| | Male | 7051.8 (210) | 0.939 | 0.063 [0.060, 0.065] | 0.062 |
| | Female | 7308.7 (210) | 0.940 | 0.060 [0.058, 0.062] | 0.060 |
| | 4th year | 3907.1 (210) | 0.943 | 0.039 [0.059, 0.062] | 0.034 |
| | 5th year | 3831.8 (210) | 0.947 | 0.043 [0.041, 0.044] | 0.033 |
| | 6th year | 3994.1 (210) | 0.965 | 0.038 [0.037, 0.040] | 0.044 |
| | 7th year | 4045.6 (239) | 0.914 | 0.047 [0.046, 0.048] | 0.049 |
| | 8th year | 5515.2 (265) | 0.922 | 0.039 [0.038, 0.040] | 0.042 |

| Models | χ2 (df) | CFI | RMSEA [90% CI] | SRMR | ∆CFI | ∆RMSEA | ∆SRMR | MI |
|---|---|---|---|---|---|---|---|---|
| MI across country (NSlovakia = 1609; NHungary = 1441) | | | | | | | | |
| Configural | 145,587.9 (17,008) | 0.942 | 0.050 [0.050, 0.050] | 0.060 | - | - | - | - |
| Metric | 146,010.3 (17,135) | 0.941 | 0.050 [0.046, 0.050] | 0.060 | −0.001 | 0.000 | 0.000 | Yes |
| Scalar | 146,640.7 (17,267) | 0.939 | 0.050 [0.046, 0.050] | 0.057 | −0.002 | 0.000 | −0.003 | Yes |
| Residual | 146,653.8 (17,282) | 0.938 | 0.050 [0.046, 0.050] | 0.058 | −0.001 | 0.000 | 0.001 | Yes |
| MI across gender (Nmale = 1641; Nfemale = 1409) | | | | | | | | |
| Configural | 117,642.8 (8778) | 0.947 | 0.049 [0.049, 0.049] | 0.056 | - | - | - | - |
| Metric | 116,114.5 (17,402) | 0.947 | 0.049 [0.049, 0.049] | 0.056 | 0.000 | 0.000 | 0.000 | Yes |
| Scalar | 146,114.5 (17,402) | 0.947 | 0.049 [0.049, 0.049] | 0.057 | 0.000 | 0.001 | 0.001 | Yes |
| Residual | 146,122.4 (17,408) | 0.946 | 0.047 [0.045, 0.048] | 0.053 | −0.001 | −0.001 | −0.004 | Yes |
| MI across age (Nyear4 = 282; Nyear5 = 652; Nyear6 = 832; Nyear7 = 690; Nyear8 = 594) | | | | | | | | |
| Configural | 116,845.9 (17,477) | 0.921 | 0.059 [0.057, 0.060] | 0.056 | - | - | - | - |
| Metric | 116,779.5 (17,489) | 0.920 | 0.059 [0.055, 0.059] | 0.056 | −0.001 | 0.000 | 0.000 | Yes |
| Scalar | 146,884.5 (17,405) | 0.920 | 0.050 [0.049, 0.050] | 0.057 | 0.000 | 0.009 | 0.001 | Yes |
| Residual | 146,799.4 (17,411) | 0.900 | 0.067 [0.077, 0.078] | 0.079 | −0.020 | 0.017 | 0.022 | No |
| Residual (item74) | 146,712.8 (17,400) | 0.912 | 0.048 [0.046, 0.050] | 0.055 | −0.008 | −0.002 | −0.008 | Yes |
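
The ∆ columns above are differences between each model and the preceding, less constrained one. The sketch below recomputes them for the country rows of this table and applies a decision rule. The cutoffs are an assumption: they are the commonly cited thresholds (a CFI drop of more than 0.010 together with an RMSEA rise of more than 0.015 signalling non-invariance) and are not stated in this section. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fit:
    name: str
    cfi: float
    rmsea: float
    srmr: float


# Country rows of the dichotomous-test invariance table above.
COUNTRY_MODELS = [
    Fit("Configural", 0.942, 0.050, 0.060),
    Fit("Metric", 0.941, 0.050, 0.060),
    Fit("Scalar", 0.939, 0.050, 0.057),
    Fit("Residual", 0.938, 0.050, 0.058),
]

# Assumed cutoffs (commonly cited; not taken from this section).
MAX_CFI_DROP = 0.010
MAX_RMSEA_RISE = 0.015


def compare_nested(models):
    """Return (name, dCFI, dRMSEA, dSRMR, holds) for each successive model pair."""
    rows = []
    for prev, cur in zip(models, models[1:]):
        d_cfi = round(cur.cfi - prev.cfi, 3)
        d_rmsea = round(cur.rmsea - prev.rmsea, 3)
        d_srmr = round(cur.srmr - prev.srmr, 3)
        # Invariance is retained unless fit worsens beyond the assumed cutoffs.
        holds = d_cfi >= -MAX_CFI_DROP and d_rmsea <= MAX_RMSEA_RISE
        rows.append((cur.name, d_cfi, d_rmsea, d_srmr, holds))
    return rows


if __name__ == "__main__":
    for name, d_cfi, d_rmsea, d_srmr, holds in compare_nested(COUNTRY_MODELS):
        verdict = "Yes" if holds else "No"
        print(f"{name}: dCFI={d_cfi:+.3f} dRMSEA={d_rmsea:+.3f} dSRMR={d_srmr:+.3f} MI={verdict}")
```

Under the same assumed cutoffs, the unmodified residual model across age (∆CFI = −0.020, ∆RMSEA = 0.017) would be flagged as non-invariant, which matches the "No" entry in the table.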

| Models | χ2 (df) | CFI | RMSEA [90% CI] | SRMR | ∆CFI | ∆RMSEA | ∆SRMR | MI |
|---|---|---|---|---|---|---|---|---|
| MI across country (NSlovakia = 1609; NHungary = 1441) | | | | | | | | |
| Configural | 4090.4 (298) | 0.930 | 0.063 [0.050, 0.050] | 0.062 | - | - | - | - |
| Metric | 4130.7 (317) | 0.929 | 0.062 [0.061, 0.065] | 0.060 | −0.001 | −0.001 | −0.002 | Yes |
| Scalar | 4247.5 (337) | 0.929 | 0.062 [0.060, 0.063] | 0.060 | 0.000 | 0.000 | 0.000 | Yes |
| Residual | 4248.6 (332) | 0.929 | 0.062 [0.060, 0.063] | 0.060 | 0.000 | 0.000 | 0.000 | Yes |
| MI across gender (Nmale = 1641; Nfemale = 1409) | | | | | | | | |
| Configural | 3550.5 (298) | 0.939 | 0.058 [0.049, 0.052] | 0.06 | - | - | - | - |
| Metric | 3574.5 (317) | 0.938 | 0.057 [0.056, 0.060] | 0.056 | −0.001 | −0.001 | −0.004 | Yes |
| Scalar | 3653.3 (337) | 0.938 | 0.057 [0.055, 0.058] | 0.055 | 0.000 | 0.000 | −0.001 | Yes |
| Residual | 3661.5 (338) | 0.936 | 0.054 [0.053, 0.056] | 0.053 | −0.002 | −0.003 | −0.002 | Yes |
| MI across age (Nyear4 = 282; Nyear5 = 652; Nyear6 = 832; Nyear7 = 690; Nyear8 = 594) | | | | | | | | |
| Configural | 5533.8 (1007) | 0.912 | 0.038 [0.037, 0.039] | 0.035 | - | - | - | - |
| Metric | 5654.8 (1027) | 0.910 | 0.038 [0.037, 0.039] | 0.035 | −0.002 | 0.000 | 0.000 | Yes |
| Scalar | 5654.8 (1028) | 0.910 | 0.038 [0.037, 0.039] | 0.034 | 0.000 | 0.000 | 0.001 | Yes |
| Residual | 5792.6 (1069) | 0.908 | 0.038 [0.037, 0.039] | 0.034 | −0.002 | 0.000 | 0.000 | Yes |

| Group | DIFER Scales | Estimate | SE | CR Score | p Value |
|---|---|---|---|---|---|
| Country (Slovakia vs. Hungary) | ✓ Fine motor skills | 0.004 | 0.001 | 6.166 (7.173) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 5.308 (4.968) | <.001 |
| | ✓ Pre-mathematics | 0.004 | 0.001 | 7.466 (7.007) | <.001 |
| | ✓ Relational reasoning | 0.002 | 0.000 | 3.226 (2.918) | <.01 |
| | ✓ Deductive reasoning | 0.047 | 0.005 | 10.047 (9.629) | <.001 |
| | ✓ Social skills | 0.251 | 0.021 | 12.024 (13.188) | <.001 |
| Gender (Male vs. Female) | ✓ Fine motor skills | 0.006 | 0.001 | 9.462 (8.233) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 7.264 (8.454) | <.001 |
| | ✓ Pre-mathematics | 0.005 | 0.000 | 10.331 (11.45) | <.001 |
| | ✓ Relational reasoning | 0.001 | 0.000 | 4.364 (4.671) | <.001 |
| | ✓ Deductive reasoning | 0.046 | 0.003 | 10.943 (9.842) | <.001 |
| | ✓ Social skills | 0.295 | 0.023 | 12.896 (12.040) | <.001 |
| 4th year vs. 5th year | ✓ Fine motor skills | 0.006 | 0.001 | 9.462 (9.233) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 7.264 (8.454) | <.001 |
| | ✓ Pre-mathematics | 0.260 | 0.032 | 8.097 (8.079) | <.001 |
| | ✓ Relational reasoning | 0.001 | 0.000 | 4.364 (4.671) | <.01 |
| | ✓ Deductive reasoning | 0.046 | 0.003 | 10.943 (9.842) | <.001 |
| | ✓ Social skills | 0.282 | 0.018 | 15.820 (15.820) | <.001 |
| 4th year vs. 6th year | ✓ Fine motor skills | 0.006 | 0.001 | 9.462 (10.243) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 7.264 (7.474) | <.001 |
| | ✓ Pre-mathematics | 0.260 | 0.032 | 8.097 (9.179) | <.001 |
| | ✓ Relational reasoning | 0.001 | 0.000 | 4.364 (5.672) | <.001 |
| | ✓ Deductive reasoning | 0.046 | 0.003 | 10.943 (11.892) | <.001 |
| | ✓ Social skills | 0.282 | 0.018 | 15.820 (15.820) | <.001 |
| 4th year vs. 7th year | ✓ Fine motor skills | 0.006 | 0.001 | 9.462 (9.244) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 7.264 (11.459) | <.001 |
| | ✓ Pre-mathematics | 0.260 | 0.032 | 8.097 (9.079) | <.001 |
| | ✓ Relational reasoning | 0.001 | 0.000 | 4.364 (5.671) | <.01 |
| | ✓ Deductive reasoning | 0.046 | 0.003 | 10.943 (11.842) | <.001 |
| | ✓ Social skills | 0.282 | 0.018 | 15.820 (15.820) | <.001 |
| 4th year vs. 8th year | ✓ Fine motor skills | 0.006 | 0.001 | 9.462 (11.256) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 7.264 (9.334) | <.001 |
| | ✓ Pre-mathematics | 0.260 | 0.032 | 8.097 (8.979) | <.001 |
| | ✓ Relational reasoning | 0.001 | 0.000 | 4.64 (4.699) | <.001 |
| | ✓ Deductive reasoning | 0.046 | 0.003 | 10.943 (9.842) | <.05 |
| | ✓ Social skills | 0.282 | 0.018 | 15.820 (15.820) | <.001 |
| 5th year vs. 6th year | ✓ Fine motor skills | 0.349 | 0.021 | 16.820 (16.999) | <.001 |
| | ✓ Phoneme perception | 0.288 | 0.017 | 17.425 (18.898) | <.001 |
| | ✓ Pre-mathematics | 0.270 | 0.017 | 15.447 (11.453) | <.001 |
| | ✓ Relational reasoning | 0.312 | 0.020 | 15.677 (14.679) | <.001 |
| | ✓ Deductive reasoning | 0.279 | 0.016 | 17.029 (19.842) | <.001 |
| | ✓ Social skills | 0.295 | 0.023 | 12.896 (12.870) | <.001 |
| 5th year vs. 7th year | ✓ Fine motor skills | 0.349 | 0.021 | 16.820 (18.779) | <.001 |
| | ✓ Phoneme perception | 0.288 | 0.017 | 17.425 (18.890) | <.001 |
| | ✓ Pre-mathematics | 0.270 | 0.017 | 15.447 (15.665) | <.001 |
| | ✓ Relational reasoning | 0.312 | 0.020 | 15.677 (18.556) | <.01 |
| | ✓ Deductive reasoning | 0.279 | 0.016 | 17.029 (19.842) | <.001 |
| | ✓ Social skills | 0.295 | 0.023 | 12.896 (12.870) | <.001 |
| 5th year vs. 8th year | ✓ Fine motor skills | 0.349 | 0.021 | 16.820 (17.001) | <.001 |
| | ✓ Phoneme perception | 0.288 | 0.017 | 17.425 (20.448) | <.001 |
| | ✓ Pre-mathematics | 0.270 | 0.017 | 15.447 (19.677) | <.001 |
| | ✓ Relational reasoning | 0.312 | 0.020 | 15.677 (18.679) | <.01 |
| | ✓ Deductive reasoning | 0.279 | 0.016 | 17.029 (19.842) | <.001 |
| | ✓ Social skills | 0.295 | 0.023 | 12.896 (12.870) | <.001 |
| 6th year vs. 7th year | ✓ Fine motor skills | 0.006 | 0.001 | 9.462 (8.233) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 7.264 (8.454) | <.001 |
| | ✓ Pre-mathematics | 0.282 | 0.018 | 15.820 (15.820) | <.001 |
| | ✓ Relational reasoning | 0.001 | 0.000 | 4.364 (4.671) | <.001 |
| | ✓ Deductive reasoning | 0.046 | 0.003 | 10.943 (9.842) | <.001 |
| | ✓ Social skills | 0.295 | 0.023 | 12.896 (12.040) | <.001 |
| 6th year vs. 8th year | ✓ Fine motor skills | 0.006 | 0.001 | 9.462 (8.233) | <.001 |
| | ✓ Phoneme perception | 0.007 | 0.001 | 7.264 (8.454) | <.001 |
| | ✓ Pre-mathematics | 0.282 | 0.018 | 15.820 (15.820) | <.001 |
| | ✓ Relational reasoning | 0.001 | 0.000 | 4.364 (4.671) | <.01 |
| | ✓ Deductive reasoning | 0.046 | 0.003 | 10.943 (9.842) | <.001 |
| | ✓ Social skills | 0.295 | 0.023 | 12.896 (12.040) | <.001 |
| 7th year vs. 8th year | ✓ Fine motor skills | 0.373 | 0.011 | 32.905 (8.233) | <.001 |
| | ✓ Phoneme perception | 0.302 | 0.009 | 33.452 (8.454) | <.001 |
| | ✓ Pre-mathematics | 0.282 | 0.018 | 15.820 (15.820) | <.001 |
| | ✓ Relational reasoning | 0.282 | 0.003 | 32.746 (4.671) | <.01 |
| | ✓ Deductive reasoning | 0.252 | 0.008 | 31.015 (9.842) | <.05 |
| | ✓ Social skills | 0.295 | 0.023 | 12.896 (12.040) | <.001 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Józsa, K.; Oo, T.Z.; Borbélyová, D.; Zentai, G. Exploring the Accuracy and Consistency of a School Readiness Assessment Tool for Preschoolers: Reliability, Validity and Measurement Invariance Analysis. J. Intell. 2023, 11, 189. https://doi.org/10.3390/jintelligence11100189
