Abstract

Local structural equation models (LSEM) are structural equation models that study model parameters as a function of a moderator. This article reviews and extends LSEM estimation methods and discusses the implementation in the R package sirt. In previous studies, LSEM was fitted as a sequence of models separately evaluated as each value of the moderator variables. In this article, a joint estimation approach is proposed that is a simultaneous estimation method across all moderator values and also allows some model parameters to be invariant with respect to the moderator. Moreover, sufficient details on the main estimation functions in the R package sirt are provided. The practical implementation of LSEM is demonstrated using illustrative datasets and an empirical example. Moreover, two simulation studies investigate the statistical properties of parameter estimation and significance testing in LSEM.

1. Introduction

A structural equation model (SEM) is a statistical approach for analyzing multivariate data (Bartholomew et al. 2011; Bollen 1989; Browne and Arminger 1995; Jöreskog et al. 2016; Shapiro 2012; Yuan and Bentler 2007). These models relate a multivariate vector of observed I variables (also referred to as items or indicators) to a vector of latent variables (i.e., factors) of a dimension smaller than I. SEMs constrain the mean vector and the covariance matrix of the random variable as a function of an unknown parameter vector . By doing so, the mean vector is constrained as , and the covariance matrix is constrained as .

Local structural equation models (LSEM) study SEMs as a function of a univariate moderator variable Hildebrandt et al. (2009, 2016). The moderator variable is the age or time variable in most applications. LSEM has been mentioned as a general tool for assessing measurement invariance across age or other continuous indicators in social sciences (Dong and Dumas 2020; Han et al. 2019; Leitgöb et al. 2023). Note that LSEM has also been abbreviated as LOSEM Briley et al. (2015a, 2015b).

The LSEM method is particularly suited for studying differentiation or dedifferentiation hypotheses (see Hildebrandt et al. 2009 or Molenaar et al. 2010b). Differentiation hypotheses of intelligence and general scholastic abilities describe changes in the relationship between different cognitive abilities (i.e., their structural organization) depending on the level of general ability (ability differentiation), age (differentiation in children and adolescents; dedifferentiation in older adults), and their interaction. Breit et al. (2022) presented a systematic review of 33 reports with data from 51 studies with over 260,000 participants that examined differentiation effects. The findings indicated practically significant ability differentiation in children and adults, and significant age dedifferentiation in older adults, with effect sizes that implicate a practical significance of the effects. However, Breit et al. (2022) also showed that age differentiation in children and adolescents was not supported. Instead, small but negligible effect sizes were found for age dedifferentiation in adolescents.

The LSEM method has been extended to two moderator variables by Hartung et al. (2018). Molenaar (2021) proposed a semiparametric moderated factor modeling approach in which no assumption concerning the functional form between the moderator and the model parameters are imposed. In contrast to the original definition of LSEM (Hildebrandt et al. 2009), some model parameters are allowed to be invariant across the continuous moderator variable.

LSEM is closely related to moderated nonlinear factor analysis (MNFA; Bauer 2017; Curran et al. 2014; Molenaar and Dolan 2012). In MNFA, a functional form of SEM model parameters as a function of a single moderator (or multiple moderators) is imposed. In this sense, MNFA is often more confirmatory than LSEM. Nevertheless, differentiation hypotheses were also investigated by means of MNFA (Molenaar et al. (2010a, 2010b, 2011, 2017)). A tutorial on how to apply MNFA using the R package OpenMx (Boker et al. 2011) was given by Kolbe et al. (2022). LSEM also bears a similarity to the approach of individual parameter change (Oberski 2013; Arnold et al. (2020, 2021). Variation in SEM model parameters can also be tested with score-based invariance tests (Huth et al. 2022; Merkle and Zeileis 2013; Wang et al. 2014).

LSEM has been implemented in the R package sirt (Robitzsch 2023b) as a wrapper to the popular SEM package lavaan (Rosseel 2012). Moreover, the R package umx (Bates et al. 2019) can also be utilized for LSEM estimation.

This article reviews and extends LSEM estimation methods and discusses the implementation in the R package sirt. In previous literature, LSEM was fitted as a sequence of models that are separately evaluated as each value of the moderator variables. In this article, a joint estimation approach is proposed that is a simultaneous estimation method across all moderator values and also allows some model parameters to be invariant with respect to the moderator. Sufficient detail on the core estimation functions in the sirt package is provided. The article also evaluates two significance testing approaches to assess whether the moderator values are related to a model parameter in two simulation studies. Finally, an empirical example demonstrates the usefulness of the LSEM methodology.

The remainder of this article is structured as follows. Section 2 overviews the most important LSEM applications in the literature. In Section 3, different LSEM estimation and significance testing approaches are presented. Details about LSEM implementation in the sirt package can be found in Section 4. Section 5 discusses R input code and R output of an LSEM analysis involving illustrative datasets. Section 6 includes a simulation study investigating parameter recovery in LSEM regarding bias and root mean square error. Section 7 includes a simulation study that investigates different estimators of variability in parameter curves and the statistical properties of significance tests of parameter variation. In Section 8, an empirical example is presented that reanalyzes SON-R intelligence data for children aged between 2 and 7 years. Finally, Section 9 closes with a discussion.

2. Review of LSEM Applications

We now review important LSEM applications to demonstrate that this method is widely applied in substantive research. The original LSEM publication of Hildebrandt et al. (2009) (“Complementary and competing factor analytic approaches for the investigation of measurement invariance”) has been cited 93 times and 80 times, according to Google Scholar and ResearchGate (accessed on 18 July 2023), respectively. The second methodological LSEM publication by Hildebrandt et al. (2016) (“Exploring factor model parameters across continuous variables with local structural equation models”) has been cited 111 times, 89 times, and 77 times, according to Google Scholar, ResearchGate, and Web of Science (accessed on 18 July 2023), respectively. Hence, one could say that LSEM fills some niche in the researcher’s methodological toolbox.

In the following, some LSEM applications are briefly described. The studies are loosely organized according to the fields of application.

Olaru and Allemand (2022) examined differential and correlated change in personality across the adult lifespan using LSEM. Brandt et al. (2022) applied LSEM to four waves of data obtained with the full NEO Personality Inventory collected over 11 years from 1667 adults in a US sample using age as a continuous moderator. Hartung et al. (2021) investigated the age-moderated covariance structure of the satisfaction with life scale (SWLS) and the domains of health satisfaction and financial satisfaction using LSEM. Olaru et al. (2019) analyzed NEO personality indicators across ages between 16 and 66 years by means of LSEM. They selected items for short scales that had the greatest extent of measurement invariance across age. Seifert et al. (2022) studied whether the rank-order stability of personality increases until midlife and declines later in old age and found that this inverted U-shaped pattern was not consistently observed in two reanalyzes utilizing LSEM. Loneliness across different age levels was investigated by LSEM in Entringer and Gosling (2022) and Panayiotou et al. (2022). Van den Akker et al. (2021) applied LSEM for students aged between 8 and 18 years to investigate whether levels of conscientiousness and agreeableness decrease when levels of neuroticism increase, indicating a dip in personality maturation. Gnambs (2013) applied LSEM in a multitrait multi-informant meta-analysis for the big five factors.

Hartung et al. (2022) investigated the structure of the “dark personality factor” across age and gender using LSEM. Krasko and Kaiser (2023) investigated measurement invariance across age for the dark triad by means of LSEM.

Bratt et al. (2018) investigated levels of perceived age discrimination across early to late adulthood by employing LSEM, using data from the European social survey (ESS) collected in 29 countries. Dutton and Kirkegaard (2022) applied LSEM to investigate a particular question about the association between religiousness and intelligence. Allemand et al. (2022) used LSEM to investigate the effects of continuous age and COVID-19 virus worry on mean levels and correlations between gratitude and remaining opportunities and time. Allemand et al. (2021) examined age-related psychometrics and differences in the measurement, mean-levels, variances, and correlations of gratitude and future time perspective across adulthood using data in a representative Swiss sample for participants aged between 19 and 98 years.

Schroeders et al. (2015) studied the differentiation fluid and crystallized intelligence in German students of grades 5 to 12. Watrin et al. (2022) studied the age differentiation hypothesis of declarative knowledge, as proposed in Cattell’s investment theory. Hülür et al. (2011) studied with LSEM whether cognitive abilities become more differentiated with increasing age during childhood for children from age 2.5 to 7. Hartung et al. (2020) tested whether associations among executive functions strengthened from middle childhood to adolescence using cross-sectional data from a sample of children aged between 7 and 15 years. Gnambs and Schroeders (2020) examined the effects of cognitive abilities on the factor structure of the Rosenberg self-esteem scale across age by means of LSEM. Whitley et al. (2016) explored cross-sectional associations of age with five cognitive tests (word recall, verbal fluency, subtraction, number sequence, and numerical problem solving) in a large representative sample aged between 16 and 100 living in the UK. Breit et al. (2020) investigated ability differentiation, developmental differentiation, and their interaction with LSEM in two studies. Breit et al. (2021) provided a review of the literature on ability and developmental differentiation effects in children and youths. Breit et al. (2023) studied ability differentiation, including creativity measures, through LSEM for German students aged between 12 and 16 years.

Hildebrandt et al. (2010) employed LSEM to investigate structural invariance and age-related performance differences in face cognition. Hildebrandt et al. (2013) studied the specificity of face cognition compared with object cognition from individual differences and aging perspective by determining the amount of overlap between these abilities at the level of latent constructs across age. By utilizing LSEM, Liu et al. (2022) found that individual differences in white matter microstructure of the face processing brain network were more differentiated from global fibers with increasing ability.

LSEM was also applied in behavioral neurosciences Kaltwasser et al. (2017). Jokić-Begić et al. (2019) used LSEM for assessing measurement invariance across age for cyperchondria, a process of increased anxiety over one’s health as a result of excessive online searching. Lodi-Smith et al. (2021) found that autism characteristics measured by the autism-spectrum quotient scale were not strongly associated with age by utilizing LSEM. Cox et al. (2016) used LSEM to quantify microstructural properties of the human brain’s connections for understanding normal ageing and disease (see also Briley et al. (2015b)). Researchers de Mooij et al. (2018) used LSEM to study differences within and between brain and cognition across the adult life span. Zheng et al. (2019) investigated whether genetic and environmental influences on achievement goal orientations shift were moderated with age. Madole et al. (2019) applied LSEM in network analysis as a method for investigating symptom-level associations that underlie comorbidity connecting diagnostic syndromes.

Olaru et al. (2019) utilized LSEM in combination with ant colony optimization (see also Olaru and Jankowsky 2022) to resample and weight subjects to study differences in the measurement model across age as a continuous moderator variable.

An overview of different modeling strategies of LSEM for longitudinal data is presented in Olaru et al. (2020). Wagner et al. (2019) investigated through LSEM whether personality becomes more stable with age. They disentangled state and trait effects for the big five across the life span by applying LSEM to trait-state-occasion models. Gana et al. (2023) applied trait-state-occasion models in tandem with LSEM to investigate whether the characteristics of the depression EURO-D scale were associated with age.

LSEM was also applied to moderator variables different from age. Klieme and Schmidt-Borcherding (2023) employed LSEM to explore whether there is noninvariance for indicators of research self-efficacy regarding different training levels of students operationalized as the number of studied semesters. Weiss et al. (2020) investigated the threshold hypothesis of creativity by handling intelligence as a continuous moderator in LSEM. Schroeders and Jansen (2022) studied by means of LSEM whether the multidimensional structure of the science self-concept is moderated by levels of the cognitive ability in science. Basarkod et al. (2023) investigated whether reading self-concept dimensions vary across reading achievement levels in the PISA study. Olaru et al. (2022) examined the effects of family background on children’s receptive vocabulary using LSEM with latent growth curve models. Bolsinova and Molenaar (2019) (see also Bolsinova and Molenaar 2018) used LSEM for indicator-specific covariates and extended LSEM to the study of cognitive tests involving reaction times.

3. Estimating and Testing Local Structural Equation Models

3.1. Single-Group Structural Equation Model

In SEM, a measurement model is imposed that relates the observed variables to latent variables

In addition, the covariance matrix of is denoted by ; that is, . Moreover, and are multivariate normally distributed random variables. In addition, and are assumed to be uncorrelated. In CFA, the multivariate normal (MVN) distribution is represented as and . As we are only concerned with the covariance structure in SEM in this paper, we assume and . Then, the covariance matrix of in CFA can be computed as:

The parameter vector contains parameters in , , and that are estimated. Typically, the covariance matrix is a constrained matrix determined by the specification (2).

In a general SEM, relationships among the latent variables are modeled in path models. A matrix of regression coefficients is specified such that:

where denotes an endogeneous and an exogeneous multivariate normally distributed latent variables. Note that (3) can be written as:

where denotes the identity matrix. In this case, the covariance matrix of are represented in SEM as:

Some identification constraints must be imposed when estimating the covariance structure of the SEM in (2) or (5) (Bollen 1989; Bollen and Davis 2009). The purpose of identifying constraints primarily lies in a convenient interpretation of latent variables and is not primarily driven by improving the efficiency of estimating .

3.2. Multiple-Group Structural Equation Model

We now describe the general estimation of a multiple-group SEM. There exist G known groups . The allocation of a group to a subject is known in this case. Assume that group g has subjects and an empirical covariance matrix . The population covariance matrices are denoted by (). The model-implied covariance matrices are denoted by ). The unknown parameter vector can have common parameters across groups and parameters that are group-specific. For example, in a CFA, equal factor loadings and item intercepts across groups are frequently imposed (i.e., measurement invariance holds; Meredith 1993; Putnick and Bornstein 2016) by assuming the same loading matrix across groups, while covariance matrices of latent variables or the matrix of regression coefficients are allowed to differ across groups.

Up to constants, the maximum likelihood (ML) fitting function of the unknown parameter for the covariance structure in the multiple-group SEM is given by (see Bollen 1989 and Jöreskog et al. 2016):

Note that I refers to the number of observed variables; that is, the dimension of . The set denotes the set of G empirical covariance matrices that are sufficient statistics in multiple-group SEM estimation. The parameter vector is estimated by minimizing F in (6) and is denoted as the ML estimate. The estimated parameter is denoted by .

In practice, the model-implied covariance matrix can be misspecified (Boos and Stefanski 2013; Gourieroux et al. 1984; Kolenikov 2011; White 1982), and is a pseudo-true parameter defined as the minimizer of the fitting function F in (6). Importantly, does not refer to a parameter of the data-generating model in this case. In contrast, it should be interpreted as a summary of the data that are of central interest to the researcher.

The ML fitting function (6) can be considered a special case of discrepancy function. To this end, we define a general discrepancy function between an empirical covariance matrix and a population covariance matrix . The real-valued nonnegative function should only attain the value zero if (i.e., for correctly specified models). For the ML fitting function, the discrepancy function is defined as:

If an age moderator variable A is available, an SEM can, in principle, be estimated for all subgroups of subjects for different values of the age variable. In practice, sample sizes for concrete age values might be too small for separate estimation of the SEM. Moreover, discretizing the values of a continuous moderator variable A into G distinct groups of subjects might not be preferred due to loss of information (Hildebrandt et al. 2009). To circumvent these issues, LSEM has been proposed. We discuss LSEM estimation methods in the next subsections.

3.3. Local Weighting

Instead of grouping subjects that fall within a given range of the moderator, as in multiple-group SEMs, observations are locally weighted around focal points (i.e., specific values of the continuous moderator variable) in LSEM. In previous studies, SEMs are sequentially estimated on the basis of weighted samples of observations at all focal points (i.e., the pointwise LSEM estimation approach, see Section 3.5).

In LSEM, researchers are interested in investigating moderator-specific covariance structures. That is, they aim to model conditional covariances:

As argued in the previous section, sample sizes might be too small for estimating only for subjects with . To this end, subjects with moderator values a sufficiently close to a focal point (i.e., a chosen value of the moderator variable A) should also enter the estimation. For each focal point and each subject n, weights are computed that reflect the distance of the moderator value (e.g., a value of age) of person n (i.e., ) and the focal point . If , the weight should be one, and it should be zero for age values that strongly differ from .

The computation of weights relies on a kernel function K that is chosen by the researcher (Hildebrandt et al. (2009, 2016). The real-valued kernel function fulfills the properties , (i.e., it is a symmetry function), for all , and K is a decreasing function for . The subject-specific weight for subject n at a focal point with a pre-specified bandwidth is computed as:

By the definition of K, weights are bounded within the interval .

Typical choices of the weight function in the literature of nonparametric regression or density estimation are the Gaussian kernel, the Epanechnikov kernel, and the uniform kernel function. The Gaussian kernel function is defined as:

In density estimation involving the Gaussian kernel function, an optimal bandwidth is given by with , and is the standard deviation of the age moderator variable (Silverman 1986). The parameter h is referred to as the bandwidth factor in this article. The Epanechnikov kernel function is defined as:

For age values with , weights are zero. Finally, the uniform kernel function is defined as:

The uniform kernel can be used to define weights so that they reflect the discretization of the continuous age variable A into G distinct groups. The estimated LSEM will provide parameter results that are identical to the multiple-group SEM if the same identification constraints are utilized.

3.4. Estimation of Conditional Means and Conditional Covariances

We now describe the estimation conditional covariances . In a practical implementation of the LSEM, researchers define a discrete grid of moderator values (i.e., the focal points) of the age variable A. In most applications, a grid of equidistant focal points is chosen (Hildebrandt et al. 2016). However, the grid of focal points could also be chosen in such a way that it mimics the empirical distribution of the moderator variable. For example, researchers might use empirical percentiles of the moderator variable (e.g., a grid of 10 focal points using the pth percentile for ).

To estimate conditional covariances at a focal point , we first compute the conditional mean function for . For a variable for , a local quadratic regression model is specified to estimate the conditional mean at focal point . That is, one minimizes:

The conditional mean estimate of is given by . Note that the minimization in (14) is a weighted least squares estimation problem for a linear regression (i.e., it is linear in model parameters) and closed formulae are available for estimating (see Fox 2016).

We now describe the estimation of conditional covariances . First, residuals are computed using local quadratic regression parameters defined in (14) as:

The estimate of the conditional covariances can be obtained by simple weighting or a local regression model.

In the weighting approach, one estimates:

where . This approach was advocated in Hildebrandt et al. (2009) and Hildebrandt et al. (2016).

In recently proposed local regression modeling (see Olaru et al. 2020), one also specifies a local quadratic regression estimation problem for the computation of the conditional covariance:

The estimate of the conditional covariance is given as .

Note that the estimation of the conditional mean function in (14) and the conditional covariance function in (17) is essentially equivalent, except for the case that the former uses the values as the dependent variable (i.e., indicator i), while the latter uses the product residual of variables for indicators i and j for the computation of the moderator-specific conditional covariance.

The steps can be repeated for all pairs of variables i and j () and all focal points (). The resulting estimated conditional covariance matrices at focal points are denoted by (). The estimated covariance matrices are not guaranteed to be positive definite. Therefore, the estimate might be slightly modified to determine a close matrix to that fulfills the positive definiteness property (Bentler and Yuan 2011).

LSEM estimation methods rely on the estimated conditional covariances. Three different estimation approaches are described in Section 3.5, Section 3.6, and Section 3.8.

3.5. Pointwise LSEM Estimation

Pointwise LSEM estimation relies on the idea that a separate SEM is fitted to each focal point . The resulting parameter estimates are plotted or analyzed as a function of the age variable A. More formally, based on the conditional covariance estimate , at each focal point , the following fitting function is minimized:

where denotes the minimizer of . Note that in (18), the distance between the empirical conditional covariance and the model-implied conditional covariance at the focal point is minimized. This approach was proposed by Hildebrandt et al. (2009, 2016). The minimization in (18) is not restricted to ML estimation and can also be applied to weighted least estimation in SEM (Browne 1974) or model-robust fitting functions (Robitzsch 2023a).

Model fit statistics, such as RMSEA, SRMR, or TLI, are computed at each value of the focal point. Note that pointwise LSEM estimation provides parameter curves across different values of the moderator variable.

The pointwise LSEM estimation method allows the parameter vector to vary freely across a. However, this flexibility sometimes hinders interpretation. Moreover, some researchers might prefer to impose invariance constraints for some of the model parameters (Leitgöb et al. 2023). For this reason, a joint LSEM estimation approach is proposed that is described in the next Section 3.6.

3.6. Joint LSEM Estimation with Invariance Constraints

While pointwise LSEM estimation tackles the estimation problem by successively and separately estimating an SEM at each of the focal points, joint LSEM estimation defines a single estimation function that involves conditional covariance matrices of all focal points. By doing so, the parameter vector can contain parameters that are specific to each focal point and parameters that do not vary for different values of age. The fitting function is defined as:

where is the minimizer of and is the sum of weights specific to each focal point . Note that (19) looks like a fitting function in multiple-group SEM estimation. However, subjects can enter multiple groups (i.e., focal points) because they enter the estimated conditional covariances multiple times according to the weights . Hence, the fitting function F in (19) will not be an ML fitting function and falls in the general class of M-estimation problems (Stefanski and Boos 2002).

The parameter vector can be decomposed into components , where contains parameters that are invariant across age, and for contain the parameters that vary across age values. The fitting function in (19) can then be rewritten as:

Note that the originally proposed pointwise estimation of the fitting function in (18) is equivalent to joint LSEM estimation in (20) if there does not exist invariant model parameters .

In joint LSEM estimation, global model fit statistics are computed. These fit statistics can be interpreted similarly as in multiple-group SEMs.

3.7. Estimation of DIF Effects

In joint LSEM estimation defined by the fitting function F in (20), some parameters (i.e., the parameter vector ) have invariance constraints across the age moderator variable. These invariance constraints ease interpretation and have the advantage of specifying parsimonious SEMs. However, researchers might be interested in what would happen if these invariance constraints were freed.

Violations of measurement invariance are referred to as differential item functioning (DIF) in item response theory literature (Mellenbergh 1989; Holland and Wainer 1993; Millsap 2011). Noninvariant parameters are referred to as DIF effects in this literature. We also use this notation and now discuss the estimation of DIF effects. DIF effects emerge if all estimated age-specific parameters () are held fixed in (20), and the entries of the parameter vector are allowed to vary across age. We denote the focal-point-specific estimates of DIF effects by . To this end, invariant parameters are replaced with , and the following fitting function F is minimized to obtain DIF effect estimates ():

Note that there are no invariant model parameters in (21), and the DIF effects at the focal point could alternatively be obtained by pointwise minimization of:

The estimated DIF effects can be plotted or analyzed as a function of the age moderator to investigate whether the invariance constraints are substantially violated.

3.8. Joint LSEM Estimation with More General Parameter Constraints and Relation to Moderated Nonlinear Factor Analysis

In this subsection, joint LSEM estimation is slightly generalized. The fitting function is the same as in (20), but constrains across focal-point-specific parameters are allowed. In particular, we discuss the implementation of linear, quadratic, and piecewise linear or quadratic parameter constraints.

Assume a parameter curve for a particular parameter. Furthermore, assume that the focal points are equidistant; that is, are equal for .

We first describe a linear parameter constraint. A linear function of a parameter for age values a is given by . The first derivative of f is constant, and it holds that . Hence, the equality in derivatives can be translated into equalities in first-order differences in model parameters:

These constraints can be added in multiple-group SEM in typical SEM software such as lavaan (Rosseel 2012).

A quadratic function of a parameter is given by . This function has constant second-order derivatives; that is, . Hence, second-order differences in parameter values are constant, which translates into:

Similarly, cubic parameter constrains can be implemented by recognizing that the third-order differences in parameter values are constant. A slightly more tedious constraint than (24) can be derived.

The linearity and quadratic constraints in (23) and (24) can also be applied if parameter curves are broken into segments. Hence, piecewise linear or quadratic functions can be applied.

Applying (piecewise) quadratic parameter functions in joint LSEM estimation can be interpreted as a kind of smoothing procedure to stabilize parameter estimation. Furthermore, the raw data are smoothed when computing the estimated conditional covariance matrices . Hence, researchers have two choices for how stabilizing parameter estimation in LSEM.

Notably, parameter constraints in joint LSEM estimation are estimates of MNFA in a particular case. If the age moderator values A has only values at the grid of equidistant focal points , then using the uniform kernel with is equivalent to MNFA with appropriate parameter constraints. Such an approach is described in Tucker-Drob (2009).

3.9. Parameter Curve Summaries and Significance Testing

Finally, we discuss the definition of summary statistics and the test of significant parameter variation across age. Let be a parameter curve of some model parameter estimated at focal points (). The parameter curve can be summarized by the mean and the standard deviation. Let be the discrete density of the age variable A at focal point and assume that . The (weighted) average value of the parameter curve (i.e., the mean) is given as:

In practice, an estimate of (25) is obtained by

The standard deviation of a parameter curve quantifies the variability of a parameter curve across age and is given by:

An estimate of the standard deviation defined in (27) is given by:

The sample estimate is always positive in finite samples if no invariance constraints are imposed. Hence, the naive standard deviation estimate in (28) will be positively biased. The bootstrap resampling procedure (Efron and Tibshirani 1994) can be used to reduce the bias in an estimate of . For LSEM, nonparametric bootstrap is implemented, which resamples subjects with replacement. The pointwise standard deviation of a parameter value across bootstrap samples can be used as a standard error estimate. A bias-corrected estimate of the standard deviation is obtained by:

where and is the finite-sample bias of that can be determined by bootstrap resampling (Efron and Tibshirani 1994). A t-statistic for significant variation in an estimated parameter curve can be computed as:

where is the standard deviation of values defined in (28) across different bootstrap samples. Note that this test procedure relies on a normal distribution assumption for the test statistic t, although it is probably an incorrect null distribution.

An alternative test for parameter variation is based on a Wald test. A covariance matrix estimate for the vector can be obtained from bootstrap. It is assumed that is multivariate normally distributed. Let be a matrix that implements equality constraints across the values of the parameter curve. The null hypothesis of no parameter variation is given by . Consider the Wald test statistic:

This statistic is chi-square distributed with degrees of freedom.

In previous work, a permutation test has been proposed for testing parameter variation (Hartung et al. 2022; Hildebrandt et al. (2009, 2016)). A permutation test simultaneously assesses the effects on all parameters. In contrast, the test based on the standard deviation (30) and the Wald test (31) relies on a fitted model without modifying all other model parameters. Hence, we tend to favor the latter statistics over the permutation test.

4. Implementation of Local Structural Equation Models in the Sirt Package

In this section, we discuss the implementation of LSEM in the R (R Core Team 2023) package sirt (Robitzsch 2023b). The CRAN version can be installed within R using utils::install.packages(’sirt’), while the most recent GitHub version can be installed employing devtools::install_github(’alexanderrobitzsch/sirt’). The four primary LSEM functions are sirt::lsem.estimate(), sirt::lsem.bootstrap(), sirt::lsem.test() and sirt::lsem.permutationTest(), which will be discussed below. The new CRAN release of sirt from August 2023 (sirt 3.13-228; https://cran.r-project.org/web/packages/sirt/ accessed on 11 August 2023) includes the functionality described in this article.

LSEM estimation in sirt provides a wrapper to the SEM package lavaan (Rosseel 2012). The model specification follows the lavaan syntax, which eases the familiarity with R code for LSEM estimation because lavaan seems to be the most popular open-source SEM software.

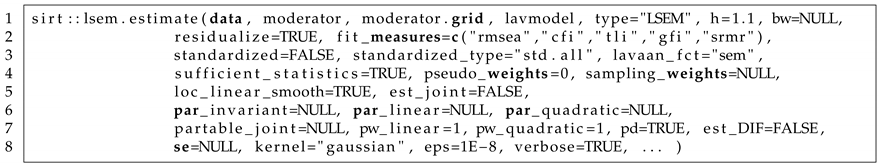

In Listing 1, the main function sirt::lsem.estimate() is displayed. This function is the main LSEM estimation function. We now discuss the most important arguments in detail.

| Listing 1. LSEM function sirt::lsem.estimate(). |

|

In data, a data frame must be provided by the user. The data frame should also include the moderator variable, whose variable name must be specified in moderator. The set of focal points can be defined as a vector moderator.grid. In lavmodel, lavaan syntax must be provided for estimating the LSEM. The default of the argument type is “LSEM”; that is, an LSEM is estimated. By choosing type=”MGM”, a multiple-group model with a discretized moderator variable is estimated. The bandwidth in sirt::lsem.estimate() can be specified by h or bw. The arguments are related through the formula:

where denotes the estimated standard deviation of the moderator variable A (i.e., the argument moderator). The logical argument residualize indicates whether local regression smoothing of the mean structure should be applied before estimating conditional covariances. The argument fit_measures defines fit statistics available in lavaan that should be included in the LSEM output. The logical argument standardized defines whether standardized parameters should appear in the LSEM output. The type of standardization is specified in standardized_type whose conventions follow the lavaan package. In lavaan_fct, the lavaan function is specified that is used for LSEM estimation. The default lavaan_fct="sem" refers to lavaan::sem(). Other options are "cfa" (for lavaan::cfa()) and "lavaan" (for lavaan::lavaan()). The logical argument sufficient_statistics indicates whether sufficient statistics (i.e., conditional mean and conditional covariances) should be used in estimation. Without missing data, ML can always rely on sufficient statistics. However, in the presence of missing data, conditional covariance matrices are estimated based on pairwise deletion. However, if full information maximum likelihood was utilized, the mean structure cannot be properly residualized. Hence, researchers are advised either to believe in missing data mechanisms close to missing completely at random that justify the usage of pairwise deletion or to apply an appropriate multiple imputation procedure prior to LSEM analysis if there are missing values in the dataset.

Users can also input a vector of sampling weights in sampling_weights. The logical argument loc_linear_smooth defines whether local quadratic regression (see (17)) should be applied in the estimation of conditional covariances. If the default loc_linear_smooth=TRUE is changed into loc_linear_smooth=FALSE, the weighting formula (16) is utilized. The logical argument est_joint indicates whether joint LSEM estimation (i.e., the default; see Section 3.6 or Section 3.8) or pairwise LSEM estimation (see Section 3.5) is applied. Invariant model parameters can be specified in the vector argument par_invariant. If there are some invariant parameters, joint LSEM estimation is automatically chosen (i.e., est_joint=TRUE). Linear or quadratic parameter constraints on model parameters (see Section 3.8) can be specified with par_linear and par_quadratic, respectively. The number of segments in piecewise linear or piecewise quadratic parameter constrained estimation can be specified with pw_linear or pw_quadratic. The default is that the constrains should be applied across all moderator values (i.e., there is only one segment of a piecewise linear or quadratic function). The argument partable_joint allows the input of a lavaan parameter table in joint estimation. This argument has the advantage that arbitrary parameter constraints can be specified by the user (e.g., additional equality constraints in piecewise quadratic functions). The logical argument pd indicates whether non-positive definite conditional covariance matrices should be smoothed to ensure positive definiteness. The logical argument est_DIF defines whether DIF effects should be estimated (see Section 3.7). Note that DIF effects can only be estimated if the LSEM model contains some invariant model parameters. The argument kernel allows the choice of the kernel function. Possible options are “gaussian”, “epanechnikov”, and “uniform”. Finally, the logical argument verbose indicates whether some output should be displayed in the R console when estimating the LSEM model.

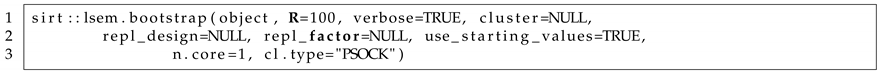

Listing 2 displays the LSEM bootstrapping function in the sirt package. An object object must be provided that is the output of the sirt::lsem.estimate() function. The number of bootstrap samples can be specified by the argument R. Bootstrap can also be applied at the level of higher-order units. For example, school classes, schools, or organizations can be bootstrapped instead of bootstrapping subjects. Such a kind of cluster bootstrap is required if there is an additional dependency structure in the data. In this case, users can define a vector of cluster units in cluster. The sirt::lsem.bootstrap() also allows more general replication designs such as jackknife, balanced repeated replication, or half sampling (Kolenikov 2010) by providing an matrix of resampling weights in the argument repl_design. In the case of more complex designs, a scale factor repl_factor must be defined by the user for a correct standard error computation. In the case of jackknife, it is 1 (or ), while it is in the case of bootstrap resampling. The bootstrap function sirt::lsem.bootstrap() is needed for computing the standard deviation statistic of parameter curves and its statistical inference (see Section 3.9). The sirt::lsem.bootstrap() function also allows an option for parallel computing. The number of employed cores can be specified by the argument n.core. The default is the use of one core which means that no parallel computing is applied in LSEM bootstrap estimation.

| Listing 2. LSEM function sirt::lsem.bootstrap(). |

|

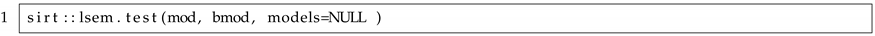

Listing 3 displays the LSEM function sirt::lsem.test() that performs the Wald tests for parameter variation (see Section 3.9). Instead of applying a test of the equality of a parameter curve on T focal points , the specification in models allows the test of significant regression parameters for a particular function. For example, a specification "FX=∼X1"=y∼m+I(m^2) tests whether the vector of the linear and the quadratic regression coefficient of the factor loading FX=∼X1 differs from . Note that sirt::lsem.test() requires the output of sirt::lsem.estimate() in mod and the output of the application of the bootstrap (or general resampling) of sirt::lsem.bootstrap() in bmod.

| Listing 3. LSEM function sirt::lsem.test(). |

|

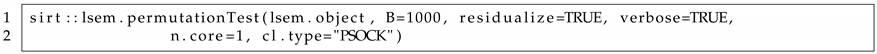

Listing 4 displays the LSEM function sirt::lsem.permutationTest() that carries out the permutation test for a statistical significance test for variation in parameter curves of the LSEM model Hildebrandt et al. (2009, 2016). In the permutation test, the values of the moderator variables are randomly resampled in the dataset to create a null distribution of parameter curves under the assumption of no relation to the moderator. The number of permutation samples can be specified in the argument B. As in sirt::lsem.bootstrap(), parallel computing can be requested by the number of cores in the argument n.core.

| Listing 4. LSEM function sirt::lsem.permutationTest(). |

|

5. Illustrative Datasets

In this section, we illustrate LSEM estimation with the R package sirt. Three simulated datasets involving six variables X1, X2, X3, Y1, Y2, and Y3 are used for illustration. The analysis model is a two-dimensional factor model with a simple loading structure, where the first factor FX is measured by X1, X2, and X3, and the second factor FY is measured by Y1, Y2, and Y3. The moderator variable age was assessed at 13 time points, referring to ages . An anonymous reviewer pointed out that using 13 time points would look like longitudinal data. However, we only used the 13 time points for illustratory purposes. For example, there could be 13 cross-sectional age groups that are assessed.

The population parameters of the factor model for each age and each of the three datasets DATA1, DATA2, and DATA3 can be found in the directory “POPPARS” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023). From these population parameters, 10,000 subjects were simulated at each of the 13 age points. The distribution at each age point exactly coincides with the specified conditional mean vector and the conditional covariance matrix (see, e.g., the lavaan::simulateData() function with the argument empirical=FALSE for a similar functionality). Data were simulated from a multivariate normal distribution. This simulation ensures that the population data involving 130,000 subjects (i.e., subjects) exactly follows the specified covariance structure. In DATA1, all model parameters except for residual variances were assumed noninvariant. In DATA2, only the structural parameters (i.e., factor correlation and factor variances) were noninvariant, while factor loadings and residual variances were assumed invariant. In DATA3, all measurement and structural model parameters were assumed invariant. The population datasets and the data-generating model parameters can be found in the directory “POPDATA” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023). The illustrative datasets used in this section were subsamples of 2000 subjects from datasets DATA1, DATA2, and DATA3. The main motivation for using a subsample of the data is to show that LSEM produces some variability in model parameter estimates even if the model parameter is invariant across the moderator values in the data-generating model. The subsamples were created by random sampling without replacement from the population datasets. These datasets can be found in the directory “ILLUSDATA” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023).

Listing 5 contains the specification of the LSEM model involving two factors FX and FY. In lines 5–10 in Listing 5, the lavaan syntax for the factor model is specified in the string lavmodel. Line 13 in Listing 5 defines the parameter names (i.e., the factor loadings of X2, X3, Y2, and Y3) that are assumed invariant across the values of the moderator variable age. Line 16 in Listing 5 specifies the vector of focal points at which the LSEM model should be estimated. Lines 19–21 in Listing 5 contain the R command for applying sirt::lsem.estimate(). Note that the invariant model parameters are provided with the argument par_invariant, DIF effects were estimated due to est_DIF=TRUE, and the bandwidth factor h was chosen as 1.1. Joint LSEM estimation was applied because invariance constraints among parameters were imposed. In line 25 in Listing 5, the random seed is fixed, which ensures that bootstrap resampling will not change when applying code at a different time. Line 26 in Listing 5 specifies bootstrapping using sirt::lsem.bootstrap(). In total, bootstrap samples were utilized. Note that the specified factor model in Listing 5 is misspecified for the dataset DATA1, but correctly specified for the datasets DATA2 and DATA3.

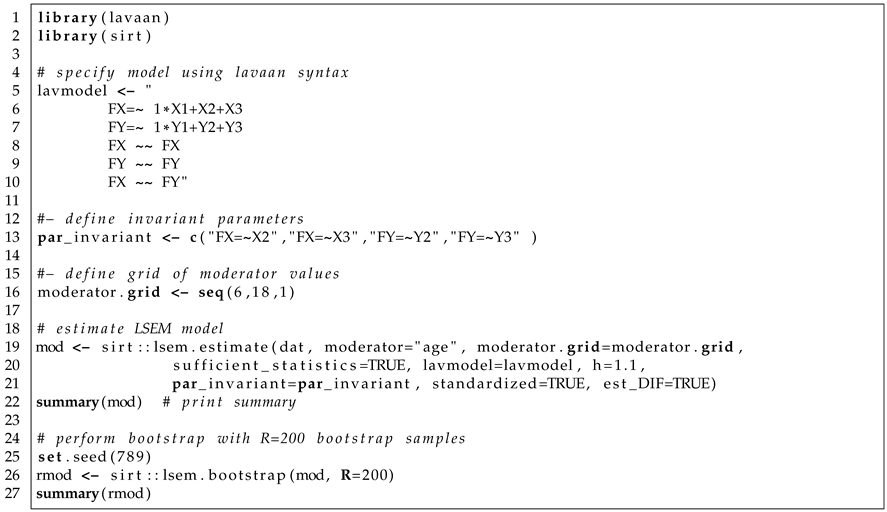

| Listing 5. Illustrative datasets: Specification of LSEM with invariant factor loadings in sirt::lsem.estimate() and subsequent bootstrap in sirt::lsem.bootstrap(). |

|

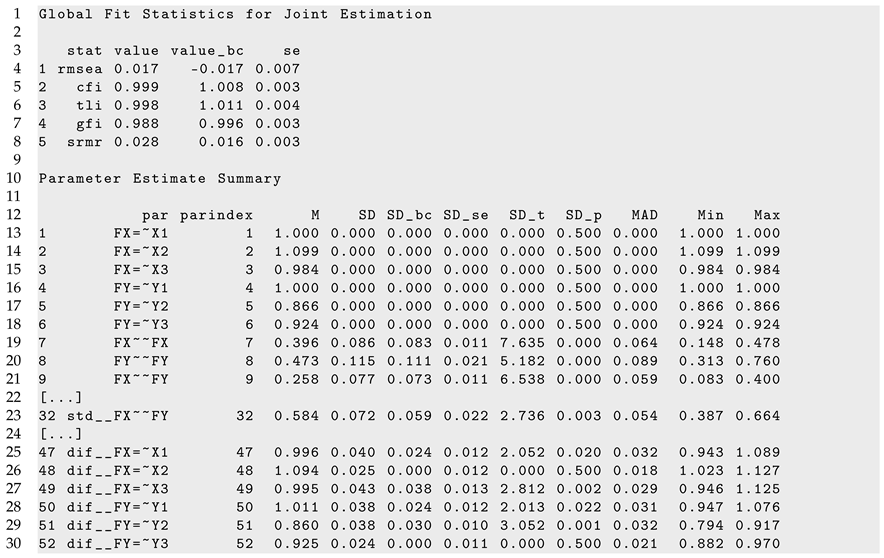

A part of R output of the sirt::lsem.bootstrap() function can be found in Listing 5. A slight misfit is detected in fit statistics RMSEA and SRMR. The CFI and TLI fit statistics are not indicative of the incorrect invariance assumption of factor loadings.

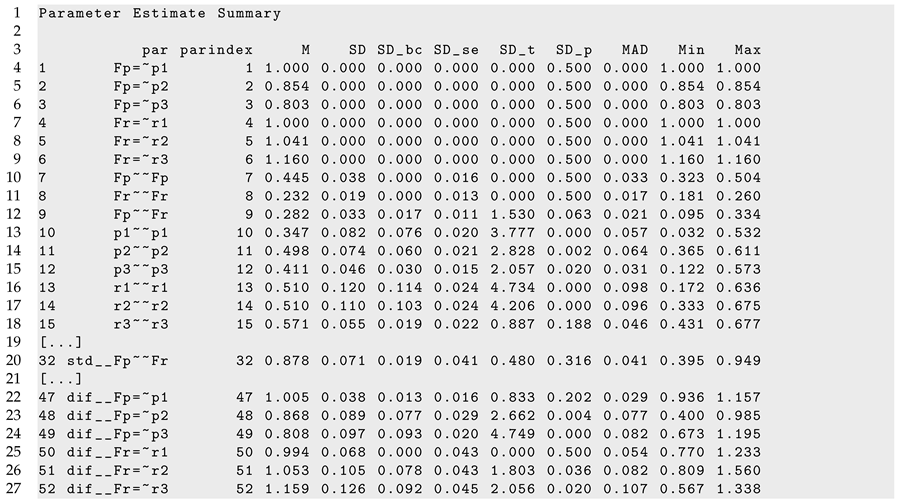

Figure 1 displays parameter curves for the two factor variances (i.e., FX∼∼FX and FY∼∼FY) and the factor correlation (i.e., std FX∼∼FY) for the illustrative dataset DATA1. From Listing 5, we see that the variance of FX had an average of 0.396 with significant parameter variation (, ), and FY had an average of 0.473 with significant parameter variation (, ). Moreover, the factor correlation had an average of 0.584 and also showed a significant parameter variation (, ).

Figure 1.

Illustrative datasets: Parameter curves for variances of the two factors (i.e., FX∼∼FX and FX∼∼FX) and the correlation of the two factors (std FX∼∼FY) for the illustrative dataset DATA1.

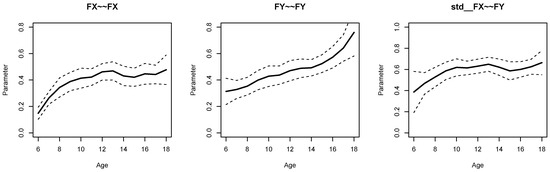

Figure 2 displays parameter curves for the two factor variances and the factor correlation for the illustrative dataset DATA3, which had no simulated parameter variation in these parameters. By comparing Figure 1 and Figure 2, it is evident that there is negligible parameter variation for the dataset DATA3 compared to the dataset DATA1.

Figure 2.

Illustrative datasets: Parameter curves for variances of the two factors (i.e., FX∼∼FX and FX∼∼FX) and the correlation of the two factors (std FX∼∼FY) for the illustrative dataset DATA3.

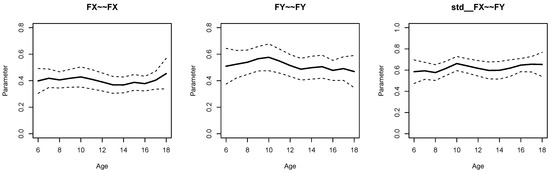

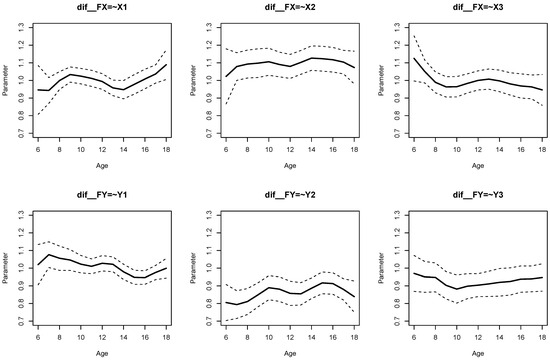

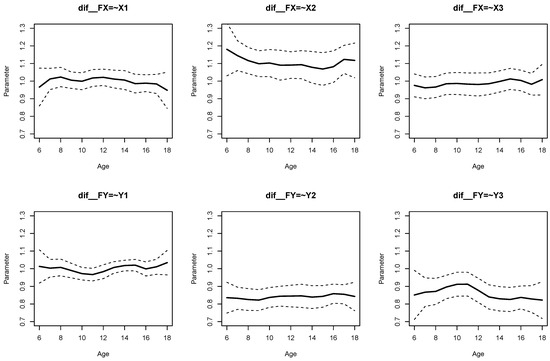

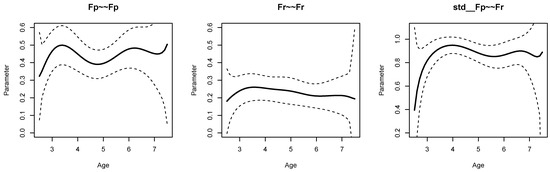

The parameter curves for DIF effects for factor loadings for datasets DATA1 and DATA2 are displayed in Figure 3 and Figure 4, respectively. For DATA1, factor loadings were simulated as noninvariant, while they were assumed invariant across age for DATA2. This fact is visible when comparing Figure 3 and Figure 4.

Figure 3.

Illustrative datasets: Parameter curves for DIF effects of factor loadings for the illustrative dataset DATA1.

Figure 4.

Illustrative datasets: Parameter curves for DIF effects of factor loadings for the illustrative dataset DATA2.

It can be seen from Listing 6 that DIF effects for factor loadings X1 (, ), X3 (, ), Y1 (, ), and Y2 (, ) had significant parameter variation for dataset DATA1, while they were not significant for loadings of X2 and Y3.

| Listing 6. Illustrative datasets: Part of the output of sirt::lsem.bootstrap() for the illustrative dataset DATA1. |

|

Finally, part of the R output of sirt::lsem.bootstrap() for dataset DATA3 is displayed in Listing 7. In accordance with the data-generating model, both factor variances, the factor correlation, and the DIF effects for factor loadings did not show significant parameter variation across age.

| Listing 7. Illustrative datasets: Part of the output of sirt::lsem.bootstrap() for the illustrative dataset DATA3. |

|

Note that a researcher will only have one dataset available for analysis. This section shows that LSEM model parameter output and figures are able to distinguish between situations of noninvariant and invariant model parameters. The standard deviation of a model parameter quantifies the variability of a model parameter across the values of the moderator.

For identification and interpretation reasons, it is useful to specify LSEM models with (some) invariant factor loadings. DIF effects reported in the LSEM output provide a post hoc assessment of the variability of parameter curves across the moderator values if parameter invariance was specified in the LSEM.

6. Simulation Study 1: Bias and RMSE

6.1. Method

In Simulation Study 1, the bias and the root mean square error (RMSE) of LSEM estimates of parameter curves were investigated. A one-factor model for three indicators, X1, X2, and X3, with a latent factor variable FX was specified. The data-generating model coincided with those from the illustrative datasets presented in Section 5. In contrast to Section 5, we only used the first three observed variables and considered a one-factor instead of a two-factor model in Simulation Study 1.

The population parameters can be found in the directory “POPPARS” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023). In this simulation, sample sizes N were chosen as 250, 500, 1000, 2000, and 4000. Instead of simulating data, random samples without replacement of sample size N were drawn from population datasets DATA1 (noninvariant factor loadings, noninvariant factor variances and correlations), resulting in the data-generating model (DGM) DGM1, DATA2 (invariant factor loadings, noninvariant factor variances and correlations) resulting in DGM2, and DATA3 (invariant factor loadings, invariant factor variances and correlations), resulting in DGM3. The population datasets that included 130,000 subjects each can be found in the directory “POPDATA” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023).

Joint LSEM estimation was carried out using invariant item loadings and bandwidth factor , 2, and 3, where the bandwidth was defined as . The Gaussian kernel function was used. We also compared the two choices of computing conditional covariances with local smoothing (SM; see (17)) and the weighting approach (16) (no smoothing; NSM). Moreover, we applied LSEM with a quadratic parameter constraint (“quad”) using a bandwidth factor . A grid of 13 focal points was chosen as .

We investigated the accuracy of the estimated parameter curves of the variance of the latent factor , the invariant factor loading of the indicator , and the DIF effect for factor loading of . Parameter accuracy was assessed by summarizing bias and RMSE of estimated parameter curves across the different age values. The bias of a parameter at a focal point is given by:

where is the parameter estimate of in the rth replication. The weighted absolute bias can then be defined as:

where denotes the proportion of values of the moderator variable that equal . The weighted root mean square error (weighted RMSE) is defined as:

which is a weighted point-wise RMSE summary statistic.

In total, 2500 replications (i.e., 2500 datasets were generated and analyzed in each condition of the simulation) were conducted. We used the R (R Core Team 2023) software for the entire analysis of the simulation and the sirt (Robitzsch 2023b) package for LSEM estimation.

6.2. Results

In Table 1, weighted absolute bias and weighted RMSE for the factor variance, the invariant factor loading of , and the DIF effect of factor loading of are presented.

Table 1.

Simulation Study 1: Weighted absolute bias and weighted root mean square error (RMSE) for the parameter curve for different model parameters as a function of sample size N and three data-generating models DGM1, DGM2 and DGM3.

It turned out that all three model parameters resulted in unbiased estimation for moderate or large sample sizes. For DGM1 or DGM2, the quadratic parameter constraint introduced some misspecification, which led to slight biases. Moreover, using the local quadratic smoothing approach SM for estimating conditional covariances instead of the weighted approach NM (e.g., no smoothing) resulted in a small error bias. Finally, biases increased with increasing the bandwidth factor h.

Notably, using local smoothing SM for conditional covariances added variability in terms of RMSE compared to NM. Regarding RMSE, one could conclude that seems preferable to or (see also Hildebrandt et al. 2016).

Overall, the findings of Simulation Study 1 demonstrated that joint LSEM estimation resulted in approximately unbiased parameter estimates. The decrease in RMSE values for increasing sample sizes also indicated that parameter estimates are consistent. Notably, the recommendation of using the bandwidth factor in pointwise LSEM (Hildebrandt et al. 2016) also transfers to the joint LSEM estimation method.

7. Simulation Study 2: Estimation of Variability of Model Parameters and Statistical Significance Tests

7.1. Method

In Simulation Study 2, the bias of standard deviation statistics for parameter variation and the properties of significance tests for parameter variation are investigated. The same three data-generating models DGM1, DGM2, and DGM3 as in Simulation Study 1 (see Section 6.1) were utilized.

The chosen sample sizes in this simulation were , 1000, 2000, and 4000. As in Simulation Study 1, samples of sample size N were drawn without replacement from population datasets DATA1, DATA2, and DATA3 for DGM1, DGM2, and DGM3, respectively. The population datasets that included 130,000 subjects can be found in the directory “POPDATA” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023).

As in Simulation Study 1, a one-factor model with indicators X1, X2, and X3 was specified. Throughout all simulation conditions, a bandwidth factor of was chosen. The bias of the two standard deviation estimators and defined in (28) and (29) was assessed. Significance testing for parameter variation was based on the standard deviation (see (30)), which uses a normal distribution approximation and the Wald test (see (31)), which uses a chi-square distribution as a null distribution. Statistical significance tests were performed with significance levels of 0.05 and 0.01. The bias of the standard deviation variability statistics and significance tests of parameter variation was computed for the variance of the latent factor, the three DIF effects of the factor loadings, and the three residual variances.

In total, 2500 replications were conducted in all simulation conditions. The R software (R Core Team 2023) was used for analyzing this simulation study, and the R package sirt (Robitzsch 2023b) was employed for LSEM estimation and significance testing.

7.2. Results

In Table 2, the bias of raw and bias-corrected (“bc”) estimates of the standard deviation variability measure are presented. In DGM1, all parameters have nonvanishing values for the population dataset DATA1. In this case, the raw SD estimate showed some slight positive bias for sample sizes and 1000. The bias-corrected estimates were generally negatively biased, although the biases were not very large. In DGM2, only the variance of the latent factor F had a true parameter variation larger than 0. In this situation, raw estimates were approximately unbiased, while the bias-corrected estimates were negatively biased. If there was no true parameter variation, such as for DIF effects or residual variances in DGM2 or all parameters in DGM3, the bias-corrected estimates were less biased than the raw standard deviation estimate.

Table 2.

Simulation Study 2: Bias of raw and bias-corrected estimators of the standard deviation for the parameter curve for different model parameters as a function of sample size N and three data-generating models DGM1, DGM2 and DGM3.

Overall, one could say that for smaller values of true variability, the positive bias in the raw SD estimate was larger than the underestimation of the bias-corrected SD estimate. An improved SD statistic might be obtained by computing some weighted average of the raw and the bias-corrected estimate.

Table 3 presents type I error and power rates for the different LSEM model parameters. Significance testing based on the SD statistics had inflated type I error rates. If the nominal level was chosen as 1%, the empirical error rate was about 5%. Moreover, the Wald statistic had type I error rates lower than the nominal level in many simulation conditions. Nevertheless, significance testing based on the standard deviation has substantially more statistical power. If a target nominal significance level for the SD test statistic were 5%, it is advised to use a significance level of 0.01.

Table 3.

Simulation Study 2: Type I and power rates for the significance test for variability in a parameter curve for the two test statistics based on (SD) and the Wald test (WA) as a function of sample size N and three data-generating models DGM1, DGM2 and DGM3.

8. Empirical Example: A Reanalysis of SON-R

8.1. Data

According to the age differentiation hypothesis, cognitive abilities become more differentiated with increasing age during childhood. Hülür et al. (2011) used data from the German standardization of the SON-R 2−7 intelligence test to examine age-related differentiation of cognitive abilities from age 2 to age 7. The SON-R 2−7 intelligence test is a nonverbal intelligence test for children and consists of six indicators (i.e., six subtests). The SON-R 2−7 test contains two subscales measured by three indicators each. The performance subscale (with factor Fp) contains indicators mosaics (p1), puzzles (p2), and patterns (p3). The reasoning subscale (with factor Fr) contains the indicators categories (r1), analogies (r2), and situations (r3).

Unfortunately, the SON-R dataset is not publicly available, and the authors of this paper cannot publicly share the dataset on the internet. To replicate the LSEM analysis of this example, we generated a synthetic dataset of the SON-R 2−7 data based on the original dataset used in Hülür et al. (2011). The same sample size of children was simulated. In the synthetic data generation, we relied on a recently proposed method by Jiang et al. (2021) (see also Grund et al. 2022, Nowok et al. 2016 or Reiter 2023) that combines the distinct approaches of simulating a dataset based on a known distribution and the approach of adding to noise to original data to prevent data disclosure or person identification. The noisy versions of the original dataset were simulated with a reliability of 0.95 (Grund et al. 2022), and quadratic relations among variables were allowed. The data synthesis model was separately carried out in 18 groups of children (i.e., in 9 age groups for male and female children, respectively). The values of the age and gender variables were held fixed in the analysis meaning that these demographic variables had the same distribution in the synthetic data as in the original data. In total, 50.8% of the children in the sample was male. The synthetic data and syntax for synthetic data generation can be found in the directory “SON-R” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023).

The indicator variables were linearly transformed such that the mean equaled zero and the standard deviation equaled one for children aged between 4.0 and 6.0 years. This is an arbitrary choice and only affects the scaling of the variables. The assessment of model parameter heterogeneity in the LSEM is independent of this choice. Alternatively, one might also standardize the indicator variables for children in the total sample with ages between 2.5 and 7.5 years.

A two-dimensional CFA model involving the performance and the reasoning factor was specified in an LSEM analysis. The mean structure remained unmodeled because the primary goal of this analysis was to investigate the age differentiation hypothesis. For model identification, the factor loadings were assumed as invariant across age, and the first loading of both scales (i.e., loadings of p1 and r1) were fixed at one. In accordance with Hildebrandt et al. (2016) and the findings of Simulation Study 1, the bandwidth factor of was chosen, resulting in a bandwidth , where is the estimated standard deviation of the age variable. Because the LSEM model involved invariance constraints among parameters, a joint estimation approach was employed. For statistical inference and the test of parameter variation, bootstrap samples were drawn. Replication syntax can also be found in the directory “SON-R” at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023).

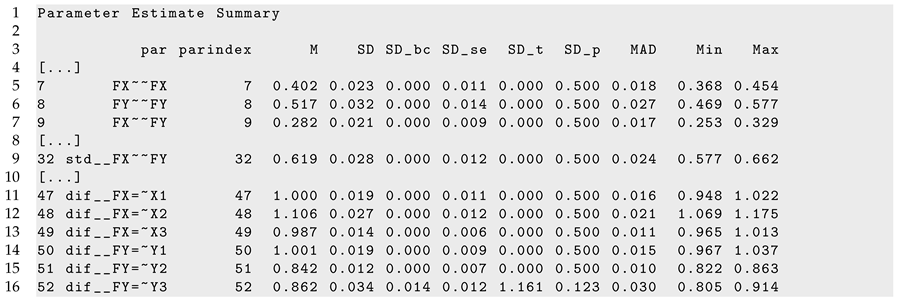

8.2. Results

Figure 5 displays the histogram of the age variable. The age of children ranged between 2.44 and 7.72 years, with a mean of 4.89 and a standard deviation of 1.23. The histogram indicated that the intended age range between 2.5 and 7 years of the SON-R 2−7 test was approximately uniformly distributed.

Figure 5.

SON-R example: Histogram for moderator age.

The estimated LSEM model had an acceptable model fit regarding typical model fit effect sizes. The fit statistics without bias correction were RMSEA = 0.061, CFI = 0.952, TLI = 0.960, GFI = 0.963, and SRMR = 0.055.

In Listing 8, parts of the LSEM output of lsem.bootstrap() are displayed. According to the specified model, the parameter variation (i.e., SD and SD_bc) for factor loadings (i.e., Fp=∼p1, …, F3=∼r3) was zero because the parameters were assumed invariant across age.

| Listing 8. SON-R example: Part of the output of lsem.bootstrap() function. |

|

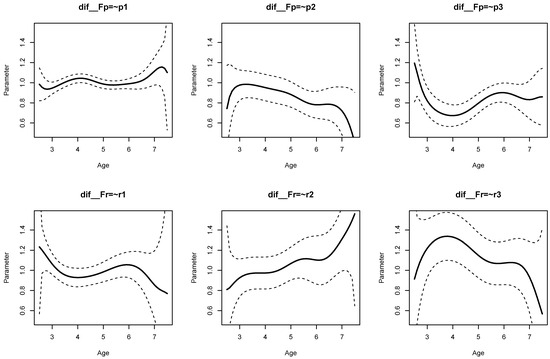

The age differentiation hypothesis refers to the variances of the performance scale (i.e., Fp∼∼Fp), the variance of the reasoning scale (i.e., Fr∼∼Fr), and the correlation of both factors (i.e., std Fp∼∼Fr). Figure 6 displays the parameter curves with confidence intervals for the two variances and the correlation. From the R output presented in Listing 8, it can be seen that the variances parameter curves did not show significant parameter variation, and the bias-corrected standard deviation estimate SD_bc was 0.000. The correlation between the performance and the reasoning scale was 0.878 on average, with a small bias-corrected standard deviation estimate of 0.019 that turned out to be nonsignificant (p = 0.316). Hence, there was no evidence for the age differentiation hypothesis in the SON-R dataset.

Figure 6.

SON-R example: Parameter curves for variances of performance (Fp∼∼Fp) and reasoning (Fr∼∼Fr) and the correlation of performance and reasoning (std Fp∼∼Fr).

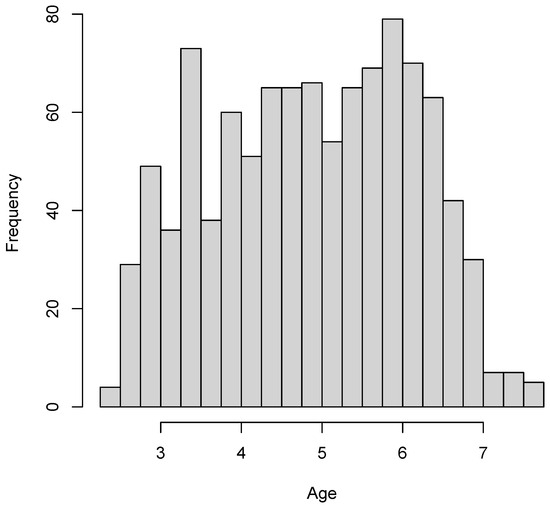

Figure 7 displays the parameter curves of the DIF effects of the factor loadings. The corresponding parameters for DIF effects can be found in lines 47 to 52 in Listing 8 (i.e., parameters dif Fp=∼p1, …, dif Fr=∼r3). There was substantial parameter variation in terms of the bias-corrected standard deviation SD_ bc for the loadings of p2 (, ), p3 (, ), r2 (, ), and r3 (, ).

Figure 7.

SON-R example: Parameter curves for DIF effects of factor loadings for performance (latent variable Fp) and reasoning (latent variable Fr).

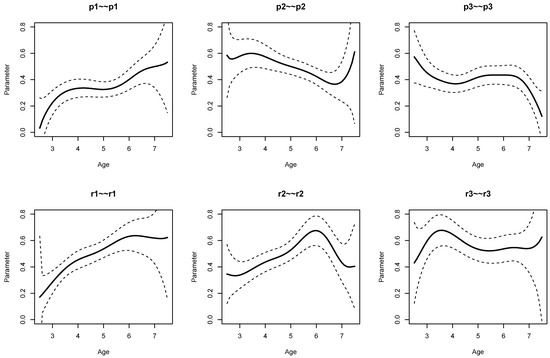

Finally, residual variances are displayed in Figure 8. From the results from Listing 8, it is evident that residual variances of p1 (, ), p2 (, ), r1 (, ), and r2 (, ) were statistically significant at the 0.01 significance level.

Figure 8.

SON-R example: Parameter curves for residual variances.

Note that Hülür et al. (2011) used a pointwise LSEM approach instead a joint LSEM estimation approach. The identification of parameters in the covariance structure of factors was achieved in Hülür et al. (2011) by the constraint that the pointwise average of factor loadings equaled 1. Due to the different estimation approaches, it is expected that there are slight differences between our joint LSEM estimation approach and the original analysis in Hülür et al. (2011). The parameter curve of the correlation between the performance and the reasoning factors was similar in both analyses, with the exception that the factor correlation for small age values was much lower in the joint estimation approach, as displayed in Figure 6.

An anonymous reviewer wondered whether the factor correlation could be meaningfully interpreted if factor loadings did not show invariance across the moderator values. We argued elsewhere that measurement invariance would be a helpful but not a necessary condition for a meaningful interpretation of a factor correlation or a factor variance (see Robitzsch and Lüdtke 2023). In fact, a violation of measurement invariance only implies that results would change if a subset of indicators was used in the factor model. Because the SON-R instrument is held fixed in test administration and statistical analysis, this property of item selection invariance is not required. Of course, any identification constraint on factor loadings must be imposed to identify a factor correlation. The choice of identification constraint is somehow arbitrary. It could be invariance of all factor loadings, invariance of loadings of a subset of indicators, or a pointwise constraint of the average loadings (i.e., the average loading should be 1 for all indicators of a factor).

9. Discussion

In this article, we discussed the implementation of LSEM in the R package sirt. Joint LSEM estimation and two different significance tests for a test of parameter variation were introduced and evaluated through two simulation studies.

Simulation Study 1 demonstrated that the joint LSEM estimation method can be successfully applied to structural equation models whose parameters vary across different values of the moderator variable. It turned out that the bandwidth factor can generally be recommended as a default choice. Notably, LSEM model parameters can be quite variable for small () or moderate sample sizes (). In Simulation Study 2, two significance testing approaches for constant parameter curves were investigated: a test statistic based on the standard deviation of a parameter curve and a Wald-type test statistic. Both testing approaches rely on bootstrap samples for statistical inference. The standard-deviation-based test statistics had a higher power than the Wald test-type test statistic, but also came with an inflated type-I error rate. It is recommended to use the significance test based on the standard deviation with a significance level of if a nominal significance level of is required.

The application of LSEM in applied research can be regarded more as an exploratory than a confirmatory statistical method (Jacobucci 2022). Functional forms of parameter curves obtained with LSEM can be validated in other samples or future studies with more confirmatory approaches, such as moderated nonlinear factor analysis. We would like to emphasize that sufficiently large sample sizes are required in LSEM in order to allow a reliable interpretation of the obtained nonlinear parameter curves. Moreover, the true variability in parameter curves must be sufficiently large to have enough power to statistically detect the significant parameter variability. A statistical significance test on parameter curve regression coefficients in a moderated nonlinear factor analysis might have more power than a test based on the nonparametric LSEM method. Finally, moderated nonlinear factor analysis, if estimated by maximum likelihood, allows likelihood ratio tests for testing among nested models or using information criteria for model comparisons.

In this article, the moderator variable was exclusively age and a bounded variable. There might be applications in which the moderator differs from age, such as unbounded self-concept factor variables or ability values obtained from item response models (Basarkod et al. 2023). Because the metric of such variables is often arbitrary, it is advised to transform such moderators into a bounded metric. For example, the percentage ranks of an unbounded moderator variable could be utilized to obtain a bounded moderator variable.

If the moderator variable is an error-prone variable such as a factor variable or a scale score, an expected a posteriori (EAP) factor score estimate can be used as a moderator to obtain unbiased estimates of LSEM model parameters (Bartholomew et al. 2011).

As explained in Section 4, datasets with missing values should either be handled with pairwise deletion methods for computing sufficient statistics (i.e., the conditional covariance matrices) in LSEM or should be multiply imputed. The imputation model should be flexibly specified to represent the complex associations modeled with LSEM. For example, the moderator variable could be discretized into 5 or 10 distinct groups, and the resulting datasets should be separately imputed in the separate subdatasets. Statistical inference should be carried out that involves the multiply imputed datasets (Little and Rubin 2002).

Finally, we only discussed LSEM in the case of one moderator variable. With more than one moderator variable (Hartung et al. 2018), moderated nonlinear factor analysis might be easier to estimate because multivariate kernel functions for LSEM are difficult to estimate with sparse data.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Datasets and R code is available as supplementary material at https://osf.io/puaz9/?view_only=63ffb2fd30f5400e89c59d03366bf793 (accessed on 3 June 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| CFA | confirmatory factor analysis |

| DGM | data-generating model |

| DIF | differential item functioning |

| LSEM | local structural equation model |

| ML | maximum likelihood |

| MNFA | moderated nonlinear factor analysis |

| SEM | structural equation model |

References

- Allemand, Mathias, Gabriel Olaru, and Patrick L. Hill. 2021. Age-related psychometrics and differences in gratitude and future time perspective across adulthood. Personality and Individual Differences 182: 111086. [Google Scholar] [CrossRef]

- Allemand, Mathias, Gabriel Olaru, and Patrick L. Hill. 2022. Gratitude and future time perspective during the COVID-19 pandemic: Effects of age and virus worry. The Journal of Positive Psychology 17: 819–31. [Google Scholar] [CrossRef]

- Arnold, Manuel, Andreas M. Brandmaier, and Manuel C. Voelkle. 2021. Predicting differences in model parameters with individual parameter contribution regression using the R package ipcr. Psych 3: 360–85. [Google Scholar] [CrossRef]

- Arnold, Manuel, Daniel L. Oberski, Andreas M. Brandmaier, and Manuel C. Voelkle. 2020. Identifying heterogeneity in dynamic panel models with individual parameter contribution regression. Structural Equation Modeling: A Multidisciplinary Journal 27: 613–28. [Google Scholar] [CrossRef]

- Bartholomew, David J., Martin Knott, and Irini Moustaki. 2011. Latent Variable Models and Factor Analysis: A Unified Approach. New York: Wiley. [Google Scholar] [CrossRef]

- Basarkod, Geetanjali, Herbert W. Marsh, Baljinder K. Sahdra, Philip D. Parker, Jiesi Guo, Theresa Dicke, and Oliver Lüdtke. 2023. The dimensionality of reading self-concept: Examining its stability using local structural equation models. Assessment 30: 873–90. [Google Scholar] [CrossRef] [PubMed]

- Bates, Timothy C., Hermine Maes, and Michael C. Neale. 2019. umx: Twin and path-based structural equation modeling in R. Twin Research and Human Genetics 22: 27–41. [Google Scholar] [CrossRef]

- Bauer, Daniel J. 2017. A more general model for testing measurement invariance and differential item functioning. Psychological Methods 22: 507–26. [Google Scholar] [CrossRef]

- Bentler, Peter M., and Ke-Hai Yuan. 2011. Positive definiteness via off-diagonal scaling of a symmetric indefinite matrix. Psychometrika 76: 119–23. [Google Scholar] [CrossRef]

- Boker, Steven, Michael Neale, Hermine Maes, Michael Wilde, Michael Spiegel, Timothy Brick, Jeffrey Spies, Ryne Estabrook, Sarah Kenny, Timothy Bates, and et al. 2011. OpenMx: An open source extended structural equation modeling framework. Psychometrika 76: 306–17. [Google Scholar] [CrossRef]

- Bollen, Kenneth A. 1989. Structural Equations with Latent Variables. New York: Wiley. [Google Scholar] [CrossRef]

- Bollen, Kenneth A., and Walter R. Davis. 2009. Two rules of identification for structural equation models. Structural Equation Modeling: A Multidisciplinary Journal 16: 523–36. [Google Scholar] [CrossRef]

- Bolsinova, Maria, and Dylan Molenaar. 2018. Modeling nonlinear conditional dependence between response time and accuracy. Frontiers in Psychology 9: 1525. [Google Scholar] [CrossRef]

- Bolsinova, Maria, and Dylan Molenaar. 2019. Nonlinear indicator-level moderation in latent variable models. Multivariate Behavioral Research 54: 62–84. [Google Scholar] [CrossRef] [PubMed]

- Boos, Dennis D., and Leonard A. Stefanski. 2013. Essential Statistical Inference. New York: Springer. [Google Scholar] [CrossRef]

- Brandt, Naemi D., Johanna Drewelies, Sherry L. Willis, K. Warner Schaie, Nilam Ram, Denis Gerstorf, and Jenny Wagner. 2022. Beyond big five trait domains: Stability and change in personality facets across midlife and old age. Journal of Personality. Epub ahead of print. [Google Scholar] [CrossRef] [PubMed]

- Bratt, Christopher, Dominic Abrams, Hannah J. Swift, Christin-Melanie Vauclair, and Sibila Marques. 2018. Perceived age discrimination across age in Europe: From an ageing society to a society for all ages. Developmental Psychology 54: 167–80. [Google Scholar] [CrossRef] [PubMed]

- Breit, Moritz, Julian Preuß, Vsevolod Scherrer, Tobias Moors, and Franzis Preckel. 2023. Relationship between creativity and intelligence: A multimethod investigation of alternative theoretical assumptions in two samples of secondary school students. Gifted Child Quarterly 67: 95–109. [Google Scholar] [CrossRef]

- Breit, Moritz, Martin Brunner, and Franzis Preckel. 2020. General intelligence and specific cognitive abilities in adolescence: Tests of age differentiation, ability differentiation, and their interaction in two large samples. Developmental Psychology 56: 364–84. [Google Scholar] [CrossRef]

- Breit, Moritz, Martin Brunner, and Franzis Preckel. 2021. Age and ability differentiation in children: A review and empirical investigation. Developmental Psychology 57: 325–46. [Google Scholar] [CrossRef]

- Breit, Moritz, Martin Brunner, Dylan Molenaar, and Franzis Preckel. 2022. Differentiation hypotheses of intelligence: A systematic review of the empirical evidence and an agenda for future research. Psychological Bulletin 148: 518–54. [Google Scholar] [CrossRef]

- Briley, Daniel A., K. Paige Harden, and Elliot M. Tucker-Drob. 2015b. Genotype × cohort interaction on completed fertility and age at first birth. Behavior Genetics 45: 71–83. [Google Scholar] [CrossRef]

- Briley, Daniel A., K. Paige Harden, Timothy C. Bates, and Elliot M. Tucker-Drob. 2015a. Nonparametric estimates of gene × environment interaction using local structural equation modeling. Behavior Genetics 45: 581–96. [Google Scholar] [CrossRef]

- Browne, Michael W. 1974. Generalized least squares estimators in the analysis of covariance structures. South African Statistical Journal 8: 1–24. Available online: https://bit.ly/3yviejm (accessed on 3 June 2023). [CrossRef]

- Browne, Michael W., and Gerhard Arminger. 1995. Specification and estimation of mean-and covariance-structure models. In Handbook of Statistical Modeling for the Social and Behavioral Sciences. Edited by Gerhard Arminger, Clifford C. Clogg and Michael E. Sobel. Boston: Springer, pp. 185–249. [Google Scholar] [CrossRef]

- Cox, Simon R., Stuart J. Ritchie, Elliot M. Tucker-Drob, David C. Liewald, Saskia P. Hagenaars, Gail Davies, Joanna M. Wardlaw, Catharine R. Gale, Mark E. Bastin, and Ian J. Deary. 2016. Ageing and brain white matter structure in 3513 UK Biobank participants. Nature Communications 7: 1–13. [Google Scholar] [CrossRef]

- Curran, Patrick J., James S. McGinley, Daniel J. Bauer, Andrea M. Hussong, Alison Burns, Laurie Chassin, Kenneth Sher, and Robert Zucker. 2014. A moderated nonlinear factor model for the development of commensurate measures in integrative data analysis. Multivariate Behavioral Research 49: 214–31. [Google Scholar] [CrossRef] [PubMed]

- de Mooij, Susanne M. M., Richard N. A. Henson, Lourens J. Waldorp, and Rogier A. Kievit. 2018. Age differentiation within gray matter, white matter, and between memory and white matter in an adult life span cohort. Journal of Neuroscience 38: 5826–36. [Google Scholar] [CrossRef]

- Dong, Yixiao, and Denis Dumas. 2020. Are personality measures valid for different populations? A systematic review of measurement invariance across cultures, gender, and age. Personality and Individual Differences 160: 109956. [Google Scholar] [CrossRef]

- Dutton, Edward, and Emil Kirkegaard. 2022. The negative religiousness-IQ nexus is a Jensen effect on individual-level data: A refutation of Dutton et al.’s “The myth of the stupid believer”. Journal of Religion and Health 61: 3253–75. [Google Scholar] [CrossRef] [PubMed]

- Efron, Bradley, and Robert J. Tibshirani. 1994. An Introduction to the Bootstrap. Boca Raton: CRC Press. [Google Scholar] [CrossRef]