A Psychometric Perspective on the Associations between Response Accuracy and Response Time Residuals

Abstract

1. Introduction

1.1. Explaining RAR/RTR Statistical Dependencies

1.2. Attending to Item-Specific Factors in a Conceptual Psychometric Model

2. Materials and Methods

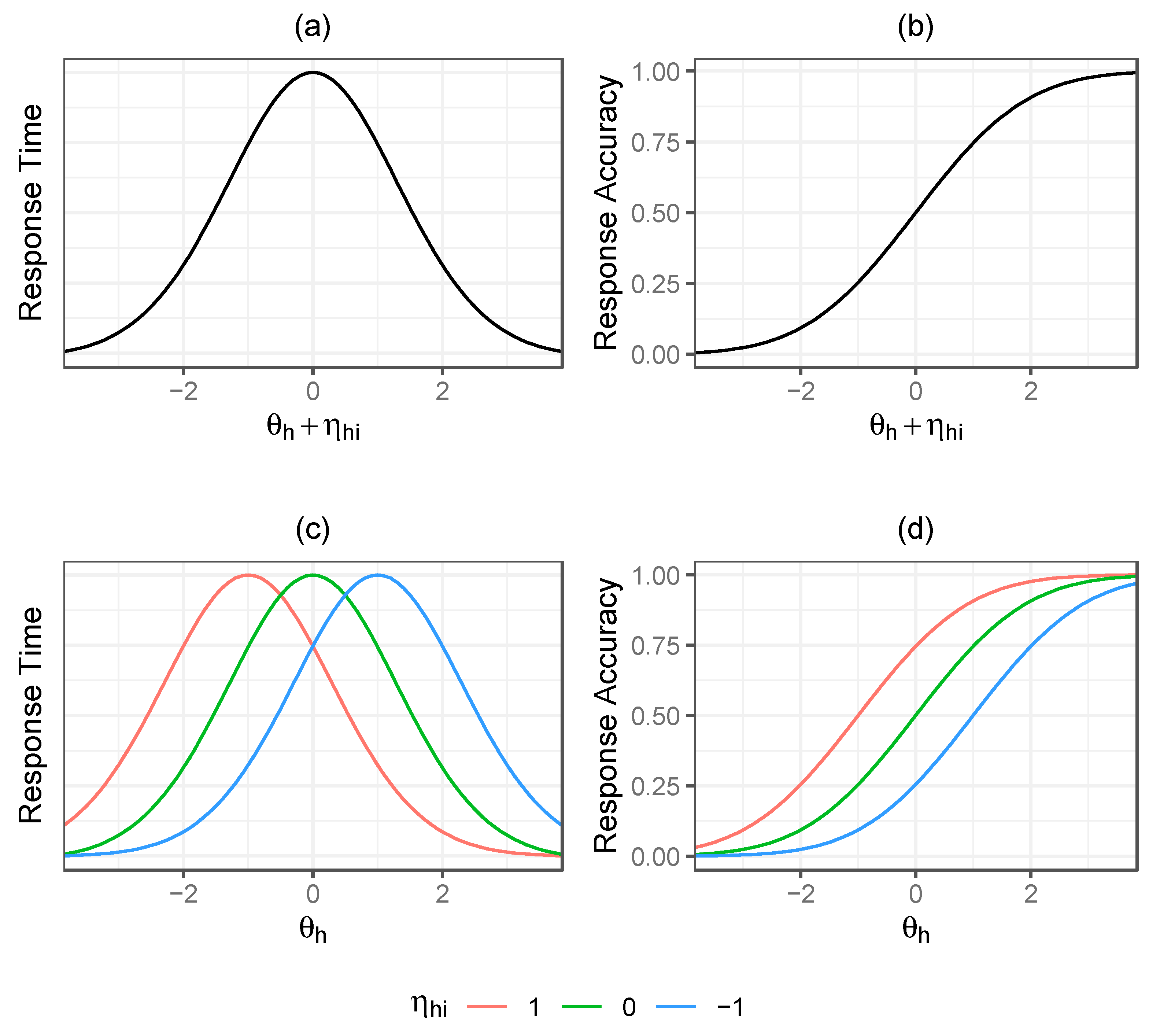

2.1. Distinguishing the General and Item-Specific Components of Proficiency Underlying Response Accuracy

2.2. Relating the General and Item-Specific Components of Proficiency to Response Times

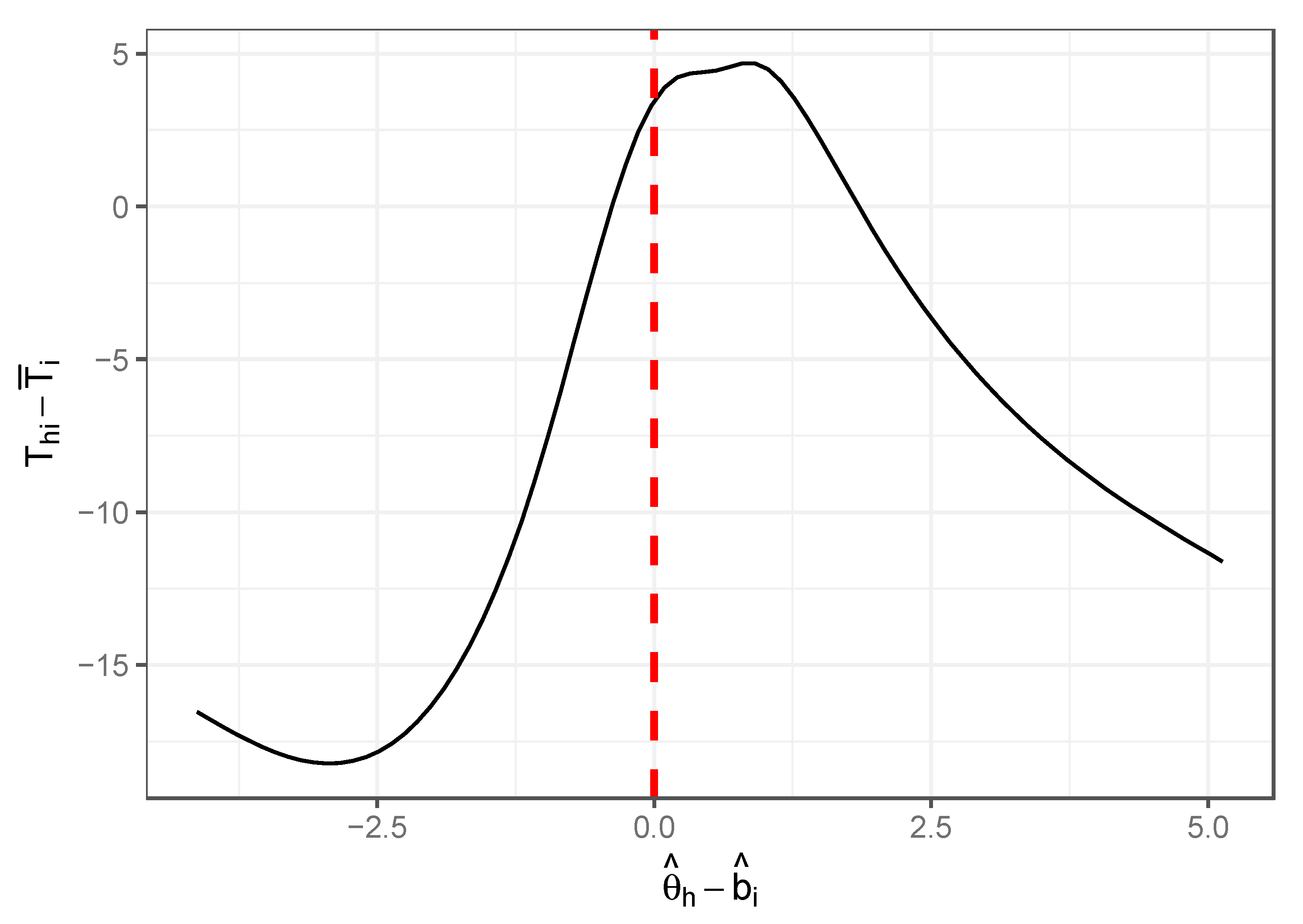

2.3. Empirical Examination of Response Time against Proficiency

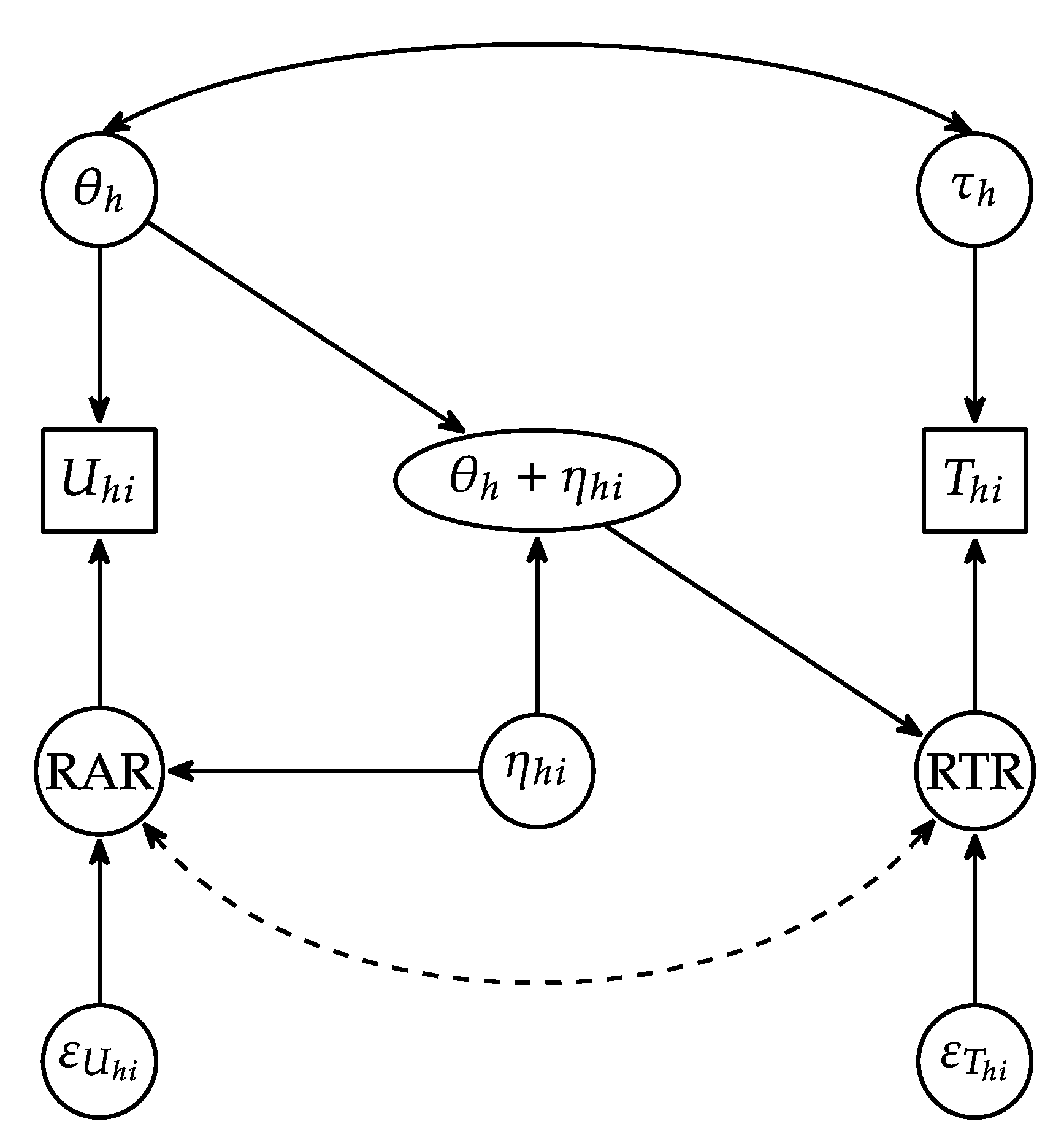

2.4. Resulting Psychometric Model Explaining RAR/RTR Dependence

2.5. Simulation Illustration

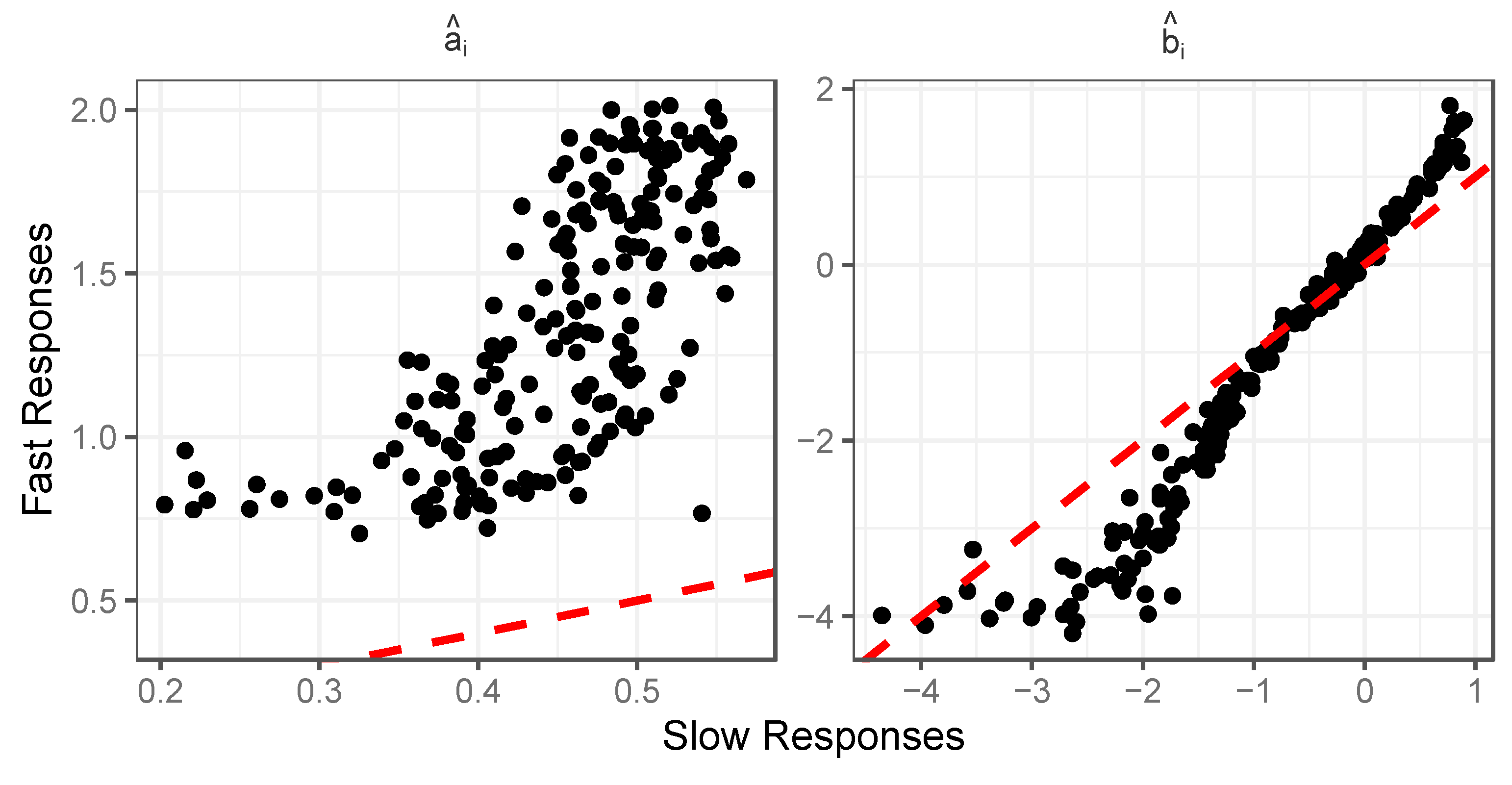

2.6. Applying Response Mixture Analysis to the Simulated Data

3. Results

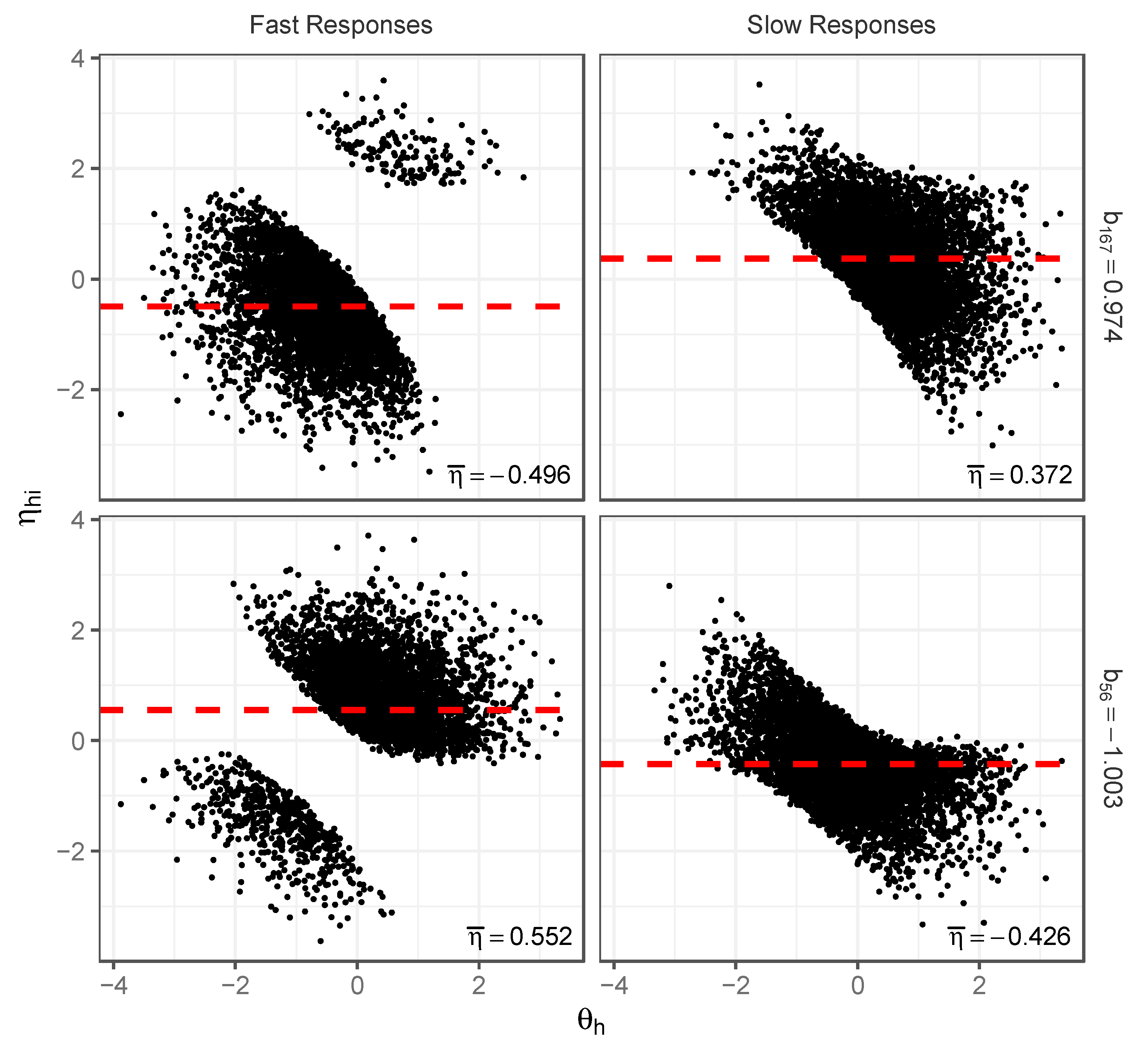

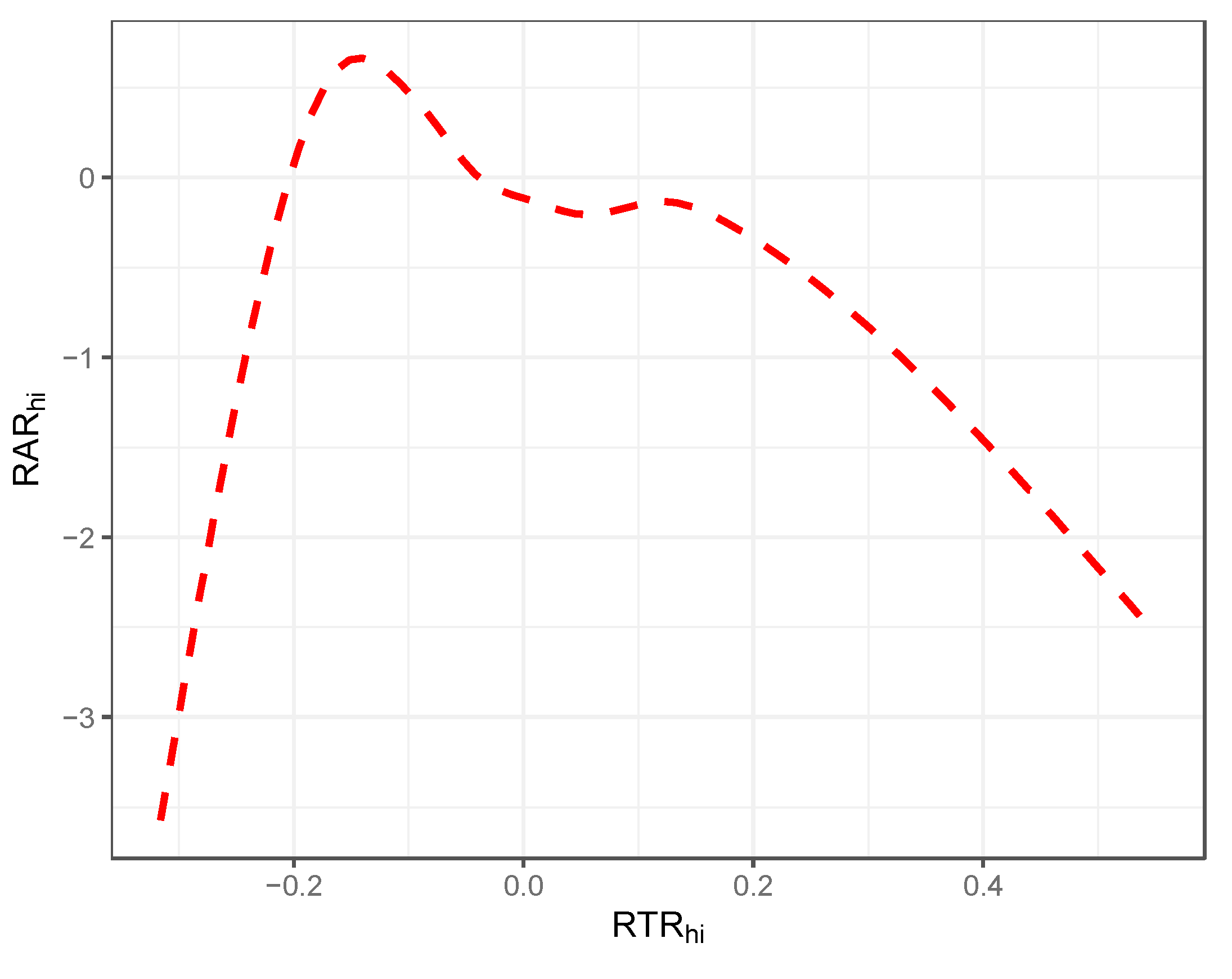

3.1. Response Mixture Analysis Simulation Results

3.2. Simulation Results That Replicate the RAR/RTR Patterns in De Boeck and Rijmen (2020)

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bolsinova, Maria, and Dylan Molenaar. 2018. Modeling Nonlinear Conditional Dependence between Response Time and Accuracy. Frontiers in Psychology 9: 1525.

- Bolsinova, Maria, and Jesper Tijmstra. 2018. Improving Precision of Ability Estimation: Getting More from Response Times. British Journal of Mathematical and Statistical Psychology 71: 13–38.

- Bolsinova, Maria, Jesper Tijmstra, Dylan Molenaar, and Paul De Boeck. 2017a. Conditional Dependence between Response Time and Accuracy: An Overview of Its Possible Sources and Directions for Distinguishing between Them. Frontiers in Psychology 8: 202.

- Bolsinova, Maria, Paul De Boeck, and Jesper Tijmstra. 2017b. Modelling Conditional Dependence Between Response Time and Accuracy. Psychometrika 82: 1126–48.

- Chen, Haiqin, Paul De Boeck, Matthew Grady, Chien-Lin Yang, and David Waldschmidt. 2018. Curvilinear Dependency of Response Accuracy on Response Time in Cognitive Tests. Intelligence 69: 16–23.

- De Boeck, Paul, and Frank Rijmen. 2020. Response Times in Cognitive Tests. In Integrating Timing Considerations to Improve Testing Practices. Edited by Melissa J. Margolis and Richard A. Feinberg. New York: Routledge, pp. 142–49.

- De Boeck, Paul, Haiqin Chen, and Mark Davison. 2017. Spontaneous and Imposed Speed of Cognitive Test Responses. British Journal of Mathematical and Statistical Psychology 70: 225–37.

- DiTrapani, Jack, Minjeong Jeon, Paul De Boeck, and Ivailo Partchev. 2016. Attempting to Differentiate Fast and Slow Intelligence: Using Generalized Item Response Trees to Examine the Role of Speed on Intelligence Tests. Intelligence 56: 82–92.

- Harman, Harry H. 1967. Modern Factor Analysis, 2nd, rev. ed. Chicago and London: University of Chicago Press.

- Kang, Inhan, Minjeong Jeon, and Ivailo Partchev. 2023. A Latent Space Diffusion Item Response Theory Model to Explore Conditional Dependence between Responses and Response Times. Psychometrika 88: 830–64.

- Kang, Inhan, Paul De Boeck, and Roger Ratcliff. 2022. Modeling Conditional Dependence of Response Accuracy and Response Time with the Diffusion Item Response Theory Model. Psychometrika 87: 725–48.

- Kim, Nana, and Daniel M. Bolt. 2023. Evaluating Psychometric Differences Between Fast Versus Slow Responses on Rating Scale Items. Journal of Educational and Behavioral Statistics.

- Lyu, Weicong, Daniel M. Bolt, and Samuel Westby. 2023. Exploring the Effects of Item-Specific Factors in Sequential and IRTree Models. Psychometrika 88: 745–75.

- Maris, Gunter, and Han Van der Maas. 2012. Speed-Accuracy Response Models: Scoring Rules Based on Response Time and Accuracy. Psychometrika 77: 615–33.

- Molenaar, Dylan, and Paul De Boeck. 2018. Response Mixture Modeling: Accounting for Heterogeneity in Item Characteristics across Response Times. Psychometrika 83: 279–97.

- Norton, Edward C., and Bryan E. Dowd. 2018. Log Odds and the Interpretation of Logit Models. Health Services Research 53: 859–78.

- Partchev, Ivailo, and Paul De Boeck. 2012. Can Fast and Slow Intelligence Be Differentiated? Intelligence 40: 23–32.

- Ranger, Jochen. 2013. Modeling Responses and Response Times in Personality Tests with Rating Scales. Psychological Test and Assessment Modeling 55: 361–82.

- Ranger, Jochen, and Tuulia M. Ortner. 2011. Assessing Personality Traits Through Response Latencies Using Item Response Theory. Educational and Psychological Measurement 71: 389–406.

- Rizopoulos, Dimitris. 2007. ltm: An R Package for Latent Variable Modeling and Item Response Theory Analyses. Journal of Statistical Software 17: 1–25.

- van der Linden, Wim J. 2007. A Hierarchical Framework for Modeling Speed and Accuracy on Test Items. Psychometrika 72: 287–308.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lyu, W.; Bolt, D. A Psychometric Perspective on the Associations between Response Accuracy and Response Time Residuals. J. Intell. 2024, 12, 74. https://doi.org/10.3390/jintelligence12080074
