PASS Theory of Intelligence and Its Measurement Using the Cognitive Assessment System, 2nd Edition

Abstract

1. Introduction

2. The PASS Neurocognitive Theory

2.1. Planning

2.2. Attention

2.3. Simultaneous

2.4. Successive

3. Measurement of PASS Theory Using CAS2

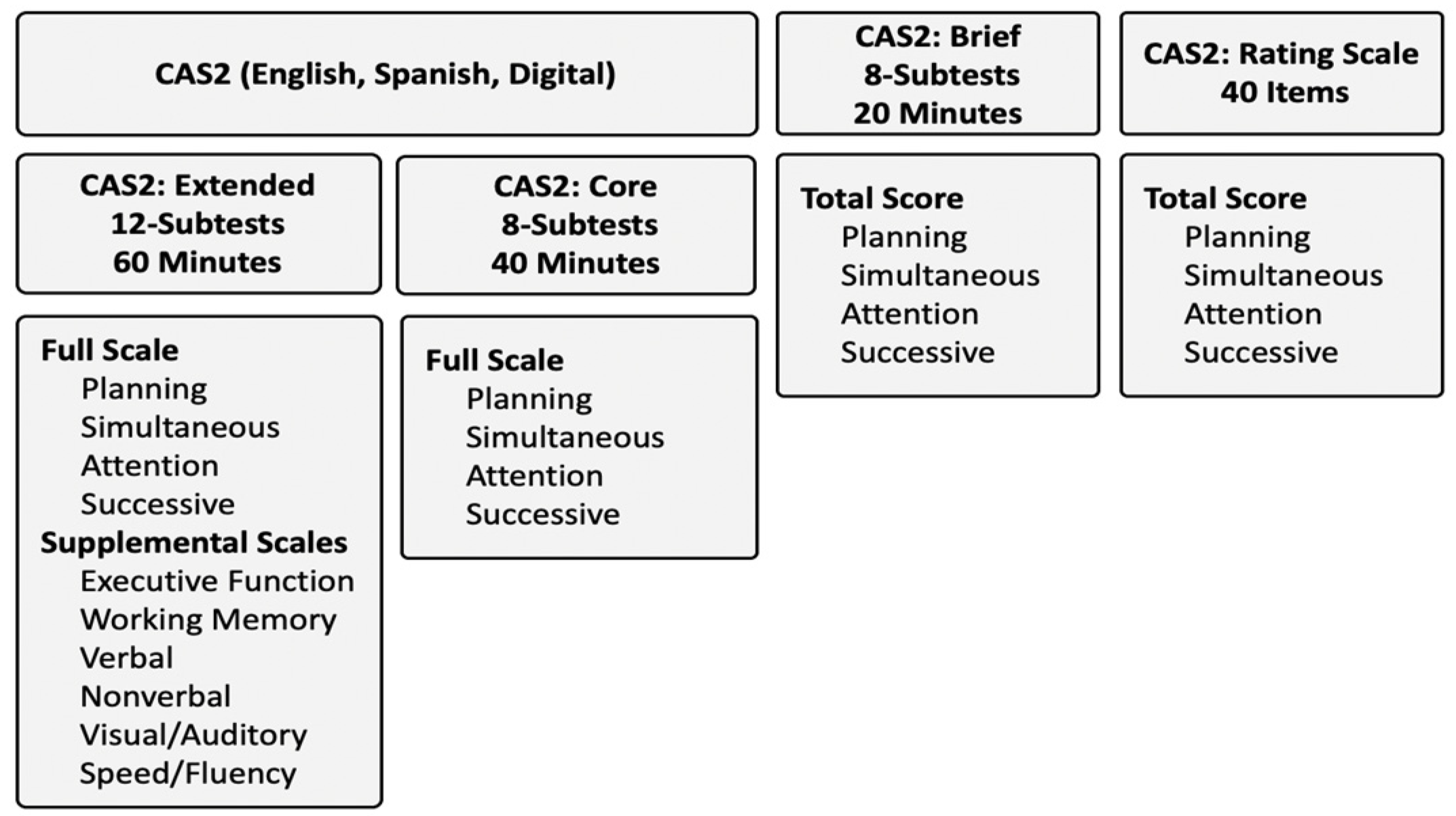

3.1. CAS2, CAS2: Brief & CAS2: Rating Scale

3.2. CAS2 Versions

3.3. Neuropsychological Underpinnings

3.4. Test Content and Equitable Assessment

- Planning subtests should measure how well a person creates and uses strategies to complete a task.

- Attention subtests should measure how well a person can focus and resist distractions.

- Simultaneous subtests should measure how well a person can understand relationships among things.

- Successive subtests should measure how well a person can manage the sequence of a task.

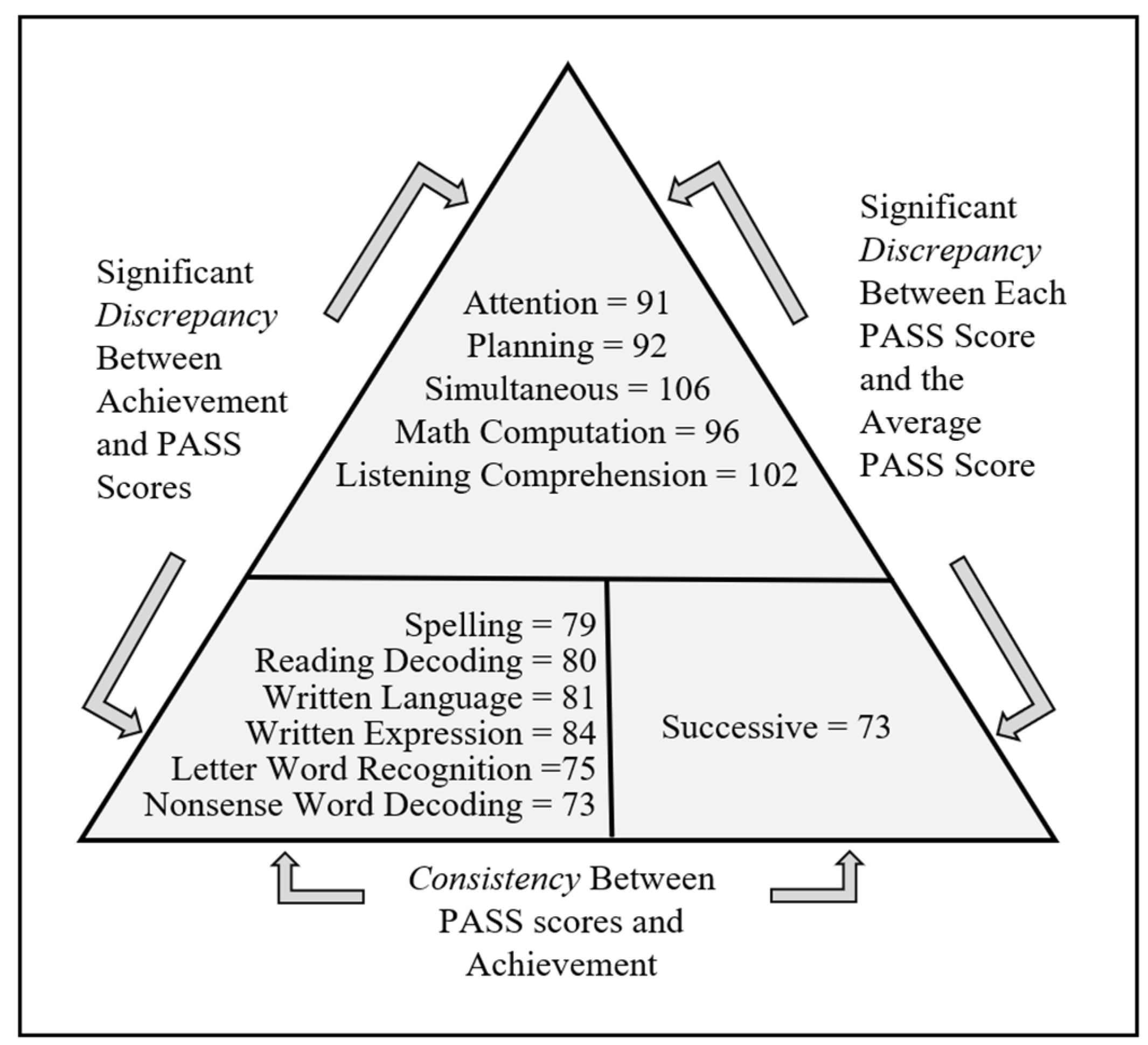

4. Empirical Support for the PASS Theory as Measured Using the CAS2

4.1. PASS Correlations with Achievement

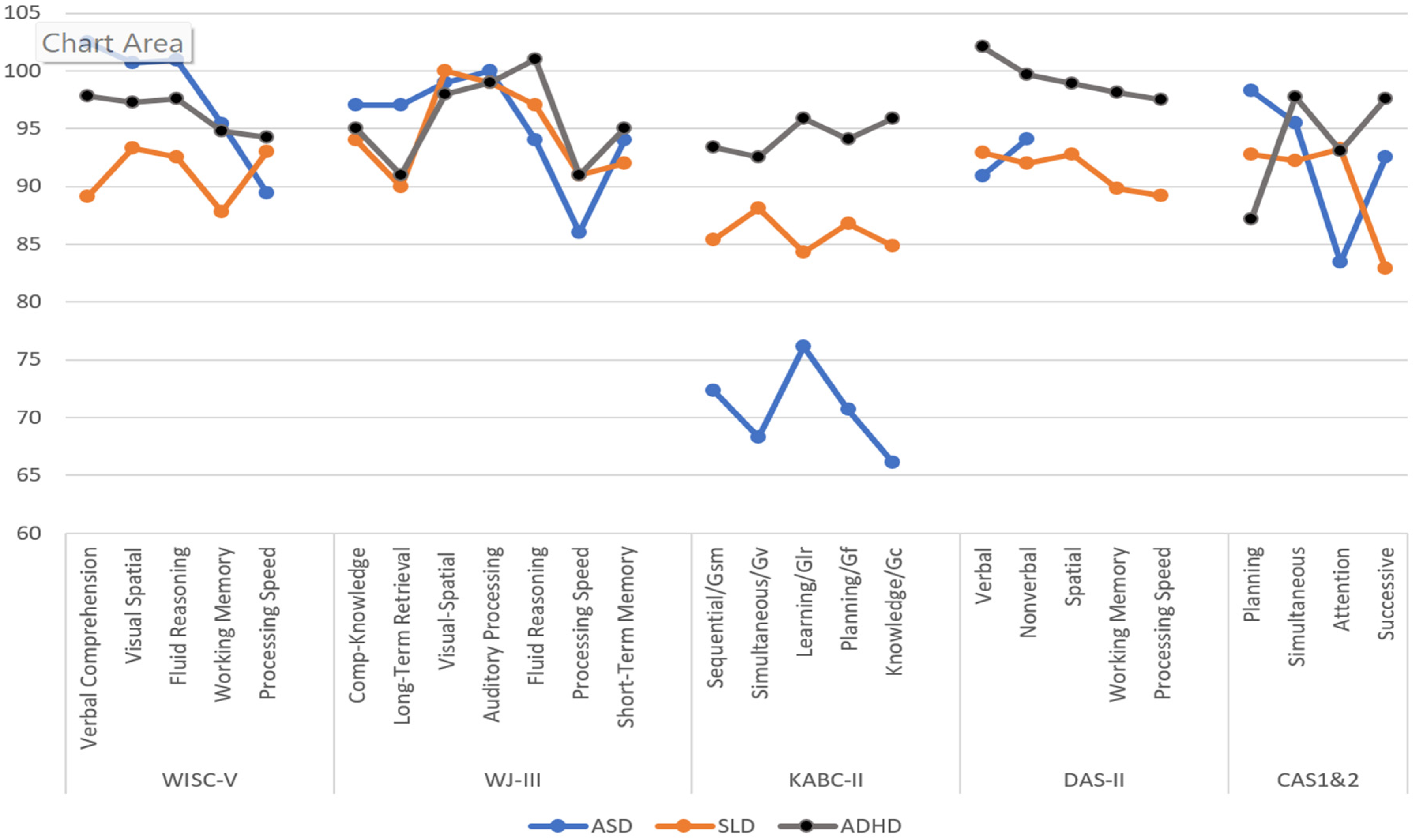

4.2. Intelligence Test Profiles

4.3. Diagnostic Implications

4.4. Intervention

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| PASS Processes | Functional Units | Neuro-Network |
|---|---|---|
| Planning | 3rd Functional Unit | This neurocognitive process provides for the programming, regulation, and verification of behavior and is responsible for behaviors such as asking questions, solving problems, self-monitoring, regulation of voluntary activity, conscious impulse control, various linguistic skills such as spontaneous conversation, and the complex expression of personality. Planning is associated with the prefrontal areas of the frontal lobes and interacts with the first and second units and their associated networks. |
| Attention | 1st Functional Unit | This neurocognitive process provides the brain with the appropriate level of arousal or cortical tone, as well as directive and selective attention. The first functional unit, along with its related networks, allows for orienting, sustaining, and reorienting attention to whatever is relevant at a given moment. Attention (i.e., cortical arousal) is associated with the brain stem and reticular activating system, which interact with the default mode network and with the activation of the ventral and dorsal attention networks. Attention is also associated with the fronto-parietal system, which facilitates Simultaneous and Successive processes. |
| Simultaneous and Successive | 2nd Functional Unit | The Simultaneous neurocognitive process provides for the understanding and use of the interrelated nature of information. The Successive neurocognitive process provides for the understanding and use of sequential information. Activation of the frontal, parietal, and temporal regions is key to both Simultaneous and Successive processing. These regions are considered association cortex, which has many interrelated functions (such as attention, spatial representation, working memory, eye movements, the processing of various other kinds of sensory information, and the guidance of actions). |

| Test | Race | Ethnicity |
|---|---|---|
| Tests that require knowledge | Mn = 9.4 | Mn = 6.6 |
| Otis–Lennon School Ability Test | 13.6 | - |
| Stanford–Binet IV | 12.6 | - |
| WISC-V | 11.6 | - |
| WJ-III (normative sample) | 10.9 | 10.7 |
| CogAT 7 Nonverbal | 11.8 | 7.6 |
| CogAT 7-Verbal | 6.6 | 5.3 |
| CogAT 7-Quantitative | 5.6 | 3.6 |
| CogAT-Nonverbal | 6.4 | 2.9 |
| CogAT-Total (V, Q & NV) | 7.0 | 4.5 |
| K-ABC II Fluid-Crystallized Index | 9.4 | 9.8 |
| K-ABC II Mental Processing Index | 8.1 | 8.2 |
| WISC-V (statistical controls) | 8.7 | - |
| Tests that require minimal knowledge | Mn = 4.3 | Mn = 2.9 |
| K-ABC (normative sample) | 7.0 | - |
| K-ABC (matched samples) | 6.1 | - |
| KABC-II (adjusted for gender & SES) | 6.7 | 5.4 |
| CAS-2 (normative sample) | 6.3 | 4.5 |
| CAS (statistical control normative data) | 4.8 | 4.8 |
| CAS-2 (statistical control normative data) | 4.3 | 1.8 |
| CAS-2 Brief (normative samples) | 2.0 | 2.8 |
| NNAT (matched samples) | 4.2 | 2.8 |
| Naglieri General Ability Test-Verbal | 2.2 | 1.6 |
| Naglieri General Ability Test-Nonverbal | 1.0 | 1.1 |
| Naglieri General Ability Test-Quantitative | 3.2 | 1.3 |
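The Mn rows appear to summarize the test rows listed beneath them. The sketch below assumes that each Mn value is the unweighted mean of those rows, with "-" cells (no value reported) skipped; under that assumption it reproduces the published values of 9.4 and 6.6 for tests that require knowledge and 4.3 and 2.9 for tests that require minimal knowledge. The dictionary names and the `column_mean` and `group_means` helpers are illustrative and not part of the article.

```python
# Race and ethnicity differences transcribed from the table above.
# Each tuple is (race, ethnicity); None stands for a "-" cell (no value reported).
KNOWLEDGE_TESTS = {
    "Otis-Lennon School Ability Test": (13.6, None),
    "Stanford-Binet IV": (12.6, None),
    "WISC-V": (11.6, None),
    "WJ-III (normative sample)": (10.9, 10.7),
    "CogAT 7 Nonverbal": (11.8, 7.6),
    "CogAT 7-Verbal": (6.6, 5.3),
    "CogAT 7-Quantitative": (5.6, 3.6),
    "CogAT-Nonverbal": (6.4, 2.9),
    "CogAT-Total (V, Q & NV)": (7.0, 4.5),
    "K-ABC II Fluid-Crystallized Index": (9.4, 9.8),
    "K-ABC II Mental Processing Index": (8.1, 8.2),
    "WISC-V (statistical controls)": (8.7, None),
}
MINIMAL_KNOWLEDGE_TESTS = {
    "K-ABC (normative sample)": (7.0, None),
    "K-ABC (matched samples)": (6.1, None),
    "KABC-II (adjusted for gender & SES)": (6.7, 5.4),
    "CAS-2 (normative sample)": (6.3, 4.5),
    "CAS (statistical control normative data)": (4.8, 4.8),
    "CAS-2 (statistical control normative data)": (4.3, 1.8),
    "CAS-2 Brief (normative samples)": (2.0, 2.8),
    "NNAT (matched samples)": (4.2, 2.8),
    "Naglieri General Ability Test-Verbal": (2.2, 1.6),
    "Naglieri General Ability Test-Nonverbal": (1.0, 1.1),
    "Naglieri General Ability Test-Quantitative": (3.2, 1.3),
}


def column_mean(rows, column):
    """Unweighted mean of one column (0 = race, 1 = ethnicity), skipping None cells."""
    values = [row[column] for row in rows.values() if row[column] is not None]
    return sum(values) / len(values)


def group_means(rows):
    """Return (race mean, ethnicity mean) rounded to one decimal, like the Mn rows."""
    return round(column_mean(rows, 0), 1), round(column_mean(rows, 1), 1)


if __name__ == "__main__":
    print("Tests that require knowledge:", group_means(KNOWLEDGE_TESTS))
    print("Tests that require minimal knowledge:", group_means(MINIMAL_KNOWLEDGE_TESTS))
```

Skipping the "-" cells matters: treating them as zeros would lower the race means for both groups of tests.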

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Planning | 120 | 116 | 105 | 103 | 100 | 111 | 102 | 87 | 93 | 79 |
| Simultaneous | 118 | 103 | 114 | 99 | 114 | 102 | 86 | 101 | 92 | 82 |
| Attention | 119 | 121 | 96 | 107 | 106 | 106 | 99 | 87 | 96 | 81 |
| Successive | 115 | 102 | 117 | 113 | 100 | 89 | 99 | 103 | 82 | 81 |
| Average PASS | 118 | 110 | 108 | 106 | 105 | 102 | 96 | 94 | 91 | 81 |
| Range | 5 | 19 | 21 | 14 | 14 | 23 | 15 | 16 | 14 | 3 |
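The Average PASS and Range rows can be derived from the four PASS scale scores in each numbered column. The sketch below treats each column as one profile and computes the mean of the four scores and the highest-minus-lowest spread; `PASS_SCORES` and `summarize_profiles` are hypothetical names. Rounded with Python's `round()`, which rounds halves to even (110.5 becomes 110, 105.5 becomes 106), the means match the Average PASS row. The computed ranges match the Range row except in columns 6 and 7 (22 and 16 versus the published 23 and 15), presumably because the published values were computed from unrounded scores; that is an assumption.

```python
# PASS standard scores for columns 1-10, transcribed from the table above.
PASS_SCORES = {
    "Planning": [120, 116, 105, 103, 100, 111, 102, 87, 93, 79],
    "Simultaneous": [118, 103, 114, 99, 114, 102, 86, 101, 92, 82],
    "Attention": [119, 121, 96, 107, 106, 106, 99, 87, 96, 81],
    "Successive": [115, 102, 117, 113, 100, 89, 99, 103, 82, 81],
}


def summarize_profiles(scores):
    """Return one (average, range) pair per column.

    average: arithmetic mean of the four PASS scale scores in that column
    range:   highest minus lowest PASS scale score in that column
    """
    summaries = []
    # zip(*...) turns the four per-scale rows into per-column tuples of four scores.
    for column in zip(*scores.values()):
        summaries.append((sum(column) / len(column), max(column) - min(column)))
    return summaries


if __name__ == "__main__":
    for number, (average, spread) in enumerate(summarize_profiles(PASS_SCORES), start=1):
        print(f"Profile {number}: average {average:.2f}, range {spread}")
```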

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Planning | 99 | 112 | 101 | 99 | 95 | 86 | 87 | 82 | 85 | 88 | 78 | 76 |
| Simultaneous | 115 | 106 | 100 | 105 | 95 | 103 | 97 | 84 | 96 | 83 | 76 | 81 |
| Attention | 99 | 117 | 103 | 102 | 95 | 97 | 80 | 73 | 81 | 91 | 76 | 71 |
| Successive | 118 | 98 | 102 | 90 | 100 | 85 | 85 | 98 | 97 | 75 | 90 | 79 |
| Average PASS | 108 | 108 | 102 | 99 | 96 | 93 | 87 | 84 | 90 | 84 | 80 | 77 |
| Range | 19 | 19 | 3 | 15 | 6 | 18 | 17 | 25 | 15 | 16 | 14 | 10 |
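The Range row reflects the spread between a column's highest and lowest PASS scale. A short helper can name the scales that set those bounds in each column, keeping every scale when scores tie (as in column 5, where Planning, Simultaneous, and Attention are all 95). This is an illustrative sketch working from the rounded values shown in the table; `PASS_SCORES` and `extremes` are hypothetical names.

```python
# PASS standard scores for columns 1-12, transcribed from the table above.
PASS_SCORES = {
    "Planning": [99, 112, 101, 99, 95, 86, 87, 82, 85, 88, 78, 76],
    "Simultaneous": [115, 106, 100, 105, 95, 103, 97, 84, 96, 83, 76, 81],
    "Attention": [99, 117, 103, 102, 95, 97, 80, 73, 81, 91, 76, 71],
    "Successive": [118, 98, 102, 90, 100, 85, 85, 98, 97, 75, 90, 79],
}


def extremes(scores, column):
    """Return (highest scales, lowest scales) for one 0-based column.

    Tied scales are all kept, in the table's row order.
    """
    column_scores = {scale: values[column] for scale, values in scores.items()}
    top = max(column_scores.values())
    bottom = min(column_scores.values())
    highest = [scale for scale, score in column_scores.items() if score == top]
    lowest = [scale for scale, score in column_scores.items() if score == bottom]
    return highest, lowest


if __name__ == "__main__":
    n_columns = len(next(iter(PASS_SCORES.values())))
    for column in range(n_columns):
        highest, lowest = extremes(PASS_SCORES, column)
        print(f"Column {column + 1}: highest {', '.join(highest)}; lowest {', '.join(lowest)}")
```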

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Naglieri, J.A.; Otero, T.M. PASS Theory of Intelligence and Its Measurement Using the Cognitive Assessment System, 2nd Edition. J. Intell. 2024, 12, 77. https://doi.org/10.3390/jintelligence12080077