How Multidimensional Is Emotional Intelligence? Bifactor Modeling of Global and Broad Emotional Abilities of the Geneva Emotional Competence Test

Abstract

1. Introduction

1.1. Measuring Emotional Intelligence

1.2. Is Emotional Intelligence Unidimensional?

Plausibility of Broad Subscales and General Factor Dominance

1.3. Incremental Validity of EI Branches for Emotional Criteria

Using the S-1 Bifactor Model to Test the Predictive Validity of EI Branches

1.4. Study Aims

2. Materials and Methods

2.1. Measures

2.1.1. Fluid Intelligence

2.1.2. Emotional Intelligence

2.1.3. Big Five Personality Traits

2.1.4. Subjective Well-Being

2.1.5. Affective Engagement

2.1.6. Cumulative Grade Point Average

2.2. Analyses

2.2.1. Model Estimation and Comparison

2.2.2. Psychometric Evaluations

3. Results

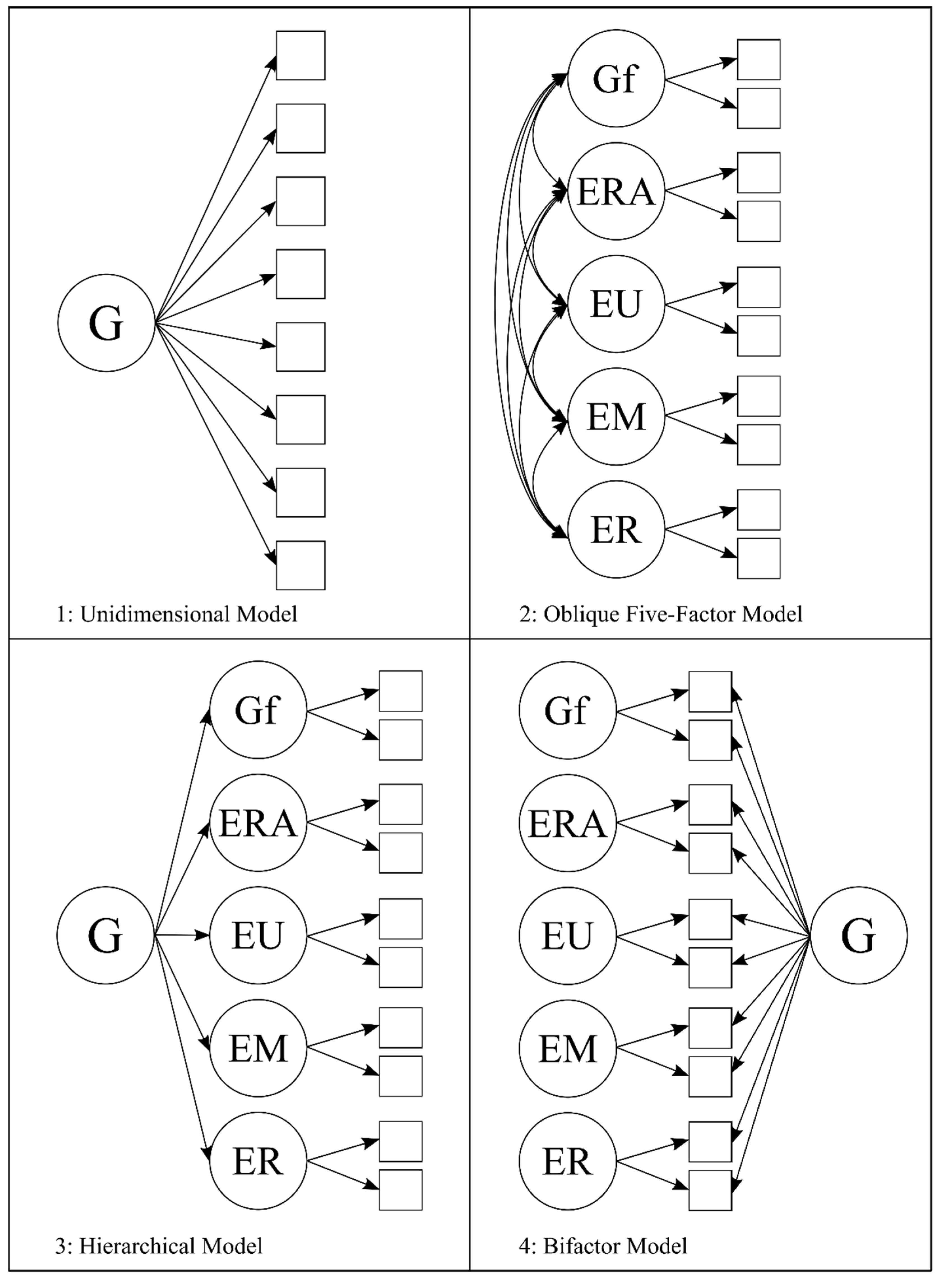

3.1. Structural Models

3.2. Psychometric Analyses of a Bifactor Model

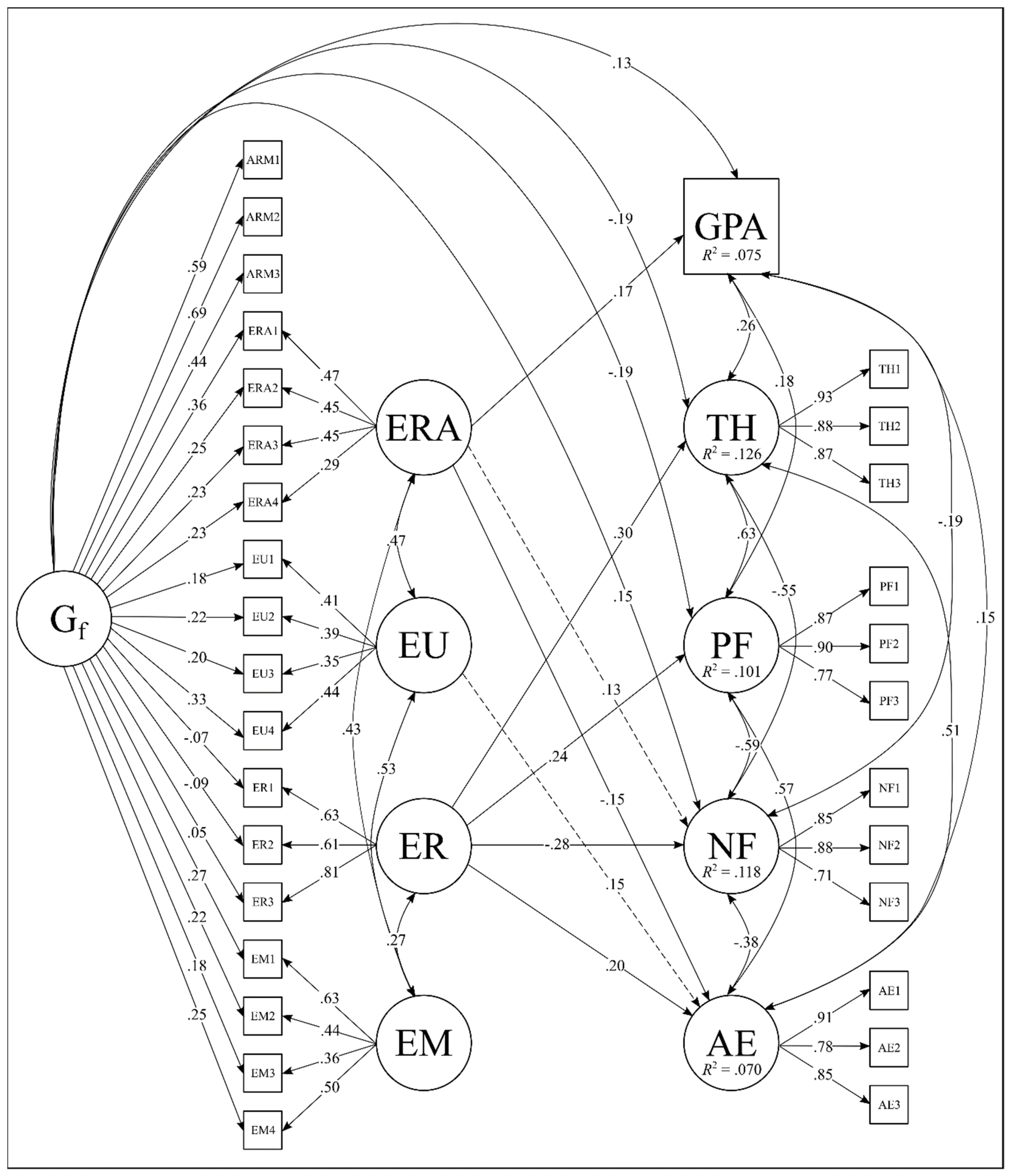

3.3. Bifactor S-1 Predictive Models

4. Discussion

4.1. Structural Evidence of the GECo in Relation to Fluid Intelligence and the MSCEIT

4.2. Emotion Regulation: Distinct Skill, Trait EI, or Methodological Artifact?

4.3. Predictive Effects and Alignment with Emotional Engagement

4.4. Bifactor Indices and Need for Refined Narrow Assessments

4.5. Limitations and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ajzen, Icek, and Martin Fishbein. 1977. Attitude-behavior relations: A theoretical analysis and review of empirical research. Psychological Bulletin 84: 888.

- Alkozei, Anna, Zachary J. Schwab, and William D. S. Killgore. 2016. The role of emotional intelligence during an emotionally difficult decision-making task. Journal of Nonverbal Behavior 40: 39–54.

- Antonakis, John. 2004. On why “emotional intelligence” will not predict leadership effectiveness beyond IQ or the “big five”: An extension and rejoinder. Organizational Analysis 12: 171–82.

- Austin, Elizabeth J. 2010. Measurement of ability emotional intelligence: Results for two new tests. British Journal of Psychology 101: 563–78.

- Bagozzi, Richard P., and Jeffrey R. Edwards. 1998. A general approach for representing constructs in organizational research. Organizational Research Methods 1: 45–87.

- Bänziger, Tanja, Marcello Mortillaro, and Klaus R. Scherer. 2012. Introducing the Geneva multimodal expression corpus for experimental research on emotion perception. Emotion 12: 1161.

- Bastian, Veneta A., Nicholas R. Burns, and Ted Nettelbeck. 2005. Emotional intelligence predicts life skills, but not as well as personality and cognitive abilities. Personality and Individual Differences 39: 1135–45.

- Beaujean, A. Alexander. 2015. John Carroll’s views on intelligence: Bi-factor vs. higher-order models. Journal of Intelligence 3: 121–36.

- Beier, Margaret E., Harrison J. Kell, and Jonas W. B. Lang. 2019. Commenting on the “Great debate”: General abilities, specific abilities, and the tools of the trade. Journal of Intelligence 7: 5.

- Bilker, Warren B., John A. Hansen, Colleen M. Brensinger, Jan Richard, Raquel E. Gur, and Ruben C. Gur. 2012. Development of abbreviated nine-item forms of the Raven’s standard progressive matrices test. Assessment 19: 354–69.

- Brackett, Marc A., Susan E. Rivers, Sara Shiffman, Nicole Lerner, and Peter Salovey. 2006. Relating emotional abilities to social functioning: A comparison of self-report and performance measures of emotional intelligence. Journal of Personality and Social Psychology 91: 780.

- Brackett, Marc A., Susan E. Rivers, Maria R. Reyes, and Peter Salovey. 2012. Enhancing academic performance and social and emotional competence with the RULER feeling words curriculum. Learning and Individual Differences 22: 218–24.

- Brody, Nathan. 2004. What Cognitive Intelligence Is and What Emotional Intelligence Is Not. Psychological Inquiry 15: 234–38.

- Brown, Timothy A. 2015. Confirmatory Factor Analysis for Applied Research. New York: Guilford Publications.

- Brunner, Martin, Gabriel Nagy, and Oliver Wilhelm. 2012. A tutorial on hierarchically structured constructs. Journal of Personality 80: 796–846.

- Buss, D. M. 2008. Human nature and individual differences: Evolution of human personality. In Handbook of Personality: Theory and Research. Edited by O. P. John, R. W. Robins and L. A. Pervin. New York: The Guilford Press, pp. 29–60.

- Cabello, Rosario, Miguel A. Sorrel, Irene Fernández-Pinto, Natalio Extremera, and Pablo Fernández-Berrocal. 2016. Age and gender differences in ability emotional intelligence in adults: A cross-sectional study. Developmental Psychology 52: 1486.

- Carroll, John B. 1993. Human Cognitive Abilities: A Survey of Factor-Analytic Studies. Cambridge: Cambridge University Press.

- Castro, Vanessa L., Yanhua Cheng, Amy G. Halberstadt, and Daniel Grühn. 2016. EUReKA! A conceptual model of emotion understanding. Emotion Review 8: 258–68.

- Cattell, Raymond B. 1943. The measurement of adult intelligence. Psychological Bulletin 40: 153.

- Chen, Fang Fang, Yiming Jing, Adele Hayes, and Jeong Min Lee. 2013. Two concepts or two approaches? A bifactor analysis of psychological and subjective well-being. Journal of Happiness Studies 14: 1033–68.

- Côté, Stéphane, and Ivona Hideg. 2011. The ability to influence others via emotion displays: A new dimension of emotional intelligence. Organizational Psychology Review 1: 53–71.

- Côté, Stéphane. 2017. Enhancing managerial effectiveness via four core facets of emotional intelligence. Organizational Dynamics 3: 140–47.

- Critchley, Hugo D., Stefan Wiens, Pia Rotshtein, Arne Öhman, and Raymond J. Dolan. 2004. Neural systems supporting interoceptive awareness. Nature Neuroscience 7: 189–95.

- Cucina, Jeffrey, and Kevin Byle. 2017. The bifactor model fits better than the higher-order model in more than 90% of comparisons for mental abilities test batteries. Journal of Intelligence 5: 27.

- Damasio, Antonio R. 2010. Self Comes to Mind: Constructing the Conscious Brain. New York: Random House, Inc.

- David, Nicole, Bettina H. Bewernick, Michael X. Cohen, Albert Newen, Silke Lux, Gereon R. Fink, N. Jon Shah, and Kai Vogeley. 2006. Neural representations of self versus other: Visual-spatial perspective taking and agency in a virtual ball-tossing game. Journal of Cognitive Neuroscience 18: 898–910.

- DeSimone, Justin A., Peter D. Harms, and Alice J. DeSimone. 2015. Best practice recommendations for data screening. Journal of Organizational Behavior 36: 171–81.

- Diener, Ed. 1984. Subjective well-being. Psychological Bulletin 95: 542–75.

- Diener, Ed, Eunkook M. Suh, Richard E. Lucas, and Heidi L. Smith. 1999. Subjective well-being: Three decades of progress. Psychological Bulletin 125: 276.

- Diener, Ed, and Martin E. P. Seligman. 2002. Very happy people. Psychological Science 13: 81–84.

- Diener, Ed, Derrick Wirtz, William Tov, Chu Kim-Prieto, Dong-won Choi, Shigehiro Oishi, and Robert Biswas-Diener. 2010. New well-being measures: Short scales to assess flourishing and positive and negative feelings. Social Indicators Research 97: 143–56.

- Donnellan, M. Brent, Frederick L. Oswald, Brendan M. Baird, and Richard E. Lucas. 2006. The mini-IPIP scales: Tiny-yet-effective measures of the Big Five factors of personality. Psychological Assessment 18: 192.

- Dueber, D. M. 2020. BifactorIndicesCalculator. Available online: https://CRAN.R-project.org/package=BifactorIndicesCalculator (accessed on 5 June 2020).

- Dunbar, Robin I. M. 2009. The social brain hypothesis and its implications for social evolution. Annals of Human Biology 36: 562–72.

- Eid, Michael, Christian Geiser, Tobias Koch, and Moritz Heene. 2017. Anomalous results in G-factor models: Explanations and alternatives. Psychological Methods 22: 541.

- Eid, Michael, Stefan Krumm, Tobias Koch, and Julian Schulze. 2018. Bifactor models for predicting criteria by general and specific factors: Problems of nonidentifiability and alternative solutions. Journal of Intelligence 6: 42.

- Elfenbein, Hillary Anger, Daisung Jang, Sudeep Sharma, and Jeffrey Sanchez-Burks. 2017. Validating emotional attention regulation as a component of emotional intelligence: A Stroop approach to individual differences in tuning in to and out of nonverbal cues. Emotion 17: 348.

- Elfenbein, Hillary Anger, and Carolyn MacCann. 2017. A closer look at ability emotional intelligence (EI): What are its component parts, and how do they relate to each other? Social and Personality Psychology Compass 11: e12324.

- Fan, Huiyong, Todd Jackson, Xinguo Yang, Wenqing Tang, and Jinfu Zhang. 2010. The factor structure of the Mayer–Salovey–Caruso Emotional Intelligence Test V 2.0 (MSCEIT): A meta-analytic structural equation modeling approach. Personality and Individual Differences 48: 781–85.

- Fiori, Marina. 2009. A new look at emotional intelligence: A dual-process framework. Personality and Social Psychology Review 13: 21–44.

- Fiori, Marina, and John Antonakis. 2011. The ability model of emotional intelligence: Searching for valid measures. Personality and Individual Differences 50: 329–34.

- Fiori, Marina, Jean-Philippe Antonietti, Moira Mikolajczak, Olivier Luminet, Michel Hansenne, and Jérôme Rossier. 2014. What is the ability emotional intelligence test (MSCEIT) good for? An evaluation using item response theory. PLoS ONE 9: e98827.

- Floyd, Randy G., Jeffrey J. Evans, and Kevin S. McGrew. 2003. Relations between measures of Cattell-Horn-Carroll (CHC) cognitive abilities and mathematics achievement across the school-age years. Psychology in the Schools 40: 155–71.

- Freudenthaler, H. Harald, Aljoscha C. Neubauer, and Ursula Haller. 2008. Emotional intelligence: Instruction effects and sex differences in emotional management abilities. Journal of Individual Differences 29: 105–15.

- Gallup, Gordon G., Jr. 1998. Self-awareness and the evolution of social intelligence. Behavioural Processes 42: 239–47.

- Gardner, Howard. 1983. Frames of Mind: The Theory of Multiple Intelligences. New York: Basic Books.

- Garnefski, Nadia, Vivian Kraaij, and Philip Spinhoven. 2001. Negative life events, cognitive emotion regulation and emotional problems. Personality and Individual Differences 30: 1311–27.

- Gignac, Gilles E. 2008. Higher-order models versus direct hierarchical models: G as superordinate or breadth factor? Psychology Science 50: 21.

- Gignac, Gilles E., and André Kretzschmar. 2017. Evaluating dimensional distinctness with correlated-factor models: Limitations and suggestions. Intelligence 62: 138–47.

- Goleman, D. 1995. Emotional Intelligence. New York: Bantam Books.

- Gorsuch, Richard L. 1983. Factor Analysis. Hillsdale: Lawrence Erlbaum Associates.

- Grice, James W. 2001. Computing and evaluating factor scores. Psychological Methods 6: 430.

- Gross, James J. 1999. Emotion regulation: Past, present, future. Cognition & Emotion 13: 551–73.

- Gustafsson, Jan-Eric. 1984. A unifying model for the structure of intellectual abilities. Intelligence 8: 179–203.

- Hancock, Gregory R., and R. O. Mueller. 2000. Rethinking construct reliability within latent variable systems. In Structural Equation Modeling: Present and Future. A Festschrift in Honor of Karl Jöreskog. Edited by R. Cudeck, S. du Toit and D. Sörbom. Lincolnwood: Scientific Software International.

- Harms, Peter D., and Marcus Credé. 2010. Emotional intelligence and transformational and transactional leadership: A meta-analysis. Journal of Leadership & Organizational Studies 17: 5–17.

- Heinrich, Manuel, Pavle Zagorscak, Michael Eid, and Christine Knaevelsrud. 2018. Giving G a meaning: An application of the bifactor-(S-1) approach to realize a more symptom-oriented modeling of the Beck depression inventory–II. Assessment.

- Hoerger, Michael, Benjamin P. Chapman, Ronald M. Epstein, and Paul R. Duberstein. 2012. Emotional intelligence: A theoretical framework for individual differences in affective forecasting. Emotion 12: 716.

- Horn, John L., and Raymond B. Cattell. 1966. Refinement and test of the theory of fluid and crystallized general intelligences. Journal of Educational Psychology 57: 253.

- Horn, John L., and Nayena Blankson. 2005. Foundations for better understanding of cognitive abilities. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by D. P. Flanagan and P. L. Harrison. New York: The Guilford Press, pp. 41–68.

- Hu, Li-tze, and Peter M. Bentler. 1999. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal 6: 1–55.

- Hughes, David J., and Thomas Rhys Evans. 2018. Putting ‘emotional intelligences’ in their place: Introducing the integrated model of affect-related individual differences. Frontiers in Psychology 9: 2155.

- Jorgensen, Terrence D., Sunthud Pornprasertmanit, A. M. Schoemann, and Yves Rosseel. 2020. semTools: Useful Tools for Structural Equation Modeling. Available online: https://CRAN.R-project.org/package=semTools (accessed on 5 June 2020).

- Joseph, D. L., and D. A. Newman. 2010. Emotional intelligence: An integrative meta-analysis and cascading model. Journal of Applied Psychology 95: 54–78.

- Keele, Sophie M., and Richard C. Bell. 2008. The factorial validity of emotional intelligence: An unresolved issue. Personality and Individual Differences 44: 487–500.

- Kell, Harrison J., and Jonas W. B. Lang. 2017. Specific abilities in the workplace: More important than g? Journal of Intelligence 5: 13.

- Kovacs, K., and A. R. A. Conway. 2016. Process overlap theory: A unified account of the general factor of intelligence. Psychological Inquiry 27: 151–77.

- Lam, Shui-fong, Shane Jimerson, Bernard P. H. Wong, Eve Kikas, Hyeonsook Shin, Feliciano H. Veiga, Chryse Hatzichristou, Fotini Polychroni, Carmel Cefai, and Valeria Negovan. 2014. Understanding and measuring student engagement in school: The results of an international study from 12 countries. School Psychology Quarterly 29: 213.

- Landis, Ronald S., Daniel J. Beal, and Paul E. Tesluk. 2000. A comparison of approaches to forming composite measures in structural equation models. Organizational Research Methods 3: 186–207.

- Lea, Rosanna G., Pamela Qualter, Sarah K. Davis, Juan-Carlos Pérez-González, and Munirah Bangee. 2018. Trait emotional intelligence and attentional bias for positive emotion: An eye tracking study. Personality and Individual Differences 128: 88–93.

- Little, Todd D., William A. Cunningham, Golan Shahar, and Keith F. Widaman. 2002. To parcel or not to parcel: Exploring the question, weighing the merits. Structural Equation Modeling 9: 151–73.

- Locke, Edwin A. 2005. Why emotional intelligence is an invalid concept. Journal of Organizational Behavior 26: 425–31.

- Lopes, Paulo N., Peter Salovey, Stéphane Côté, Michael Beers, and Richard E. Petty. 2005. Emotion regulation abilities and the quality of social interaction. Emotion 5: 113.

- MacCann, Carolyn, Richard D. Roberts, Gerald Matthews, and Moshe Zeidner. 2004. Consensus scoring and empirical option weighting of performance-based emotional intelligence (EI) tests. Personality and Individual Differences 36: 645–62.

- MacCann, Carolyn, and Richard D. Roberts. 2008. New paradigms for assessing emotional intelligence: Theory and data. Emotion 8: 540.

- MacCann, Carolyn, Dana L. Joseph, Daniel A. Newman, and Richard D. Roberts. 2014. Emotional intelligence is a second-stratum factor of intelligence: Evidence from hierarchical and bifactor models. Emotion 14: 358.

- MacCann, Carolyn, Yixin Jiang, Luke E. R. Brown, Kit S. Double, Micaela Bucich, and Amirali Minbashian. 2020. Emotional intelligence predicts academic performance: A meta-analysis. Psychological Bulletin 146: 150.

- Maniaci, Michael R., and Ronald D. Rogge. 2014. Caring about carelessness: Participant inattention and its effects on research. Journal of Research in Personality 48: 61–83.

- Marsh, Herbert W., Kit-Tai Hau, and David Grayson. 2005. Goodness of fit in structural equation models. In Multivariate Applications Book Series. Contemporary Psychometrics: A Festschrift for Roderick P. McDonald. Edited by A. Maydeu-Olivares and J. J. McArdle. Hillsdale: Lawrence Erlbaum Associates Publishers, pp. 275–340.

- Maul, Andrew. 2012. The validity of the Mayer–Salovey–Caruso Emotional Intelligence Test (MSCEIT) as a measure of emotional intelligence. Emotion Review 4: 394–402.

- Mayer, John D., and Peter Salovey. 1997. What is emotional intelligence? In Emotional Development and Emotional Intelligence: Implications for Educators. Edited by P. Salovey and D. Sluyter. New York: Basic Books, pp. 3–31.

- Mayer, John D., David R. Caruso, and Peter Salovey. 1999. Emotional intelligence meets traditional standards for an intelligence. Intelligence 27: 267–98.

- Mayer, John D., P. Salovey, D. R. Caruso, and G. Sitarenios. 2003. Measuring emotional intelligence with the MSCEIT V2.0. Emotion 3: 97–105.

- Mayer, John D., Richard D. Roberts, and Sigal G. Barsade. 2008. Human abilities: Emotional intelligence. Annual Review of Psychology 59: 507–36.

- Mayer, John D., David R. Caruso, and Peter Salovey. 2016. The ability model of emotional intelligence: Principles and updates. Emotion Review 8: 290–300.

- McDonald, Roderick P. 2010. Structural models and the art of approximation. Perspectives on Psychological Science 5: 675–86.

- McGrew, Kevin S. 2009. CHC theory and the human cognitive abilities project: Standing on the shoulders of the giants of psychometric intelligence research. Intelligence 37: 1–10.

- Mestre, José M., Carolyn MacCann, Rocío Guil, and Richard D. Roberts. 2016. Models of Cognitive Ability and Emotion Can Better Inform Contemporary Emotional Intelligence Frameworks. Emotion Review 8: 322–30.

- Mount, Michael K., In-Sue Oh, and Melanie Burns. 2008. Incremental validity of perceptual speed and accuracy over general mental ability. Personnel Psychology 61: 113–39.

- Murray, Aja L., and Wendy Johnson. 2013. The limitations of model fit in comparing the bi-factor versus higher-order models of human cognitive ability structure. Intelligence 41: 407–22.

- Naglieri, Jack A., and Tulio M. Otero. 2012. The Cognitive Assessment System: From theory to practice. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by D. P. Flanagan and P. L. Harrison. New York: The Guilford Press, pp. 376–99.

- Nathanson, Lori, Susan E. Rivers, Lisa M. Flynn, and Marc A. Brackett. 2016. Creating emotionally intelligent schools with RULER. Emotion Review 8: 305–10.

- Niven, Karen, Peter Totterdell, Christopher B. Stride, and David Holman. 2011. Emotion Regulation of Others and Self (EROS): The development and validation of a new individual difference measure. Current Psychology 30: 53–73.

- Olderbak, Sally, Martin Semmler, and Philipp Doebler. 2019. Four-branch model of ability emotional intelligence with fluid and crystallized intelligence: A meta-analysis of relations. Emotion Review 11: 166–83.

- Parke, Michael R., Myeong-Gu Seo, and Elad N. Sherf. 2015. Regulating and facilitating: The role of emotional intelligence in maintaining and using positive affect for creativity. Journal of Applied Psychology 100: 917.

- Pekaar, Keri A., Arnold B. Bakker, Dimitri van der Linden, and Marise Ph. Born. 2018. Self- and other-focused emotional intelligence: Development and validation of the Rotterdam Emotional Intelligence Scale (REIS). Personality and Individual Differences 120: 222–33.

- Peña-Sarrionandia, Ainize, Moïra Mikolajczak, and James J. Gross. 2015. Integrating emotion regulation and emotional intelligence traditions: A meta-analysis. Frontiers in Psychology 6: 160.

- R Core Team. 2019. R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

- Reise, Steven P. 2012. The rediscovery of bifactor measurement models. Multivariate Behavioral Research 47: 667–96.

- Reise, Steven P., Wes E. Bonifay, and Mark G. Haviland. 2013. Scoring and modeling psychological measures in the presence of multidimensionality. Journal of Personality Assessment 95: 129–40.

- Rich, Bruce Louis, Jeffrey A. Lepine, and Eean R. Crawford. 2010. Job engagement: Antecedents and effects on job performance. Academy of Management Journal 53: 617–35.

- Roberts, Richard D., Ralf Schulze, Kristin O’Brien, Carolyn MacCann, John Reid, and Andy Maul. 2006. Exploring the validity of the Mayer-Salovey-Caruso Emotional Intelligence Test (MSCEIT) with established emotions measures. Emotion 6: 663.

- Rodriguez, Anthony, Steven P. Reise, and Mark G. Haviland. 2016a. Applying bifactor statistical indices in the evaluation of psychological measures. Journal of Personality Assessment 98: 223–37.

- Rodriguez, Anthony, Steven P. Reise, and Mark G. Haviland. 2016b. Evaluating bifactor models: Calculating and interpreting statistical indices. Psychological Methods 21: 137.

- Roseman, Ira J. 2001. A model of appraisal in the emotion system. In Appraisal Processes in Emotion: Theory, Methods, Research. Edited by K. R. Scherer, A. Schorr and T. Johnstone. Oxford: Oxford University Press, pp. 68–91.

- Rosseel, Yves. 2012. lavaan: An R package for structural equation modeling. Journal of Statistical Software 48: 1–36.

- Rossen, Eric, and John H. Kranzler. 2009. Incremental validity of the Mayer–Salovey–Caruso Emotional Intelligence Test Version 2.0 (MSCEIT) after controlling for personality and intelligence. Journal of Research in Personality 43: 60–65.

- Salovey, Peter, and John D. Mayer. 1990. Emotional intelligence. Imagination, Cognition and Personality 9: 185–211.

- Sánchez-Álvarez, Nicolás, Natalio Extremera, and Pablo Fernández-Berrocal. 2016. The relation between emotional intelligence and subjective well-being: A meta-analytic investigation. The Journal of Positive Psychology 11: 276–85.

- Satorra, Albert, and Peter M. Bentler. 2001. A scaled difference chi-square test statistic for moment structure analysis. Psychometrika 66: 507–14.

- Scherer, Klaus R., Angela Schorr, and Tom Johnstone. 2001. Appraisal Processes in Emotion: Theory, Methods, Research. Oxford: Oxford University Press.

- Schlegel, Katja, Didier Grandjean, and Klaus R. Scherer. 2012. Emotion recognition: Unidimensional ability or a set of modality- and emotion-specific skills? Personality and Individual Differences 53: 16–21.

- Schlegel, Katja, and Klaus R. Scherer. 2016. Introducing a short version of the Geneva Emotion Recognition Test (GERT-S): Psychometric properties and construct validation. Behavior Research Methods 48: 1383–92.

- Schlegel, Katja, Joëlle S. Witmer, and Thomas H. Rammsayer. 2017. Intelligence and sensory sensitivity as predictors of emotion recognition ability. Journal of Intelligence 5: 35.

- Schlegel, Katja, and Marcello Mortillaro. 2019. The Geneva Emotional Competence Test (GECo): An ability measure of workplace emotional intelligence. Journal of Applied Psychology 104: 559.

- Schlegel, Katja. 2020. Inter- and intrapersonal downsides of accurately perceiving others’ emotions. In Social Intelligence and Nonverbal Communication. Edited by Robert J. Sternberg and Aleksandra Kostić. Cham: Palgrave Macmillan, pp. 359–95.

- Schneider, W. Joel, and Daniel A. Newman. 2015. Intelligence is multidimensional: Theoretical review and implications of specific cognitive abilities. Human Resource Management Review 25: 12–27.

- Schneider, W. Joel, John D. Mayer, and Daniel A. Newman. 2016. Integrating hot and cool intelligences: Thinking broadly about broad abilities. Journal of Intelligence 4: 1.

- Schneider, W. Joel, and Kevin S. McGrew. 2018. The Cattell–Horn–Carroll theory of cognitive abilities. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by D. P. Flanagan and E. M. McDonough. New York: The Guilford Press, pp. 73–163.

- Schulte, Melanie J., Malcolm James Ree, and Thomas R. Carretta. 2004. Emotional intelligence: Not much more than g and personality. Personality and Individual Differences 37: 1059–68.

- Soto, Christopher J., Christopher M. Napolitano, and Brent W. Roberts. 2020. Taking Skills Seriously: Toward an Integrative Model and Agenda for Social, Emotional, and Behavioral Skills. Current Directions in Psychological Science.

- Spearman, Charles. 1904. ‘General intelligence,’ objectively determined and measured. The American Journal of Psychology 15: 201–93.

- Sternberg, Robert J. 1984. Toward a triarchic theory of human intelligence. Behavioral and Brain Sciences 7: 269–87.

- Stucky, Brian D., and Maria Orlando Edelen. 2014. Using hierarchical IRT models to create unidimensional measures from multidimensional data. In Handbook of Item Response Theory Modeling: Applications to Typical Performance Assessment. New York: Routledge/Taylor & Francis Group, pp. 183–206.

- Styck, K. M. 2019. Psychometric issues pertaining to the measurement of specific broad and narrow intellectual abilities. In General and Specific Mental Abilities. Edited by D. J. McFarland. Newcastle: Cambridge Scholars Publishing, pp. 80–107.

- Su, Rong, Louis Tay, and Ed Diener. 2014. The development and validation of the Comprehensive Inventory of Thriving (CIT) and the Brief Inventory of Thriving (BIT). Applied Psychology: Health and Well-Being 6: 251–79.

- Tamir, Maya. 2016. Why do people regulate their emotions? A taxonomy of motives in emotion regulation. Personality and Social Psychology Review 20: 199–222.

- Thomas, K. W. 1976. Conflict and Conflict Management. In Handbook of Industrial and Organizational Psychology. Edited by M. D. Dunnette. New York: Rand McNally, pp. 889–935.

- Thurstone, Louis Leon. 1938. Primary Mental Abilities. Chicago: University of Chicago Press, vol. 119.

- van Bork, Riet, Sacha Epskamp, Mijke Rhemtulla, Denny Borsboom, and Han L. J. van der Maas. 2017. What is the p-factor of psychopathology? Some risks of general factor modeling. Theory & Psychology 27: 759–73.

- van der Linden, Dimitri, Keri A. Pekaar, Arnold B. Bakker, Julie Aitken Schermer, Philip A. Vernon, Curtis S. Dunkel, and K. V. Petrides. 2017. Overlap between the general factor of personality and emotional intelligence: A meta-analysis. Psychological Bulletin 143: 36.

- Van Der Maas, Han L. J., Conor V. Dolan, Raoul P. P. P. Grasman, Jelte M. Wicherts, Hilde M. Huizenga, and Maartje E. J. Raijmakers. 2006. A dynamical model of general intelligence: The positive manifold of intelligence by mutualism. Psychological Review 113: 842.

- Vesely Maillefer, Ashley, Shagini Udayar, and Marina Fiori. 2018. Enhancing the prediction of emotionally intelligent behavior: The PAT integrated framework involving trait EI, ability EI, and emotion information processing. Frontiers in Psychology 9: 1078.

- Vogeley, Kai, Patrick Bussfeld, Albert Newen, Sylvie Herrmann, Francesca Happé, Peter Falkai, Wolfgang Maier, Nadim J. Shah, Gereon R. Fink, and Karl Zilles. 2001. Mind reading: Neural mechanisms of theory of mind and self-perspective. Neuroimage 14: 170–81.

- Völker, Juliane. 2020. An Examination of Ability Emotional Intelligence and Its Relationships with Fluid and Crystallized Abilities in a Student Sample. Journal of Intelligence 8: 18.

- West, Stephen G., Aaron B. Taylor, and Wei Wu. 2012. Model fit and model selection in structural equation modeling. In Handbook of Structural Equation Modeling. Edited by R. Hoyle. New York: Guilford Press, pp. 209–31.

- Ybarra, Oscar, Ethan Kross, and Jeffrey Sanchez-Burks. 2014. The “big idea” that is yet to be: Toward a more motivated, contextual, and dynamic model of emotional intelligence. Academy of Management Perspectives 28: 93–107.

- Yik, Michelle, James A. Russell, and James H. Steiger. 2011. A 12-point circumplex structure of core affect. Emotion 11: 705.

- Yip, Jeremy A., and Stéphane Côté. 2013. The emotionally intelligent decision maker: Emotion-understanding ability reduces the effect of incidental anxiety on risk taking. Psychological Science 24: 48–55.

- Zeidner, Moshe, and Dorit Olnick-Shemesh. 2010. Emotional intelligence and subjective well-being revisited. Personality and Individual Differences 48: 431–35.

- Zeidner, Moshe, Gerald Matthews, and Richard D. Roberts. 2012. The emotional intelligence, health, and well-being nexus: What have we learned and what have we missed? Applied Psychology: Health and Well-Being 4: 1–30.

1. Technically, Spearman proposed a two-factor theory of intelligence in which each ability task is also uniquely influenced by a second, task-specific factor orthogonal to g. However, Spearman regarded such specific factors largely as nuisance and focused predominantly on general ability.

2. We ran supplementary analyses applying a correlational parceling strategy (Landis et al. 2000), in which items were assigned to triplets based on their strongest associations with one another. This expanded the number of factor indicators to 14 ERA parcels, 6 EU parcels, 9 ER parcels, and 7 EM parcels. Conclusions about model quality and bifactor indices were unchanged; we therefore retain the more parsimonious facet-representative parceling strategy. Results for the correlational parceling are available upon request.

3. The reverse causal ordering is also possible: people who are faring poorly in life may try to remedy this by focusing on improving their skill at reading others’ emotions.
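A minimal sketch of the correlational parceling idea in note 2, assuming a simple greedy rule because the exact assignment procedure is not spelled out here: each parcel is seeded with the most strongly correlated pair of remaining items, and the item with the highest summed correlation with that pair completes the triplet. The function names `correlational_triplets` and `block_matrix` and the toy correlation matrix are hypothetical, and this is not necessarily the algorithm the authors used or the one described by Landis et al. (2000). Items left over when the item count is not a multiple of three are simply returned separately.

```python
from itertools import combinations


def correlational_triplets(r):
    """Greedily group item indices into triplets of strongly correlated items.

    Hypothetical rule (an assumption, not the published procedure):
    seed each parcel with the most strongly correlated remaining pair,
    then add the remaining item with the highest summed correlation
    with that pair. Returns (parcels, leftover_items).
    """
    remaining = set(range(len(r)))
    parcels = []
    while len(remaining) >= 3:
        # Most strongly correlated pair among items not yet assigned.
        i, j = max(combinations(sorted(remaining), 2), key=lambda p: r[p[0]][p[1]])
        # Third item: highest summed correlation with the seed pair.
        k = max(sorted(remaining - {i, j}), key=lambda m: r[i][m] + r[j][m])
        parcels.append((i, j, k))
        remaining -= {i, j, k}
    return parcels, sorted(remaining)


def block_matrix(sizes, within, between):
    """Hypothetical toy correlation matrix with equal correlations inside each cluster."""
    labels = [c for c, size in enumerate(sizes) for _ in range(size)]
    n = len(labels)
    return [
        [1.0 if a == b else (within if labels[a] == labels[b] else between) for b in range(n)]
        for a in range(n)
    ]


if __name__ == "__main__":
    toy = block_matrix([3, 3, 1], within=0.5, between=0.1)
    print(correlational_triplets(toy))  # ([(0, 1, 2), (3, 4, 5)], [6])
```

A greedy rule is easy to audit, but it does not search for the globally best partition; an exhaustive or optimization-based assignment could produce different triplets from the same correlation matrix.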

| Statistical Index | 33rd Percentile | 66th Percentile |
|---|---|---|
| Omega (total scale) | .92 | .95 |
| Omega (subscale) | .82 | .90 |
| OmegaH | .76 | .84 |
| OmegaHS | .20 | .34 |
| ECV | .61 | .70 |
| PUC | .63 | .72 |
| FD (general) | .93 | .96 |
| FD (group) | .76 | .85 |
| H (general) | .90 | .93 |
| H (group) | .48 | .63 |
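The percentile table lends itself to a simple lookup that places an observed index value in the lower, middle, or upper third. The sketch below assumes that the 33rd and 66th percentile columns describe some reference distribution of bifactor results (the table itself does not say which) and treats a value equal to a cutoff as belonging to the higher band; the helper name `tercile` is hypothetical. The example values (ECV = .40, FD = .82, and H = .73 for the general factor) are read from the loadings table, and the comparison is purely illustrative rather than the authors' interpretation.

```python
# (33rd percentile, 66th percentile) cutoffs transcribed from the table above.
BENCHMARKS = {
    "Omega (total scale)": (0.92, 0.95),
    "Omega (subscale)": (0.82, 0.90),
    "OmegaH": (0.76, 0.84),
    "OmegaHS": (0.20, 0.34),
    "ECV": (0.61, 0.70),
    "PUC": (0.63, 0.72),
    "FD (general)": (0.93, 0.96),
    "FD (group)": (0.76, 0.85),
    "H (general)": (0.90, 0.93),
    "H (group)": (0.48, 0.63),
}


def tercile(index, value):
    """Hypothetical helper: place a value relative to the two percentile cutoffs.

    Values equal to a cutoff are assigned to the higher band (an assumption).
    """
    low, high = BENCHMARKS[index]
    if value < low:
        return "below 33rd percentile"
    if value < high:
        return "33rd to 66th percentile"
    return "66th percentile or above"


if __name__ == "__main__":
    # General-factor values read from the loadings table (illustrative only).
    for index, value in [("ECV", 0.40), ("FD (general)", 0.82), ("H (general)", 0.73)]:
        print(f"{index}: {value:.2f} -> {tercile(index, value)}")
```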

| Var | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. ARM | 3.58 | 1.87 | .61 |
| 2. ERA | .56 | .12 | .33 ** | .66 |
| 3. EU | .66 | .13 | .29 ** | .34 ** | .55 |
| 4. ER | .56 | .11 | −.03 | .00 | −.01 | .75 |
| 5. EM | .44 | .16 | .26 ** | .34 ** | .35 ** | .15 ** | .61 |
| 6. GECo | .55 | .08 | .35 ** | .65 ** | .68 ** | .39 ** | .79 ** | .79 |
| 7. O | 4.98 | 1.04 | .12 ** | .13 ** | .09 ** | .11 ** | .10 ** | .17 ** | .76 |
| 8. C | 4.65 | 1.17 | −.07 * | −.14 ** | −.07 | .17 ** | −.02 | −.03 | −.01 | .78 |
| 9. E | 4.13 | 1.32 | −.13 ** | −.06 | −.10 ** | .15 ** | −.07 * | −.05 | .18 ** | .03 | .84 |
| 10. A | 5.48 | .95 | −.01 | .11 ** | .13 ** | .06 | .13 ** | .17 ** | .24 ** | .03 | .22 ** | .77 |
| 11. N | 4.17 | 1.10 | .05 | .10 ** | .03 | −.30 ** | −.02 | −.06 | −.06 | −.19 ** | −.11 ** | .00 | .73 |
| 12. Thri | 5.54 | .98 | −.13 ** | −.12 ** | −.07 | .26 ** | −.02 | .00 | .10 ** | .27 ** | .34 ** | .20 ** | −.32 ** | .96 |
| 13. PF | 4.97 | .92 | −.13 ** | −.10 ** | −.04 | .21 ** | −.03 | .00 | .07 * | .15 ** | .31 ** | .20 ** | −.29 ** | .61 ** | .92 |
| 14. NF | 3.39 | 1.08 | .08 * | .11 ** | .05 | −.24 ** | −.00 | −.02 | −.04 | −.23 ** | −.18 ** | −.11 ** | .44 ** | −.52 ** | −.53 ** | .90 |
| 15. AfE | 5.30 | 1.02 | −.05 | −.06 | .03 | .17 ** | .03 | .06 | .11 ** | .13 ** | .13 ** | .20 ** | −.19 ** | .47 ** | .52 ** | −.38 ** | .95 |
| 16. GPA | 3.22 | .51 | .11 ** | .19 ** | .16 ** | −.05 | .11 ** | .17 ** | .10 ** | .10 ** | .05 | .11 ** | .04 | .17 ** | .11 ** | −.10 ** | .08 * |

| Model | χ² | df | TLI | CFI | RMSEA (90% CI) | SRMR | AIC | BIC |
|---|---|---|---|---|---|---|---|---|
| Model 1: One-factor | 894.262 | 135 | .546 | .599 | .083 (.078–.088) | .078 | −6079.704 | −5910.125 |
| Model 2: Five-factor | 117.990 | 125 | 1.00 | 1.00 | .000 (.000–.015) | .025 | −6842.713 | −6626.029 |
| Model 3: Hierarchical | 146.341 | 130 | .990 | .991 | .012 (.000–.022) | .033 | −6824.688 | −6631.556 |
| Model 4: Bifactor | 125.545 | 117 | .994 | .996 | .009 (.000–.020) | .030 | −6819.490 | −6565.122 |
| Model 5: Bifactor-mod | 100.662 | 116 | 1.00 | 1.00 | .000 (.000–.011) | .021 | −6842.358 | −6583.279 |
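Information criteria are easiest to read as differences from the best-fitting model. A minimal sketch, assuming all five models were fit to the same data with the same estimator (the table implies but does not state this): it computes ΔAIC, ΔBIC, and Akaike weights, a standard transformation of AIC that the table does not report. With the transcribed values, AIC narrowly favors Model 2 over Model 5 (ΔAIC = 0.355), whereas BIC, which penalizes additional parameters more heavily, favors Model 3.

```python
import math

# (AIC, BIC) pairs transcribed from the model-comparison table above.
FIT = {
    "Model 1: One-factor": (-6079.704, -5910.125),
    "Model 2: Five-factor": (-6842.713, -6626.029),
    "Model 3: Hierarchical": (-6824.688, -6631.556),
    "Model 4: Bifactor": (-6819.490, -6565.122),
    "Model 5: Bifactor-mod": (-6842.358, -6583.279),
}


def deltas(scores):
    """Difference between each model's criterion value and the lowest value."""
    best = min(scores.values())
    return {model: value - best for model, value in scores.items()}


def akaike_weights(aic):
    """Akaike weights: exp(-dAIC / 2), normalized to sum to 1."""
    rel = {model: math.exp(-0.5 * d) for model, d in deltas(aic).items()}
    total = sum(rel.values())
    return {model: r / total for model, r in rel.items()}


if __name__ == "__main__":
    aic = {model: pair[0] for model, pair in FIT.items()}
    bic = {model: pair[1] for model, pair in FIT.items()}
    d_aic, d_bic, w = deltas(aic), deltas(bic), akaike_weights(aic)
    for model in FIT:
        print(f"{model:<24} dAIC={d_aic[model]:8.3f}  w={w[model]:.3f}  dBIC={d_bic[model]:8.3f}")
```

Akaike weights express relative support only among the models compared; they say nothing about absolute fit, which the TLI, CFI, RMSEA, and SRMR columns address.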

| Parcels | General | GF | ERA | EU | ER | EM |
|---|---|---|---|---|---|---|
| ARM1 | .345 (.012) | .486 (.016) | | | | |
| ARM2 | .466 (.013) | .486 (.021) | | | | |
| ARM3 | .262 (.009) | .365 (.011) | | | | |
| ERA1 | .469 (.006) | | .376 (.010) | | | |
| ERA2 | .400 (.010) | | .298 (.015) | | | |
| ERA3 | .345 (.008) | | .404 (.014) | | | |
| ERA4 | .321 (.011) | | .151 (.015) | | | |
| EU1 | .329 (.009) | | | .419 (.022) | | |
| EU2 | .357 (.009) | | | .252 (.016) | | |
| EU3 | .318 (.011) | | | .231 (.020) | | |
| EU4 | .499 (.010) | | | .186 (.018) | | |
| ER1 | .009 (.005) | | | | .617 (.005) | |
| ER2 | −.006 (.005) | | | | .600 (.005) | |
| ER3 | .122 (.006) | | | | .823 (.006) | |
| EM1 | .499 (.011) | | | | | .422 (.015) |
| EM2 | .333 (.010) | | | | | .399 (.014) |
| EM3 | .296 (.009) | | | | | .272 (.013) |
| EM4 | .416 (.010) | | | | | .398 (.015) |
| ECV_GS | .40 | .40 | .59 | .64 | .01 | .52 |
| ECV_SG | .40 | .11 | .07 | .06 | .25 | .10 |
| FD | .82 | .66 | .56 | .51 | .88 | .62 |
| H | .73 | .44 | .32 | .27 | .77 | .40 |
| Omega | .78 | .60 | .56 | .53 | .73 | .62 |
| OmegaH/OmegaHS | .59 | .36 | .22 | .18 | .77 | .40 |
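Most of the model-based indices in the loadings table follow from the standardized loadings through closed-form formulas: ECV, omega, omegaH/omegaHS, and Hancock and Mueller's construct replicability H; PUC depends only on how many parcels load on each group factor. The sketch below assumes orthogonal factors, standardized parcels, and no residual covariances, and it drops the standard errors; FD is omitted because it requires the full model-implied covariance matrix. Under these assumptions it reproduces every ECV, omega, and H entry in the table to two decimals, as well as the general, GF, ERA, and EU entries of the last row. The ER and EM entries of that row come out lower (about .72 and .30), so treat the sketch as a consistency check rather than the authors' computation. All function names are hypothetical.

```python
# Standardized (general, group) loadings per parcel, transcribed from the
# loadings table with standard errors dropped.
LOADINGS = {
    "GF": [(0.345, 0.486), (0.466, 0.486), (0.262, 0.365)],
    "ERA": [(0.469, 0.376), (0.400, 0.298), (0.345, 0.404), (0.321, 0.151)],
    "EU": [(0.329, 0.419), (0.357, 0.252), (0.318, 0.231), (0.499, 0.186)],
    "ER": [(0.009, 0.617), (-0.006, 0.600), (0.122, 0.823)],
    "EM": [(0.499, 0.422), (0.333, 0.399), (0.296, 0.272), (0.416, 0.398)],
}


def construct_h(loadings):
    """Hancock and Mueller's H: S / (1 + S), with S = sum(l^2 / (1 - l^2))."""
    s = sum(l * l / (1 - l * l) for l in loadings)
    return s / (1 + s)


def puc(group_sizes):
    """Proportion of indicator correlations not shared within a group factor."""
    n = sum(group_sizes)
    total = n * (n - 1) / 2
    within = sum(m * (m - 1) / 2 for m in group_sizes)
    return (total - within) / total


def bifactor_indices(loadings):
    general = [g for rows in loadings.values() for g, _ in rows]
    # Residual variance of a standardized parcel is 1 minus its communality.
    resid = {k: sum(1 - g * g - s * s for g, s in rows) for k, rows in loadings.items()}
    group_sum = {k: sum(s for _, s in rows) for k, rows in loadings.items()}
    common = sum(g * g + s * s for rows in loadings.values() for g, s in rows)
    # Model-implied variance of the unit-weighted total score.
    total_var = sum(general) ** 2 + sum(v ** 2 for v in group_sum.values()) + sum(resid.values())

    out = {"general": {
        "ECV": sum(g * g for g in general) / common,
        "omega": (total_var - sum(resid.values())) / total_var,
        "omegaH": sum(general) ** 2 / total_var,
        "H": construct_h(general),
    }}
    for k, rows in loadings.items():
        g_sum = sum(g for g, _ in rows)
        sub_var = g_sum ** 2 + group_sum[k] ** 2 + resid[k]
        out[k] = {
            "ECV_GS": sum(g * g for g, _ in rows) / sum(g * g + s * s for g, s in rows),
            "ECV_SG": sum(s * s for _, s in rows) / common,
            "omegaS": (sub_var - resid[k]) / sub_var,
            "omegaHS": group_sum[k] ** 2 / sub_var,
            "H": construct_h([s for _, s in rows]),
        }
    return out


if __name__ == "__main__":
    for factor, values in bifactor_indices(LOADINGS).items():
        print(factor, {name: round(v, 2) for name, v in values.items()})
    print("PUC", round(puc([len(rows) for rows in LOADINGS.values()]), 3))
```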

| | Correlations | | | | | | | | | Outcomes (Standardized Beta Weights) | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Factor | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | GPA | TH | PF | NF | AE |
| 1. GA-FI | | | | | | | | | | .138 ** | −.108 ** | −.125 ** | .090 * | −.039 |
| 2. ERA | .00 | | | | | | | | | .185 * | −.041 | −.046 | .018 | −.158 * |
| 3. EU | .00 | .49 ** | | | | | | | | .115 | .029 | .038 | .029 | .131 |
| 4. ER | .00 | .03 | .03 | | | | | | | −.129 * | .091 † | .075 | −.033 | .115 * |
| 5. EM | .00 | .43 ** | .53 ** | .26 ** | | | | | | −.021 | −.034 | −.011 | −.005 | −.024 |
| 6. O | .00 | .19 ** | .12 † | .16 ** | .14 * | | | | | .043 | −.056 | −.102 * | .117 * | .026 |
| 7. C | .00 | −.21 ** | −.09 | .23 ** | .02 | .02 | | | | .178 ** | .189 ** | .040 | −.099 * | .105 * |
| 8. E | .00 | −.03 | −.11 † | .18 ** | −.07 | .26 ** | .00 | | | .083 † | .295 ** | .272 ** | −.113 * | .056 |
| 9. A | .00 | .18 ** | .22 ** | .07 | .24 ** | .36 ** | .04 | .26 ** | | .052 | .169 ** | .198 ** | −.122 * | .214 ** |
| 10. N | .00 | .14 * | .03 | −.43 ** | −.11 † | −.18 ** | −.34 ** | −.17 ** | .02 | −.002 | −.263 ** | −.288 ** | .543 ** | −.117 * |
| R² | | | | | | | | | | .119 | .351 | .282 | .401 | .162 |
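For a standardized outcome regressed on standardized predictors, the explained variance is R² = βᵀΦβ, where β holds the standardized weights and Φ is the predictor correlation matrix. A minimal sketch, assuming the latent predictors have unit variance and that the lower-triangle correlations in the table above are the complete predictor correlation matrix: it rebuilds Φ and applies the identity to each outcome's beta weights. Because the published values are rounded, agreement with the R² row is only approximate; with these inputs GPA, NF, and AE land within about .01 of the reported .119, .401, and .162. The helper names are hypothetical, and this is a consistency check rather than the authors' estimation procedure.

```python
# Factor order follows the numbered rows of the table.
FACTORS = ["GA-FI", "ERA", "EU", "ER", "EM", "O", "C", "E", "A", "N"]

# Lower-triangle correlations: row i holds r with factors 1..i-1 (diagonal omitted).
LOWER = [
    [],
    [0.00],
    [0.00, 0.49],
    [0.00, 0.03, 0.03],
    [0.00, 0.43, 0.53, 0.26],
    [0.00, 0.19, 0.12, 0.16, 0.14],
    [0.00, -0.21, -0.09, 0.23, 0.02, 0.02],
    [0.00, -0.03, -0.11, 0.18, -0.07, 0.26, 0.00],
    [0.00, 0.18, 0.22, 0.07, 0.24, 0.36, 0.04, 0.26],
    [0.00, 0.14, 0.03, -0.43, -0.11, -0.18, -0.34, -0.17, 0.02],
]

# Standardized beta weights per outcome, in FACTORS order.
BETAS = {
    "GPA": [0.138, 0.185, 0.115, -0.129, -0.021, 0.043, 0.178, 0.083, 0.052, -0.002],
    "TH": [-0.108, -0.041, 0.029, 0.091, -0.034, -0.056, 0.189, 0.295, 0.169, -0.263],
    "PF": [-0.125, -0.046, 0.038, 0.075, -0.011, -0.102, 0.040, 0.272, 0.198, -0.288],
    "NF": [0.090, 0.018, 0.029, -0.033, -0.005, 0.117, -0.099, -0.113, -0.122, 0.543],
    "AE": [-0.039, -0.158, 0.131, 0.115, -0.024, 0.026, 0.105, 0.056, 0.214, -0.117],
}


def full_matrix(lower):
    """Expand a lower triangle (no diagonal) into a symmetric matrix with unit diagonal."""
    n = len(lower)
    phi = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i, row in enumerate(lower):
        for j, r in enumerate(row):
            phi[i][j] = phi[j][i] = r
    return phi


def implied_r2(beta, phi):
    """R^2 = beta' Phi beta for standardized predictors and outcome."""
    n = len(beta)
    return sum(beta[i] * phi[i][j] * beta[j] for i in range(n) for j in range(n))


if __name__ == "__main__":
    phi = full_matrix(LOWER)
    assert all(len(b) == len(FACTORS) for b in BETAS.values())
    for outcome, beta in BETAS.items():
        print(outcome, round(implied_r2(beta, phi), 3))
```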

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Simonet, D.V.; Miller, K.E.; Askew, K.L.; Sumner, K.E.; Mortillaro, M.; Schlegel, K. How Multidimensional Is Emotional Intelligence? Bifactor Modeling of Global and Broad Emotional Abilities of the Geneva Emotional Competence Test. J. Intell. 2021, 9, 14. https://doi.org/10.3390/jintelligence9010014
