Convolutional Neural Network Based on Crossbar Arrays of (Co-Fe-B)x(LiNbO3)100−x Nanocomposite Memristors

Abstract

1. Introduction

2. Materials and Methods

2.1. Device Fabrication

2.2. Electrical Measurements

2.3. TEM

2.4. Hardware Convolutional Layer Implementation

2.5. Neural Network Simulation

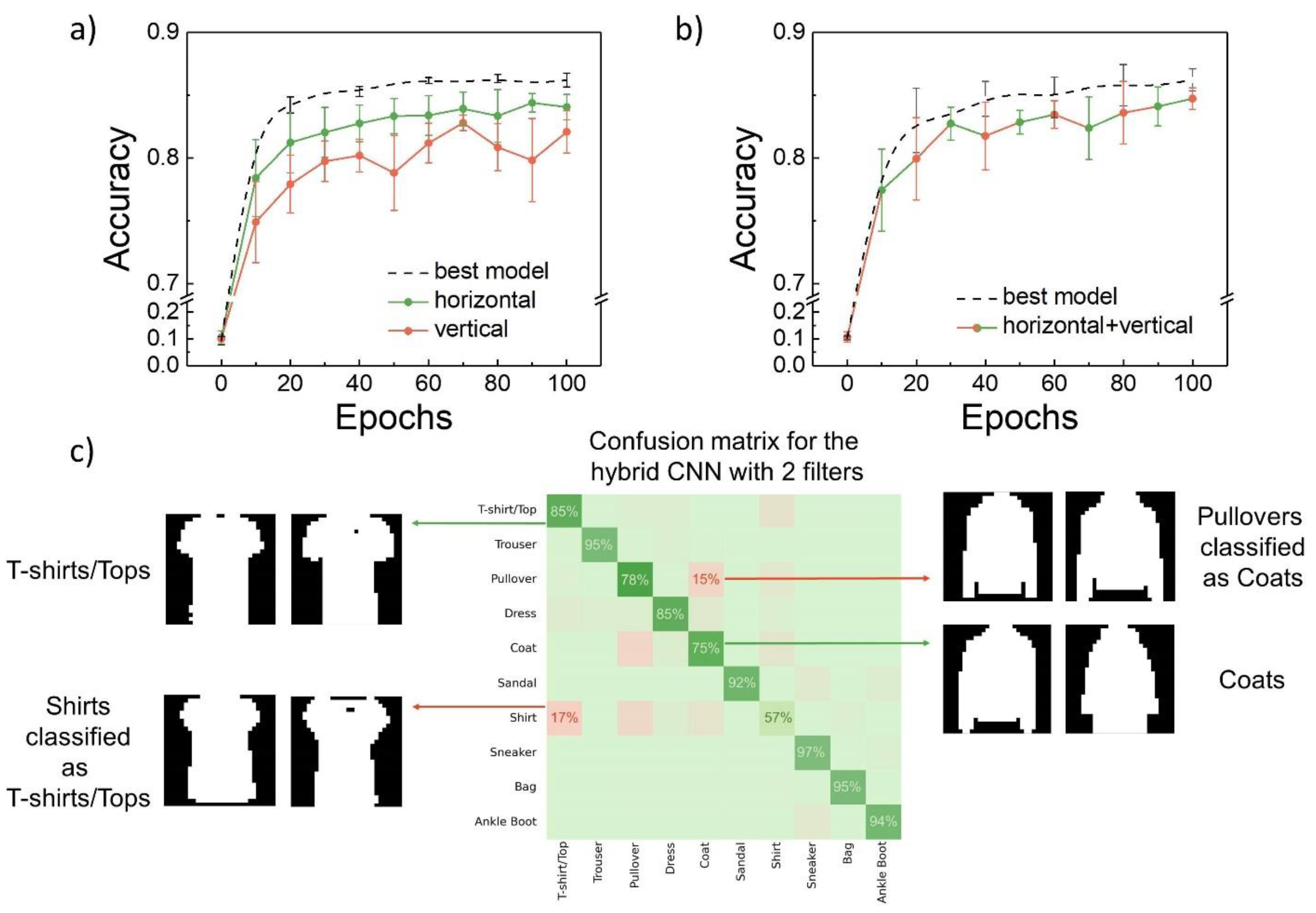

3. Results and Discussion

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Matsukatova, A.N.; Iliasov, A.I.; Nikiruy, K.E.; Kukueva, E.V.; Vasiliev, A.L.; Goncharov, B.V.; Sitnikov, A.V.; Zanaveskin, M.L.; Bugaev, A.S.; Demin, V.A.; et al. Convolutional Neural Network Based on Crossbar Arrays of (Co-Fe-B)x(LiNbO3)100−x Nanocomposite Memristors. Nanomaterials 2022, 12, 3455. https://doi.org/10.3390/nano12193455
