1. Introduction

Compacted graphite iron (CGI) has been known since 1948, but its use only took place in the late 1960s, when serial production of automotive parts made of ferritic CGI began in Austria [

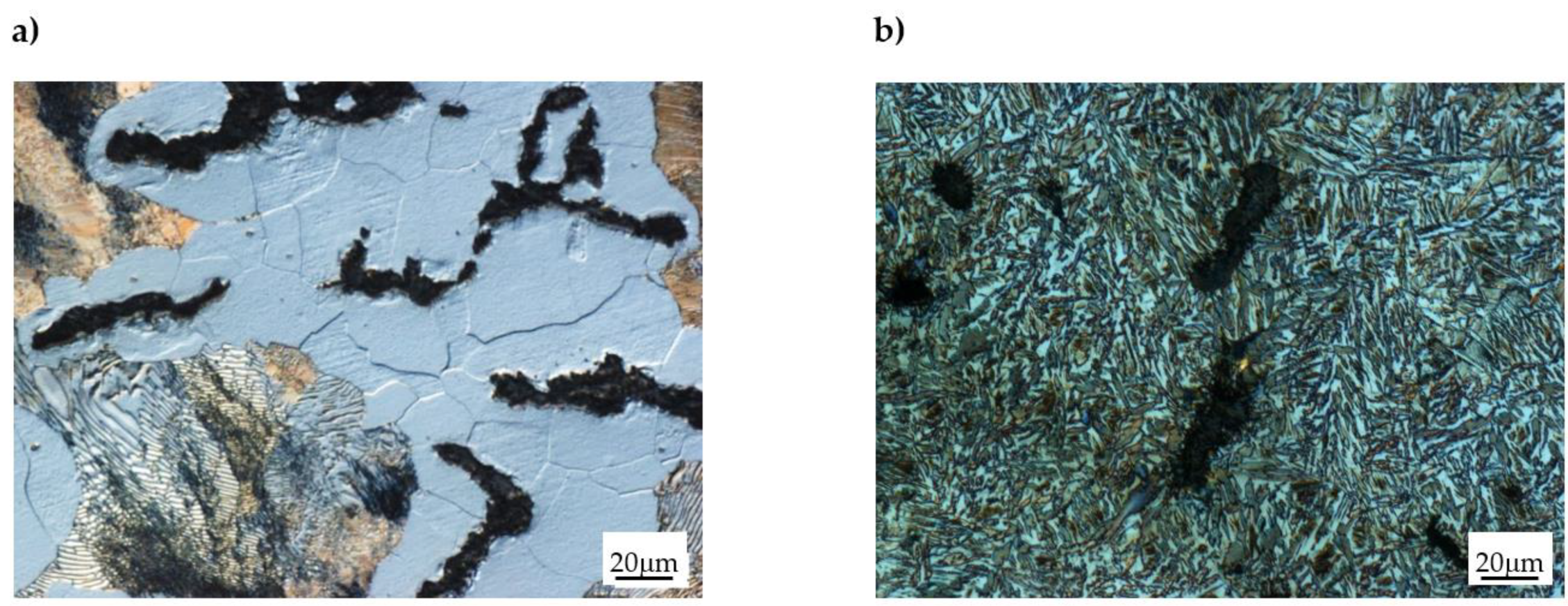

1]. Its production process requires maintaining a strict technological regime due to the narrow concentration range of elements ensuring the compacted (vermicular) shape of graphite. Owing to its unique properties combining good tensile strength with high thermal shock resistance, it is mostly used in the automotive industry. An example of a CGI microstructure is shown in

Figure 1a,b.

The matrix of unalloyed CGI usually consists of ferrite and pearlite (Figure 1a). Because of the large contact surface between graphite and the metal matrix, this cast iron has a greater tendency to form ferrite than nodular or grey cast iron. As defined in the EN 16079 standard, the typical CGI grades have a minimum tensile strength of 300 to 500 MPa. Copper, nickel, or tin are added to obtain a pearlitic matrix. Owing to the unique properties of CGI, its technology is still being developed, as reflected in many publications [2,3,4,5,6,7,8,9,10,11]. Much information is available on the effect of alloying additions on the microstructure and properties of CGI, e.g., [12,13,14]. As in nodular cast iron, ausferrite can also be obtained in CGI by heat treatment [15,16,17,18]. Such cast iron is sometimes referred to as austempered vermicular iron (AVI) or austempered vermicular cast iron (AVCI). Ausferrite is a mixture of bainitic ferrite and high-carbon austenite. In cast iron it is usually obtained by austempering, whose course is shown in Figure 2.

The essence of this heat treatment (called austempering) is heating to the austenitization temperature (approx. 900–950 °C) and holding at that temperature to obtain an austenitic matrix. The casting is then quenched in a salt bath at 250–400 °C and held there isothermally. The isothermal holding time must be long enough for ausferrite to form but short enough to prevent the precipitation of carbides, which reduce elongation; carbide precipitation would result in the formation of bainite (upper and/or lower).
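The cycle can be captured as a small parameter record that checks a proposed schedule against the temperature windows quoted above. This is a minimal Python sketch: the class and field names are hypothetical, the holding times in the example are arbitrary placeholders rather than recommended values, and the maximum safe holding time in the bath is not modeled because the text gives no value for it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AustemperingCycle:
    """Parameters of one austempering cycle (hypothetical helper, not from the paper)."""

    austenitize_c: float    # austenitization temperature, deg C
    austenitize_min: float  # hold at austenitization temperature, min
    bath_c: float           # salt-bath (isothermal) temperature, deg C
    bath_min: float         # isothermal hold in the bath, min

    def check(self) -> list[str]:
        """Return the ways in which the cycle departs from the windows quoted in the text."""
        problems = []
        if not 900.0 <= self.austenitize_c <= 950.0:
            problems.append("austenitization temperature outside approx. 900-950 C")
        if not 250.0 <= self.bath_c <= 400.0:
            problems.append("salt-bath temperature outside 250-400 C")
        if self.austenitize_min <= 0 or self.bath_min <= 0:
            problems.append("holding times must be positive")
        # The upper limit on bath_min (onset of carbide precipitation) depends on
        # composition and bath temperature, so it is not checked here.
        return problems


# Hold times below are arbitrary placeholders, not recommended values.
cycle = AustemperingCycle(austenitize_c=920.0, austenitize_min=90.0, bath_c=350.0, bath_min=60.0)
print(cycle.check())  # -> []
```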

There is also an alternative way to obtain an ausferritic matrix, i.e., by modifying the chemical composition of the cast iron with molybdenum together with copper or nickel [19,20,21]. The microstructure of ausferritic CGI is shown in Figure 1b. The key element is molybdenum, which significantly increases the stability of austenite in the pearlitic range but has little effect on it in the bainitic range, as shown schematically in Figure 3. Experimental data on the effect of molybdenum on the stability of austenite during continuous cooling transformation (CCT) can be found in [21].

As shown in Figure 3, for the same cooling rate “v”, austenite in cast iron without Mo transforms into ferrite and pearlite, whereas in cast iron with Mo it transforms into bainitic ferrite. This makes it possible to produce ausferritic CGI without heat treatment.

In the era of Industry 4.0, when processes are progressively automated and production is moving towards cyber-physical systems, there is still room for human creativity. The needs and threats related to this aspect of the industrial revolution have been the subject of scientific publications for several years [22,23,24]. Artificial intelligence makes it possible to improve people’s work and optimize processes, but it often still requires a human operator to make decisions. Humans also play an essential role in creating system concepts, designing and planning production, and designing new materials, and in these areas their role has so far remained unchallenged. Artificial intelligence can only solve clearly defined problems, whereas defining problems requires imagination and the ability to anticipate the technological barriers facing future concepts, which only humans possess. The difference between two families of artificial intelligence methods, data mining and machine learning, is also worth emphasizing. In many respects, data mining and machine learning use the same group of algorithms; the main difference lies in how their results are used. Machine learning produces black-box models that are processed by a computer to enable the machine to make decisions. Data mining is primarily aimed at discovering the knowledge contained in data structures, relationships, and patterns, and its beneficiary is the human being. A human needs explanations; a numerical result alone is not enough. The engineer expects a description of reality, a model of the dependencies, and information on the basis on which a given solution was obtained. This explains the popularity of Explainable Artificial Intelligence methods, whose purpose is to explain the rationale behind the decisions made by artificial intelligence models. Individual algorithms used in intelligent data analysis differ in interpretability: decision trees [25,26,27], which were very popular for many years, have been largely replaced by more accurate techniques such as artificial neural networks and support vector machines [28], which offer greater accuracy at the expense of interpretability. There is now a return to methods that are simpler (more transparent) for humans but modified to increase their efficiency. Unsupervised learning methods, such as cluster analysis, are also used to discover patterns among objects (materials, processes, etc.).

The problem analyzed in this paper concerns the prediction of the volume fractions of the constituents in the microstructure of compacted graphite iron. It is related to the authors’ earlier research [29,30], but this time the focus is on a higher level of inference. The prediction of the microstructure broken down into individual constituents, particularly ausferrite, has already been partially solved. However, a comprehensive model that would allow the experimenter to select an appropriate model for predicting each constituent was still missing.

The use of ML methods in metals engineering is becoming increasingly popular [31]. Many works apply supervised learning (ANNs [32], kNN [33], DTs [32], and others such as XGBoost or ridge regression [34]) to predict the properties of metals, and others show that unsupervised learning, especially k-means, can be used for image segmentation [35].

Predicting material properties with machine learning has attracted considerable interest. In [36,37], ML methods were used to link defects with mechanical properties. ML tools have also been used to improve control over the production process [38].

However, the studies most similar to the one presented in this article concern the prediction of the properties of metals, for example, the use of neural networks to predict properties [39,40] or to predict the properties of new Fe2.5Ni2.5CrAl alloys [41].

It is much more difficult to find examples of research using ML tools to analyze CGI properties. Recent publications on this material use either qualitative analysis [42,43,44] or the traditional statistical approach to search for dependencies and build linear regression models [45,46].

This paper presents a new approach to microstructure-based property modeling. It combines supervised models (ANNs) tailored to the characteristics of individual phases with unsupervised clustering (k-means), whose segmentation of the data is used to build a metamodel (a decision tree) that selects the correct submodel.

The proposed solution consists of several elements: white-box elements (classification trees), which reveal relationships and are easy to interpret for an expert in material design, and black-box elements (neural networks), which precisely predict the fraction of individual microstructural constituents. This approach combines the advantages of both techniques, enabling both easy interpretation of dependencies and precise prediction. A further advantage is the automation of data analysis (using k-means clustering) and of microstructure prediction with data-driven models, which is expected to speed up and optimize the experimental design of castings made of various grades of compacted graphite iron.

4. Model Construction Procedure

The procedure for creating models that predict the percentage composition of the CGI microstructure was developed in parallel with the influx of experimental data, which gradually added new chemical elements and their fractions. Only models trained on the latest data set are therefore included in the analysis and comparison. It is worth noting that each record in the data set is a separate experimental melt, whose properties and chemical composition constitute a separate, unique, and unrepeatable result. Obtaining this type of data is tedious and costly and requires careful examination of each object in the database and of the interrelationships between individual objects.

Figure 5 presents the three variants of the modeling procedure that are compared. Variant 1 is the classic approach: a neural network is trained on the whole data set and returns at its output the percentage of each CGI constituent, i.e., ferrite, pearlite, martensite, austenite, and ausferrite. This approach is a common starting point for research and serves as the reference (comparative) model.
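A minimal sketch of variant 1 in Python with NumPy, assuming a single hidden layer trained by plain full-batch gradient descent. The text does not specify the network architecture, so the layer size, learning rate, and the softmax output (chosen here so that the predicted fractions are non-negative and sum to 100%) are all assumptions; the class name `FractionNet` is hypothetical and the training data are synthetic.

```python
import numpy as np

# Output order of the network (constituent names from the text).
CONSTITUENTS = ("ferrite", "pearlite", "martensite", "austenite", "ausferrite")


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


class FractionNet:
    """One hidden tanh layer; softmax outputs give non-negative fractions summing to 100%."""

    def __init__(self, n_in, n_out, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, hidden))
        self.b1 = np.zeros(hidden)
        self.W2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, n_out))
        self.b2 = np.zeros(n_out)

    def _forward(self, Xs):
        H = np.tanh(Xs @ self.W1 + self.b1)
        return H, softmax(H @ self.W2 + self.b2)

    def fit(self, X, Y_pct, epochs=2000, lr=0.5):
        """Full-batch gradient descent on cross-entropy between predicted and measured fractions."""
        self.mu, self.sd = X.mean(axis=0), X.std(axis=0) + 1e-9  # input standardization
        Xs, T = (X - self.mu) / self.sd, Y_pct / 100.0
        for _ in range(epochs):
            H, P = self._forward(Xs)
            G = (P - T) / len(Xs)                  # gradient w.r.t. the output logits
            GH = (G @ self.W2.T) * (1.0 - H ** 2)  # back-propagated through tanh
            self.W2 -= lr * (H.T @ G)
            self.b2 -= lr * G.sum(axis=0)
            self.W1 -= lr * (Xs.T @ GH)
            self.b1 -= lr * GH.sum(axis=0)
        return self

    def predict(self, X):
        """Predicted fractions in %, one row per melt, columns in CONSTITUENTS order."""
        return 100.0 * self._forward((X - self.mu) / self.sd)[1]


# Synthetic illustration only, NOT the authors' data: columns of X are Mo and Cu (wt.%),
# and the made-up rule below simply makes ausferrite grow with Mo and pearlite with Cu.
rng = np.random.default_rng(1)
X = rng.uniform([0.0, 0.0], [1.5, 2.0], size=(60, 2))
aus = np.minimum(60.0 * X[:, 0], 90.0)
pearl = (100.0 - aus) * X[:, 1] / 2.0
Y = np.column_stack([100.0 - aus - pearl, pearl, np.zeros(60), np.zeros(60), aus])

model = FractionNet(n_in=2, n_out=len(CONSTITUENTS)).fit(X, Y)
print(dict(zip(CONSTITUENTS, model.predict(np.array([[1.2, 1.0]]))[0].round(1))))
```

The softmax output is one design option; a linear output layer with clipping would also work but would not guarantee that the predicted fractions add up to 100%.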

More focused research involves creating and training separate neural networks for each phase (constituent) of the microstructure. In variant 2, the data set is split into separate partitions according to the microstructure composition. A network is trained for each partition and returns the predicted content of a given constituent as a function of the chemical composition of the alloy. Several models are thus obtained, and which one is applied depends on the chemical composition and on the knowledge of the expert or analyst who selects a specific model for prediction. This expert must therefore know the approximate effect of a given chemical composition: already at the stage of designing the chemical composition, the expert must know which constituent to expect, as only then can he or she choose the model that will precisely determine its quantitative content. Hence, although the procedure is quite precise, it places high demands on the expert, and choosing the wrong model leads to prediction errors.
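Variant 2 can be sketched as follows, again in Python with NumPy and synthetic data. The partition rule (grouping melts by their dominant constituent), the network size, and the helper names are assumptions made for illustration; the text states only that the data set is split according to the microstructure composition and that one network is trained per partition.

```python
import numpy as np

CONSTITUENTS = ("ferrite", "pearlite", "martensite", "austenite", "ausferrite")


class SingleOutputNet:
    """One hidden tanh layer and a linear output, trained by full-batch gradient descent on MSE."""

    def __init__(self, n_in, hidden=8, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), hidden)
        self.b2 = 0.0

    def fit(self, X, y, epochs=3000, lr=0.05):
        self.mu, self.sd = X.mean(axis=0), X.std(axis=0) + 1e-9  # input scaling
        self.ym, self.ys = y.mean(), y.std() + 1e-9              # target scaling
        Xs, t = (X - self.mu) / self.sd, (y - self.ym) / self.ys
        for _ in range(epochs):
            H = np.tanh(Xs @ self.W1 + self.b1)
            g = 2.0 * (H @ self.w2 + self.b2 - t) / len(Xs)  # dMSE/d(output)
            GH = np.outer(g, self.w2) * (1.0 - H ** 2)       # back-propagated through tanh
            self.w2 -= lr * (H.T @ g)
            self.b2 -= lr * g.sum()
            self.W1 -= lr * (Xs.T @ GH)
            self.b1 -= lr * GH.sum(axis=0)
        return self

    def predict(self, X):
        H = np.tanh(((X - self.mu) / self.sd) @ self.W1 + self.b1)
        return (H @ self.w2 + self.b2) * self.ys + self.ym


def train_submodels(X, Y_pct, min_size=5):
    """Partition melts by dominant constituent (an assumed rule) and train one network per partition."""
    dominant = np.argmax(Y_pct, axis=1)
    models = {}
    for k in np.unique(dominant):
        idx = np.flatnonzero(dominant == k)
        if len(idx) >= min_size:  # skip partitions too small to train on
            models[CONSTITUENTS[k]] = SingleOutputNet(X.shape[1]).fit(X[idx], Y_pct[idx, k])
    return models


# Synthetic illustration only, NOT the authors' data (columns of X: Mo, Cu in wt.%).
rng = np.random.default_rng(1)
X = rng.uniform([0.0, 0.0], [1.5, 2.0], size=(60, 2))
aus = np.minimum(60.0 * X[:, 0], 90.0)
pearl = (100.0 - aus) * X[:, 1] / 2.0
Y = np.column_stack([100.0 - aus - pearl, pearl, np.zeros(60), np.zeros(60), aus])

models = train_submodels(X, Y)
# The expert has to decide in advance which constituent to expect, e.g. ausferrite for a Mo-rich melt.
print(models["ausferrite"].predict(np.array([[1.2, 1.0]])))
```

The dictionary lookup in the last line is exactly the step that depends on the expert: picking the wrong key returns a confident prediction for the wrong constituent.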

To avoid such doubts, variant 3 was developed (Figure 5).

Variant 3 creates a metamodel that selects the appropriate submodel. The metamodel is designed to capture knowledge about the basic relations in the data set, including those between the microstructure composition and the type of alloying additions. It is therefore intended to replace the expert’s approximate knowledge of the resulting microstructure and to run the correct submodel for precise prediction. The metamodel used in this study was built with two machine learning techniques. Unsupervised learning (clustering) was used to obtain groups of materials with similar microstructure and chemical composition; a classification tree was then induced for these groups (clusters) which, based on the chemical composition of a sample, indicates the correct microstructure class. In this way, the appropriate ANN submodel is selected, enabling precise prediction of the percentage composition of the microstructure.
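A compact sketch of variant 3 under stated assumptions: k-means groups the melts by standardized composition and fractions, a depth-limited CART-style classification tree learns to assign a composition to a cluster, and the predicted cluster selects a submodel. To keep the block short and dependent on NumPy only, each ANN submodel is replaced here by a multi-output linear least-squares fit; the number of clusters, the tree depth, and all function names are hypothetical, and the data are synthetic.

```python
import numpy as np


def kmeans(Z, k, iters=100, seed=0):
    """Lloyd's k-means; returns one cluster label per row of Z."""
    rng = np.random.default_rng(seed)
    C = Z[rng.choice(len(Z), size=k, replace=False)]  # initial centroids: k random rows
    for _ in range(iters):
        labels = np.argmin(((Z[:, None, :] - C[None, :, :]) ** 2).sum(axis=2), axis=1)
        new_C = np.array([Z[labels == j].mean(axis=0) if np.any(labels == j) else C[j]
                          for j in range(k)])
        if np.allclose(new_C, C):
            break
        C = new_C
    return labels


def gini(y):
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def grow_tree(X, y, depth=0, max_depth=3, min_leaf=2):
    """CART-style classification tree: axis-aligned splits minimizing weighted Gini impurity."""
    values, counts = np.unique(y, return_counts=True)
    leaf = {"label": int(values[np.argmax(counts)])}
    if depth == max_depth or len(values) == 1:
        return leaf
    best = None
    for f in range(X.shape[1]):
        for thr in np.unique(X[:, f])[:-1]:
            left = X[:, f] <= thr
            if left.sum() < min_leaf or (~left).sum() < min_leaf:
                continue
            score = left.mean() * gini(y[left]) + (~left).mean() * gini(y[~left])
            if best is None or score < best[0]:
                best = (score, f, float(thr), left)
    if best is None:
        return leaf
    _, f, thr, left = best
    return {"feature": f, "threshold": thr,
            "left": grow_tree(X[left], y[left], depth + 1, max_depth, min_leaf),
            "right": grow_tree(X[~left], y[~left], depth + 1, max_depth, min_leaf)}


def tree_predict(node, x):
    while "label" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["label"]


def fit_metamodel(X, Y_pct, k=3):
    """Cluster melts on composition + fractions, learn composition -> cluster, fit one submodel per cluster."""
    Z = np.hstack([X, Y_pct])
    Zs = (Z - Z.mean(axis=0)) / (Z.std(axis=0) + 1e-9)  # common scale for all columns
    labels = kmeans(Zs, k)
    tree = grow_tree(X, labels)                          # white-box metamodel uses composition only
    submodels = {}
    for j in np.unique(labels):
        mask = labels == j
        A = np.hstack([X[mask], np.ones((mask.sum(), 1))])
        # Stand-in for the per-cluster ANN: multi-output linear least squares.
        submodels[int(j)] = np.linalg.lstsq(A, Y_pct[mask], rcond=None)[0]
    return tree, submodels


def predict_metamodel(tree, submodels, x):
    """Return (cluster chosen by the tree, predicted fractions in %) for one composition x."""
    j = tree_predict(tree, x)
    return j, np.append(x, 1.0) @ submodels[j]


# Synthetic illustration only, NOT the authors' data (columns of X: Mo, Cu in wt.%).
rng = np.random.default_rng(1)
X = rng.uniform([0.0, 0.0], [1.5, 2.0], size=(60, 2))
aus = np.minimum(60.0 * X[:, 0], 90.0)
pearl = (100.0 - aus) * X[:, 1] / 2.0
Y = np.column_stack([100.0 - aus - pearl, pearl, np.zeros(60), np.zeros(60), aus])

tree, submodels = fit_metamodel(X, Y)
cluster, fractions = predict_metamodel(tree, submodels, np.array([1.2, 1.0]))
print(cluster, fractions.round(1))
```

The hand-written k-means and tree are there only to keep the example self-contained; in practice library implementations such as scikit-learn's `KMeans` and `DecisionTreeClassifier` would normally be used. The tree's split thresholds on element contents are what make the metamodel readable for a materials expert, while the submodels stay free to be as opaque as needed.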