Abstract

Prior work has introduced a form of explainable artificial intelligence that is able to precisely explain, in a human-understandable form, why it makes decisions. It is also able to learn to make better decisions without potentially learning illegal or invalid considerations. This defensible system is based on fractional value rule-fact expert systems and the use of gradient descent training to optimize rule weightings. This software system has demonstrated efficacy for many applications; however, it utilizes iterative processing and thus does not have a deterministic completion time. It also requires comparatively expensive general-purpose computing hardware to run on. This paper builds on prior work in the development of hardware-based expert systems and presents and assesses the efficacy of a hardware implementation of this system. It characterizes its performance and discusses its utility and trade-offs for several application domains.

1. Introduction

Artificial intelligence has been used for applications including robotics [1], advertising [2], personal digital assistants [3], college admissions [4], operations management [5], gaming [6], determining borrowers’ creditworthiness [7,8], performing surgery [9,10], and scientific experimentation [11]. It has been identified as a source of future cyber threats [12] and a key mechanism to detect [13] and respond to them. Its use is pervasive in modern society.

One factor that inhibits the utility of artificial intelligence systems is humans’ trust in them. To this end, a key issue with some systems is that humans cannot readily understand techniques’ operations and how particular decisions are made. This is particularly vexing for individuals impacted by these decisions [14]. So-called “explainable” techniques [15,16] have been developed in response to these concerns.

This paper seeks to solve the problem of developing a time-definite, defensible, and low-power/low-cost artificial intelligence technique that is suitable for applications such as cyber-physical system command. Many existing state-of-the-art solutions, which are implemented as software running on general purpose computer systems, are limited by the power and hardware cost of their host hardware and the time variability introduced by algorithms’ iterative processing. Existing hardware implementations (see, e.g., [17]) are responsive to power [18] and cost limitations and some reduce time variability. However, no defensible hardware implementation has been previously produced.

Defensible artificial intelligence, introduced in [19], goes beyond simply requiring the technique’s operations to be understood. It requires the technique to be demonstrably making the correct decision while still having the capability to learn from training and operations. Learning, however, is what creates the potential for artificial intelligence techniques to become “algorithms of oppression” [20] and associate outcomes with confounded characteristics, potentially illegally. Neural networks, in particular, are problematic as they have no specific meaning [21]; rather, they simply learn whatever associations may be present. Their decisions for a given set of inputs could also, problematically, change rapidly with additional training.

The defensible artificial intelligence system, which was introduced in [19], demonstrated in [22], and refined in [23,24], utilizes expert systems as the basis of its design. Users begin by developing a logically valid network. Then, rule weights (which are floating point numbers between 0 and 1) are optimized using a gradient descent approach, similar to what is commonly used for neural networks.

Expert systems are an early form of artificial intelligence. They began with the Dendral and Mycin [25] systems in the 1960s and 1970s. Because of their design, they are inherently understandable. They utilize a rule-fact network with every fact, generally, having a specific meaning and every rule being logically valid. While they were initially designed to emulate the decisions an expert would make, they have grown and been used in other areas. Examples include control systems [26], facial expression analysis [27], and power system [28] analysis.

Versions of expert systems that use fuzzy logic have been proposed. They use fuzzy set concepts [29] and represent rules’ and facts’ value uncertainty. A taxonomy for these systems was defined by Mitra and Pal [30], who described a “knowledge-based connectionist expert system” that begins with “crude rules”, stores these as connection weights within a neural network, and uses training to produce refined rules. The floating point rule weighting values follow this conceptual model.

Both expert systems and neural networks have typically been implemented using an iterative algorithm. Expert systems use iteration for rule activation and neural networks utilize iteration for training. This is problematic for two reasons. First, the network’s output may differ based on the order in which rules are selected for execution. Second, the iteration of the expert system’s rule processing engine means that the decision-making time can only be predicted, not guaranteed, limiting the utility of expert systems for controlling robotics and other real-time applications.

The limitation of processing uncertainty can be removed by implementing the system in hardware, which allows rule execution to be performed in parallel. In [31,32], the use of a hardware-based (instead of a software-based) rule-fact network was proposed; however, these papers did not implement or test such a system. A basic hardware Boolean expert system was implemented previously [33] and its performance was characterized, showing that it had a relatively consistent operating speed and high accuracy. These factors, and its lower (as compared to a general-purpose computer) cost, made hardware implementation potentially beneficial for a number of different types of applications.

In this paper, the concept of hardware-based expert systems is further developed through the creation of a gradient descent trained version, which operates conceptually similarly to a neural network. While hardware AI systems have been developed previously, no defensible AI hardware system has been previously created. This paper describes the design of a hardware-implemented gradient descent trainable expert system and discusses its implementation. The efficacy and performance of hardware-based expert systems’ gradient descent-trainable networks are also explored and discussed.

In Section 2, this paper continues with a review of prior work, which provides a foundation for this paper. Section 3 presents the design of the system that was utilized for the experimentation presented herein. Section 4, then, presents the experimental design used for this experimentation. Next, Section 5 presents and analyzes the data collected using this system. Following this, in Section 6, several applications for the proposed system are discussed. Finally, the paper concludes with a discussion of key conclusions and future work in Section 7.

2. Background

This section reviews prior work in several areas, which provides a foundation for the work presented herein. First, prior work on machine learning techniques is reviewed. Next, work on expert systems is presented. Following this, the gradient descent expert system technique and its prior work are discussed. Finally, an overview of prior work on hardware-implemented artificial intelligence is provided.

2.1. Machine Learning

Machine learning is a popular area of artificial intelligence. It can be used to learn about and model complex phenomena from examples or intuit patterns within a dataset. With supervised learning [34], a system is provided a set of inputs and the desired answer. Reinforcement learning [35] is similar: a ‘prize’ is provided to the system to encourage it to learn a desired pattern. Finally, with unsupervised learning [36], the system is provided data and simply identifies patterns present in it (grouping like data, for example).

A number of techniques for training networks exist. One of the most well known is gradient descent [37]. With gradient descent, a partial correction is applied for multiple rounds for the error between the system’s current and target output. It is utilized as part of a supervised learning process and seeks to find the optimal (minimal) error level. The gradient descent technique is frequently used with neural networks.
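The idea of applying a partial correction over multiple rounds can be illustrated with a minimal sketch. It is not the GDES training algorithm; the function names, the learning rate, and the single-weight squared-error example are all assumptions chosen for illustration.

```python
def gradient_descent(gradient, start, learning_rate=0.1, rounds=100):
    """Repeatedly apply a partial correction opposite the error gradient."""
    value = start
    for _ in range(rounds):
        value -= learning_rate * gradient(value)
    return value


def make_error_gradient(input_value, target):
    """Gradient of the squared error (w * input - target)^2 with respect to w.

    Hypothetical single-weight model used only for this example.
    """
    return lambda w: 2.0 * (w * input_value - target) * input_value


# With an input of 2.0 and a target output of 1.0, the error is minimal at w = 0.5.
trained_weight = gradient_descent(make_error_gradient(2.0, 1.0), start=0.0)
```

Each round moves the weight only part of the way toward the value that would remove the error, which is why multiple rounds are needed.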

Backpropagation [38] is a specific type of gradient descent that alters the weights throughout a neural network to correct the difference between the system’s current and target output values. It utilizes an iterative process. A gradient descent technique based on the backpropagation concept was introduced in [19] for use with GDES networks.

Gradient descent and backpropagation are not the only machine learning techniques that can be used. Other techniques have been proposed, which use spiking neural network concepts [39,40], memory use optimization [41], and speculative approaches [42]. Techniques for training neural networks without the use of backpropagation [43,44] have also been proposed.

2.2. Expert Systems

Expert systems began in the 1960s and 1970s with the Dendral [25,45] and Mycin systems [25]. Classical expert systems use rule-fact networks to perform inference [46]. Facts store binary values and rules identify output facts that can be asserted as true when two input facts are true. They utilize a processing engine, which scans through the knowledge base to identify rules that can be executed (based on having their input requirements satisfied). Rules that are identified are run, which results in setting their output facts to true.
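The processing engine described above can be sketched as follows. This is a minimal illustration assuming two-input rules and Boolean facts, as described in the text; the data layout and the fact and rule names are hypothetical.

```python
def run_expert_system(facts, rules):
    """Scan the rules repeatedly, asserting outputs whose two inputs are true.

    facts: dict mapping fact name -> bool
    rules: list of (input_a, input_b, output) tuples
    """
    facts = dict(facts)  # work on a copy so the caller's facts are unchanged
    changed = True
    while changed:  # iterate until a full scan asserts nothing new
        changed = False
        for input_a, input_b, output in rules:
            ready = facts.get(input_a, False) and facts.get(input_b, False)
            if ready and not facts.get(output, False):
                facts[output] = True
                changed = True
    return facts


initial_facts = {"A": True, "B": True, "C": True, "F": False}
rules = [("D", "C", "E"), ("A", "B", "D"), ("E", "F", "G")]
result = run_expert_system(initial_facts, rules)
```

The number of scans needed depends on the rule ordering and the network, which illustrates why the completion time of an iterative engine is not fixed in advance.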

In the intervening half-century since their introduction, numerous enhancements of expert systems have been introduced. Optimization techniques [47] and hybrid expert systems [48], which are neural networks, are examples of such enhancements. Fuzzy logic systems, which use fuzzy set concepts [29] and represent uncertainty, have also been proposed.

Mitra and Pal [30] defined a fuzzy logic expert system taxonomy, of which the most advanced level was “knowledge-based connectionist” systems. These start with “crude rules”, stored as neural network connection weights, and the network is trained for their refinement.

Expert systems have been used for application areas such as agriculture [49], autonomous vehicle control [50], education [51], geographic information systems [52], identification of facial features [27], medicine [53,54] (including diagnosing heart disease [55] and hypertension [56]), therapy [57], and software architecture [58].

2.3. Gradient Descent Expert Systems

A machine learning algorithm that trains an expert system was introduced in [19]. This technique, because of its rule-fact expert systems base, is inherently understandable. Moreover, because it is logically connected and cannot create new associations (it optimizes the weightings of existing ones), it is guaranteed to not learn invalid associations. This goes beyond Arrieta et al.’s [59] classifications of systems that are either inherently understandable or retrofit for understandability and was termed “defensible” artificial intelligence [19].

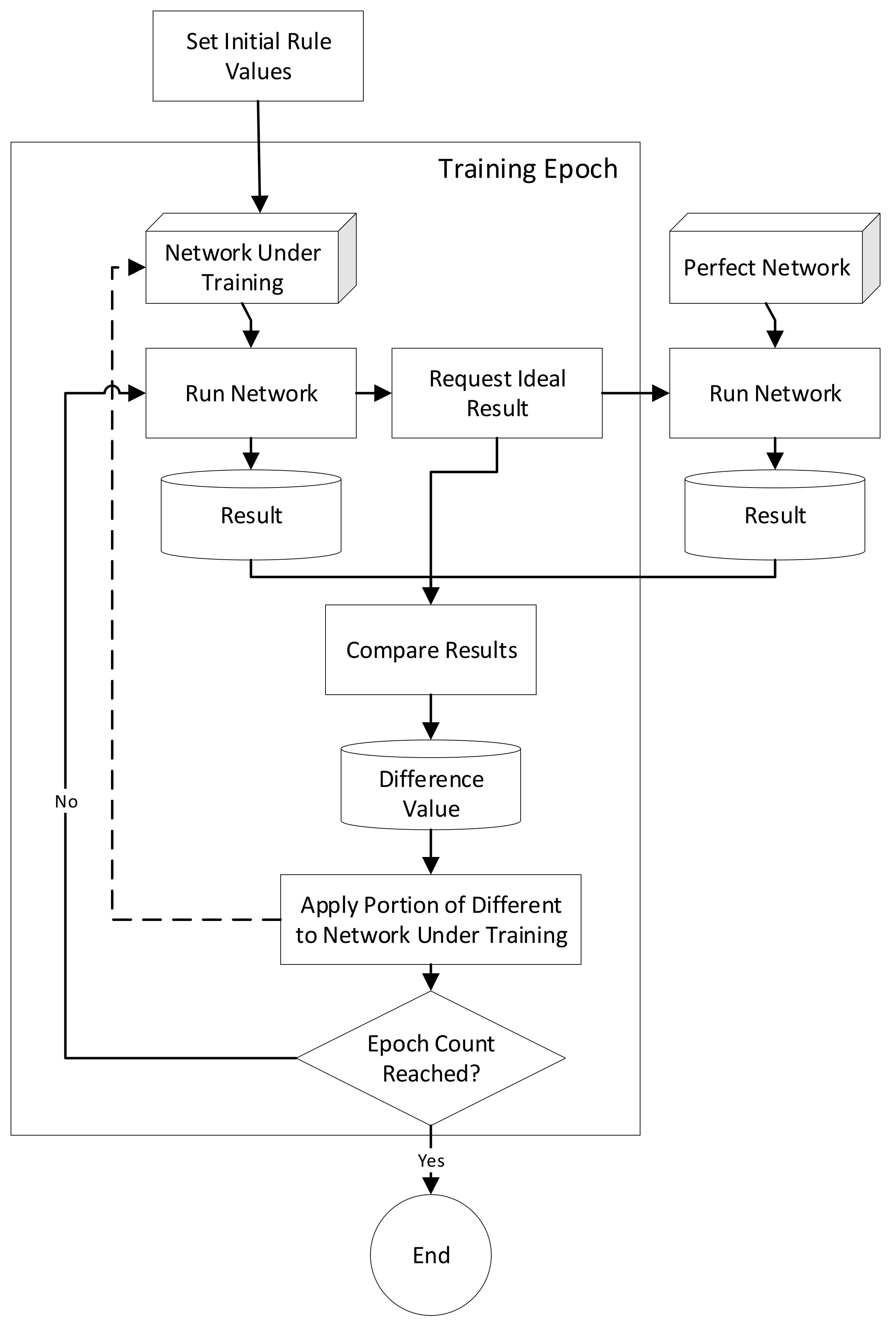

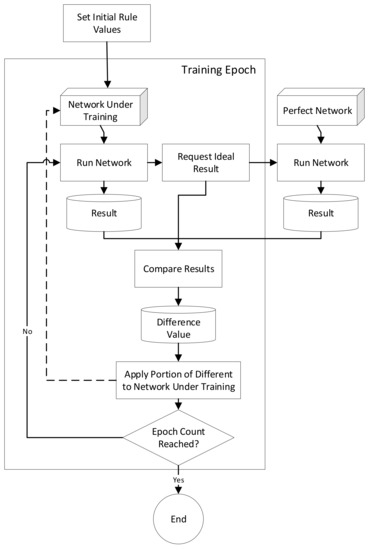

The system runs a gradient descent-style learning algorithm on an expert system rule-fact network to potentially change the weighting of its rule inputs. This is performed by iteratively allocating and correcting for a proportional fraction of the error, between the target and actual output of the system, to each node, which is identified as contributing to the target value. The algorithm is shown in Figure 1.

Figure 1.

Training process for gradient descent expert systems [19].

The gradient descent trained expert system (GDES) was introduced in [19] and techniques to enhance it by reducing error [24], using alternate training techniques [23] and automating network development [60], have been proposed. Its use has also been demonstrated for a limited number of applications (e.g., [22]).

2.4. Hardware AI

A significant amount of work has been performed in implementing artificial intelligence hardware. Batra et al. [17] proffer that AI hardware is creating “the best opportunities for semiconductor companies in decades”, referring both to developing AI-specific hardware and hardware that supports AI applications. Dally et al. [61] attribute the “current revolution in deep learning” as being “fueled by the availability of hardware fast enough to train deep networks in a reasonable amount of time”.

Many AI-related hardware implementations are accelerators. General hardware, such as field programmable gate arrays (FPGAs), graphics processing units (GPUs), and application-specific integrated circuits (ASICs), has been utilized to enhance the performance of artificial intelligence systems [62] to meet speed and other needs. Specialized hardware includes ferroelectric memristors (which are suggested to be able to implement brain-inspired techniques) [63], hardware spiking neurons [64], and neuromorphic photonics [65], which can enable specific system types.

Using these and other hardware devices, a variety of hardware-based artificial intelligence systems have been developed. Examples include hardware-based Hopfield neural networks [66], expert systems [33], spiking neural networks [67], q-learning algorithm implementation [68], self-organizing maps [69], quantum neuromorphic systems [70], and hybrid oxide brain-inspired neuromorphic systems [71].

Hardware systems have been shown to provide benefits such as energy efficiency [72,73] and aid in delivering enhanced performance for various application areas, such as sustainable chemistry [74] and biomedical/healthcare [75]. As Berggren et al. [76] note, hardware capabilities set “the fundamental limit of the capability of machine learning” and thus the advancement of hardware capabilities, both generalized and bespoke, is critical to the continued growth of machine learning capabilities and adoption.

Initiatives in this area have focused on delivering intelligence via edge computing [77], validating the capabilities, functionality, and reliability of hardware AI implementations [78] and incorporating knowledge of the hardware into system design decisions in hardware-aware [79], co-optimization [80], and co-design [81] processes. Research is also ongoing into hardware AI security [82], explainability [83], and dependability [83].

3. System Design and Implementation

The hardware-based GDES (HGDES) implementation utilizes a computer-based training mechanism (CBTM) and a hardware processing mechanism (HPM). The CBTM utilizes the GDES software that has been previously developed [84]. This software is utilized to determine weightings for the rules that are applied to the HPM. The HPM can serve as an advisor, as some traditional software expert systems did, to another piece of command hardware, a software system, or even an in-the-loop human. The system, however, is designed so that its output can be used directly for decision making, by supplying its decisions (output) to other command and control hardware that communicates with sensors and actuators. It is, notably, in this last configuration that the time-determinism benefits of the system are most likely to be realized.

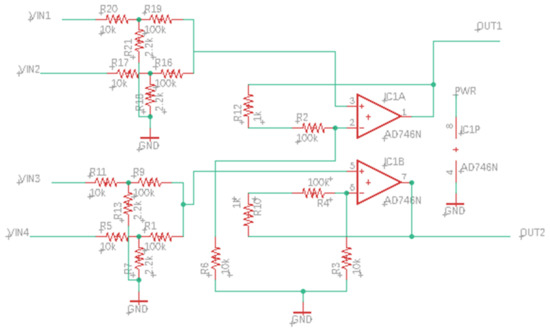

3.1. Hardware Processing Mechanism

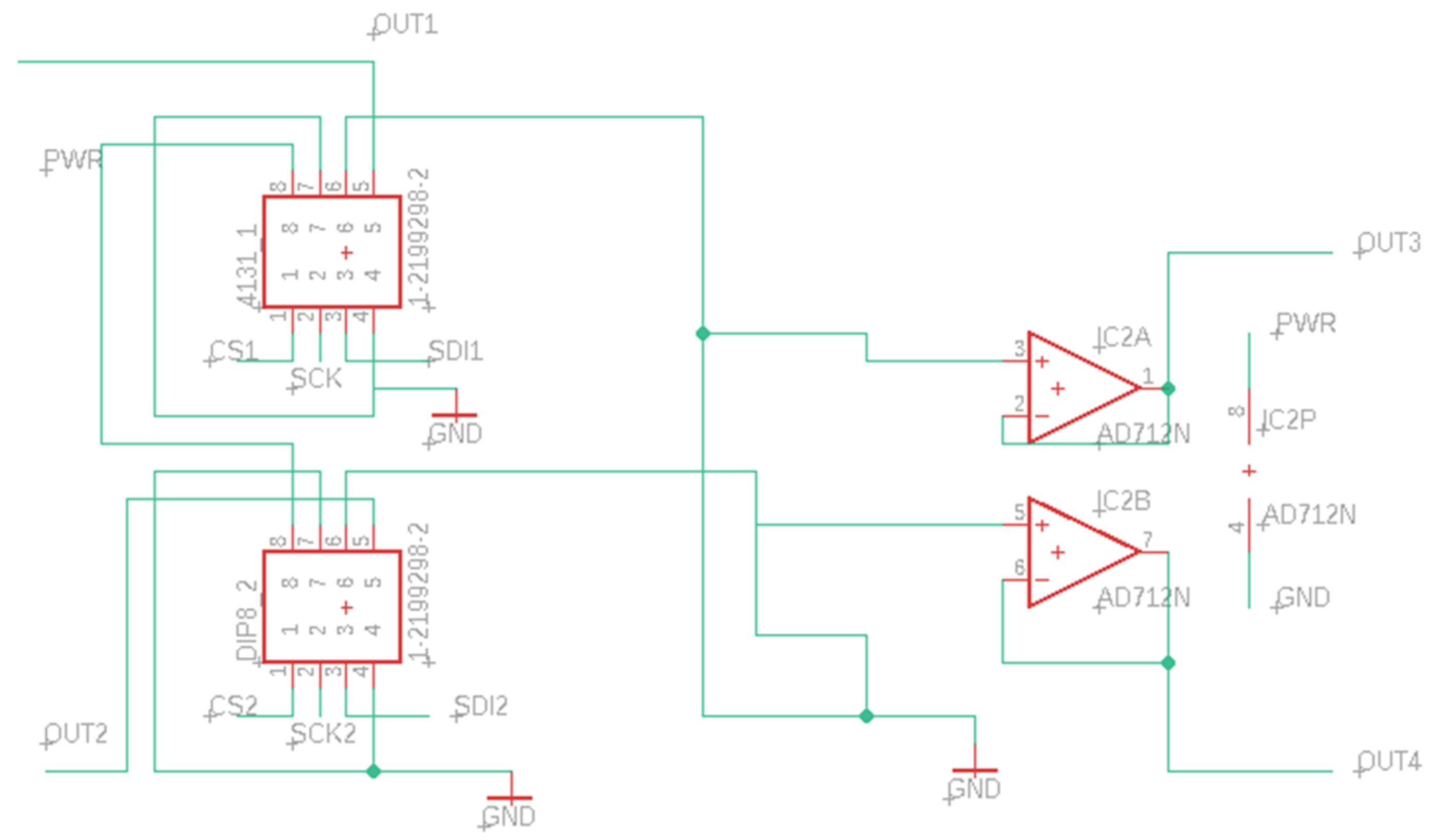

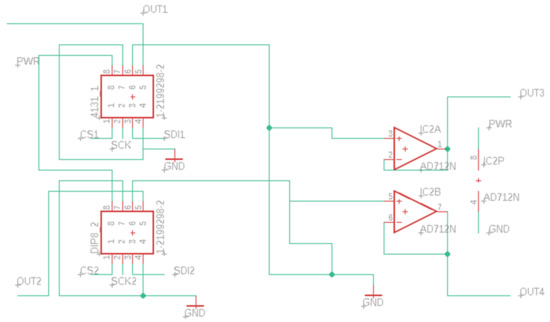

The HPM, shown in Figure 2, is composed of adder circuits and potentiometers. Each HGDES node takes in four direct current (DC) input voltages, in two pairs. For each component of the pair of input voltages, a potentiometer can be used to apply a weighting value to the source (note that, for experimental purposes, the power supplies were used to apply this weighting to the initial inputs). The HPM then adds the voltages from each component in the pair and passes the sum of each pair through another potentiometer, before adding these two results as well (as part of a second layer GDES node on each board). The output of this adder circuit is the output of the particular HGDES board (which can, potentially, serve as the input to another HGDES board). For experimental purposes, potentiometers were inserted between the boards, providing the scaling for the inputs to the next board.
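The signal flow through one board can be modeled with a short sketch. This is an idealized model, assuming error-free adders and weightings in the range 0 to 1; it ignores supply-rail limits, and the function and parameter names are hypothetical.

```python
def weighted_sum(voltage_a, weight_a, voltage_b, weight_b):
    """Ideal adder output for two inputs scaled by potentiometer ratios."""
    for weight in (weight_a, weight_b):
        if not 0.0 <= weight <= 1.0:
            raise ValueError("weights model potentiometer ratios in [0, 1]")
    return weight_a * voltage_a + weight_b * voltage_b


def hgdes_board_output(inputs, input_weights, pair_weights):
    """Ideal output of one board: two first-layer nodes feeding a second-layer node."""
    v1, v2, v3, v4 = inputs
    w1, w2, w3, w4 = input_weights
    top_weight, bottom_weight = pair_weights
    top_sum = weighted_sum(v1, w1, v2, w2)      # first pair (V1, V2)
    bottom_sum = weighted_sum(v3, w3, v4, w4)   # second pair (V3, V4)
    return weighted_sum(top_sum, top_weight, bottom_sum, bottom_weight)


# Hypothetical values: top pair sums to 1.5 V, bottom pair to 0.5 V, output 1.0 V.
example_output = hgdes_board_output(
    inputs=(1.0, 2.0, 0.5, 1.5),
    input_weights=(0.5, 0.5, 1.0, 0.0),
    pair_weights=(0.5, 0.5),
)
```

A model of this kind gives the ideal value against which a measured board output can be compared.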

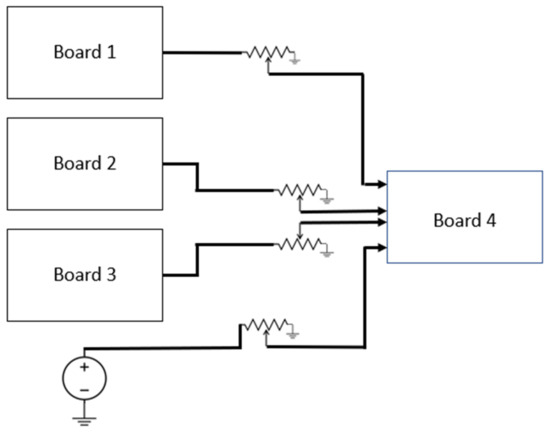

Figure 2.

HPM overview design schematic.

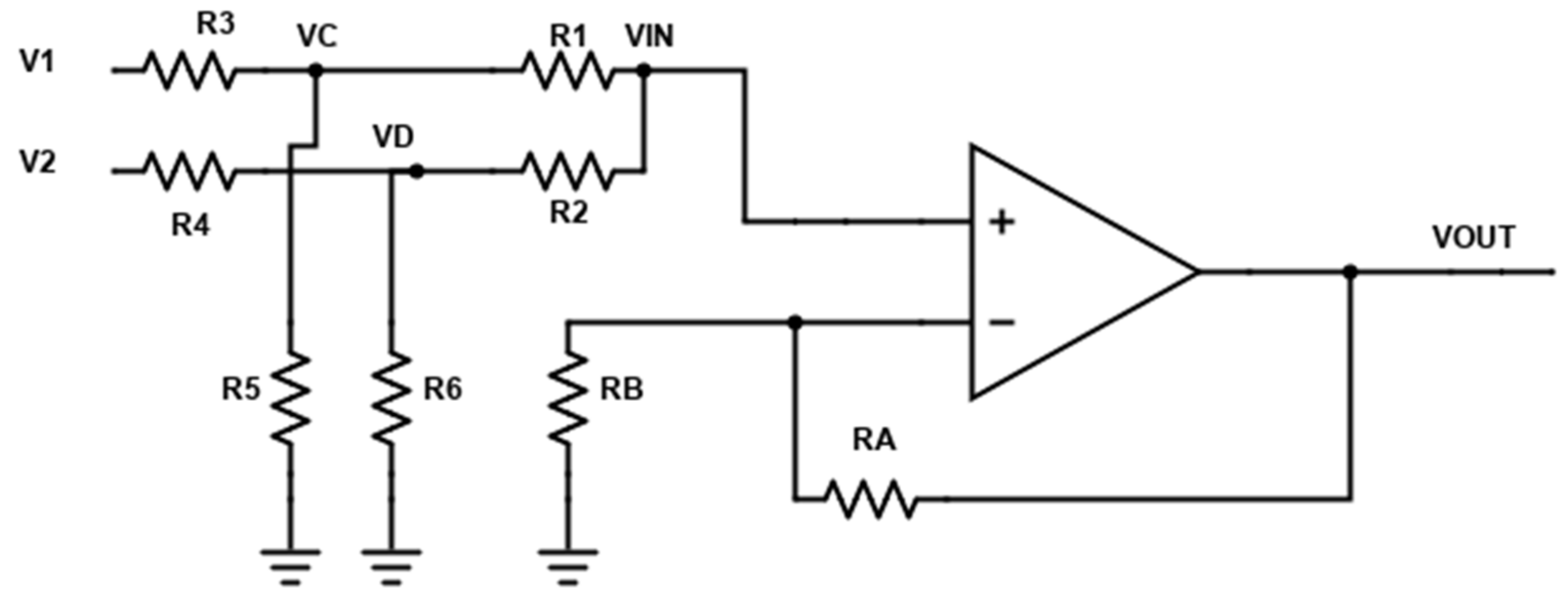

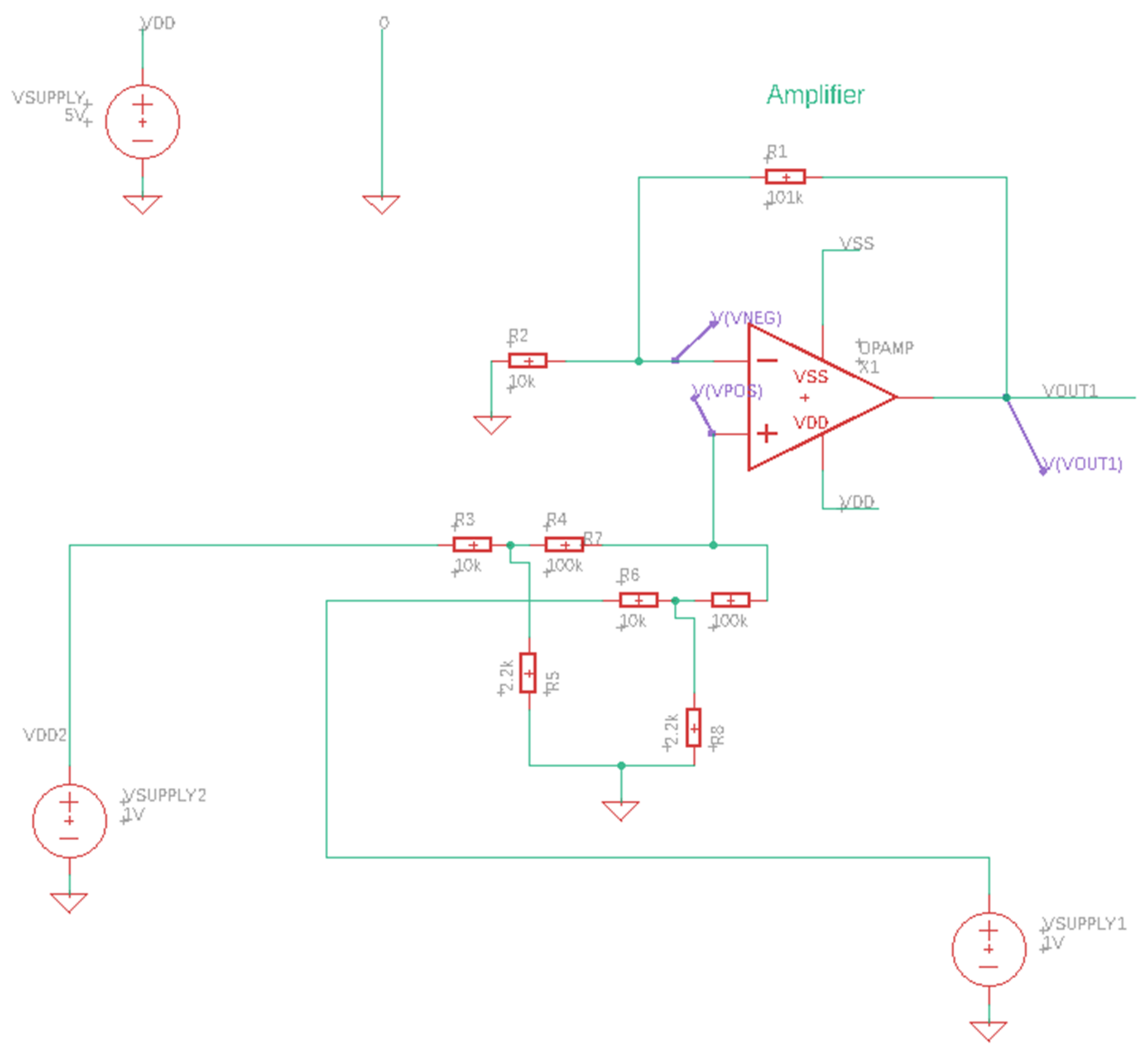

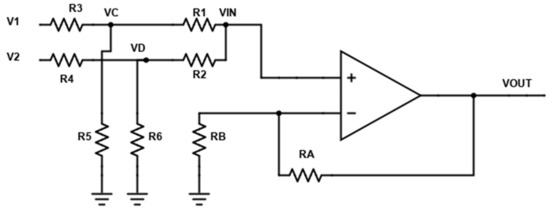

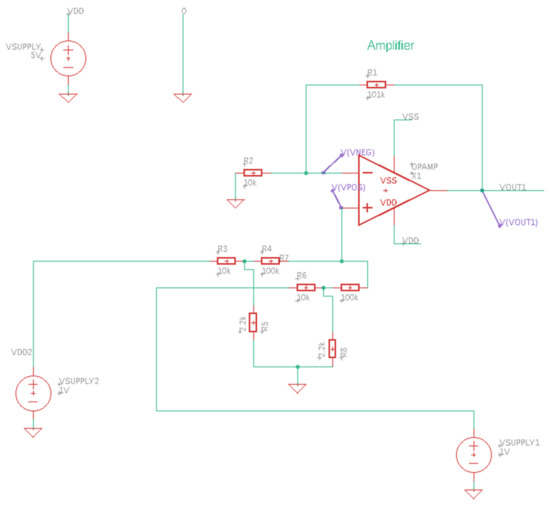

As the potentiometers control the voltage, allowing a voltage ranging from 0 to the full input value to be sent onwards, the combination of the adder and potentiometer provides the fundamental capability of a GDES node. The basic design of the adder circuit that was used is shown in Figure 3. The adder circuits consist of operational amplifiers set up in the non-inverting summing amplifier mode. For this design, 8-pin MCP 602 dual operational amplifier ICs were utilized.

Figure 3.

Adder circuit design.

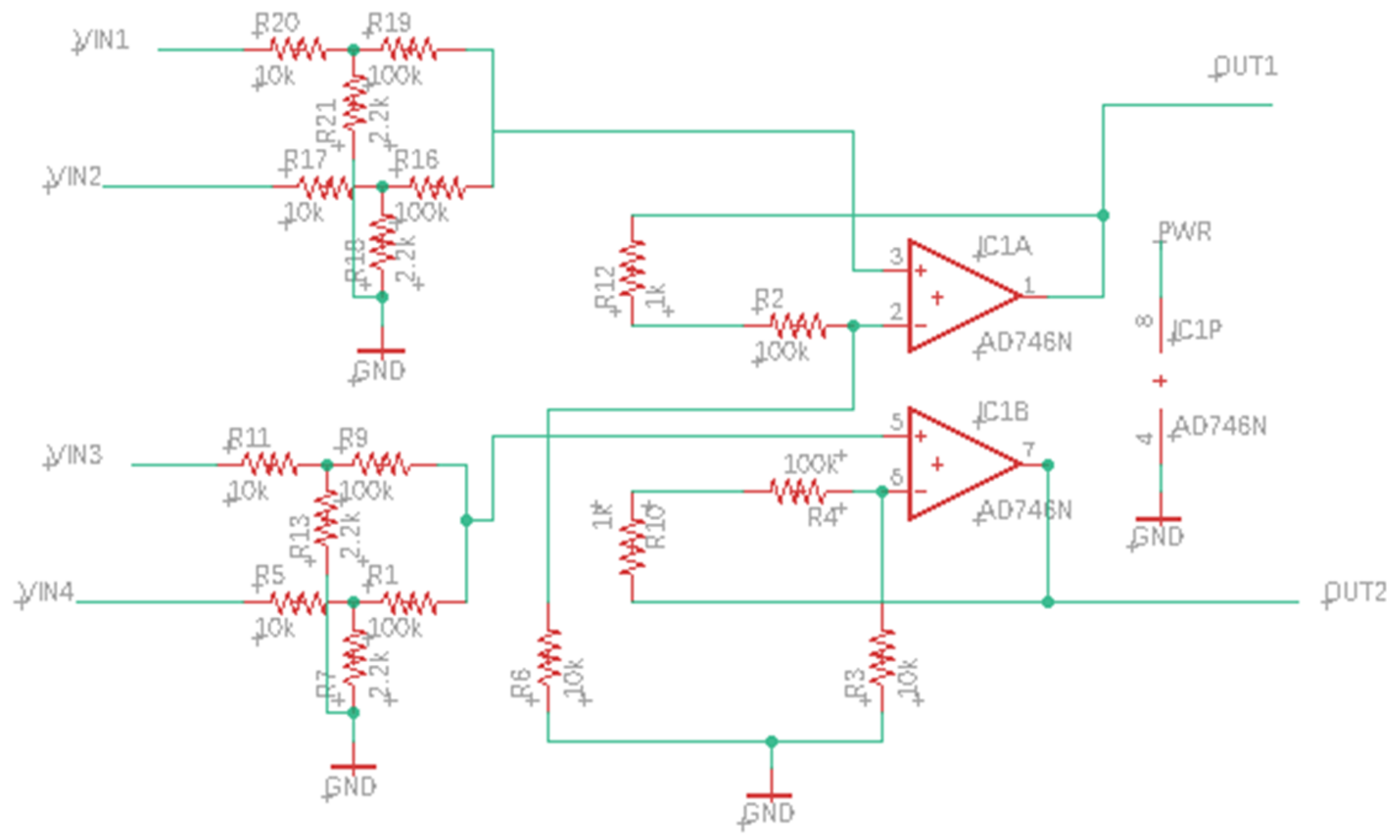

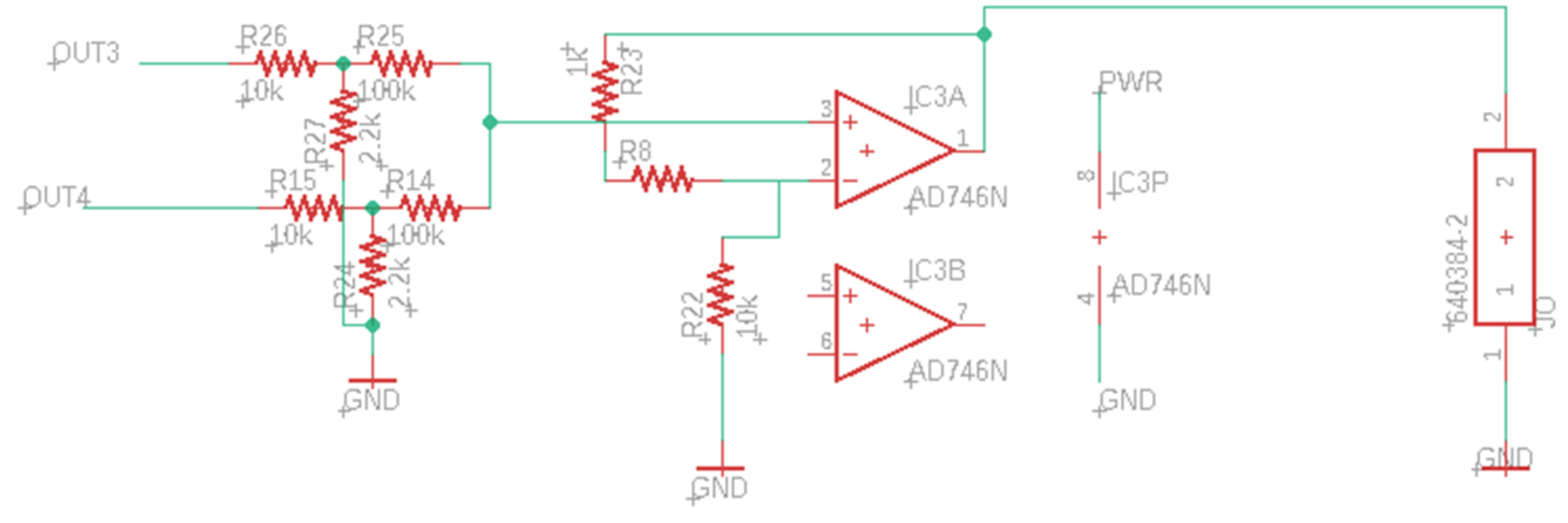

This circuit takes in four DC input voltages in two input pairs (V1, V2 and V3, V4) and adds the individual voltages in each pair, as shown in Figure 4.

Figure 4.

HPM detail design schematic (left region).

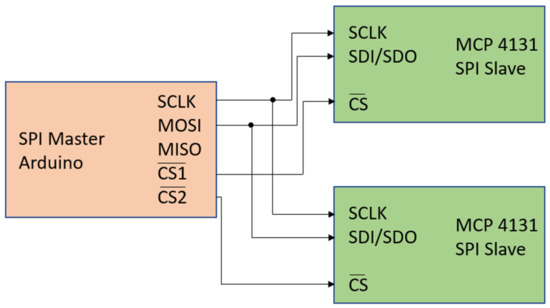

The outputs from the operational amplifiers are then passed through two separate potentiometers, as shown in Figure 5. These potentiometers are used to vary the sum voltage between 0 and the full supplied value to apply a weighting to the inputs to the next node. The potentiometers that were utilized were MCP 4131 ICs. They were controlled using the serial peripheral interface (SPI) communications protocol.
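Because a digital potentiometer offers only discrete settings, a trained weighting must be rounded to the nearest available ratio. The sketch below illustrates this under stated assumptions: a linear device with settings from 0 to 128 (the `WIPER_MAX` constant is an assumption, not a value given in this paper) and ideal behavior. Measured ratios, such as those characterized later in Table 2, may differ from this ideal.

```python
WIPER_MAX = 128  # assumption: settings run from 0 to 128; check the device datasheet


def weight_to_setting(weight, wiper_max=WIPER_MAX):
    """Nearest potentiometer setting for a weighting between 0 and 1."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    return round(weight * wiper_max)


def setting_to_weight(setting, wiper_max=WIPER_MAX):
    """Ideal (linear) ratio applied to the input voltage at a given setting."""
    if not 0 <= setting <= wiper_max:
        raise ValueError("setting out of range")
    return setting / wiper_max


# Quantization error introduced when a weighting of 0.3 is rounded to a setting.
quantization_error = abs(setting_to_weight(weight_to_setting(0.3)) - 0.3)
```

Under these assumptions, the rounding error for any weighting is at most half of one step.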

Figure 5.

HPM detail design schematic (center region).

Finally, the resulting voltages from the potentiometers are sent to another adder circuit, as shown in Figure 6. This is the last adder circuit in the schematic. The result of this adder is the output of the board, which can be supplied to another GDES node, if needed, for further processing. Note that this diagram includes a second OP AMP, which is shown without connections as the ICs have two packaged into a single chip and only one of the two is used in this instance.

Figure 6.

HPM detail design schematic (right region).

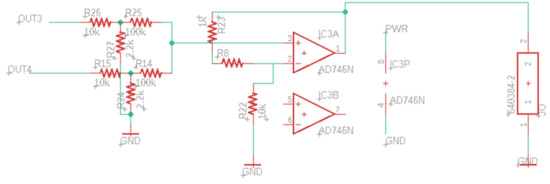

3.2. Hardware Processing Mechanism Design

To provide the requisite functionality, a low-error adder circuit was needed. An operational amplifier (OP AMP)-based adder circuit was designed for this purpose. The resistance values used were derived from the basic non-inverting op amp gain equation, shown in (1), where Vout is the output voltage, Vin is the voltage at the non-inverting input of the amplifier (also labeled the summing junction), and Av is the voltage gain:

$$V_{out} = A_v V_{in} \qquad (1)$$

The relationship in (2) is derived by applying Kirchhoff’s current law [85] at the summing junction while keeping R1 and R2 equal:

Combining and simplifying (1) and (2) provides the relationship shown in (3):

The voltage divider rule [85] provides that and . By plugging in the values and simplifying further, (4) is derived:

It is necessary to select a value of Av such that, when Av is multiplied by 0.09, the product is approximately 1. This value is calculated to be 11.1. Next, values of RA and RB are selected such that the target value of Av is satisfied, as shown in (5):

$$A_v = 1 + \frac{R_A}{R_B} = 11.1 \qquad (5)$$

Given this, the value of RA/RB should ideally be equal to 10.1. In accordance with this ratio, values of 101 kΩ and 10 kΩ are chosen for RA and RB, respectively:

$$A_v = 1 + \frac{101\ \mathrm{k\Omega}}{10\ \mathrm{k\Omega}} = 11.1 \qquad (6)$$

Simplifying this further provides the desired relationship, shown in (7), for the adder circuit:

$$V_{out} = V_1 + V_2 \qquad (7)$$
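The resistor selection can be checked numerically. The sketch below uses the standard non-inverting amplifier gain, 1 + RA/RB, with the 101 kΩ and 10 kΩ values and the 0.09 factor given in the text. Treating the product of the gain and 0.09 as the overall scale applied to the summed inputs is an assumption made for this check.

```python
def non_inverting_gain(r_a, r_b):
    """Voltage gain of a non-inverting op amp stage: 1 + RA / RB."""
    if r_b <= 0:
        raise ValueError("RB must be positive")
    return 1.0 + r_a / r_b


R_A = 101e3  # ohms, value from the text
R_B = 10e3   # ohms, value from the text
ATTENUATION = 0.09  # factor from the text that the gain must offset

gain = non_inverting_gain(R_A, R_B)   # approximately 11.1
overall_scale = ATTENUATION * gain    # approximately 0.999, i.e. close to unity
```

The overall scale falls about 0.1% short of unity, which is one source of the small systematic error that an ideal sum would not show.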

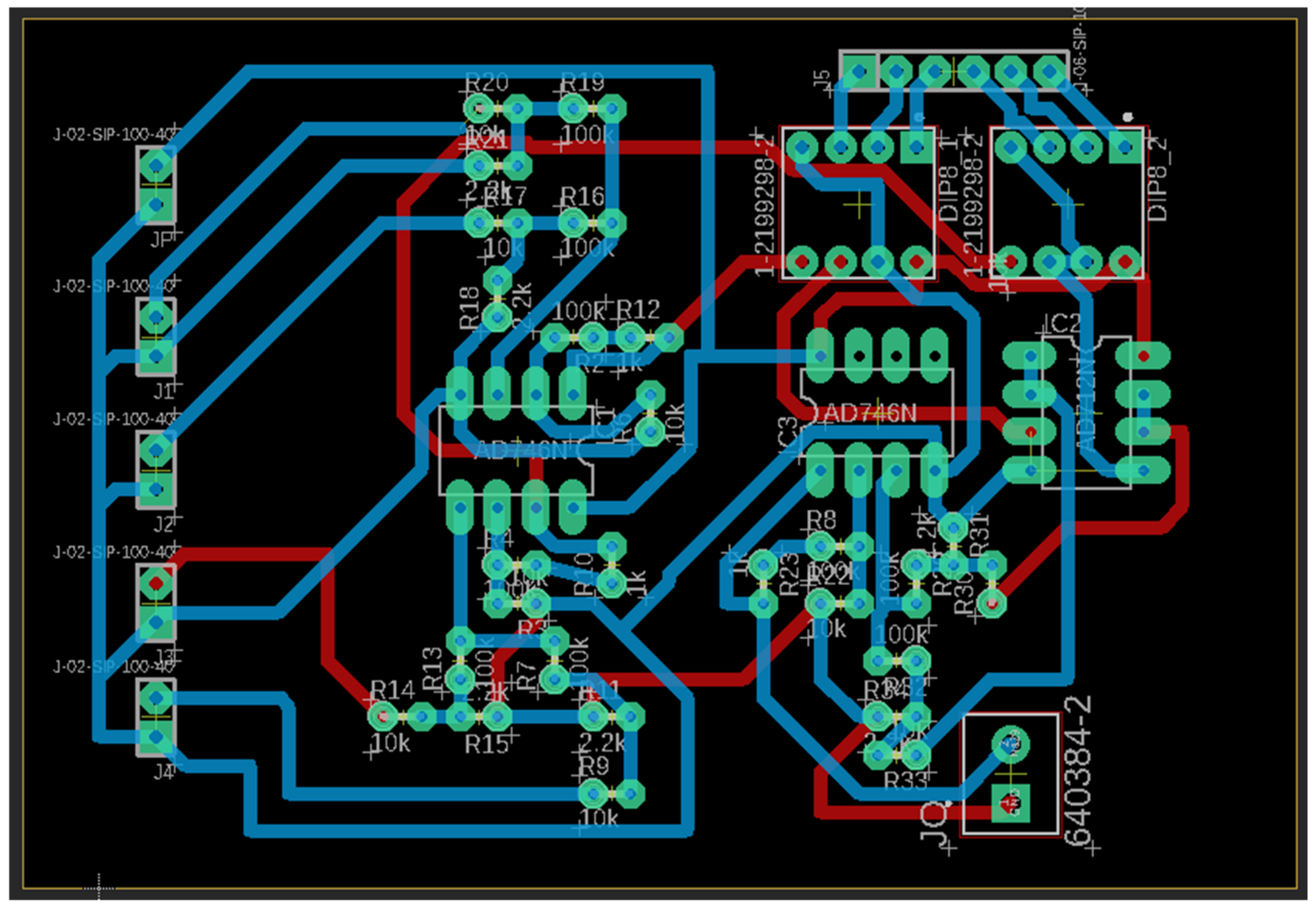

3.3. Hardware Processing Mechanism Implementation

The HGDES HPM modules were fabricated as 3.4-inch by 2.2-inch two-layer printed circuit boards (PCBs). This board design is shown in Figure 7. The inputs and outputs are located on the left and right sides of the board, respectively. A constant trace width of 35 mils was used to facilitate the use of a wide variety of fabrication options and to reduce voltage loss. In Figure 7, the blue traces represent the bottom copper layer, and the red traces represent the top copper layer.

Figure 7.

PCB design for a single HGDES HPM node.

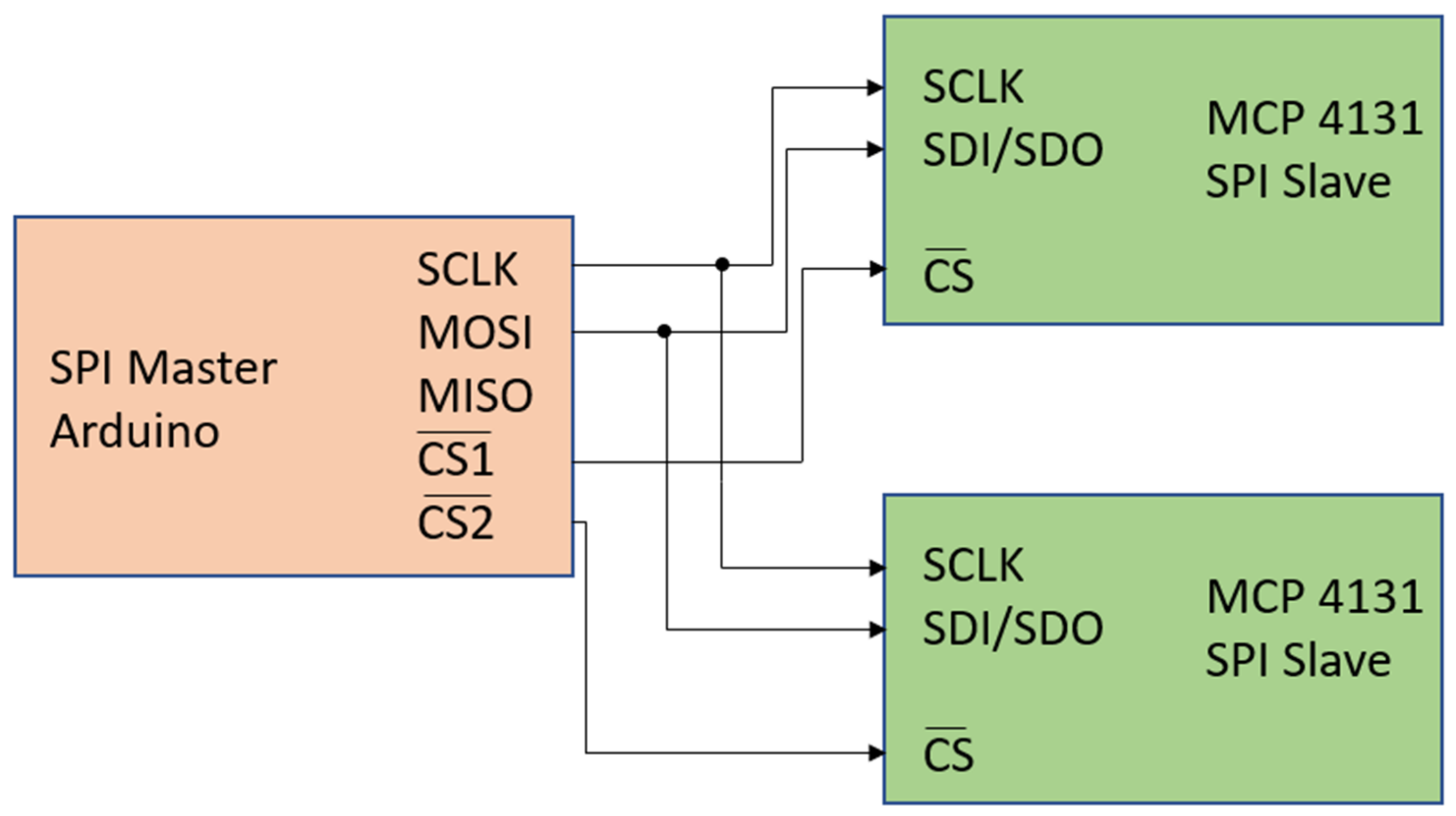

The HGDES HPM board is powered by a standard 5 V power supply and the MCP 4131 potentiometer ICs are connected to an external Arduino UNO using header pins located at the top right of the HPM board. The Arduino communicates with the potentiometers, via 3 pins, using the serial peripheral interface (SPI) communications protocol. Figure 8 depicts the Arduino MCP 4131 connections. Multiple potentiometers are connected to the same Arduino microcontroller simultaneously using a chip select pin and two buses.

Figure 8.

Serial peripheral interface (draws inspiration from [86]).

4. Experimental Design

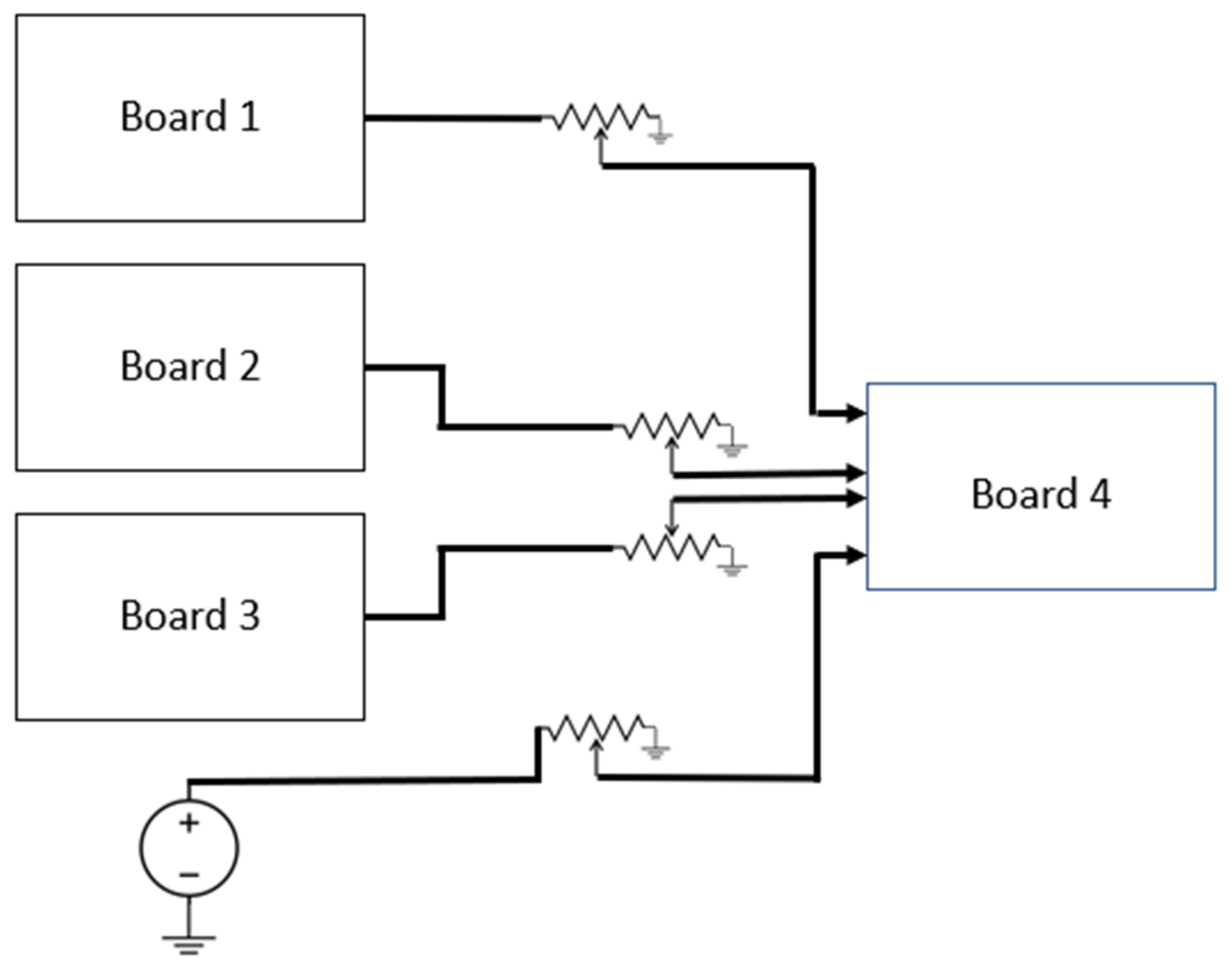

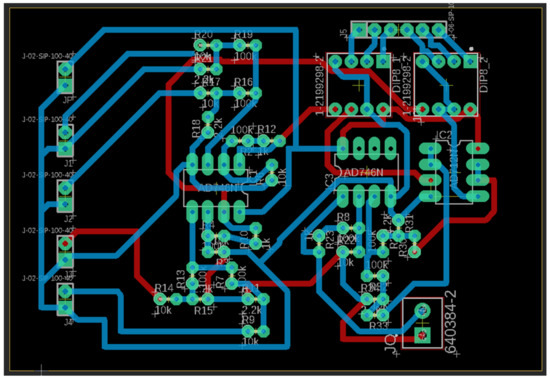

The experimentation that provides the data analyzed herein was conducted in several parts. First, individual components and boards were analyzed using standard techniques, which are briefly described before the data collected from this analysis are presented in Section 5. Following this, an experiment was conducted using basic GDES networks. These were created using two configurations of four HGDES boards (each of which has three GDES nodes). Each of these boards has a set of four inputs labeled V1 (top) to V4 (bottom). In configuration 1, which is presented in Figure 9, the outputs of the first three boards are used as input to the fourth board. Potentiometers are used to provide the weightings between the boards (controlling the input voltages of each of these four inputs to the first layer of adder circuits on the fourth board). The output of board 4 is the final system output. In this configuration, boards 1, 2, and 3 each have four individual inputs, which can be supplied by any voltage supply that follows the previously described circuit constraints.

Figure 9.

First testing circuit configuration.

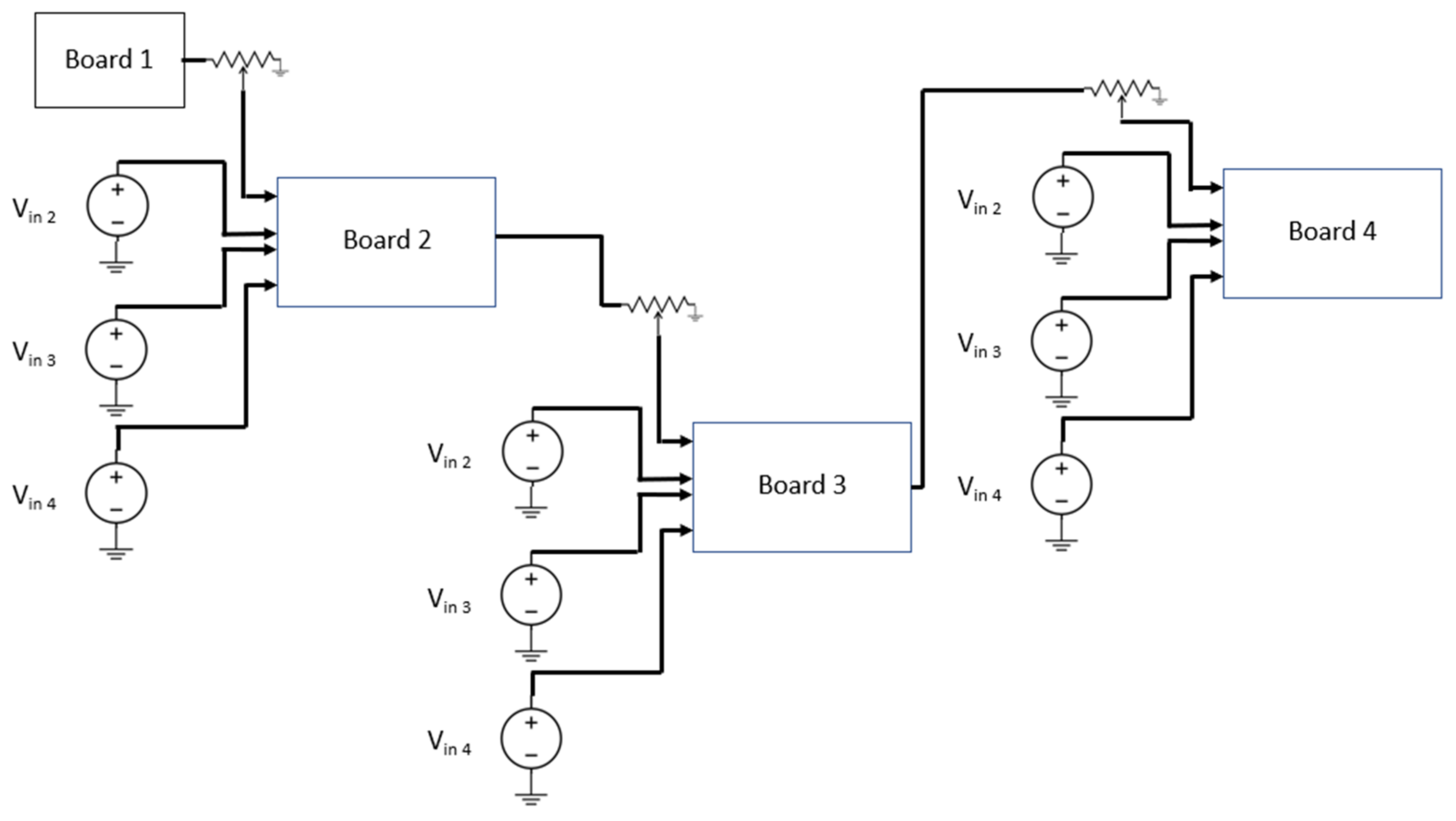

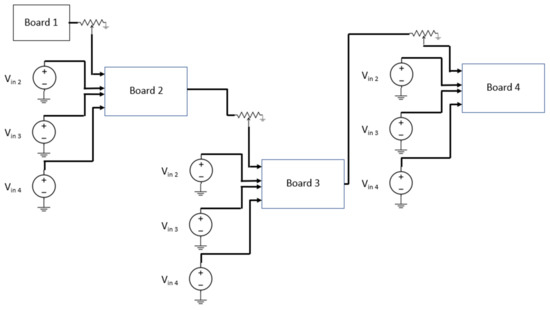

In the second configuration, shown in Figure 10, board 1 is connected to four independent inputs (not shown in the figure), as was the case in configuration 1. Boards 2, 3, and 4, receive their first input from the output of the previous board and the other inputs are connected to independent voltage sources.

Figure 10.

Second testing circuit configuration.

As previously noted, the power supplies’ voltage regulation was used to provide the inputs’ weighting for the initial boards, under the first configuration, for experimental purposes. For the second configuration, the power supplies’ voltage regulation provided the weighting for all inputs for board 1 and the second through fourth inputs for boards 2, 3, and 4.

5. Data and Analysis

This section presents the data that was collected and analysis of it. First, basic analysis of the performance of potentiometer chips used for this implementation and the basic three-node board is provided. Next, analysis of the combined (multi-node) circuits is presented. Finally, a basic hardware gradient descent expert system, including its training and performance, is analyzed.

5.1. Basic Circuit Analysis

The first testing that was conducted was to validate the accuracy of the circuit design. This was conducted using the built-in SPICE simulation capability in the Eagle PCB Design software. The circuit was implemented using the OP AMP-based adders and potentiometer-based voltage divider circuits, as previously discussed.

The first simulation, shown in Figure 11, tested the efficacy of a single OP AMP-based adder circuit using SPICE compatible components from the default ngspice library.

Figure 11.

Basic OP AMP-based adder circuit.

This simulation tested what level of error could occur, given different inputs to the adder circuit, which would introduce error into the result of the HGDES system. The results of this testing are recorded in Table 1.

Table 1.

Single OP amp circuit simulation.

In most cases, the level of error was minimal. The largest error was seen with the Vin1 = 1, Vin2 = 1 case, where a greater level of error was present. The results verify that the design of the adder circuit schematic is correct, acceptable for most uses, and operates as described in Section 3.2.

The potentiometer IC-based voltage divider circuit cannot be simulated using this simulation software, as it is operated using an Arduino microcontroller, which is not supported by the software. A hardware test of the MCP 4131 digital potentiometer IC was, thus, performed to characterize the values of resistance that it provides across the first two terminals of the potentiometer, given different input settings. These results are shown in Table 2. Note that the third column “Ratio” is the ratio of the resistance between terminals 1–2 and terminals 3–4 of the potentiometers and is also the number by which the input voltage of the potentiometers is multiplied.

Table 2.

Chip setting resistance values.

Next, the individual boards were tested to demonstrate the efficacy of the HGDES nodes and to verify their functionality. Table 3, Table 4, Table 5 and Table 6 present the results of the testing for boards 1 to 4, respectively.

Table 3.

First board’s HGDES nodes’ testing results.

Table 4.

Second board’s HGDES nodes’ testing results.

Table 5.

Third board’s HGDES nodes’ testing results.

Table 6.

Fourth board’s HGDES nodes’ testing results.

The error levels present in the boards are quite limited (ranging from 0.002 to 0.185, with an apparent anomaly of almost one volt of error in one instance). These are within acceptable levels of error for many, if not most, applications.

5.2. Combined Circuit Analysis

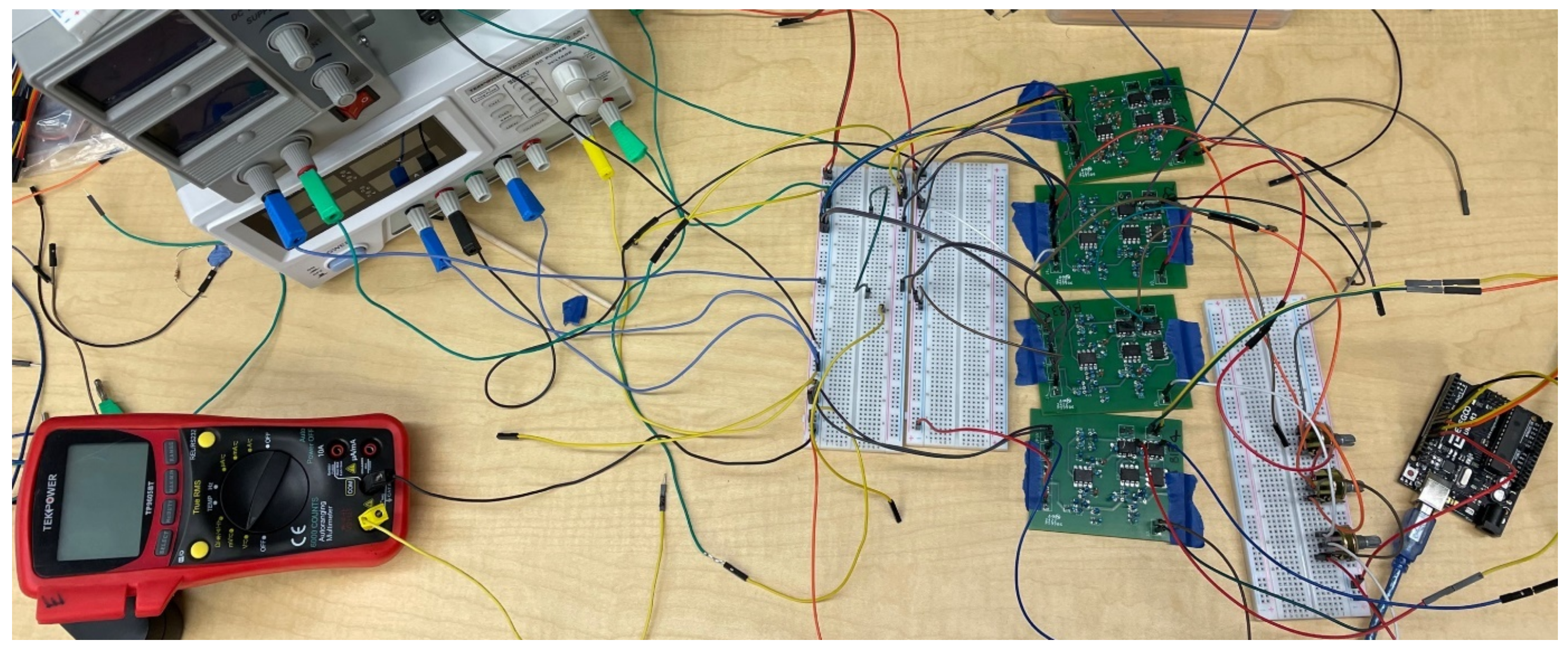

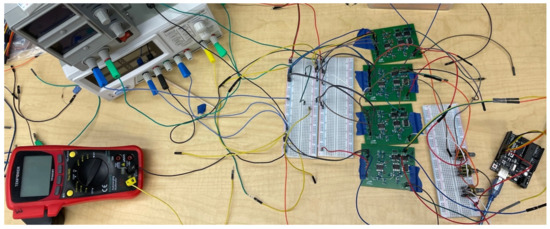

Next, testing was conducted using multiple boards in the configurations described in Section 4. The performance of these multi-board configurations was assessed. This testing was conducted using the experimental setup (with varying wiring between the two configurations) shown in Figure 12.

Figure 12.

Experimental setup for multi-board tests.

To test the multi-board configurations, pre-weighted inputs were supplied using voltage-regulated power supplies. The values of these supply voltages for the 11 tests are provided in Table 7. Note that a limited amount of error, not considered in this table, was introduced by the voltage sources. This is anticipated to be less than ±0.1 V. The potentiometer settings used for the 11 tests are presented in Table 8.

Table 7.

Input voltages for network configuration 1 tests.

Table 8.

Potentiometer settings for network configuration 1 tests.

Based on the input voltages and potentiometer settings presented in Table 7 and Table 8, the ideal output values were calculated. These are presented in Table 9. This table also includes the actual output values that were measured, using a digital multi-meter, from the hardware circuits. The percentage error was also calculated. These error values ranged from 0.02% to 2.63%. This level of error is tolerable for many, if not most, applications of this system.
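The percentage error calculation can be sketched as follows, assuming the conventional definition (absolute difference relative to the ideal value). The voltages in the example are hypothetical and are not taken from the tables.

```python
def percent_error(ideal, actual):
    """Absolute difference between measured and ideal values, as a percentage of ideal."""
    if ideal == 0:
        raise ValueError("percentage error is undefined for an ideal value of 0")
    return abs(actual - ideal) / abs(ideal) * 100.0


def mean(values):
    """Arithmetic mean, used to summarize error across a set of tests."""
    values = list(values)
    return sum(values) / len(values)


# Hypothetical (ideal, measured) output voltage pairs, in volts.
example_pairs = [(2.00, 1.95), (1.00, 1.01), (4.00, 4.00)]
example_errors = [percent_error(ideal, actual) for ideal, actual in example_pairs]
example_average = mean(example_errors)
```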

Table 9.

Ideal and actual output voltages for network configuration 1 tests.

Experimentation was also conducted using the second network configuration presented in Section 4. The input voltages used for the 10 tests for this configuration are presented in Table 10 and the potentiometer settings used for these tests are presented in Table 11. Note that a limited amount of error, not considered in Table 10, was introduced by the voltage sources. This is anticipated to be less than ±0.1 V.

Table 10.

Input voltages for network configuration 2 tests.

Table 11.

Potentiometer settings for network configuration 2 tests.

Based on the voltage inputs and potentiometer settings provided in Table 10 and Table 11, the ideal output values were again computed. These are presented in Table 12. The actual output values, collected using a digital multi-meter, are also presented in this table and the level of error was again calculated. The error level for this (longer) configuration ranged from 0.50% to 1.89%. Notably, the range of error narrows somewhat. These error levels are, similarly, within the range acceptable for many, if not most, applications. Additionally, this testing demonstrates that error does not increase dramatically (average error increases from 0.94% to 1.03%) with more layers of nodes, which is critical to implementing more complex HGDES networks.

Table 12.

Ideal and actual output voltages for network configuration 2 tests.

5.3. Training and Hardware Implementation

At present, the HGDES system relies upon the software implementation for its training operations, which generate the weighting values that are used as part of the HGDES networks. The digital implementation of the GDES system does not introduce error as part of the presentation function (which is the capability currently implemented via the HGDES system); however, its training mechanism is not perfect and introduces error. The error levels of the base system (without incorporating error reduction mechanisms) ranged from 5.2% to 8.3% [19], meaning that the training error will dominate the hardware implementation error. Even with error reduction, which showed potential to reduce the upper end of this range down to 5.5% [24], the training error would still be approximately 5 times greater than the hardware implementation presentation error.

Notably, the benefit of the hardware implementation, in terms of processing performance, is significant. It reduces the processing time from that of multiple iterations of network processing to the delay of the critical (longest time-wise) path through the ICs. The ICs operate, inherently, in parallel, while the software implementation must perform these same functions sequentially. Because of this, the level of benefit enjoyed will vary notably by network design. The assessment of this, with larger networks, is a planned area of future work.
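The dependence of the benefit on network design can be illustrated by counting the boards on the critical path of the two test configurations from Section 4. This is a sketch only: it counts boards rather than time, since per-board delay was not measured here, and the dictionary layout is a hypothetical representation of the wiring.

```python
from functools import lru_cache


def critical_path_length(feeds):
    """Number of boards on the longest input-to-output chain.

    feeds maps each board to the tuple of boards whose outputs it consumes.
    """
    @lru_cache(maxsize=None)
    def depth(board):
        upstream = feeds.get(board, ())
        return 1 + max((depth(source) for source in upstream), default=0)

    return max(depth(board) for board in feeds)


# Configuration 1: boards 1 to 3 operate in parallel and feed board 4.
configuration_1 = {1: (), 2: (), 3: (), 4: (1, 2, 3)}
# Configuration 2: the boards form a chain, each feeding the next.
configuration_2 = {1: (), 2: (1,), 3: (2,), 4: (3,)}

path_1 = critical_path_length(configuration_1)
path_2 = critical_path_length(configuration_2)
```

Both configurations use four boards, but the signal passes through two boards in sequence in the first and four in the second, so the second would be expected to have the longer hardware delay.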

In addition to the overall speed benefit, the hardware implementation will always process (for a given network) in the same amount of time. This is critical to time-sensitive applications, such as robotics, where decisions must be made within timeframes dictated by their real-world needs (e.g., the time between a robot sensing something up ahead and reaching its location). Thus, the hardware implementation will be suitable for some applications where a variable processing time software-based system would not be.

The hardware-based system also enjoys power consumption and cost-based benefits, as compared to operations on a general-purpose computer. For example, a laptop-based solution (such as what was used for testing) might cost hundreds of dollars and consume 20–50 watts of power [87]. The test boards cost less than $50 each (which would be further reduced significantly, on a per-GDES node basis, by the use of larger boards with more nodes on them and through mass production) and used only a small fraction of the power, drawing only about 3.475 microamps [88,89]. Additional power savings are also enjoyed because the HGDES system can be powered up, have its potentiometer values set, have needed tests run, and be powered down, without requiring the boot-up and shut-down phases (introducing both a time and power cost) of conventional computers.

6. Application Areas

The HGDES system provides benefits that make it well suited for several types of application areas. Its key benefits are the lower cost and power requirements of the system, as compared to a conventional computer, solution defensibility, and processing time determinism. Applications that benefit from any (or several) of these are candidates for HGDES use. Each area of benefit is now discussed.

6.1. Cost and Power

Cost and power benefits can be enjoyed by most applications. Applications that may collect and process data in situ or in impoverished areas may benefit from the lower cost of purpose-developed computing hardware and the reduced power levels that it requires. The boards tested herein are estimated to require, on average, 10 milliwatts, as compared to many computers that may require hundreds of watts. Applications such as water sediment monitoring and prediction [90] and rainfall prediction [91] may benefit from performing processing using low-cost hardware at or near the points of data collection. In many cases, limited power may be available in these locations, which may be supplied using solar cells or batteries, allowing the application area to benefit from the hardware’s low power costs as well. Flore, Maximilien, and Sègbè [92] demonstrated the utility of using artificial intelligence to make public health decisions in under-resourced areas. These types of applications are also enabled by the low cost of the proposed HGDES. Robotic command applications may, similarly, benefit as robots typically have power limitations and operating cycles that are defined by their power consumption versus generation and storage capabilities.
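
To illustrate what these power levels could mean for a battery-powered deployment, the following sketch estimates ideal runtime from a fixed energy budget. The 10 milliwatt board estimate and the 20–50 watt laptop range are the figures given in this paper. The 10 watt-hour battery capacity is an assumed value, and conversion losses and self-discharge are ignored.

```python
def runtime_hours(capacity_mwh, load_mw):
    """Ideal runtime in hours: stored energy divided by a constant load."""
    if load_mw <= 0:
        raise ValueError("load must be positive")
    return capacity_mwh / load_mw


BATTERY_MWH = 10_000           # assumed 10 Wh battery (hypothetical value)
HGDES_MW = 10                  # board estimate stated in the text
LAPTOP_MW = (20_000, 50_000)   # 20-50 W laptop range stated in the text

hgdes_hours = runtime_hours(BATTERY_MWH, HGDES_MW)
laptop_hours = [runtime_hours(BATTERY_MWH, load) for load in LAPTOP_MW]
print(f"HGDES board: {hgdes_hours:.0f} h, laptop: {laptop_hours} h")
```

With these assumptions, the board would run for roughly 1000 hours on the same battery that would power the laptop for between 0.2 and 0.5 hours. Actual figures would depend on the supply circuitry and duty cycle used.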

6.2. Solution Defensibility

Defensibility is critical for numerous applications. Any application where liability may be associated with system performance failures can prospectively benefit from the concept of defensibility. This includes systems that make human-impacting decisions, such as for credit assessment and candidate screening purposes. Systems that operate in medical environments, such as those that aid in or perform diagnosis and surgery [93], may present a particular risk that defensibility can mitigate. Robots that interact with humans or other objects and could potentially injure or damage people or equipment nearby may need to explain and defend their decision making should an incident occur. Surgical robots [94,95], thus, may benefit particularly from this capability, which would allow them to prevent known issues from occurring and explain decisions made during surgery, both for continuous improvement and malpractice investigation purposes. Autonomous vehicles [96,97], similarly, make decisions that affect people and property and could also benefit from defensibility to attempt to avoid making problematic decisions and to justify the decisions that the system makes.

6.3. Processing Time Determinism

Processing time determinism can be critical for numerous applications, including those that must provide system responsiveness to users. It can also be useful for quantifying processing time needs for most applications. However, systems that operate in a real-world environment require this determinism to allow them to make decisions fast enough to operate safely. A robot, for example, may need to make an avoidance decision or path planning change before colliding. An autonomous vehicle might face the difficult choice between colliding with a young or an elderly person, if there is no way to avoid them both. In each of these cases, the decision must be made fast enough, after detection, to allow a maneuver to be performed before the obstacle is reached. Most robots, thus, have this type of need, making processing time determinism useful for surgical robots [94,95] and autonomous vehicles [96,97], as previously discussed. Supporting technologies for these types of systems, such as systems that evaluate data trust (e.g., [98]), may also require time determinism to support the time determinism of their dependent decision-making systems.
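
The timing constraint described above can be sketched as a simple check: the processing time plus the time needed to perform the maneuver must not exceed the time remaining before the obstacle is reached. The function names and all numeric values below are hypothetical and serve only to illustrate why a known, fixed processing time simplifies this check.

```python
def time_to_obstacle(distance_m, speed_m_per_s):
    """Seconds until the obstacle is reached at a constant approach speed."""
    if speed_m_per_s <= 0:
        raise ValueError("approach speed must be positive")
    return distance_m / speed_m_per_s


def meets_deadline(distance_m, speed_m_per_s, worst_case_processing_s, maneuver_s):
    """True if the decision and the maneuver both fit in the available time."""
    budget_s = time_to_obstacle(distance_m, speed_m_per_s)
    return worst_case_processing_s + maneuver_s <= budget_s


# Hypothetical scenario: obstacle sensed 2 m ahead while moving at 1 m/s,
# with a maneuver that takes 1.5 s to perform.
fixed_latency_ok = meets_deadline(2.0, 1.0, worst_case_processing_s=0.001,
                                  maneuver_s=1.5)
# A variable-time system must be judged by its worst case (assumed 0.8 s here).
variable_latency_ok = meets_deadline(2.0, 1.0, worst_case_processing_s=0.8,
                                     maneuver_s=1.5)
print(fixed_latency_ok, variable_latency_ok)
```

In this assumed scenario, the fixed-latency system meets the deadline, while the variable-latency system does not when its worst case occurs. For a deterministic system, the worst-case processing time is simply its known processing time for the given network.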

Some systems (e.g., [99]) will have needs that span two or more of these categories. Several examples of this have been discussed previously. These systems are the most logical candidates to make use of and benefit from HGDES.

7. Conclusions and Future Work

This paper has presented initial work on a hardware-based implementation of a gradient-descent trained expert system. It has shown that the previously software-based GDES nodes can be readily implemented in hardware and that an analog signal (voltage) can be sent between them as data. It has shown that the required circuits can be developed with a low level of data loss (an average of approximately 1%), making them suitable for many, if not most, applications. Further, it has discussed the speed, power reduction, and cost reduction benefits of this implementation approach.

Notably, the proposed approach also provides a time-determinism benefit, as the hardware-implemented GDES system operates within an a priori known amount of time (which does vary by GDES network). This makes the system suitable for applications that require real-time or near real-time processing, such as robotics. In many cases, iterative processes of unknown duration (such as software-based GDES) cannot be used for real-time command, or they require compensation for potential processing delays by using hardware with capabilities that far exceed typical needs (but are projected to be sufficient for worst-case scenarios). Hardware-based GDES, thus, would be suitable for various real-world applications for which software-based GDES would either not be usable or would require additional hardware capabilities.

Based on this initial work, a number of areas of future work are planned. The implementation and testing of larger networks is one area of planned future work. With these larger networks, the potential for the training process to take the hardware implementation error into account when training will be assessed. A second area of potential future work is to develop a hardware-based training mechanism.

Overall, the work presented herein has demonstrated the potential efficacy of hardware implementation of the GDES system and its effectiveness and suitability for many applications. This initial work demonstrates the value of the system and serves as a potential justification for future work in the areas mentioned above, which will further advance the system to be ready for practical use.

Author Contributions

Conceptualization, J.S.; validation, F.A.; resources, J.S.; data curation, F.A.; writing—original draft preparation, F.A. and J.S.; writing—review and editing, F.A. and J.S.; supervision, J.S.; project administration, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All relevant data is included in the paper.

Acknowledgments

Thanks are given to Noah Ritter and Trevor Schroeder for their initial work on the development of HGDES circuits. Thanks are also given to Jeffrey Erickson for his assistance at several times throughout this project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jacobsen, S.C.; Olivier, M.; Smith, F.M.; Knutti, D.F.; Johnson, R.T.; Colvin, G.E.; Scroggin, W.B. Research Robots for Applications in Artificial Intelligence, Teleoperation and Entertainment. Int. J. Robot. Res. 2004, 23, 319–330.

- Kietzmann, J.; Paschen, J.; Treen, E. Artificial Intelligence in Advertising. J. Advert. Res. 2018, 58, 263–267.

- Maedche, A.; Legner, C.; Benlian, A.; Berger, B.; Gimpel, H.; Hess, T.; Hinz, O.; Morana, S.; Söllner, M. AI-Based Digital Assistants: Opportunities, Threats, and Research Perspectives. Bus. Inf. Syst. Eng. 2019, 61, 535–544.

- Marcinkowski, F.; Kieslich, K.; Starke, C.; Lünich, M. Implications of AI (Un-)Fairness in Higher Education Admissions: The Effects of Perceived AI (Un-)Fairness on Exit, Voice and Organizational Reputation. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020.

- Kobbacy, K.A.H.; Vadera, S. A survey of AI in operations management from 2005 to 2009. J. Manuf. Technol. Manag. 2011, 22, 706–733.

- He, S.; Wang, Y.; Xie, F.; Meng, J.; Chen, H.; Luo, S.; Liu, Z.; Zhu, Q. Game player strategy pattern recognition and how UCT algorithms apply pre-knowledge of player’s strategy to improve opponent AI. In Proceedings of the 2008 International Conference on Computational Intelligence for Modelling Control and Automation, CIMCA 2008, Vienna, Austria, 10–12 December 2008; pp. 1177–1181.

- Xia, Y.; Liu, C.; Da, B.; Xie, F. A novel heterogeneous ensemble credit scoring model based on bstacking approach. Expert Syst. Appl. 2018, 93, 182–199.

- Dastile, X.; Celik, T.; Potsane, M. Statistical and machine learning models in credit scoring: A systematic literature survey. Appl. Soft Comput. 2020, 91, 106263.

- Mirnezami, R.; Ahmed, A. Surgery 3.0, artificial intelligence and the next-generation surgeon. Br. J. Surg. 2018, 105, 463–465.

- Mirchi, N.; Bissonnette, V.; Yilmaz, R.; Ledwos, N.; Winkler-Schwartz, A.; Del Maestro, R.F. The Virtual Operative Assistant: An explainable artificial intelligence tool for simulation-based training in surgery and medicine. PLoS ONE 2020, 15, e0229596.

- Stevens, R.; Taylor, V.; Nichols, J.; Maccabe, A.B.; Yelick, K.; Brown, D. AI for Science: Report on the Department of Energy (DOE) Town Halls on Artificial Intelligence (AI) for Science; Argonne National Lab. (ANL): Argonne, IL, USA, 2020.

- Kaloudi, N.; Li, J. The AI-Based Cyber Threat Landscape. ACM Comput. Surv. 2020, 53, 1–34.

- Baig, Z.A.; Baqer, M.; Khan, A.I. A pattern recognition scheme for Distributed Denial of Service (DDoS) attacks in wireless sensor networks. In Proceedings of the International Conference on Pattern Recognition, Hong Kong, China, 20–24 August 2006; Volume 3, pp. 1050–1054.

- Li, J.; Huang, J.S. Dimensions of artificial intelligence anxiety based on the integrated fear acquisition theory. Technol. Soc. 2020, 63, 101410.

- Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI-Explainable artificial intelligence. Sci. Robot. 2019, 4, eaay7120.

- Xu, F.; Uszkoreit, H.; Du, Y.; Fan, W.; Zhao, D.; Zhu, J. Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Proceedings of the CCF International Conference on Natural Language Processing and Chinese Computing, Dunhuang, China, 9–14 October 2019; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11839, pp. 563–574.

- Batra, G.; Jacobson, Z.; Madhav, S.; Queirolo, A.; Santhanam, N. Artificial-Intelligence Hardware: New Opportunities for Semiconductor Companies; McKinsey and Company: Atlanta, GA, USA, 2018.

- Zewe, A. New Hardware Offers Faster Computation for Artificial Intelligence, with Much Less Energy. Available online: https://news.mit.edu/2022/analog-deep-learning-ai-computing-0728 (accessed on 4 September 2022).

- Straub, J. Expert system gradient descent style training: Development of a defensible artificial intelligence technique. Knowl.-Based Syst. 2021, 228, 107275.

- Noble, S.U. Algorithms of Oppression: How Search Engines Reinforce Racism; NYU Press: New York, NY, USA, 2018.

- Angelov, P.; Soares, E. Towards explainable deep neural networks (xDNN). Neural Netw. 2020, 130, 185–194.

- Liang, X.S.; Straub, J. Deceptive Online Content Detection Using Only Message Characteristics and a Machine Learning Trained Expert System. Sensors 2021, 21, 7083.

- Straub, J. Assessment of Gradient Descent Trained Rule-Fact Network Expert System Multi-Path Training Technique Performance. Computers 2021, 10, 103.

- Straub, J. Impact of techniques to reduce error in high error rule-based expert system gradient descent networks. J. Intell. Inf. Syst. 2021, 58, 481–512.

- Lindsay, R.K.; Buchanan, B.G.; Feigenbaum, E.A.; Lederberg, J. DENDRAL: A case study of the first expert system for scientific hypothesis formation. Artif. Intell. 1993, 61, 209–261.

- Macias-Bobadilla, G.; Becerra-Ruiz, J.D.; Estévez-Bén, A.A.; Rodríguez-Reséndiz, J. Fuzzy control-based system feed-back by OBD-II data acquisition for complementary injection of hydrogen into internal combustion engines. Int. J. Hydrog. Energy 2020, 45, 26604–26612.

- Pantic, M.; Rothkrantz, L.J.M. Expert system for automatic analysis of facial expressions. Image Vis. Comput. 2000, 18, 881–905.

- Styvaktakis, E.; Bollen, M.H.J.; Gu, I.Y.H. Expert system for classification and analysis of power system events. IEEE Trans. Power Deliv. 2002, 17, 423–428.

- Zadeh, L.A. Fuzzy sets. Inf. Control 1965, 8, 338–353.

- Mitra, S.; Pal, S.K. Neuro-fuzzy expert systems: Relevance, features and methodologies. IETE J. Res. 1996, 42, 335–347.

- Sebring, M.M.; Shellhouse, E.; Hanna, M.E. Expert Systems in Intrusion Detection: A Case Study. In Proceedings of the 11th National Computer Security Conference, Baltimore, MD, USA, 17–20 October 1988.

- Straub, J. Assessment of the comparative efficiency of software-based Boolean, electronic, software-based fractional value and simplified quantum principal expert systems. Expert Syst. 2021, 39, e12880.

- Ritter, N.; Straub, J. Implementation of Hardware-Based Expert Systems and Comparison of Their Performance to Software-Based Expert Systems. Machines 2021, 9, 361.

- Caruana, R.; Niculescu-Mizil, A. An empirical comparison of supervised learning algorithms. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; ACM Press: New York, NY, USA, 2006; Volume 148, pp. 161–168.

- Duan, Y.; Chen, X.; Houthooft, R.; Schulman, J.; Abbeel, P. Benchmarking Deep Reinforcement Learning for Continuous Control. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016.

- Paliouras, G.; Papatheodorou, C.; Karkaletsis, V.; Spyropoulos, C. Discovering user communities on the Internet using unsupervised machine learning techniques. Interact. Comput. 2002, 14, 761–791.

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

- Rojas, R. The Backpropagation Algorithm. In Neural Networks; Springer: Berlin/Heidelberg, Germany, 1996; pp. 149–182.

- Lee, C.; Sarwar, S.S.; Panda, P.; Srinivasan, G.; Roy, K. Enabling Spike-Based Backpropagation for Training Deep Neural Network Architectures. Front. Neurosci. 2020, 14, 119.

- Mirsadeghi, M.; Shalchian, M.; Kheradpisheh, S.R.; Masquelier, T. STiDi-BP: Spike time displacement based error backpropagation in multilayer spiking neural networks. Neurocomputing 2021, 427, 131–140.

- Beaumont, O.; Herrmann, J.; Pallez, G.; Shilova, A. Optimal memory-aware backpropagation of deep join networks. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2020, 378, 20190049.

- Park, S.; Suh, T. Speculative Backpropagation for CNN Parallel Training. IEEE Access 2020, 8, 215365–215374.

- Kim, S.; Ko, B.C. Building deep random ferns without backpropagation. IEEE Access 2020, 8, 8533–8542.

- Kurt Ma, W.D.; Lewis, J.P.; Kleijn, W.B. The HSIC bottleneck: Deep learning without back-propagation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 5085–5092.

- Zwass, V. Expert System. Available online: https://www.britannica.com/technology/expert-system (accessed on 24 February 2021).

- Waterman, D. A Guide to Expert Systems; Addison-Wesley Pub. Co.: Reading, MA, USA, 1986.

- Renders, J.M.; Themlin, J.M. Optimization of Fuzzy Expert Systems Using Genetic Algorithms and Neural Networks. IEEE Trans. Fuzzy Syst. 1995, 3, 300–312.

- Sahin, S.; Tolun, M.R.; Hassanpour, R. Hybrid expert systems: A survey of current approaches and applications. Expert Syst. Appl. 2012, 39, 4609–4617.

- McKinion, J.M.; Lemmon, H.E. Expert systems for agriculture. Comput. Electron. Agric. 1985, 1, 31–40.

- Chohra, A.; Farah, A.; Belloucif, M. Neuro-fuzzy expert system E_S_CO_V for the obstacle avoidance behavior of intelligent autonomous vehicles. Adv. Robot. 1997, 12, 629–649.

- Kuehn, M.; Estad, J.; Straub, J.; Stokke, T.; Kerlin, S. An expert system for the prediction of student performance in an initial computer science course. In Proceedings of the IEEE International Conference on Electro Information Technology, Lincoln, NE, USA, 14–17 May 2017.

- Kalogirou, S. Expert systems and GIS: An application of land suitability evaluation. Comput. Environ. Urban Syst. 2002, 26, 89–112.

- Arsene, O.; Dumitrache, I.; Mihu, I. Expert system for medicine diagnosis using software agents. Expert Syst. Appl. 2015, 42, 1825–1834.

- Abu-Nasser, B. Medical Expert Systems Survey. Int. J. Eng. Inf. Syst. 2017, 1, 218–224.

- Ephzibah, E.P.; Sundarapandian, V. A Neuro Fuzzy Expert System for Heart Disease Diagnosis. Comput. Sci. Eng. Int. J. 2012, 2, 17–23.

- Das, S.; Ghosh, P.K.; Kar, S. Hypertension diagnosis: A comparative study using fuzzy expert system and neuro fuzzy system. In Proceedings of the IEEE International Conference on Fuzzy Systems, Hyderabad, India, 7–10 July 2013.

- Sandham, W.A.; Hamilton, D.J.; Japp, A.; Patterson, K. Neural Network and Neuro-Fuzzy Systems for Improving Diabetes Therapy; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2002; pp. 1438–1441.

- Akinnuwesi, B.A.; Uzoka, F.M.E.; Osamiluyi, A.O. Neuro-Fuzzy Expert System for evaluating the performance of Distributed Software System Architecture. Expert Syst. Appl. 2013, 40, 3313–3327.

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Straub, J. Automating the Design and Development of Gradient Descent Trained Expert System Networks. arXiv 2022, arXiv:2207.02845.

- Dally, W.J.; Gray, C.T.; Poulton, J.; Khailany, B.; Wilson, J.; Dennison, L. Hardware-Enabled Artificial Intelligence. In Proceedings of the IEEE Symposium VLSI Circuits Digest of Technical Papers, Honolulu, HI, USA, 18–22 June 2018; pp. 3–6.

- Talib, M.A.; Majzoub, S.; Nasir, Q.; Jamal, D. A systematic literature review on hardware implementation of artificial intelligence algorithms. J. Supercomput. 2021, 77, 1897–1938.

- Shi, M.; Zhang, M.; Yao, S.; Qin, Q.; Wang, M.; Wang, Y.; He, N.; Zhu, J.; Liu, X.; Hu, E.; et al. Ferroelectric Memristors Based Hardware of Brain Functions for Future Artificial Intelligence. J. Phys. Conf. Ser. 2020, 1631, 012042.

- Abderrahmane, N.; Lemaire, E.; Miramond, B. Design Space Exploration of Hardware Spiking Neurons for Embedded Artificial Intelligence. Neural Netw. 2020, 121, 366–386.

- Shastri, B.J.; Tait, A.N.; Ferreira de Lima, T.; Pernice, W.H.P.; Bhaskaran, H.; Wright, C.D.; Prucnal, P.R. Photonics for artificial intelligence and neuromorphic computing. Nat. Photonics 2021, 15, 102–114.

- Yu, Z.; Abdulghani, A.M.; Zahid, A.; Heidari, H.; Imran, M.A.; Abbasi, Q.H. An overview of neuromorphic computing for artificial intelligence enabled hardware-based hopfield neural network. IEEE Access 2020, 8, 67085–67099.

- Wunderlich, T.; Kungl, A.F.; Müller, E.; Schemmel, J.; Petrovici, M. Brain-Inspired Hardware for Artificial Intelligence: Accelerated Learning in a Physical-Model Spiking Neural Network. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Proceedings of the International Conference on Artificial Neural Networks, Munich, Germany, 17–19 September 2019; Springer: Cham, Switzerland, 2019; Volume 11727, pp. 119–122.

- Spanò, S.; Cardarilli, G.C.; Di Nunzio, L.; Fazzolari, R.; Giardino, D.; Matta, M.; Nannarelli, A.; Re, M. An efficient hardware implementation of reinforcement learning: The q-learning algorithm. IEEE Access 2019, 7, 186340–186351.

- Jovanovic, S.; Hikawa, H. A Survey of Hardware Self-Organizing Maps. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 1–20.

- Prati, E. Quantum neuromorphic hardware for quantum artificial intelligence. J. Phys. Conf. Ser. 2017, 880, 12018.

- Wang, J.; Zhuge, X.; Zhuge, F. Hybrid oxide brain-inspired neuromorphic devices for hardware implementation of artificial intelligence. Sci. Technol. Adv. Mater. 2021, 22, 326–344.

- Wang, Y.; Huang, M.; Han, K.; Chen, H.; Zhang, W.; Xu, C.; Tao, D. AdderNet and its Minimalist Hardware Design for Energy-Efficient Artificial Intelligence. arXiv 2021, arXiv:2101.1001.

- Capra, M.; Bussolino, B.; Marchisio, A.; Shafique, M.; Masera, G.; Martina, M. An Updated Survey of Efficient Hardware Architectures for Accelerating Deep Convolutional Neural Networks. Future Internet 2020, 12, 113.

- Tai, X.Y.; Zhang, H.; Niu, Z.; Christie, S.D.R.; Xuan, J. The future of sustainable chemistry and process: Convergence of artificial intelligence, data and hardware. Energy AI 2020, 2, 100036.

- Azghadi, M.R.; Lammie, C.; Eshraghian, J.K.; Payvand, M.; Donati, E.; Linares-Barranco, B.; Indiveri, G. Hardware Implementation of Deep Network Accelerators towards Healthcare and Biomedical Applications. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 1138–1159.

- Berggren, K.; Xia, Q.; Likharev, K.K.; Strukov, D.B.; Jiang, H.; Mikolajick, T.; Querlioz, D.; Salinga, M.; Erickson, J.R.; Pi, S.; et al. Roadmap on emerging hardware and technology for machine learning. Nanotechnology 2020, 32, 012002.

- Deng, C.; Fang, X.; Wang, X.; Law, K. Software Orchestrated and Hardware Accelerated Artificial Intelligence: Toward Low Latency Edge Computing. IEEE Wirel. Commun. 2022, 29, 1–20.

- Kundu, S.; Basu, K.; Sadi, M.; Titirsha, T.; Song, S.; Das, A.; Guin, U. Special session: Reliability analysis for AI/ML hardware. In Proceedings of the IEEE 39th VLSI Test Symposium (VTS), San Diego, CA, USA, 25–28 April 2021.

- Gross, W.J.; Meyer, B.H.; Ardakani, A. Hardware-Aware Design for Edge Intelligence. IEEE Open J. Circuits Syst. 2020, 2, 113–127.

- You, W.; Wu, C. RSNN: A Software/Hardware Co-Optimized Framework for Sparse Convolutional Neural Networks on FPGAs. IEEE Access 2021, 9, 949–960.

- Zhang, X.; Jiang, W.; Shi, Y.; Hu, J. When Neural Architecture Search Meets Hardware Implementation: From Hardware Awareness to Co-Design. In Proceedings of the IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Miami, FL, USA, 15–17 July 2019; pp. 25–30.

- Xu, Q.; Arafin, M.T.; Qu, G. Security of Neural Networks from Hardware Perspective: A Survey and Beyond. In Proceedings of the 2021 26th Asia and South Pacific Design Automation Conference (ASP-DAC), Tokyo, Japan, 18–21 January 2021; IEEE: Tokyo, Japan, 2021.

- Shafik, R.; Wheeldon, A.; Yakovlev, A. Explainability and Dependability Analysis of Learning Automata based AI Hardware. In Proceedings of the 2020 IEEE 26th International Symposium on On-Line Testing and Robust System Design (IOLTS), Napoli, Italy, 13–15 July 2020.

- Straub, J. Gradient descent training expert system. Softw. Impacts 2021, 10, 100121.

- Nilsson, J.W.; Riedel, S. Electric Circuits; Pearson Education Inc.: London, UK, 2014.

- Cburnett File:SPI Single Slave.Svg—Wikimedia Commons. Available online: https://commons.wikimedia.org/wiki/File:SPI_single_slave.svg (accessed on 4 August 2022).

- Direct Energy Laptops vs. Desktops: Which Is More Energy-Efficient? Available online: https://business.directenergy.com/blog/2017/november/laptops-vs-desktops-energy-efficiency (accessed on 4 August 2022).

- Microchip Technology Inc. MCP413X/415X/423X/425X. Available online: http://ww1.microchip.com/downloads/en/devicedoc/22060b.pdf (accessed on 4 August 2022).

- Microchip Technology Inc. MCP601/1R/2/3/4 Spec Sheet. Available online: https://www.mouser.com/datasheet/2/268/21314g-2449592.pdf (accessed on 4 August 2022).

- Shadkani, S.; Abbaspour, A.; Samadianfard, S.; Hashemi, S.; Mosavi, A.; Band, S.S. Comparative study of multilayer perceptron-stochastic gradient descent and gradient boosted trees for predicting daily suspended sediment load: The case study of the Mississippi River, U.S. Int. J. Sediment Res. 2021, 36, 512–523.

- Chattopadhyay, S.; Chattopadhyay, G. Conjugate gradient descent learned ANN for Indian summer monsoon rainfall and efficiency assessment through Shannon-Fano coding. J. Atmos. Sol.-Terr. Phys. 2018, 179, 202–205.

- Dovonou Flore, M.; Nouvê wa Patrice Maximilien, B.; Christophe Sègbè, H. Identification of Unfavorable Climate and Sanitary Periods in Oueme Department in Benin (West Africa). Adv. Ecol. Environ. Res. 2018, 3, 257–274.

- Choi, H.I.; Jung, S.K.; Baek, S.H.; Lim, W.H.; Ahn, S.J.; Yang, I.H.; Kim, T.W. Artificial Intelligent Model with Neural Network Machine Learning for the Diagnosis of Orthognathic Surgery. J. Craniofac. Surg. 2019, 30, 1986–1989.

- Choi, B.; Jo, K.; Choi, S.; Choi, J. Surgical-tools detection based on Convolutional Neural Network in laparoscopic robot-assisted surgery. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Korea, 11–15 July 2017; pp. 1756–1759.

- Wang, Z.; Majewicz Fey, A. Deep learning with convolutional neural network for objective skill evaluation in robot-assisted surgery. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 1959–1970.

- Kehtarnavaz, N.; Griswold, N.; Miller, K.; Lescoe, P. A transportable neural-network approach to autonomous vehicle following. IEEE Trans. Veh. Technol. 1998, 47, 694–702.

- Rausch, V.; Hansen, A.; Solowjow, E.; Liu, C.; Kreuzer, E.; Hedrick, J.K. Learning a deep neural net policy for end-to-end control of autonomous vehicles. In Proceedings of the American Control Conference (ACC), Seattle, WA, USA, 24–26 May 2017; pp. 4914–4919.

- Kianersi, D.; Uppalapati, S.; Bansal, A.; Straub, J. Evaluation of a Reputation Management Technique for Autonomous Vehicles. Future Internet 2022, 14, 31.

- Bechtel, M.G.; McEllhiney, E.; Kim, M.; Yun, H. DeepPicar: A low-cost deep neural network-based autonomous car. In Proceedings of the 2018 IEEE 24th International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), Hakodate, Japan, 28–31 August 2018; pp. 11–21.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).