RAD-XP: Tabletop Exercises for Eliciting Resilience Requirements for Sociotechnical Systems

Abstract

1. Introduction

- Shared Demand and Deviation Awareness: Each unit in the system is aware of changes from the norm and/or demands that require responses;

- Progressive Responding: The system engages in continuous sensemaking and decision making to align responses to demands;

- Response Coordination: The system has tacit and explicit mechanisms to support omnidirectional and adaptive coordination of resources;

- Maneuver Capacity: The system has a diversity of means, and can act in novel ways, to achieve goals;

- Guided Local Control: Higher work units provide flexible guidance to front-line units, who have the flexibility and authority to respond to incidents.

1.1. Agile Development

1.2. Tabletop Exercises & Wargames

1.3. Related Methods

1.4. Challenges & Potential Mitigations

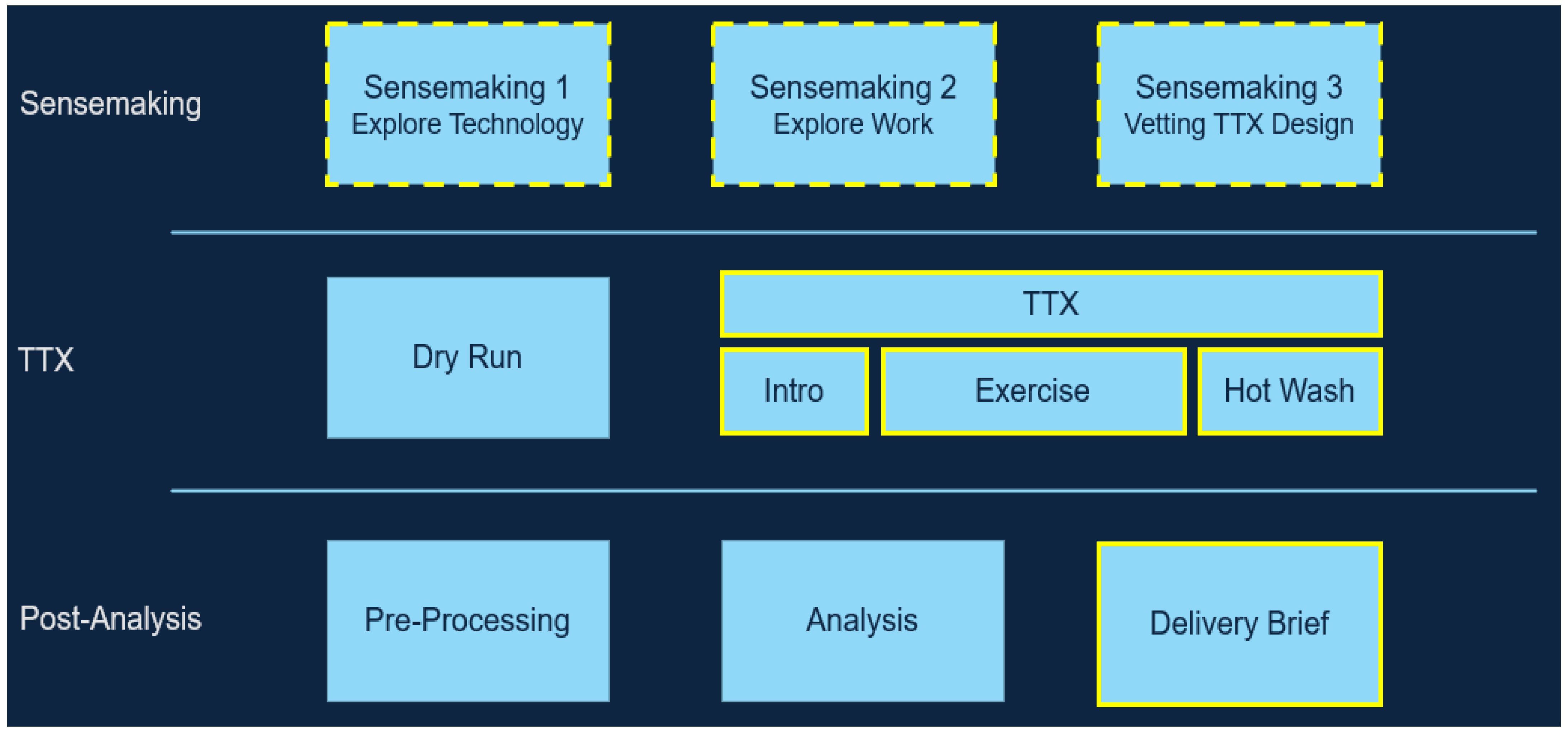

2. RAD-XP

2.1. Sensemaking Phase

- Purpose: Why is the technology being funded/built? What problem does it solve?

- People: Who uses the technology? Who else will interact with it (maintainers, administrators, etc.)? What are their goals?

- Form and Function: How does the technology work? What are the major functional components? How do users interact with it?

- Work: What missions or task threads will the technology be used in? What policies or procedures might govern technology use in work?

- Environment: Where is the technology used? When is the technology used? Will it be used under time pressure?

- Other Technology: What other technologies will this technology interact with (upstream or downstream)?

2.2. Exercise Phase

2.3. Analysis Phase

3. Pilot Study

3.1. Methods

- Inject 1: You are tasked with assessing the outcomes of a pilot study to measure the impact of OB telemedicine on a population (Response Coordination);

- Inject 2: The underlying social determinants of health (SDOH) criteria change, and you need to determine how this affects the technology’s models and your patients’ qualifications for OB telehealth (Shared Demand and Deviation Awareness);

- Inject 3: Due to the changes in SDOH criteria, you need to override the system’s outdated models to manually change which patients qualify for OB telehealth (Guided Local Control);

- Inject 4: You would like to re-examine a current OB telehealth patient’s fitness for OB telehealth after their living situation (and therefore SDOH) changed (Response Coordination);

- Inject 5: A patient’s data at a face-to-face appointment is inconsistent with what was logged during telehealth appointments (Progressive Responding);

- Inject 6: Medical students want to access and share deidentified data with another research hospital to collaborate on a paper about equity in OB telehealth (Guided Local Control).
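
Because each inject in the pilot was written to exercise a particular TRUSTS factor, a simple coverage check can show which factors a scenario leaves untested before it is run. The Python sketch below encodes the six injects and their target factors exactly as listed above; the `Factor` enum and the helper functions are hypothetical illustrations, not tooling described for RAD-XP.

```python
from collections import Counter
from enum import Enum


class Factor(Enum):
    """The five TRUSTS factors (hypothetical encoding for illustration)."""
    SDA = "Shared Demand and Deviation Awareness"
    PRG = "Progressive Responding"
    REC = "Response Coordination"
    MAC = "Maneuver Capacity"
    GLC = "Guided Local Control"


# Inject number -> factor it was designed to exercise (from the inject list).
INJECTS = {
    1: Factor.REC,
    2: Factor.SDA,
    3: Factor.GLC,
    4: Factor.REC,
    5: Factor.PRG,
    6: Factor.GLC,
}


def factor_coverage(injects):
    """Count injects per factor; factors with no inject are reported as 0."""
    counts = Counter(injects.values())
    return {factor: counts[factor] for factor in Factor}


def uncovered(injects):
    """Return the factors that no inject targets, in enum order."""
    return [f for f, n in factor_coverage(injects).items() if n == 0]


if __name__ == "__main__":
    for factor, n in factor_coverage(INJECTS).items():
        print(f"{factor.name}: {n}")
    print("Not targeted:", [f.name for f in uncovered(INJECTS)])
```

Applied to the pilot injects, the check reports that Response Coordination and Guided Local Control are each targeted twice and that no inject targets Maneuver Capacity. Two of the elicited user stories were nonetheless coded MAC, so an untargeted factor can still surface in discussion; the check only flags where the scenario design itself is thin.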

3.2. Results

3.2.1. User Stories

- Shared Demand and Deviation Awareness (SDA, n = 3, 20%): As a physician, I want to be notified when the underlying models are changed, by whom, and why, so I can calibrate my trust in [The Technology];

- Response Coordination (REC, n = 1, 7%): As a physician, I want to provide organized and systemic notifications to patients when their qualifications for OB telemedicine change, so I can [unstated];

- Guided Local Control (GLC, n = 5, 33%): As a physician, I want to use local codes/rationales to override [The Technology] when necessary, so I can have appropriate localization and flexibility of data for my care team;

- Progressive Responding (PRG, n = 3, 20%): As a CMIO, I want to schedule planned periodic reviews of data and underlying models/criteria, so I can reevaluate current models;

- Maneuver Capacity (MAC, n = 2, 13%): As a CMIO (or Clinical Decision Support Team, CDST), I want to know when outliers occur or outcomes deviate from expectations, so I can evaluate the models and data earlier than periodic reviews;

- General (GEN, n = 4, 27%): As a physician, I want to use a standard set of questions to measure subjective experience, so I can [unstated].
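
The stories above use the familiar Agile template "As a <user>, I want <function>, so I can <rationale>", whose three parts correspond to the User, Function, and Rationale slots used to annotate TTX transcripts. A minimal sketch, assuming each story is recorded as a single sentence in exactly that form: the hypothetical `parse_story` helper below splits a story into the three slots and treats the `[unstated]` placeholder as a missing rationale.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Matches "As a <user>, I want <function>, so I can <rationale>".
# The rationale clause is optional, and a trailing "." or ";" is ignored.
STORY_PATTERN = re.compile(
    r"^As an? (?P<user>.+?), I want (?P<function>.+?)"
    r"(?:, so (?:that )?I can (?P<rationale>.+?))?[.;]?$",
    re.IGNORECASE,
)


@dataclass
class UserStory:
    user: str
    function: str
    rationale: Optional[str]  # None when the rationale was not stated


def parse_story(text: str) -> UserStory:
    """Split a template-conforming story into User/Function/Rationale slots."""
    match = STORY_PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"not in 'As a ..., I want ..., so I can ...' form: {text!r}")
    rationale = match.group("rationale")
    # The results section marks missing rationales with "[unstated]".
    if rationale is not None and rationale.strip() == "[unstated]":
        rationale = None
    return UserStory(match.group("user"), match.group("function"), rationale)


if __name__ == "__main__":
    story = parse_story(
        "As a CMIO, I want to schedule planned periodic reviews of data and "
        "underlying models/criteria, so I can reevaluate current models;"
    )
    print(story)
```

A story that does not follow the template raises `ValueError`, which makes malformed entries easy to spot when transcripts are coded in bulk; free-form phrasing from real transcripts would still need manual slotting, as in the annotated example transcript.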

3.2.2. Other Outcomes

3.2.3. Subjective Experience

4. Discussion

4.1. Key Findings

- The TTX was effective for generating user stories to support work system resilience. We found that 13 of the 15 elicited user stories (87%) supported the resilience of the sociotechnical system into which the technology would be integrated;

- User stories generated from RAD-XP covered all of the big five factors of the TRUSTS framework;

- RAD-XP supported other user-centered design practices through the identification of stakeholders and various considerations for the operation, administration, and maintenance of the technology;

- Involvement of end users was crucial. Users drove discussion that resulted in user stories, and developers reported that such user commentary in the TTX was particularly helpful toward developing understanding of how the technology would be deployed in a sociotechnical system (see Table 5).

4.2. Limitations & Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chopra, A.K.; Dalpiaz, F.; Aydemir, F.B.; Giorgini, P.; Mylopoulos, J.; Singh, M.P. Protos: Foundations for Engineering Innovative Sociotechnical Systems. In Proceedings of the 2014 IEEE 22nd International Requirements Engineering Conference (RE), Karlskrona, Sweden, 25–29 August 2014.

2. Aydemir, F.B.; Giorgini, P.; Mylopoulos, J.; Dalpiaz, F. Exploring Alternative Designs for Sociotechnical Systems. In Proceedings of the 2014 IEEE Eighth International Conference on Research Challenges in Information Science, Marrakech, Morocco, 28–30 May 2014.

3. Clegg, C.W. Sociotechnical Principles for System Design. Appl. Ergon. 2000, 31, 463–477.

4. Roth, E.M.; Patterson, E.S.; Mumaw, R.J. Cognitive Engineering: Issues in User-Centered System Design. Encycl. Softw. Eng. 2002, 1, 163–179.

5. Woods, D.D. The Theory of Graceful Extensibility: Basic Rules that Govern Adaptive Systems. Environ. Syst. Decis. 2018, 38, 433–457.

6. Dorton, S.L.; Harper, S.B. A Naturalistic Investigation of Trust, AI, and Intelligence Work. J. Cogn. Eng. Decis. Mak. 2022, 16, 222–236.

7. Dorton, S.L.; Harper, S.B.; Neville, K.J. Adaptations to Trust Incidents with Artificial Intelligence. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2022, 66, 95–99.

8. Dorton, S.L.; Ministero, L.M.; Alaybek, B.; Bryant, D.J. Foresight for Ethical AI. Front. Artif. Intell. 2023, 6, 1143907.

9. Neville, K.J.; Pires, B.; Madhavan, P.; Booth, M.; Rosfjord, K.; Patterson, E.S. The TRUSTS Work System Resilience Framework: A Foundation for Resilience-Aware Development and Transition. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2022, 66, 2067–2071.

10. Nemeth, C.; Wears, R.; Woods, D.; Hollnagel, E.; Cook, R. Minding the Gaps: Creating Resilience in Healthcare. In Advances in Patient Safety: New Directions and Alternative Approaches; Agency for Healthcare Research and Quality (US): Rockville, MD, USA, 2008; Volume 3.

11. Strom, B.L.; Schinnar, R.; Aberra, F.; Bilker, W.; Hennessy, S.; Leonard, C.E.; Pifer, E. Unintended Effects of a Computerized Physician Order Entry Nearly Hard-Stop Alert to Prevent a Drug Interaction: A Randomized Controlled Trial. Arch. Intern. Med. 2010, 170, 1578–1583.

12. Misra, S.; Kumar, V.; Kumar, U. Goal-Oriented or Scenario-Based Requirements Engineering Technique–What Should a Practitioner Select? In Proceedings of the Canadian Conference on Electrical and Computer Engineering, Saskatoon, SK, Canada, 1–4 May 2005; pp. 2288–2292.

13. Raunak, M.S.; Binkley, D. Agile and Other Trends in Software Engineering. In Proceedings of the 2017 IEEE 28th Annual Software Technology Conference (STC), Gaithersburg, MD, USA, 25–28 September 2017.

14. Fowler, M.; Highsmith, J. The Manifesto for Agile Software Development. 2001. Available online: agilemanifesto.org (accessed on 1 July 2023).

15. Batra, M.; Bhatnagar, A. A Research Study on Critical Challenges in Agile Requirements Engineering. Int. Res. J. Eng. Technol. 2019, 6, 1214–1219.

16. Masood, Z.; Hoda, R.; Blincoe, K. Real World Scrum: A Grounded Theory of Variations in Practice. IEEE Trans. Softw. Eng. 2020, 48, 1579–1591.

17. Ananjeva, A.; Persson, J.S.; Bruun, A. Integrating UX Work with Agile Development Through User Stories: An Action Research Study in a Small Software Company. J. Syst. Softw. 2020, 170, 110785.

18. Kelly, S. Towards an Evolutionary Framework for Agile Requirements Elicitation. In Proceedings of the 2nd ACM SIGCHI Symposium on Engineering Interactive Computing Systems, Berlin, Germany, 19–23 June 2010; pp. 349–352.

19. Rodeghero, P.; Jiang, S.; Armaly, A.; McMillan, C. Detecting User Story Information in Developer-Client Conversations to Generate Extractive Summaries. In Proceedings of the 2017 IEEE/ACM 39th International Conference on Software Engineering (ICSE), Buenos Aires, Argentina, 20–28 May 2017; pp. 49–59.

20. Younas, M.; Jawawi, D.N.A.; Ghani, I.; Kazmi, R. Non-Functional Requirements Elicitation Guideline for Agile Methods. JTEC 2017, 9, 49–59.

21. Dorton, S.L.; Fersch, T.; Barrett, E.; Langone, A.; Seip, M.; Bilsborough, S.; Hudson, C.B.; Ward, P.; Neville, K.J. The Value of Wargames and Tabletop Exercises as Naturalistic Tools. In Proceedings of the HFES 67th International Annual Meeting, Washington, DC, USA, 23–27 October 2023. Article in press.

22. Caffrey, M. Toward a History-Based Doctrine for Wargaming. Aerosp. Power J. 2000, 14, 33–56.

23. Dorton, S.; Tupper, S.; Maryeski, L. Going Digital: Consequences of Increasing Resolution of a Wargaming Tool for Knowledge Elicitation. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2017, 61, 2037–2041.

24. De Rosa, F.; Strode, C. Exploring Trust in Unmanned Systems with the Maritime Unmanned System Trust Game. Hum. Factors Syst. Interact. 2022, 52, 9–16.

25. De Rosa, F.; Strode, C. Games to Support Disruptive Technology Adoption: The MUST Game Use Case. IJSG 2022, 9, 93–114.

26. Dorton, S.L.; Maryeski, L.R.; Ogren, L.; Dykens, I.T.; Main, A. A Wargame-Augmented Knowledge Elicitation Method for the Agile Development of Novel Systems. Systems 2020, 8, 27.

27. Smith, S.; Raio, S.; Erbacher, R.; Weisman, M.; Parker, T.; Ellis, J. Quantitative Measurement of Cyber Resilience: A Tabletop Exercise; DEVCOM Army Research Laboratory (US): Adelphi, MD, USA, 2022.

28. Deal, S.V.; Hoffman, R.R. The Practitioner’s Cycles, Part 1: Actual World Problems. IEEE Intell. Syst. 2010, 25, 4–9.

29. Hoffman, R.R.; Deal, S.V.; Potter, S.; Roth, E.M. The Practitioner’s Cycles, Part 2: Solving Envisioned World Problems. IEEE Intell. Syst. 2010, 25, 6–11.

30. Bryant, D.J.; Martin, L.B.; Bandali, F.; Rehak, L.; Vokac, R.; Lamoureux, T. Development and Evaluation of an Intuitive Operational Planning Process. J. Cogn. Eng. Decis. Mak. 2007, 1, 434–460.

31. Lombriser, P.; Dalpiaz, F.; Lucassen, G.; Brinkkemper, S. Gamified Requirements Engineering: Model and Experimentation. In Proceedings of the Requirements Engineering: Foundation for Software Quality, Gothenburg, Sweden, 14–17 March 2016; pp. 171–187.

32. Hahn, H.A. What Can You Learn About Systems Engineering by Building a Lego™ Car? INCOSE Int. Symp. 2018, 28, 158–172.

33. Dykens, I.T.; Wetzel, A.; Dorton, S.L.; Batchelor, E. Towards a Unified Model of Gamification and Motivation. In Proceedings of the Adaptive Instructional Systems. Design and Evaluation, Virtual Event, 24–29 July 2021; pp. 53–70.

34. Sutcliffe, A. Scenario-Based Requirements Engineering. J. Light. Technol. 2003.

35. Sutcliffe, R.B.; Ryan, M. Experience with SCRAM, a Scenario Requirements Analysis Method. In Proceedings of the IEEE International Symposium on Requirements Engineering: RE ’98, Colorado Springs, CO, USA, 10 April 1998; pp. 164–171.

36. Van Lamsweerde, A.; Letier, E. Handling Obstacles in Goal-Oriented Requirements Engineering. IEEE Trans. Softw. Eng. 2000, 26, 978–1005.

37. Lutz, R.; Patterson-Hine, A.; Nelson, S.; Frost, C.R.; Tal, D.; Harris, R. Using Obstacle Analysis to Identify Contingency Requirements on an Unpiloted Aerial Vehicle. Requir. Eng. 2007, 12, 41–54.

38. Ponsard, C.; Darimont, R. Formalizing Security and Safety Requirements by Mapping Attack-Fault Trees on Obstacle Models with Constraint Programming Semantics. In Proceedings of the 2020 IEEE FORMREQ, Zurich, Switzerland, 31 August–4 September 2020; pp. 8–13.

39. Karolita, D.; McIntosh, J.; Kanji, T.; Grundy, J.; Obie, H.O. Use of Personas in Requirements Engineering: A Systematic Mapping Study. Inf. Softw. Technol. 2023, 162, 107264.

40. Ferraris, D.; Fernandez-Gago, C. TrUStAPIS: A Trust Requirements Elicitation Method for IoT. Int. J. Inf. Secur. 2020, 19, 111–127.

41. Lim, S.; Henriksson, A.; Zdravkovic, J. Data-Driven Requirements Elicitation: A Systematic Literature Review. SN Comput. Sci. 2021, 2, 1–35.

42. Ramesh, B.; Cao, L.; Mohan, K.; Xu, P. Can Distributed Software Development be Agile? Commun. ACM 2006, 49, 41.

43. Tanzman, E.A.; Nieves, L.A. Analysis of the Argonne Distance Tabletop Exercise Method; No. ANL/DIS/RP-60983; Argonne National Lab. (ANL): Argonne, IL, USA, 2008.

44. Hansen, K.; Pounds, L. Beyond the Hit-and-Run Tabletop Exercise. J. Bus. Contin. Emerg. Plan. 2009, 3, 137–144.

45. Raharjana, I.K.; Siahaan, D.; Fatichah, C. User Story Extraction from Online News for Software Requirements Elicitation: A Conceptual Model. In Proceedings of the 2019 16th International Joint Conference on Computer Science and Software Engineering (JCSSE), Chonburi, Thailand, 10–12 July 2019.

46. Gall, M.; Berenbach, B. Towards a Framework for Real Time Requirements Elicitation. In Proceedings of the 2006 First International Workshop on Multimedia Requirements Engineering (MERE’06–RE’06 Workshop), Minneapolis, MN, USA, 12 September 2006.

47. Rietz, T.; Maedche, A. LadderBot: A Requirements Self-Elicitation System. In Proceedings of the 2019 IEEE 27th International Requirements Engineering Conference (RE), Jeju, Korea, 23–27 September 2019; pp. 357–362.

48. Rasheed, A.; Zafar, B.; Shehryar, T.; Aslam, N.A.; Sajid, M.; Ali, N.; Dar, S.H.; Khalid, S. Requirement Engineering Challenges in Agile Software Development. Math. Probl. Eng. 2021, 2021, 6696695.

49. Heyn, H.-M.; Knauss, E.; Muhammad, A.P.; Eriksson, O.; Linder, J.; Subbiah, P.; Pradhan, S.K.; Tungal, S. Requirement Engineering Challenges for AI-Intense Systems Development. In Proceedings of the 2021 IEEE/ACM 1st Workshop on AI Engineering–Software Engineering for AI (WAIN), Madrid, Spain, 30–31 May 2021; pp. 89–96.

50. Hosseini, M.; Shahri, A.; Phalp, K.; Taylor, J.; Ali, R.; Dalpiaz, F. Configuring Crowdsourcing for Requirements Elicitation. In Proceedings of the 2015 IEEE 9th International Conference on Research Challenges in Information Science (RCIS), Athens, Greece, 13–15 May 2015.

51. Dorton, S.L.; Maryeski, L.M.; Costello, R.P.; Abrecht, B.R. A Case for User-Centered Design in Satellite Command and Control. Aerospace 2021, 8, 303.

52. Dorton, S.L.; Ganey, H.C.N.; Mintman, E.; Mittu, R.; Smith, M.A.B.; Winters, J. Human-Centered Alphabet Soup: Approaches to Systems Development from Related Disciplines. In Proceedings of the 2021 International Annual Meeting of the Human Factors and Ergonomics Society, Baltimore, MD, USA, 4–7 October 2021; Volume 65, pp. 1167–1170.

53. Klein, G. Snapshots of the Mind; MIT Press: Cambridge, MA, USA, 2022.

54. Dalpiaz, F.; Brinkkemper, S. Agile Requirements Engineering: From User Stories to Software Architectures. In Proceedings of the 2021 IEEE 29th International Requirements Engineering Conference (RE), Notre Dame, IN, USA, 20–24 September 2021.

55. Lucassen, G.; Dalpiaz, F.; van der Werf, J.M.E.M.; Brinkkemper, S. Forging High-Quality User Stories: Towards a Discipline for Agile Requirements. In Proceedings of the 2015 IEEE 23rd International Requirements Engineering Conference (RE), Ottawa, ON, Canada, 24–28 August 2015; pp. 126–135.

56. Lucassen, G.; Dalpiaz, F.; van der Werf, J.M.E.M.; Brinkkemper, S. The Use and Effectiveness of User Stories in Practice. In Proceedings of the Requirements Engineering: Foundation for Software Quality, Gothenburg, Sweden, 14–17 March 2016; pp. 205–222.

57. Walker, D.H.T.; Bourne, L.M.; Shelley, A. Influence, Stakeholder Mapping and Visualization. Constr. Manag. Econ. 2008, 26, 645–658.

58. Marquez, J.J.; Downey, A.; Clement, R. Walking a Mile in the User’s Shoes: Customer Journey Mapping as a Method to Understanding the User Experience. Internet Ref. Serv. Q. 2015, 20, 135–150.

59. Molich, R.; Jeffries, R.; Dumas, J.S. Making Usability Recommendations Useful and Usable. J. Usability Stud. 2007, 2, 162–179.

60. Jindal, R.; Malhotra, R.; Jain, A.; Bansal, A. Mining Non-Functional Requirements Using Machine Learning Techniques. e-Informatica Softw. Eng. J. 2021, 15, 85–114.

61. Franch, X.; Gómez, C.; Jedlitschka, A.; López, L.; Martínez-Fernández, S.; Oriol, M.; Partanen, J. Data-Driven Elicitation, Assessment and Documentation of Quality Requirements in Agile Software Development. In Proceedings of the Advanced Information Systems Engineering, Tallinn, Estonia, 11–15 June 2018; pp. 587–602.

| Challenge | TTX Mitigation |
|---|---|
| Software development is increasingly a distributed activity [42], and RE can be difficult when teams are distributed [15,20]. | TTXs are flexible and can be readily executed in geographically- [43] and temporally-distributed settings [44]. |
| RE in Agile relies heavily on direct engagement with end users and stakeholders; however, it can be difficult to get stakeholders involved in RE [18,39,45]. | Research has shown that busy stakeholders were highly motivated to participate in TTXs [26], and that such gamified methods for RE result in increased participation and better user stories [31,32]. |
| There is a lack of a common language to support the different mental models of users, stakeholders, and developers [46]. Users often struggle to articulate requirements [47,48], while developers and requirements engineers often do not know the right questions to ask users [19], despite needing domain knowledge to be successful [45,49]. | Hosseini et al. [50] found that involving large and diverse crowds in RE increased the accuracy, relevance, and creativity of resultant requirements. Military participants in a TTX reported that participation allowed them to meet and collaborate with foreign counterparts they had not met before, illustrating how TTXs foster collaboration across diverse groups of participants [25]. |
| The rapid and iterative nature of Agile creates challenges for RE, where traditional RE methods may be too slow to be impactful [26], and requirements must be iteratively developed as the technology matures with each sprint [18]. | Dorton et al. [26] demonstrated the ability to design and execute TTXs rapidly enough to have early impact in an Agile lifecycle. TTXs can be performed iteratively over periods of time [44], such as during sprints. |
| Stakeholders and end users can have difficulty seeing beyond the current situation into the future, and in thinking concretely about envisioned future states [18]. | Scenario-based requirements engineering approaches (including TTXs) have been shown to generate detailed discussion by grounding argument and reasoning in a specific scenario [12,34]. Depending on the scenario fidelity, TTXs may enable observational research, rather than discussion-based research that is more speculative [26]. |

| Annotated Example Transcript | User Story Slots |
|---|---|
| “If that happened, I would want to know if anything changed, in terms of outcomes, you know, between now and the last time, to make sure we should still be using it.” | User: As a CMIO 1… |
| | Function: I want to know if outcomes have changed since the last periodic review… |
| | Rationale: So I can make an informed decision about whether we should be using the tool. |

| User Story ID | SDA | REC | GLC | PRG | MAC | GEN |

|---|---|---|---|---|---|---|

| 1 | 1 | |||||

| 2 | 1 | |||||

| 3 | 1 | |||||

| 4 | ||||||

| 5 | ||||||

| 6 | ||||||

| 7 | 1 | 1 | ||||

| 8 | 1 | 1 | 1 | |||

| 9 | 1 | |||||

| 10 | 1 | 1 | 1 | |||

| 11 | 1 | 1 | ||||

| 12 | 1 | 1 | ||||

| 13 | ||||||

| 14 | ||||||

| 15 | 1 | 1 | ||||

| Count | 3 (20%) | 1 (7%) | 5 (33%) | 3 (20%) | 2 (13%) | 4 (27%) |
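
Each percentage in the Count row is that code's count divided by the 15 elicited user stories. Because one story can carry more than one code, the six counts sum to 18 and the percentages to 120%. The short Python check below reproduces that arithmetic from the reported counts only; the variable and function names are illustrative.

```python
# Counts per code, taken from the Count row of the coding table.
CATEGORY_COUNTS = {"SDA": 3, "REC": 1, "GLC": 5, "PRG": 3, "MAC": 2, "GEN": 4}
TOTAL_STORIES = 15  # user stories elicited in the pilot TTX


def summarize(counts, total):
    """Map each code to (count, percent of all stories rounded to a whole number).

    Python's round() rounds exact ties to even; none of these shares is a tie.
    """
    return {code: (n, round(100 * n / total)) for code, n in counts.items()}


if __name__ == "__main__":
    for code, (n, pct) in summarize(CATEGORY_COUNTS, TOTAL_STORIES).items():
        print(f"{code}: n = {n} ({pct}%)")
    # Codes are not mutually exclusive, so codes assigned can exceed stories.
    print("Codes assigned:", sum(CATEGORY_COUNTS.values()), "across", TOTAL_STORIES, "stories")
    # Key finding: 13 of the 15 stories supported work system resilience.
    print(f"Resilience-supporting stories: {round(100 * 13 / TOTAL_STORIES)}%")
```

The same rounding gives the 87% reported for the 13 of 15 stories that supported work system resilience.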

| Statement | Agreement | ||||||

|---|---|---|---|---|---|---|---|

| Strongly Disagree | Disagree | Slightly Disagree | Neutral | Slightly Agree | Agree | Strongly Agree | |

| Survey 1: Post-TTX | |||||||

| The TTX made me think about the technology from a unique or novel perspective. | 1 | 4 | |||||

| The TTX helped our team uncover and discuss assumptions underlying operational use of the technology. | 1 | 1 | 3 | ||||

| I actively participated and was engaged in the TTX. | 2 | 2 | 1 | ||||

| I had fun and enjoyed participating in the TTX. | 5 | ||||||

| With a guidebook, I think our team could design and execute future TTXs without external support. | 1 | 1 | 1 | 2 | |||

| The TTX was an effective tool for generating user stories. a | 2 | 3 | |||||

| I think it would be valuable to conduct TTXs regularly throughout the entire development process. a | 1 | 1 | 1 | 2 | |||

| Survey 2: Post-Delivery of User Stories and Outputs | |||||||

| I was satisfied with the quantity of user stories generated by the TTX. | 2 | 3 | |||||

| I was satisfied with the novelty of the user stories generated by the TTX. | 2 | 3 | |||||

| For the same time and effort as the TTX, I could have generated a higher quantity of user stories using a different method. | 1 | 2 | 2 | ||||

| For the same time and effort as the TTX, I could have generated higher novelty user stories using a different method. | 1 | 1 | 3 | ||||

| I was satisfied with the other outputs of the TTX (i.e., non-requirement outputs such as design seeds, assumptions, info for CONOPS, etc.). | 1 | 1 | 3 | ||||

| The TTX was an effective tool for generating user stories. a | 2 | 3 | |||||

| I think it would be valuable to conduct TTXs regularly throughout the entire development process. a | 2 | 1 | 2 | ||||

| Statement | Example Response |
|---|---|
| Survey 1: Post-TTX | |
| What changes would need to be made to the TTX process, and/or what resources would you need to be able to conduct TTXs in the future without external support? | I think it would have been a bit better to have a clear separation on who were the developers versus end users. It would also have been good to have more of a setup of the technology in the beginning, including purpose, current stage in development, and current capabilities. |
| What were the benefits from conducting this TTX? Anything expected or unexpected? | It was interesting to hear how [end users] would deal with integrating [the tool] with other systems, and how they would make updates to the rules/algorithms being used. |
| Additional comments (general thoughts or pertaining to specific statements)? | I think part of the guidebook should talk about how the TTX should evolve when you’re progressing through development of a technology. |
| Survey 2: Post-Delivery of User Stories and Outputs | |
| Do you have any additional thoughts about the relative costs and/or benefits of using the TTX to elicit software requirements to support work system resiliency? | For the time investment, I thought it was worth it. The outputs of the exercises may be helpful in future [customer] engagements. |
| How should this process be modified to support future TTXs? Why do you recommend these changes? | When training others, I think it would be great if you can do one initial session like you did with ours, then a second one where you have the developers/leads go through the process of creating a TTX session and carrying it out. |
| Additional comments (general thoughts or pertaining to specific statements)? | I plan to see how and where TTX and the TRUSTS framework could be looped into my various projects. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the MITRE Corporation. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dorton, S.L.; Barrett, E.; Fersch, T.; Langone, A.; Neville, K.J. RAD-XP: Tabletop Exercises for Eliciting Resilience Requirements for Sociotechnical Systems. Systems 2023, 11, 487. https://doi.org/10.3390/systems11100487

Dorton SL, Barrett E, Fersch T, Langone A, Neville KJ. RAD-XP: Tabletop Exercises for Eliciting Resilience Requirements for Sociotechnical Systems. Systems. 2023; 11(10):487. https://doi.org/10.3390/systems11100487

Chicago/Turabian Style: Dorton, Stephen L., Emily Barrett, Theresa Fersch, Andrew Langone, and Kelly J. Neville. 2023. "RAD-XP: Tabletop Exercises for Eliciting Resilience Requirements for Sociotechnical Systems" Systems 11, no. 10: 487. https://doi.org/10.3390/systems11100487

APA Style: Dorton, S. L., Barrett, E., Fersch, T., Langone, A., & Neville, K. J. (2023). RAD-XP: Tabletop Exercises for Eliciting Resilience Requirements for Sociotechnical Systems. Systems, 11(10), 487. https://doi.org/10.3390/systems11100487