Siamese Network Tracker Based on Multi-Scale Feature Fusion

Abstract

1. Introduction

- This paper analyzes the strengths and weaknesses of the Siamese network tracking model in detail and proposes a solution that addresses those weaknesses.

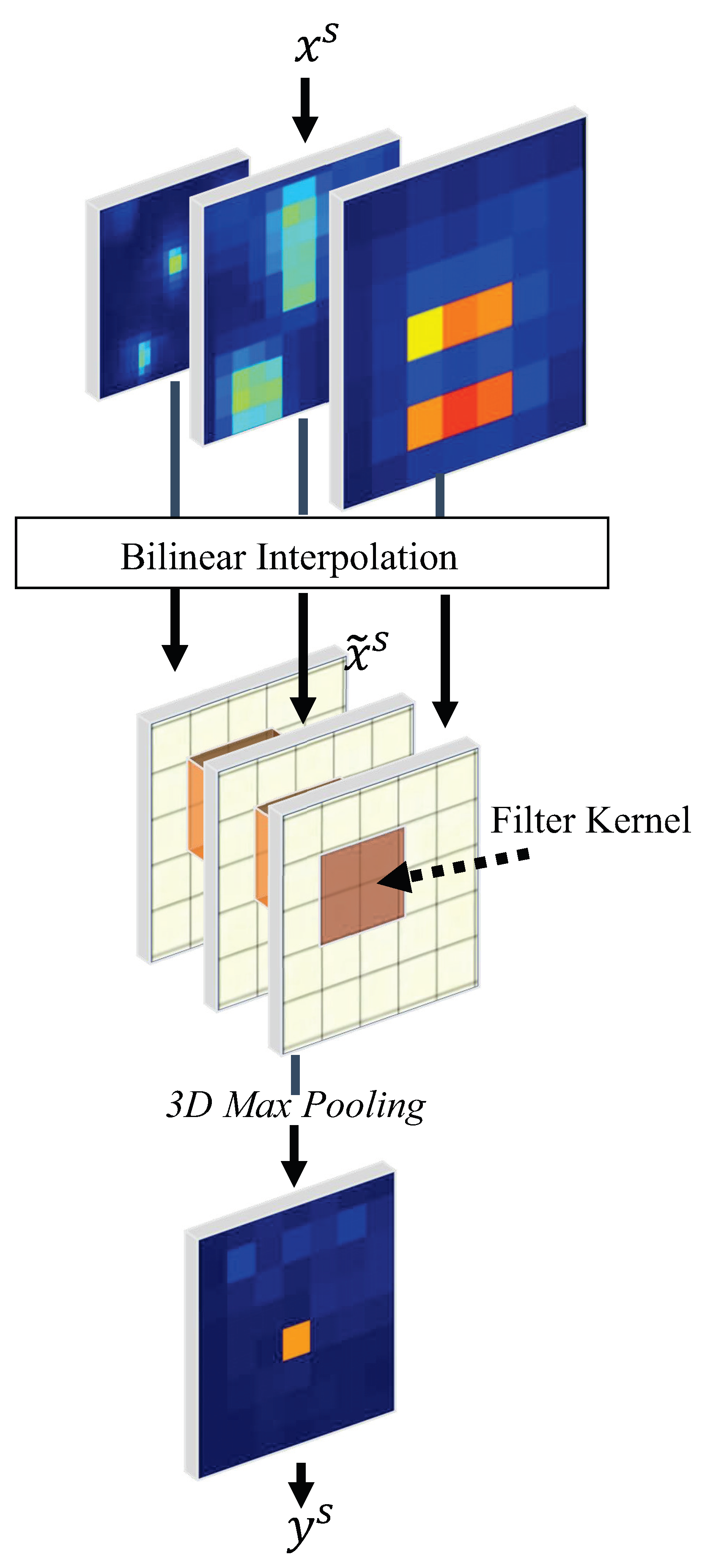

- Features at multiple scales are fused to improve the model’s ability to discriminate between features, and the network outputs multiple response maps at different scales. A 3D Max Filtering (3DMF) module [24] then suppresses duplicate predictions across the scale-specific features, which further improves tracking accuracy.

- Finally, this paper builds a new tracking network architecture. Experiments on several benchmark datasets show that the proposed algorithm improves the robustness and accuracy of Siamese-network-based tracking, and the improvement is most pronounced when the object scale changes.
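The duplicate-suppression idea in the second contribution can be illustrated with a small sketch. What follows is a simplified, non-learned stand-in for 3D max filtering, not the module used in this paper: it assumes the response maps from all scales have already been resized to a common resolution, and it keeps a score only if it is the maximum within a window spanning neighbouring positions and adjacent scales. The names `max_filter_3d`, `suppress_duplicates`, `spatial_radius`, and `scale_radius` are hypothetical and chosen for illustration.

```python
from typing import List

Map2D = List[List[float]]


def max_filter_3d(maps: List[Map2D], spatial_radius: int = 1,
                  scale_radius: int = 1) -> List[Map2D]:
    """For every (scale, row, col), return the maximum over its 3D window.

    Assumption: all maps share the same height and width.
    """
    n_scales = len(maps)
    height, width = len(maps[0]), len(maps[0][0])
    out = [[[0.0] * width for _ in range(height)] for _ in range(n_scales)]
    for s in range(n_scales):
        s_lo, s_hi = max(0, s - scale_radius), min(n_scales, s + scale_radius + 1)
        for y in range(height):
            y_lo, y_hi = max(0, y - spatial_radius), min(height, y + spatial_radius + 1)
            for x in range(width):
                x_lo, x_hi = max(0, x - spatial_radius), min(width, x + spatial_radius + 1)
                out[s][y][x] = max(
                    maps[ss][yy][xx]
                    for ss in range(s_lo, s_hi)
                    for yy in range(y_lo, y_hi)
                    for xx in range(x_lo, x_hi)
                )
    return out


def suppress_duplicates(maps: List[Map2D]) -> List[Map2D]:
    """Zero every score that is not the maximum of its 3D window.

    Exact ties are all kept; a real module would need a tie-breaking rule.
    """
    filtered = max_filter_3d(maps)
    return [
        [
            [v if v == filtered[s][y][x] else 0.0 for x, v in enumerate(row)]
            for y, row in enumerate(single_map)
        ]
        for s, single_map in enumerate(maps)
    ]


# Two toy response maps: the same target fires at the centre of both scales.
responses = [
    [[0.1, 0.2, 0.1], [0.6, 0.9, 0.3], [0.1, 0.2, 0.1]],  # scale 0
    [[0.0, 0.1, 0.0], [0.2, 0.8, 0.1], [0.0, 0.1, 0.0]],  # scale 1
]
kept = suppress_duplicates(responses)
```

In this toy input the weaker centre response at scale 1 is zeroed because the stronger response at scale 0 lies inside its window, so only one prediction for the target survives. A hard threshold like this is only meant to convey the intuition; a trainable variant would keep the filtered values as features rather than zeroing scores.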

2. Related Work

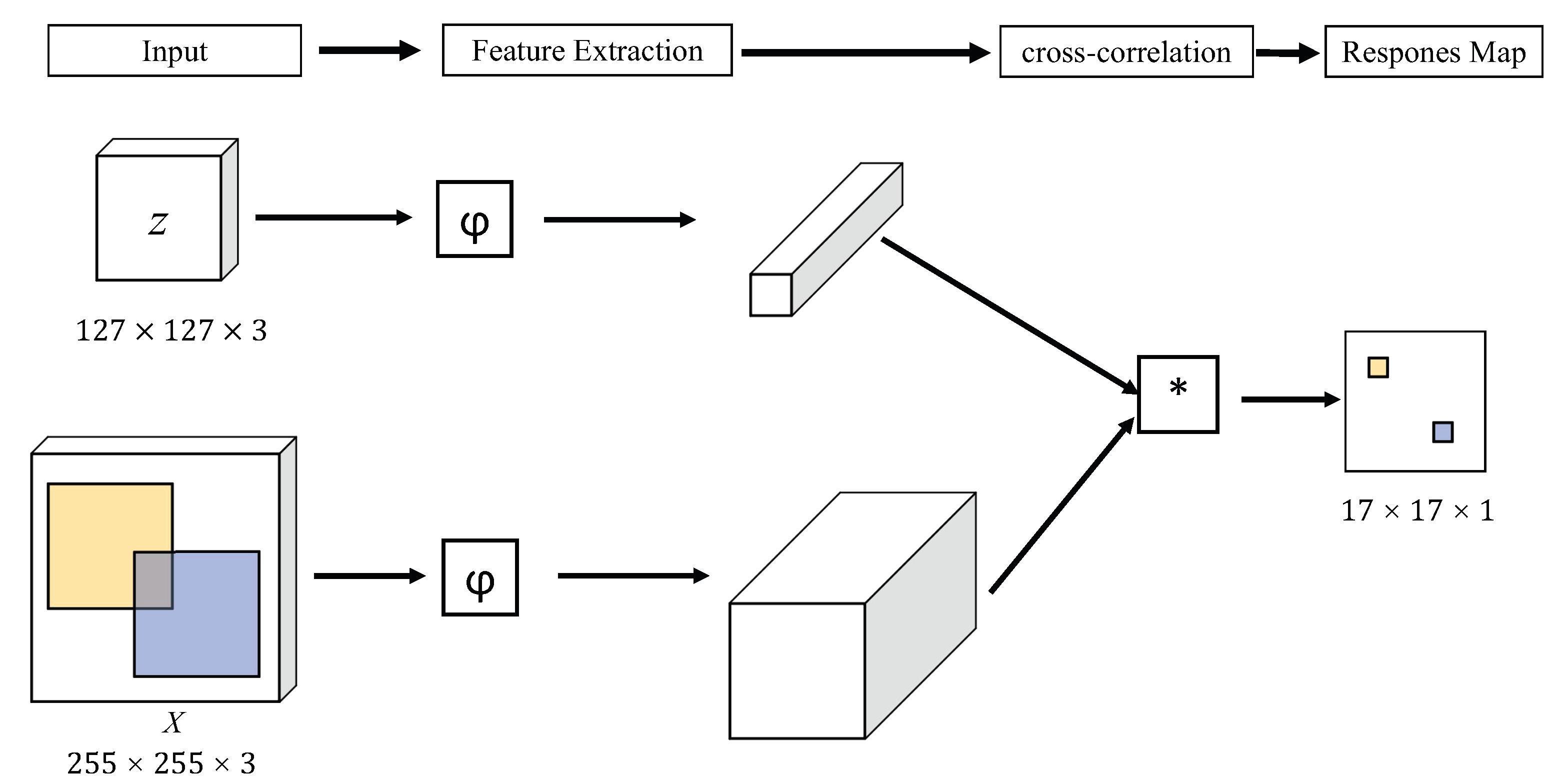

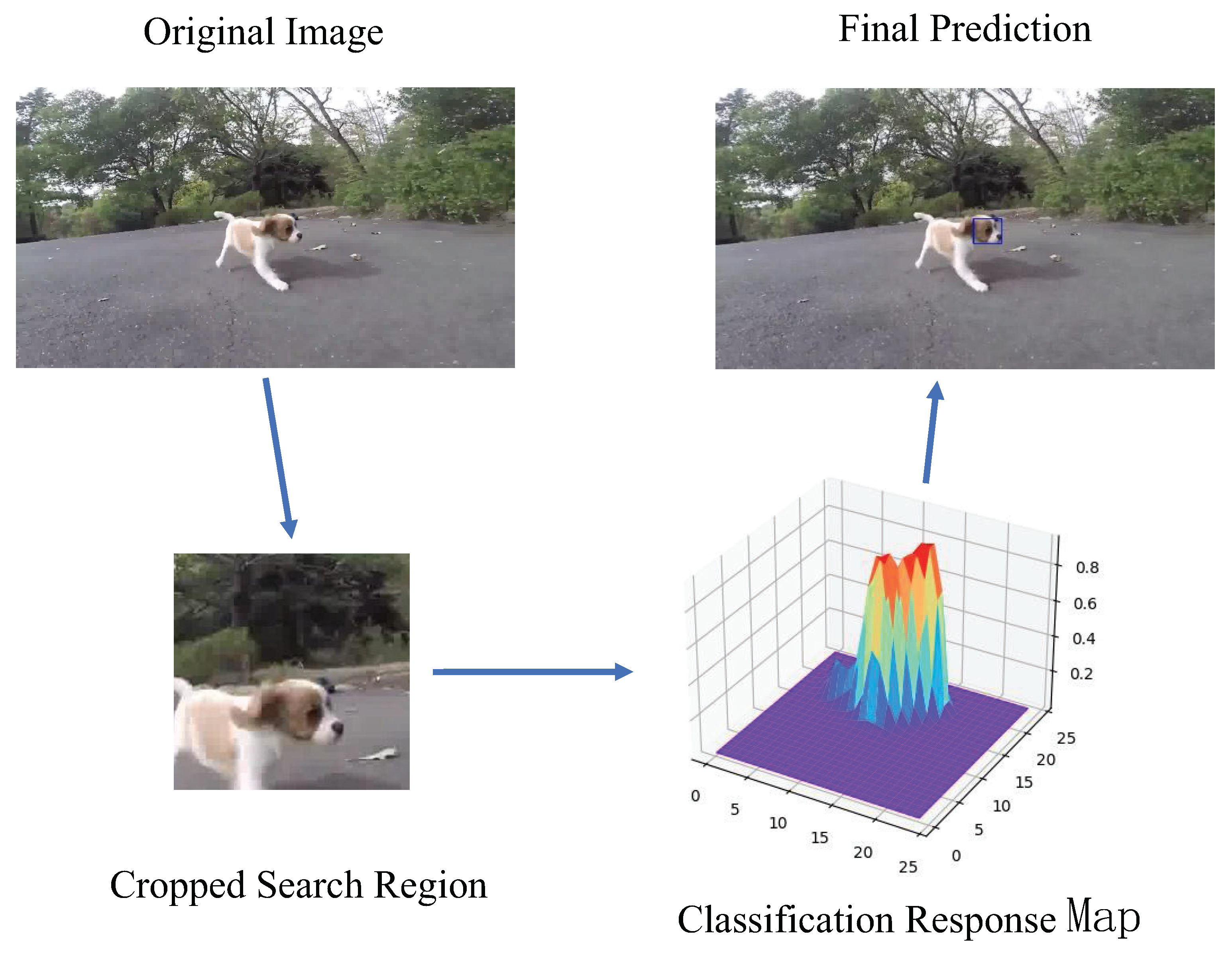

2.1. Siamese-Network-Based Tracking Framework

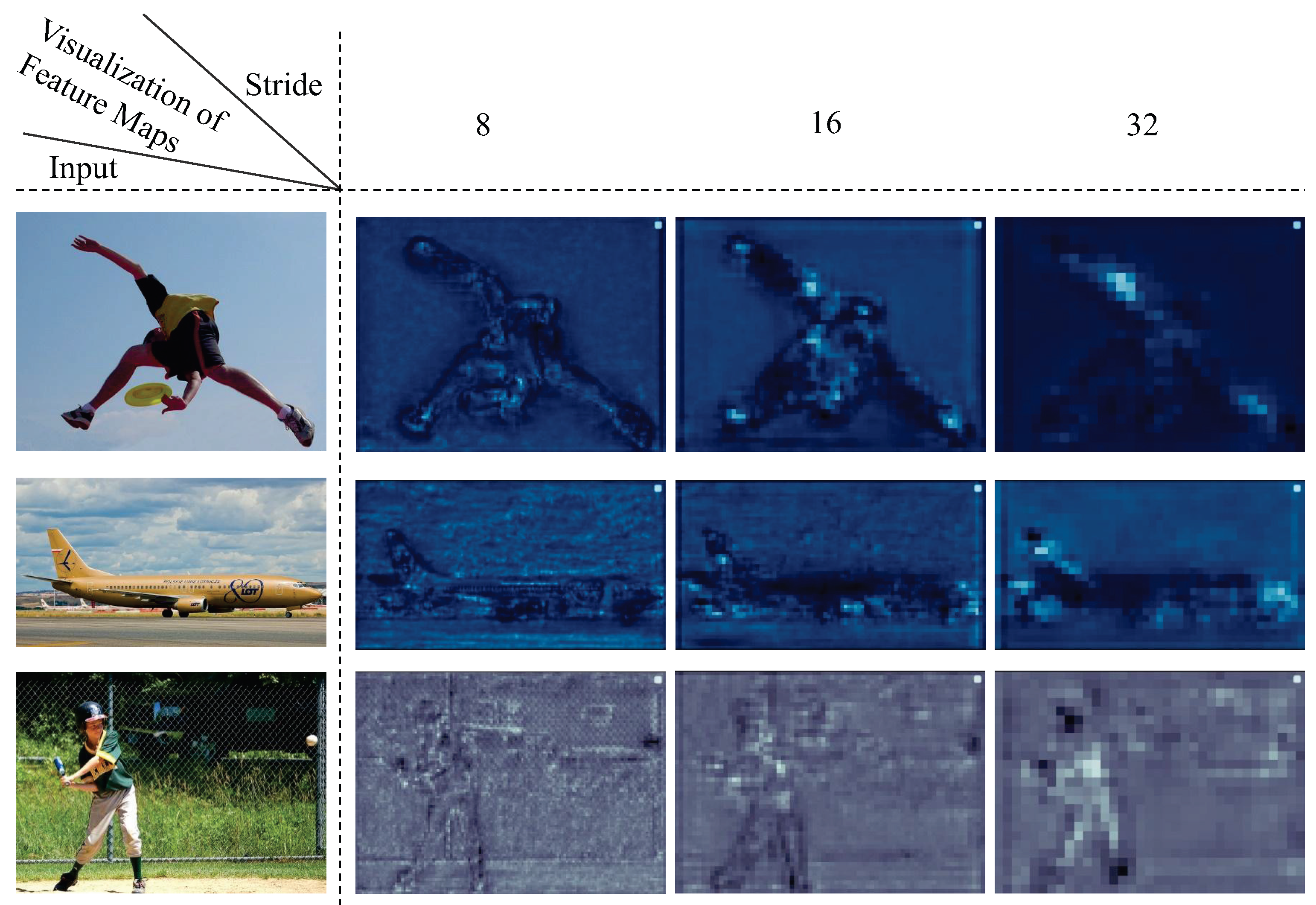

2.2. The Effect of Scale Change on Siamese Network Tracking and the Reason behind It

3. Our Method

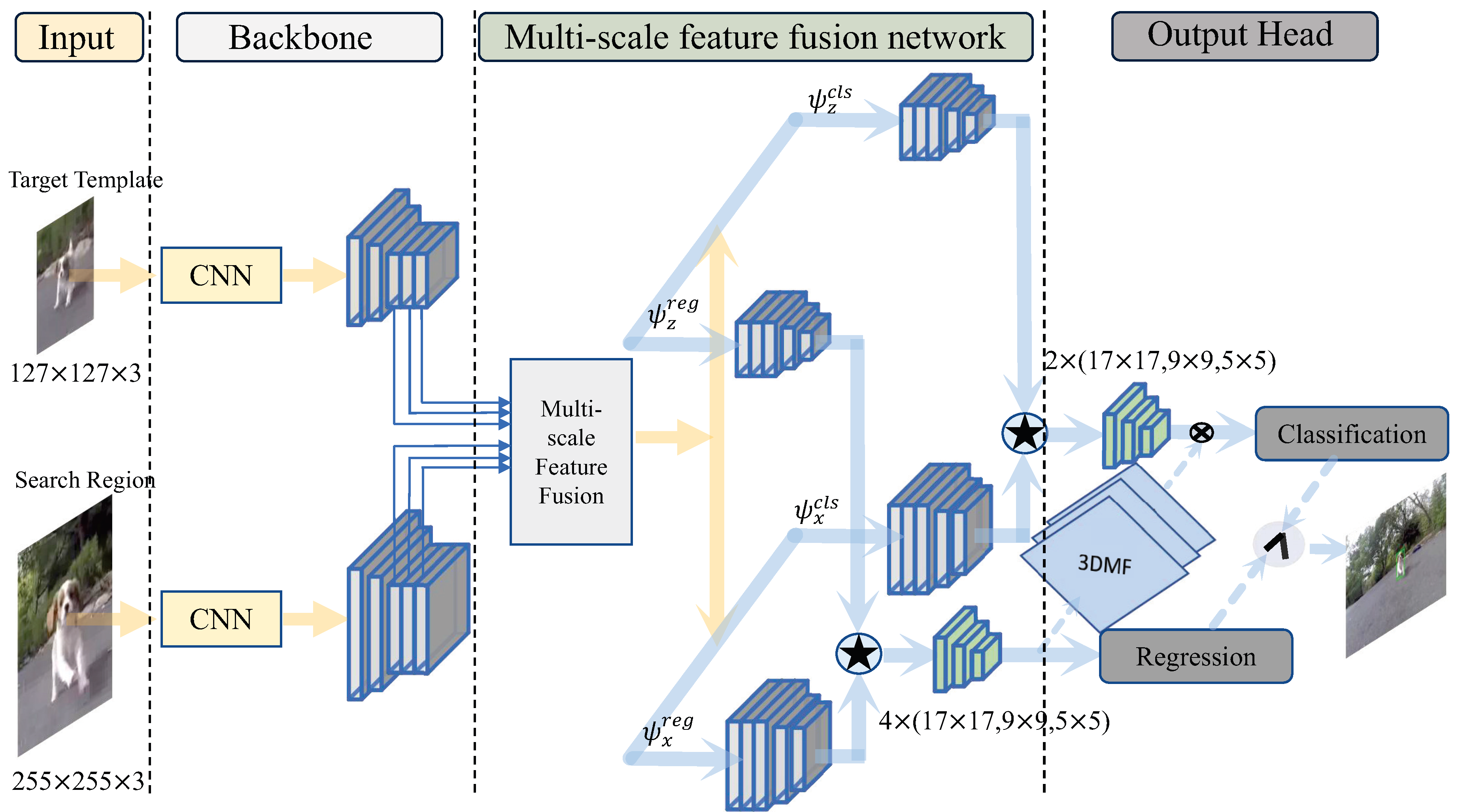

3.1. The General Framework of the Algorithm

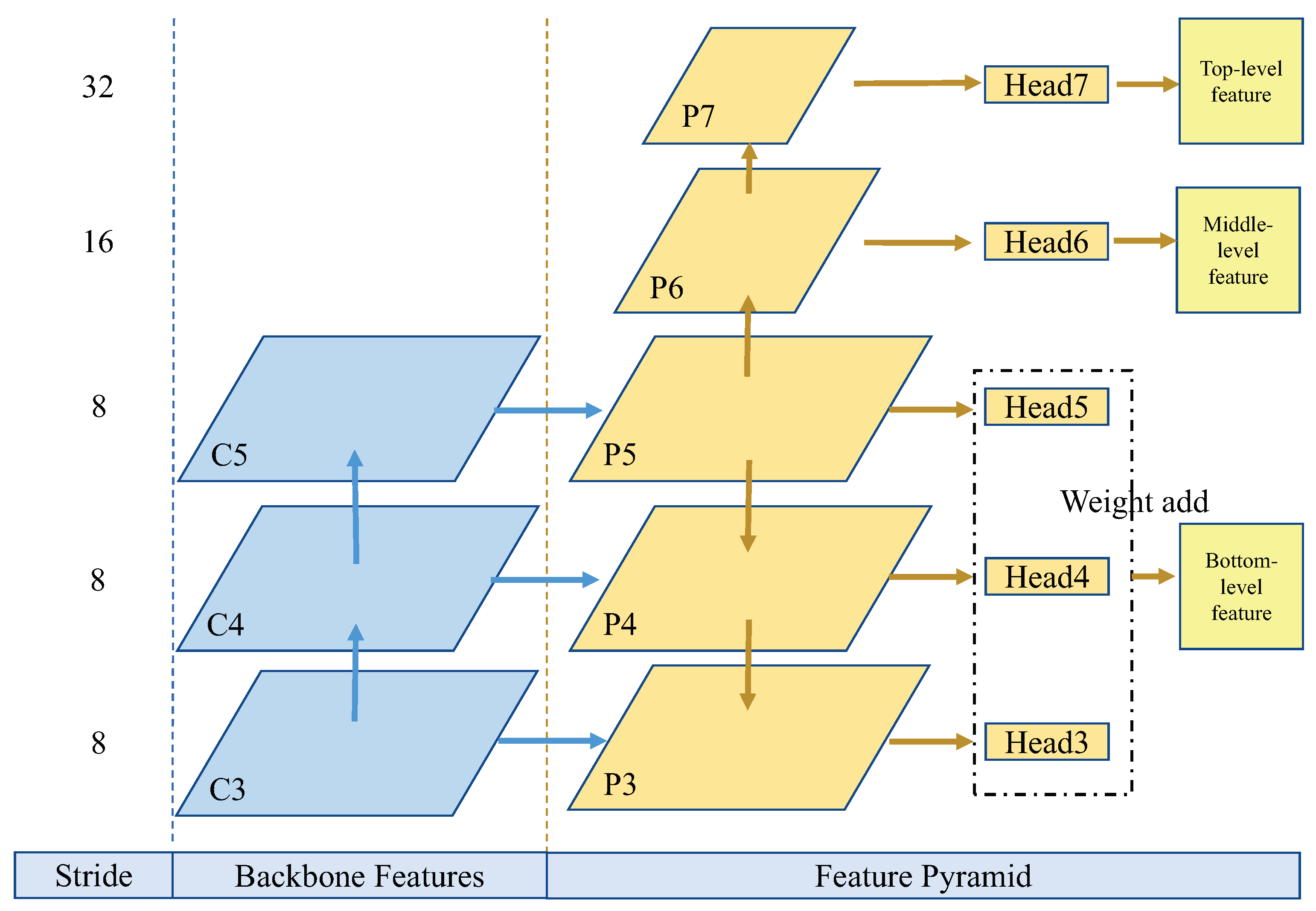

3.2. Multi-Scale Feature Fusion Network

3.3. 3D Max Filtering Module

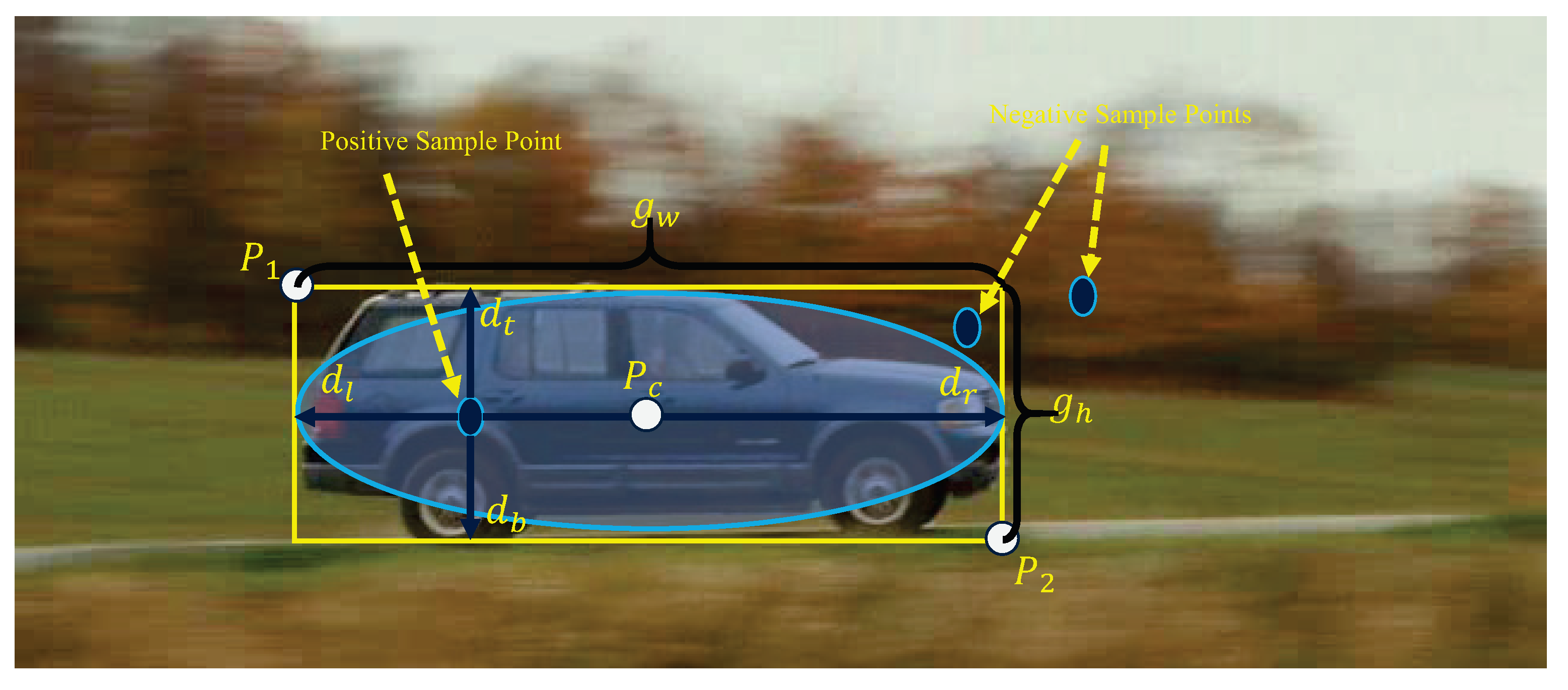

3.4. Design of Labels and Loss Functions

4. Experiment

4.1. Implementation Details

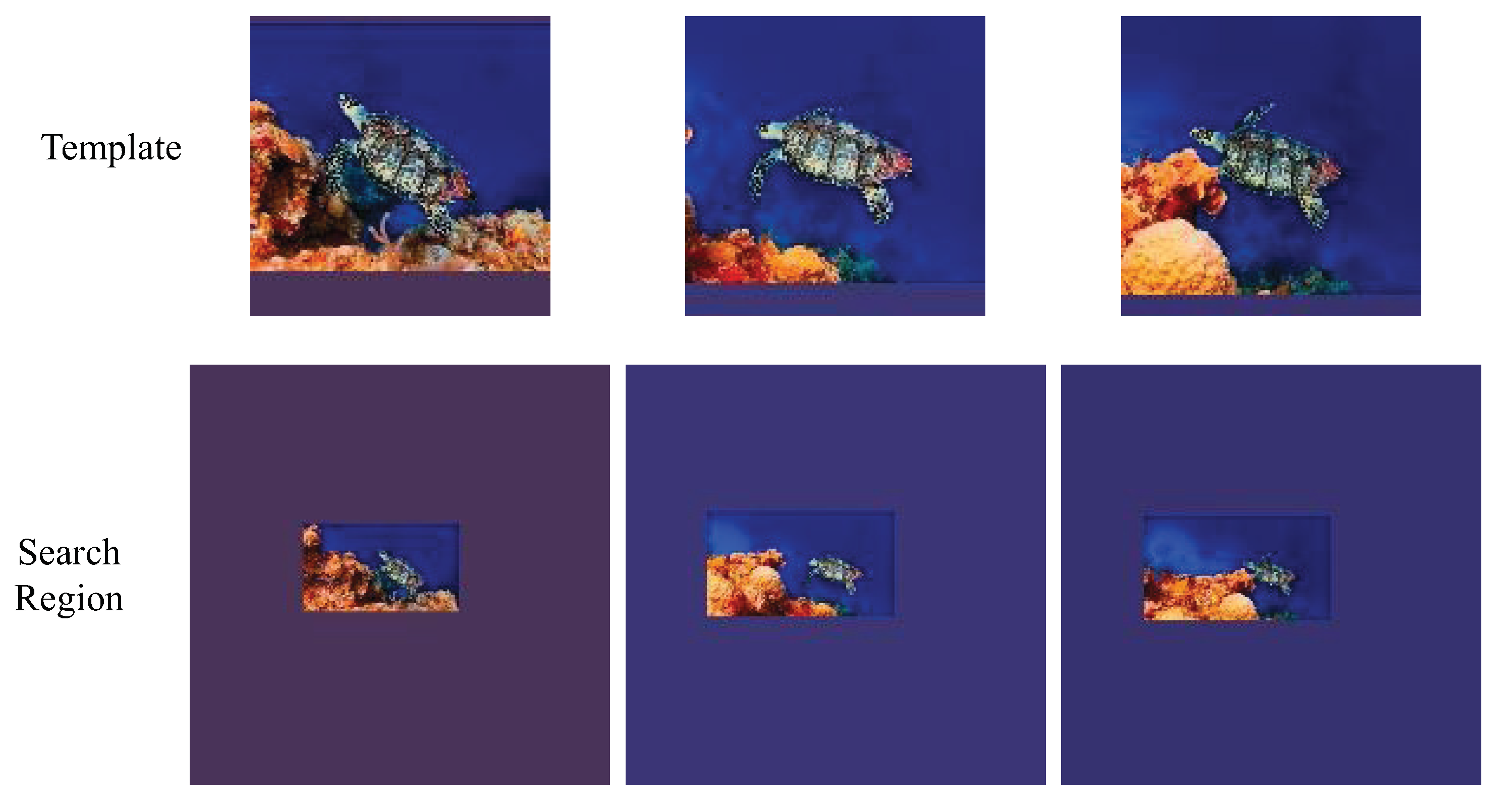

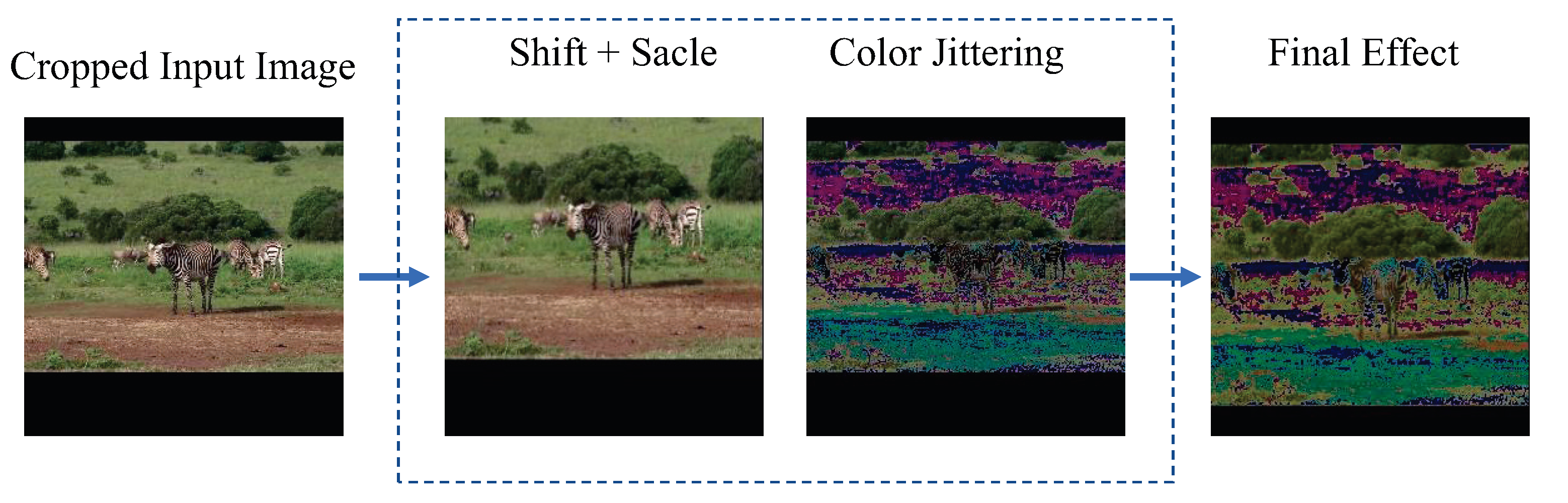

4.1.1. Training Phase

4.1.2. Inference Phase

4.1.3. Evaluation

4.2. Ablation Experiment

4.3. Comparison with Other Advanced Trackers

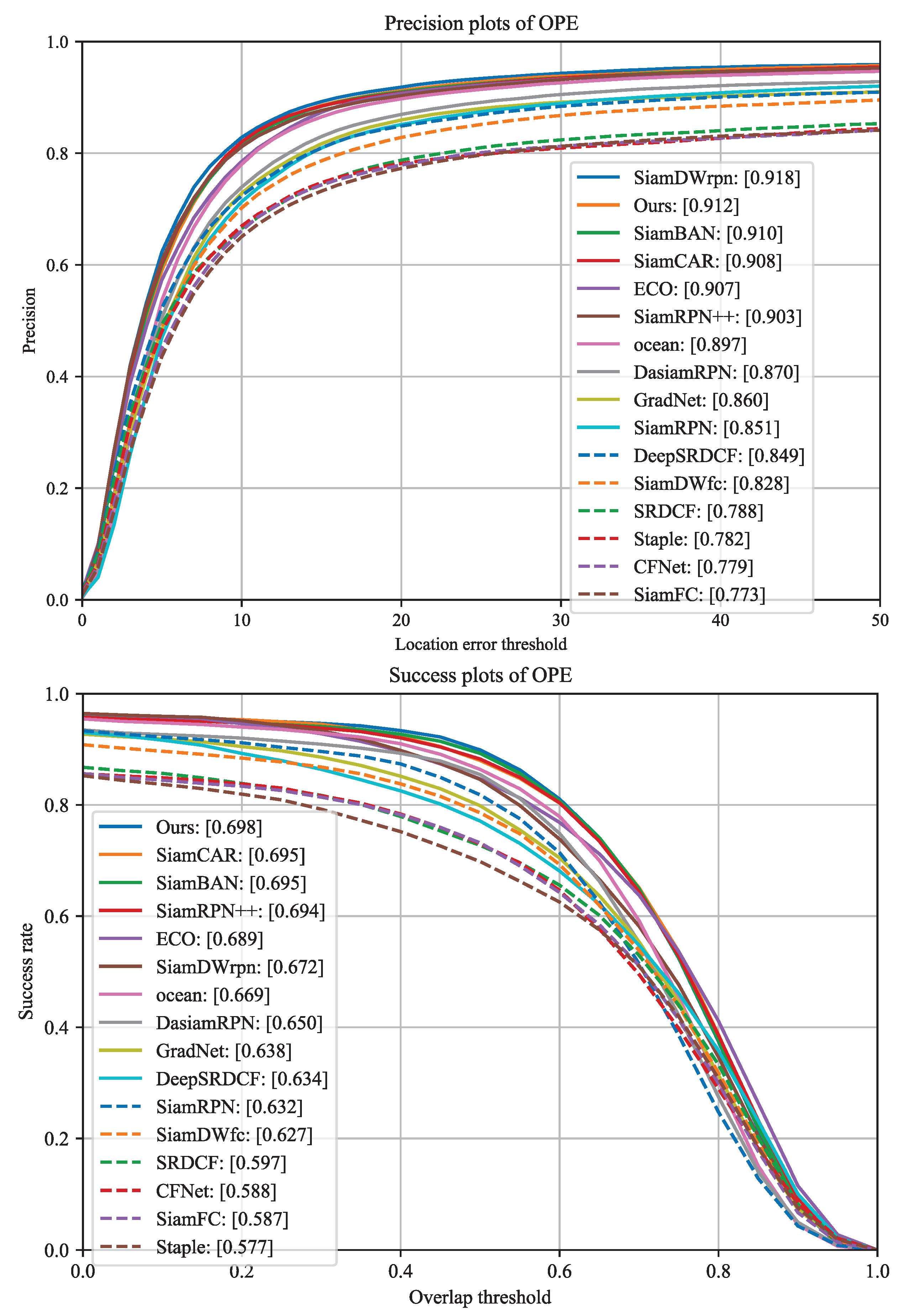

4.3.1. Comparison Using the OTB2015 Benchmark Dataset

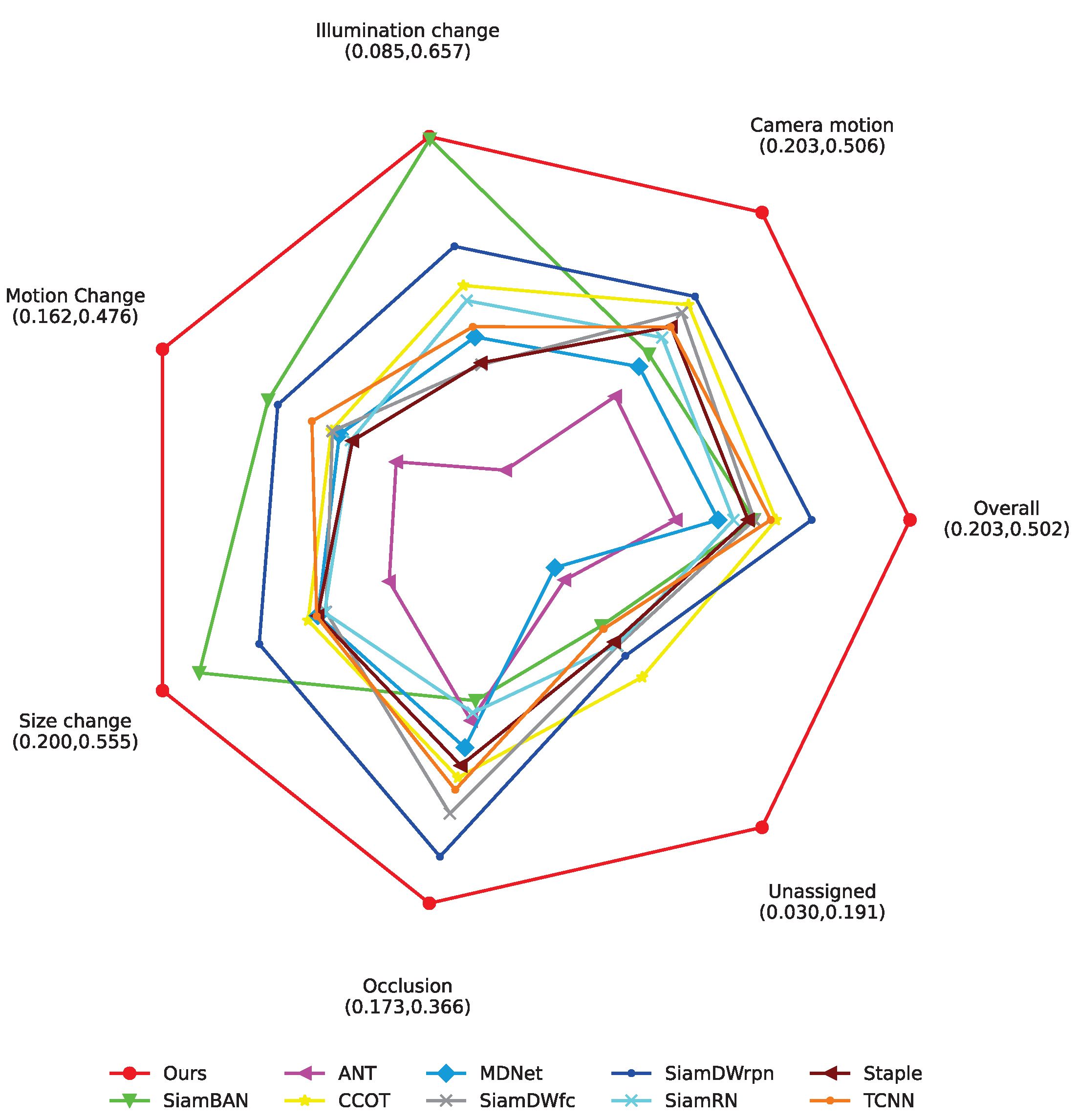

4.3.2. Comparison Using the VOT2016 Benchmark Dataset

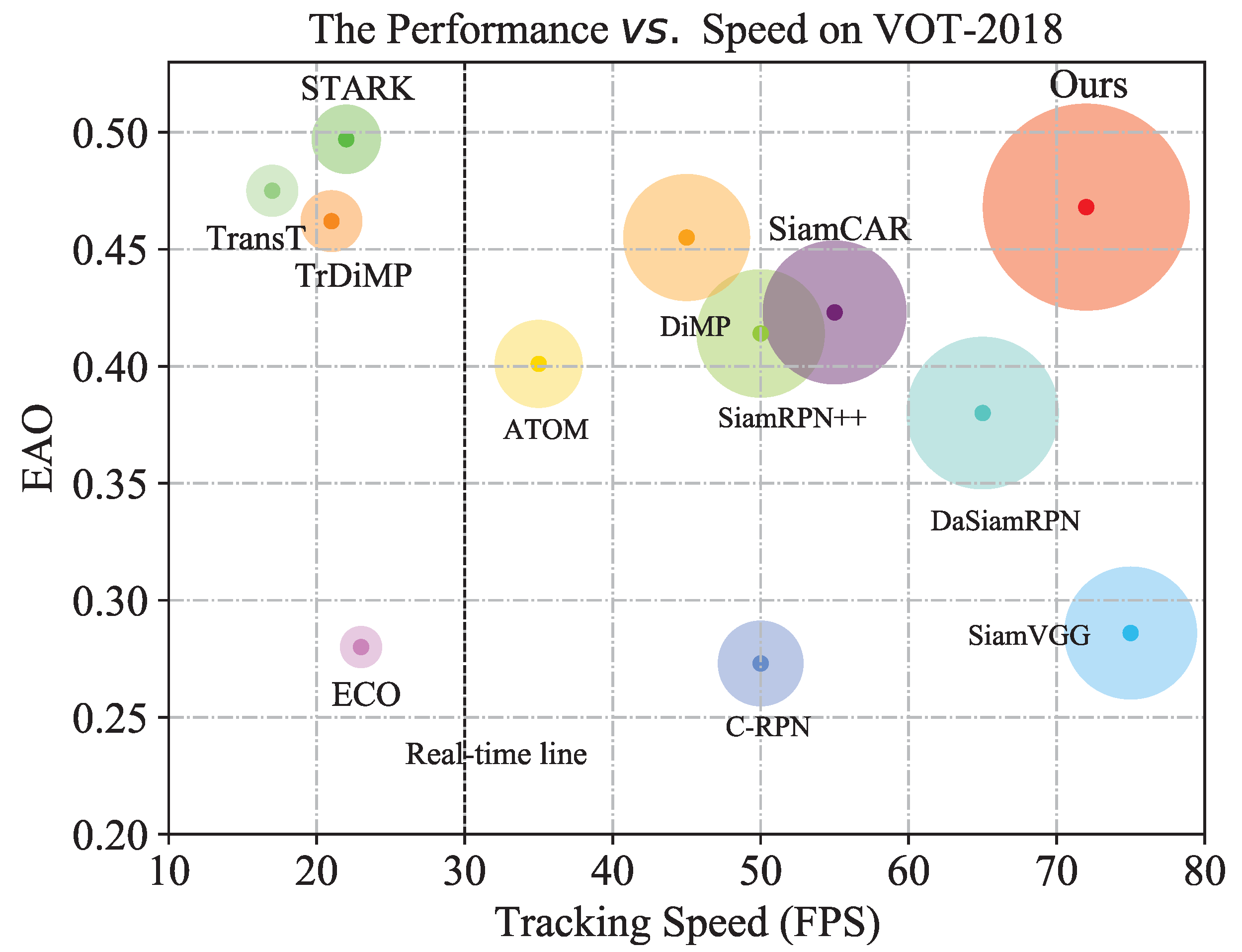

4.3.3. Comparison Using the VOT2018 Benchmark Dataset

4.3.4. Comparison Using the GOT10K Benchmark Dataset

4.3.5. Tracking Efficiency Analysis

4.3.6. Analysis and Conclusion of Comparison

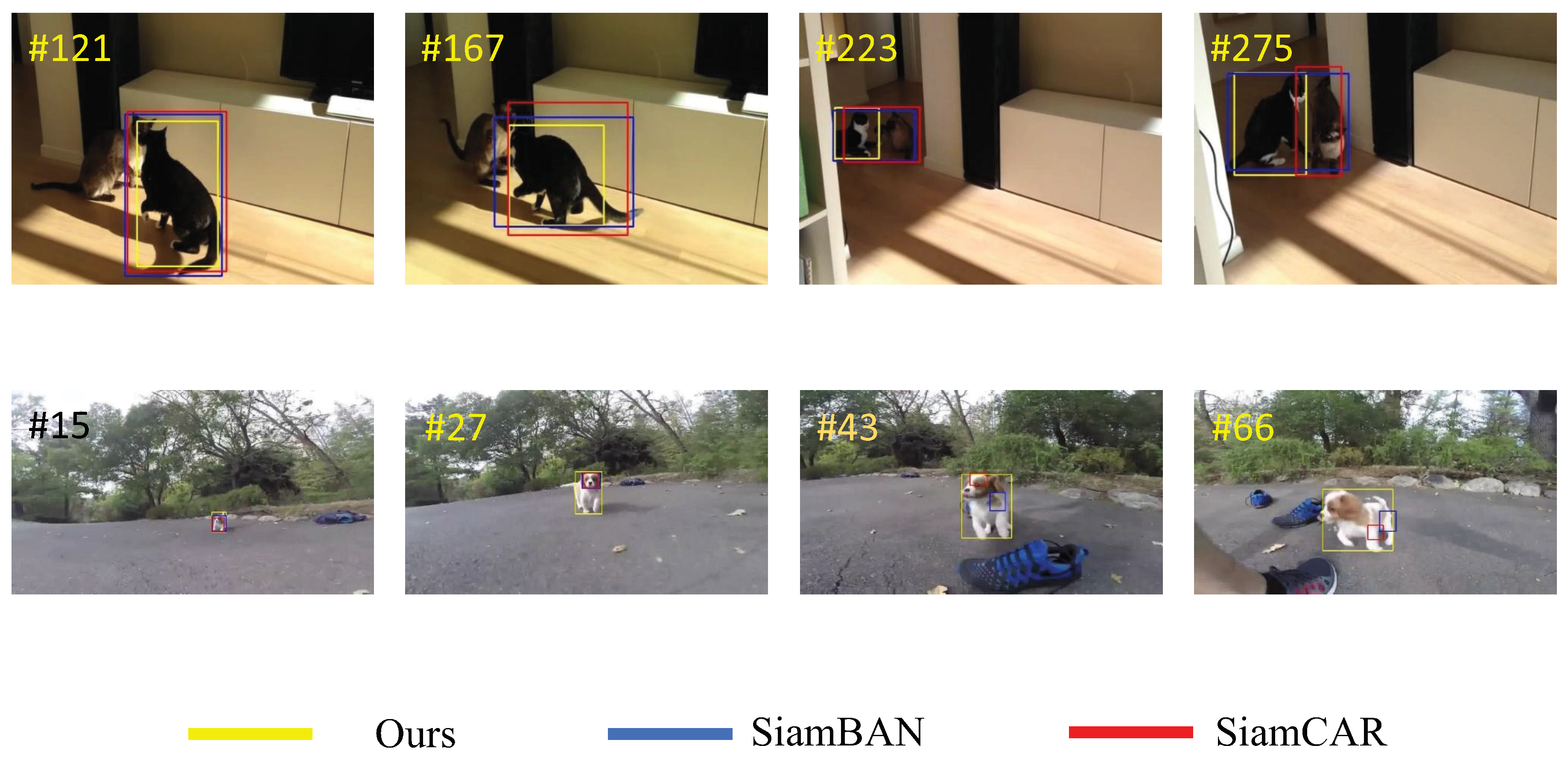

4.4. Demonstration and Comparison of Actual Effects

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jha, S.; Seo, C.; Yang, E. Real time object detection and tracking system for video surveillance system. Multimed. Tools Appl. 2021, 80, 1–16. [Google Scholar] [CrossRef]

- Premachandra, C.; Ueda, S.; Suzuki, Y. Detection and Tracking of Moving Objects at Road Intersections Using a 360-Degree Camera for Driver Assistance and Automated Driving. IEEE Access 2020, 99, 21–56. Available online: https://ieeexplore.ieee.org/document/9146556 (accessed on 10 July 2023). [CrossRef]

- Liu, Y.; Sivaparthipan, C.B.; Shankar, A. Human–Computer Interaction Based Visual Feedback System for Augmentative and Alternative Communication. Int. J. Speech Technol. 2022, 25, 305–314. Available online: https://link.springer.com/article/10.1007/s10772-021-09901-4 (accessed on 10 July 2023).

- Wang, H.; Deng, M.; Zhao, W. A Survey of Single Object Tracking Algorithms Based on Deep Learning. Comput. Syst. Appl. 2022, 31, 40–51. [Google Scholar]

- Meng, W.; Yang, X. A Survey of Object Tracking Algorithms. IEEE/CAA J. Autom. Sin. 2019, 7, 1244–1260. [Google Scholar]

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 850–860. [Google Scholar] [CrossRef]

- Guo, Q.; Feng, W.; Zhou, C.; Huang, R.; Wan, L.; Wang, S. Learning Dynamic Siamese Network for Visual Object Tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1763–1771. Available online: https://ieeexplore.ieee.org/abstract/document/8237458 (accessed on 10 July 2023).

- Wang, Q.; Teng, Z.; Xing, J.; Gao, J.; Hu, W.; Maybank, S. Learning attentions: Residual attentional siamese network for high performance online visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4854–4863. Available online: https://ieeexplore.ieee.org/document/8578608 (accessed on 10 July 2023).

- Li, B.; Yan, J.J.; Wu, W.; Zhu, Z.; Hu, X.L. High performance visual tracking with siamese region proposal network. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8971–8980. Available online: https://ieeexplore.ieee.org/document/8579033 (accessed on 10 July 2023).

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 31, 91–99. [Google Scholar] [CrossRef] [PubMed]

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.Y.; Xing, J.L.; Yan, J.J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291. [Google Scholar] [CrossRef]

- Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1382–1383. Available online: https://ieeexplore.ieee.org/document/8953931 (accessed on 10 July 2023).

- Zhang, Z.; Peng, H. Deeper and wider siamese networks for real-time visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4591–4600. Available online: https://ieeexplore.ieee.org/document/8953458 (accessed on 10 July 2023).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. Available online: https://ieeexplore.ieee.org/document/7780459 (accessed on 10 July 2023).

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 June 2017; pp. 1492–1500. [Google Scholar] [CrossRef]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar] [CrossRef]

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750. [Google Scholar] [CrossRef]

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850. [Google Scholar] [CrossRef]

- Tian, Z.; Chu, X.; Wang, X.; Wei, X.; Shen, C. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636. [Google Scholar] [CrossRef]

- Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. Siamfc++: Towards robust and accurate visual tracking with target estimation guidelines. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12549–12556. [Google Scholar] [CrossRef]

- Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese fully convolutional classification and regression for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6269–6277. Available online: https://ieeexplore.ieee.org/document/9157720 (accessed on 10 July 2023).

- Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese box adaptive network for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6668–6677. Available online: https://ieeexplore.ieee.org/document/9157457 (accessed on 10 July 2023).

- Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-aware anchor-free tracking. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 771–787. [Google Scholar] [CrossRef]

- Wang, J.; Song, L.; Li, Z.; Sun, H.; Sun, J.; Zheng, N. End-to-end object detection with fully convolutional network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15849–15858. [Google Scholar] [CrossRef]

- Marvasti-Zadeh, S.M.; Cheng, L.; Ghanei-Yakhdan, H.; Kasaei, S. Deep learning for visual tracking: A comprehensive survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 3943–3968. Available online: https://ieeexplore.ieee.org/document/9339950 (accessed on 10 July 2023). [CrossRef]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Rao, Y.; Cheng, Y.; Xue, J.; Pu, J.; Wang, Q.; Jin, R.; Wang, Q. FPSiamRPN: Feature pyramid Siamese network with region proposal network for target tracking. IEEE Access 2020, 8, 176158–176169. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755. [Google Scholar] [CrossRef]

- Sonka, M.; Hlavac, V.; Boyle, R. Image Processing, Analysis, and Machine Vision; Cengage Learning: Boston, MA, USA, 2014; Available online: https://link.springer.com/book/10.1007/978-1-4899-3216-7 (accessed on 10 July 2023).

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar] [CrossRef]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; Available online: https://ieeexplore.ieee.org/document/7478072 (accessed on 10 July 2023).

- Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577. Available online: https://ieeexplore.ieee.org/document/8922619 (accessed on 10 July 2023). [CrossRef] [PubMed]

- Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. Lasot: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5374–5383. [Google Scholar] [CrossRef]

- Wu, Y.; Lim, J.; Yang, M. Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848. Available online: https://ieeexplore.ieee.org/document/7001050 (accessed on 10 July 2023). [CrossRef] [PubMed]

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Chi, Z.Z. The visual object tracking VOT2016 challenge results. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 191–217. [Google Scholar]

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Cehovin Zajc, L.; Vojir, T.; Bhat, G.; Lukezic, A.; Eldesokey, A.; et al. The sixth visual object tracking vot2018 challenge results. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Danelljan, M.; Robinson, A.; Shahbaz Khan, F.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 472–488. [Google Scholar] [CrossRef]

- Nam, H.; Baek, M.; Han, B. Modeling and propagating CNNs in a tree structure for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar] [CrossRef]

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary learners for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409. [Google Scholar] [CrossRef]

- Cheng, S.; Zhong, B.; Li, G.; Liu, X.; Tang, Z.; Li, X.; Wang, J. Learning to filter: Siamese relation network for robust tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4421–4431. [Google Scholar]

- Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302. [Google Scholar] [CrossRef]

- Qi, Y.; Zhang, S.; Zhang, W.; Su, L.; Huang, Q.; Yang, M.H. Learning Attribute-Specific Representations for Visual Tracking. In Proceedings of the National Conference on Artificial Intelligence, Wenzhou, China, 26–28 August 2019. [Google Scholar]

- Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135. Available online: https://ieeexplore.ieee.org/document/9578609 (accessed on 10 July 2023).

- Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatiotemporal transformer for visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10448–10457. [Google Scholar] [CrossRef]

- Wang, N.; Zhou, W.; Wang, J.; Li, H. Transformer meets tracker: Exploiting temporal context for robust visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 1571–1580. [Google Scholar] [CrossRef]

- Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191. [Google Scholar] [CrossRef]

- Xing, D.; Evangeliou, N.; Tsoukalas, A.; Tzes, A. Siamese transformer pyramid networks for real-time uav tracking. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2139–2148. [Google Scholar]

- Guo, D.; Shao, Y.; Cui, Y.; Wang, Z.; Zhang, L.; Shen, C. Graph attention tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9543–9552. [Google Scholar] [CrossRef]

| Number | Baseline | Multi-Scale Feature Fusion | Ellipse Label | 3D Max Filtering | EAO | A |
|---|---|---|---|---|---|---|
| 1 | ✓ | | | | 32.9 | 60.1 |
| 2 | ✓ | ✓ | | | | |
| 3 | ✓ | ✓ | ✓ | | | |
| 4 | ✓ | ✓ | ✓ | ✓ | | |

| Tracker Name | Accuracy ↑ | Robustness ↓ | EAO ↑ |
|---|---|---|---|
| Ours | 0.633 | 0.131 | 0.502 |
| SiamDWrpn [13] | 0.574 | 0.266 | 0.376 |
| CCOT [39] | 0.541 | 0.238 | 0.331 |
| TCNN [40] | 0.555 | 0.268 | 0.324 |
| SiamDWfc [13] | 0.535 | 0.303 | 0.303 |
| SiamBAN [22] | 0.632 | 0.396 | 0.303 |
| Staple [41] | 0.547 | 0.378 | 0.295 |
| SiamRN [42] | 0.550 | 0.382 | 0.277 |
| MDNet [43] | 0.542 | 0.337 | 0.257 |
| ANT [44] | 0.483 | 0.513 | 0.203 |
| FPSiamRPN [27] | 0.609 | - | 0.354 |

| Evaluation Metrics | SiamCAR [21] | SiamRPN++ [11] | SiamBAN [22] | DiMP [48] | SiamTPN [49] | Ours |
|---|---|---|---|---|---|---|
| SR0.5 | 67.0 | 61.5 | 64.6 | 71.7 | 68.6 | 72.8 |
| SR0.75 | 41.5 | 32.9 | 40.4 | 49.3 | 44.2 | 59.7 |
| AO | 56.9 | 51.7 | 54.5 | 61.1 | 57.6 | 64.9 |
| Speed (FPS) | 51 | 35 | 45 | 25 | 40 | 60 |

| Trackers | Tracking Speed (FPS) | No. of Parameters (M) | FLOPs (G) | GOT10k (AO) | OTB100 (P) |
|---|---|---|---|---|---|
| SiamBAN [22] | 23.71 | 59.93 | 48.84 | - | 91.0 |
| SiamGAT [50] | 41.99 | 14.23 | 17.28 | 62.7 | 91.6 |
| SiamFC++ [20] | 45.27 | 13.89 | 18.03 | 59.5 | 89.6 |
| SiamRPN++ [11] | 5.17 | 53.95 | 48.92 | 51.7 | 91.5 |
| SiamDW [13] | 52.58 | 2.46 | 12.90 | 42.9 | 89.2 |
| DiMP-50 [48] | 30.67 | 43.10 | 10.35 | 61.1 | 88.8 |
| SiamRN [42] | 6.51 | 56.56 | 116.87 | - | 93.1 |
| Ours | 45.02 | 59.77 | 59.34 | 64.9 | 91.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, J.; Niu, D. Siamese Network Tracker Based on Multi-Scale Feature Fusion. Systems 2023, 11, 434. https://doi.org/10.3390/systems11080434
