A Three-Pronged Verification Approach to Higher-Level Verification Using Graph Data Structures

Abstract

1. Introduction

2. Background and Related Work

2.1. Semantic Web Technologies

2.2. Graph Theory

2.3. Verification Tasks

2.4. Digital Engineering Framework for Integration and Interoperability

3. Methods

3.1. Semantic System Verification Layer

3.2. Extend SSVL to Apply Three-Pronged Verification Approach

3.2.1. Description Logic Reasoning

3.2.2. Closed-World Constraint Analysis

3.2.3. Graph-Based Analysis

3.2.4. Update/Develop Ontologies to Align with Verification Tasks

3.2.5. Update Mapping to Accommodate Ontologies as Needed

3.2.6. Aggregate Analyses to Form Top-Level Verification

3.3. Verify Extended SSVL Approach

- Mapped-Graph Single Failure—This result aims to test each failure path in isolation from beginning to end. Where possible, faults are seeded directly into the tool where they are expected to originate. This tests both recognition of the failure path and the mapping process that translates the model with the seeded fault into ontology-aligned data.

- Generated-Graph Single Failure—This result aims to test that a programmatically generated graph produces results in line with a fully mapped graph. A base case (no faults) generated from the standard mapping procedure can be used as a starting point. Additional graphs can then be generated by seeding the base graph with the identified fault associated with each failure path. These results can then be compared to the Mapped-Graph Single Failure results.

- Generated-Graph Random Failure Selection—This result aims to test random combinations of the seeded faults to ensure that multiple failures can be detected. It uses the base mapped graph and the fault-seeding methods from Step 2, combined with a random number generator, to produce random combinations of the seeded failures. The random number is represented in binary, with the number of digits equal to the number of failure paths identified (a 1 in a given digit denotes that the corresponding failure path should be inserted). Further filtering for mutually exclusive failures may be needed depending on the context. These randomly generated failure graphs can then be run through the verification suite to check that the results match what is expected from the individual faults they contain.
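The random failure selection described in the last item can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the convention that the leftmost digit corresponds to the first failure path, the representation of mutually exclusive failures as index pairs, and the rule that the expected result is the element-wise OR of the per-fault `[DL, SHACL, GRAPH]` signatures are all hypothetical choices made for this example.

```python
import random

def random_failure_mask(n_paths, rng):
    """Return an n_paths-digit binary string; a '1' at index i selects failure path i."""
    return format(rng.getrandbits(n_paths), f"0{n_paths}b")

def selected_paths(mask):
    """Indices of the failure paths switched on in the mask (leftmost digit = index 0)."""
    return [i for i, bit in enumerate(mask) if bit == "1"]

def violates_exclusion(mask, exclusive_pairs):
    """True if the mask selects both members of any mutually exclusive pair."""
    chosen = set(selected_paths(mask))
    return any(a in chosen and b in chosen for a, b in exclusive_pairs)

def expected_result(mask, signatures):
    """Combine per-fault [DL, SHACL, GRAPH] signatures with an element-wise OR (assumed rule)."""
    result = [0, 0, 0]
    for i in selected_paths(mask):
        result = [r | s for r, s in zip(result, signatures[i])]
    return result

def generate_masks(n_paths, count, exclusive_pairs, seed=0):
    """Draw random masks, discarding any that combine mutually exclusive failures."""
    rng = random.Random(seed)
    masks = []
    while len(masks) < count:
        mask = random_failure_mask(n_paths, rng)
        if not violates_exclusion(mask, exclusive_pairs):
            masks.append(mask)
    return masks

# Hypothetical usage: 26 failure paths, with paths 0 and 1 assumed mutually exclusive.
for mask in generate_masks(26, 3, exclusive_pairs=[(0, 1)], seed=42):
    print(mask, selected_paths(mask))
```

Seeding the generator keeps a test campaign reproducible, which matters when a failing combination needs to be regenerated and inspected.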

3.3.1. Application Context—Digital Thread

3.3.2. Definition of System of Analysis in the DEFII Framework Context

3.3.3. Establish Definition of Verification Task—Well-Formed Construction of an SoA

- Allowed Connection—these requirements specifically look at how the SoA is connected. This includes how the system under analysis is connected, how different models are connected to each other, etc. Because connections form the edges of the network, these requirements determine the structure that the graph-based analysis operates on.

- Specification Requirements—these requirements describe things that should exist as part of a well-formed SoA.

- DEFII Requirements—these requirements are specific to an SoA as implemented using the DEFII framework. Generally, they refer to ways that the SysML model should be configured so that the mapping produces the expected ontology-aligned data.

- Graph-Based Requirements—these requirements are specific to a graph-based analysis of a subgraph of the ontology-aligned data.

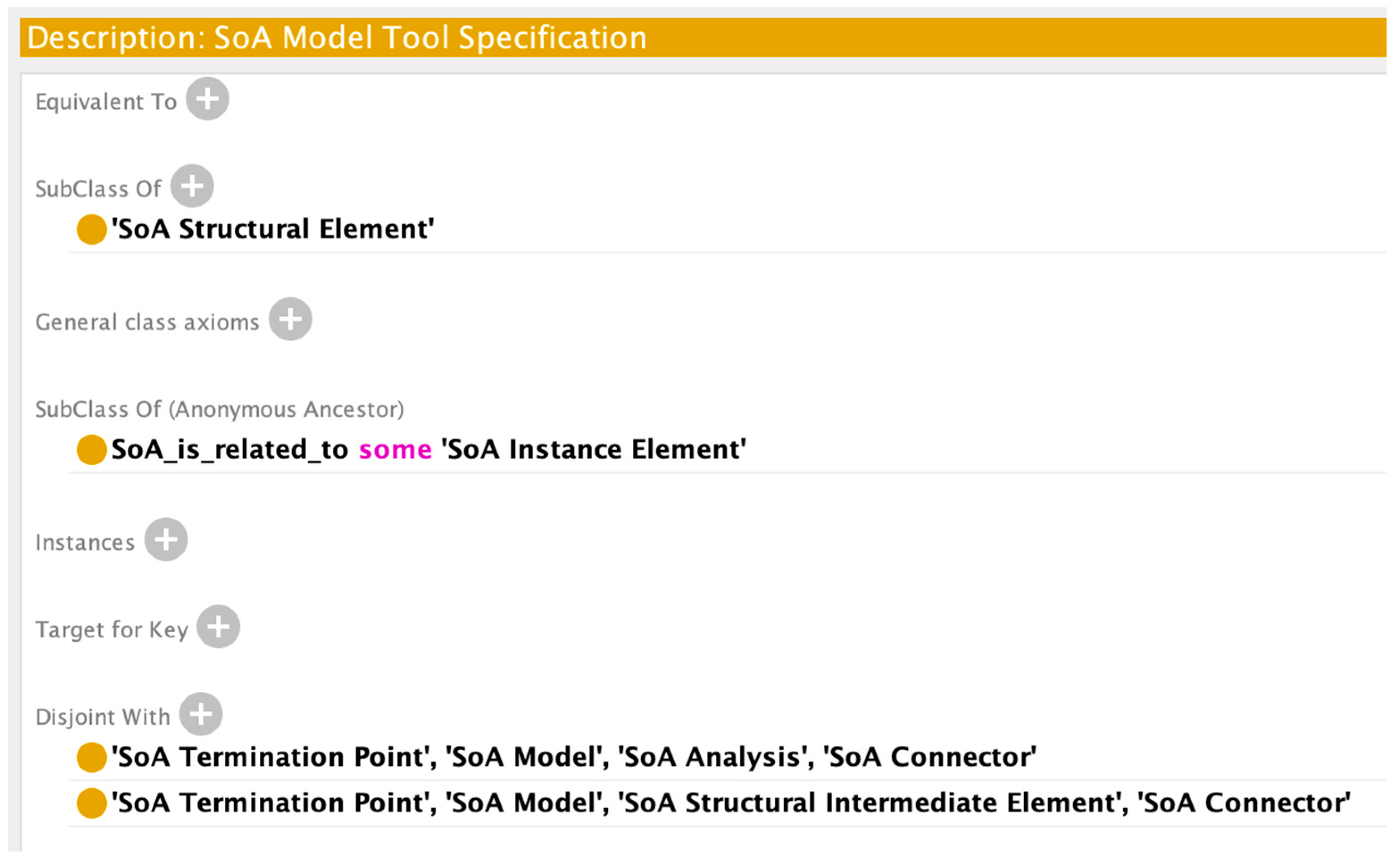

3.3.4. Develop the SoA Ontology

- System Under Analysis Value Properties (SuAVP)—these are base elements that will be manipulated by the SoA. They represent relevant system, mission, environment, etc. parameters. In DEFII, these value properties must be tagged with a class from a top, mid-, or domain ontology.

- Models—these models are the intermediate simulations, analysis models, etc., that are used in the SoA to characterize the system under analysis. The models include inputs and outputs. In DEFII, these models must be tagged to identify them as models in this context.

- Analysis Objectives—these are the objectives of the SoA. They can be included in the mission, system, etc., architecture, or they could be defined at the analysis level as external values that are being analyzed. In DEFII, these objectives must be tagged with a class from a top, mid-, or domain ontology.

- Connectors—these are connections between the relevant items to define the graph of the SoA. They show how the intermediate models connect to each other and how they relate to the system under analysis and the analysis objectives. In SysML, these are represented as binding connectors in a parametric diagram.

- System Blocks—the system architecture uses composition relationships between blocks to show the architectural hierarchy. While this hierarchy is not necessarily displayed explicitly in the parametric diagram, it describes the relation between SuAVPs and the system. In DEFII, these blocks must be tagged with a class from a top, mid-, or domain ontology.

- High-Level Analysis Block—DEFII requires the definition of a high-level “Act of Analysis” to aggregate the models and systems associated with any given analysis. In SysML, this is a block (an example can be seen in Figure 11).
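The element kinds listed above, together with the DEFII tagging rule stated for value properties, analysis objectives, and system blocks, can be illustrated with a small data structure. The sketch below is only an illustration in plain Python: the class and field names, the example element names, and the `ex:` tag strings are hypothetical, and the real approach works on ontology-aligned data rather than on Python objects.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Kind(Enum):
    SUAVP = "System Under Analysis Value Property"
    MODEL = "Model"
    OBJECTIVE = "Analysis Objective"
    CONNECTOR = "Connector"
    SYSTEM_BLOCK = "System Block"
    ANALYSIS_BLOCK = "High-Level Analysis Block"

# Kinds that the list above says must carry a class from a loaded ontology.
NEEDS_ONTOLOGY_TAG = {Kind.SUAVP, Kind.OBJECTIVE, Kind.SYSTEM_BLOCK}

@dataclass(frozen=True)
class Element:
    name: str
    kind: Kind
    ontology_tag: Optional[str] = None  # hypothetical: IRI or prefixed name of the class

def untagged(elements):
    """Return the elements that require an ontology tag but do not have one."""
    return [e for e in elements
            if e.kind in NEEDS_ONTOLOGY_TAG and e.ontology_tag is None]

# Hypothetical example elements; names and tags are placeholders.
elements = [
    Element("Range", Kind.OBJECTIVE, "ex:Range"),
    Element("Arm Length", Kind.SUAVP),            # missing tag
    Element("Fire Simulation", Kind.MODEL),       # models are identified differently
]
for e in untagged(elements):
    print(f"{e.kind.value} '{e.name}' is not tagged with an ontology class")
```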

3.3.5. Update Mapping to Incorporate New Aspects Introduced in Ontology Development

3.3.6. DL Reasoning

3.3.7. Develop SoA Constraint Analysis

3.3.8. Develop Subgraph Generation and Analysis

3.4. Apply SoA Analysis to Catapult Case Study

- Impact Angle;

- Flight Time;

- Circular Error Probable (CEP);

- Range;

- Impact Velocity.

4. Results

4.1. Open-World Reasoning Result

4.2. Closed-World Reasoning Result

4.3. Graph-Based Analysis Result

4.4. Mapped and Generated Single-Test Results

4.5. Generated-Graph Random Failure Test Results

5. Discussion

5.1. Limitations

5.2. Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. System of Analysis Ontology

Appendix B. Generated Test Case Full Results

| Scenario Name | Scenario Description | Expected Result | DL Reasoning | SHACL | GRAPH | Pass |

|---|---|---|---|---|---|---|

| RTC1 | 10101100000010011100111111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC2 | 01110000001010001011011111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC3 | 01100000001111000000110000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC4 | 00100001100110110110001011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC5 | 01111100000110001011000101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC6 | 11101000000100111110101010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC7 | 01101001001010010100010011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC8 | 00111001100000000000011100 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC9 | 00101001001100101101001010 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC10 | 10111010101011011111011110 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC11 | 00011000101011011001110110 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC12 | 01010101001000011100100011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC13 | 11001100100011001111010000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC14 | 00101100000010000111110001 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC15 | 01100111101110001001001001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC16 | 10111010101010000010111111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC17 | 01011100000110011101101111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC18 | 00101011101000011000001000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC19 | 10010100001010000001101100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC20 | 00100000101110110011000001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC21 | 01101011101101000001011011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC22 | 00101011101110010111110100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC23 | 10000110001011111011100010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC24 | 01011110001011101011000011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC25 | 11101111000000010110010011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC26 | 00011000000100011011000011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC27 | 11001011000111100010000011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC28 | 10100100101111001111000011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC29 | 00011111001111010101000100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC30 | 11101001100101001000000010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC31 | 11010010001010000011111101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC32 | 01110001101101000011101100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC33 | 10001101001010011111101000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC34 | 10000101000001101000100011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC35 | 01001000010101011101110101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC36 | 10011001001100011111001001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC37 | 11111101001111011110100001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC38 | 10000000011010100000001001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC39 | 00011010000001011111111100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC40 | 11110100101101010010001110 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC41 | 01101011000010011100111000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC42 | 00110001000000100010100010 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC43 | 00100101100110011111000001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC44 | 00100011001001000111100010 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC45 | 01000001100011111000100000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC46 | 00010000000111010100011100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC47 | 11011101100010111100000010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC48 | 10010000101001001100010100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC49 | 11011010101100001100100111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC50 | 00010100101011111000100011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC51 | 01011000100011010110110011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC52 | 10010000001000000000001101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC53 | 00111000101110101001001000 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC54 | 01010100101110110101001011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC55 | 00100011001110000011001011 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC56 | 10110001000100000000000001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC57 | 01101010000000010001000011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC58 | 11001101000011001100110011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC59 | 01010000101111110100101001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC60 | 11111000101111000111110101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC61 | 10010011001111101010100011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC62 | 11010111001100101001000001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC63 | 01001001100000001110101000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC64 | 10100011001010000001100000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC65 | 11011101001111000001011001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC66 | 10000011100000001111101000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC67 | 11000000011010010001101001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC68 | 11111111000010001110111010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC69 | 00000001001110010001111111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC70 | 01010001000101100110001010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC71 | 01000111100010001110000011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC72 | 00111000010110010001000101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC73 | 10000111001000010011100111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC74 | 11010111000011010001101100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC75 | 11110110000010001100101111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC76 | 11001110100010101000000010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC77 | 11101111100001011111011011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC78 | 11010111000100000111110101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC79 | 10010011000000011101011100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC80 | 10111000000100000010111001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC81 | 11011011000110001001100110 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC82 | 10011100101110011001110001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC83 | 00100100100011011100100111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC84 | 00000000000010011101011111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC85 | 01101000011011001011010110 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC86 | 01011001100100011100111111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC87 | 10110011101010010101001011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC88 | 10000101101000011110010110 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC89 | 10110011000001000111101101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC90 | 01010101100100001111001000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC91 | 01001000000001000001110111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC92 | 11010100100000011010110110 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC93 | 11110111000001110101100000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC94 | 10101000101101010110101010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC95 | 10110100100010000011011100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC96 | 00101001101000110011100010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC97 | 11111110001010011011100011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC98 | 01001000011110111010001000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC99 | 10010001101101001100011111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC100 | 11110110101000001111110111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| # | Requirement | Verification Prong |
|---|---|---|
| 1 | All entities of type A shall have as attribute at most 1 instance of B | DL Reasoning |
| 2 | All entities of type C shall have as attribute at least 1 instance of D | SHACL Constraint |
| 3 | A subgraph showing composition shall be a weakly connected digraph | Graph-Based |
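To make the graph-based row concrete: a digraph is weakly connected when every node can be reached from every other node once edge directions are ignored. The sketch below checks this with a breadth-first search using only the Python standard library. It is an illustrative stand-in, not the tooling used in the paper; the function name and the example block names are hypothetical, every edge endpoint is assumed to appear in `nodes`, and treating an empty graph as not connected is a choice made for this example.

```python
from collections import deque

def is_weakly_connected(nodes, edges):
    """True if the digraph is connected when edge direction is ignored."""
    nodes = set(nodes)
    if not nodes:
        return False  # assumption: an empty subgraph does not satisfy the requirement
    # Build an undirected adjacency view of the directed edges.
    neighbors = {n: set() for n in nodes}
    for src, dst in edges:
        neighbors[src].add(dst)
        neighbors[dst].add(src)
    # Breadth-first search from an arbitrary start node.
    start = next(iter(nodes))
    seen = {start}
    queue = deque([start])
    while queue:
        for nxt in neighbors[queue.popleft()] - seen:
            seen.add(nxt)
            queue.append(nxt)
    return seen == nodes

# Hypothetical composition subgraph: a system block composed of two parts.
blocks = ["System", "Arm", "Base"]
composition = [("System", "Arm"), ("System", "Base")]
print(is_weakly_connected(blocks, composition))
print(is_weakly_connected(blocks + ["Orphan"], composition))
```

A block that is not part of any composition relationship, such as the hypothetical `Orphan` above, is what makes the check fail.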

| # | Requirement | Requirement Category | Assessment Approach | Context Dependent |
|---|---|---|---|---|
| 1 | Termination points shall not connect to like points (input–input, output–output, value property–value property) | Allowed Connection | DL Reasoning | No |
| 2 | Each SoA Connector shall be terminated at exactly two unique points | Allowed Connection | DL Reasoning, SHACL | No |
| 3 | Each SoA Connector shall be connected to a minimum of one <<model>> element | Allowed Connection | SHACL | No |
| 4 | Models shall not connect to themselves | Allowed Connection | DL Reasoning | No |
| 5 | At least one analysis objective shall be present | Specification | SHACL | No |
| 6 | Tool specification shall be included | Specification | SHACL | Yes |
| 7 | All value properties shall be tagged with a value in the loaded ontologies | DEFII | SHACL | No |
| 8 | Models shall be instantiated (there should be a value associated with every entry from the AFD) | DEFII | SHACL | No |
| 9 | Constraint parameters shall be directional (in SysML—have <<DirectedFeature>> stereotype with provided or required applied) | DEFII | SHACL | No |
| 10 | SoA shall form a Directed Acyclic Graph (DAG) when ordered by sequence | Graph-Based | Graph Analysis | Yes |
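Requirement 10 can be illustrated with a standard acyclicity test. The sketch below uses Kahn's algorithm (repeatedly removing nodes with no remaining incoming edges) on a list of directed edges; if some nodes can never be removed, the graph contains a cycle. This is a generic, standard-library illustration rather than the paper's graph analysis code, and the function name and the example model names are hypothetical.

```python
from collections import deque

def is_dag(edges):
    """True if the directed graph given as (source, target) pairs has no cycle."""
    nodes = {n for edge in edges for n in edge}
    successors = {n: [] for n in nodes}
    indegree = {n: 0 for n in nodes}
    for src, dst in edges:
        successors[src].append(dst)
        indegree[dst] += 1
    # Start from nodes with no incoming edges and peel the graph layer by layer.
    ready = deque(n for n in nodes if indegree[n] == 0)
    visited = 0
    while ready:
        node = ready.popleft()
        visited += 1
        for nxt in successors[node]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    # Nodes left unvisited are on, or downstream of, a cycle.
    return visited == len(nodes)

# Hypothetical SoA: a value property feeds model A, whose output feeds model B.
soa_edges = [("SuAVP", "ModelA"), ("ModelA", "ModelB"), ("ModelB", "Objective")]
print(is_dag(soa_edges))
# Feeding the output of model B back into model A creates a cycle.
print(is_dag(soa_edges + [("ModelB", "ModelA")]))
```

A connector from a model back to itself is reported as a cycle by the same check, since that node never reaches an in-degree of zero.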

| C# | Req # | Description |
|---|---|---|
| | 1 | Termination points shall not connect to like points (input–input, output–output, value property–value property) |
| C1 | 1a | Input → Input |
| C2 | 1b | Output → Output |
| C3 | 1c | SoA Objective → SoA Objective |
| C4 | 1d | Value Property of SuA → Value Property of SuA |
| C5 | 1e | Value Property of SuA → SoA Objective |
| | 2 | Each SoA Connector shall be terminated at exactly two unique points |
| C6 | 2a | SoA Connector with 1 terminus |
| C7 | 2b | SoA Connector with 3 termini |
| C8 | 3 | Each SoA Connector shall be connected to a minimum of one <<model>> element |
| | 4 | Models shall not connect to themselves |
| C9 | 4a | Output → Output of same model |
| C10 | 4b | Input → Input of same model |
| C11 | 4c | Output → Input of same model |
| C12 | 5 | At least one objective shall be present and identified |
| C13 | 6 | Tool Spec shall be included for all models |
| | 7 | All blocks and VPs associated with SoA shall be stereotyped with value in ontology |
| C14 | 7a | Block shall be stereotyped |
| C15 | 7b | SuAVP shall be stereotyped |
| C16 | 7c | SoA Objective shall be stereotyped (beyond SoAObjective) |
| | 8 | Models shall be instantiated (there shall be a value associated with every entry from the AFD) |
| C17 | 8a | No Analysis Instantiation |
| C18 | 8b | Instantiation with model missing |
| C19 | 8c | Instantiation with SuAVP missing |
| C20 | 8d | Instantiation with Objective missing |
| C21 | 8e | Instantiation with Tool Spec Missing |
| | 9 | Constraint Parameters shall be directional (in SysML—have <<DirectedFeature>> stereotype with provided or required applied) |
| C22 | 9a | No DirectedFeature |
| C23 | 9b | DirectedFeature without direction specified |
| C24 | 9c | DirectedFeature with providedRequired specified |
| | 10 | SoA shall form a Directed Acyclic Graph (DAG) when ordered by sequence |
| C25 | 10a | Output of model to input of previous model |
| C26 | 10b | Output of model to Value Property to create a cycle |

| Scenario Name | Scenario Description | DL Reasoning | SHACL | GRAPH | Pellet Message |
|---|---|---|---|---|---|
| C10 | Gravity to Air Temp Inputs on Fire Simulation Model | 1 | 0 | 0 | 1) Functional SoA_terminated_to_target 09de70c2-0dfd-4599-8ebe-1dfaab9b7d61_SoA SoA_terminated_to_target 69ec40fc-3edd-4635-8e56-f21431071a86_SoA 09de70c2-0dfd-4599-8ebe-1dfaab9b7d61_SoA SoA_terminated_to_target a4f578a8-ce36-4417-ad15-81f982ebf855_SoA |

| Scenario Name | Scenario Description | DL Reasoning | SHACL | GRAPH | SHACL Messages |
|---|---|---|---|---|---|
| C16 | Removed CircularErrorProbability (CEP) from objective | 0 | 1 | 0 | Violation: Requirement 7—Value Property: ‘CEP’ is not tagged with a loaded ontology term |

| Scenario Name | Mapped: DL Reasoning | Mapped: SHACL | Mapped: GRAPH | Generated: DL Reasoning | Generated: SHACL | Generated: GRAPH |
|---|---|---|---|---|---|---|
| C0 | 0 | 0 | 0 | | | |
| C1 | 1 | 0 | 1 | 1 | 0 | 0 |
| C2 | 1 | 0 | 1 | 1 | 0 | 0 |
| C3 | 1 | 1 | 0 | | | |
| C4 | 1 | 1 | 0 | | | |
| C5 | 1 | 1 | 0 | | | |
| C6 | 0 | 1 | 0 | 0 | 1 | 0 |
| C7 | 1 | 0 | 0 | | | |
| C8 | 0 | 1 | 0 | 0 | 1 | 0 |
| C9 | 1 | 0 | 0 | 1 | 0 | 0 |
| C10 | 1 | 0 | 0 | 1 | 0 | 0 |
| C11 | 1 | 0 | 1 | 1 | 0 | 1 |
| C12 | 0 | 1 | 0 | 0 | 1 | 0 |
| C13 | 0 | 1 | 0 | 0 | 1 | 0 |
| C14 | 0 | 1 | 0 | 0 | 1 | 0 |
| C15 | 0 | 1 | 0 | 0 | 1 | 0 |
| C16 | 0 | 1 | 0 | 0 | 1 | 0 |
| C17 | 0 | 1 | 0 | 0 | 1 | 0 |
| C18 | 0 | 1 | 0 | 0 | 1 | 0 |
| C19 | 0 | 1 | 0 | 0 | 1 | 0 |
| C20 | 0 | 1 | 0 | 0 | 1 | 0 |
| C21 | 0 | 1 | 0 | 0 | 1 | 0 |
| C22 | 0 | 1 | 0 | 0 | 1 | 0 |
| C23 | 0 | 1 | 0 | 0 | 1 | 0 |
| C24 | 0 | 1 | 0 | 0 | 1 | 0 |
| C25 | 0 | 0 | 1 | 0 | 0 | 1 |
| C26 | 0 | 0 | 1 | 0 | 0 | 1 |

| Scenario Name | Scenario Description | Expected Result | DL Reasoning | SHACL | GRAPH | Pass |

|---|---|---|---|---|---|---|

| RTC1 | 11100010000110101000001001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC2 | 11100101000110011111110100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC3 | 01101111000110001000010010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC4 | 00100111000000000000010110 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC5 | 01001000100101011001101001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC6 | 11100111001001000010101000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC7 | 01001011000000001110111000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC8 | 00110101001011000010100001 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC9 | 11010101100011111110001000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC10 | 10010000001000010001100111 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| … | … | … | … | … | … | … |

| RTC91 | 00100101001011000110110101 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC92 | 00100110101010101101000011 | [1, 1, 0] | 1 | 1 | 0 | TRUE |

| RTC93 | 01000110000001100000001001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC94 | 11000111001101001101011101 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC95 | 01100001001010101010000011 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC96 | 01000001100010111010000010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC97 | 10011010101111000000110100 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC98 | 10110000010101101011100001 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC99 | 11011010000110100010000010 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

| RTC100 | 11110001100110010110101000 | [1, 1, 1] | 1 | 1 | 1 | TRUE |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dunbar, D.; Hagedorn, T.; Blackburn, M.; Verma, D. A Three-Pronged Verification Approach to Higher-Level Verification Using Graph Data Structures. Systems 2024, 12, 27. https://doi.org/10.3390/systems12010027