A Systematic Review on Extended Reality-Mediated Multi-User Social Engagement

Abstract

1. Introduction

- RQ1. What is the mechanism of XR for mediating MSE?

  - RQ1.1. What are the cognitive–behavioral mechanisms of MSE and their manifestations?

  - RQ1.2. What are the technology-mediated mechanisms of XR and their manifestations?

  - RQ1.3. What are the strongly correlated SFs that influence MSE?

- RQ2. How has the effectiveness of XR-mediated MSE been proven?

  - RQ2.1. What evaluation settings have been established for XR-mediated MSE?

  - RQ2.2. What is the performance of these evaluations?

- Theoretical implications. By addressing RQ1.1, RQ1.2, and RQ1.3, this study proposes a novel analytical framework for XR-mediated MSE. This framework integrates comprehensive elements supported by interdisciplinary theories and is validated by sample data, providing an insightful foundation for future development. The framework highlights the critical role of engagement as a prerequisite for social processes and outcomes. It urges researchers to revisit and update their theoretical perspectives to thoroughly examine future empirical studies on XR-mediated MSE;

- Practical implications. By answering RQ2.1 and RQ2.2, this study analyzes validation methods and the current performance of XR-mediated MSE. A case study is also provided in Section 5.2.3 Significant Mediated Relationships, further explaining strongly correlated SFs and their mediation mechanisms to MSE. These insights emphasize both the potential advantages and limitations of current empirical research in the field. The findings offer a comprehensive blueprint for effective design and implementation for practitioners in both industry and academia, including design researchers, metaverse designers, and developers. For example, the results concerning SF-mediated mechanisms can guide the metaverse product pipeline by supporting knowledge graphs of cross-spatiotemporal or co-present activities;

- Societal implications. Although the metaverse is not yet fully ubiquitous, this study explores the significance of XR as an emerging socio-technical system for future human social activities through comprehensive answers to RQ1 and RQ2. The findings are broadly applicable, potentially attracting interdisciplinary and cross-sectoral interest. This study encourages further reflection on the evolving relationship between people and the metaverse, supporting innovative perspectives for transforming traditional industries (e.g., tourism to cloud tourism).

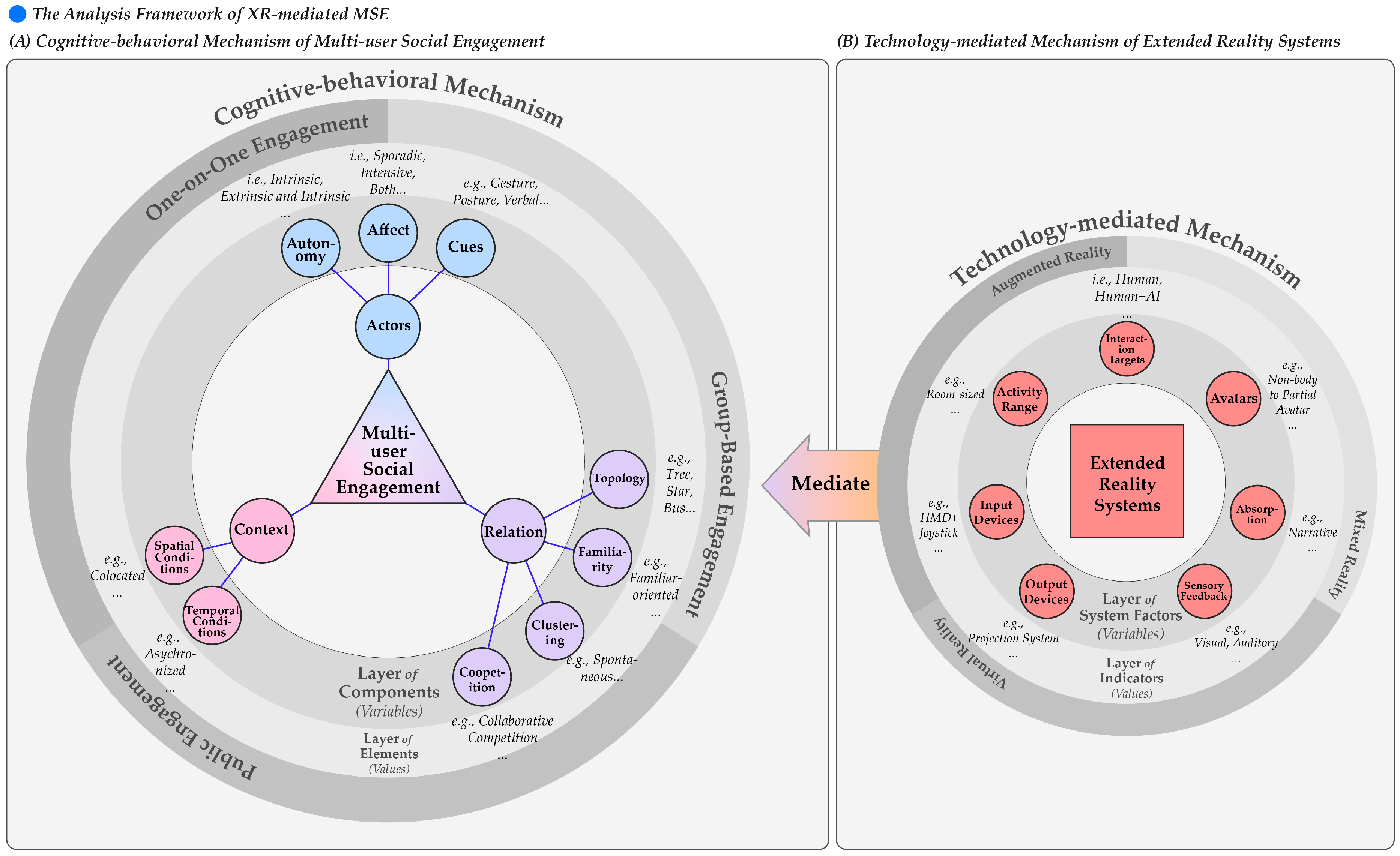

2. Concepts and Analysis Framework

2.1. Cognitive–Behavioral Mechanism of Multi-User Social Engagement (MSE)

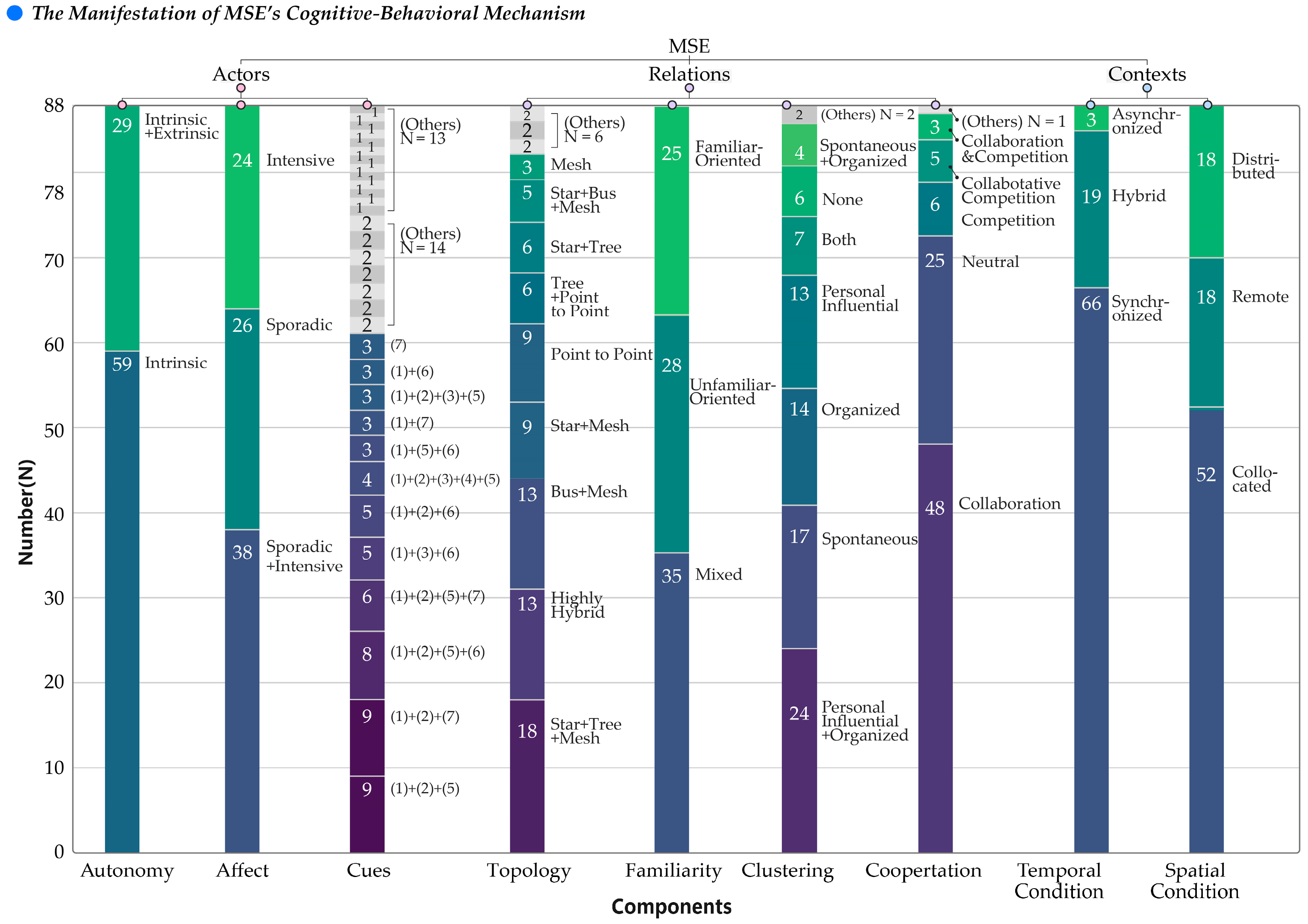

2.1.1. Actors: Autonomy, Affect, and Cues

- (1) Autonomy. Rooted in Self-Determination Theory (SDT) [44], autonomy in MSE arises from the interplay of personal agency and external influences [45]. SDT characterizes motivation as a spectrum, ranging from amotivation through extrinsic motivation to intrinsic motivation [46]. This spectrum represents various motivational types for MSE, from pure intrinsic motivation, driven by personal enjoyment, to a blend of extrinsic and intrinsic motivations, where external rewards enhance personal interest. Notably, solely extrinsic motivation is uncommon in MSE contexts;

- (2) Affect. Affect encompasses a wide array of emotions sustained over time beyond transient reactions. Myers categorizes affect into three dimensions: physiological arousal, expressive behaviors, and conscious experience, with arousal as the core element [47]. While tools such as the Self-Assessment Manikin (SAM) represent arousal levels [48], they may not capture the full spectrum of affective states in MSE. These states can be intensive, sporadic, or a mix of both;

- (3) Cues. Essential for MSE, cues transmit information, intentions, and emotions, and are traditionally classified as verbal or non-verbal [49,50]. Verbal cues are essential in the interaction between human users and humanoid interfaces. Non-verbal cues are equally important, which becomes especially evident when non-verbal engagement behaviors conflict with verbal behaviors [51]; such conflict can make communication difficult to maintain. However, this binary classification may lack the granularity needed for XR systems. Incorporating insights from studies on Embodied Agents [52], Task Coordination and Communication [53], and Conversational Agents [54], an expanded classification of cues has been developed. This classification includes verbal communication, facial expressions, gestures, touch, posture, object control, and material sharing.

2.1.2. Relations: Topology, Familiarity, Clustering, and Coopetition

- (1) Topology. Analyzed using Graph Theory, topology describes the spatial structure of social relations, presenting society as an undirected network to reveal various social configurations [57]. Understanding the representational significance of topology in social relationships is crucial. For example, in a tree topology, a guidance agent acts as scaffolding. It provides instructions and emotional support to help learners complete tasks they cannot manage independently. This process establishes a tree-like relationship between the agent and the learners [58]. This study synthesizes Local Area Network (LAN) Topologies [59] to outline basic types: Point to Point: One-on-one interactions, e.g., private conversations; Star: Central communication hub, e.g., lectures and concerts; Mesh: Complex interconnections, e.g., research collaborations; Bus: Linear route (actors’ cognitive–behavioral focus) with their immediate entry/exit, e.g., exhibitions; Tree: Hierarchical structures, e.g., leadership or teacher–student relationships; and Highly Hybrid: Combination of three or more topologies;

- (2) Familiarity. This represents the depth of relationships, or social connectivity, similar to the core measurement content of the Personal Acquaintance Measure (PAM) [60]. Familiarity captures nuances of relationship duration, frequency, and openness to disclosing personal information [61]. Categories include Familiar-oriented: Stable connections; Unfamiliar-oriented: No prior relationship; and Mixed: Combination of both;

- (3) Clustering. Drawing from the Two-step Flow of Communication Theory, opinion leaders, serving as key nodes, facilitate greater and more efficient clustering of information and promote two-step communication, especially in large events [62]. This influences social opinions and behaviors and enhances network vitality. Clustering describes group formations with dense internal and sparse external ties. Origins of clustering include Spontaneous: Shared interests; Organized: Organizational affiliations; Personal influential: Influence from opinion leaders; Combination: Mix of types; All: Inclusive of all types; and None: Subtle manifestation;

- (4) Coopetition. According to Game Theory [63] and Social Interdependence Theory [64], actors exhibit both self-interested and altruistic behaviors, characterized as coopetition, a fusion of cooperation and competition [63,65]. This encompasses cognitive processes (e.g., team cognition), motivational processes (e.g., cohesion), and behavioral processes (e.g., coordination), contributing to performance and effectiveness [66]. Forms include Competition, Collaboration, Collaborative Competition, Competitive Collaboration, Neutral, and Combination [67].
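The basic topology types listed above can be illustrated as undirected edge sets over actors numbered 0 to n − 1. This is a minimal sketch rather than the coding scheme used in this review: the function names are hypothetical, and the mappings of Mesh to a fully connected graph, Bus to a simple path, and Tree to a balanced binary hierarchy are simplifying assumptions.

```python
from itertools import combinations


def point_to_point():
    """One-on-one interaction: a single tie between two actors."""
    return {(0, 1)}


def star(n):
    """Actor 0 is the central hub (e.g., a lecturer); all others link to it."""
    return {(0, i) for i in range(1, n)}


def mesh(n):
    """Assumption: a full mesh, i.e., every pair of actors is connected."""
    return set(combinations(range(n), 2))


def bus(n):
    """Assumption: a linear route modelled as a path 0-1-2-...-(n-1)."""
    return {(i, i + 1) for i in range(n - 1)}


def tree(n, branching=2):
    """Hierarchy: actor i > 0 is tied to its parent (i - 1) // branching."""
    return {((i - 1) // branching, i) for i in range(1, n)}


def degrees(edges, n):
    """Number of ties per actor in an undirected edge set."""
    deg = [0] * n
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg


print(degrees(star(5), 5))  # [4, 1, 1, 1, 1]
```

The degree profile separates the types: a star concentrates ties on one hub, a path-like bus gives interior actors exactly two ties, and a full mesh gives every actor n − 1 ties.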

2.1.3. Contexts: Spatial and Temporal Conditions

- (1) Spatial conditions. Abbott proposes that this is a specifically Chicago School insight: ‘One cannot understand social life without understanding the arrangements of particular social actors in particular social times and places… Social facts are located’ [75,76]. The sub-classification of spatial conditions [8], based on MSE situations, effectively represents these contextual attributes. Spatial conditions are categorized into Collocated, Remote, and Mixed. Collocated settings involve direct, co-present communication, allowing for immediate feedback and richer non-verbal communication. Remote settings involve physical separation and rely on technology, which introduces different dynamics;

- (2) Temporal conditions. Social time is multi-dimensional, irregular, and multidirectional, and follows a rhythm specified by human activity [77]. Emirbayer and Mische define agency as ‘temporally constructed engagement by actors’ [72]. Temporal conditions are classified as Synchronized (real-time interaction), Asynchronized (delayed interaction), and Hybrid (a mix of both). Analyzing these temporal conditions helps us understand the dynamics and effectiveness of interactions [77].

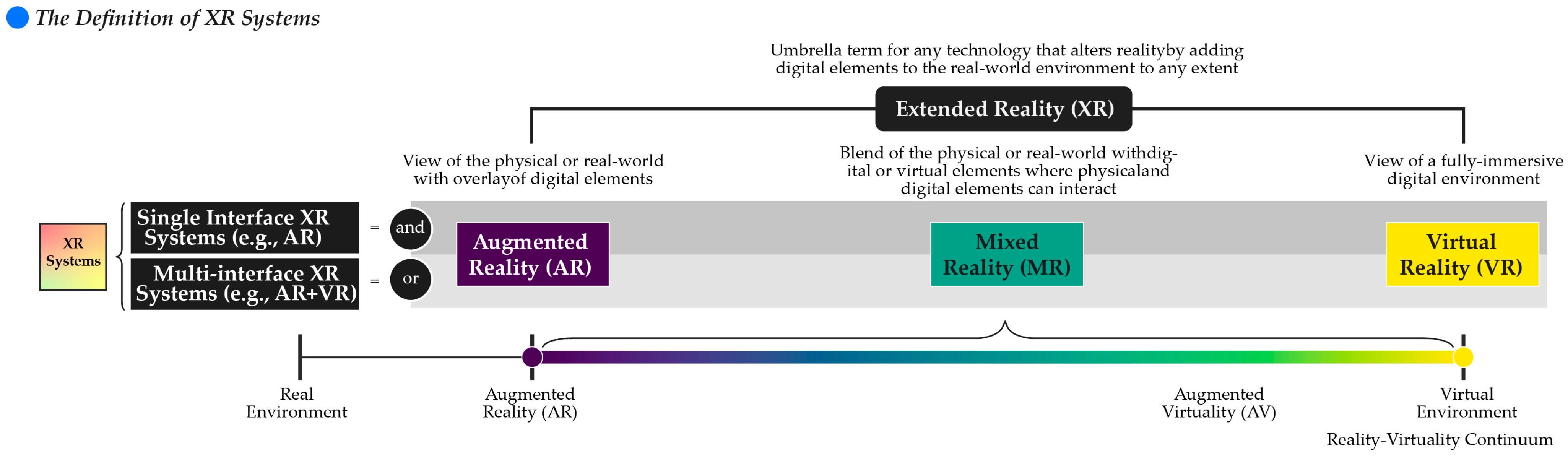

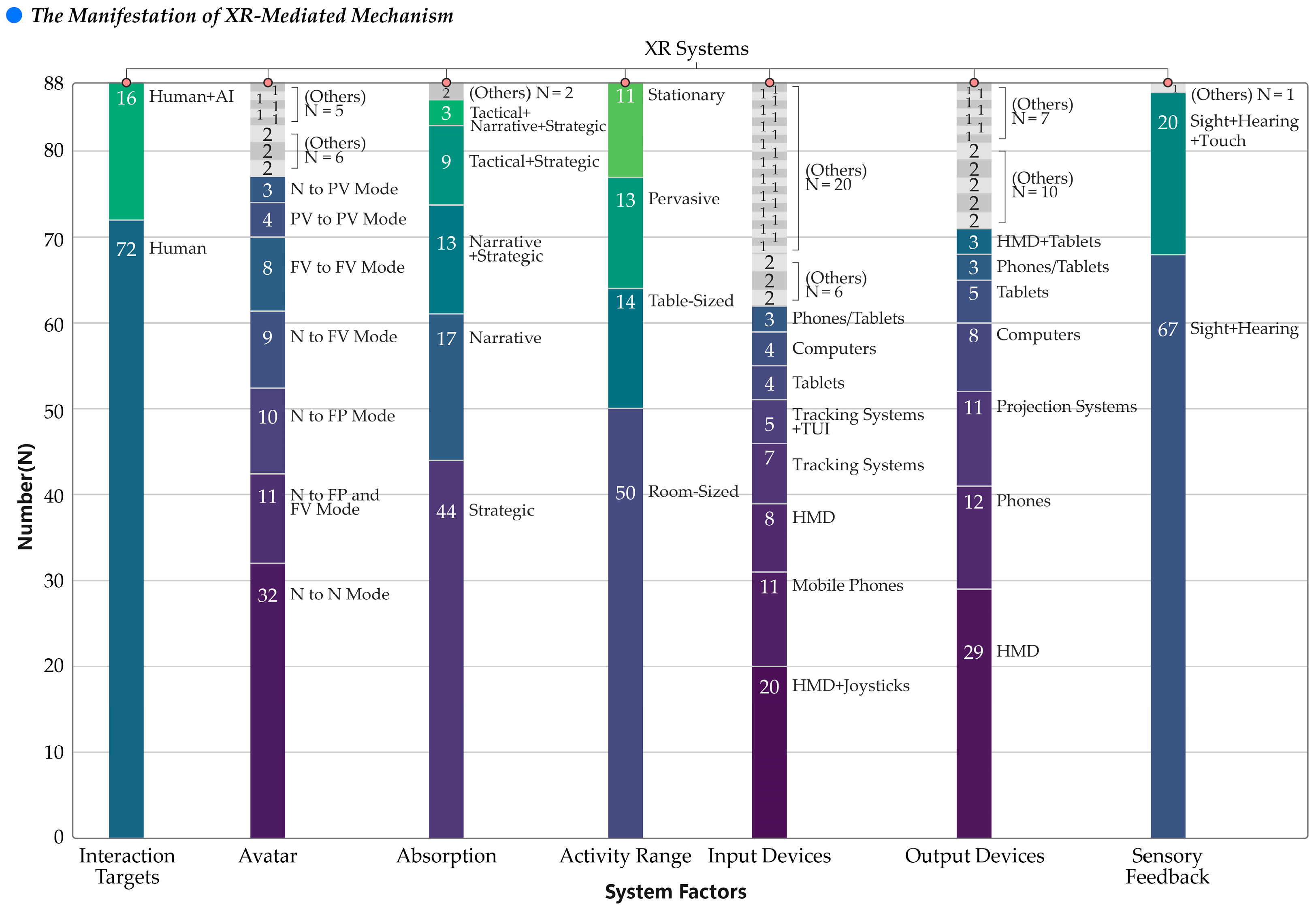

2.2. Extended Reality (XR) Systems

2.2.1. Interaction Targets

2.2.2. Avatars

2.2.3. Activity Range

2.2.4. Absorption

2.2.5. Input and Output Devices

2.2.6. Sensory Feedback

2.3. Overview of the XR-Mediated MSE Analysis Framework

3. Methodology

4. Results

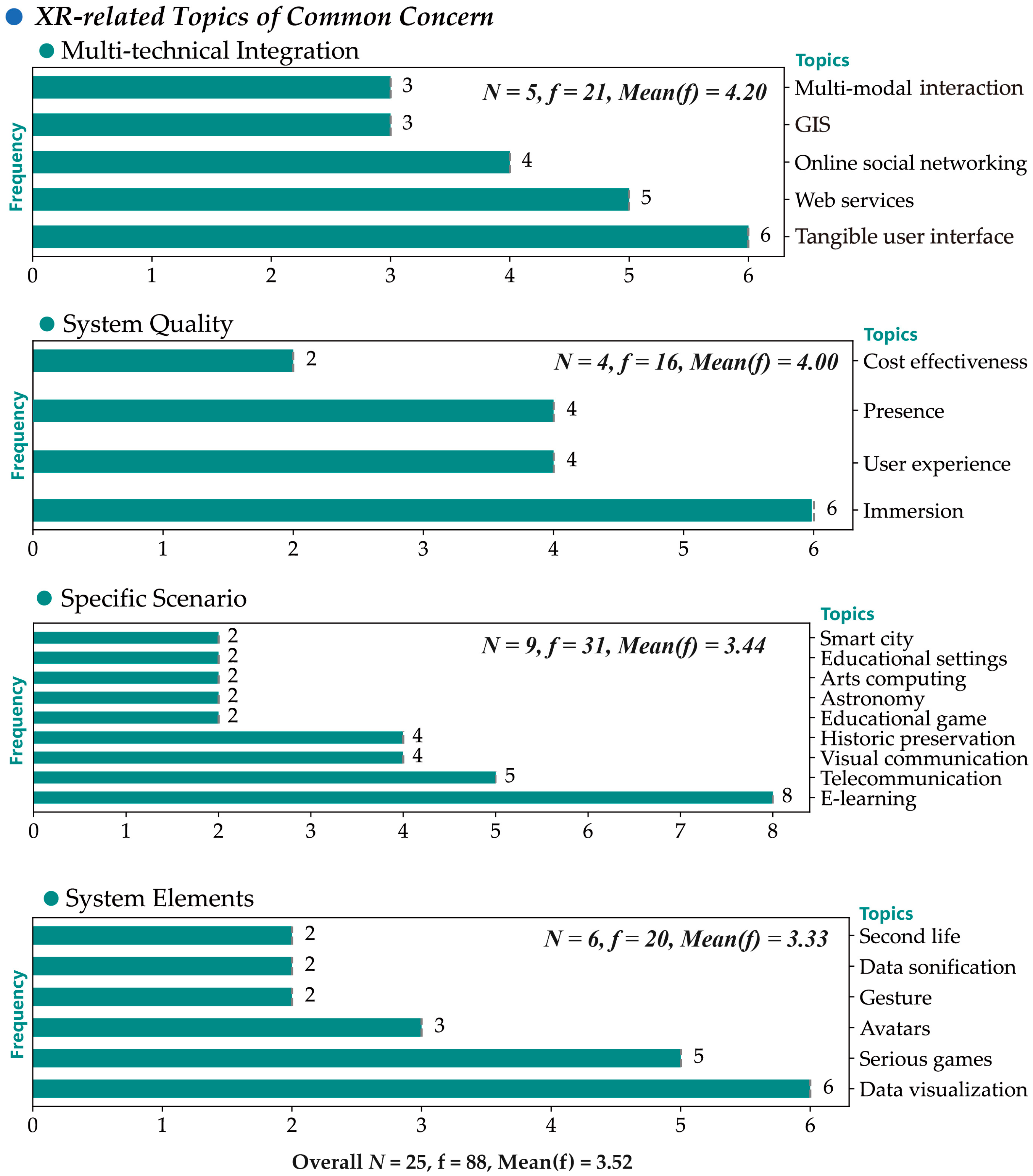

4.1. Bibliometric Information

4.1.1. Publication Trends and Topics

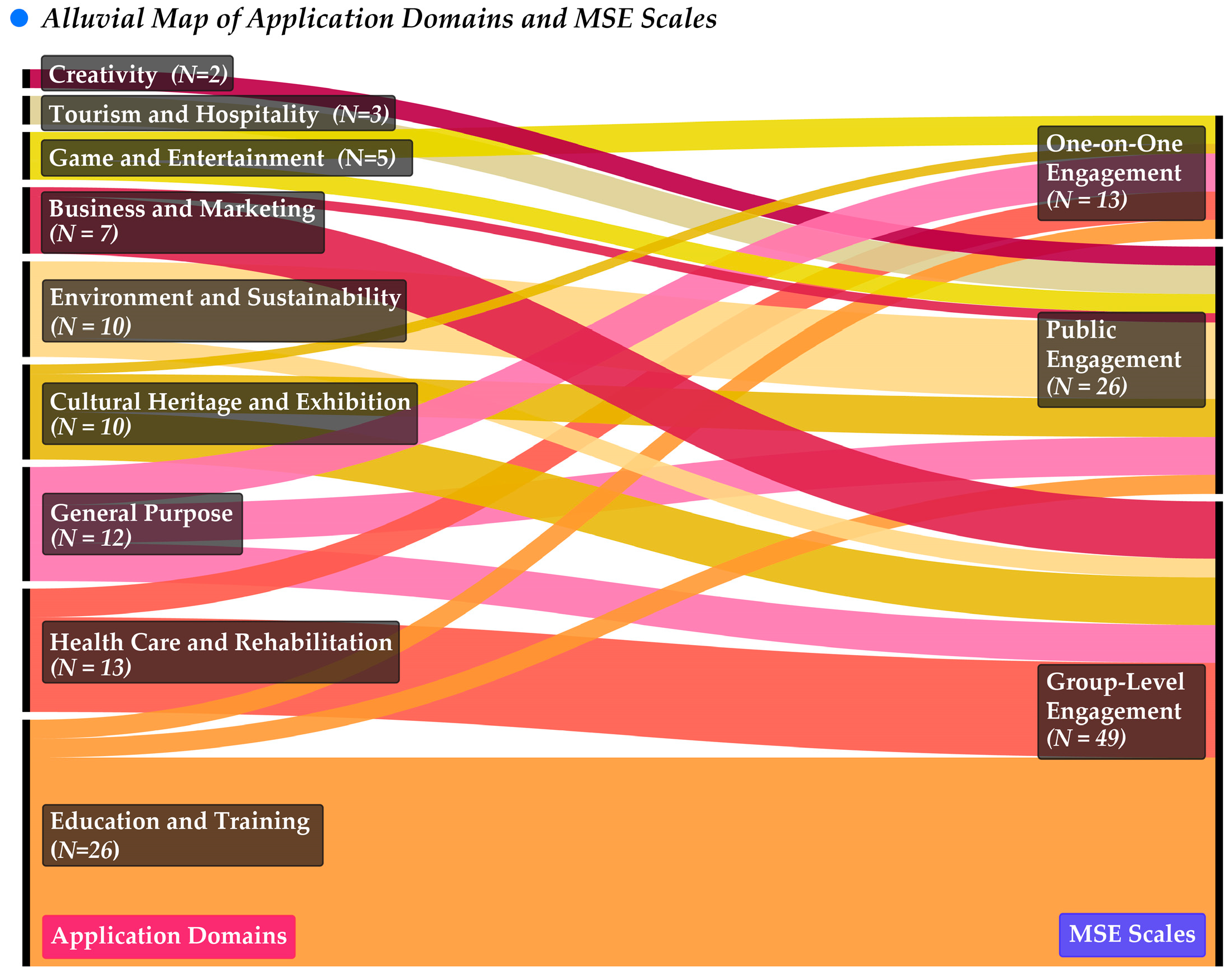

4.1.2. Application Domains, MSE Scales and XR Types

4.2. The Mechanism of XR-Mediated MSE

4.2.1. Manifestation of Cognitive–Behavioral Mechanism

4.2.2. Manifestation of the Technology-Mediated Mechanism

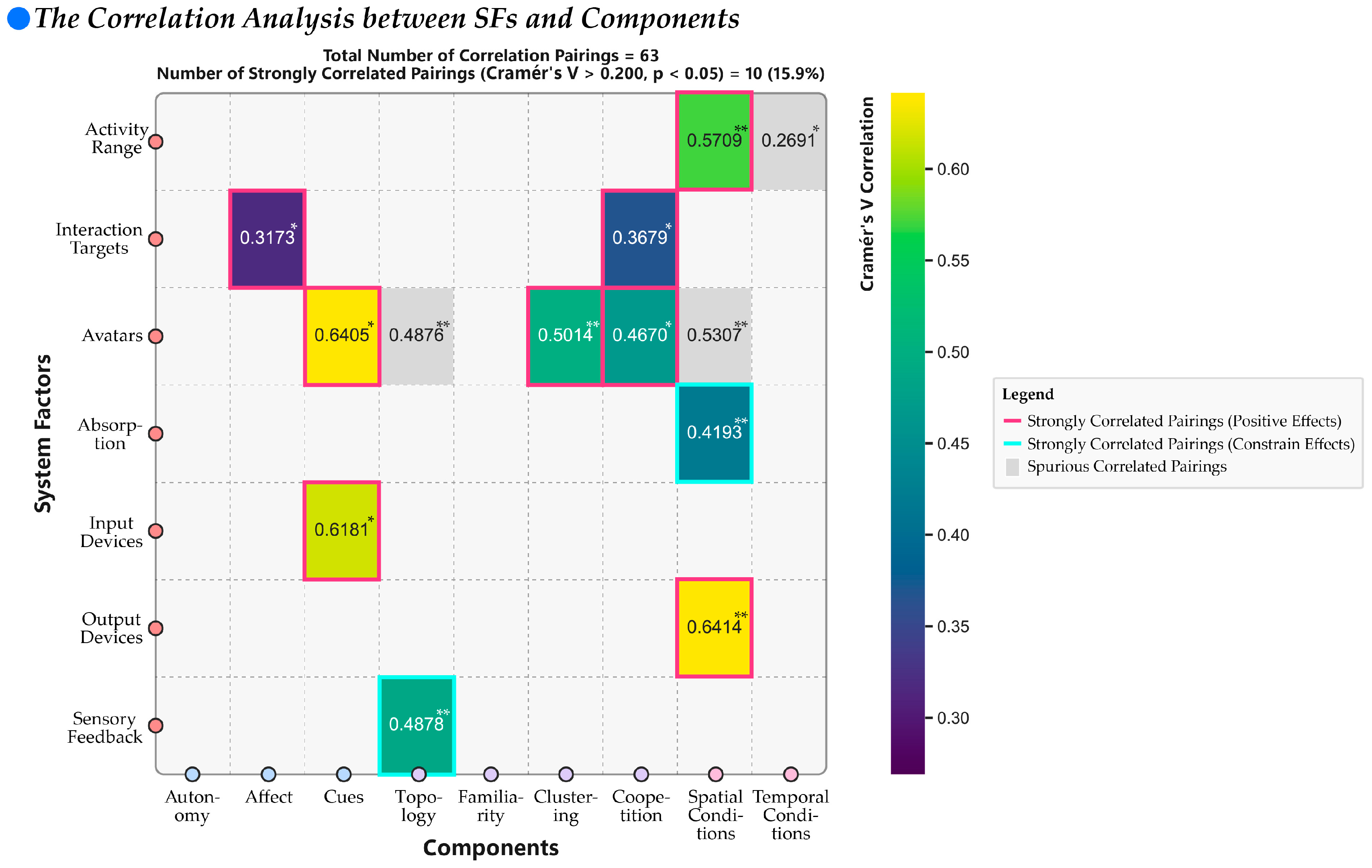

4.2.3. Strongly Correlated SFs for Mediating MSE

- (1) Output devices as strongly correlated SF for spatial conditions. Output devices mediate spatial conditions based on significant correlations (Cramér’s V = 0.641, χ²(40) = 72.403, p = 0.001). For instance, projection + speaker setups are exclusive to collocated conditions, creating dense, immersive communication environments for attendees. Meanwhile, Samayoa et al.’s projection system [123] also enhanced social presence in remote conditions. HMD and phones are more associated with collocated conditions, while computers are linked with remote conditions. Overall, corresponding output devices mediate spatial conditions; further case analyses are provided in Section 5.2.3 Output Devices → Spatial Conditions;

- (2) Input devices as strongly correlated SF for cues. Input devices mediate cues with significant correlations (Cramér’s V = 0.618, χ²(930) = 1008.478, p = 0.037). Examples include HMD+Joystick and various cue expression paradigms, tracking systems involving postures, and the amplification of body communication in MSE. TUI introduces object control cues, showing their potential as communication tools, as seen in cases [58,129,146,149,152,156,171]. Further enhanced modes are provided in Section 5.2.3 Input Devices → Cues;

- (3) Avatar as strongly correlated SF for cues, clustering, and coopetition. The [Avatar → Cues] pairing showed a highly significant correlation (Cramér’s V = 0.641, χ²(434) = 505.415, p = 0.010). Further case analyses are provided in Section 5.2.3 Avatars → Cues. The [Avatar → Clustering] pairing also showed a significant correlation (Cramér’s V = 0.501, χ²(84) = 123.675, p = 0.003), with avatars facilitating organized and personal clustering. This suggests that avatars may facilitate personal influential clustering by extending real-world identities. They can also provide anonymity or enable identity reshaping in massive social settings, granting more freedom for social expression, as seen in the SocialVR application [112] and VRdeo platform [181]. Further case analyses are provided in Section 5.2.3 Avatars → Clustering. For [Avatar → Coopetition], a significant moderate correlation was found (Cramér’s V = 0.467, χ²(70) = 95.964, p = 0.021), with numerous tags binding its presence to collaboration. This suggests its potential in supporting virtual collaboration, similar to non-avatar modes, as in cases [46,51,177,181,189]. Further case analyses are provided in Section 5.2.3 Avatars → Coopetition. Although [Avatar → Topology] and [Avatar → Spatial conditions] also showed moderate significant correlations (Cramér’s V = 0.488, χ²(154) = 230.184, p < 0.001; Cramér’s V = 0.531, χ²(28) = 49.563, p = 0.007), these correlations are based on fragmented, low-co-occurrence tag combinations, indicating a pseudo-correlation. These tags’ low-frequency co-occurrences do not support causality and contradict logical expectations. Therefore, avatars are not considered to mediate topology and spatial conditions;

- (4) Activity range as strongly correlated SF for spatial conditions. Activity range mediates spatial conditions based on significant correlations. The pairing [Activity range → Spatial Conditions] showed a significant moderate correlation (Cramér’s V = 0.571, χ²(6) = 57.358, p < 0.001). Room-sized and table-sized ranges are more suited to collocated settings, pervasive ranges to hybrid settings, and stationary ranges to remote settings. However, designing static activity ranges for spatial conditions may limit designers’ creativity. Further analysis is provided in Section 5.2.3 Activity Range → Spatial Conditions. Activity range and temporal condition also showed significant moderate correlations (Cramér’s V = 0.269, χ²(6) = 12.742, p = 0.047). However, this only indicates a co-occurrence relationship, not causality. The correlation and significance levels are primarily due to synchronized interactions in room-sized ranges in many samples. Room-sized activity ranges inherently require less asynchronous and mixed communication, favoring immediate and cohesive interactions. For example, in the case of XRPublicSpectator [190], users in a room-sized range watched presenters engage in a high-interaction-density card battle game, negating the need for asynchronous or mixed communication channels. Other representative cases include [125,180,183]. Thus, activity range cannot be considered a strongly correlated SF for mediating temporal conditions;

- (5) Interaction targets as strongly correlated SF for affect and coopetition. Interaction targets mediate both affect and coopetition based on significant correlations. A moderately significant correlation was found between interaction targets and affect (Cramér’s V = 0.317, χ²(2) = 8.861, p = 0.012). Further case analyses are provided in Section 5.2.3 Interaction Targets → Affects. For coopetition, a moderately significant correlation (Cramér’s V = 0.368, χ²(5) = 11.91, p = 0.036) indicates AI’s potential in mediating collaborative settings. Further case analyses are provided in Section 5.2.3 Interaction Targets → Coopetition;

- (6) Spatial conditions as strongly correlated with SF for absorption. Absorption mediates spatial conditions based on significant correlations. The [Absorption → Spatial Conditions] pairing showed a moderate correlation (Cramér’s V = 0.419, χ²(10) = 30.946, p = 0.001). Further case analyses are provided in Section 5.2.3 Spatial Conditions → Absorption;

- (7) Topology as strongly correlated component for limiting the SF sensory feedback. Topology mediates sensory feedback based on a moderately significant correlation (Cramér’s V = 0.488, χ²(22) = 41.872, p = 0.007). Topology constrains the achievable sensory feedback, and the desired MSE structure limits technological deployment, indicating a reciprocal influence (see Section 5.2.3 Topology → Sensory Feedback).
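The effect sizes reported above can be related to their χ² statistics through the standard definition of Cramér's V, V = sqrt(χ² / (n · min(r − 1, c − 1))), where n is the number of observations and r × c is the size of the contingency table. The sketch below is illustrative only: it assumes that all 88 samples enter the test and that the activity range pairings use a 4 × 3 table (four activity ranges by three conditions), which is inferred from the categories named in the text and the reported six degrees of freedom rather than stated explicitly.

```python
import math


def degrees_of_freedom(n_rows, n_cols):
    """Degrees of freedom of a chi-square test on an r x c table."""
    return (n_rows - 1) * (n_cols - 1)


def cramers_v(chi2, n, n_rows, n_cols):
    """Cramér's V from a chi-square statistic.

    V is non-negative by construction, so it expresses the strength
    of an association between two categorical variables, not a sign.
    """
    k = min(n_rows - 1, n_cols - 1)
    return math.sqrt(chi2 / (n * k))


# Assumed inputs: n = 88 samples, 4 activity ranges x 3 spatial conditions.
print(degrees_of_freedom(4, 3))                 # 6
print(round(cramers_v(57.358, 88, 4, 3), 3))    # 0.571
```

Under these assumptions, the function returns the value reported for [Activity range → Spatial Conditions]; the same table size with χ² = 12.742 gives 0.269, matching the activity range and temporal condition pairing.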

4.3. The Effectiveness of XR-Mediated MSE

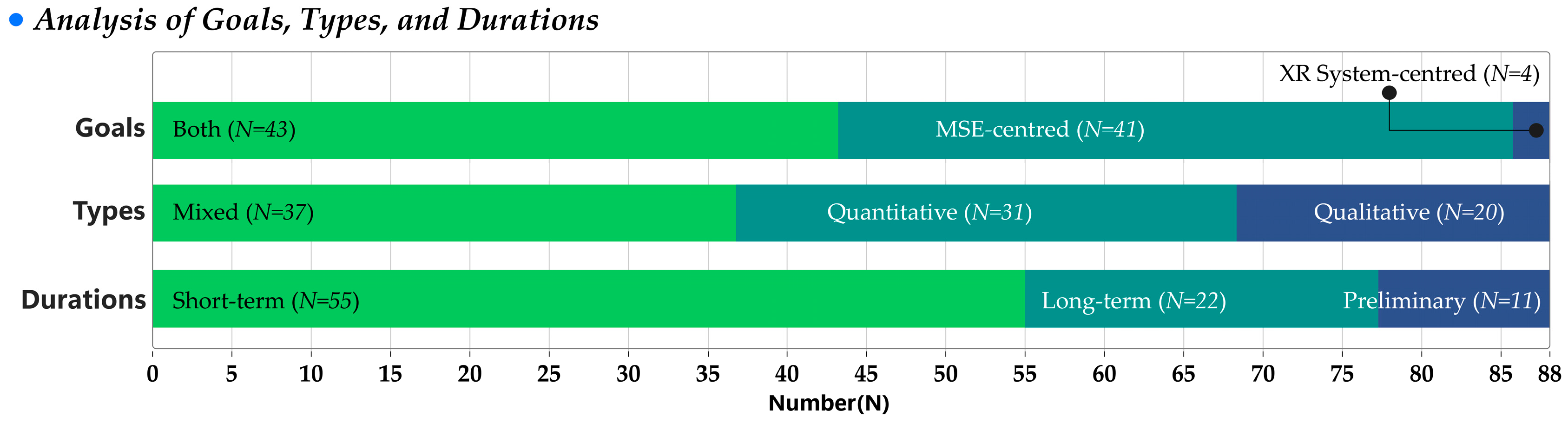

4.3.1. Settings of Evaluation

Basic Settings

- (1) Goals. Assessing the comprehensive effect of XR-mediated MSE is becoming increasingly significant, although many studies still focus on evaluating either MSE or XR system aspects separately (Figure 10). MSE-related goals primarily explore preferences in MSE activities, such as perceptions, engagement, and task efficacy. System-related goals focus on technical effectiveness, including usability, immersion, and interface design. Among the 88 cases, 84 involved MSE-related goals (95.5%), compared to only four focusing solely on testing the XR system (4.5%);

- (2) Types. There is a clear preference for mixed (combined qualitative and quantitative) and purely quantitative research types (Figure 10). The data show that mixed methods were used in 37 samples (42.0%), purely quantitative research in 31 samples (35.2%), and purely qualitative types in 20 samples (22.7%);

- (3) Durations. Study durations varied significantly to meet diverse research requirements, with a tendency towards short-term evaluations (Figure 10). Preliminary evaluations, aimed at quickly gathering initial user feedback to refine early prototypes, constituted 12.5% (11/88) of the samples. Short-term studies involving advanced XR systems employed formal, intensive experimental designs, with 62.5% (55/88) lasting a month or less, primarily conducted in field and laboratory settings. Only 22 samples (25.0%) extended beyond one month, typically featuring multiple experimental deployments to assess sustainability and potential long-term effects of XR-mediated MSE;

- (4)

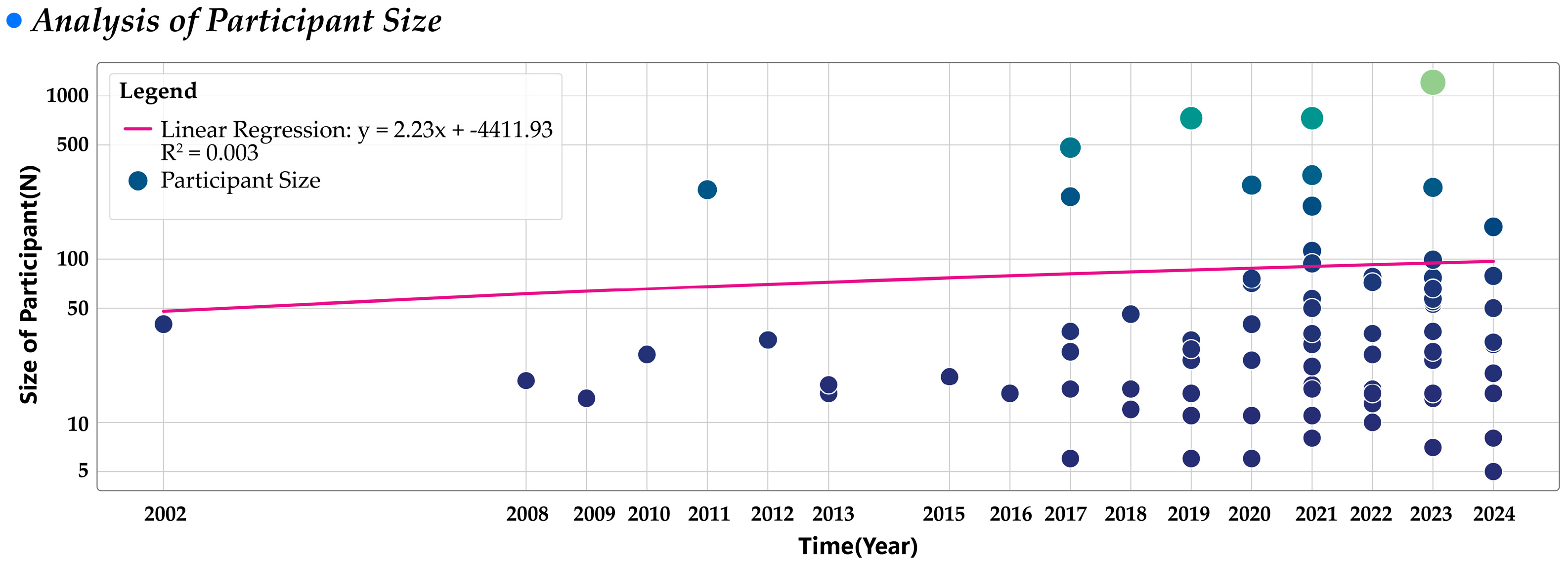

- Size. The size of participants varied widely across studies, with a noticeable trend toward increased average engagement in evaluations (Figure 11). Five samples did not report explicit participant numbers, comprising 5.7% (5/88) of the total. The trendline (y = 2.23x − 4411.93) created by linear regression was added programmatically to visually aid the understanding of variability and dispersion in participant numbers [191]. It is crucial to highlight two aspects. First, the trendline appeared less pronounced due to the logarithmic scale on the vertical axis. Second, the extremely low coefficient of determination (R2 = 0.003) suggested a substantial dispersion of data points, underscoring significant variability in participant numbers across samples. Specifically, 83 samples showed that, on average, around 88 individuals participated in each case, with considerable variability observed (mean = 87.80, standard deviation = 181.84);

- (5)

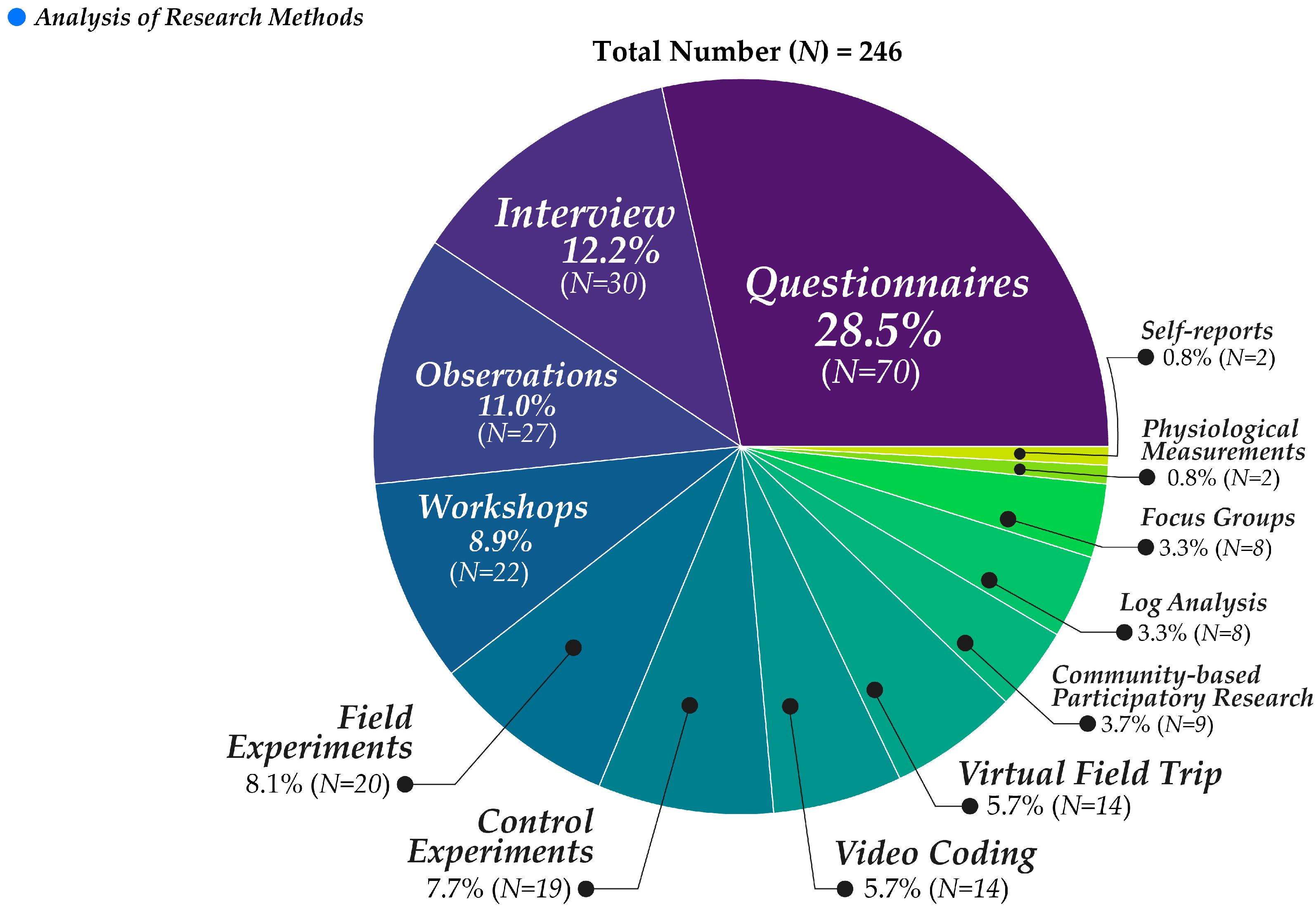

- Methods. The samples revealed significant diversity in organizing research methods in XR-mediated MSE studies (see Figure 12). Multiple methods were often employed within each sample, totaling 246 recorded uses. Questionnaires emerged as the most common method, constituting 28.5% (70/246) of all methods utilized, and were pivotal in collecting standardized feedback across studies. Interviews, accounting for 12.2% (30/246), were crucial for gaining deep insights. Observations, representing 11.0% (27/246), provided real-time insights into user behaviors and interactions. Workshops, which facilitated co-creation in evaluation settings, were used in 8.9% (22/246) of instances. Field experiments and control experiments were used with similar frequency, being employed 20 times (8.1%) and 19 times (7.7%), respectively. Video coding and the emerging method of ‘virtual field trips’ (VFT), each making up 5.7% (14/246), underscored their importance in behavioral analysis and immersive evaluations. VFT has gained popularity for simulating real-life field experiences in scenarios where onsite visits are impractical. Community-Based Participatory Research (CBPR), though less common at 3.7% (9/246), emphasizes the collaborative involvement of researchers, government, and community members throughout the research process. It focuses on joint decision-making, social justice, and community empowerment. This approach is typically found in studies related to the environment and sustainability, as seen in representative cases [124,130,163,171,176]. In contrast, workshops are more short-term and researcher-led, with a product/service improvement orientation. They focus on obtaining user perspectives rather than empowerment and are usually found in studies related to education and business, as seen in representative cases [116,120,129,140,155,172,175,185]. Physiological measurements and self-reports were rarely employed, appearing just twice in the study (0.8%, 2/248). 
Only two studies incorporating physiological sensors to assess bodily, cognitive, and social responses—namely ECG (electrocardiography) and EDA (electrodermal activity) in Sayis et al. [156] and EEG (electroencephalogram) in Fan et al. [164]. Behavioral indicators such as control data, task status, performance data, and state anxiety could be identified in these studies, representing 0.8% (2/248). Physiological measurements, which deliver precise, objective data, could be limited by their complexity and associated high costs. Two studies utilized self-reports to collect data. One study combined self-reports with video coding, psychophysiological data, and system logs to comprehensively assess users’ observable SI behaviors [156]. The other study focused on students’ self-reported feelings about a lab activity. Compared to structured questionnaires, self-reports are more qualitative, allowing participants to provide detailed and personalized responses [151]. This approach captures deeper personal insights and richer social context information. However, self-reports may be influenced by participants’ memory biases and inconsistencies in measurement dimensions, which limits their use.
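To make the participant-size trendline above more concrete, the reported regression line can be evaluated at two points and compared with the reported standard deviation. The sketch assumes that x is the publication year and uses 2013 and 2023 only as illustrative endpoints; neither assumption is confirmed by the text, and the function name is hypothetical.

```python
SLOPE = 2.23          # reported slope of the trendline
INTERCEPT = -4411.93  # reported intercept of the trendline
STD_DEV = 181.84      # reported standard deviation of participant numbers


def trend(year):
    """Participants predicted by the reported line y = 2.23x - 4411.93."""
    return SLOPE * year + INTERCEPT


# Assumed endpoints for illustration only.
rise = trend(2023) - trend(2013)
print(round(rise, 1))            # 22.3
print(round(rise / STD_DEV, 2))  # 0.12
```

Under these assumptions, the fitted rise over a decade is roughly an eighth of one standard deviation, which is consistent with the very low coefficient of determination: the trend is small relative to the spread between samples.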

Metric Settings

- (1) MSE-related scales. The Group Environment Questionnaire (GEQ) was frequently used (7.4%, 5/68), and the Self-Assessment Manikin (SAM) was used less often (4.4%, 3/68), both starting in 2017 (see Table 1). This trend indicates a growing recognition of the importance of measuring group dynamics and emotional responses. The Networked Minds Measure of Social Presence Inventory (NMMSPI) and the Montreal Cognitive Assessment (MoCA) were also repeatedly utilized (each 2.9%, 2/68), focusing on social presence and cognitive ability, respectively;

- (2) XR-related scales. The System Usability Scale (SUS) was the most commonly used (13.2%, 9/68) (see Table 2). The Simulator Sickness Questionnaire (SSQ) (7.4%, 5/68) and the NASA Task Load Index (NASA) (4.4%, 3/68) were more frequently used than the MEC Spatial Presence Questionnaire (MEC) (2.9%, 2/68). The consistent use of the SUS since 2013 underscores a strong consensus on its effectiveness in evaluating user interface ease and efficiency in XR technologies. The application of the MEC highlighted attention to spatial presence driven by technological quality. The use of the NASA and SSQ scales, beginning in 2023, indicates an emerging interest in addressing cognitive load and physical discomfort caused by system tasks and hardware. Despite their lower overall frequency, XR system-related scales demonstrated more consensus in their application than MSE-related scales, which were more varied, with many being used only once.

- (1)

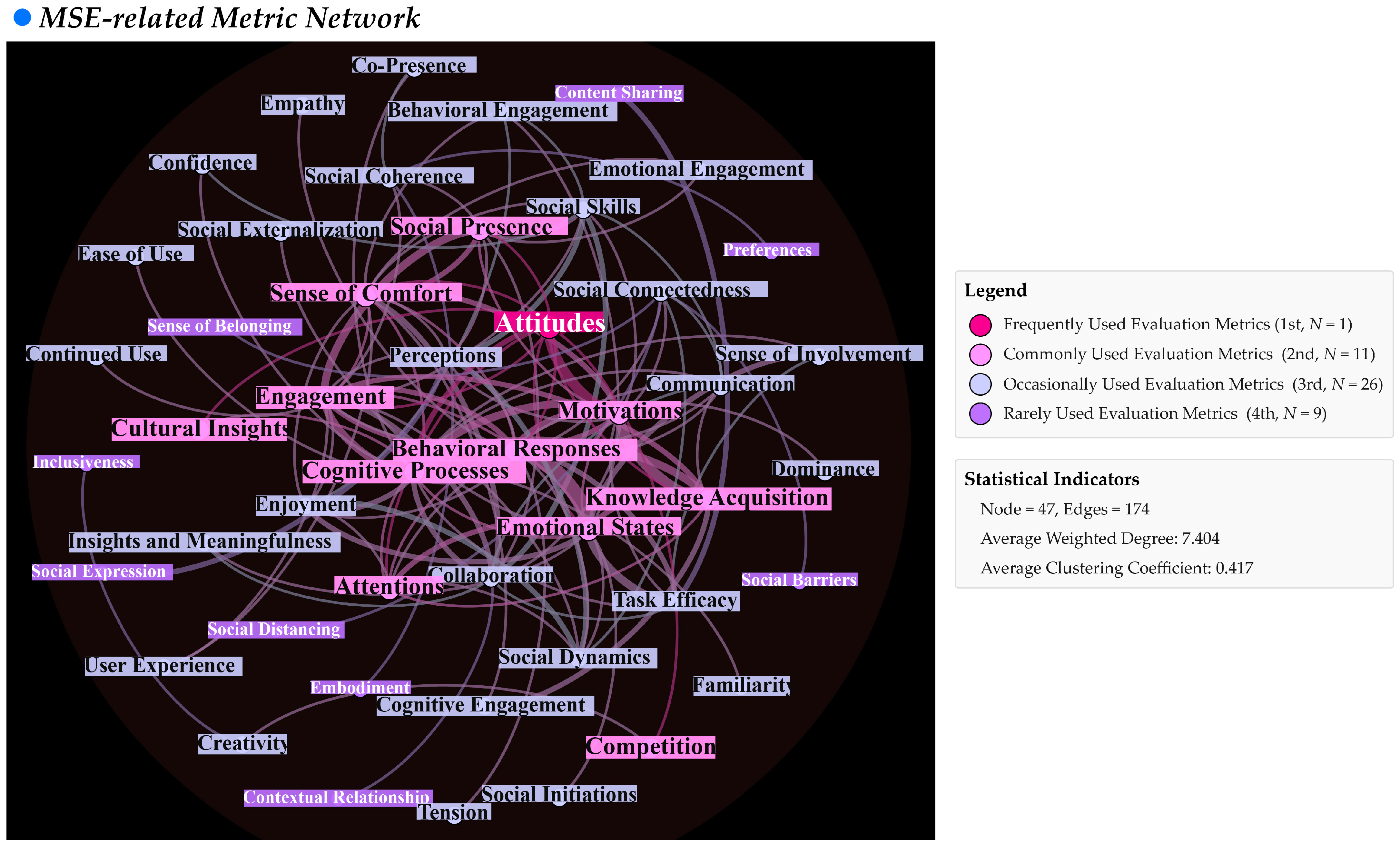

- MSE-related metric network. This network was relatively dispersed, with 47 metrics and 174 connections, an average weight degree of 7.404, and an average clustering coefficient of 0.417. The frequent metric was ‘Attitude’, reflecting subjective feedback on engagement (2.1%, 1/47). Secondary metrics with high co-occurrence included ‘Motivations’, ‘Emotional State’, ‘Cognitive Process’, and ‘Social Presence’, covering various engagement stages (23.4%, 11/47). Tertiary metrics (55.3%, 26/47), used occasionally, offered finer content granularity and stemmed from secondary metrics, such as ‘Perceptions’ from ‘Motivations’ and ‘Creativity’ from ‘Cognitive Process.’ Quaternary metrics (19.1%, 9/47), rarely used, evolved from tertiary metrics, and had very specialized characteristics, such as ‘Social Barriers’ and ‘Social Distancing’;

- (2)

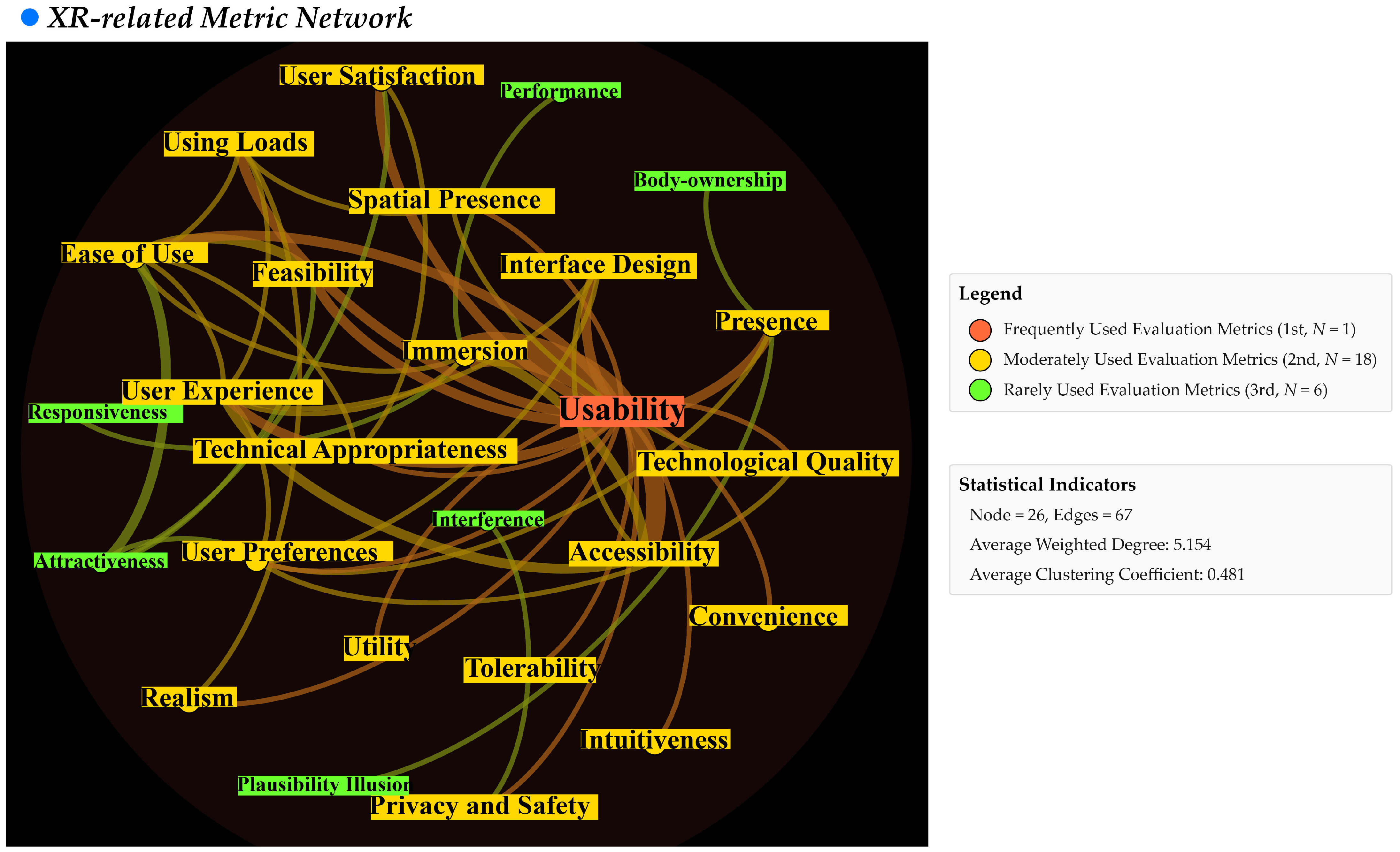

- System-related metric network. This network was denser, with 26 metrics and 67 connections, an average weight degree of 5.154, and an average clustering coefficient of 0.481. Here, ‘Usability’ stood out as the sole primary metric (3.8%, 1/26), emphasizing its authoritative role in evaluations. Secondary metrics (69.2%, 18/26), often used in conjunction with ‘Usability’, reflected distinct XR system features such as ‘Immersion’, ‘Presence’, and ‘Spatial Presence.’ Tertiary metrics (23.1%, 6/26) included niche ones such as ‘Plausibility Illusion’ and ‘Body-ownership’.
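The contrast between the "dispersed" and "denser" networks above can be checked from the reported node and connection counts alone. The sketch below assumes both networks are simple undirected graphs; the function names are hypothetical, and the analysis tool the authors actually used is not stated here. For an undirected graph with N nodes and E edges, the average degree is 2E/N and the density is 2E/(N(N − 1)).

```python
def average_degree(nodes, edges):
    """Mean number of connections per node in an undirected graph."""
    return 2 * edges / nodes


def density(nodes, edges):
    """Share of all possible node pairs that are actually connected."""
    return 2 * edges / (nodes * (nodes - 1))


# Reported counts: (metrics, connections) for each network.
mse_network = (47, 174)
system_network = (26, 67)

print(round(average_degree(*mse_network), 3))     # 7.404
print(round(average_degree(*system_network), 3))  # 5.154
print(round(density(*mse_network), 3))            # 0.161
print(round(density(*system_network), 3))         # 0.206
```

The computed average degrees coincide with the reported values of 7.404 and 5.154, and the higher density of the system-related network (about 0.21 versus 0.16) agrees with its description as the denser of the two, even though its nodes have fewer connections on average.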

4.3.2. Performances of Evaluation

Positive Effects

- (1)

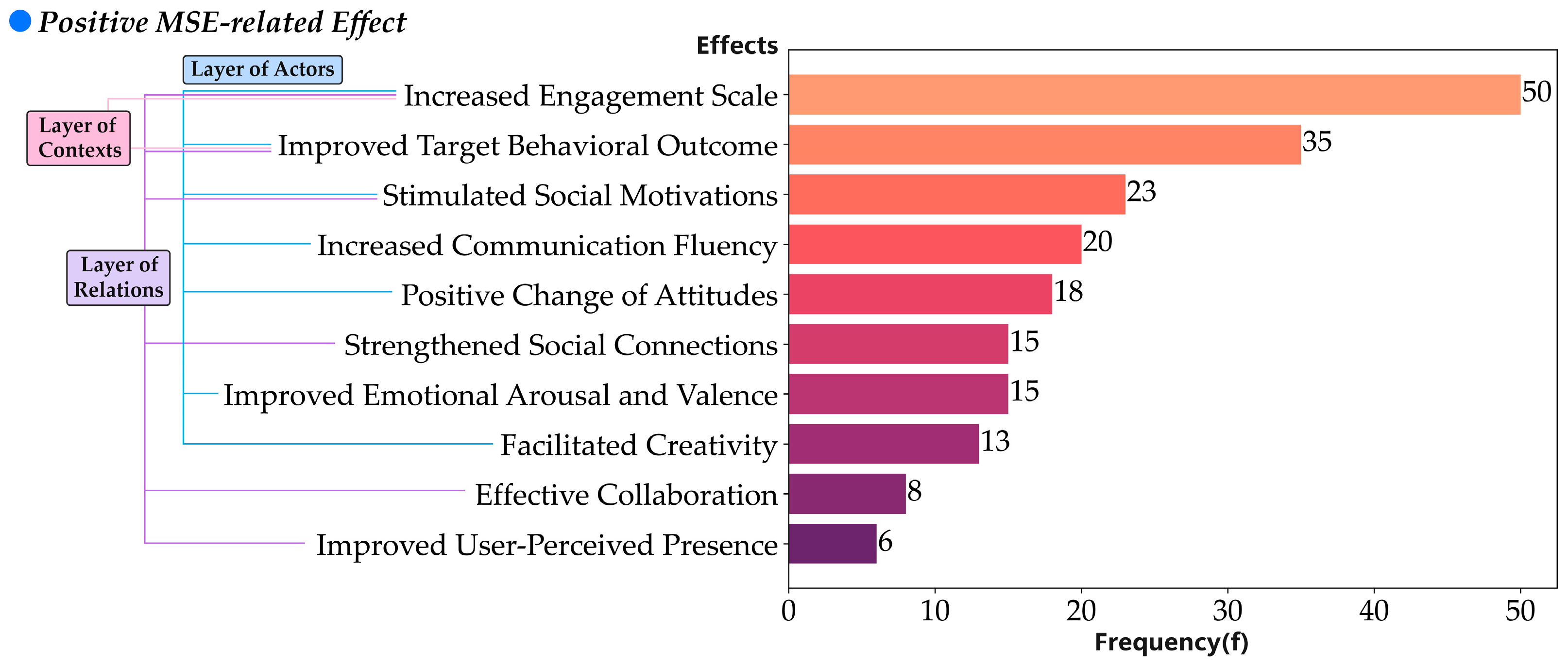

- MSE-related effects. The most significant positive outcome is the ‘Increased Engagement Scale’ (f = 50), driven by the expanded scalability of participant groups. Another notable metric is ‘Improved Target Behavioral Outcomes’ (f = 35), frequently observed in educational settings, where it improves learning outcomes, understanding, and reflection abilities among learners, and generates cultural insights in the cultural heritage domain. At the actor layer, ‘Increased Communication Fluency’ (f = 20) is particularly prominent in MR environments due to the potential for natural interactions, such as gesture-based interactions [23,164], TUI [129,152], and tracking systems [127,161]. These systems allow users to focus more on expressing cues, especially verbal and posture cues, with greater physical freedom (further explained in Section 5.2.3 Input Devices → Cues). Another significant effect is the ‘Positive Change of Attitudes’ (f = 18), which is more common in public MSE [124,138,163]. For example, Yavo-Ayalon et al. [124] used technology to help groups form bottom-up, informal, and low-mediation collaborative relationships, fostering a sense of community responsibility among members. Additionally, ‘Improved Emotional Arousal and Valence’ (f = 15) is widely noted, especially in group-level MSE, where strong connections in tree/star topologies provide favorable conditions for emotional shifts (see cases: [158,173,177,179]). At the relational layer, ‘Effective Collaboration’ (f = 8) and ‘Improved User-Perceived Presence’ (f = 6) are primarily attributed to avatar-based interactions, which highlight the importance of avatars for social presence [51,132,134,177] (further cases in Section 5.2.3 Avatars → Cues);

- (2)

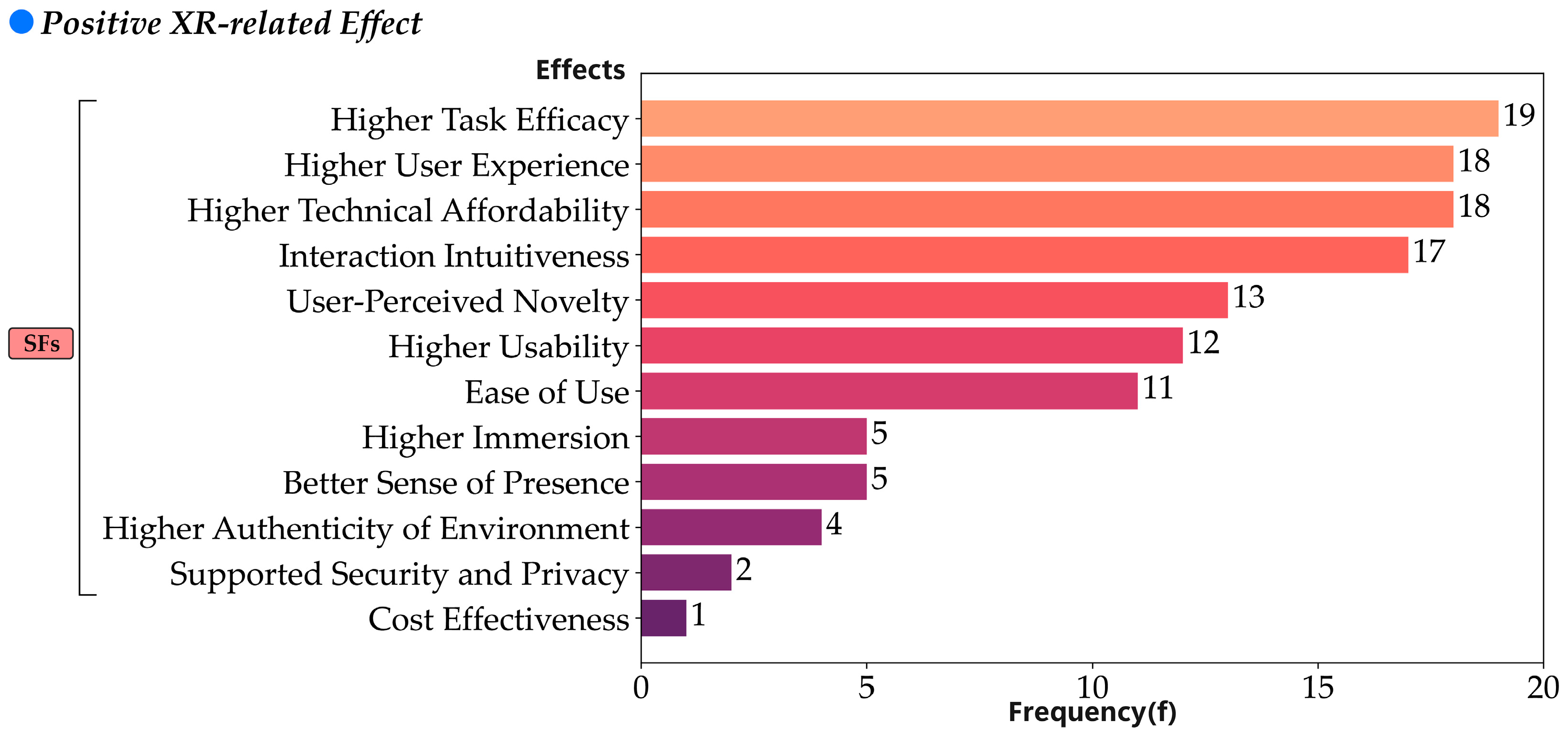

- XR-related effects. ‘Higher Task Efficacy’ (f = 19) emerges as the most frequently reported positive effect, referring to enhanced performance in various MSE tasks across three primary domains: in education (e.g., addressing cross-cultural stereotypes [186]), rehabilitation (e.g., upper limb and head flexibility training [164]), and general purposes (e.g., gesture-based virtual object manipulation [183]). ‘Higher Usability’ (f = 12) and ‘Ease of Use’ (f = 11), while conceptually similar are and are both widely mentioned. Usability focuses on the effectiveness of new interaction mechanisms and system performance. In contrast, ease of use is typically measured by error rates and task completion times, especially in complex systems. This highlights the positive mediating role of input devices on social interaction cues (further explained in Section 5.2.3 Input Devices → Cues). ‘Higher Immersion’ (f = 5), ‘Better Sense of Presence’ (f = 5), and ‘Higher Authenticity of Environment’ (f = 4) are more closely associated with VR or VR-based multi-interface systems. For example, cases [110,111,112,114,119,182] indicate higher immersion, primarily stemming from image rendering and audio–visual output quality. Cases [46,144,189] confirm enhanced sense of presence, defined as perceptions of virtual body ownership and consistency of one’s sense of self with the virtual environment. These three aspects are all related to spatial settings, underscoring the positive mediating role of absorption on spatial conditions (further detailed in Section 5.2.3 Interaction Targets → Affects). Lastly, ‘Cost Effectiveness’ (f = 1) is exemplified by Koukopoulos and Koukopoulos’s ‘Active Visitor’ system, which developed an asynchronous AR library application, expanding the scale and fluidity of visitor engagement and achieving more effective profit gains within established activity range limitations [113] (further detailed in Section 5.2.3 Activity Range → Spatial Conditions).

Negative Effects

- (1) MSE-related effects. ‘Disengagement’ (f = 5) is a multifaceted negative outcome, referring to low participation or withdrawal. This issue in public MSE primarily arises from unequal cooperative relationships due to imbalanced social topology (see Section 5.3). Distinct from ‘Disengagement’ is the ‘Attitude-behavior Gap’ (f = 6) at the layer of actors, which refers to participation without achieving the activity’s goals, such as insufficient personal reflection. A notable subjective effect at the layer of actors is ‘Social Pressures and Anxiety’ (f = 4). For instance, while ‘Pokémon GO’ encouraged players to ‘play outside’ and facilitated ‘ice-breaking moments’, it also challenged their physical abilities and heightened social anxiety [109]. The effects at the relations layer are mainly related to social-cultural conflicts. One effect is ‘Unmatching Cultural Values and Communication Styles’ (f = 5). Notably, two cases highlight negative effects due to limited verbal cues: Taylor et al. found that the synchronous use of verbal and written communication can confuse users [155], and Bruža et al. noted users’ preference for familiar interaction methods such as rotating objects with both hands, similar to smartphone swipe interactions [181]. This indicates that focusing solely on verbal cues while ignoring non-verbal cues is insufficient for MSE, as detailed in Section 5.3. Another notable effect is ‘Non-compliant Social Norms and Ethics’ (f = 4). For example, the system ‘MagicART’ reported these issues when group members had different visiting expectations and styles [155].

- (2)

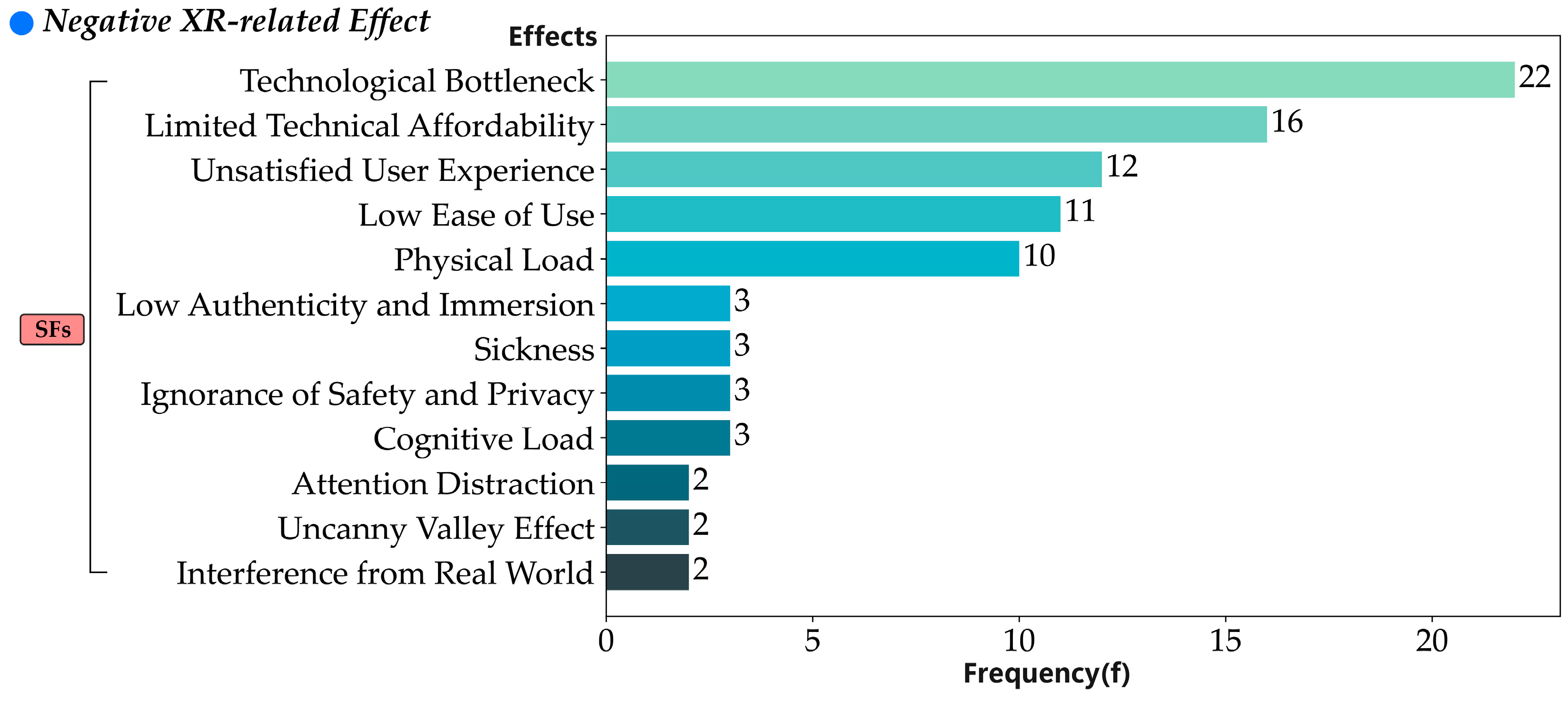

- XR-related effects. ‘Technological Bottleneck’ (f = 22) is the most frequently reported negative effect, highlighting the gap between technological expectations and actual implementation due to hardware and software limitations. Compared to VR, AR and MR more commonly face this issue, particularly in early-stage prototypes; VR encounters this less frequently. However, notably, ‘Physical Load’ (f = 10), typically caused by poor VR wearability, contrasts with MR systems using NUI and TUI, which rarely encounter such problems; see Section 5.2.3 Input Devices → Cues for details. VR also tends to cause ‘Sickness’ (f = 3). For example, Flynn’s VR study reported a support person experiencing a ‘tension headache’ due to the HMD and limited face padding of the Meta Quest Comfort Strap [184]. Among less frequent negative effects, ‘Ignorance of Privacy and Safety’ (f = 3) is notable, primarily concerning public MSE. Beyond addressing identity anonymity attacks in large VR social networks [112], attention should be given to privacy in public MSE. For example, Du noted that their design inadequately addresses privacy concerns, as participants highlighted a ‘lack of privacy’ during interactions with public displays, indicating the need for further research to ensure ‘personal space’ and mitigate interaction blindness in urban planning contexts [23]. Another public urban planning study echoed this point [118]. This point is detailed in Section 5.2.3 Avatars → Clustering. ‘Uncanny Valley Effect’ (f = 2) should not be overlooked. It now extends beyond avatars to real users; for instance, participants found face-mounted iPads in MR environments ‘weird’ or ‘creepy’ [125]. ‘Interference from Real World’ (f = 2) is also significant, typically occurring in VR systems, mainly due to sensory conflicts, as detailed in Section 5.2.3 Output Devices → Spatial Conditions.

5. Discussion and Conclusions

5.1. Limitations

5.2. Major Findings and Discussions

5.2.1. Mechanism of XR-Mediated MSE

5.2.2. Effectiveness of XR-Mediated MSE

5.2.3. Significant Mediated Relationships

Output Devices → Spatial Conditions

Input Devices → Cues

Avatars → Cues

Avatars → Coopetition

Avatars → Clustering

Activity Range → Spatial Conditions

Interaction Targets → Affects

Interaction Targets → Coopetition

Spatial Conditions → Absorption

Topology → Sensory Feedback

5.3. Future Research

- Non-verbal communication. Future studies should focus on refining non-verbal communication systems in XR so that they align more closely with the principles of ECT [97]. Specifically, it will be crucial to explore how different types of gestures, facial expressions, and postures can be systematically integrated into XR platforms to improve interaction quality. Additionally, understanding how diverse user groups, particularly those with communication impairments or physical disabilities, engage with these non-verbal features will be key to expanding the inclusivity of XR experiences;

- Mixed and asynchronous communication. Key considerations should include managing delayed feedback, as the lack of real-time interaction can hinder social engagement. Additionally, integrating asynchronous and synchronous modes seamlessly remains challenging, especially in balancing live and pre-recorded content. Finally, maintaining long-term user interest is essential, as the absence of immediate feedback may lead to disengagement over time;

- Sensory feedback. Although audiovisual interfaces have advanced, technological limitations still hinder the implementation of multisensory feedback, particularly in the tactile, olfactory, and gustatory modalities. This restriction weakens emotional engagement and immersion. Furthermore, when the same sensory modality is triggered simultaneously by both virtual and real-world inputs, users can experience sensory disruption and confusion that breaks their sense of presence in the virtual environment. Meanwhile, because social topology can counteract MSE activities, tactile interactions at the group level and in public MSE may be difficult to achieve. When considering technology mediation, social topology should be planned appropriately within activities to avoid technological inaccessibility;

- Coopetition modes. Collaborative competition and competitive collaboration have not been fully applied in coopetition, despite their importance in expanding group dynamics. It would be beneficial to further compare the differences and similarities among the various forms of coopetition and their applicability to specific scenarios;

- AI agents. AI agents play a significant role in enhancing the intensive affect mode and user empathy, yet this role has received little emphasis. Designing vivid AI with customization and context awareness can deepen emotional engagement. The mediation of emotional modes still leaves much to explore, such as expanding AI’s role in emotional communication, regulation, analysis, expression, and deeper reflection. Currently, AI agents in MSE primarily focus on emotion mediation and collaboration guidance, and their broader functionality has not been fully realized;

- Immersion. Current research emphasizes guiding users into narrative and strategic immersion states, while the potential of tactical immersion has not been sufficiently highlighted. Additionally, excessive immersion may cause cognitive or physical overload. Researchers could further define a rule library for immersive task design to avoid negative effects;

- Multi-interface XR systems. Multi-interface XR systems effectively mediate the engagement of asymmetric groups, enhance user preferences, bridge the interruption of cue expression in asymmetric users, and align task execution; they therefore warrant further attention. Further attempts to combine MR systems with VR devices could broaden multi-person engagement when VR equipment is limited;

- NUI and TUI. NUI and TUI not only enhance system manipulation perception, reduce users’ physical and cognitive burdens, and support the social engagement of minority groups but also allow users to express more interesting cues, increasing the sense of enjoyment and promoting memorable experiences. Future work could further incorporate these interfaces into XR systems to create more embodied experiences;

- Dynamic spatial design. Static design methods within fixed contexts may overlook the benefits of dynamic approaches. Relying on geographic location data leads to static information presentation, which fails to meet diverse user needs. Dynamic spatial design methods should be further expanded in XR spatial experiences to offer broader scene participation. An example is InnoVision Inc’s recently developed MOON VR HOME app, in which users can transform their virtual home into a landscape, a sci-fi game scene, or an interior design with a few clicks [200];

- Avatars’ symmetry. As virtual extensions and reshapings of physical identities, avatars can enhance users’ emotional satisfaction and social desires. Avatars enable users to focus more on expressing their own cues and perceiving those of others, and they enhance the sense of presence, social presence, and spatial presence. No significant differences were found in situations with asymmetric use of avatars (e.g., one user with an avatar, another without), a result that requires further attention in future studies. Further exploration of visual symmetry differences and mediation strategies in specific task modes and contexts, along with the definition of rule sets, can maximize avatars’ expressive value as cue agents in SI;

- Avatars’ visual types. Half-body avatars are more often observed in paired, collaborative interactions. They reduce computational and rendering complexity, concentrate cue expression, minimize unnecessary body movements, and provide a more comfortable and efficient collaborative environment. Full-body avatars appear more often in neutral contexts with no significant coopetition. They support higher presence and a perceived sense of closeness or social presence, and they increase users’ awareness of each other’s avatars in the environment. These conclusions are preliminary and require a more systematic guidance framework;

- Standardized evaluation system. The lack of consensus in XR-mediated MSE evaluation frameworks hampers the reproducibility and comparability of findings. Future studies should address the following key areas: (1) Standardization of Core Metrics and Taxonomies: Current metrics should be further standardized and classified using taxonomies tailored to specific XR types, MSE scales, and domains. This involves applying feature engineering to refine evaluation toolboxes, ensuring greater granularity and selectivity. Building a knowledge graph of these metrics will help researchers map their work to existing knowledge and improve reproducibility. Effect size evaluation and further meta-analysis should support this process. (2) Comprehensive Measurement Integration: Current studies often fail to integrate both XR-related and MSE-related metrics comprehensively, limiting the potential to uncover blind spots in evaluation systems. Future research should focus on more holistic measurement approaches. (3) Mixed-Methods Approach: Physiological measurements can enhance objectivity and complement subjective assessments (e.g., questionnaires, interviews, self-reports). However, their application remains limited. Future evaluations should combine quantitative (e.g., physiological data) and qualitative (e.g., user feedback) methods, which can reduce bias and improve comparability. (4) Benchmark Datasets: Developing shared datasets across studies allows for benchmarking and replication. These datasets will facilitate cross-system comparisons and ensure cumulative research builds on existing findings. (5) Interdisciplinary Collaboration: Researchers from fields such as HCI, psychology, behavioral science, and computer science should collaborate to co-create standardized evaluation guidelines. Such interdisciplinary input ensures robustness and broader applicability of the evaluation system. (6) Evaluation Communities and Iterative Validation: Given the contextual complexity of XR-mediated MSE, regular validation of evaluation systems across different platforms and user groups is essential. Establishing evaluation communities and continuously updating knowledge repositories will ensure knowledge dissemination and iterative improvement;

- Ethical concerns of social identity. Ethical issues arise across XR platforms with varying levels of technological maturity and require targeted solutions. In highly developed VR social networking platforms, immersive virtual environments and full-body tracked avatars enhance social presence but also increase risks such as verbal harassment and bullying. These behaviors can negatively affect users, particularly minors, who are more susceptible to identity distortion and ‘false memories’ due to their developmental stage [112,201]. Current mitigation efforts focus on cybersecurity education, behavior management (e.g., account suspensions), and ethical guidance from guardians [112]. However, they often overlook the presence of negative identities related to avatars. One alternative is to replace human-like avatars with non-human, abstract social cues, such as biosensory data that anonymize users by representing physiological inputs as simple visual elements, i.e., biosensory cues [202]. This can help reduce biases linked to race, gender, and class while safeguarding user privacy and promoting a healthier social dynamic within VR spaces [203]. In some AR/MR applications, which are more task-oriented and at an earlier stage of development, insufficient attention to ethical issues in interface design can lead to power imbalances in public collaborative environments. Stakeholders, such as planners or designers, may resist equitable power distribution, creating a ‘tree topology’ that restricts information flow and limits engagement [118]. Interface designs should aim to provide both shared and personal spaces, balancing power dynamics and reducing social conflicts that arise from identity exposure [23]. In summary, future research should explore the extent to which personal identity is represented across different interfaces and task types in XR systems. Uncontrolled display of identity information may further exacerbate social and ethical issues;

- Privacy breaches of technical platforms. Privacy breaches in XR platforms vary by device type and therefore call for tailored data protection strategies. For HMD-based VR, end-to-end encryption is critical, as these systems often track sensitive biometric data (e.g., gaze and body movements). AI-driven privacy measures can help anonymize this data, reducing leakage risks while maintaining immersion. An end-to-end communication approach, in which data is transmitted directly without server storage, minimizes third-party risks but increases the demand for device-side processing [204]. In mobile-based AR and XR systems (e.g., prototypes) involving sensor integration, device-level biometric safeguards such as facial recognition and fingerprint authentication, combined with user-controlled settings, are essential for protecting personal information. Simpler XR systems should prioritize transparent consent and granular data-sharing options, allowing users to manage their exposure in public or shared spaces. In short, privacy protection in XR requires device-specific approaches. Future research should further explore data protection measures for input devices to avoid potential ethical issues;

- Long-term engagement. XR-mediated MSE should focus not only on short-term engagement but also on more sustainable and spontaneous MSE. Therefore, long-term studies should be considered when evaluating the effects of technology mediation;

- Expansion and iteration of emerging methods. Recent research has introduced emerging, XR-specific methods such as VFT and CBPR. However, their unique advantages and effectiveness remain underexplored. For example, CBPR methods should be further leveraged to manage stakeholder power dynamics while safeguarding participant privacy, thereby ensuring methodological integrity. These approaches require further expansion and iterative refinement to fully realize their potential in XR-mediated contexts;

- Physical and cognitive load. Details such as interference from the physical world, motion sickness, and physical load in VR can affect the accuracy and efficiency of effectiveness verification and should be addressed. A user-friendly technical guide might be necessary during experiments;

- Cultural and social differences. Cultural values, social personalities, expectations, and evaluations of MSE groups may lead to social misunderstandings, lack of motivation, and tension, affecting interaction effectiveness. Members should be more strategically organized, and attention should be paid to the engagement behavior of socially anxious or fearful users to enhance inclusiveness in group interactions.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| 1 | https://www.ibm.com/docs/en/cognos-analytics/11.1.0?topic=terms-cramrs-v (accessed on 1 September 2024). This study adhered to the IBM SPSS manual’s effect size standards for Cramér’s V and excluded variable combinations with p ≥ 0.05 due to their weak interpretative value. Results were categorized as follows: ES ≤ 0.2 indicated a weak correlation (the fields were only weakly correlated despite statistical significance); 0.2 < ES ≤ 0.6 indicated a moderate correlation; and ES > 0.6 indicated a strong correlation. |

| 2 | https://snap.stanford.edu/class/cs224w-readings/brandes01centrality.pdf (accessed on 1 September 2024). |
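The effect-size bands in footnote 1 can be illustrated with a short sketch. This is a minimal illustration, not the study's actual analysis pipeline: the function names `cramers_v` and `classify_effect_size` and the example contingency tables are assumptions made here, the statistic is the uncorrected Cramér's V, and the p < 0.05 significance filter described in the footnote is not implemented (a statistics library routine such as `scipy.stats.chi2_contingency` could supply the p-value).

```python
import math


def cramers_v(table):
    """Uncorrected Cramér's V for an r x c contingency table (list of rows).

    Assumes every row total and column total is non-zero.
    """
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]

    # Pearson chi-square statistic: sum of (observed - expected)^2 / expected.
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected

    # Normalise by n * (min(rows, cols) - 1) so that V lies in [0, 1].
    k = min(len(row_totals), len(col_totals)) - 1
    return math.sqrt(chi2 / (n * k))


def classify_effect_size(v):
    """Map V to the bands stated in footnote 1."""
    if v <= 0.2:
        return "weak"
    if v <= 0.6:
        return "moderate"
    return "strong"


if __name__ == "__main__":
    # Hypothetical 2 x 2 co-occurrence counts for two coded factors.
    example = [[15, 5], [5, 15]]
    v = cramers_v(example)
    print(round(v, 3), classify_effect_size(v))
```

In this sketch, a pair of coded factors would first be cross-tabulated into a contingency table; only pairs passing the significance filter would then be assigned to a band.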

References

- Stephenson, N. Snow Crash; Spectra: Hong Kong, China, 2003; ISBN 0-553-08853-X. [Google Scholar]

- Mystakidis, S. Metaverse. Encyclopedia 2022, 2, 486–497. [Google Scholar] [CrossRef]

- Zhang, G.; Cao, J.; Liu, D.; Qi, J. Popularity of the Metaverse: Embodied Social Presence Theory Perspective. Front. Psychol. 2022, 13, 997751. [Google Scholar] [CrossRef]

- Dolata, M.; Schwabe, G. What Is the Metaverse and Who Seeks to Define It? Mapping the Site of Social Construction. J. Inf. Technol. 2023, 38, 239–266. [Google Scholar] [CrossRef]

- Founder’s Letter, 2021. Meta, 28 October 2021.

- Statista Metaverse—Worldwide | Statista Market Forecast. Available online: https://www.statista.com/outlook/amo/metaverse/worldwide (accessed on 4 January 2024).

- Hennig-Thurau, T.; Aliman, D.N.; Herting, A.M.; Cziehso, G.P.; Linder, M.; Kübler, R.V. Social Interactions in the Metaverse: Framework, Initial Evidence, and Research Roadmap. J. Acad. Mark. Sci. 2022, 51, 889–913. [Google Scholar] [CrossRef]

- Oh, C.S.; Bailenson, J.N.; Welch, G.F. A Systematic Review of Social Presence: Definition, Antecedents, and Implications. Front. Robot. AI 2018, 5, 409295. [Google Scholar] [CrossRef] [PubMed]

- Riva, G.; Galimberti, C. Computer-Mediated Communication: Identity and Social Interaction in an Electronic Environment. Genet. Soc. Gen. Psychol. Monogr. 1998, 124, 434–464. [Google Scholar]

- D’Ausilio, A.; Novembre, G.; Fadiga, L.; Keller, P.E. What Can Music Tell Us about Social Interaction? Trends Cogn. Sci. 2015, 19, 111–114. [Google Scholar] [CrossRef]

- Chetouani, M.; Delaherche, E.; Dumas, G.; Cohen, D. Interpersonal Synchrony: From Social Perception to Social Interaction. In Social Signal Processing; Burgoon, J.K., Magnenat-Thalmann, N., Pantic, M., Vinciarelli, A., Eds.; Cambridge University Press: Cambridge, UK, 2017; pp. 202–212. [Google Scholar]

- De Jaegher, H.; Di Paolo, E.; Gallagher, S. Can Social Interaction Constitute Social Cognition? Trends Cogn. Sci. 2010, 14, 441–447. [Google Scholar] [CrossRef]

- Berger, P.; Luckmann, T. The Social Construction of Reality. In Social Theory Re-Wired; Routledge: London, UK, 2016; pp. 110–122. [Google Scholar]

- Liu, S. Social Spaces: From Georg Simmel to Erving Goffman. J. Chin. Sociol. 2024, 11, 13. [Google Scholar] [CrossRef]

- Johnston, K.A. Toward a Theory of Social Engagement. In The Handbook of Communication Engagement; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2018; pp. 17–32. ISBN 978-1-119-16760-0. [Google Scholar]

- Xiao, R.; Wu, Z.; Buruk, O.T.; Hamari, J. Enhance User Engagement Using Gamified Internet of Things. In Proceedings of the Hawaii International Conference on System Sciences, Maui, HI, USA, 5–8 January 2021. [Google Scholar]

- Homans, G.C. Social Behavior as Exchange. Am. J. Sociol. 1958, 63, 597–606. [Google Scholar] [CrossRef]

- Bowlby, J. Attachment and Loss; Random House: New York, NY, USA, 1969. [Google Scholar]

- Collins, R. Interaction Ritual Chains; Princeton University Press: Princeton, NJ, USA, 2004. [Google Scholar]

- Albert, M. Luhmann and Systems Theory. In Oxford Research Encyclopedia of Politics; Oxford University Press: Oxford, UK, 2016. [Google Scholar]

- Turner, J.H.; Maryanski, A. Functionalism; Benjamin/Cummings Publishing Company: Menlo Park, CA, USA, 1979. [Google Scholar]

- Newman, B.M.; Newman, P.R. Part III—Introduction. In Theories of Adolescent Development; Newman, B.M., Newman, P.R., Eds.; Academic Press: Cambridge, MA, USA, 2020; pp. 245–250. ISBN 978-0-12-815450-2. [Google Scholar]

- Du, G.; Degbelo, A.; Kray, C. User-Generated Gestures for Voting and Commenting on Immersive Displays in Urban Planning. Multimodal Technol. Interact. 2019, 3, 31. [Google Scholar] [CrossRef]

- Xiao, R.; Wu, Z.; Hamari, J. Internet-of-Gamification: A Review of Literature on IoT-Enabled Gamification for User Engagement. Int. J. Hum.–Comput. Interact. 2022, 38, 1113–1137. [Google Scholar] [CrossRef]

- Helliwell, J.; Putnam, R. The Social Context of Well-Being. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2004, 359, 1435–1446. [Google Scholar] [CrossRef]

- Jackson, M. Things Hidden Since the Foundation of the World. In Life Within Limits: Well-Being in a World of Want; Duke University Press: Durham, NC, USA, 2011; pp. 63–76. ISBN 978-0-8223-4892-4. [Google Scholar]

- Bales, R.F.; Strodtbeck, F.L. Phases in Group Problem-Solving. J. Abnorm. Soc. Psychol. 1951, 46, 485–495. [Google Scholar] [CrossRef]

- Krause, M.S. Use of Social Situations for Research Purposes. Am. Psychol. 1970, 25, 748–753. [Google Scholar] [CrossRef]

- Moos, R.H. Conceptualizations of Human Environments. Am. Psychol. 1973, 28, 652–665. [Google Scholar] [CrossRef]

- Price, R.H.; Blashfield, R.K. Explorations in the Taxonomy of Behavior Settings. Am. J. Community Psychol. 1975, 3, 335–351. [Google Scholar] [CrossRef]

- Forgas, J.P. The Perception of Social Episodes: Categorical and Dimensional Representations in Two Different Social Milieus. J. Pers. Soc. Psychol. 1976, 34, 199–209. [Google Scholar] [CrossRef]

- King, G.A.; Sorrentino, R.M. Psychological Dimensions of Goal-Oriented Interpersonal Situations. J. Pers. Soc. Psychol. 1983, 44, 140–162. [Google Scholar] [CrossRef]

- Kreijns, K.; Kirschner, P.; Jochems, W.; Buuren, H. Determining Sociability, Social Space, and Social Presence in (A)Synchronous Collaborative Groups. Cyberpsychol. Behav. Impact Internet Multimed. Virtual Real. Behav. Soc. 2004, 7, 155–172. [Google Scholar] [CrossRef]

- Vrieling-Teunter, E.; Henderikx, M.; Nadolski, R.; Kreijns, K. Facilitating Peer Interaction Regulation in Online Settings: The Role of Social Presence, Social Space and Sociability. Front. Psychol. 2022, 13, 793798. [Google Scholar] [CrossRef] [PubMed]

- Wu, H.; Liu, X.; Hagan, C.C.; Mobbs, D. Mentalizing during Social Interaction: A Four Component Model. Cortex 2020, 126, 242–252. [Google Scholar] [CrossRef] [PubMed]

- Meijerink-Bosman, M.; Back, M.; Geukes, K.; Leenders, R.; Mulder, J. Discovering Trends of Social Interaction Behavior over Time: An Introduction to Relational Event Modeling. Behav. Res. Methods 2023, 55, 997–1023. [Google Scholar] [CrossRef] [PubMed]

- Yang, Y.; Read, S.J.; Miller, L.C. A Taxonomy of Situations from Chinese Idioms. J. Res. Personal. 2006, 40, 750–778. [Google Scholar] [CrossRef]

- Pervin, L.A. Definitions, Measurements, and Classifications of Stimuli, Situations, and Environments. Hum. Ecol. 1978, 6, 71–105. [Google Scholar] [CrossRef]

- Hoppler, S.S.; Segerer, R.; Nikitin, J. The Six Components of Social Interactions: Actor, Partner, Relation, Activities, Context, and Evaluation. Front. Psychol. 2022, 12, 743074. [Google Scholar] [CrossRef]

- Latour, B. On Actor-Network Theory: A Few Clarifications. Soz. Welt 1996, 47, 369–381. [Google Scholar]

- Reeves, B.; Nass, C.I. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places; Cambridge University Press: New York, NY, USA, 1996; pp. xiv, 305. ISBN 1-57586-052-X. [Google Scholar]

- Evans, W. 1—Mapping Virtual Worlds. In Information Dynamics in Virtual Worlds; Evans, W., Ed.; Chandos Information Professional Series; Chandos Publishing: Hull, UK, 2011; pp. 3–21. ISBN 978-1-84334-641-8. [Google Scholar]

- Blumer, H. Symbolic Interactionism: Perspective and Method; University of California Press: Berkeley, CA, USA, 1986. [Google Scholar]

- Spahn, A. Mediation in Design for Values. In Handbook of Ethics, Values, and Technological Design; Springer: Dordrecht, The Netherlands, 2015. [Google Scholar] [CrossRef]

- Gagné, M.; Deci, E.L. Self-Determination Theory and Work Motivation. J. Organ. Behav. 2005, 26, 331–362. [Google Scholar] [CrossRef]

- Shah, S.H.H.; Karlsen, A.S.T.; Solberg, M.; Hameed, I.A. A Social VR-Based Collaborative Exergame for Rehabilitation: Codesign, Development and User Study. Virtual Real. 2023, 27, 3403–3420. [Google Scholar] [CrossRef]

- Myers, D.G. Theories of Emotion; Academic Press: Cambridge, MA, USA, 2004; Volume 500. [Google Scholar]

- Lang, P. Behavioral Treatment and Bio-Behavioral Assessment: Computer Applications. Technol. Ment. Health Care Deliv. Syst. 1980, 1, 119–137. [Google Scholar]

- Burgoon, J.K.; Buller, D.B.; Woodall, W.G. Nonverbal Communication: The Unspoken Dialogue; Routledge: London, UK, 1996. [Google Scholar]

- Kinzler, K.D. Language as a Social Cue. Annu. Rev. Psychol. 2021, 72, 241–264. [Google Scholar] [CrossRef] [PubMed]

- Le Tarnec, H.; Augereau, O.; Bevacqua, E.; De Loor, P. Impact of Augmented Engagement Model for Collaborative Avatars on a Collaborative Task in Virtual Reality. In Proceedings of the 2024 International Conference on Advanced Visual Interfaces, Genoa, Italy, 3–7 June 2024. [Google Scholar]

- Wallkötter, S.; Tulli, S.; Castellano, G.; Paiva, A.; Chetouani, M. Explainable Embodied Agents Through Social Cues: A Review. J. Hum.-Robot Interact. 2021, 10, 1–24. [Google Scholar] [CrossRef]

- Sauppé, A.; Mutlu, B. How Social Cues Shape Task Coordination and Communication. In Proceedings of the 17th ACM Conference on Computer Supported Cooperative Work & Social Computing, Baltimore, MD, USA, 15–19 February 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 97–108. [Google Scholar]

- Feine, J.; Gnewuch, U.; Morana, S.; Maedche, A. A Taxonomy of Social Cues for Conversational Agents. Int. J. Hum.-Comput. Stud. 2019, 132, 138–161. [Google Scholar] [CrossRef]

- Aguilar-Zambrano, J.J.; Trujillo, M.J. Factors Influencing Interaction of Creative Teams in Generation of Ideas of New Products: An Approach from Collaborative Scripts. In Proceedings of the 2017 Portland International Conference on Management of Engineering and Technology (PICMET), Portland, OR, USA, 9–13 July 2017. [Google Scholar]

- Mallett, C.J.; Lara-Bercial, S. Chapter 14—Serial Winning Coaches: People, Vision, and Environment. In Sport and Exercise Psychology Research; Raab, M., Wylleman, P., Seiler, R., Elbe, A.-M., Hatzigeorgiadis, A., Eds.; Academic Press: San Diego, CA, USA, 2016; pp. 289–322. ISBN 978-0-12-803634-1. [Google Scholar]

- Barnes, J.A. Graph Theory and Social Networks: A Technical Comment on Connectedness and Connectivity. Sociology 1969, 3, 215–232. [Google Scholar] [CrossRef]

- Wu, Y.; You, S.; Guo, Z.; Li, X.; Zhou, G.; Gong, J. Designing a Remote Mixed-Reality Educational Game System for Promoting Children’s Social & Collaborative Skills. arXiv 2024, arXiv:2301.07310. [Google Scholar]

- IPCisco. Network Topologies: Bus, Ring, Star, Tree, Line, Mesh. Available online: https://ipcisco.com/lesson/network-topologies/ (accessed on 19 February 2024).

- Starzyk, K.B.; Holden, R.R.; Fabrigar, L.R.; MacDonald, T.K. The Personal Acquaintance Measure: A Tool for Appraising One’s Acquaintance with Any Person. J. Pers. Soc. Psychol. 2006, 90, 833–847. [Google Scholar] [CrossRef]

- Zhang, G.; Zhao, S.; Liang, Z.; Li, D.; Chen, H.; Chen, X. Social Interactions With Familiar and Unfamiliar Peers in Chinese Children: Relations With Social, School, and Psychological Adjustment. Int. Perspect. Psychol. 2015, 4, 239–253. [Google Scholar] [CrossRef]

- Mishra, N.; Schreiber, R.; Stanton, I.; Tarjan, R.E. Clustering Social Networks. In Proceedings of the Algorithms and Models for the Web-Graph; Bonato, A., Chung, F.R.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 56–67. [Google Scholar]

- Smith, J.M. Evolution and the Theory of Games. In Did Darwin Get It Right? Essays on Games, Sex and Evolution; Springer: Berlin/Heidelberg, Germany, 1982; pp. 202–215. [Google Scholar]

- Johnson, D.W.; Johnson, R.T. Cooperation and Competition: Theory and Research; Interaction Book Company: Edina, MN, USA, 1989; pp. viii, 253. ISBN 0-939603-10-1. [Google Scholar]

- Hayes, A. Game Theory. Available online: https://www.investopedia.com/terms/g/gametheory.asp (accessed on 8 September 2023).

- Patricio, R.; Moreira, A.C.; Zurlo, F. Gamification in Innovation Teams. Int. J. Innov. Stud. 2022, 6, 156–168. [Google Scholar] [CrossRef]

- Morschheuser, B.; Hamari, J.; Maedche, A. Cooperation or Competition—When Do People Contribute More? A Field Experiment on Gamification of Crowdsourcing. Int. J. Hum.-Comput. Stud. 2019, 127, 7–24. [Google Scholar] [CrossRef]

- Giddens, A. The Constitution of Society: Outline of the Theory of Structuration; University of California Press: Berkeley, CA, USA, 1984. [Google Scholar]

- Smith, E.R.; Semin, G.R. Socially Situated Cognition: Cognition in Its Social Context. In Advances in Experimental Social Psychology, Vol. 36; Elsevier Academic Press: San Diego, CA, USA, 2004; pp. 53–117. ISBN 0-12-015236-3. [Google Scholar]

- Sawyer, K. Unresolved Tensions in Sociocultural Theory: Analogies with Contemporary Sociological Debates. Cult. Psychol. 2002, 8, 283–305. [Google Scholar] [CrossRef]

- Sawyer, K. Extending Sociocultural Theory to Group Creativity. Vocat. Learn. 2012, 5, 59–75. [Google Scholar] [CrossRef]

- Eteläpelto, A.; Vähäsantanen, K.; Hökkä, P.; Paloniemi, S. What Is Agency? Conceptualizing Professional Agency at Work. Educ. Res. Rev. 2013, 10, 45–65. [Google Scholar] [CrossRef]

- Paliy, I.G.; Bogdanova, O.A.; Plotnikova, T.V.; Lipchanskaya, I.V. Space and Time in the Context of Social Measurement. Eur. Res. Stud. J. 2018, XXI, 350–358. [Google Scholar] [CrossRef]

- Klitkou, A.; Bolwig, S.; Huber, A.; Ingeborgrud, L.; Pluciński, P.; Rohracher, H.; Schartinger, D.; Thiene, M.; Żuk, P. The Interconnected Dynamics of Social Practices and Their Implications for Transformative Change: A Review. Sustain. Prod. Consum. 2022, 31, 603–614. [Google Scholar] [CrossRef]

- Logan, J. Making a Place for Space: Spatial Thinking in Social Science. Annu. Rev. Sociol. 2012, 38, 507–524. [Google Scholar] [CrossRef] [PubMed]

- Abbott, A. Of Time and Space: The Contemporary Relevance of the Chicago School. Soc. Forces 1997, 75, 1149–1182. [Google Scholar] [CrossRef]

- Ijsselsteijn, W.; Baren, J.; Lanen, F. Staying in Touch: Social Presence and Connectedness through Synchronous and Asynchronous Communication Media. Smpte Motion Imaging J. 2003, 2, e928. [Google Scholar]

- Rauschnabel, P.A.; Felix, R.; Hinsch, C.; Shahab, H.; Alt, F. What Is XR? Towards a Framework for Augmented and Virtual Reality. Comput. Hum. Behav. 2022, 133, 107289. [Google Scholar] [CrossRef]

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329. [Google Scholar]

- Skarbez, R.; Smith, M.; Whitton, M.C. Revisiting Milgram and Kishino’s Reality-Virtuality Continuum. Front. Virtual Real. 2021, 2, 647997. [Google Scholar] [CrossRef]

- Tremosa, L.; Interaction Design Foundation. Beyond AR vs. VR: What Is the Difference between AR vs. MR vs. VR vs. XR. Available online: https://www.interaction-design.org/literature/article/beyond-ar-vs-vr-what-is-the-difference-between-ar-vs-mr-vs-vr-vs-xr (accessed on 1 September 2024).

- Benyon, D.; Turner, P.; Turner, S. Designing Interactive Systems: People, Activities, Contexts, Technologies; Pearson Education: London, UK, 2005. [Google Scholar]

- Schleidgen, S.; Friedrich, O.; Gerlek, S.; Assadi, G.; Seifert, J. The Concept of “Interaction” in Debates on Human–Machine Interaction. Humanit. Soc. Sci. Commun. 2023, 10, 551. [Google Scholar] [CrossRef]

- Silver, K. What Puts the Design in Interaction Design. UX Matters 2007, 3, 3–77. [Google Scholar]

- Baykal, G.E.; Van Mechelen, M.; Goksun, T.; Yantac, A.E. Designing with and for Preschoolers: A Method to Observe Tangible Interactions with Spatial Manipulatives. In Proceedings of the ACM International Conference Proceeding Series; ACM Digital Library: Trondheim, Norway, 2018; pp. 45–54. [Google Scholar]

- Bombari, D.; Schmid Mast, M.; Canadas, E.; Bachmann, M. Studying Social Interactions through Immersive Virtual Environment Technology: Virtues, Pitfalls, and Future Challenges. Front. Psychol. 2015, 6, 869. [Google Scholar] [CrossRef] [PubMed]

- Bailenson, J.N.; Blascovich, J.; Beall, A.C.; Loomis, J.M. Interpersonal Distance in Immersive Virtual Environments. Pers. Soc. Psychol. Bull. 2003, 29, 819–833. [Google Scholar] [CrossRef]

- Fox, J.; Ahn, S.J.; Janssen, J.H.; Yeykelis, L.; Segovia, K.Y.; Bailenson, J.N. Avatars Versus Agents: A Meta-Analysis Quantifying the Effect of Agency on Social Influence. Hum.–Comput. Interact. 2015, 30, 401–432. [Google Scholar] [CrossRef]

- Nass, C.; Steuer, J.; Siminoff, E. Computers Are Social Actors. In Proceedings of the Conference on Human Factors in Computing Systems-Proceedings, Boston, MA, USA, 24–28 April 1994; p. 204. [Google Scholar]

- Kim, D.Y.; Lee, H.K.; Chung, K. Avatar-Mediated Experience in the Metaverse: The Impact of Avatar Realism on User-Avatar Relationship. J. Retail. Consum. Serv. 2023, 73, 103382. [Google Scholar] [CrossRef]

- Steed, A.; Schroeder, R. Collaboration in Immersive and Non-Immersive Virtual Environments. In Immersed in Media: Telepresence Theory, Measurement & Technology; Lombard, M., Biocca, F., Freeman, J., IJsselsteijn, W., Schaevitz, R.J., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 263–282. ISBN 978-3-319-10190-3. [Google Scholar]

- Xiao, R.; Zhang, R.; Buruk, O.; Hamari, J.; Virkki, J. Toward Next Generation Mixed Reality Games: A Research Through Design Approach. Prepr. Version 1 Available Res. Sq. 2023, 28, 142. [Google Scholar] [CrossRef]

- Nilsson, N.C.; Nordahl, R.; Serafin, S. Immersion Revisited: A Review of Existing Definitions of Immersion and Their Relation to Different Theories of Presence. Hum. Technol. 2016, 12, 108–134. [Google Scholar] [CrossRef]

- Adams, E. Fundamentals of Game Design, 3rd ed.; New Riders: Hoboken, NJ, USA, 2006. [Google Scholar]

- Ryan, M.-L. Interactive Narrative, Plot Types, and Interpersonal Relations. In Proceedings of the Interactive Storytelling; Spierling, U., Szilas, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 6–13. [Google Scholar]

- Almeida, L.; Menezes, P.; Dias, J. Telepresence Social Robotics towards Co-Presence: A Review. Appl. Sci. 2022, 12, 5557. [Google Scholar] [CrossRef]

- Foglia, L.; Wilson, R.A. Embodied Cognition. WIREs Cogn. Sci. 2013, 4, 319–325. [Google Scholar] [CrossRef]

- Chao, G. Human-Computer Interaction: Process and Principles of Human-Computer Interface Design. In Proceedings of the 2009 International Conference on Computer and Automation Engineering, Bangkok, Thailand, 8–10 March 2009; pp. 230–233. [Google Scholar]

- Nie, K.; Guo, M.; Gao, Z. Enhancing Emotional Engagement in Virtual Reality (VR) Cinematic Experiences through Multi-Sensory Interaction Design. In Proceedings of the 2023 Asia Conference on Cognitive Engineering and Intelligent Interaction (CEII), Hong Kong, China, 15–16 December 2023; pp. 47–53. [Google Scholar]

- Broll, W.; Grimm, P.; Herold, R.; Reiners, D.; Cruz-Neira, C. VR/AR Output Devices. In Virtual and Augmented Reality (VR/AR): Foundations and Methods of Extended Realities (XR); Doerner, R., Broll, W., Grimm, P., Jung, B., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 149–200. ISBN 978-3-030-79062-2. [Google Scholar]

- Bordegoni, M.; Carulli, M.; Spadoni, E. Multisensory Interaction in eXtended Reality. In Prototyping User eXperience in eXtended Reality; Bordegoni, M., Carulli, M., Spadoni, E., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 49–63. ISBN 978-3-031-39683-0. [Google Scholar]

- Verbeek, P.-P. Beyond Interaction: A Short Introduction to Mediation Theory. Interactions 2015, 22, 26–31. [Google Scholar] [CrossRef]

- Ihde, D. Technology and the Lifeworld: From Garden to Earth; Indiana University Press: Bloomington, IN, USA, 1990. [Google Scholar]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Ann. Intern. Med. 2009, 151, 264–269. [Google Scholar] [CrossRef]

- Scopus Document Search. Available online: https://www.scopus.com/search/form.uri?display=basic#basic (accessed on 18 October 2023).

- Brown, K.E.; Heise, N.; Eitel, C.M.; Nelson, J.; Garbe, B.A.; Meyer, C.A.; Ivie, K.R.; Clapp, T.R. A Large-Scale, Multiplayer Virtual Reality Deployment: A Novel Approach to Distance Education in Human Anatomy. Med. Sci. Educ. 2023, 33, 409–421. [Google Scholar] [CrossRef]

- Bird, J.M.; Smart, P.A.; Harris, D.J.; Phillips, L.A.; Giannachi, G.; Vine, S.J. A Magic Leap in Tourism: Intended and Realized Experience of Head-Mounted Augmented Reality in a Museum Context. J. Travel Res. 2023, 62, 1427–1447. [Google Scholar] [CrossRef]

- Healey, J.; Wang, D.; Wigington, C.; Sun, T.; Peng, H. A Mixed-Reality System to Promote Child Engagement in Remote Intergenerational Storytelling. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 4–8 October 2021; Volume 2021, pp. 274–279. [Google Scholar] [CrossRef]

- Vella, K.; Johnson, D.; Cheng, V.W.S.; Davenport, T.; Mitchell, J.; Klarkowski, M.; Phillips, C. A Sense of Belonging: Pokémon GO and Social Connectedness. Games Cult. 2019, 14, 583–603. [Google Scholar] [CrossRef]

- Ballestin, G.; Bassano, C.; Solari, F.; Chessa, M. A Virtual Reality Game Design for Collaborative Team-Building: A Proof of Concept. In Proceedings of the UMAP ’20: 28th ACM Conference on User Modeling, Adaptation and Personalization, Genoa, Italy, 14–17 July 2020; pp. 159–162. [Google Scholar] [CrossRef]

- Cassidy, B.; Sim, G.; Robinson, D.W.; Gandy, D. A Virtual Reality Platform for Analyzing Remote Archaeological Sites. Interact. Comput. 2019, 31, 167–176. [Google Scholar] [CrossRef]

- Maloney, D.; Freeman, G.; Robb, A. A Virtual Space for All: Exploring Children’s Experience in Social Virtual Reality. In Proceedings of the CHI PLAY’20: The Annual Symposium on Computer-Human Interaction in Play, Virtual Event, Canada, 2–4 November 2020; pp. 472–483. [Google Scholar] [CrossRef]

- Koukopoulos, Z.; Koukopoulos, D. Active Visitor: Augmenting Libraries into Social Spaces. In Proceedings of the 2018 3rd Digital Heritage International Congress (DigitalHERITAGE) Held Jointly with 2018 24th International Conference on Virtual Systems & Multimedia (VSMM 2018), San Francisco, CA, USA, 26–30 October 2018. [Google Scholar] [CrossRef]

- Jung, S.; Wu, Y.; McKee, R.; Lindeman, R.W. All Shook Up: The Impact of Floor Vibration in Symmetric and Asymmetric Immersive Multi-User VR Gaming Experiences. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; pp. 737–745. [Google Scholar] [CrossRef]

- Gillespie, D.; Qin, Z.; Aish, F. An Extended Reality Collaborative Design System: In-Situ Design Reviews in Uncontrolled Environments. In Proceedings of the 2021 ACADIA, Association for Computer-Aided Design in Architecture, Conference, Calgary, AB, Canada, 3–6 November 2021. [Google Scholar]

- Echavarria, K.R.; Dibble, L.; Bracco, A.; Silverton, E.; Dixon, S. Augmented Reality (AR) Maps for Experiencing Creative Narratives of Cultural Heritage. In EUROGRAPHICS Workshop on Graphics and Cultural Heritage; The Eurographics Association: Eindhoven, The Netherlands, 2019; pp. 7–16. [Google Scholar] [CrossRef]

- Jiang, S.; Moyle, B.; Yung, R.; Tao, L.; Scott, N. Augmented Reality and the Enhancement of Memorable Tourism Experiences at Heritage Sites. Curr. Issues Tour. 2023, 26, 242–257. [Google Scholar] [CrossRef]

- Ahmadi Oloonabadi, S.; Baran, P. Augmented Reality Participatory Platform: A Novel Digital Participatory Planning Tool to Engage under-Resourced Communities in Improving Neighborhood Walkability. Cities 2023, 141, 104441. [Google Scholar] [CrossRef]

- Prandi, C.; Nisi, V.; Ceccarini, C.; Nunes, N. Augmenting Emerging Hospitality Services: A Playful Immersive Experience to Foster Interactions among Locals and Visitors. Int. J. Hum.-Comput. Interact. 2023, 39, 363–377. [Google Scholar] [CrossRef]

- Wang, Y.; Gardner, H.; Martin, C.; Adcock, M. Augmenting Sculpture with Immersive Sonification. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Christchurch, New Zealand, 12–16 March 2022; pp. 626–627. [Google Scholar] [CrossRef]

- Kohler, T.; Fueller, J.; Stieger, D.; Matzler, K. Avatar-Based Innovation: Consequences of the Virtual Co-Creation Experience. Comput. Hum. Behav. 2011, 27, 160–168. [Google Scholar] [CrossRef]

- Xu, Y.; Gandy, M.; Deen, S.; Schrank, B.; Spreen, K.; Gorbsky, M.; White, T.; Barba, E.; Radu, I.; Bolter, J.; et al. BragFish: Exploring Physical and Social Interaction in Co-Located Handheld Augmented Reality Games. In Proceedings of the 2008 International Conference on Advances in Computer Entertainment Technology, Yokohama, Japan, 3–5 December 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 276–283. [Google Scholar]

- Samayoa, A.G.; Talavera, J.; Sium, S.G.; Xie, B.; Huang, H.; Yu, L.-F. Building a Motion-Aware, Networked Do-It-Yourself Holographic Display. In Proceedings of the 2021 IEEE International Conference on Intelligent Reality (ICIR), Virtual Conference, 12–13 May 2021; pp. 39–48. [Google Scholar] [CrossRef]

- Yavo-Ayalon, S.; Joshi, S.; Zhang, Y.; Han, R.; Mahyar, N.; Ju, W. Building Community Resiliency through Immersive Communal Extended Reality (CXR). Multimodal Technol. Interact. 2023, 7, 43. [Google Scholar] [CrossRef]

- Faridan, M.; Kumari, B.; Suzuki, R. ChameleonControl: Teleoperating Real Human Surrogates through Mixed Reality Gestural Guidance for Remote Hands-on Classrooms. In Proceedings of the CHI’23: CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023. [Google Scholar] [CrossRef]

- Chung, C.-Y.; Awad, N.; Hsiao, H. Collaborative Programming Problem-Solving in Augmented Reality: Multimodal Analysis of Effectiveness and Group Collaboration. Australas. J. Educ. Technol. 2021, 37, 17–31. [Google Scholar] [CrossRef]

- Feng, Y.; Perugia, G.; Yu, S.; Barakova, E.I.; Hu, J.; Rauterberg, G.W.M. Context-Enhanced Human-Robot Interaction: Exploring the Role of System Interactivity and Multimodal Stimuli on the Engagement of People with Dementia. Int. J. Soc. Robot. 2022, 14, 807–826. [Google Scholar] [CrossRef]

- Frydenberg, M.; Andone, D. Converging Digital Literacy through Virtual Reality. In Proceedings of the 2021 IEEE Frontiers in Education Conference (FIE), Lincoln, NE, USA, 13–16 October 2021. [Google Scholar] [CrossRef]

- Neate, T.; Roper, A.; Wilson, S.; Marshall, J.; Cruice, M. CreaTable Content and Tangible Interaction in Aphasia. In Proceedings of the Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 23 April 2020; pp. 1–14. [Google Scholar]

- Spiel, K.K.; Werner, K.; Hödl, O.; Ehrenstrasser, L.; Fitzpatrick, G. Creating Community Fountains by (Re-)Designing the Digital Layer of Way-Finding Pillars. In Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services, Vienna, Austria, 4–7 September 2017. [Google Scholar] [CrossRef]

- Li, Y.; Yu, L.; Liang, H.-N. CubeMuseum: An Augmented Reality Prototype of Embodied Virtual Museum. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 4–8 October 2021; pp. 13–17. [Google Scholar] [CrossRef]

- Kim, M.; Lee, S.-H. Deictic Gesture Retargeting for Telepresence Avatars in Dissimilar Object and User Arrangements. In Proceedings of the Web3D’20: The 25th International Conference on 3D Web Technology, Virtual Event, Republic of Korea, 9–13 November 2020. [Google Scholar] [CrossRef]

- Costa, M.C.; Manso, A.; Patrício, J. Design of a Mobile Augmented Reality Platform with Game-Based Learning Purposes. Information 2020, 11, 127. [Google Scholar] [CrossRef]

- Xu, T.B.; Mostafavi, A.; Kim, B.C.; Lee, A.A.; Boot, W.; Czaja, S.; Kalantari, S. Designing Virtual Environments for Social Engagement in Older Adults: A Qualitative Multi-Site Study. In Proceedings of the CHI’23: CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023. [Google Scholar] [CrossRef]

- Oksman, V.; Kulju, M. Developing Online Illustrative and Participatory Tools for Urban Planning: Towards Open Innovation and Co-Production through Citizen Engagement. Int. J. Serv. Technol. Manag. 2017, 23, 445–464. [Google Scholar] [CrossRef]

- Siriaraya, P.; Ang, C.S. Developing Virtual Environments for Older Users: Case Studies of Virtual Environments Iteratively Developed for Older Users and People with Dementia. In Proceedings of the 2017 2nd International Conference on Information Technology (INCIT), Nakhonpathom, Thailand, 2–3 November 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Schmitt, L.; Buisine, S.; Chaboissier, J.; Aoussat, A.; Vernier, F. Dynamic Tabletop Interfaces for Increasing Creativity. Comput. Hum. Behav. 2012, 28, 1892–1901. [Google Scholar] [CrossRef]

- Pokric, B.; Krco, S.; Pokric, M.; Knezevic, P.; Jovanovic, D. Engaging Citizen Communities in Smart Cities Using IoT, Serious Gaming and Fast Markerless Augmented Reality. In Proceedings of the 2015 International Conference on Recent Advances in Internet of Things (RIoT), Singapore, 7–9 April 2015. [Google Scholar] [CrossRef]

- Erzetic, C.; Dobbs, T.; Fabbri, A.; Gardner, N.; Haeusler, M.H.; Zavoleas, Y. Enhancing User-Engagement in the Design Process through Augmented Reality Applications. Proc. Int. Conf. Educ. Res. Comput. Aided Archit. Des. Eur. 2019, 2, 423–432. [Google Scholar] [CrossRef]

- Li, J.; Van Der Spek, E.D.; Yu, X.; Hu, J.; Feijs, L. Exploring an Augmented Reality Social Learning Game for Elementary School Students. In Proceedings of the Interaction Design and Children Conference, London, UK, 21–24 June 2020; pp. 508–518. [Google Scholar] [CrossRef]

- McCaffery, J.; Miller, A.; Kennedy, S.; Dawson, T.; Allison, C.; Vermehren, A.; Lefley, C.; Strickland, K. Exploring Heritage through Time and Space Supporting Community Reflection on the Highland Clearances. In Proceedings of the 2013 Digital Heritage International Congress (DigitalHeritage), Marseille, France, 28 October–1 November 2013; Volume 1, pp. 371–378. [Google Scholar] [CrossRef]

- Kersting, M.; Steier, R.; Venville, G. Exploring Participant Engagement during an Astrophysics Virtual Reality Experience at a Science Festival. Int. J. Sci. Educ. Part B Commun. Public Engagem. 2021, 11, 17–34. [Google Scholar] [CrossRef]

- Gruber, A.; Canto, S.; Jauregi-Ondarra, K. Exploring the Use of Social Virtual Reality for Virtual Exchange. ReCALL 2023, 35, 258–273. [Google Scholar] [CrossRef]

- Gugenheimer, J.; Stemasov, E.; Sareen, H.; Rukzio, E. FaceDisplay: Towards Asymmetric Multi-User Interaction for Nomadic Virtual Reality. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–13. [Google Scholar]

- DiBenigno, M.; Kosa, M.; Johnson-Glenberg, M.C. Flow Immersive: A Multiuser, Multidimensional, Multiplatform Interactive COVID-19 Data Visualization Tool. Front. Psychol. 2021, 12, 661613. [Google Scholar] [CrossRef]

- Vets, T.; Nijs, L.; Lesaffre, M.; Moens, B.; Bressan, F.; Colpaert, P.; Lambert, P.; Van de Walle, R.; Leman, M. Gamified Music Improvisation with BilliArT: A Multimodal Installation with Balls. J. Multimodal User Interfaces 2017, 11, 25–38. [Google Scholar] [CrossRef]

- Planey, J.; Rajarathinam, R.J.; Mercier, E.; Lindgren, R. Gesture-Mediated Collaboration with Augmented Reality Headsets in a Problem-Based Astronomy Task. Int. J. Comput.-Support. Collab. Learn. 2023, 18, 259–289. [Google Scholar] [CrossRef]

- Williams, S.; Enatsky, R.; Gillcash, H.; Murphy, J.J.; Gracanin, D. Immersive Technology in the Public School Classroom: When a Class Meets. In Proceedings of the 2021 7th International Conference of the Immersive Learning Research Network (iLRN), Eureka, CA, USA, 17 May–10 June 2021. [Google Scholar] [CrossRef]

- Pereira, F.; Bermudez I Badia, S.; Jorge, C.; Da Silva Cameirao, M. Impact of Game Mode on Engagement and Social Involvement in Multi-User Serious Games with Stroke Patients. In Proceedings of the 2019 International Conference on Virtual Rehabilitation (ICVR), Tel Aviv, Israel, 21–24 July 2019. [Google Scholar] [CrossRef]

- Doukianou, S.; Daylamani-Zad, D.; O’Loingsigh, K. Implementing an Augmented Reality and Animated Infographics Application for Presentations: Effect on Audience Engagement and Efficacy of Communication. Multimed. Tools Appl. 2021, 80, 30969–30991. [Google Scholar] [CrossRef]