A Testing and Evaluation Method for the Car-Following Models of Automated Vehicles Based on Driving Simulator

Abstract

1. Introduction

2. Literature Review

2.1. AV Testing Scenario

2.2. AV Testing Methods

- (1) Simulation Testing: High-fidelity simulation platforms create realistic driving environments in which CAVs can be tested safely and under controlled conditions. These simulations replicate complex interactions and varied conditions, allowing developers to assess a CAV's safety performance and protocols [33,34,35,36]. Integrating Extended Reality (XR) creates an immersive environment [37,38,39] that deepens the understanding of how CAVs perceive and respond to safety-critical scenarios, which is crucial for identifying potential risks and ensuring robust safety measures.

- (2) Closed-Track Testing: Conducted in controlled environments that mimic real-world conditions, closed-track testing allows high-risk scenarios to be executed safely. By systematically running predefined safety scenarios, researchers can observe and measure a CAV's behavior, response times, and decision-making processes [40,41]. This method enables precise evaluation of safety performance metrics and highlights areas for improvement before progressing to public road testing, thereby mitigating the risks of real-world deployment. Notable closed-track testing facilities include Mcity (Michigan, USA) [42], Millbrook Proving Ground (Bedfordshire, UK) [43], AstaZero (Sweden) [44], and the National Intelligent Connected Vehicle Pilot Demonstration Zone (Shanghai, China) [45].

- (3) Real-World Testing: Deploying CAVs on public roads yields performance data under actual traffic conditions and reveals how well the vehicles handle real-world unpredictability. This phase is crucial for validating CAV safety in dynamic environments. By continuously monitoring and analyzing a CAV's responses to various traffic situations, developers can verify its ability to manage real-world risks and maintain high safety standards [46,47].

- (4) Corner Case Testing: Corner case testing focuses on rare but critical scenarios to ensure that CAVs can manage unexpected, high-risk situations [48,49]. Stress testing subjects CAVs to extreme conditions to evaluate their robustness and safety under the most challenging circumstances. These tests help prepare CAVs for a wide range of potential hazards, thereby enhancing their reliability and overall safety performance.

2.3. Driving Simulator Applications in AV Testing

3. Methodology

3.1. Testing Methods for AVs in Mixed Traffic Scenarios

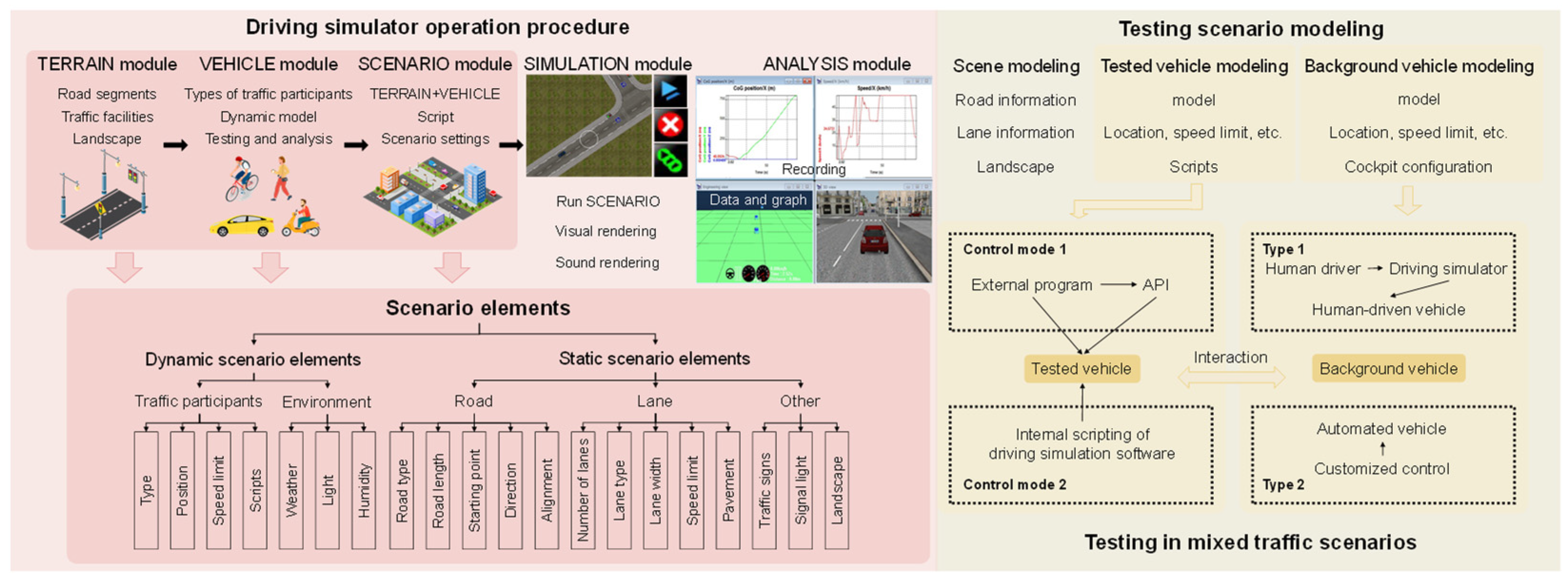

3.1.1. Framework

3.1.2. Scenario Modeling

- Scene modeling: This involves defining critical road characteristics such as road type, length, starting coordinates, direction, and alignment. Lane specifications include the number of lanes, lane types, permissible vehicle types, lane width, speed limits, and pavement materials. The modeling also includes surrounding infrastructure such as buildings, integrated traffic signage, street lights, trees, and other static objects. Environmental conditions such as weather, lighting, and air quality are adjusted to simulate various driving conditions. Together, these elements form a comprehensive and realistic testing environment that supports detailed evaluation of automated vehicle performance across diverse simulated driving scenarios.

- TV modeling: Modeling the TV is a complex process tailored to the requirements of each testing scenario. It entails selecting the TV's type, brand, model, and color and placing it in the designated driving lane at predetermined coordinates. The TV is also subject to speed constraints, typically aligned with the lane's speed limit. The TV's planning and decision-making model is structured as a three-tier system: global path planning generates an initial path, behavioral decision-making responds to environmental factors, and trajectory planning produces a trajectory that satisfies specific constraints. Software scripts then control the TV, so that the model reflects the complexity of real-world driving and responds dynamically to the elements introduced in each testing scenario.

- BV modeling: Once the static scenario elements are set up, attention shifts to the dynamic elements that are critical for assessing AV driving capabilities. These dynamic elements create varied conditions for the TV, ensuring comprehensive testing across diverse contexts, and the simulation data they generate support a holistic evaluation of model performance. Within these dynamic scenarios, the behavior of the BVs strongly influences the TV. Because the BVs are controlled by human drivers through the driving simulator, they introduce realistic variation in driving judgment and behavior.
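The three modeling layers above can be summarized as a small configuration object. The sketch below is a hypothetical Python representation, not the SCANeR Studio scenario format or API: the class and field names (`SceneSpec`, `VehicleSpec`, `TestScenario`, `make_car_following_scenario`) are invented for illustration, while the default values are taken from the road, lane, and environment table and from the TV/BV settings described in Section 4.2.

```python
from dataclasses import dataclass, field


@dataclass
class SceneSpec:
    """Static scene parameters (defaults taken from the road/lane/environment table)."""
    road_type: str = "urban expressway"
    road_length_m: float = 1000.0
    lanes: str = "two-way two-lane"
    lane_width_m: float = 3.75
    lane_speed_limit_kmh: float = 120.0
    alignment: str = "straight"
    # Share of each weather condition; only "sunny" is non-zero in this study.
    weather: dict = field(default_factory=lambda: {
        "sunny": 0.8, "rain": 0.0, "snow": 0.0, "clouds": 0.0, "fog": 0.0})
    lighting: float = 0.8


@dataclass
class VehicleSpec:
    """One vehicle placed in the scene (hypothetical field names)."""
    model: str
    start_position_m: float
    speed_limit_kmh: float
    controller: str  # "script:<car-following model>" for the TV, "human" for the BV


@dataclass
class TestScenario:
    scene: SceneSpec
    tv: VehicleSpec
    bv: VehicleSpec

    def initial_gap_m(self) -> float:
        """Initial longitudinal offset of the BV ahead of the TV."""
        return self.bv.start_position_m - self.tv.start_position_m


def make_car_following_scenario(speed_limit_kmh: float, tv_model: str = "Gipps") -> TestScenario:
    """Build the car-following scenario for one TV speed limit."""
    scene = SceneSpec()
    if speed_limit_kmh > scene.lane_speed_limit_kmh:
        raise ValueError("TV speed limit exceeds the lane speed limit")
    tv = VehicleSpec("Citroen_C3_Green", 0.0, speed_limit_kmh, f"script:{tv_model}")
    # The BV starts 20 m ahead of the TV and shares its speed limit.
    bv = VehicleSpec("Citroen_C3_Red", 20.0, speed_limit_kmh, "human")
    return TestScenario(scene, tv, bv)
```

For example, `[make_car_following_scenario(v, m) for m in ("GM", "Gipps", "Fuzzy") for v in (60, 90, 120)]` would enumerate one scenario per model and speed limit. Keeping the scene separate from the vehicles mirrors the split between scene modeling and TV/BV modeling.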

3.1.3. Testing Method of AV Decision and Planning Model

3.2. Evaluation Method

3.2.1. Evaluation Indicator

- (1) Qualitative indicators. Qualitative indicators evaluate the safety of the TV, including its compliance with regulations and rules and its adherence to the behavioral attributes expected of the test item. They comprise:

  - Safety indicator Q.
  - Distance D2 from the vehicle contour to the edge of the roadway.
  - Distance D3 from the vehicle contour to the roadway boundary line.
  - Behavior attribute indicator G.

- (2) Quantitative indicators. Quantitative indicators evaluate the comfort of the TV and the efficiency with which it completes the expected behavior of the test item. They comprise:

  - Expected behavior completion efficiency u.
  - Comfort indicator j.

3.2.2. Evaluation Criteria

- (1) Safety evaluation. The actual distance between the TV and the BV must be no shorter than the theoretical safe distance, namely,

  $$Q \geq 0$$

- (2) Violation evaluation. The vehicle contour must cross neither the edge of the roadway nor the roadway boundary line, namely,

  $$D_2 > 0, \quad D_3 > 0 \tag{10}$$

- (3) Behavioral attribute evaluation. The acceptable range of the behavioral attribute indicator is determined from the experimental conditions. This range serves as the benchmark for calculating efficiency, and the value measured during testing must fall within it.

- (4) Efficiency evaluation. With a total score of w1, the efficiency score R1 is obtained proportionally as follows:

  $$R_1 = (1 - u)\,w_1$$

- (5) Comfort evaluation. With a total score of w2, the longitudinal and lateral comfort indicator scores each account for half. Proportionally, the longitudinal comfort score Rx, the lateral comfort score Ry, and the final score R2 are obtained as

  $$R_x = (1 - j_x)\,\frac{w_2}{2}, \qquad R_y = (1 - j_y)\,\frac{w_2}{2}, \qquad R_2 = R_x + R_y$$
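The criteria above can be sketched as scoring functions. This is a minimal sketch under stated assumptions: it uses the proportional forms R1 = (1 − u)w1, Rx = (1 − jx)w2/2 and Ry = (1 − jy)w2/2, which reduce to the formulas in the evaluation table when w1 = w2 = 20, and it assumes that r in the unqualified score R = 15 × r counts the qualitative items that passed (safety, car-following characteristics, D2, D3). The function names are hypothetical.

```python
from typing import List, Tuple


def efficiency_score(u: float, w1: float) -> float:
    """R1 = (1 - u) * w1: the smaller the average excess gap u, the higher the score."""
    return (1.0 - u) * w1


def comfort_scores(jx: float, jy: float, w2: float) -> Tuple[float, float, float]:
    """Return (Rx, Ry, R2); longitudinal and lateral comfort each get half of w2."""
    rx = (1.0 - jx) * w2 / 2.0
    ry = (1.0 - jy) * w2 / 2.0
    return rx, ry, rx + ry


def total_score(passed: List[bool], r1: float, r2: float, item_points: float = 15.0) -> float:
    """Qualified runs earn all qualitative points plus R1 + R2; otherwise only the
    points of the qualitative items that passed (assumed meaning of r)."""
    if all(passed):
        return item_points * len(passed) + r1 + r2
    return item_points * sum(passed)
```

With four qualitative items at 15 points each, a fully qualified run scores 60 + R1 + R2 (at most 100 when w1 = w2 = 20), whereas a run that fails one item scores 15 × 3 = 45.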

4. Method Verification

4.1. Model under Testing

- (1) GM model. Developed in the late 1950s, the GM model is characterized by its simplicity and clear physical interpretation [62]. This pioneering model, significant in early research on car-following dynamics, is derived from driving dynamics. It holds that the "reaction", expressed as the acceleration or deceleration of the following vehicle, is the product of "sensitivity" and "stimulus". Here, the stimulus is the relative speed between the leading and following vehicles, while the form of the sensitivity term differs among versions of the model. The general formula is

  $$a_{n+1}(t+T) = c\,\frac{\left[v_{n+1}(t+T)\right]^{m}}{\left[\Delta x(t)\right]^{l}}\,\Delta v(t)$$

  where $a_{n+1}(t+T)$ denotes the acceleration of vehicle n + 1 at t + T, $v_{n+1}(t+T)$ denotes the speed of vehicle n + 1 at t + T, $\Delta v(t)$ denotes the speed difference between vehicle n and vehicle n + 1 at t, $\Delta x(t)$ denotes the distance between vehicle n and vehicle n + 1 at t, T denotes the reaction time, and c, m, l are constants.

- (2) Gipps model. Derived from the safe-distance approach, the Gipps car-following model [63] uses classical Newtonian laws of motion to determine a specific following distance, instead of the stimulus–response relationship of the GM model. The model computes a safe speed for the TV relative to the vehicle ahead (the BV) by imposing limits on driver and vehicle performance. These limits guide the TV toward a speed at which it can stop safely, without rear-ending the preceding vehicle, if that vehicle brakes suddenly. In the general form given by Gipps [63], the model is

  $$v_n(t+\tau) = \min\left\{ v_n(t) + 2.5\,a_n\tau\left(1-\frac{v_n(t)}{V_n}\right)\sqrt{0.025+\frac{v_n(t)}{V_n}},\; b_n\left(\frac{\tau}{2}+\theta\right) + \sqrt{b_n^{2}\left(\frac{\tau}{2}+\theta\right)^{2} - b_n\left[2\left(x_{n-1}(t)-S_{n-1}-x_n(t)\right) - v_n(t)\,\tau - \frac{v_{n-1}^{2}(t)}{b_{n-1}}\right]} \right\}$$

  where $v_n(t)$ denotes the speed of vehicle n at t, $a_n$ denotes the maximum acceleration of vehicle n, $V_n$ denotes the speed limit of vehicle n in the current design scenario, $b_n$ and $b_{n-1}$ denote the maximum decelerations of vehicle n and of the preceding vehicle n − 1 (negative values, following Gipps's sign convention), $x_n(t)$ and $x_{n-1}(t)$ denote the positions of vehicles n and n − 1 at t, $S_{n-1}$ denotes the effective length of vehicle n − 1, τ denotes the reaction time of the driver of vehicle n, and θ denotes the additional delay this driver allows to ensure safety.

- (3) Fuzzy Reasoning model. First proposed in [64], the Fuzzy Reasoning model analyzes driving behavior by modeling the logical reasoning a driver applies when deciding future actions. Like other behavioral models, it fundamentally describes the relationship between stimulus and response. Its distinctive characteristic is the use of fuzzy sets to handle inputs: these partially overlapping fuzzy sets express the degree of membership of each input variable. The mathematical formulation of the model is presented below.

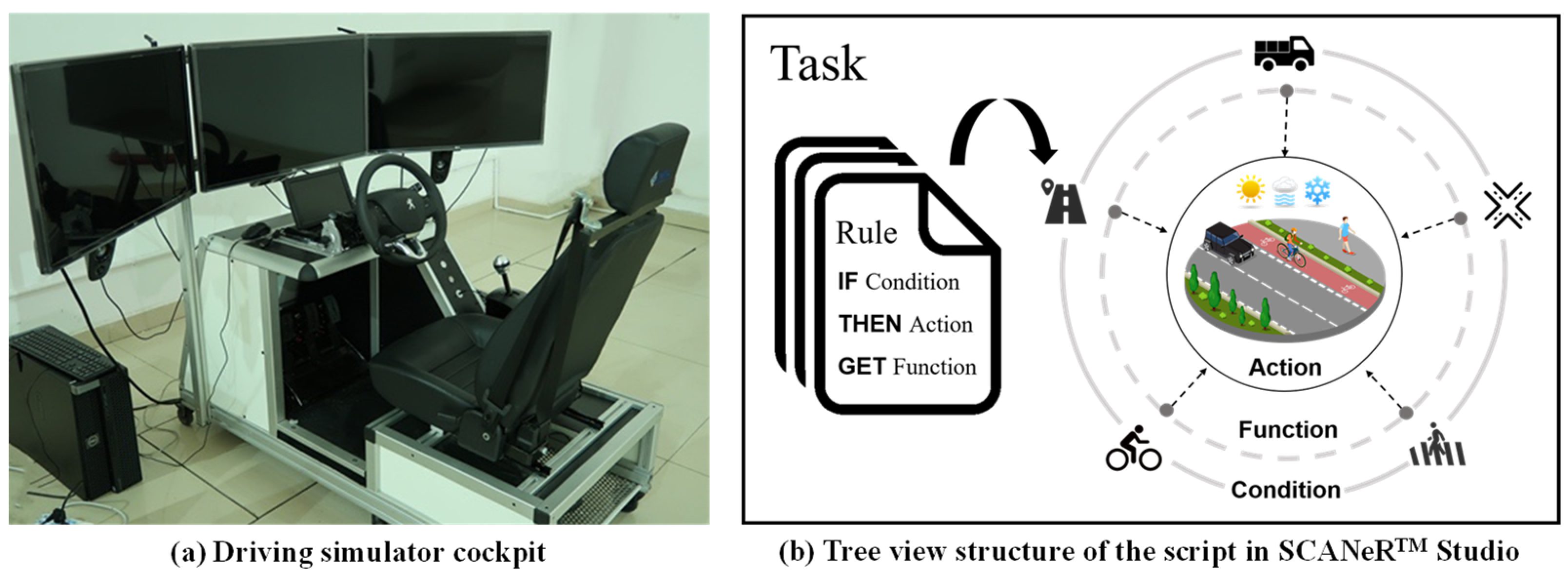

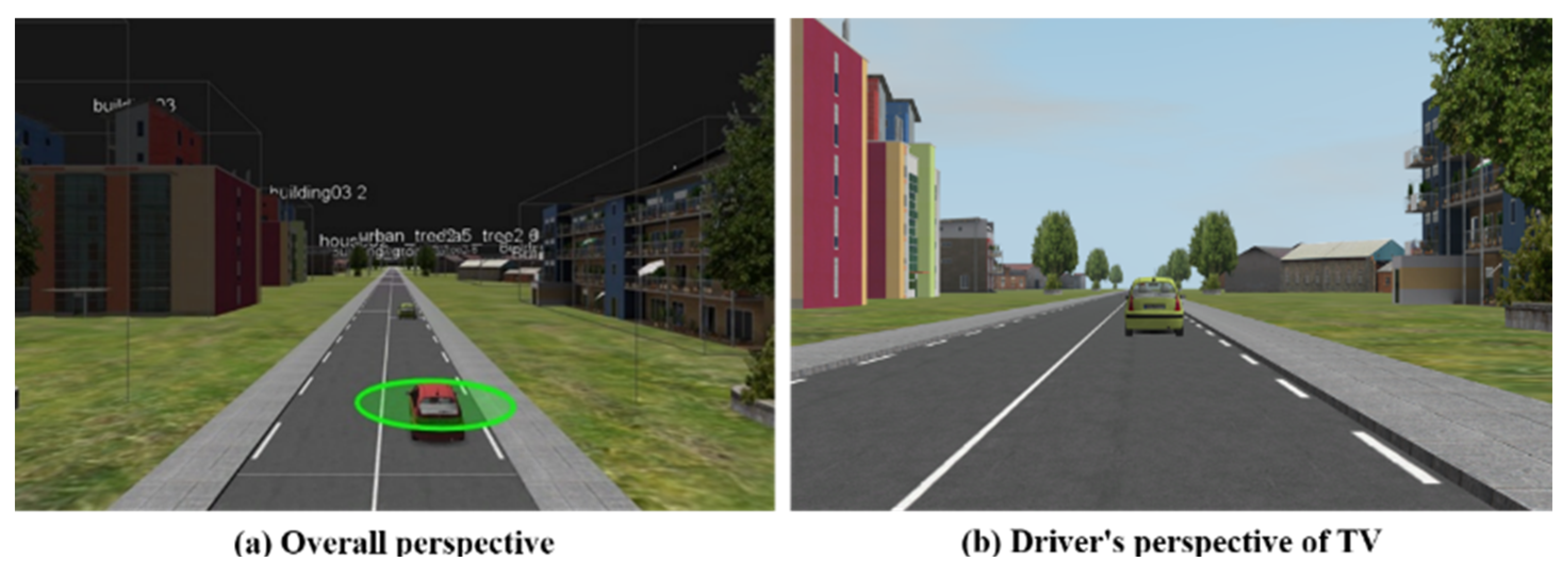
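For readers who want to script the TV controllers, the GM and Gipps formulas can be written as plain Python functions. This is a sketch under stated assumptions: decelerations are passed as positive magnitudes (so the signs differ from Gipps's negative convention), θ defaults to τ/2 as in Gipps's paper, and the function and parameter names are hypothetical rather than SCANeR Studio script calls. The Fuzzy Reasoning model is omitted because its membership functions and rule base appear only in the figures.

```python
import math
from typing import Optional


def gm_acceleration(v_follower: float, dv: float, dx: float,
                    c: float, m: float, l: float) -> float:
    """General GM (Gazis-Herman-Rothery) response a_{n+1}(t+T).

    v_follower: speed of vehicle n+1 at t+T; dv = v_n(t) - v_{n+1}(t);
    dx = x_n(t) - x_{n+1}(t), the spacing to the leader.
    """
    if dx <= 0:
        raise ValueError("spacing dx must be positive")
    return c * v_follower ** m * dv / dx ** l


def gipps_speed(v: float, v_lead: float, gap: float, a_max: float, v_desired: float,
                b_max: float, b_lead: float, tau: float,
                theta: Optional[float] = None) -> float:
    """Speed of vehicle n at t + tau under the Gipps (1981) model.

    b_max (vehicle n) and b_lead (vehicle n-1) are positive deceleration magnitudes;
    Gipps writes them as negative numbers, which flips the signs below.
    gap = x_{n-1}(t) - S_{n-1} - x_n(t).
    """
    if theta is None:
        theta = tau / 2.0  # Gipps's own choice of extra safety delay
    # Free-flow branch: accelerate towards the desired (limit) speed V_n.
    ratio = v / v_desired
    v_free = v + 2.5 * a_max * tau * (1.0 - ratio) * math.sqrt(0.025 + ratio)
    # Braking branch: highest speed that still allows a safe stop behind the leader.
    h = tau / 2.0 + theta
    disc = (b_max * h) ** 2 + b_max * (2.0 * gap - v * tau + v_lead ** 2 / b_lead)
    v_safe = -b_max * h + math.sqrt(max(disc, 0.0))
    return max(0.0, min(v_free, v_safe))
```

In a simulation loop, `gm_acceleration` would be integrated once per step with a delay of T, while `gipps_speed` directly returns the next speed. Taking the minimum of the free-flow and braking branches is what keeps the Gipps follower able to stop behind a braking leader.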

4.2. Mixed Traffic Scenario Construction

- TV model. The vehicle selected as the TV in SCANeR Studio is the "Citroen_C3_Green", positioned at the starting point of the road. The speed limits used in this study are 60 km/h, 90 km/h, and 120 km/h. The TV is controlled by custom scripts that implement each of the car-following models described above.

- BV model. The vehicle designated as the background vehicle (BV) in SCANeR Studio is the "Citroen_C3_Red". The BV is placed 20 m ahead of the TV and is controlled by a human driver using the driving simulator. Each run follows this procedure: (1) verify that the driving simulator equipment functions properly; (2) set the speed limit of the TV; (3) set the BV's speed limit to match that of the TV; (4) start the BV and accelerate gradually to the speed limit according to the driver's own habits, with irregular accelerations and decelerations permitted along the way; (5) near the end of the road (approximately 50 m from the endpoint), brake hard to bring the BV to a stop; (6) terminate the simulation, which ends the test. The BV travels approximately 980 m in total during each run. Before the formal experiment, preliminary trials were conducted in which each participant's session was limited to 30 min, to mitigate potential discomfort or dizziness; the successful completion of these trials indicated that the experiment duration was generally tolerable for participants.
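When no human driver is available, for instance while debugging a TV script, steps (4) and (5) of the BV procedure can be approximated by a scripted speed profile. The sketch below is hypothetical: the acceleration, jitter, and emergency deceleration values are illustrative assumptions rather than measurements from the study, and a scripted profile cannot reproduce the judgment variations that motivate using human-driven BVs.

```python
import random


def bv_speed_profile(v_limit_kmh: float, road_length: float = 1000.0, start: float = 20.0,
                     accel: float = 1.5, emergency_decel: float = 7.0,
                     brake_margin: float = 50.0, jitter: float = 0.5,
                     dt: float = 0.1, seed: int = 0) -> list:
    """Scripted, hypothetical stand-in for the human-driven BV.

    Returns a list of (time s, position m, speed m/s) samples.
    """
    rng = random.Random(seed)  # seeded so the "irregular" phase is reproducible
    v_limit = v_limit_kmh / 3.6
    t, x, v = 0.0, start, 0.0
    samples = [(t, x, v)]
    braking = False
    while not (braking and v == 0.0):
        if x >= road_length - brake_margin:
            braking = True  # step (5): emergency stop near the end of the road
        if braking:
            a = -emergency_decel
        elif v < 0.9 * v_limit:
            a = accel  # step (4): gradual acceleration towards the limit
        else:
            a = rng.uniform(-jitter, jitter)  # irregular speed changes near the limit
        v = min(max(v + a * dt, 0.0), v_limit)
        x += v * dt
        t += dt
        samples.append((t, x, v))
    return samples
```

Seeding `random.Random` makes the irregular phase reproducible, which is convenient when comparing the three car-following models against the same leader trajectory.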

4.3. Evaluation Criteria for Car-Following Testing

- (1) Safety evaluation. The theoretical safe distance Gapsafe of the TV is computed from the following quantities: b0, the maximum deceleration of the BV; b1, the maximum deceleration of the TV; τ1, the reaction time of the TV's driver; v0(t), the speed of the BV at time t; and v1(t), the speed of the TV at time t.

- (2) Violation evaluation. The total score for this item is 30 points. If, as required by Formula (10), the vehicle contour crosses neither the edge of the roadway nor the roadway boundary line, the evaluation is qualified: D2 > 0 earns 15 points, and D3 > 0 earns a further 15 points.

- (3) Car-following characteristic evaluation. In the car-following test, the car-following characteristic indicator G is the difference between the actual inter-vehicle distance D1 and the theoretical safe distance Gapsafe, that is, G = D1 − Gapsafe.

- (4) Car-following efficiency evaluation. The efficiency at each sampling point is the difference between D1 and Gapsafe divided by 0.2 times Gapsafe, and the car-following efficiency indicator u is its average over all sampling points:

  $$u_t = \frac{D_1(t) - Gap_{safe}(t)}{0.2\,Gap_{safe}(t)}, \qquad u = \frac{1}{n}\sum_{t=1}^{n} u_t$$

  where $u_t$ denotes the efficiency at sampling point t during the run, $D_1(t)$ denotes the actual distance between the two vehicles at point t, $Gap_{safe}(t)$ denotes the theoretical safe distance at point t, u denotes the average efficiency, and n is the number of sampling points.

- (5) Comfort evaluation. The total score for comfort is 20 points, of which the longitudinal and lateral comfort indicator scores each account for 10 points. Proportionally, the longitudinal comfort score Rx, the lateral comfort score Ry, and the final score R2 are obtained, so that R2 = Rx + Ry = (2 − jx − jy) × 10.
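The efficiency and qualification checks above can be computed from logged time series of D1, v0 and v1. Because the Gapsafe formula itself is not reproduced in this text, the sketch assumes a common kinematic form, Gapsafe = v1τ1 + v1²/(2b1) − v0²/(2b0) clipped at zero, and it assumes Q is the worst-case margin min(D1 − Gapsafe); both are assumptions, and the function names are hypothetical. Note that the band 0 < G < 0.2 Gapsafe is equivalent to 0 < u_t < 1, which keeps R1 = (1 − u) × 20 between 0 and 20.

```python
from statistics import fmean


def gap_safe(v_tv: float, v_bv: float, tau_tv: float, b_tv: float, b_bv: float) -> float:
    """ASSUMED kinematic safe distance: reaction distance plus the difference in
    braking distances, clipped at zero. Not necessarily the paper's exact formula."""
    return max(v_tv * tau_tv + v_tv ** 2 / (2 * b_tv) - v_bv ** 2 / (2 * b_bv), 0.0)


def car_following_metrics(d1, v_tv, v_bv, tau_tv: float, b_tv: float, b_bv: float) -> dict:
    """Per-sample G and u_t, their summaries, and R1 = (1 - u) * 20."""
    margins, u_t, in_band = [], [], True
    for d, v1, v0 in zip(d1, v_tv, v_bv):
        gs = gap_safe(v1, v0, tau_tv, b_tv, b_bv)
        g = d - gs  # G at this sample: actual gap D1 minus Gap_safe
        margins.append(g)
        if gs > 0:  # u_t is undefined when Gap_safe = 0 (e.g. both vehicles stopped)
            in_band = in_band and (0 < g < 0.2 * gs)
            u_t.append(g / (0.2 * gs))
    u = fmean(u_t) if u_t else 0.0
    return {
        "Q": min(margins),      # assumed: worst-case margin, so Q >= 0 means "safe"
        "G_in_band": in_band,   # 0 < G < 0.2 Gap_safe at every sample with Gap_safe > 0
        "u": u,
        "R1": (1.0 - u) * 20.0,
    }
```

For example, two samples with D1 = 22 m and 23 m at v0 = v1 = 20 m/s, τ1 = 1 s and b0 = b1 = 6 m/s² give Gapsafe = 20 m, u = 0.625 and R1 = 7.5 under these assumptions.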

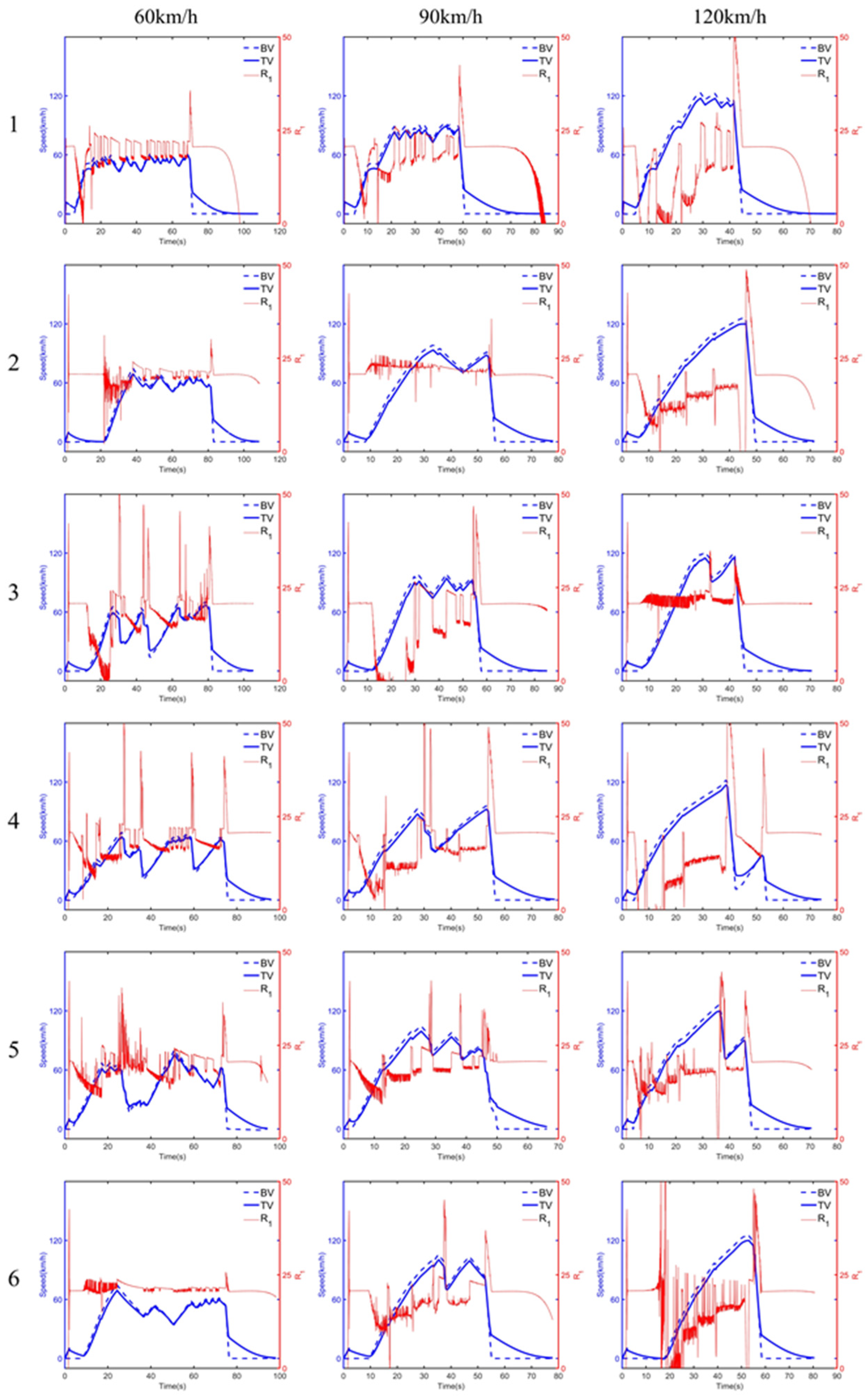

4.4. Results

4.5. Limitations and Outlook

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Liu, W.; Hua, M.; Deng, Z.; Meng, Z.; Huang, Y.; Hu, C.; Song, S.; Gao, L.; Liu, C.; Shuai, B. A Systematic Survey of Control Techniques and Applications in Connected and Automated Vehicles. IEEE Internet Things J. 2023, 10, 21892–21916.

- Shi, H.; Zhou, Y.; Wang, X.; Fu, S.; Gong, S.; Ran, B. A Deep Reinforcement Learning-based Distributed Connected Automated Vehicle Control under Communication Failure. Comput. Aided Civ. Infrastruct. Eng. 2022, 37, 2033–2051.

- Ren, R.; Li, H.; Han, T.; Tian, C.; Zhang, C.; Zhang, J.; Proctor, R.W.; Chen, Y.; Feng, Y. Vehicle Crash Simulations for Safety: Introduction of Connected and Automated Vehicles on the Roadways. Accid. Anal. Prev. 2023, 186, 107021.

- Sohrabi, S.; Khodadadi, A.; Mousavi, S.M.; Dadashova, B.; Lord, D. Quantifying the Automated Vehicle Safety Performance: A Scoping Review of the Literature, Evaluation of Methods, and Directions for Future Research. Accid. Anal. Prev. 2021, 152, 106003.

- Wang, L.; Zhong, H.; Ma, W.; Abdel-Aty, M.; Park, J. How Many Crashes Can Connected Vehicle and Automated Vehicle Technologies Prevent: A Meta-Analysis. Accid. Anal. Prev. 2020, 136, 105299.

- Dong, C.; Wang, H.; Li, Y.; Shi, X.; Ni, D.; Wang, W. Application of Machine Learning Algorithms in Lane-Changing Model for Intelligent Vehicles Exiting to off-Ramp. Transp. A Transp. Sci. 2021, 17, 124–150.

- National Highway Traffic Safety Administration. Summary Report: Standing General Order on Crash Reporting for Level 2 Advanced Driver Assistance Systems; US Department of Transportation: Washington, DC, USA, 2022.

- Chougule, A.; Chamola, V.; Sam, A.; Yu, F.R.; Sikdar, B. A Comprehensive Review on Limitations of Autonomous Driving and Its Impact on Accidents and Collisions. IEEE Open J. Veh. Technol. 2023, 5, 142–161.

- Feng, S.; Yan, X.; Sun, H.; Feng, Y.; Liu, H.X. Intelligent Driving Intelligence Test for Autonomous Vehicles with Naturalistic and Adversarial Environment. Nat. Commun. 2021, 12, 748.

- De Gelder, E.; Op Den Camp, O. How Certain Are We That Our Automated Driving System Is Safe? Traffic Inj. Prev. 2023, 24, S131–S140.

- Wei, S.; Shao, M. Existence of Connected and Autonomous Vehicles in Mixed Traffic: Impacts on Safety and Environment. Traffic Inj. Prev. 2024, 25, 390–399.

- Huang, W.; Wang, K.; Lv, Y.; Zhu, F. Autonomous Vehicles Testing Methods Review. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 163–168.

- Schilling, R.; Schultz, T. Validation of Automated Driving Functions. In Simulation and Testing for Vehicle Technology; Gühmann, C., Riese, J., Von Rüden, K., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 377–381. ISBN 978-3-319-32344-2.

- Cao, L.; Feng, X.; Liu, J.; Zhou, G. Automatic Generation System for Autonomous Driving Simulation Scenarios Based on PreScan. Appl. Sci. 2024, 14, 1354.

- Zhang, S.; Zhou, X.; Chen, Q.; Yang, X.; Zhou, H.; Luo, L.; Luo, Y. Carsim-Based Simulation Study on the Performances of Raised Speed Deceleration Facilities under Different Profiles. Traffic Inj. Prev. 2024, 25, 810–818.

- Lauer, A.R. The Psychology of Driving: Factors of Traffic Enforcement; National Academies: Washington, DC, USA, 1960.

- Comte, S.L.; Jamson, A.H. Traditional and Innovative Speed-Reducing Measures for Curves: An Investigation of Driver Behaviour Using a Driving Simulator. Saf. Sci. 2000, 36, 137–150.

- Abe, G.; Richardson, J. The Influence of Alarm Timing on Braking Response and Driver Trust in Low Speed Driving. Saf. Sci. 2005, 43, 639–654.

- Lin, T.-W.; Hwang, S.-L.; Green, P.A. Effects of Time-Gap Settings of Adaptive Cruise Control (ACC) on Driving Performance and Subjective Acceptance in a Bus Driving Simulator. Saf. Sci. 2009, 47, 620–625.

- Choudhary, P.; Velaga, N.R. Effects of Phone Use on Driving Performance: A Comparative Analysis of Young and Professional Drivers. Saf. Sci. 2019, 111, 179–187.

- Eriksson, A.; Stanton, N.A. Takeover Time in Highly Automated Vehicles: Noncritical Transitions to and from Manual Control. Hum. Factors 2017, 59, 689–705.

- Saifuzzaman, M.; Zheng, Z. Incorporating Human-Factors in Car-Following Models: A Review of Recent Developments and Research Needs. Transp. Res. Part C Emerg. Technol. 2014, 48, 379–403.

- Schieben, A.; Heesen, M.; Schindler, J.; Kelsch, J.; Flemisch, F. The Theater-System Technique: Agile Designing and Testing of System Behavior and Interaction, Applied to Highly Automated Vehicles. In Proceedings of the 1st International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Essen, Germany, 21–22 September 2009; ACM: New York, NY, USA, 2009; pp. 43–46.

- Elrofai, H.; Worm, D.; Op Den Camp, O. Scenario Identification for Validation of Automated Driving Functions. In Advanced Microsystems for Automotive Applications 2016; Schulze, T., Müller, B., Meyer, G., Eds.; Lecture Notes in Mobility; Springer International Publishing: Cham, Switzerland, 2016; pp. 153–163. ISBN 978-3-319-44765-0.

- Feng, S.; Feng, Y.; Yu, C.; Zhang, Y.; Liu, H.X. Testing Scenario Library Generation for Connected and Automated Vehicles, Part I: Methodology. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1573–1582.

- Feng, S.; Feng, Y.; Sun, H.; Zhang, Y.; Liu, H.X. Testing Scenario Library Generation for Connected and Automated Vehicles: An Adaptive Framework. IEEE Trans. Intell. Transp. Syst. 2022, 23, 1213–1222.

- Feng, S.; Feng, Y.; Yan, X.; Shen, S.; Xu, S.; Liu, H.X. Safety Assessment of Highly Automated Driving Systems in Test Tracks: A New Framework. Accid. Anal. Prev. 2020, 144, 105664.

- Feng, S.; Feng, Y.; Sun, H.; Bao, S.; Zhang, Y.; Liu, H.X. Testing Scenario Library Generation for Connected and Automated Vehicles, Part II: Case Studies. IEEE Trans. Intell. Transp. Syst. 2020, 22, 5635–5647.

- Fellner, A.; Krenn, W.; Schlick, R.; Tarrach, T.; Weissenbacher, G. Model-Based, Mutation-Driven Test-Case Generation Via Heuristic-Guided Branching Search. ACM Trans. Embed. Comput. Syst. 2019, 18, 1–28.

- Rocklage, E.; Kraft, H.; Karatas, A.; Seewig, J. Automated Scenario Generation for Regression Testing of Autonomous Vehicles. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 476–483.

- Gao, F.; Duan, J.; He, Y.; Wang, Z. A Test Scenario Automatic Generation Strategy for Intelligent Driving Systems. Math. Probl. Eng. 2019, 2019, 3737486.

- Zhao, D.; Huang, X.; Peng, H.; Lam, H.; LeBlanc, D.J. Accelerated Evaluation of Automated Vehicles in Car-Following Maneuvers. IEEE Trans. Intell. Transp. Syst. 2018, 19, 733–744.

- Donà, R.; Ciuffo, B. Virtual Testing of Automated Driving Systems. A Survey on Validation Methods. IEEE Access 2022, 10, 24349–24367.

- Fadaie, J. The State of Modeling, Simulation, and Data Utilization within Industry: An Autonomous Vehicles Perspective. arXiv 2019, arXiv:1910.06075.

- Li, Y.; Yuan, W.; Zhang, S.; Yan, W.; Shen, Q.; Wang, C.; Yang, M. Choose Your Simulator Wisely: A Review on Open-Source Simulators for Autonomous Driving. IEEE Trans. Intell. Veh. 2024, 9, 4861–4876.

- Zhong, Z.; Tang, Y.; Zhou, Y.; Neves, V.d.O.; Liu, Y.; Ray, B. A Survey on Scenario-Based Testing for Automated Driving Systems in High-Fidelity Simulation. arXiv 2021, arXiv:2112.00964.

- Gafert, M.; Mirnig, A.G.; Fröhlich, P.; Tscheligi, M. TeleOperationStation: XR-Exploration of User Interfaces for Remote Automated Vehicle Operation. In Proceedings of the CHI Conference on Human Factors in Computing Systems Extended Abstracts, New Orleans, LA, USA, 29 April–5 May 2022; ACM: New York, NY, USA, 2022; pp. 1–4.

- Goedicke, D.; Bremers, A.W.D.; Lee, S.; Bu, F.; Yasuda, H.; Ju, W. XR-OOM: MiXed Reality Driving Simulation with Real Cars for Research and Design. In Proceedings of the CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; ACM: New York, NY, USA, 2022; pp. 1–13.

- Kim, G.; Yeo, D.; Jo, T.; Rus, D.; Kim, S. What and When to Explain?: On-Road Evaluation of Explanations in Highly Automated Vehicles. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Cancún, Mexico, 8–12 October 2023; IMWUT: Cancún, Mexico, 2023; Volume 7, pp. 1–26.

- Di, X.; Shi, R. A Survey on Autonomous Vehicle Control in the Era of Mixed-Autonomy: From Physics-Based to AI-Guided Driving Policy Learning. Transp. Res. Part C Emerg. Technol. 2021, 125, 103008.

- Mohammad Miqdady, T.F. Studying the Safety Impact of Sharing Different Levels of Connected and Automated Vehicles Using Simulation-Based Surrogate Safety Measures. Ph.D. Thesis, Universidad de Granada, Granada, Spain, 2023.

- Briefs, U. Mcity Grand Opening. Res. Rev. 2015, 46, 1–3.

- Connolly, C. Instrumentation Used in Vehicle Safety Testing at Millbrook Proving Ground Ltd. Sens. Rev. 2007, 27, 91–98.

- Jacobson, J.; Eriksson, H.; Janevik, P.; Andersson, H. How Is AstaZero Designed and Equipped for Active Safety Testing? In Proceedings of the 24th International Technical Conference on the Enhanced Safety of Vehicles (ESV) National Highway Traffic Safety Administration, Gothenburg, Sweden, 8–11 June 2015.

- Feng, Q.; Kang, K.; Ding, Q.; Yang, X.; Wu, S.; Zhang, F. Study on Construction Status and Development Suggestions of Intelligent Connected Vehicle Test Area in China. Int. J. Glob. Econ. Manag. 2024, 3, 264–273.

- Fremont, D.J.; Kim, E.; Pant, Y.V.; Seshia, S.A.; Acharya, A.; Bruso, X.; Wells, P.; Lemke, S.; Lu, Q.; Mehta, S. Formal Scenario-Based Testing of Autonomous Vehicles: From Simulation to the Real World. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–8.

- Vishnukumar, H.J.; Butting, B.; Müller, C.; Sax, E. Machine Learning and Deep Neural Network—Artificial Intelligence Core for Lab and Real-World Test and Validation for ADAS and Autonomous Vehicles: AI for Efficient and Quality Test and Validation. In Proceedings of the 2017 Intelligent Systems Conference (IntelliSys), London, UK, 7–8 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 714–721.

- Tian, H.; Reddy, K.; Feng, Y.; Quddus, M.; Demiris, Y.; Angeloudis, P. Enhancing Autonomous Vehicle Training with Language Model Integration and Critical Scenario Generation. arXiv 2024, arXiv:2404.08570.

- Zhang, X.; Tao, J.; Tan, K.; Törngren, M.; Sánchez, J.M.G.; Ramli, M.R.; Tao, X.; Gyllenhammar, M.; Wotawa, F.; Mohan, N.; et al. Finding Critical Scenarios for Automated Driving Systems: A Systematic Mapping Study. IEEE Trans. Softw. Eng. 2023, 49, 991–1026.

- Ma, J.; Zhou, F.; Huang, Z.; Melson, C.L.; James, R.; Zhang, X. Hardware-in-the-Loop Testing of Connected and Automated Vehicle Applications: A Use Case for Queue-Aware Signalized Intersection Approach and Departure. Transp. Res. Rec. 2018, 2672, 36–46.

- Koppel, C.; Van Doornik, J.; Petermeijer, B.; Abbink, D. Investigation of the Lane Change Behavior in a Driving Simulator. ATZ Worldw. 2019, 121, 62–67.

- Suzuki, H.; Wakabayashi, S.; Marumo, Y. Intent Inference of Deceleration Maneuvers and Its Safety Impact Evaluation by Driving Simulator Experiments. J. Traffic Logist. Eng. 2019, 7, 28–34.

- Mohajer, N.; Asadi, H.; Nahavandi, S.; Lim, C.P. Evaluation of the Path Tracking Performance of Autonomous Vehicles Using the Universal Motion Simulator. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2115–2121.

- Manawadu, U.; Ishikawa, M.; Kamezaki, M.; Sugano, S. Analysis of Individual Driving Experience in Autonomous and Human-Driven Vehicles Using a Driving Simulator. In Proceedings of the 2015 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Busan, Republic of Korea, 7–11 July 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 299–304.

- Kalra, N.; Paddock, S.M. Driving to Safety: How Many Miles of Driving Would It Take to Demonstrate Autonomous Vehicle Reliability? Transp. Res. Part A Policy Pract. 2016, 94, 182–193.

- Stellet, J.E.; Zofka, M.R.; Schumacher, J.; Schamm, T.; Niewels, F.; Zöllner, J.M. Testing of Advanced Driver Assistance towards Automated Driving: A Survey and Taxonomy on Existing Approaches and Open Questions. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1455–1462.

- Hoss, M.; Scholtes, M.; Eckstein, L. A Review of Testing Object-Based Environment Perception for Safe Automated Driving. Automot. Innov. 2022, 5, 223–250.

- Wishart, J.; Como, S.; Forgione, U.; Weast, J.; Weston, L.; Smart, A.; Nicols, G. Literature Review of Verification and Validation Activities of Automated Driving Systems. SAE Int. J. Connect. Autom. Veh. 2020, 3, 267–323.

- Nalic, D.; Mihalj, T.; Bäumler, M.; Lehmann, M.; Eichberger, A.; Bernsteiner, S. Scenario Based Testing of Automated Driving Systems: A Literature Survey. In Proceedings of the FISITA Web Congress, Prague, Czech Republic, 14–18 September 2020; Volume 10, p. 1.

- Jiang, Y.; Wang, S.; Yao, Z.; Zhao, B.; Wang, Y. A Cellular Automata Model for Mixed Traffic Flow Considering the Driving Behavior of Connected Automated Vehicle Platoons. Phys. A Stat. Mech. Its Appl. 2021, 582, 126262.

- Chen, L.; Li, Y.; Huang, C.; Li, B.; Xing, Y.; Tian, D.; Li, L.; Hu, Z.; Na, X.; Li, Z. Milestones in Autonomous Driving and Intelligent Vehicles: Survey of Surveys. IEEE Trans. Intell. Veh. 2022, 8, 1046–1056.

- Gazis, D.C.; Herman, R.; Rothery, R.W. Nonlinear Follow-the-Leader Models of Traffic Flow. Oper. Res. 1961, 9, 545–567.

- Gipps, P.G. A Behavioural Car-Following Model for Computer Simulation. Transp. Res. Part B Methodol. 1981, 15, 105–111.

- Kikuchi, S.; Chakroborty, P. Car-Following Model Based on Fuzzy Inference System; Transportation Research Board: Washington, DC, USA, 1992; p. 82.

| Traffic Scenarios | Traditional Traffic | Mixed Traffic | Ideal Intelligent Traffic |
|---|---|---|---|
| Penetration rate of AVs | 0 | (0, 100%) | 100% |
| Vehicle automation levels | Consistent (all HDVs) | Inconsistent (vehicles with different automation levels) | Consistent (all fully autonomous vehicles) |
| Uniformity of testing criteria | Uniform | Non-uniform | Uniform |
| Types of vehicle-to-vehicle interaction | HDV-HDV | HDV-HDV; AV-AV; AV-HDV | AV-AV |
| Emphasis of testing | Vehicle factory testing | Vehicle factory testing; driving abilities testing; interaction capabilities testing | Vehicle factory testing; driving abilities testing; operational human-friendliness testing |

| Basic Road Information | | Lane Information | | Environmental Information | |
|---|---|---|---|---|---|
| Road type | Urban expressway | Number of lanes | Two-way two-lane | Weather | Sunny 80%; snow, rain, clouds, and fog 0% |
| Road length | 1000 m | Lane type | Asphalt concrete | Lighting | 80% |
| Starting point | Origin | Type of vehicle | Car | Landscape | Office buildings, schools, green trees, and bushes |
| Road direction | 0° | Lane width | 3.75 m | | |
| Road alignment | Straight line | Lane speed limit | 120 km/h | | |

| Evaluation Items | Requirements | Judging Results |
|---|---|---|
| Safety | Q ≥ 0 | 15 points/Unqualified |
| Car-following characteristics | 0 < G < 0.2 Gapsafe | 15 points/Unqualified |
| Violation | D2 > 0 | 15 points/Unqualified |
| | D3 > 0 | 15 points/Unqualified |
| Car-following efficiency | R1 = (1 − u) × 20 | R1 |
| Comfort | R2 = Rx + Ry = (2 − jx − jy) × 10 | R2 |
| Total score | Qualified: R = 60 + R1 + R2; Unqualified: R = 15 × r | R |

| Group | Model | 60 km/h | 90 km/h | 120 km/h |
|---|---|---|---|---|
| Group 1 | Gipps Model | 71.4308 | 77.6676 | 45 |
| Group 1 | GM Model | 45 | 45 | 45 |
| Group 1 | Fuzzy Reasoning Model | 45 | 45 | 45 |
| Group 2 | Gipps Model | 74.5781 | 76.7838 | 66.5414 |
| Group 2 | GM Model | 45 | 45 | 45 |
| Group 2 | Fuzzy Reasoning Model | 45 | 45 | 45 |
| Group 3 | Gipps Model | 77.3397 | 71.1287 | 72.8375 |
| Group 3 | GM Model | 45 | 45 | 45 |
| Group 3 | Fuzzy Reasoning Model | 45 | 45 | 45 |
| Group 4 | Gipps Model | 73.8108 | 65.7953 | 63.3177 |
| Group 4 | GM Model | 45 | 45 | 45 |
| Group 4 | Fuzzy Reasoning Model | 45 | 45 | 45 |
| Group 5 | Gipps Model | 70.6911 | 68.9145 | 65.1808 |
| Group 5 | GM Model | 45 | 45 | 45 |
| Group 5 | Fuzzy Reasoning Model | 45 | 45 | 45 |
| Group 6 | Gipps Model | 76.5556 | 69.3031 | 67.7908 |
| Group 6 | GM Model | 45 | 45 | 45 |
| Group 6 | Fuzzy Reasoning Model | 45 | 45 | 45 |

| Score | 60 km/h | 90 km/h | 120 km/h |
|---|---|---|---|
| R1 | 9.2454 | 8.4706 | 3.5456 |
| R2 | 4.8223 | 3.1283 | 2.3991 |
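One reading of the last table, inferred here from the numbers rather than stated in the surviving text, is that R1 and R2 are averages over the six groups of the Gipps model, with the unqualified Group 1 run at 120 km/h contributing zero. The sketch below checks that reading by comparing the mean of R − 60 (treating runs below 60 as zero) with R1 + R2; the dictionary and function names are hypothetical, and the scores are copied from the tables.

```python
from statistics import fmean

# Total scores R of the Gipps model for Groups 1-6, copied from the results table.
GIPPS_SCORES = {
    60: [71.4308, 74.5781, 77.3397, 73.8108, 70.6911, 76.5556],
    90: [77.6676, 76.7838, 71.1287, 65.7953, 68.9145, 69.3031],
    120: [45.0, 66.5414, 72.8375, 63.3177, 65.1808, 67.7908],
}
# (R1, R2) values from the last table, keyed by speed limit in km/h.
R1_R2 = {60: (9.2454, 4.8223), 90: (8.4706, 3.1283), 120: (3.5456, 2.3991)}


def mean_bonus(scores, base: float = 60.0) -> float:
    """Mean of R1 + R2 over groups, reading R1 + R2 = R - 60 for qualified runs and
    0 for unqualified runs (R = 15 x r < 60). This reading is an assumption."""
    return fmean([max(s - base, 0.0) for s in scores])


for speed in sorted(GIPPS_SCORES):
    r1, r2 = R1_R2[speed]
    print(f"{speed} km/h: mean(R - 60) = {mean_bonus(GIPPS_SCORES[speed]):.4f}, "
          f"R1 + R2 = {r1 + r2:.4f}")
```

The two quantities agree to within rounding (about 1e-4) at all three speed limits, which supports, but does not prove, that reading.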

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Shao, Y.; Shi, X.; Ye, Z. A Testing and Evaluation Method for the Car-Following Models of Automated Vehicles Based on Driving Simulator. Systems 2024, 12, 298. https://doi.org/10.3390/systems12080298


Chicago/Turabian Style: Zhang, Yuhan, Yichang Shao, Xiaomeng Shi, and Zhirui Ye. 2024. "A Testing and Evaluation Method for the Car-Following Models of Automated Vehicles Based on Driving Simulator" Systems 12, no. 8: 298. https://doi.org/10.3390/systems12080298