A Wargame-Augmented Knowledge Elicitation Method for the Agile Development of Novel Systems

Abstract

1. Introduction

1.1. Problem Space

1.1.1. Extreme Novelty

1.1.2. Constraints to Traditional Approaches

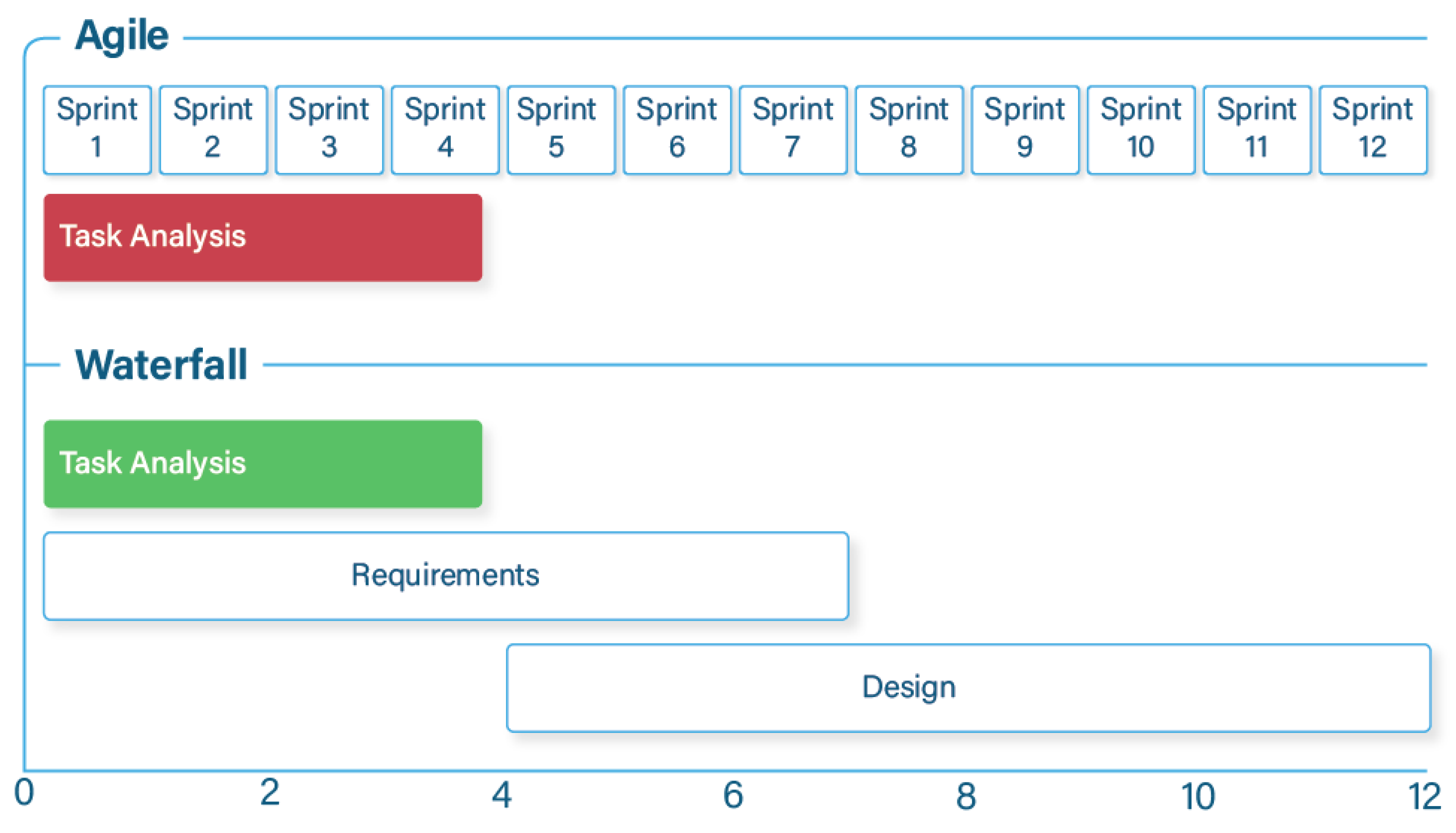

1.1.3. Agile/Scrum Development and the Fear of Missing Out

1.2. Possible Approaches

1.2.1. Stretched Systems, Resilience, and Learning by Doing

1.2.2. Design Thinking

1.2.3. Wargaming

1.3. Goals

- Provide insights without relying upon existing HMIs or experienced operators/users.

- Enable concurrent development and assessment of the HMI, operator workflow(s), and CONOPS for new use cases, all of which are highly interdependent.

- Use probes to directly capture the insights and perceptions of future system operators, and enable assessment of their decision making under various conditions.

- Assess how the human-system team would stretch under various conditions and assumptions, and proactively identify any unintended consequences of use.

- Be planned, executed, and analyzed in a matter of weeks, enabling timely HSI inputs into agile system development lifecycles.

2. Materials and Participants

2.1. Materials

2.2. Participants

3. Methods

3.1. WAKE Data Collection Procedure

- An overview of the gameboard, the various geospatial boundaries and features, and their significance.

- A review of the assumed capabilities and limitations of the system (characteristics such as range, power, and reliability of different system components).

- Rules for gameplay (the length of each turn, the scale of the map, when and how information can be requested from the game master).

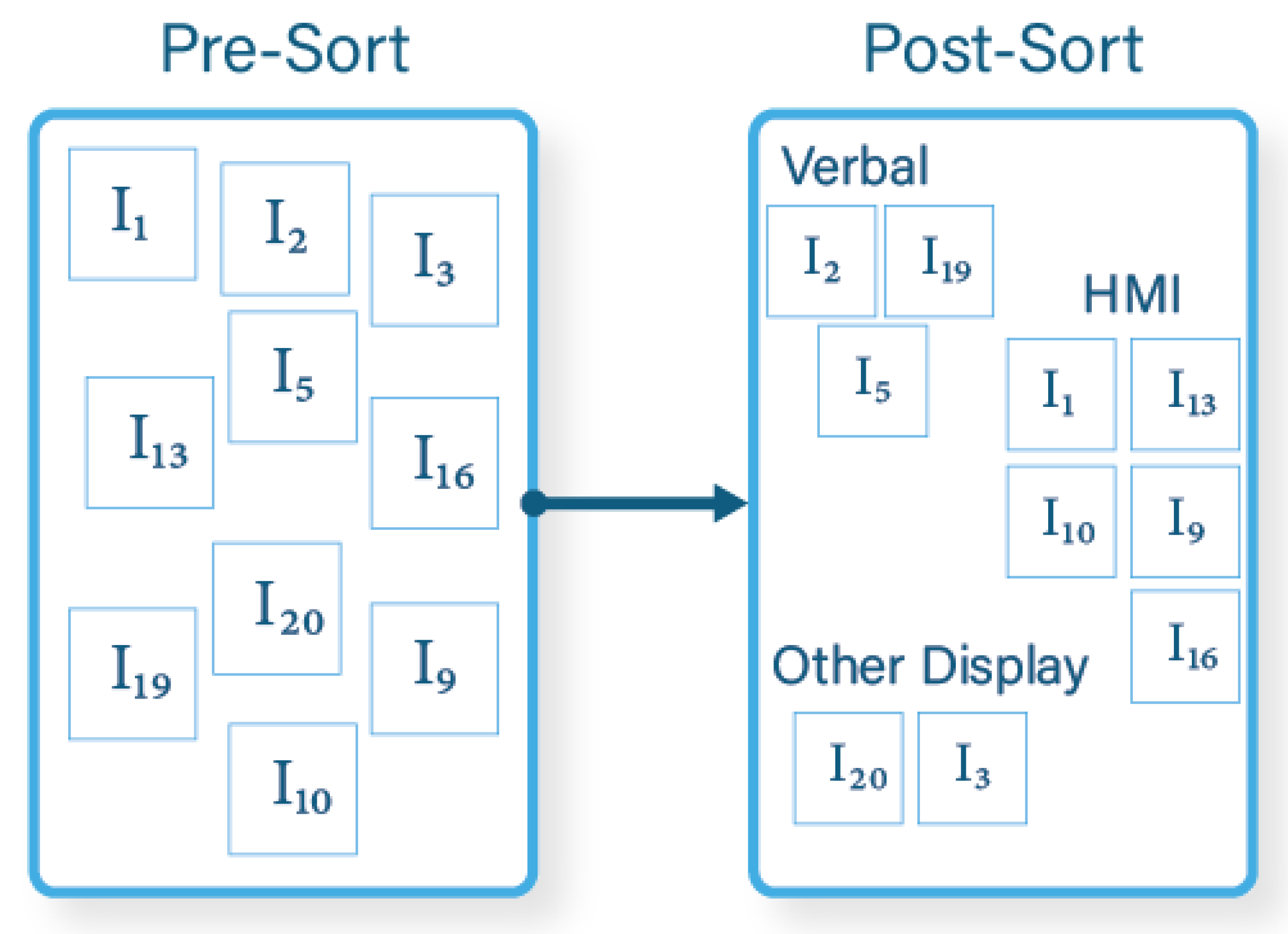

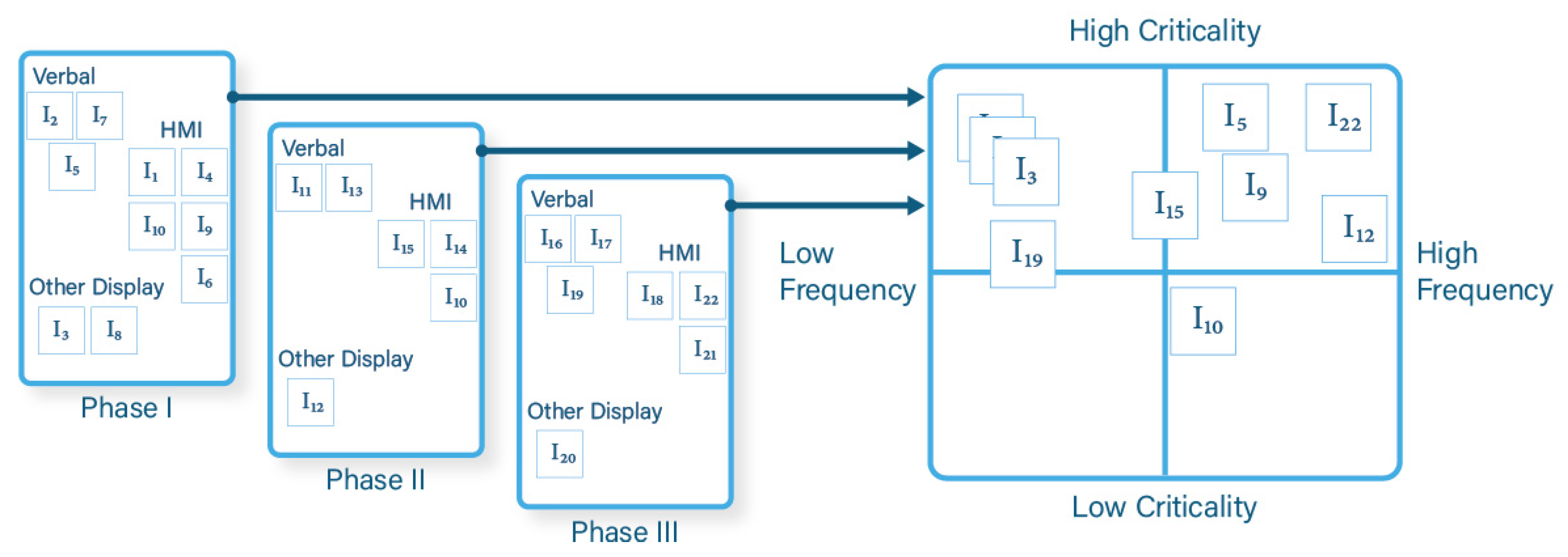

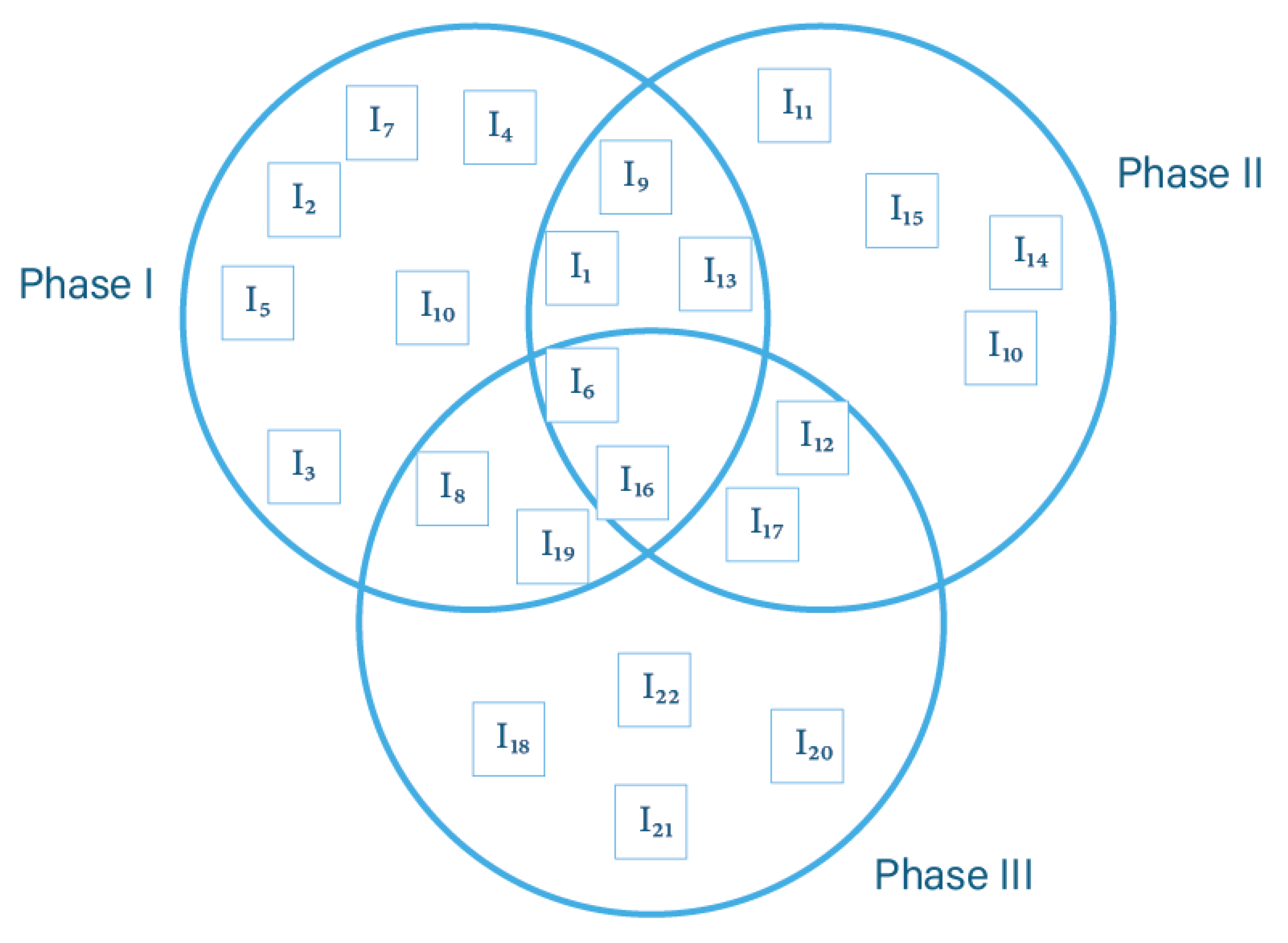

3.2. WAKE Analysis Procedure

- HMI: Information that participants would like to access natively through the HMI being developed.

- Verbal: Information that participants would like to access by verbal communications with the crew, rather than through an HMI.

- Other: Information that participants would like to access through another system or interface, rather than through the HMI or verbally from someone else.
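The three access categories above lend themselves to a simple tally once each information requirement has been coded. The following is a minimal sketch, assuming each requirement is recorded as a (text, category) pair; the function name `tally_requirements` and the example entries are hypothetical placeholders and are not data from the study.

```python
from collections import Counter

# The three access categories named in the analysis procedure.
CATEGORIES = ("HMI", "Verbal", "Other")


def tally_requirements(coded_requirements):
    """Count coded information requirements per access category.

    coded_requirements: iterable of (requirement_text, category) pairs.
    Raises ValueError if a category is not one of CATEGORIES.
    """
    # Start every category at zero so empty categories still appear.
    counts = Counter({category: 0 for category in CATEGORIES})
    for text, category in coded_requirements:
        if category not in CATEGORIES:
            raise ValueError(f"Unknown category {category!r} for {text!r}")
        counts[category] += 1
    return dict(counts)


# Hypothetical placeholder entries (assumption), not data from the study.
example = [
    ("requirement A", "HMI"),
    ("requirement B", "Verbal"),
    ("requirement C", "HMI"),
    ("requirement D", "Other"),
]
print(tally_requirements(example))
```

Rejecting unknown labels rather than silently counting them keeps coding errors visible when several analysts transcribe sticky notes.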

4. Results and Conclusions

4.1. Outcomes

4.2. Participant Engagement

4.3. Limitations and Future Work

- When assessing a new system or technology, participants may need prompting or “nudging” to reason from the capabilities and limitations of the new system rather than those of existing analogous systems. Future research and applications of WAKE will explore priming participants, in an interview phase before the wargaming event, to think about the capabilities and limitations of the new technology.

- Intermittent questions and probes by the facilitators were helpful in keeping discussion on track during gameplay.

- The assumptions and Parking Lot canvases were useful not only in capturing information, but as a means to constrain superfluous conversation and maintain focus.

- Some social loafing was observed among participants, which may be expected as teams approach or exceed 10 members [39].

- The participative analytical methods are, by their team-oriented nature, susceptible to the adverse effects of groupthink and mutual influence among participants. This can be exacerbated among military participants, where rank is a substantial factor in team dynamics (i.e., junior participants are less likely to contradict senior participants). We managed these risks through proactive facilitation (e.g., asking the most junior participant to start the analysis or present the findings to the group). Future work will investigate how to apply one or more existing methods or tools to gather individual votes or priorities [37,40].

- The 2 × 2 method had limited diagnosticity, since several required pieces of information were of all-or-nothing criticality (i.e., the mission is guaranteed to fail without them), causing an n-way tie at the top of the board. Future work will look at alternative methods of prioritizing information requirements to avoid such issues, such as a forced-choice rule under which no two pieces of information can occupy the same position along one or more axes.

- Data analysis beyond reporting enumerated information requirements (i.e., reviewing video footage and transcribing data) can be time-intensive. New methods for collection, processing, and exploitation of data will be investigated for future applications of WAKE. Such technologies may include, but are not limited to, speech-to-text transcription and digital sticky notes on a touch screen display or interactive projector (obviating the need for transcription).
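The forced-choice rule proposed above for the 2 × 2 method can be checked mechanically once placements are digitized. The sketch below is one possible interpretation, assuming each item is stored with an (x, y) position on the board; the function names, the `axes` parameter, and the example placements are hypothetical and do not come from the study.

```python
from collections import defaultdict


def find_ties(placements, axes=(0, 1)):
    """Return groups of items that share a coordinate on a checked axis.

    placements: dict mapping item name -> (x, y) position on the 2 x 2 board.
    axes: which axis indices the forced-choice rule applies to (assumption).
    Returns a dict mapping (axis_index, coordinate) -> sorted list of tied
    items; an empty dict means no two items are level on any checked axis.
    """
    by_coordinate = defaultdict(list)
    for item, position in placements.items():
        for axis_index in axes:
            by_coordinate[(axis_index, position[axis_index])].append(item)
    # Keep only coordinates occupied by more than one item.
    return {
        key: sorted(items)
        for key, items in by_coordinate.items()
        if len(items) > 1
    }


def satisfies_forced_choice(placements, axes=(0, 1)):
    """True when no two items share a position on any checked axis."""
    return not find_ties(placements, axes)


# Hypothetical placements (assumption): A and B tie on axis 0.
board = {"info A": (5, 5), "info B": (5, 3), "info C": (2, 4)}
print(find_ties(board))
print(satisfies_forced_choice(board, axes=(1,)))
```

Returning the tied groups, rather than only a pass/fail flag, would let a facilitator ask the team to break each specific tie during the session.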

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Sinclair, M.A. Participative assessment. In Evaluation of Human Work, 3rd ed.; Wilson, J.R., Corlett, N., Eds.; Taylor and Francis: Boca Raton, FL, USA, 2005; pp. 83–112.

- Shadbolt, N.; Burton, A.M. The empirical study of knowledge elicitation techniques. SIGART Bull. 1989, 108, 15–18.

- Cooke, N.J. Varieties of knowledge elicitation techniques. Int. J. Hum.-Comput. Stud. 1994, 41, 801–849.

- Klein, G.; Armstrong, A.A. Critical decision method. In Handbook of Human Factors and Ergonomics Methods; Stanton, N.A., Hedge, A., Brookhuis, K., Salas, E., Hendrick, H., Eds.; CRC Press: Boca Raton, FL, USA, 2005; pp. 58:1–58:6.

- Shadbolt, N.R.; Smart, P.R. Knowledge elicitation: Methods, tools, and techniques. In Evaluation of Human Work; Wilson, J.R., Sharples, S., Eds.; CRC Press: Boca Raton, FL, USA, 2015; pp. 174–176.

- Stanton, N.A.; Salmon, P.M.; Walker, G.H.; Baber, C.; Jenkins, D.P. Cognitive task analysis methods. In Human Factors Methods: A Practical Guide for Engineering and Design; Stanton, N., Salmon, P., Eds.; Ashgate Publishing: Surrey, UK, 2005; pp. 75–108.

- Polson, P.G.; Lewis, C.; Wharton, C. Cognitive walkthroughs: A method for theory-based evaluation of user interfaces. Int. J. Man-Mach. Stud. 1992, 36, 741–773.

- Wharton, C.; Rieman, J.; Lewis, C.; Polson, P. The cognitive walkthrough method: A practitioner’s guide. In Usability Inspection Methods; Nielsen, J., Mack, R., Eds.; Wiley: Hoboken, NJ, USA, 1994; Available online: http://www.colorado.edu/ics/technical-reports-1990-1999 (accessed on 11 August 2020).

- Rogers, Y.; Sharp, H.; Preece, J. Evaluation: Inspections, analytics, and models. In Interaction Design: Beyond Human-Computer Interaction; Rogers, Y., Ed.; John Wiley and Sons: West Sussex, UK, 2013; pp. 514–517.

- Doshi, H. Scrum Insights for Practitioners: The Scrum Guide Companion; Hiren Doshi: Lexington, KY, USA, 2016.

- Ludwigson, J. DOD Space Acquisitions: Including Users Early and Often in Software Development Could Benefit Programs; GAO-19-136; Government Accountability Office (GAO): Washington, DC, USA, 2019; pp. 1–55.

- McConnell, S. Rapid Development: Taming Wild Software Schedules; Microsoft Press: Redmond, WA, USA, 1996.

- Woods, D.D. The law of stretched systems in action: Exploring robotics. In Proceedings of the 2006 ACM Conference on Human-Robot Interaction, HRI 2006, Salt Lake City, UT, USA, 2–3 March 2006.

- Sheridan, T.B. Risk, human error, and system resilience: Fundamental ideas. Hum. Factors 2008, 50, 418–426.

- Woods, D.D. Four concepts for resilience and the implications for the future of resilience engineering. Reliab. Eng. Syst. Saf. 2015, 141, 5–9.

- Hoffman, R.R.; Hancock, P.A. Measuring resilience. Hum. Factors 2017, 59, 564–581.

- Arrow, K. The economic implications of learning by doing. Rev. Econ. Stud. 1962, 29, 155–173.

- von Hippel, E.; Tyre, M. How “learning by doing” is done: Problem identification in novel process equipment. Res. Policy 1995, 24, 1–12.

- Brown, T. Change by Design; Harper Business: New York, NY, USA, 2009.

- IDEO—Design Thinking. Available online: http://www.ideou.com/pages/design-thinking (accessed on 5 February 2020).

- Liedtka, J. Evaluating the Impact of Design Thinking in Action; Darden Working Paper Series; Darden School of Business: Charlottesville, VA, USA, 2018; pp. 1–48.

- Oriesek, D.F.; Schwarz, J.O. Business Wargaming: Securing Corporate Value; Gower Publishing: Aldershot, UK, 2008.

- Perla, P.P. The Art of Wargaming; Naval Institute Press: Annapolis, MD, USA, 1990.

- Erwin, M.; Dorton, S.; Tupper, S. Rolling the dice: Using low-cost tabletop simulation to develop and evaluate high-value CONOPS in uncertain tactical environments. In Proceedings of the MODSIM World 2017, Virginia Beach, VA, USA, 25–27 June 2017.

- Johnson, R.B. Examining the validity structure of qualitative research. Education 1997, 118, 282–292.

- Dorton, S.; Tupper, S.; Maryeski, L. Going digital: Consequences of increasing resolution of a wargaming tool for knowledge elicitation. Proc. 2017 Int. Annu. Meet. HFES 2017, 61, 2037–2041.

- Morabito, T. Targeted fidelity: Cutting costs by increasing focus. In Proceedings of the MODSIM World 2016, Virginia Beach, VA, USA, 26–28 April 2016.

- Smallman, H.S.; Cook, M.B.; Manes, D.I.; Cowan, M.B. Naïve realism in terrain appreciation: Advanced display aids for routing. Proc. 2008 Int. Annu. Meet. HFES 2008, 52, 1160–1164.

- Bracht, G.H.; Glass, G.V. The external validity of experiments. Am. Educ. Res. J. 1968, 5, 437–474.

- Klein, G. Sources of Power: How People Make Decisions, 20th Anniversary ed.; MIT Press: Cambridge, MA, USA, 2017.

- Tirpak, J.A. Find, Fix, Track, Target, Engage, Assess. Air Force Mag. 2000, 83, 24–29. Available online: http://www.airforcemag.com/MagazineArchive/Pages/2000/July%202000/0700find.aspx (accessed on 11 August 2020).

- Menner, W.A. The Navy’s tactical aircraft strike planning process. Johns Hopkins APL Tech. Digest 1997, 18, 90–104.

- Burton, A.M.; Shadbolt, N.R.; Rugg, G.; Hedgecock, A.P. The efficacy of knowledge elicitation techniques: A comparison across domains and levels of expertise. Knowl. Acquis. 1990, 2, 167–178.

- Fincher, S.; Tenenberg, J. Making sense of card sorting data. Expert Syst. 2005, 22, 89–93.

- Jaaskelainen, R. Think-Aloud Protocol. In Handbook of Translation Studies; Gambier, Y., van Doorslaer, L., Eds.; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2010; pp. 371–373.

- Cooke, L. Assessing concurrent think-aloud protocol as a usability test method: A technical communication approach. IEEE Trans. Prof. Commun. 2010, 53, 202–215.

- Dorton, S.L.; Smith, C.M.; Upham, J.B. Applying visualization and collective intelligence for rapid group decision making. Proc. 2018 Int. Annu. Meet. HFES 2018, 62, 167–171.

- Harper, S.; Dorton, S. A context-driven framework for selecting mental model elicitation methods. Proc. 2019 Int. Annu. Meet. HFES 2019, 63, 367–371.

- Hackman, J.R. Collaborative Intelligence; Berrett-Koehler Publishers: San Francisco, CA, USA, 2011.

- Van de Ven, A.H.; Delbecq, A.L. The effectiveness of nominal, Delphi, and interacting group decision making processes. Acad. Manag. J. 1974, 17, 605–621.

| Positively-Oriented Survey Statement | M | SD | Agreement Level |
|---|---|---|---|
| … Promotes discussion and critical thinking | 4.68 | 0.47 | Strongly Agree |
| I feel involved in gameplay during … sessions | 4.50 | 0.58 | Strongly Agree |
| The entire group gets involved in playing… | 4.00 | 0.93 | Agree |
| I have fun and enjoy the … sessions | 4.52 | 0.65 | Strongly Agree |
| … helps uncover and discuss assumptions | 4.54 | 0.50 | Strongly Agree |
| … helps identify spatiotemporal issues with concepts | 4.38 | 0.64 | Agree |
| … supports determining effectiveness of concepts | 4.36 | 0.69 | Agree |
| … is a useful tool for developing advanced concepts | 4.54 | 0.65 | Strongly Agree |
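The Agreement Level column in the table above is consistent with a simple cut-off on the mean rating: every mean of 4.50 or higher is labeled Strongly Agree, and every mean from 4.00 to 4.38 is labeled Agree. The sketch below reproduces that labeling under the assumption of a 4.5 cut-off, which the source does not state; the function name is hypothetical, and no label is assigned below 4.0 because the table gives no example there.

```python
def agreement_level(mean, strongly_agree_cutoff=4.5):
    """Label a mean rating using a cut-off inferred from the table.

    The 4.5 cut-off is an assumption that happens to match every row of
    the table; the source does not document how labels were assigned.
    """
    if mean >= strongly_agree_cutoff:
        return "Strongly Agree"
    if mean >= 4.0:
        return "Agree"
    # The table has no rows below 4.00, so no label is guessed here.
    raise ValueError(f"No label can be inferred from the table for mean {mean}")


# (M, Agreement Level) pairs copied from the table rows, in order.
TABLE_ROWS = [
    (4.68, "Strongly Agree"),
    (4.50, "Strongly Agree"),
    (4.00, "Agree"),
    (4.52, "Strongly Agree"),
    (4.54, "Strongly Agree"),
    (4.38, "Agree"),
    (4.36, "Agree"),
    (4.54, "Strongly Agree"),
]

# True when the assumed cut-off reproduces every label in the table.
print(all(agreement_level(m) == label for m, label in TABLE_ROWS))
```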

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dorton, S.L.; Maryeski, L.R.; Ogren, L.; Dykens, I.T.; Main, A. A Wargame-Augmented Knowledge Elicitation Method for the Agile Development of Novel Systems. Systems 2020, 8, 27. https://doi.org/10.3390/systems8030027
