Assessment of OpenMP Master–Slave Implementations for Selected Irregular Parallel Applications

Abstract

1. Introduction

2. Related Work

- frameworks related to or using OpenMP that target programming abstractions even easier to use or at a higher level than OpenMP itself;

- parallelization of master–slave and producer–consumer in OpenMP including details of analyzed models and proposed implementations.

2.1. OpenMP Related Frameworks and Layers for Parallelization

2.2. Parallelization of Master–Slave with OpenMP

- #pragma omp parallel along with #pragma omp master directives, or #pragma omp parallel with master and slave codes distinguished by thread ids.

- #pragma omp parallel with threads fetching tasks in a critical section; a counter can be used to iterate over the available tasks. In [19], this is called an all slave model.

- Tasking with the #pragma omp task directive.

- Assignment of work through dynamic scheduling of independent iterations of a for loop.
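The second approach in the list above (threads fetching tasks in a critical section) can be sketched as follows. This is a minimal illustration under stated assumptions, not code from [19] or from this paper: `process_task`, `all_slave_sum` and the named critical sections `fetch` and `merge` are hypothetical names, and the per-task work (squaring a number) is a placeholder.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-task work: square the input value. */
static double process_task(double x) { return x * x; }

/* "All slave" style: every thread repeatedly claims the next task index
   inside a critical section until the shared counter is exhausted. */
double all_slave_sum(const double *tasks, int n)
{
    assert(tasks != NULL || n == 0);
    int next = 0;        /* shared counter over the available tasks */
    double total = 0.0;  /* shared global result */

    #pragma omp parallel shared(next, total)
    {
        double local = 0.0;  /* per-thread partial result */
        for (;;) {
            int mine;
            #pragma omp critical(fetch)
            {
                mine = next;           /* claim the next task index */
                if (next < n) next++;
            }
            if (mine >= n) break;      /* no tasks left */
            local += process_task(tasks[mine]);
        }
        #pragma omp critical(merge)
        total += local;                /* merge once per thread */
    }
    return total;
}
```

Each thread enters the `fetch` critical section once per task, so the scheme suits tasks whose processing time dominates the cost of that section. The code also runs correctly when compiled without OpenMP support, in which case a single thread claims all tasks.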

3. Motivations, Application Model and Implementations

- The master generates a predefined number of data chunks from a data source, as long as there is still data to be fetched from it.

- Data chunks are distributed among slaves for parallel processing.

- Results of individually processed data chunks are provided to the master for integration into a global result.

Implementations of the Master–Slave Pattern with OpenMP

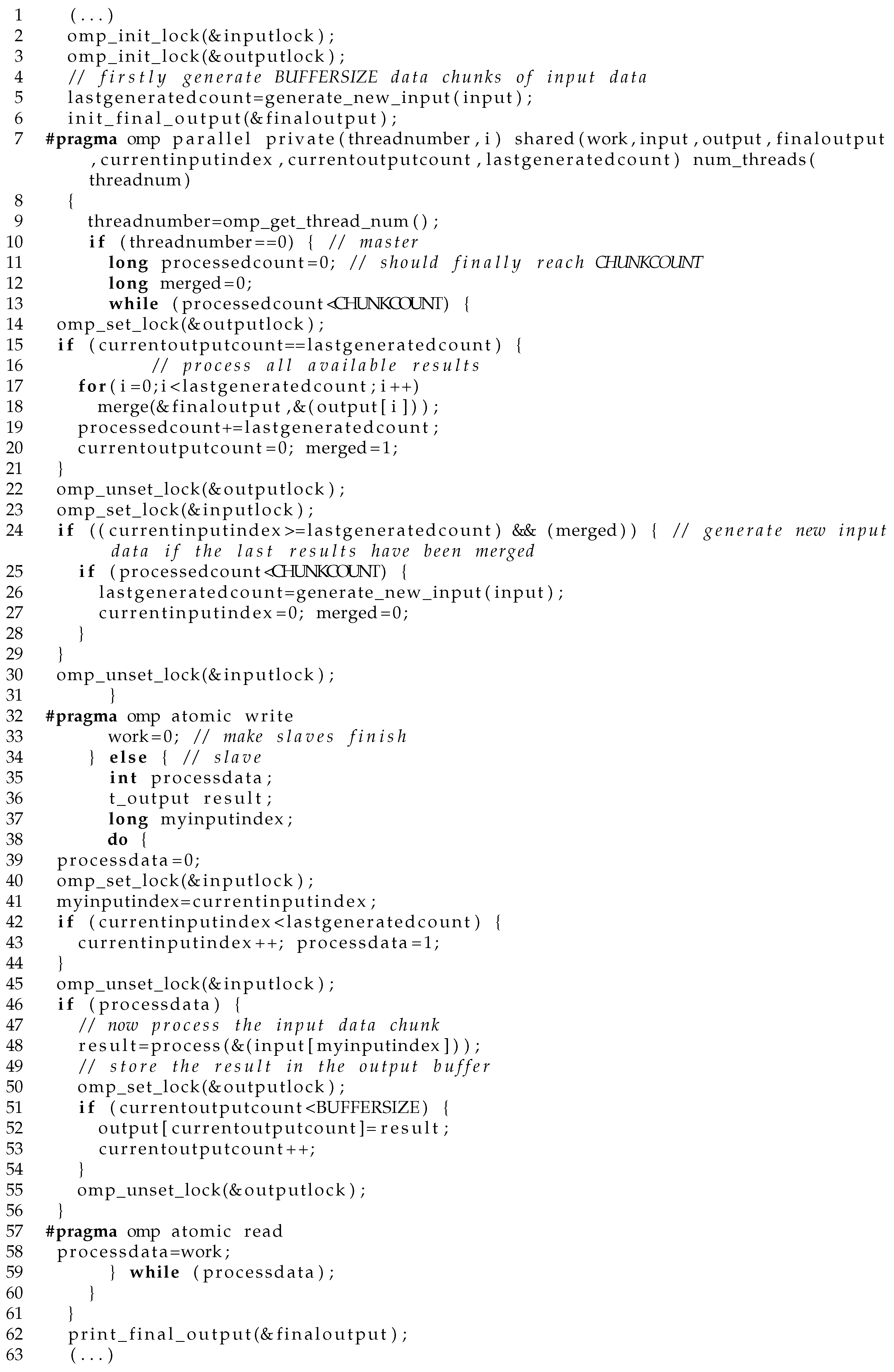

- designated-master (Figure 1): a direct implementation of master–slave in which a separate thread performs the master’s tasks, i.e., generating input data packets and merging data once the output buffer has been filled. The other launched threads perform the slaves’ tasks.

- integrated-master (Figure 2): a modification of the designated-master code in which the master’s tasks are moved into a slave thread. Specifically, the consumer thread that inserts the last result into the result buffer merges the results into a global shared result, frees the buffer space and generates new data packets into the input buffer. If the buffer were large enough to contain all input data, this implementation would be similar to the all slave implementation shown in [19].

- tasking (Figure 3): code using the tasking construct. Within a parallel region (created with #pragma omp parallel), one of the threads generates input data packets and, in a loop, launches tasks, each of which processes one data packet. The tasks are assigned to the threads of that region. Once all assigned tasks have completed, the results are merged by the one designated thread, new input data is generated and the procedure is repeated.

- tasking2: an evolution of tasking. It potentially allows the generation of new data into the buffer to overlap with the merging of the latest results into the final result, the latter being done by the thread that launched the computational tasks in the tasking version. The only difference from the tasking version is that data generation is executed using #pragma omp task.

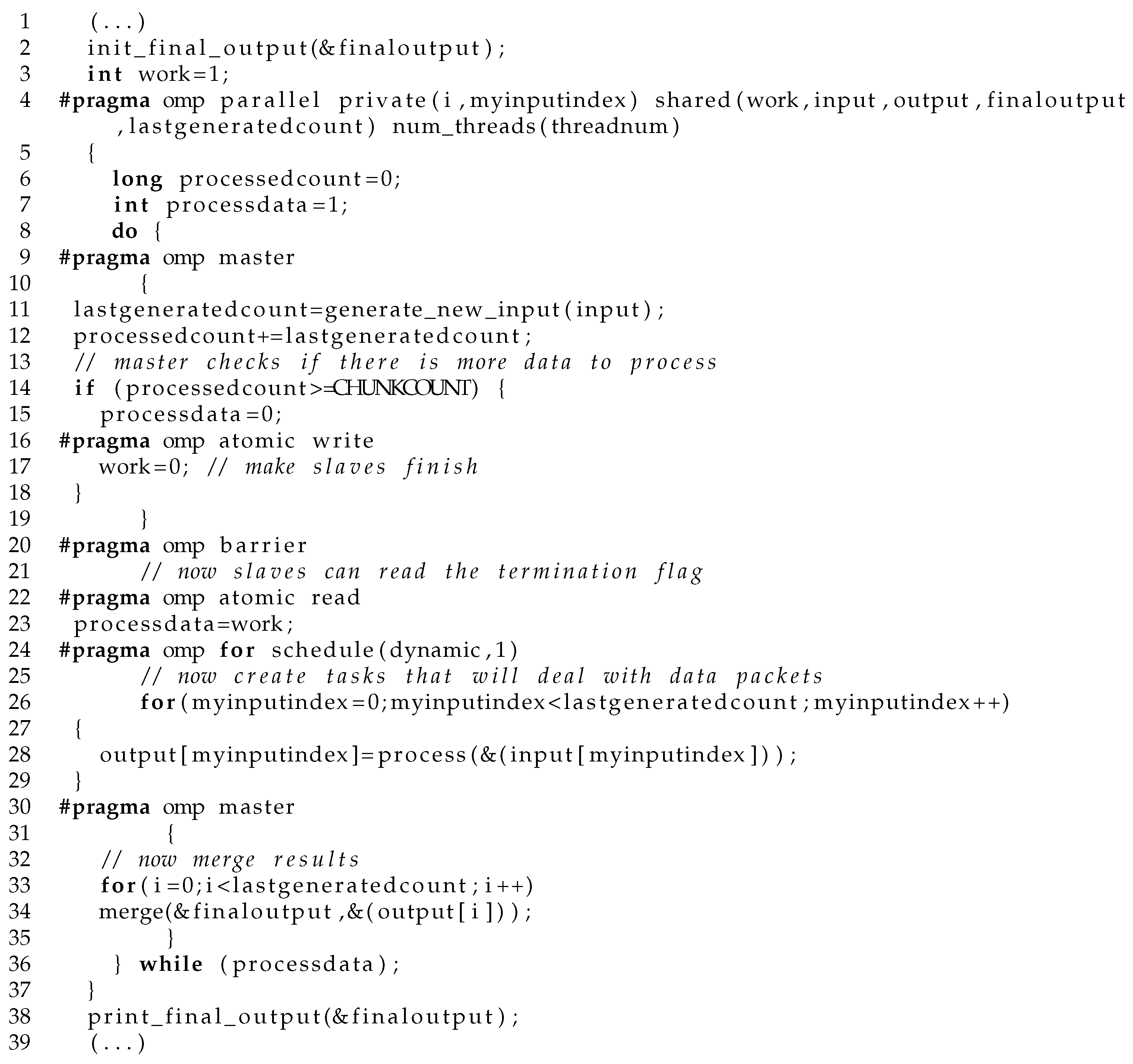

- dynamic-for (Figure 4): similar to tasking, except that instead of launching tasks, each iteration of the loop calls a function that processes a given input data packet. The for loop is parallelized with #pragma omp for and a schedule(dynamic, 1) clause, i.e., dynamic scheduling with a chunk size of 1. Upon completion, the output is merged, new input data is generated and the procedure is repeated.

- dynamic-for2 (Figure 5): an evolution of dynamic-for. It allows the generation of new data into the buffer to overlap with the merging of the latest results into the final result by assigning the two operations to threads with different ids (such as 0 and 4 in the listing). The ids of these threads can be chosen so that the threads run on different physical cores, as was the case for the two systems tested in the following experiments.
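To make the difference between the tasking and dynamic-for versions concrete, the sketch below implements the same batch loop (generate packets, process them in parallel, merge, repeat) in both styles. It is an illustration under stated assumptions rather than the listings from Figures 3 and 4: the buffer size `BATCH`, the data source (the integers 1..total), and the helpers `generate` and `process_packet` are hypothetical, and the dynamic-for variant here relies on the implicit barriers of `for` and `single` for synchronization.

```c
#include <assert.h>

#define BATCH 8  /* hypothetical buffer size (packets per batch) */

/* Hypothetical packet processing: any independent computation fits here. */
static long process_packet(long x) { return x * x; }

/* Fill the input buffer from a hypothetical data source 1..total;
   returns the number of packets generated (0 when the source is empty). */
static int generate(long *in, long *next, long total)
{
    int n = 0;
    while (n < BATCH && *next <= total) in[n++] = (*next)++;
    return n;
}

/* tasking style: one thread generates packets, spawns one task per packet,
   waits for the tasks, merges the results, and repeats. */
long run_tasking(long total)
{
    assert(total >= 0);
    long in[BATCH], out[BATCH], next = 1, result = 0;
    #pragma omp parallel
    #pragma omp single
    {
        int n;
        while ((n = generate(in, &next, total)) > 0) {
            for (int i = 0; i < n; i++) {
                #pragma omp task firstprivate(i) shared(in, out)
                out[i] = process_packet(in[i]);
            }
            #pragma omp taskwait
            for (int i = 0; i < n; i++) result += out[i];  /* merge */
        }
    }
    return result;
}

/* dynamic-for style: all threads share the loop over the current batch;
   one thread then merges the results and generates the next batch. */
long run_dynamic_for(long total)
{
    assert(total >= 0);
    long in[BATCH], out[BATCH], next = 1, result = 0;
    int n = generate(in, &next, total);
    #pragma omp parallel shared(in, out, next, result, n)
    while (n > 0) {
        #pragma omp for schedule(dynamic, 1)
        for (int i = 0; i < n; i++) out[i] = process_packet(in[i]);
        /* implicit barrier: all results of this batch are in place */
        #pragma omp single
        {
            for (int i = 0; i < n; i++) result += out[i];  /* merge */
            n = generate(in, &next, total);
        }
        /* implicit barrier: every thread sees the updated n */
    }
    return result;
}
```

Both functions return the sum of squares of 1..total, so `run_tasking(20)` and `run_dynamic_for(20)` agree. In this sketch merging and generation are serialized in one thread; overlapping them, as tasking2 and dynamic-for2 do, would require moving one of the two operations into a separate task or thread.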

4. Experiments

4.1. Parametrized Irregular Testbed Applications

4.1.1. Parallel Adaptive Quadrature Numerical Integration

- if the area of the triangle is smaller than a given threshold (k = 18), then the sum of the areas of the two trapezoids is returned as the result,

- otherwise, the range is recursively partitioned into two subranges and the aforementioned procedure is repeated for each of them until the condition is met.
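The recursive procedure above can be sketched in sequential form as follows. Several details are assumptions made only for this illustration, since the geometric definitions are not spelled out here: the triangle is taken to have its vertices on the curve at the two endpoints and the midpoint of the range, the two trapezoids are taken over the two halves of the range, and the integrand `f` and the `threshold` parameter are hypothetical.

```c
#include <assert.h>

/* Hypothetical integrand, used only for illustration. */
static double f(double x) { return x * x; }

/* Adaptive integration of f over [a, b]. The stopping test is an
   assumption of this sketch; threshold is supplied by the caller. */
double integrate(double a, double b, double threshold)
{
    assert(a <= b && threshold > 0.0);
    double m = 0.5 * (a + b);
    double fa = f(a), fm = f(m), fb = f(b);

    /* Area of the triangle with vertices (a,fa), (m,fm), (b,fb):
       0.5 * (b - a) * |(fa + fb)/2 - fm|. */
    double d = 0.5 * (fa + fb) - fm;
    if (d < 0.0) d = -d;
    double triangle = 0.5 * (b - a) * d;

    if (triangle < threshold) {
        /* Flat enough: return the sum of the two trapezoids. */
        return 0.5 * (fa + fm) * (m - a) + 0.5 * (fm + fb) * (b - m);
    }
    /* Otherwise split into two subranges and recurse on each. */
    return integrate(a, m, threshold) + integrate(m, b, threshold);
}
```

Because the recursion depth depends on how strongly the function bends in a given subrange, equally wide subranges can require very different amounts of work, which is what makes the workload irregular.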

4.1.2. Parallel Image Recognition

4.2. Testbed Environment and Methodology of Tests

4.3. Results

4.4. Observations and Discussion

4.4.1. Performance

- For numerical integration, the best implementations are tasking and dynamic-for2 (or dynamic-for on system 1), with practically identical results. They are closely followed by tasking2 and dynamic-for, and then by the visibly slower integrated-master and designated-master.

- For image recognition, the best implementations on system 1 are dynamic-for2/dynamic-for and integrated-master, with very similar results, followed by tasking, designated-master and tasking2. On system 2, the best results are shown by dynamic-for2/dynamic-for and tasking2, followed by tasking and then by the visibly slower integrated-master and designated-master.

- On system 2, overlapping brings a benefit for dynamic-for2 over dynamic-for in numerical integration, and for both tasking2 over tasking and dynamic-for2 over dynamic-for in image recognition. The latter is expected, as those configurations operate on considerably larger data, so memory access times constitute a larger part of the total execution time than in integration.

- For the compute-intensive numerical integration example, the best results were generally obtained with oversubscription: for tasking* and dynamic-for*, the best numbers of threads were 64 rather than 32 on system 2 and 16 rather than 8 on system 1. Oversubscription apparently mitigates idle time here without an accumulated cost of memory access.

- In terms of thread affinity, the best configurations for numerical integration were measured for default/noprocbind on both systems. For image recognition, the best configurations were thrclose/corspread on system 1 and sockets on system 2 for the smaller compute coefficients, and default on system 1 and noprocbind on system 2 for compute coefficient 8.

- For image recognition, configurations generally show visibly larger standard deviation than for numerical integration, apparently due to memory access impact.

- We can notice that relative performance of the two systems is slightly different for the two applications. Taking into account best configurations, for numerical integration system 2’s times are approx. 46–48% of system 1’s times while for image recognition system 2’s times are approx. 53–61% of system 1’s times, depending on partitioning and compute coefficients.

- We can assess gain from HyperThreading for the two applications and the two systems (between 4 and 8 threads for system 1 and between 16 and 32 threads for system 2) as follows: for numerical integration and system 1 it is between 24.6% and 25.3% for the coefficients tested, for system 2 it is between 20.4% and 20.9%; for image recognition and system 1, it is between 10.9% and 11.3% and similarly for system 2 between 10.4% and 11.3%.

- The ratios of the best system 2 to system 1 times for image recognition are approx. 0.61 for coefficient 2, 0.57 for coefficient 4 and 0.53 for coefficient 8, which means that system 2’s results for this application improve relative to system 1’s as the coefficient grows. As outlined in Table 1, system 2 has a larger cache, so for subsequent passes more data can reside in the cache. This behavior can also be seen when results for 8 threads are compared: for coefficients 2 and 4 system 1 gives shorter times, but for coefficient 8 system 2 is faster.

- Relative to the best configuration, integrated-master performs better on system 1 than on system 2; on system 2, the master’s role can be taken by any thread, running on either of the 2 CPUs.
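The system 2 to system 1 ratios quoted above can be re-derived from the best (smallest) Time 1 entries in the result tables. The worked arithmetic below assumes that the tables reporting 8 or 16 threads belong to system 1 and those reporting 32, 33 or 64 threads belong to system 2, and that the tables indexed by partitioning coefficient (1, 8, 32) are for numerical integration while those indexed by compute coefficient (2, 4, 8) are for image recognition.

```latex
\begin{align*}
\text{numerical integration:}\quad
  & \frac{8.567}{18.363} \approx 0.467, &
  & \frac{12.724}{26.830} \approx 0.474, &
  & \frac{16.116}{33.781} \approx 0.477 \\
\text{image recognition:}\quad
  & \frac{5.682}{9.378} \approx 0.606, &
  & \frac{10.460}{18.282} \approx 0.572, &
  & \frac{19.120}{35.852} \approx 0.533
\end{align*}
```

These values are consistent with the stated ranges of approx. 46–48% for numerical integration and 53–61% for image recognition.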

4.4.2. Ease of Programming

- code length: the order from the shortest to the longest version of the code is as follows: tasking, dynamic-for, tasking2, integrated-master, dynamic-for2 and designated-master,

- the numbers of OpenMP directives and function calls, for which the versions can be characterized as follows:

  - designated-master: 3 directives and 13 function calls;

  - integrated-master: 1 directive and 6 function calls;

  - tasking: 4 directives and 0 function calls;

  - tasking2: 6 directives and 0 function calls;

  - dynamic-for: 7 directives and 0 function calls;

  - dynamic-for2: 7 directives and 1 function call,

  which makes tasking the most elegant and compact solution,

- controlling synchronization: from the programmer’s point of view, this seems more problematic than the code length, specifically at how many distinct points in the code threads need to synchronize explicitly. Here, the easiest code to manage is tasking/tasking2, as synchronization of independently executed tasks is performed in a single thread. It is followed by integrated-master, which synchronizes with a lock in two places; by dynamic-for/dynamic-for2, which require thread synchronization within #pragma omp parallel, specifically using atomics; and by designated-master, which uses two locks, each in two places. This aspect potentially indicates how error-prone each of these implementations can be for a programmer.

5. Conclusions and Future Work

Funding

Conflicts of Interest

References

1. Czarnul, P. Parallel Programming for Modern High Performance Computing Systems; Chapman and Hall/CRC Press/Taylor & Francis: Boca Raton, FL, USA, 2018.

2. Klemm, M.; Supinski, B.R. (Eds.) OpenMP Application Programming Interface Specification Version 5.0; OpenMP Architecture Review Board: Chicago, IL, USA, 2019; ISBN 978-1795759885.

3. Ohberg, T.; Ernstsson, A.; Kessler, C. Hybrid CPU–GPU execution support in the skeleton programming framework SkePU. J. Supercomput. 2019, 76, 5038–5056.

4. Ernstsson, A.; Li, L.; Kessler, C. SkePU 2: Flexible and Type-Safe Skeleton Programming for Heterogeneous Parallel Systems. Int. J. Parallel Program. 2018, 46, 62–80.

5. Augonnet, C.; Thibault, S.; Namyst, R.; Wacrenier, P.A. StarPU: A unified platform for task scheduling on heterogeneous multicore architectures. Concurr. Comput. Pract. Exp. 2011, 23, 187–198.

6. Pop, A.; Cohen, A. OpenStream: Expressiveness and data-flow compilation of OpenMP streaming programs. ACM Trans. Archit. Code Optim. 2013, 9.

7. Pop, A.; Cohen, A. A Stream-Computing Extension to OpenMP. In Proceedings of the 6th International Conference on High Performance and Embedded Architectures and Compilers, HiPEAC ’11, Crete, Greece, 24–26 January 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 5–14.

8. Seo, S.; Amer, A.; Balaji, P.; Bordage, C.; Bosilca, G.; Brooks, A.; Carns, P.; Castelló, A.; Genet, D.; Herault, T.; et al. Argobots: A Lightweight Low-Level Threading and Tasking Framework. IEEE Trans. Parallel Distrib. Syst. 2018, 29, 512–526.

9. Pereira, A.D.; Ramos, L.; Góes, L.F.W. PSkel: A stencil programming framework for CPU-GPU systems. Concurr. Comput. Pract. Exp. 2015, 27, 4938–4953.

10. Balart, J.; Duran, A.; Gonzalez, M.; Martorell, X.; Ayguade, E.; Labarta, J. Skeleton driven transformations for an OpenMP compiler. In Proceedings of the 11th Workshop on Compilers for Parallel Computers (CPC 04), Chiemsee, Germany, 7–9 July 2004; pp. 123–134.

11. Bondhugula, U.; Baskaran, M.; Krishnamoorthy, S.; Ramanujam, J.; Rountev, A.; Sadayappan, P. Automatic Transformations for Communication-Minimized Parallelization and Locality Optimization in the Polyhedral Model; Compiler Construction; Hendren, L., Ed.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 132–146.

12. Czarnul, P. Parallelization of Divide-and-Conquer Applications on Intel Xeon Phi with an OpenMP Based Framework. In Information Systems Architecture and Technology: Proceedings of 36th International Conference on Information Systems Architecture and Technology—ISAT 2015—Part III; Swiatek, J., Borzemski, L., Grzech, A., Wilimowska, Z., Eds.; Springer: Cham, Switzerland, 2016; pp. 99–111.

13. Fernández, A.; Beltran, V.; Martorell, X.; Badia, R.M.; Ayguadé, E.; Labarta, J. Task-Based Programming with OmpSs and Its Application. In Euro-Par 2014: Parallel Processing Workshops; Lopes, L., Žilinskas, J., Costan, A., Cascella, R.G., Kecskemeti, G., Jeannot, E., Cannataro, M., Ricci, L., Benkner, S., Petit, S., et al., Eds.; Springer: Cham, Switzerland, 2014; pp. 601–612.

14. Vidal, R.; Casas, M.; Moretó, M.; Chasapis, D.; Ferrer, R.; Martorell, X.; Ayguadé, E.; Labarta, J.; Valero, M. Evaluating the Impact of OpenMP 4.0 Extensions on Relevant Parallel Workloads. In OpenMP: Heterogenous Execution and Data Movements; Terboven, C., de Supinski, B.R., Reble, P., Chapman, B.M., Müller, M.S., Eds.; Springer: Cham, Switzerland, 2015; pp. 60–72.

15. Ciesko, J.; Mateo, S.; Teruel, X.; Beltran, V.; Martorell, X.; Badia, R.M.; Ayguadé, E.; Labarta, J. Task-Parallel Reductions in OpenMP and OmpSs. In Using and Improving OpenMP for Devices, Tasks, and More; DeRose, L., de Supinski, B.R., Olivier, S.L., Chapman, B.M., Müller, M.S., Eds.; Springer: Cham, Switzerland, 2014; pp. 1–15.

16. Bosch, J.; Vidal, M.; Filgueras, A.; Álvarez, C.; Jiménez-González, D.; Martorell, X.; Ayguadé, E. Breaking master–slave Model between Host and FPGAs. In Proceedings of the 25th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPoPP ’20, San Diego, CA, USA, 22–26 February 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 419–420.

17. Dongarra, J.; Gates, M.; Haidar, A.; Kurzak, J.; Luszczek, P.; Wu, P.; Yamazaki, I.; Yarkhan, A.; Abalenkovs, M.; Bagherpour, N.; et al. PLASMA: Parallel Linear Algebra Software for Multicore Using OpenMP. ACM Trans. Math. Softw. 2019, 45.

18. Valero-Lara, P.; Catalán, S.; Martorell, X.; Usui, T.; Labarta, J. sLASs: A fully automatic auto-tuned linear algebra library based on OpenMP extensions implemented in OmpSs (LASs Library). J. Parallel Distrib. Comput. 2020, 138, 153–171.

19. Schmider, H. Shared-Memory Programming with OpenMP; Ontario HPC Summer School, Centre for Advance Computing, Queen’s University: Kingston, ON, Canada, 2018.

20. Hadjidoukas, P.E.; Polychronopoulos, E.D.; Papatheodorou, T.S. OpenMP for Adaptive master–slave Message Passing Applications. In High Performance Computing; Veidenbaum, A., Joe, K., Amano, H., Aiso, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 540–551.

21. Liu, G.; Schmider, H.; Edgecombe, K.E. A Hybrid Double-Layer master–slave Model For Multicore-Node Clusters. J. Phys. Conf. Ser. 2012, 385, 12011.

22. Leopold, C.; Süß, M. Observations on MPI-2 Support for Hybrid Master/Slave Applications in Dynamic and Heterogeneous Environments. In Recent Advances in Parallel Virtual Machine and Message Passing Interface; Mohr, B., Träff, J.L., Worringen, J., Dongarra, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2006.

23. Scogland, T.; de Supinski, B. A Case for Extending Task Dependencies. In OpenMP: Memory, Devices, and Tasks; Maruyama, N., de Supinski, B.R., Wahib, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 130–140.

24. Wang, C.K.; Chen, P.S. Automatic scoping of task clauses for the OpenMP tasking model. J. Supercomput. 2015, 71, 808–823.

25. Wittmann, M.; Hager, G. A Proof of Concept for Optimizing Task Parallelism by Locality Queues. arXiv 2009, arXiv:0902.1884.

26. Czarnul, P. Programming, Tuning and Automatic Parallelization of Irregular Divide-and-Conquer Applications in DAMPVM/DAC. Int. J. High Perform. Comput. Appl. 2003, 17, 77–93.

27. Eijkhout, V.; van de Geijn, R.; Chow, E. Introduction to High Performance Scientific Computing; lulu.com. 2011. Available online: http://www.tacc.utexas.edu/~eijkhout/istc/istc.html (accessed on 16 April 2021).

28. Eijkhout, V. Parallel Programming in MPI and OpenMP. 2016. Available online: https://pages.tacc.utexas.edu/~eijkhout/pcse/html/index.html (accessed on 16 April 2021).

29. Czarnul, P.; Proficz, J.; Krzywaniak, A. Energy-Aware High-Performance Computing: Survey of State-of-the-Art Tools, Techniques, and Environments. Sci. Program. 2019, 2019, 8348791.

30. Krzywaniak, A.; Proficz, J.; Czarnul, P. Analyzing Energy/Performance Trade-Offs with Power Capping for Parallel Applications On Modern Multi and Many Core Processors. In Proceedings of the 2018 Federated Conference on Computer Science and Information Systems (FedCSIS), Poznań, Poland, 9–12 September 2018; pp. 339–346.

31. Krzywaniak, A.; Czarnul, P.; Proficz, J. Extended Investigation of Performance-Energy Trade-Offs under Power Capping in HPC Environments; 2019. In Proceedings of the High Performance Computing Systems Conference, International Workshop on Optimization Issues in Energy Efficient HPC & Distributed Systems, Dublin, Ireland, 15–19 July 2019.

32. Prabhakar, A.; Getov, V.; Chapman, B. Performance Comparisons of Basic OpenMP Constructs. In High Performance Computing; Zima, H.P., Joe, K., Sato, M., Seo, Y., Shimasaki, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2002; pp. 413–424.

33. Berrendorf, R.; Nieken, G. Performance characteristics for OpenMP constructs on different parallel computer architectures. Concurr. Pract. Exp. 2000, 12, 1261–1273.

| Testbed | 1 | 2 |
|---|---|---|
| CPUs | Intel(R) Core(TM) i7-7700 CPU 3.60 GHz Kaby Lake, 8 MB cache | 2 × Intel(R) Xeon(R) CPU E5-2620 v4 2.10 GHz Broadwell, 20 MB cache per CPU |
| CPUs: total number of physical/logical processors | 4/8 | 16/32 |
| System memory size (RAM) [GB] | 16 | 128 |
| Operating system | Ubuntu 18.04.1 LTS | Ubuntu 20.04.1 LTS |
| Compiler/version | gcc version 9.3.0 (Ubuntu 9.3.0-11ubuntu0 18.04.1) | gcc version 9.3.0 (Ubuntu 9.3.0-17ubuntu1 20.04) |

| Part. Coeff. | Version | Time 1/std dev/Affinity/Number of Threads | Time 2/std dev/Affinity/Number of Threads | Time 3/std dev/Affinity/Number of Threads |
|---|---|---|---|---|
| 1 | integrated-master | 18.555/0.062/thrclose/8 | 18.610/0.068/corspread/8 | 18.641/0.086/corclose/8 |
| | designated-master | 21.088/0.078/corspread/8 | 21.098/0.090/default/8 | 21.106/0.096/noprocbind/8 |
| | tasking | 18.363/0.047/noprocbind/16 | 18.416/0.094/default/16 | 18.595/0.071/thrclose/8 |
| | tasking2 | 18.394/0.088/noprocbind/16 | 18.411/0.093/default/16 | 18.654/0.092/thrclose/8 |
| | dynamic-for | 18.389/0.079/default/16 | 18.428/0.105/noprocbind/16 | 18.554/0.073/corclose/16 |
| | dynamic-for2 | 18.399/0.093/default/16 | 18.416/0.101/noprocbind/16 | 18.572/0.073/corclose/16 |
| 8 | integrated-master | 27.333/0.105/thrclose/8 | 27.341/0.106/default/8 | 27.373/0.084/noprocbind/8 |
| | designated-master | 30.885/0.102/corspread/8 | 30.898/0.111/thrclose/8 | 30.956/0.130/noprocbind/8 |
| | tasking | 26.844/0.081/default/16 | 26.898/0.146/noprocbind/16 | 27.325/0.116/default/8 |
| | tasking2 | 26.865/0.131/default/16 | 26.901/0.161/noprocbind/16 | 27.299/0.134/thrclose/8 |
| | dynamic-for | 26.865/0.105/default/16 | 26.899/0.155/noprocbind/16 | 27.217/0.121/corspread/16 |
| | dynamic-for2 | 26.830/0.073/noprocbind/16 | 26.930/0.158/default/16 | 27.204/0.115/corclose/16 |
| 32 | integrated-master | 34.492/0.157/thrclose/8 | 34.526/0.137/corspread/8 | 34.555/0.202/corclose/8 |
| | designated-master | 39.005/0.149/thrclose/8 | 39.015/0.199/corclose/8 | 39.039/0.174/default/8 |
| | tasking | 33.816/0.151/noprocbind/16 | 33.889/0.333/default/16 | 34.356/0.149/noprocbind/8 |
| | tasking2 | 33.828/0.174/noprocbind/16 | 33.838/0.152/default/16 | 34.340/0.148/thrclose/8 |
| | dynamic-for | 33.781/0.165/noprocbind/16 | 33.808/0.148/default/16 | 34.354/0.165/thrclose/16 |
| | dynamic-for2 | 33.826/0.180/noprocbind/16 | 33.860/0.155/default/16 | 34.260/0.127/corclose/16 |

| Part. Coeff. | Version | Time 1/std dev/Affinity/Number of Threads | Time 2/std dev/Affinity/Number of Threads | Time 3/std dev/Affinity/Number of Threads |
|---|---|---|---|---|
| 1 | integrated-master | 9.158/0.117/corspread/32 | 9.201/0.145/thrclose/32 | 9.214/0.217/sockets/32 |
| | designated-master | 9.585/0.149/corclose/33 | 9.601/0.197/thrclose/33 | 9.638/0.122/default/33 |
| | tasking | 8.567/0.017/default/64 | 8.585/0.027/noprocbind/64 | 8.664/0.025/sockets/64 |
| | tasking2 | 8.599/0.033/default/64 | 8.602/0.025/noprocbind/64 | 8.677/0.026/sockets/64 |
| | dynamic-for | 8.584/0.025/noprocbind/64 | 8.584/0.032/default/64 | 8.649/0.024/sockets/64 |
| | dynamic-for2 | 8.570/0.024/default/64 | 8.573/0.021/noprocbind/64 | 8.636/0.024/sockets/64 |
| 8 | integrated-master | 13.718/0.127/corclose/32 | 13.748/0.182/corspread/32 | 13.770/0.111/default/32 |
| | designated-master | 14.402/0.105/corclose/33 | 14.447/0.529/thrclose/32 | 14.481/0.677/sockets/32 |
| | tasking | 12.724/0.034/default/64 | 12.727/0.040/noprocbind/64 | 12.776/0.038/sockets/64 |
| | tasking2 | 12.749/0.044/default/64 | 12.771/0.035/noprocbind/64 | 12.796/0.044/sockets/64 |
| | dynamic-for | 12.792/0.041/default/64 | 12.796/0.033/noprocbind/64 | 12.845/0.031/sockets/64 |
| | dynamic-for2 | 12.731/0.031/default/64 | 12.753/0.040/noprocbind/64 | 12.811/0.047/sockets/64 |
| 32 | integrated-master | 17.471/0.080/corspread/32 | 17.486/0.105/corclose/32 | 17.551/0.152/thrclose/32 |
| | designated-master | 18.359/0.839/corspread/32 | 18.423/0.447/default/32 | 18.431/0.205/corclose/33 |
| | tasking | 16.116/0.051/noprocbind/64 | 16.120/0.055/sockets/64 | 16.175/0.420/default/64 |
| | tasking2 | 16.119/0.039/default/64 | 16.142/0.062/noprocbind/64 | 16.157/0.042/sockets/64 |
| | dynamic-for | 16.181/0.049/default/64 | 16.210/0.043/noprocbind/64 | 16.228/0.046/sockets/64 |
| | dynamic-for2 | 16.116/0.025/default/64 | 16.119/0.043/noprocbind/64 | 16.152/0.038/sockets/64 |

| Comp. Coeff. | Version | Time 1/std dev/Affinity/Number of Threads | Time 2/std dev/Affinity/Number of Threads | Time 3/std dev/Affinity/Number of Threads |
|---|---|---|---|---|
| 2 | integrated-master | 9.530/0.2104/noprocbind/8 | 9.561/0.173/default/8 | 9.578/0.183/thrclose/8 |
| | designated-master | 10.388/0.125/thrclose/8 | 10.434/0.179/noprocbind/8 | 10.450/0.222/default/8 |
| | tasking | 9.576/0.175/default/8 | 9.622/0.166/noprocbind/8 | 9.697/0.188/corclose/8 |
| | tasking2 | 12.762/0.059/noprocbind/8 | 12.777/0.093/thrclose/8 | 12.782/0.081/default/8 |
| | dynamic-for | 9.389/0.131/thrclose/8 | 9.392/0.156/noprocbind/8 | 9.403/0.151/default/8 |
| | dynamic-for2 | 9.378/0.135/thrclose/8 | 9.395/0.165/default/8 | 9.446/0.176/default/16 |
| 4 | integrated-master | 18.406/0.297/noprocbind/8 | 18.428/0.329/corclose/8 | 18.492/0.352/default/8 |
| | designated-master | 20.175/0.196/corspread/8 | 20.219/0.305/default/8 | 20.404/0.367/noprocbind/8 |
| | tasking | 18.505/0.428/noprocbind/8 | 18.514/0.308/thrclose/8 | 18.540/0.353/default/8 |
| | tasking2 | 24.935/0.154/noprocbind/8 | 24.940/0.150/corspread/8 | 24.967/0.244/thrclose/8 |
| | dynamic-for | 18.332/0.264/noprocbind/8 | 18.332/0.475/default/8 | 18.405/0.442/corspread/8 |
| | dynamic-for2 | 18.282/0.229/corspread/8 | 18.318/0.407/thrclose/8 | 18.367/0.408/default/8 |
| 8 | integrated-master | 35.995/0.678/noprocbind/8 | 36.096/0.726/default/8 | 36.282/0.612/thrclose/8 |
| | designated-master | 39.969/0.526/default/8 | 40.120/0.595/corclose/8 | 40.163/0.623/thrclose/8 |
| | tasking | 36.223/0.718/noprocbind/8 | 36.307/0.691/corspread/8 | 36.372/0.664/thrclose/8 |
| | tasking2 | 49.418/0.225/default/8 | 49.438/0.411/noprocbind/8 | 49.444/0.326/corspread/8 |
| | dynamic-for | 35.852/0.503/default/8 | 36.018/0.596/corspread/16 | 36.129/0.597/noprocbind/16 |
| | dynamic-for2 | 35.969/0.462/thrclose/8 | 36.099/0.669/default/8 | 36.190/0.675/noprocbind/8 |

| Comp. Coeff. | Version | Time 1/std dev/Affinity/Number of Threads | Time 2/std dev/Affinity/Number of Threads | Time 3/std dev/Affinity/Number of Threads |
|---|---|---|---|---|
| 2 | integrated-master | 6.406/0.321/thrclose/32 | 6.880/0.918/default/32 | 7.002/0.738/corclose/32 |
| | designated-master | 6.283/0.311/sockets/33 | 6.644/0.364/noprocbind/33 | 6.697/0.463/default/33 |
| | tasking | 6.164/0.145/corclose/64 | 6.223/0.181/corspread/64 | 6.249/0.117/sockets/64 |
| | tasking2 | 5.981/0.208/corclose/64 | 5.995/0.165/corspread/64 | 5.997/0.067/sockets/64 |
| | dynamic-for | 5.705/0.208/default/32 | 5.722/0.105/sockets/64 | 5.739/0.088/corclose/32 |
| | dynamic-for2 | 5.682/0.072/sockets/32 | 5.697/0.055/noprocbind/32 | 5.709/0.099/default/32 |
| 4 | integrated-master | 11.583/0.564/noprocbind/32 | 11.661/0.218/sockets/32 | 11.716/0.420/corclose/32 |
| | designated-master | 11.808/1.572/corclose/32 | 11.857/1.097/sockets/33 | 11.878/0.803/noprocbind/33 |
| | tasking | 10.848/0.085/default/32 | 10.889/0.128/corclose/32 | 10.903/0.142/sockets/32 |
| | tasking2 | 10.460/0.141/sockets/32 | 10.472/0.145/corspread/32 | 10.485/0.170/default/32 |
| | dynamic-for | 10.625/0.140/default/32 | 10.629/0.133/corclose/32 | 10.635/0.161/noprocbind/32 |
| | dynamic-for2 | 10.585/0.150/sockets/32 | 10.598/0.100/noprocbind/32 | 10.610/0.140/default/32 |
| 8 | integrated-master | 20.556/0.620/noprocbind/32 | 20.595/0.708/corclose/32 | 20.738/0.861/corspread/32 |
| | designated-master | 20.705/0.836/default/33 | 20.924/4.271/sockets/32 | 21.224/0.987/noprocbind/33 |
| | tasking | 20.014/0.197/sockets/32 | 20.054/0.201/corclose/32 | 20.076/0.235/corspread/32 |
| | tasking2 | 19.120/0.305/noprocbind/32 | 19.152/0.187/sockets/32 | 19.240/0.292/corspread/32 |
| | dynamic-for | 19.758/0.193/default/32 | 19.825/0.210/thrclose/32 | 19.828/0.219/corspread/32 |
| | dynamic-for2 | 19.816/0.229/noprocbind/32 | 19.828/0.249/default/32 | 19.863/0.256/thrclose/32 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Czarnul, P. Assessment of OpenMP Master–Slave Implementations for Selected Irregular Parallel Applications. Electronics 2021, 10, 1188. https://doi.org/10.3390/electronics10101188
