Visual-Feedback-Based Frame-by-Frame Synchronization for 3000 fps Projector–Camera Visual Light Communication

Abstract

1. Introduction

2. Visual-Feedback-Based Projector–Camera Synchronization

2.1. System Configuration

2.2. Visual-Feedback-Based Projector–Camera Synchronization

2.3. Verification

3. Real-Time Video Streaming Using VLC System

3.1. Transmitter

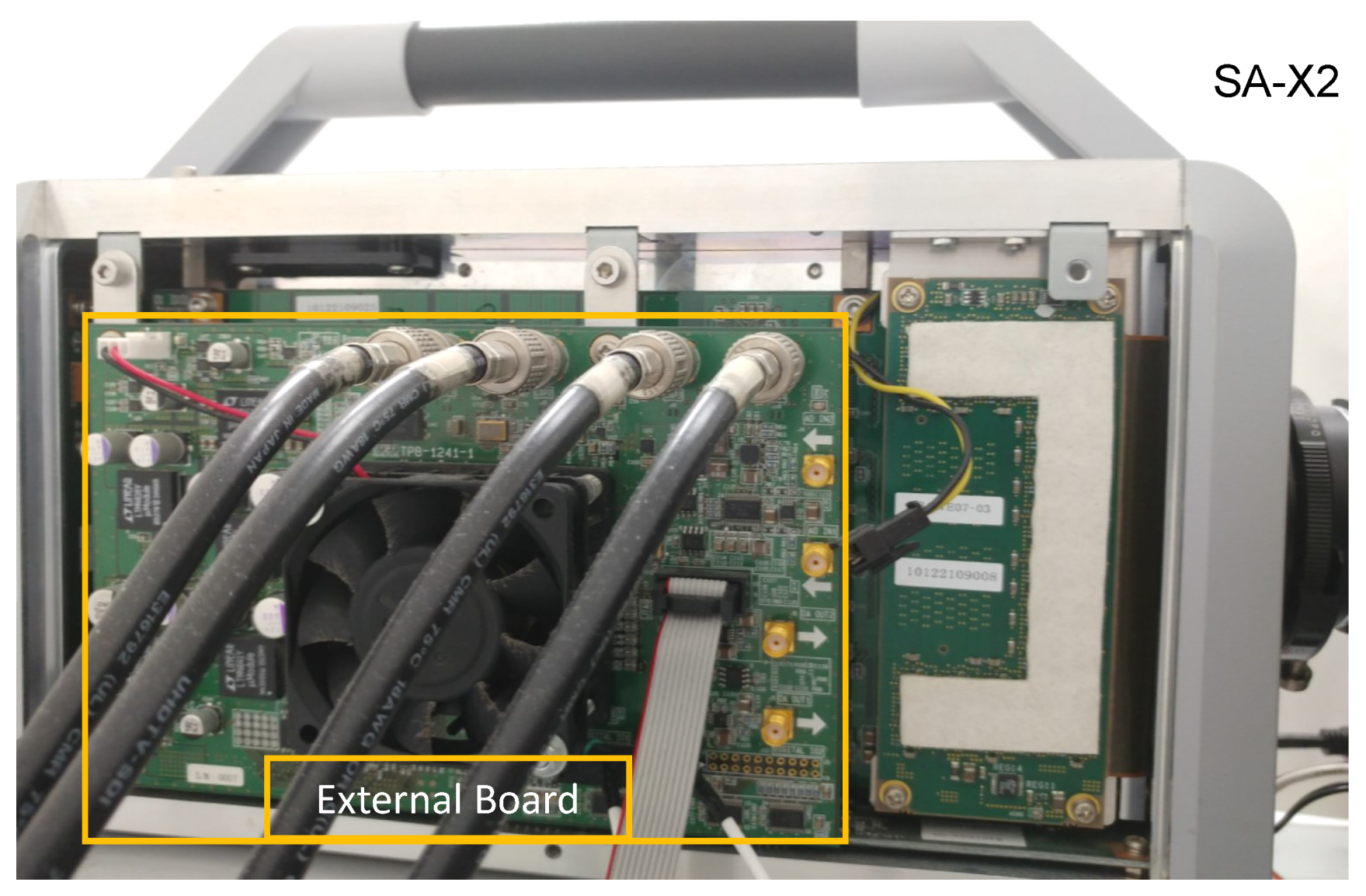

3.2. Receiver

3.3. Evaluation Parameter of Image Quality

4. Experiments

4.1. Synchronized Real-Time Video Reconstruction

4.2. Real-Time Video Reconstruction Using Two HFR Projectors

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sharma, A.; Raut, S.; Shimasaki, K.; Senoo, T.; Ishii, I. Visual-Feedback-Based Frame-by-Frame Synchronization for 3000 fps Projector–Camera Visual Light Communication. Electronics 2021, 10, 1631. https://doi.org/10.3390/electronics10141631
