Investigation of Shape-from-Focus Precision by Texture Frequency Analysis

Abstract

1. Introduction

2. Materials and Methods

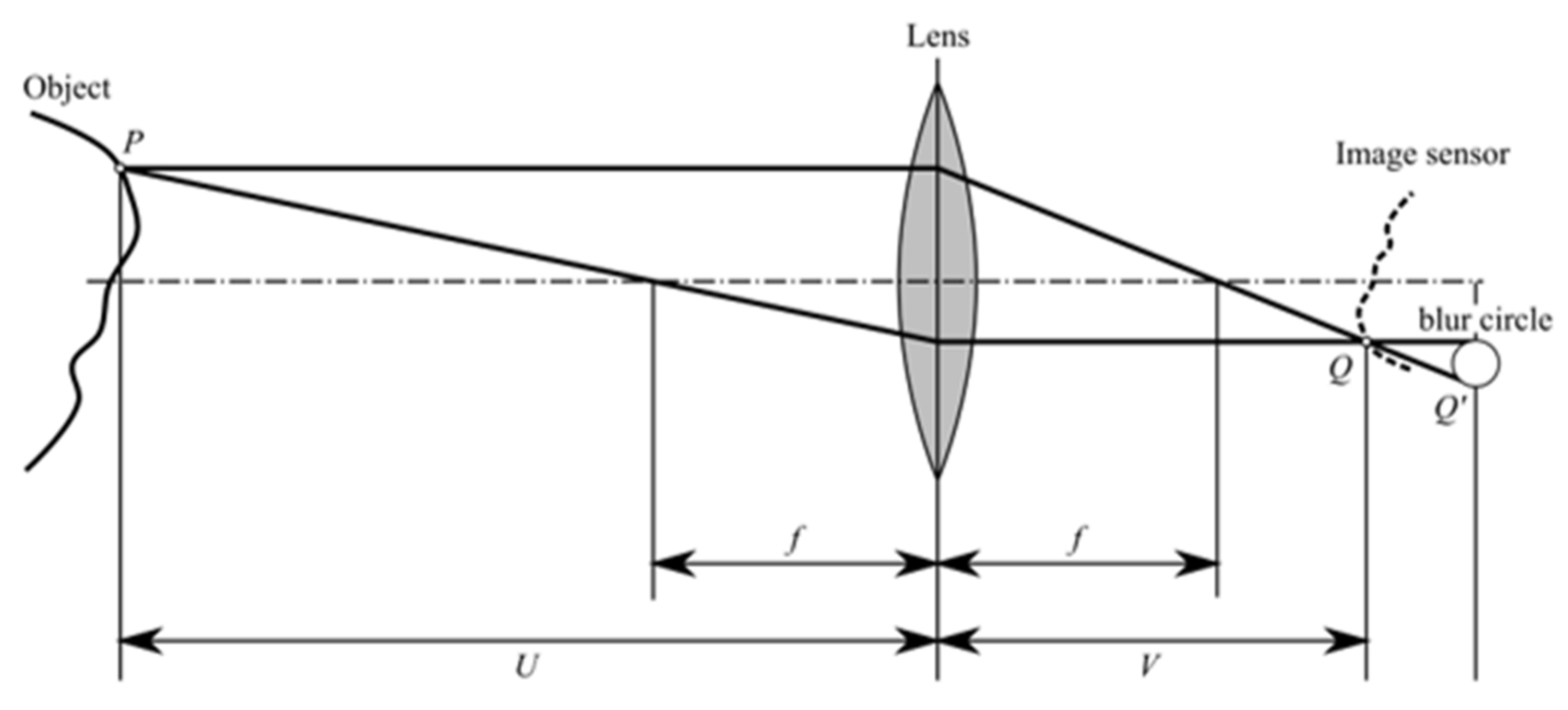

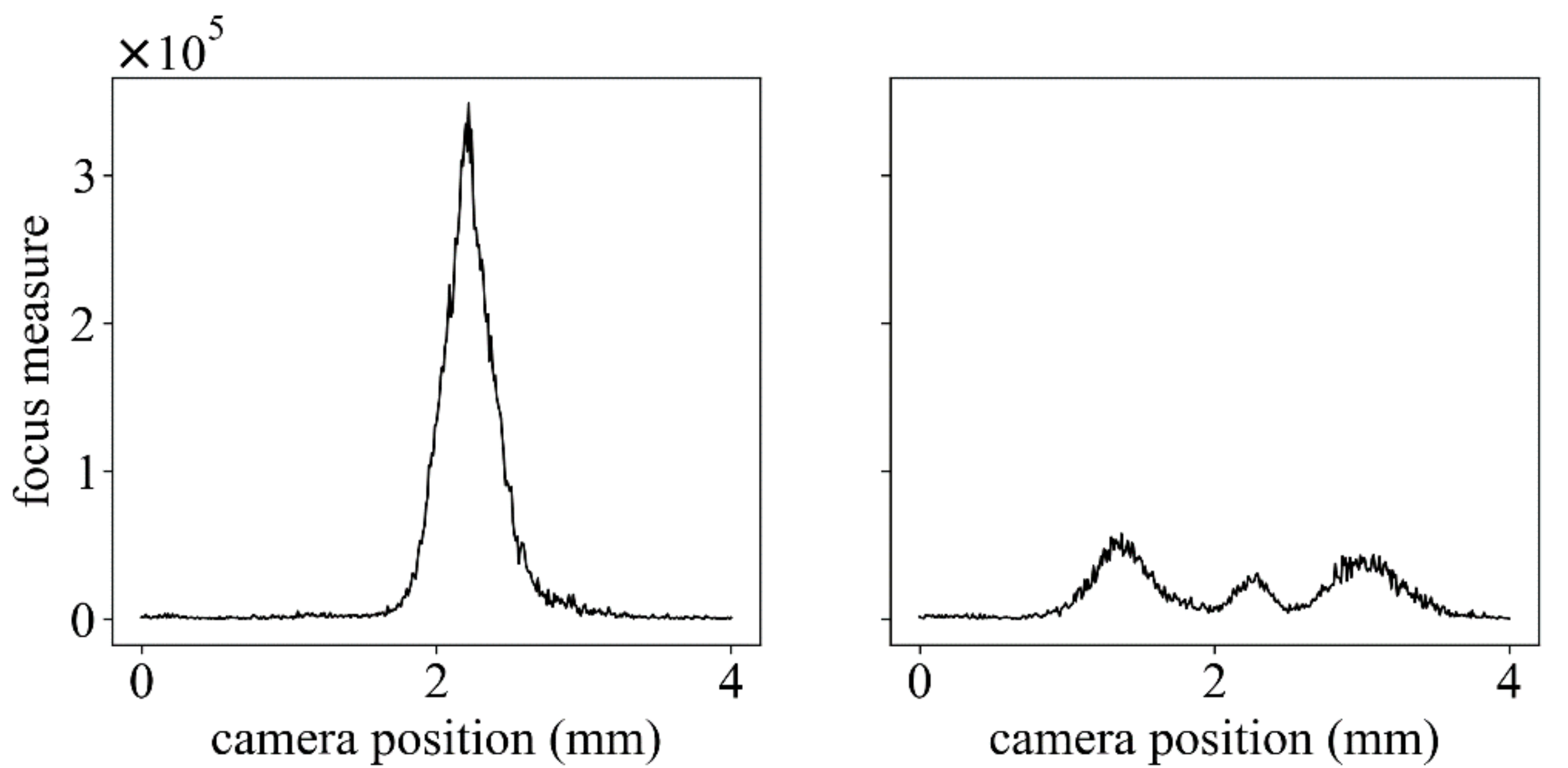

2.1. Shape-from-Focus

2.2. Experimental Setup

3. Experiments

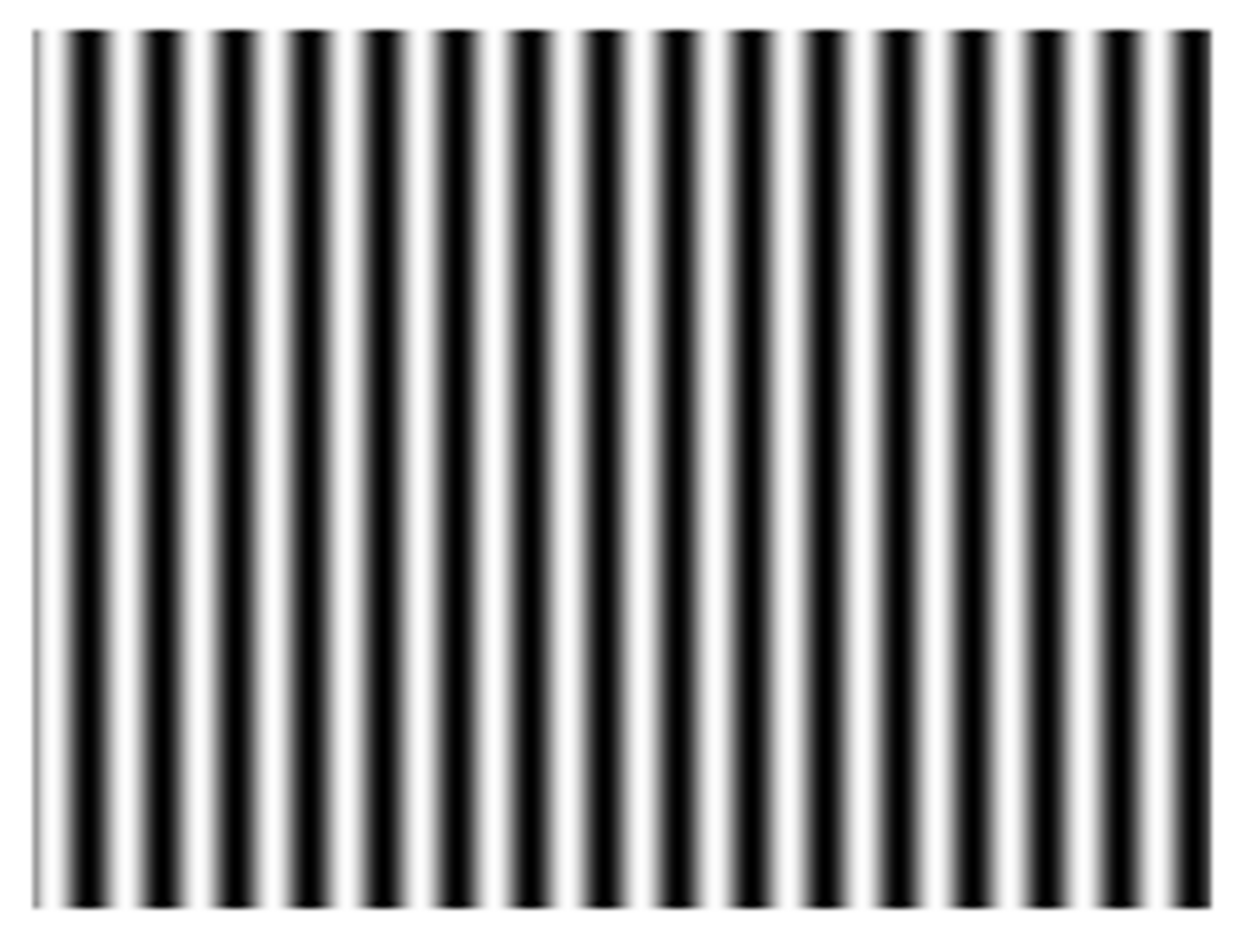

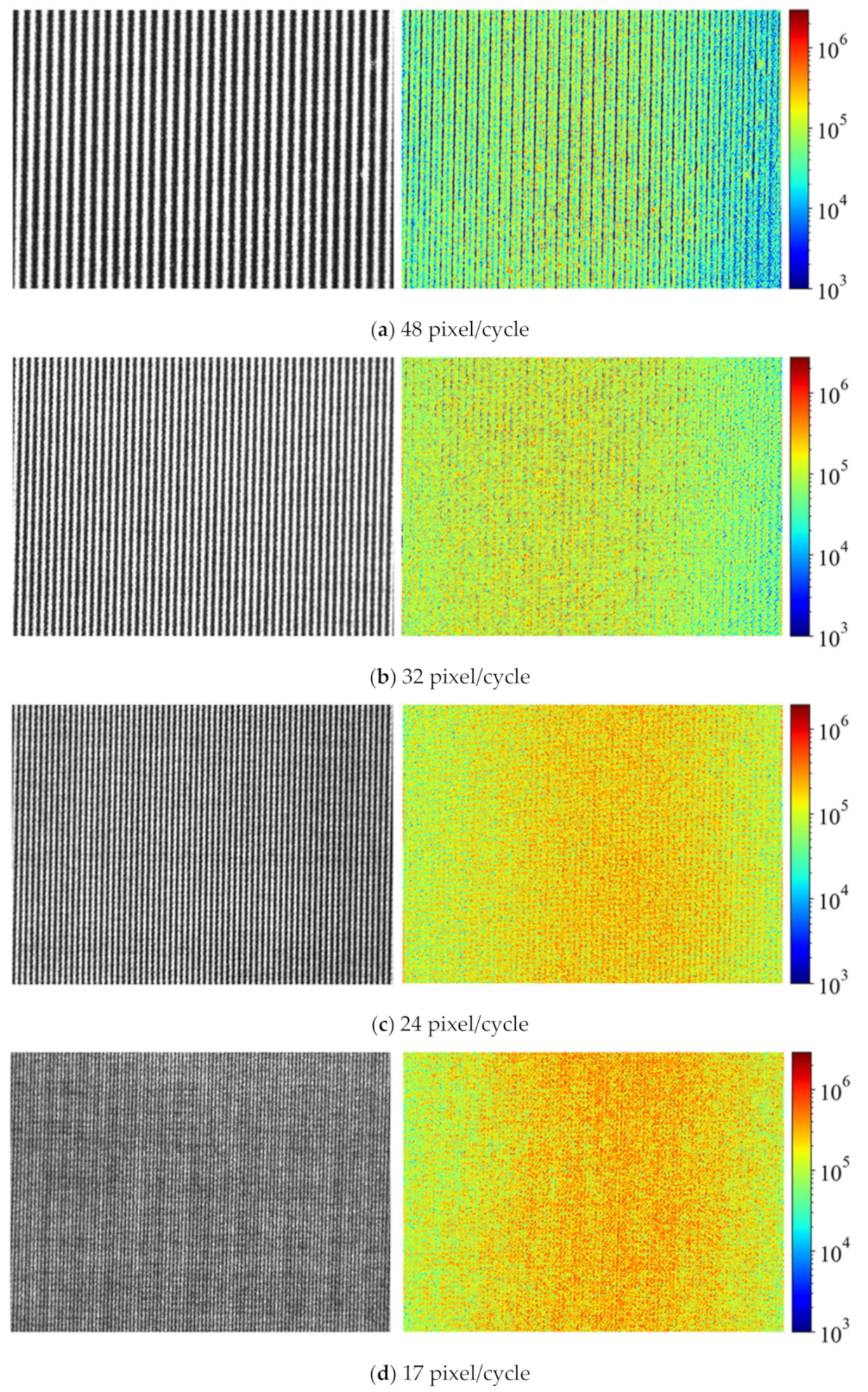

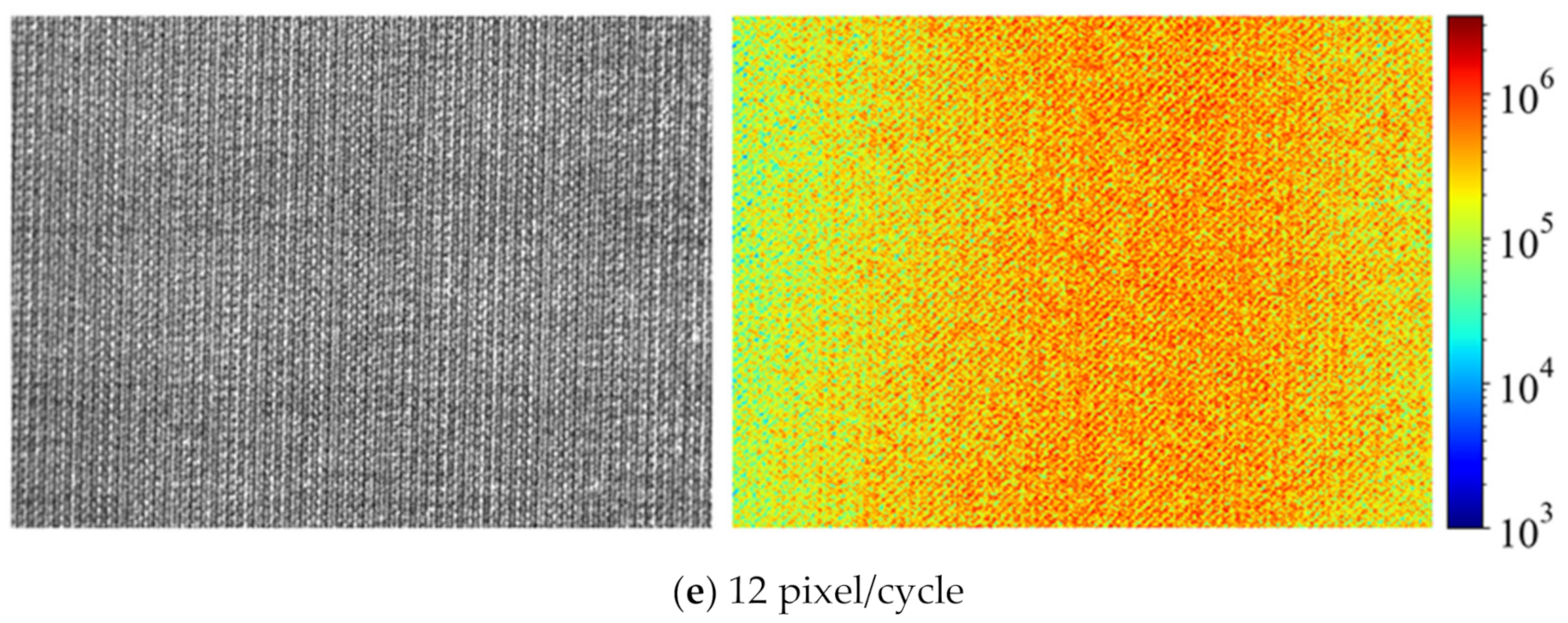

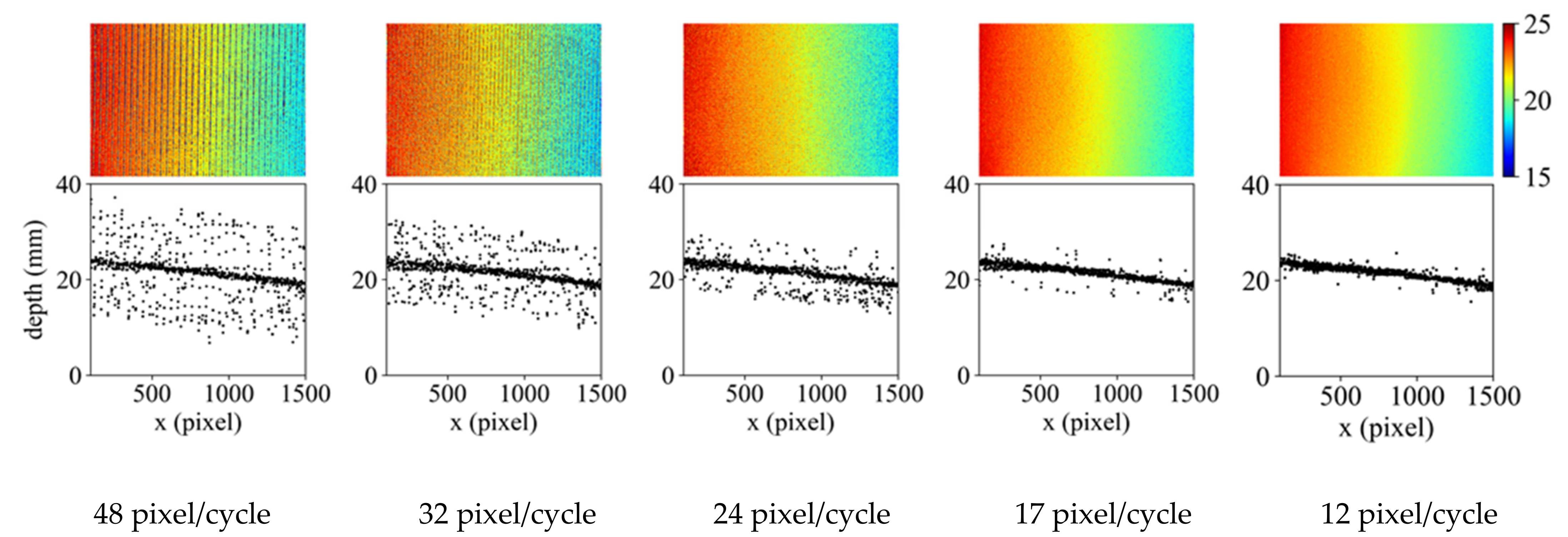

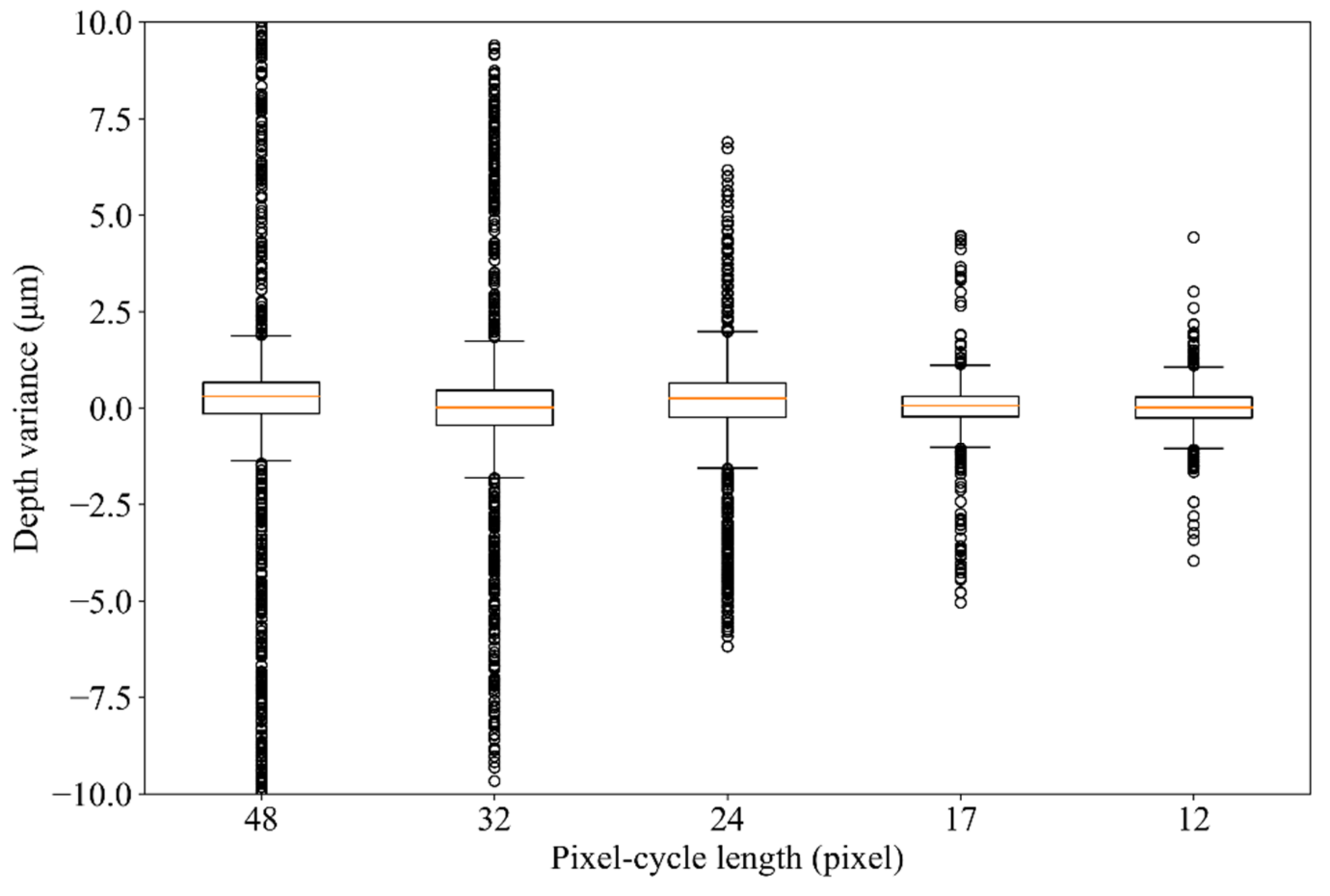

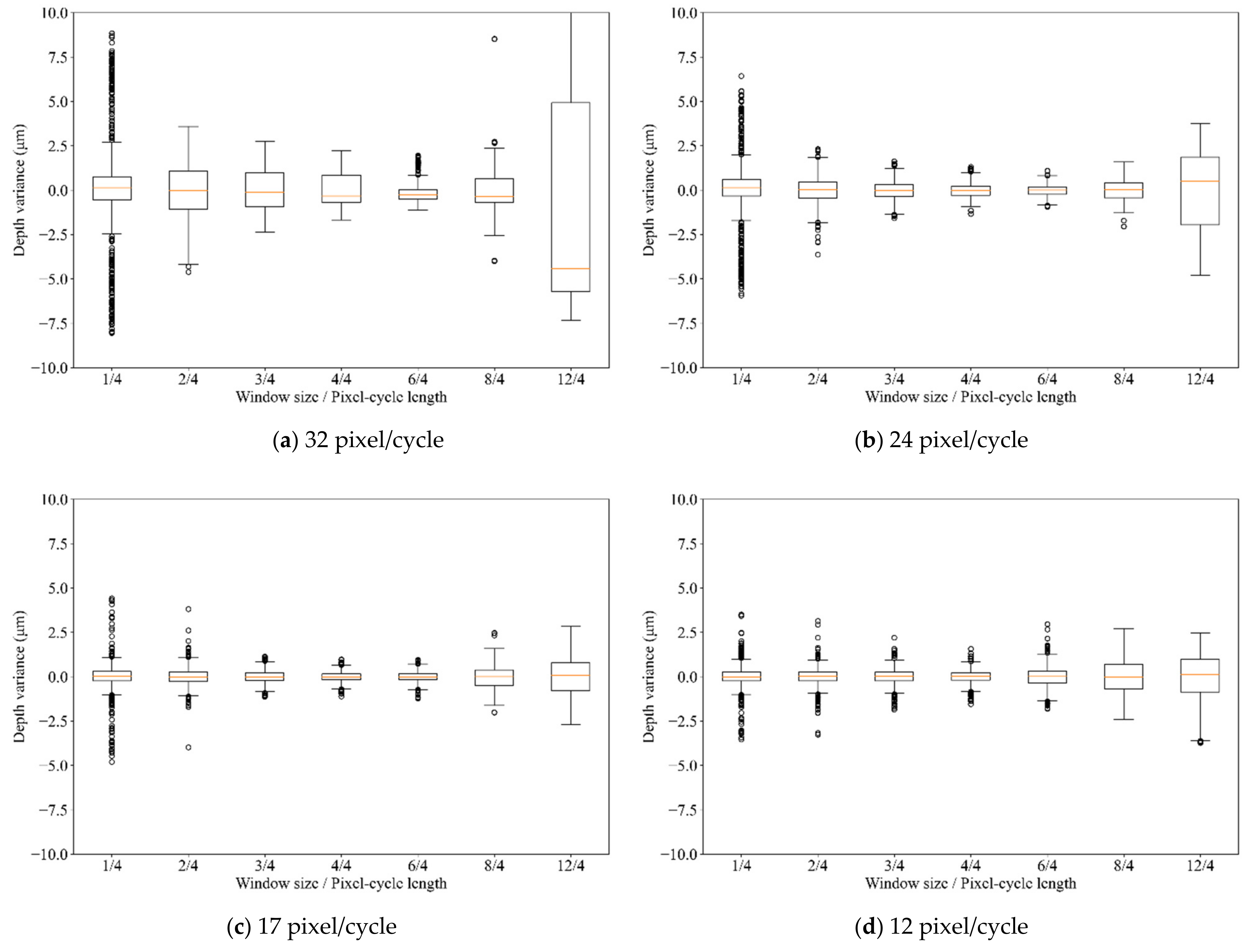

3.1. Precision Validation by Texture Frequencies

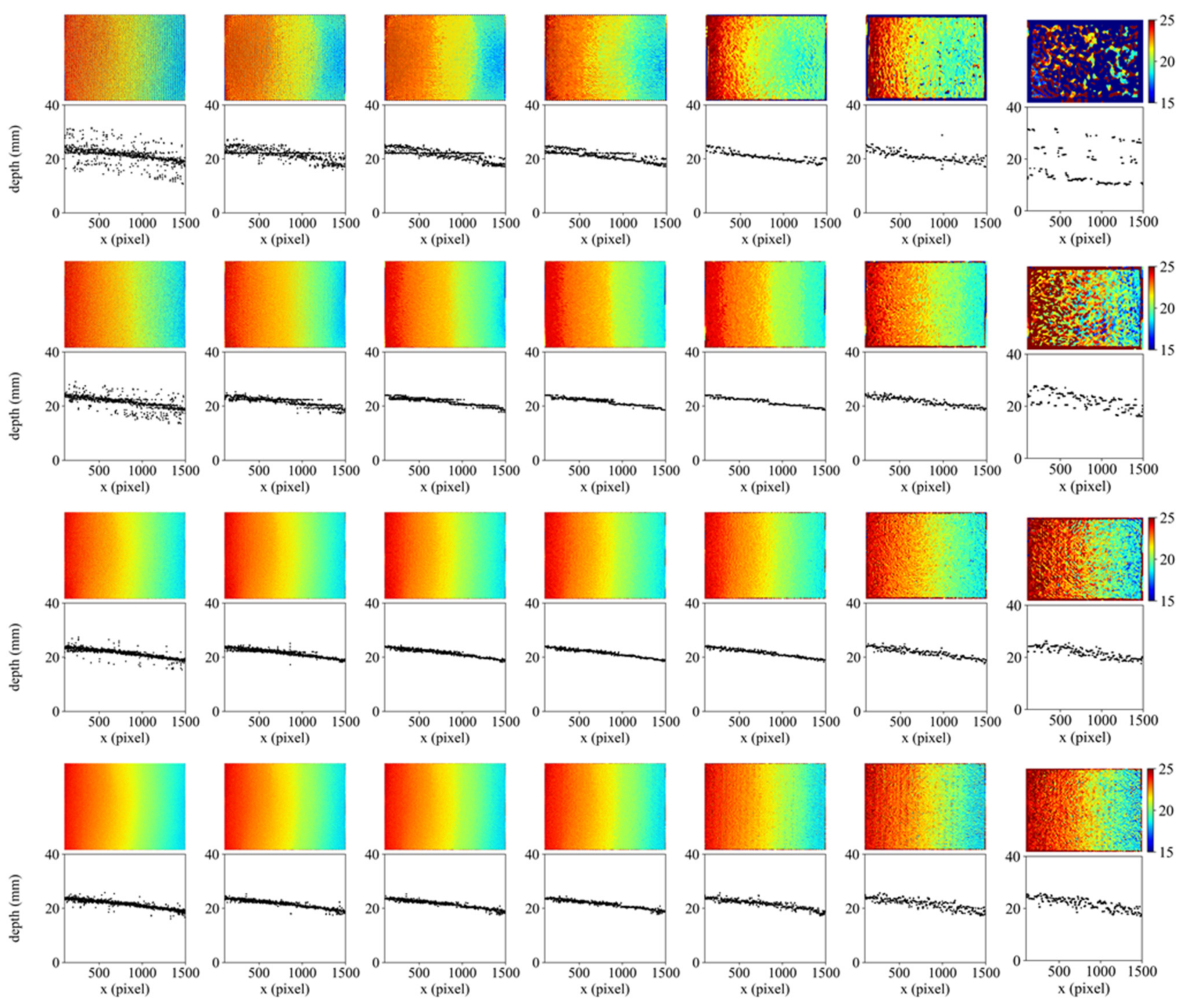

3.2. SFF Precision by Window Size

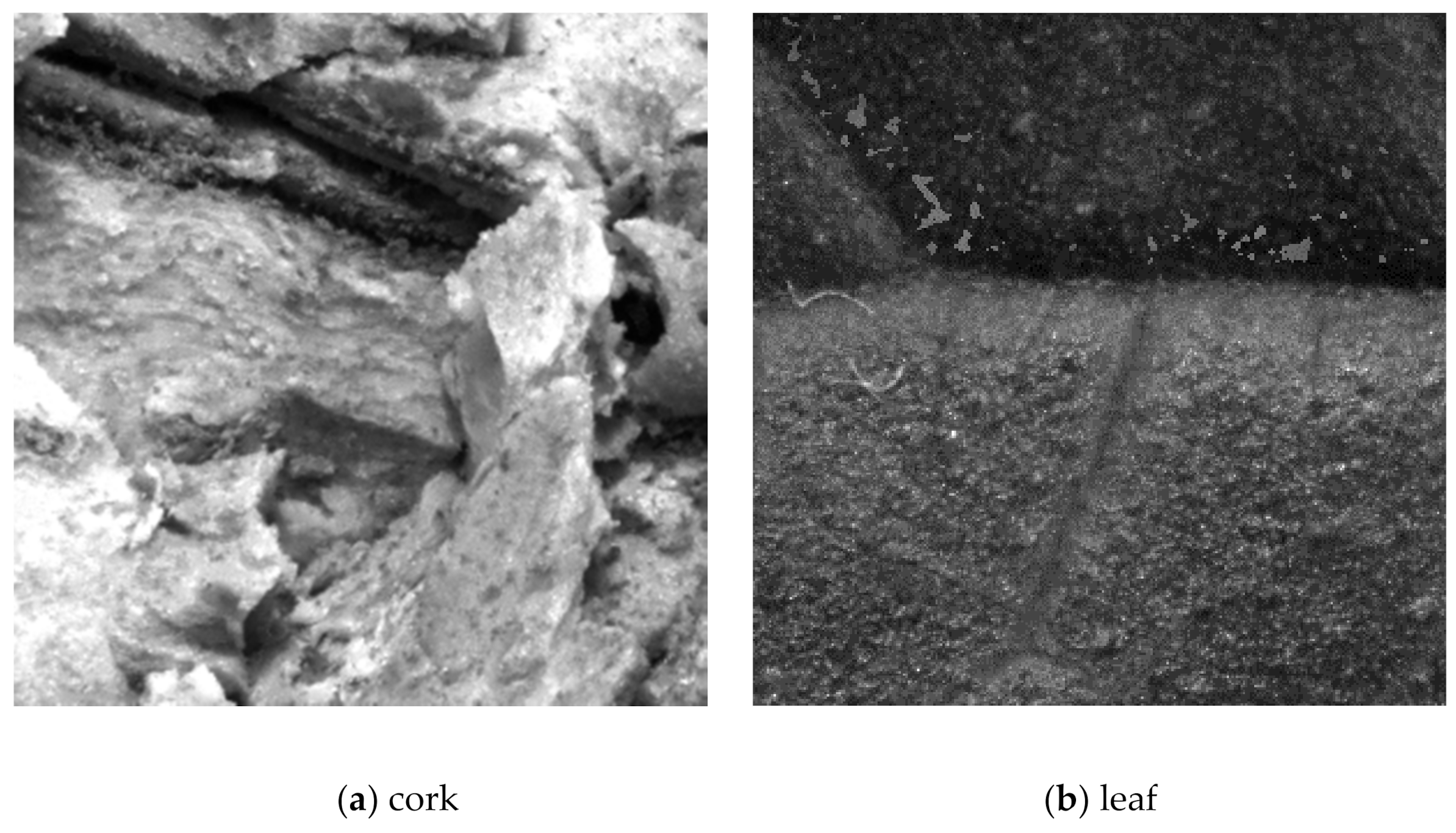

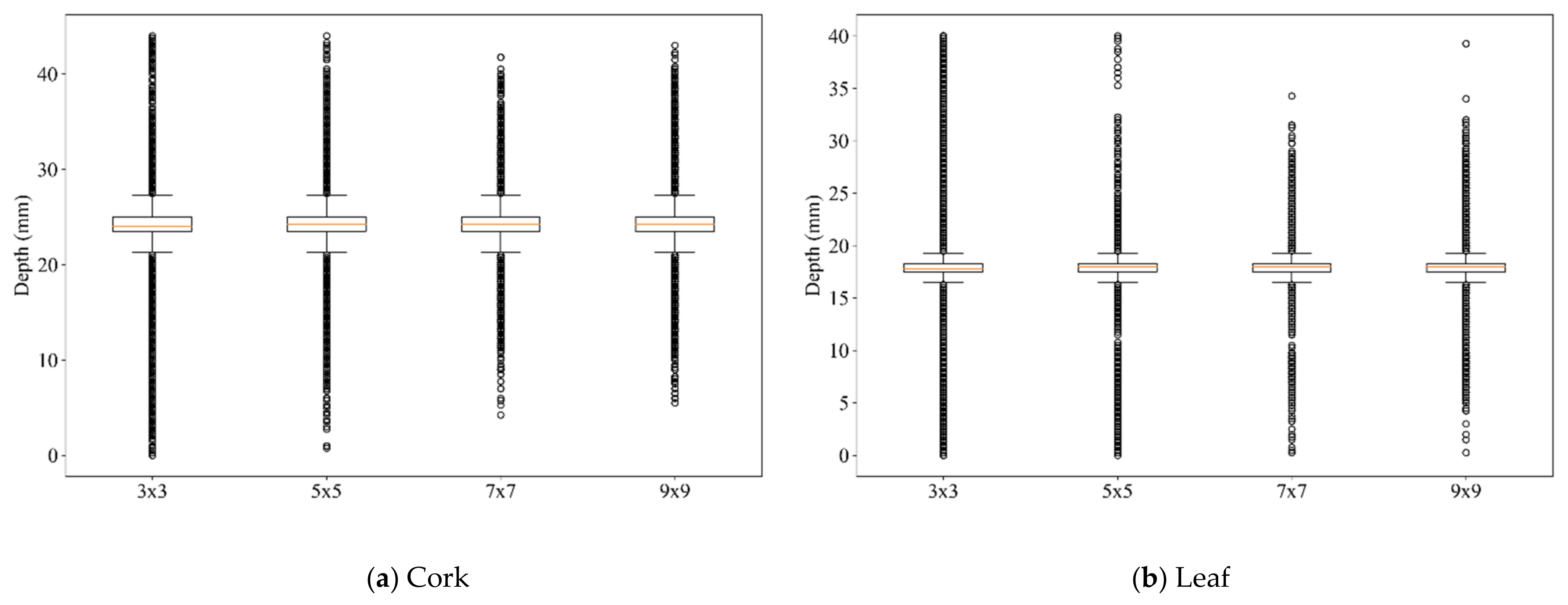

3.3. Qualitative Tests with Real Objects

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Cycle Length (Pixel/Cycle) | 48 | 32 | 24 | 17 | 12 |
|---|---|---|---|---|---|
| Std. Err. (mm) | 4.18 | 2.80 | 1.68 | 0.86 | 0.60 |
| Coefficient of Determination | 0.11 | 0.19 | 0.47 | 0.75 | 0.86 |

| Cycle Length (Pixel) | Window Size (Pixels) | | | | | | |
|---|---|---|---|---|---|---|---|
| 12 | 3 × 3 | 7 × 7 | 9 × 9 | 13 × 13 | 19 × 19 | 25 × 25 | 37 × 37 |
| 17 | 5 × 5 | 9 × 9 | 13 × 13 | 17 × 17 | 25 × 25 | 35 × 35 | 51 × 51 |
| 24 | 7 × 7 | 13 × 13 | 19 × 19 | 25 × 25 | 37 × 37 | 49 × 49 | 73 × 73 |
| 32 | 9 × 9 | 17 × 17 | 25 × 25 | 33 × 33 | 49 × 49 | 65 × 65 | 97 × 97 |
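
The window sizes in the table above appear to follow a single rule. This rule is inferred from the numbers themselves and is not stated in the text. For a cycle length L, each column appears to use a side length of 2⌊kL/2⌋ + 1 pixels, that is, roughly k·L forced to an odd size, with k = 0.25, 0.5, 0.75, 1, 1.5, 2 and 3 from left to right. The sketch below checks that reading against every tabulated row. The multipliers in `RATIOS` and the helper names `odd_window` and `window_row` are hypothetical and do not describe the authors' documented procedure.

```python
import math

# Hypothetical multipliers k, inferred by dividing each tabulated window
# side by its cycle length; the source does not state these values.
RATIOS = (0.25, 0.5, 0.75, 1.0, 1.5, 2.0, 3.0)

# Window sides (pixels) copied from the table, keyed by cycle length (pixels).
TABLE = {
    12: [3, 7, 9, 13, 19, 25, 37],
    17: [5, 9, 13, 17, 25, 35, 51],
    24: [7, 13, 19, 25, 37, 49, 73],
    32: [9, 17, 25, 33, 49, 65, 97],
}


def odd_window(cycle_length: int, ratio: float) -> int:
    """Side length 2*floor(ratio*L/2) + 1: about ratio*L, forced to be odd."""
    return 2 * math.floor(ratio * cycle_length / 2) + 1


def window_row(cycle_length: int) -> list[int]:
    """Predicted window sides for one cycle length, one per multiplier."""
    return [odd_window(cycle_length, k) for k in RATIOS]


if __name__ == "__main__":
    # Compare the predicted row with the tabulated row for each cycle length.
    for length, tabulated in TABLE.items():
        predicted = window_row(length)
        print(length, predicted, predicted == tabulated)
```

An odd side gives each window a well-defined centre pixel. Focus-measure windows are usually odd-sized for this reason. It is an assumption that the sizes here were chosen by this rule rather than by some other rule that yields the same numbers.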


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Onogi, S.; Kawase, T.; Sugino, T.; Nakajima, Y. Investigation of Shape-from-Focus Precision by Texture Frequency Analysis. Electronics 2021, 10, 1870. https://doi.org/10.3390/electronics10161870
