A Study of Cutaneous Perception Parameters for Designing Haptic Symbols towards Information Transfer

Abstract

1. Introduction

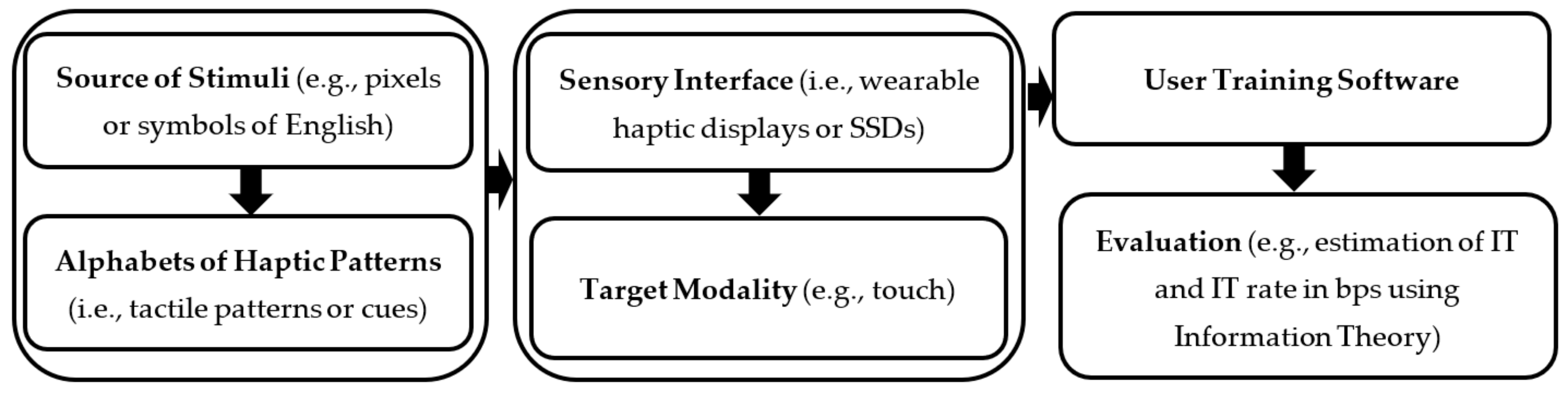

2. Considerations of SSS Design

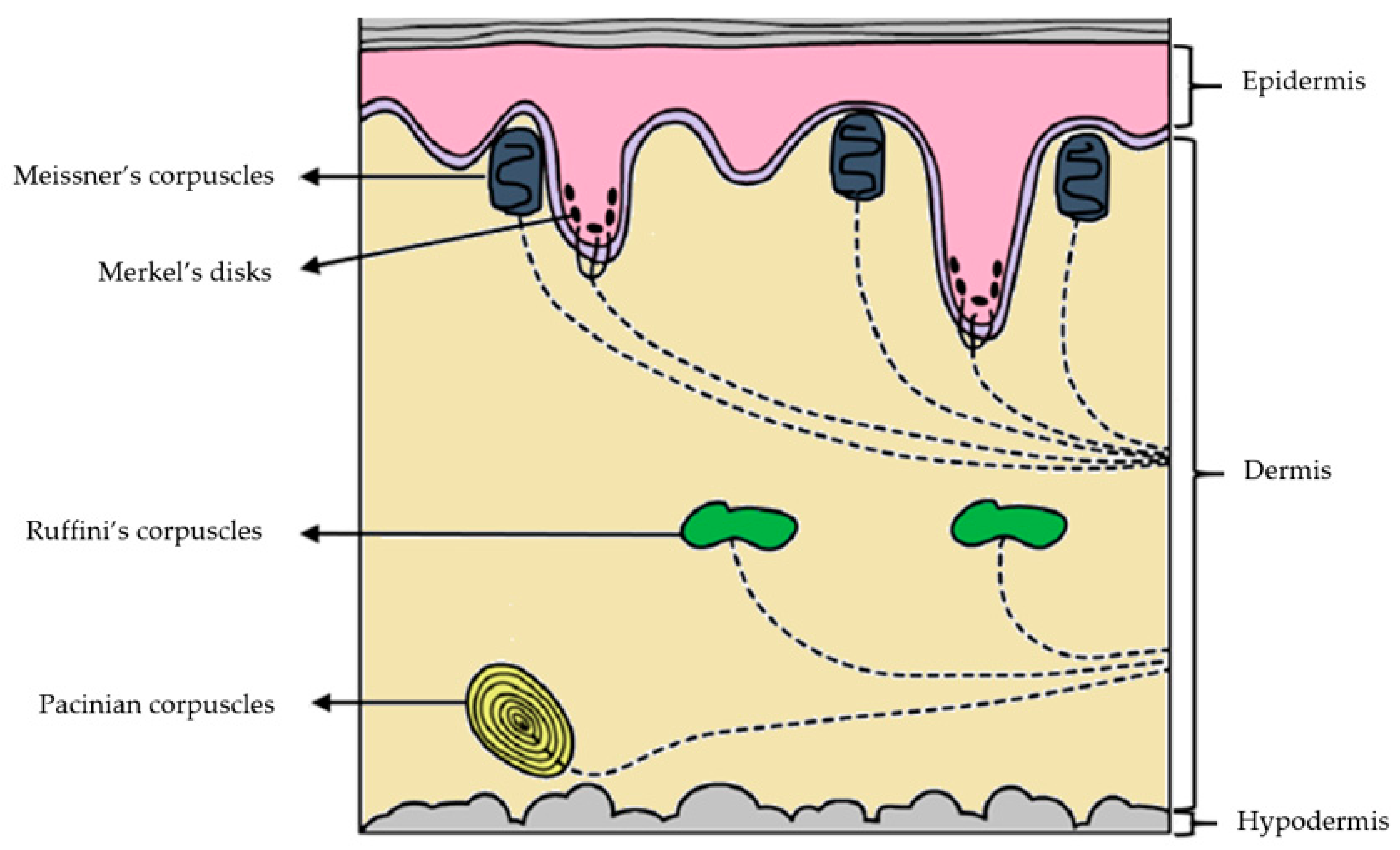

2.1. Psychophysics of the Cutaneous Sense

2.2. Cutaneous Parameters Relevant to SSD Design

3. Strategies for Designing Tactile Patterns without Illusions

4. Strategies for Designing Tactile Patterns by Engaging Illusions

4.1. Apparent Motion (The Illusion of Movement)

4.2. Tactile Sensory Saltation (The Illusion of Mislocalization)

4.3. Funneling Illusion (Localization Errors)

5. Discussion

Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Tan, H.Z.; Durlach, N.I.; Rabinowitz, W.M.; Reed, C.M.; Santos, J.R. Reception of Morse code through motional, vibrotactile, and auditory stimulation. Percept. Psychophys. 1997, 59, 1004–1017.

2. Giri, G.; Maddahi, Y.; Zareinia, K. An Application-Based Review of Haptics Technology. Robotics 2021, 10, 29.

3. Tai, Y.; Shi, J.; Wei, L.; Huang, X.; Chen, Z.; Li, Q. Real-time visuo-haptic surgical simulator for medical education—A review. In International Conference on Mechatronics and Intelligent Robotics; Springer: Berlin/Heidelberg, Germany, 2017; pp. 531–537.

4. Summers, I.R. Tactile Aids for the Hearing Impaired; Whurr Publishers: London, UK, 1992.

5. Franklin, D. Tactile aids, new help for the profoundly deaf. Hear. J. 1984, 37, 20–23.

6. Kaczmarek, K.A.; Webster, J.G.; Bach-y-Rita, P.; Tompkins, W.J. Electrotactile and vibrotactile displays for sensory substitution systems. IEEE Trans. Biomed. Eng. 1991, 38, 1–16.

7. De Volder, A.G.; Catalan-Ahumada, M.; Robert, A.; Bol, A.; Labar, D.; Coppens, A.; Michel, C.; Veraart, C. Changes in occipital cortex activity in early blind humans using a sensory substitution device. Brain Res. 1999, 826, 128–134.

8. Luzhnica, G.; Veas, E.; Pammer, V. Skin reading: Encoding text in a 6-channel haptic display. In Proceedings of the 2016 ACM International Symposium on Wearable Computers, Heidelberg, Germany, 12–16 September 2016; pp. 148–155.

9. Novich, S.D.; Eagleman, D.M. Using space and time to encode vibrotactile information: Toward an estimate of the skin’s achievable throughput. Exp. Brain Res. 2015, 233, 2777–2788.

10. Tan, H.Z.; Choi, S.; Lau, F.W.; Abnousi, F. Methodology for Maximizing Information Transmission of Haptic Devices: A Survey. Proc. IEEE 2020, 108, 945–965.

11. Nahri, S.N.F.; Du, S.; Van Wyk, B. Haptic System Interface Design and Modelling for Bilateral Teleoperation Systems. In Proceedings of the 2020 International SAUPEC/RobMech/PRASA Conference, Cape Town, South Africa, 29–31 January 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

12. Carvalheiro, C.; Nóbrega, R.; da Silva, H.; Rodrigues, R. User redirection and direct haptics in virtual environments. In Proceedings of the 24th ACM international conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 1146–1155.

13. Feng, L.; Ali, A.; Iqbal, M.; Bashir, A.K.; Hussain, S.A.; Pack, S. Optimal haptic communications over nanonetworks for E-health systems. IEEE Trans. Ind. Inform. 2019, 15, 3016–3027.

14. Jones, L.A. Haptics; MIT Press Essential Knowledge Series: Cambridge, MA, USA, 2018.

15. Tan, H.Z.; Reed, C.M.; Jiao, Y.; Perez, Z.D.; Wilson, E.C.; Jung, J.; Martinez, J.S.; Severgnini, F.M. Acquisition of 500 English words through a TActile Phonemic Sleeve (TAPS). IEEE Trans. Haptics 2020, 13, 745–760.

16. Hoffmann, R.; Valgeirsdóttir, V.V.; Jóhannesson, Ó.I.; Unnthorsson, R.; Kristjánsson, Á. Measuring relative vibrotactile spatial acuity: Effects of tactor type, anchor points and tactile anisotropy. Exp. Brain Res. 2018, 236, 3405–3416.

17. Hoffmann, R.; Spagnol, S.; Kristjánsson, Á.; Unnthorsson, R. Evaluation of an audio-haptic sensory substitution device for enhancing spatial awareness for the visually impaired. Optom. Vis. Sci. 2018, 95, 757.

18. Kristjánsson, Á.; Moldoveanu, A.; Jóhannesson, Ó.I.; Balan, O.; Spagnol, S.; Valgeirsdóttir, V.V.; Unnthorsson, R. Designing sensory-substitution devices: Principles, pitfalls and potential. Restor. Neurol. Neurosci. 2016, 34, 769–787.

19. Luzhnica, G.; Veas, E.; Seim, C. Passive haptic learning for vibrotactile skin reading. In Proceedings of the 2018 ACM International Symposium on Wearable Computers, Singapore, 8–12 October 2018; pp. 40–43.

20. Luzhnica, G. Leveraging Optimisations on Spatial Acuity for Conveying Information through Wearable Vibrotactile Display. 2019. Available online: https://diglib.tugraz.at/leveraging-optimisations-on-spatial-acuity-for-conveying-information-through-wearable-vibrotactile-displays-2019 (accessed on 12 February 2021).

21. Skedung, L.; Arvidsson, M.; Chung, J.Y.; Stafford, C.M.; Berglund, B.; Rutland, M.W. Feeling small: Exploring the tactile perception limits. Sci. Rep. 2013, 3, 1–6.

22. Reed, C.M.; Durlach, N.I. Note on information transfer rates in human communication. Presence 1998, 7, 509–518.

23. Spence, C. In Touch with the Future. In Proceedings of the 2015 International Conference on Interactive Tabletops & Surfaces, Funchal, Portugal, 15–18 November 2015; p. 1.

24. Reed, C.M.; Durlach, N.I.; Delhorne, L.A. Tactile Aids Hear. Impair. 1992. Available online: https://www.wiley.com/en-us/Tactile+Aids+for+the+Hearing+Impaired-p-9781870332170 (accessed on 14 January 2021).

25. Purves, D.; Augustine, G.J.; Fitzpatrick, D.; Hall, W.C.; LaMantia, A.-S.; McNamara, J.O.; White, L.E. Neuroscience, 4th ed.; Sinauer Associates: Sunderland, MA, USA, 2008; Volume 857, p. 944.

26. Novich, S.D. Sound-to-Touch Sensory Substitution and Beyond. 2015. Available online: https://scholarship.rice.edu/handle/1911/88379 (accessed on 10 January 2021).

27. Shneiderman, B.; Plaisant, C.; Cohen, M.S.; Jacobs, S.; Elmqvist, N.; Diakopoulos, N. Designing the User Interface: Strategies for Effective Human-Computer Interaction; Pearson: London, UK, 2016.

28. Brewster, S.A.; Brown, L.M. Non-visual information display using tactons. In Proceedings of the CHI’04 Extended Abstracts on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; pp. 787–788.

29. Azadi, M.; Jones, L.A. Evaluating vibrotactile dimensions for the design of tactons. IEEE Trans. Haptics 2014, 7, 14–23.

30. Choi, S.; Kuchenbecker, K.J. Vibrotactile display: Perception, technology, and applications. Proc. IEEE 2012, 101, 2093–2104.

31. Basdogan, C.; Giraud, F.; Levesque, V.; Choi, S. A review of surface haptics: Enabling tactile effects on touch surfaces. IEEE Trans. Haptics 2020, 13, 450–470.

32. Zhao, S.; Israr, A.; Lau, F.; Abnousi, F. Coding tactile symbols for phonemic communication. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–13.

33. Jones, L.A.; Tan, H.Z. Application of psychophysical techniques to haptic research. IEEE Trans. Haptics 2012, 6, 268–284.

34. Plaisier, M.A.; Tiest, W.B.; Kappers, A. Haptic object individuation. IEEE Trans. Haptics 2010, 3, 257–265.

35. Durlach, N.; Tan, H.; Macmillan, N.; Rabinowitz, W.; Braida, L. Resolution in one dimension with random variations in background dimensions. Percept. Psychophys. 1989, 46, 293–296.

36. Vallbo, A.B.; Johansson, R.S. Properties of cutaneous mechanoreceptors in the human hand related to touch sensation. Hum. Neurobiol. 1984, 3, 3–14.

37. Goldstein, E. Sensation and Perception; Wadsworth-Thomson Learning Cop: Pacific Grove, CA, USA, 2002.

38. Craig, J.C. Temporal integration of vibrotactile patterns. Percept. Psychophys. 1982, 32, 219–229.

39. Hoggan, E.; Anwar, S.; Brewster, S.A. Mobile multi-actuator tactile displays. In International Workshop on Haptic and Audio Interaction Design; Springer: Berlin/Heidelberg, Germany, 2007; pp. 22–33.

40. Horvath, S.; Galeotti, J.; Wu, B.; Klatzky, R.; Siegel, M.; Stetten, G. FingerSight: Fingertip haptic sensing of the visual environment. IEEE J. Transl. Eng. Health Med. 2014, 2, 1–9.

41. Sorgini, F.; Caliò, R.; Carrozza, M.C.; Oddo, C.M. Haptic-assistive technologies for audition and vision sensory disabilities. Disabil. Rehabil. Assist. Technol. 2018, 13, 394–421.

42. Reed, C.M.; Tan, H.Z.; Perez, Z.D.; Wilson, E.C.; Severgnini, F.M.; Jung, J.; Martinez, J.S.; Jiao, Y.; Israr, A.; Lau, F.; et al. A phonemic-based tactile display for speech communication. IEEE Trans. Haptics 2018, 12, 2–17.

43. Wong, E.Y.; Israr, A.; O’Malley, M. Discrimination of consonant articulation location by tactile stimulation of the forearm. In Proceedings of the 2010 IEEE Haptics Symposium, Waltham, MA, USA, 25–26 March 2010; pp. 47–54.

44. Chen, H.-Y.; Santos, J.; Graves, M.; Kim, K.; Tan, H.Z. Tactor localization at the wrist. In International Conference on Human Haptic Sensing and Touch Enabled Computer Applications; Springer: Berlin/Heidelberg, Germany, 2008; pp. 209–218.

45. Kammoun, S.; Jouffrais, C.; Guerreiro, T.; Nicolau, H.; Jorge, J. Guiding blind people with haptic feedback. Available online: https://scholar.google.com/scholar?hl=en&as_sdt=0%2C5&q=.+Guiding+blind+people+with+haptic+feedback&btnG= (accessed on 14 February 2021).

46. Kim, H.; Seo, C.; Lee, J.; Ryu, J.; Yu, S.-B.; Lee, S. Vibrotactile display for driving safety information. In Proceedings of the 2006 IEEE Intelligent Transportation Systems Conference, Toronto, ON, Canada, 17–20 September 2006; IEEE: New York, NY, USA, 2006; pp. 573–577.

47. Lederman, S.J.; Klatzky, R.L. Haptic perception: A tutorial. Atten. Percept. Psychophys. 2009, 71, 1439–1459.

48. Hoggan, E.; Brewster, S. New parameters for tacton design. In Proceedings of the CHI’07 Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 2417–2422.

49. Human Factors (HF). Guidelines on the multimodality of icons, symbols, and pictograms. 2002. Available online: https://www.etsi.org/deliver/etsi_eg/202000_202099/202048/01.01.01_60/eg_202048v010101p.pdf (accessed on 5 January 2021).

50. Goff, G.D. Differential discrimination of frequency of cutaneous mechanical vibration. J. Exp. Psychol. 1967, 74, 294.

51. Brewster, S.A.; Brown, L.M. Tactons: Structured Tactile Messages for Non-Visual Information Display. 2004. Available online: https://eprints.gla.ac.uk/3443/ (accessed on 8 March 2021).

52. Gescheider, G.A. Resolving of successive clicks by the ears and skin. J. Exp. Psychol. 1966, 71, 378.

53. Israr, A.; Poupyrev, I. Tactile brush: Drawing on skin with a tactile grid display. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 2019–2028.

54. Hoffmann, R.; Brinkhuis, M.A.; Unnthorsson, R.; Kristjánsson, Á. The intensity order illusion: Temporal order of different vibrotactile intensity causes systematic localization errors. J. Neurophysiol. 2019, 122, 1810–1820.

55. Lederman, S.J.; Jones, L.A. Tactile and haptic illusions. IEEE Trans. Haptics 2011, 4, 273–294.

56. Goldstein, M.H., Jr.; Proctor, A. Tactile aids for profoundly deaf children. J. Acoust. Soc. Am. 1985, 77, 258–265.

57. Leder, S.B.; Spitzer, J.B.; Milner, P.; Flevaris-Phillips, C.; Richardson, F. Vibrotactile stimulation for the adventitiously deaf: An alternative to cochlear implantation. Arch. Phys. Med. Rehabil. 1986, 67, 754–758.

58. Geldard, F.A. Adventures in tactile literacy. Am. Psychol. 1957, 12, 115.

59. Tan, H.Z.; Durlach, N.I.; Reed, C.M.; Rabinowitz, W.M. Information transmission with a multifinger tactual display. Percept. Psychophys. 1999, 61, 993–1008.

60. Azadi, M.; Jones, L. Identification of vibrotactile patterns: Building blocks for tactons. In Proceedings of the 2013 World Haptics Conference (WHC), Daejeon, Korea, 14–17 April 2013; IEEE: New York, NY, USA, 2013; pp. 347–352.

61. Loomis, J.M. Tactile letter recognition under different modes of stimulus presentation. Percept. Psychophys. 1974, 16, 401–408.

62. Velázquez, R.; Bazán, O.; Alonso, C.; Delgado-Mata, C. Vibrating insoles for tactile communication with the feet. In Proceedings of the 2011 15th International Conference on Advanced Robotics (ICAR), Tallinn, Estonia, 20–23 June 2011; IEEE: New York, NY, USA, 2011; pp. 118–123.

63. Velázquez, R.; Pissaloux, E. Constructing tactile languages for situational awareness assistance of visually impaired people. In Mobility of Visually Impaired People; Springer: Berlin/Heidelberg, Germany, 2018; pp. 597–616.

64. Saida, S.; Shimizu, Y.; Wake, T. Computer-controlled TVSS and some characteristics of vibrotactile letter recognition. Percept. Mot. Ski. 1982, 55, 651–653.

65. Janidarmian, M.; Fekr, A.R.; Radecka, K.; Zilic, Z. Designing and evaluating a vibrotactile language for sensory substitution systems. In International Conference on Wireless Mobile Communication and Healthcare; Springer: Berlin/Heidelberg, Germany, 2017; pp. 58–66.

66. Janidarmian, M.; Fekr, A.R.; Radecka, K.; Zilic, Z. Wearable Vibrotactile System as an Assistive Technology Solution. Mob. Netw. Appl. 2019, 2019, 1–9.

67. Wu, J.; Zhang, J.; Yan, J.; Liu, W.; Song, G. Design of a vibrotactile vest for contour perception. Int. J. Adv. Robot. Syst. 2012, 9, 166.

68. Barralon, P.; Ng, G.; Dumont, G.; Schwarz, S.K.; Ansermino, M. Development and evaluation of multidimensional tactons for a wearable tactile display. In Proceedings of the 9th International Conference on Human Computer Interaction with Mobile Devices and Services, Singapore, 9–12 September 2007; pp. 186–189.

69. Brown, L.M.; Brewster, S.A.; Purchase, H.C. Multidimensional tactons for non-visual information presentation in mobile devices. In Proceedings of the 8th conference on Human-Computer Interaction with Mobile Devices and Services, Helsinki, Finland, 12–15 September 2006; pp. 231–238.

70. Van Erp, J.B. Guidelines for the use of vibro-tactile displays in human computer interaction. In Proceedings of Eurohaptics, Edinburgh, UK, 8–10 July 2002; pp. 18–22.

71. Luzhnica, G.; Veas, E. Vibrotactile patterns using sensitivity prioritisation. In Proceedings of the 2017 ACM International Symposium on Wearable Computers, Maui, HI, USA, 11–15 September 2017; pp. 74–81.

72. Neuhaus, W. Experimentelle Untersuchung der Scheinbewegung. Arch. Gesamte Psychol. 1930, 75, 315–458.

73. Kirman, J.H. Tactile apparent movement: The effects of interstimulus onset interval and stimulus duration. Percept. Psychophys. 1974, 15, 1–6.

74. Cholewiak, R.W.; Collins, A.A. The generation of vibrotactile patterns on a linear array: Influences of body site, time, and presentation mode. Percept. Psychophys. 2000, 62, 1220–1235.

75. Lee, J.; Han, J.; Lee, G. Investigating the information transfer efficiency of a 3 × 3 watch-back tactile display. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 1229–1232.

76. Trojan, J.; Stolle, A.M.; Mršić Carl, A.; Kleinböhl, D.; Tan, H.Z.; Hölzl, R. Spatiotemporal integration in somatosensory perception: Effects of sensory saltation on pointing at perceived positions on the body surface. Front. Psychol. 2010, 1, 206.

77. Geldard, F.A. Saltation in somesthesis. Psychol. Bull. 1982, 92, 136.

78. Von Békésy, G. Sensations on the skin similar to directional hearing, beats, and harmonics of the ear. J. Acoust. Soc. Am. 1957, 29, 489–501.

79. Park, G.; Choi, S. Tactile information transmission by 2D stationary phantom sensations. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–12.

| Property | Meissner’s Corpuscles | Ruffini’s Corpuscles | Pacinian Corpuscles | Merkel’s Discs |
|---|---|---|---|---|
| Perceived stimuli | Touch and moving stimuli | Stretching of the skin | Vibration and deep pressure | Touch and static stimuli |
| Spatial acuity | 3 mm | 7 mm | 10 mm | 0.5 mm |
| Response | FA | SA | FA | SA |
| Location | Primarily hairless skin | All skin | Subcutaneous tissue, all skin | All skin |
| Frequency range | 1–300 Hz | Unknown | 5–1000 Hz | 0.4–100 Hz |
| Peak sensitivity | 50 Hz | 0.5–7 Hz | 250 Hz | 5 Hz |
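The frequency ranges in the table above can be read as a simple lookup when choosing a vibration frequency for a tactor. The following is a minimal sketch under that reading, not a physiological model: the dictionary name `RECEPTOR_RANGES_HZ` and the function `responsive_receptors` are hypothetical, the values are copied from the table, and Ruffini's corpuscles are skipped because their range is listed as unknown.

```python
from typing import Dict, List, Optional, Tuple

# Frequency ranges in Hz, copied from the table above.
# None marks the "Unknown" entry for Ruffini's corpuscles.
RECEPTOR_RANGES_HZ: Dict[str, Optional[Tuple[float, float]]] = {
    "Meissner": (1.0, 300.0),
    "Ruffini": None,
    "Pacinian": (5.0, 1000.0),
    "Merkel": (0.4, 100.0),
}


def responsive_receptors(frequency_hz: float) -> List[str]:
    """Return the receptors whose tabulated range contains frequency_hz.

    Receptors with an unknown range are left out rather than guessed.
    """
    return [
        name
        for name, band in RECEPTOR_RANGES_HZ.items()
        if band is not None and band[0] <= frequency_hz <= band[1]
    ]


if __name__ == "__main__":
    # 250 Hz is the tabulated peak sensitivity of Pacinian corpuscles.
    print(responsive_receptors(250.0))
```

A lookup of this kind only says which receptor types could respond in principle; it ignores amplitude thresholds, body site, and contactor size, all of which affect perception.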

| Reference | Body Location | Number of Tactors | Parameters | Engaged Task | Outcome |
|---|---|---|---|---|---|
| Velázquez, Pissaloux [62,63] | Foot | 4 | Five temporal patterns of short and long durations encoding words. | Recognize words and word sequences (i.e., sentences). | Sentence recognition in percentage correct scores: one word, 84%; two words, 77%; three words, 77%; four words, 66%. |
| Saida et al. [64] | Back | 100 (10 × 10) | Three modes of tactile encoding: static (i.e., spatial encoding), tracing (i.e., fingerspelling), and moving (characters passed horizontally across the back). | Identify letters of the Japanese alphabet. | Identification accuracy in percentage correct scores: static 27%, moving 39%, tracing 95%. No performance difference between blind and sighted subjects. |
| Loomis [61] | Back | 400 (20 × 20) | Spatial and spatiotemporal encoding, divided into five modes of presentation. | Identification of the 26 letters of the English alphabet. | Identification performance varied between modes of presentation. Static patterns were identified worst and spatiotemporal patterns best. |
| Tan et al. [59] | Fingers | 3 | Frequency, magnitude, location. | Identification of 120 stimulus patterns. | Achieved an IT of 6.50 bits: 90 patterns were separable. |
| Azadi, Jones [29,60] | Forearm | 8 | Frequency, magnitude, pulse duration. | Identification of eight stimulus patterns. | Achieved an IT of 2.41 bits: three patterns were separable. Time and frequency are not integral parameters of vibrotactile stimuli. |
| Novich and Eagleman [9] | Back | 9 (3 × 3) | Space, time, and intensity. | Identification of patterns. | Patterns engaging space and time (spatiotemporal) outperformed the rest in identification performance. Spatial patterns performed worst. |
| Luzhnica et al. [8,20] | Hand | 6 | Space and time (overlapping spatiotemporal encoding), spatial encoding. | Identification of letters of the English alphabet. | Prioritizing site sensitivity is critical when encoding information. Overlapping spatiotemporal encoding was identified better than spatial encoding. |
| Janidarmian et al. [65,66] | Back | 9 (3 × 3) | Personalized spatiotemporal encoding, adjusting the vibration variables (i.e., frequency, intensity, duration) to suit the user’s preference. | Identification of numbers. | Personalized encoding achieved better identification performance than generalized encoding. |
| Wu et al. [67] | Back | 48 (8 × 6) | Three types of spatiotemporal encoding: scanning, handwriting, and tracing. | Two tasks: identification of physical shapes acquired by a camera, and identification of letters of the English alphabet. | In both tasks, high identification accuracy was achieved with tracing, handwriting, and scanning, in that order. |
| Barralon et al. [68] | Waist | 8 | Locus, roughness (amplitude modulation), and rhythm (durations of pulses separated by distinct pauses). | Identification of 36 stimulus patterns. Investigate the individual effects of the engaged cutaneous parameters on recognition accuracy. | Achieved an IT of 4.18 bits: 18.07 patterns were separable. Rhythm and location achieved high recognition accuracy (96.3% and 91.6%, respectively); roughness achieved the lowest (88.7%). |
| Brown et al. [69] | Forearm | 3 | Spatial location, rhythm, and roughness. | Compare the identification performance of two-dimensional stimuli with three-dimensional stimuli. | Tactons encoding two dimensions of information (rhythm and roughness) achieved an identification rate of 70% with an IT of 3.27 bits. Tactons with a further dimension (spatial location) achieved a rate of 80% with an IT of 3.37 bits. |
| Kim et al. [46] | Foot | 25 (5 × 5) | Space and time: two modes of sequential stimulation that mimic tracing by engaging either one or two tactors. | Identification of letters. | Sequential activation of two tactors improved identification performance. |
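Several rows of the table above report information transfer (IT) in bits alongside a number of separable patterns. As a hedged illustration of how such figures are commonly obtained in absolute-identification experiments, the sketch below estimates IT as the mutual information of a stimulus-response confusion matrix and converts bits to an equivalent number of perfectly identifiable patterns via 2^IT. The function names and the toy confusion matrix are assumptions made for illustration; the cited studies may have used different estimators or bias corrections.

```python
import math
from typing import List, Sequence


def information_transfer_bits(confusion: Sequence[Sequence[int]]) -> float:
    """Maximum-likelihood estimate of IT (mutual information) in bits.

    confusion[i][j] is the number of trials on which stimulus i
    received response j.
    """
    total = sum(sum(row) for row in confusion)
    row_sums: List[int] = [sum(row) for row in confusion]
    col_sums: List[int] = [sum(col) for col in zip(*confusion)]
    it = 0.0
    for i, row in enumerate(confusion):
        for j, n in enumerate(row):
            if n > 0:  # empty cells contribute nothing to the sum
                it += (n / total) * math.log2(n * total / (row_sums[i] * col_sums[j]))
    return it


def separable_patterns(it_bits: float) -> float:
    """Equivalent number of perfectly identifiable patterns, 2**IT."""
    return 2.0 ** it_bits


if __name__ == "__main__":
    # Toy data: four stimuli, each identified correctly on all 10 trials.
    perfect = [[10, 0, 0, 0], [0, 10, 0, 0], [0, 0, 10, 0], [0, 0, 0, 10]]
    print(information_transfer_bits(perfect))  # log2(4) for perfect identification
    # IT of 6.50 bits, as reported for Tan et al. [59] in the table.
    print(separable_patterns(6.50))
```

Under this conversion, 6.50 bits corresponds to roughly 90 patterns, in line with the value tabulated for Tan et al. [59].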

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nyasulu, T.D.; Du, S.; Steyn, N.; Dong, E. A Study of Cutaneous Perception Parameters for Designing Haptic Symbols towards Information Transfer. Electronics 2021, 10, 2147. https://doi.org/10.3390/electronics10172147
