A Survey on QoE-Oriented VR Video Streaming: Some Research Issues and Challenges

Abstract

1. Introduction

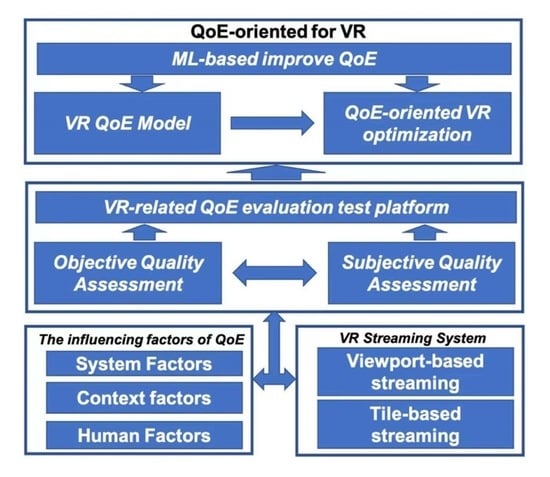

1.1. Survey Novelty and Contributions

- An overview of QoE and VR video streaming is provided, and the benefits of QoE evaluation for the development of VR service applications are illustrated;

- Subjective and objective ways of evaluating VR QoE, and VR-related test and evaluation platforms are discussed;

- An evaluation model for QoE based on VR video streams is investigated, the optimization of VR systems is described as a QoE optimization option, and machine learning is considered as an active way to support the QoE optimization of VR video streaming;

- For the enhancement of VR user experience, the challenges facing VR video streaming QoE and future research directions are described.

1.2. Survey Structure

- Section 2: overview of the basic background knowledge on the main QoE influencing factors and VR video streaming;

- Section 3: description of QoE evaluation approaches and testbeds targeting VR video applications;

- Section 4: survey of QoE models constructed for VR video streams and of QoE-oriented optimization problems for VR video streams; examination of machine-learning methods for use in QoE optimization;

- Section 5: recent findings are combined to provide an outlook on future research challenges and trends;

- Section 6: main points of this survey are summarized.

2. Overview

2.1. QoE-Influencing Factors

2.1.1. System Factors

2.1.2. Context Factors

2.1.3. Human Factors

- Physiological factors: Most studies found that the user’s physiological characteristics play a key role in user QoE, with visual perception being of particular interest. Laghari et al. [30] analyzed a variety of human factors (e.g., gender and age) to identify the main factors that may affect the quality perceived by the user. Most of these factors have been studied and modeled, but how an individual’s physiological characteristics affect QoE is equally important. Owsley et al. [31] demonstrated that factors such as age-related loss of visual acuity and contrast sensitivity can affect the visibility (and annoyance) of visual impairments. However, such factors are rarely included in QoE models. El-Nasr et al. [32] found that physiological deficits in user vision directly impact the user experience: colorblind users have a different perceptual experience, and stereoblindness can also strongly affect an immersive visual experience. In addition, human auditory characteristics can impact the QoE of the medium. Saleme et al. [33] studied 360° mulsemedia, an emerging VR application, with the aim of uncovering the physiological factors that may impact the experience. Unlike other studies, they introduced odor sensitivity as a specific factor. The study also found that women showed higher sensitivity in multisensory situations. Orero et al. [34] argue that, because individuals differ in many characteristics, physiological conditions can vary greatly across QoE assessments in different situations, which has led to this aspect receiving little consideration.

- Psychological factors: The user’s psychological state is likely to play a large role in the level of satisfaction with the user experience. Existing literature [35,36,37,38,39,40] indicates that personal psychological factors influence QoE in various ways. Wechsung et al. [35] indicate that more variable, affective factors, such as motivation, attention level, or the user’s mood, also play an important role among QoE-influencing factors. Another study [36] found that the relationship between emotion and multimedia experience is reciprocal: a good experience leads to good emotions, which in turn are more likely to produce a good experience. The authors of [37,38,39] found that interest, as an emotion-related factor, plays a decisive role in QoE, and that interest may be triggered by content that in turn affects the perception of QoE. In [40], the authors experimentally found that, when people watch content in which they are interested, the effect of the video quality is ignored. With respect to video quality, interest is positively correlated with QoE. In addition, the authors of [41] argued that other factors, such as educational or occupational background, can also impact the QoE of users. In conclusion, the influence of personal psychological factors on perceived quality is complex, and the factors are closely interrelated.

| QoE IFs | Type | Examples/References |
|---|---|---|
| System Factors | Objective | Network layer parameters (e.g., latency, throughput, packet loss, buffering event rate, buffering time, average bit rate, bandwidth [18]), application layer parameters (e.g., resolution, frame rate [19]), service layer parameters (e.g., type of content viewed, application level, viewing mode [18,22]) |
| Context Factors | Objective | Physical environment factors (e.g., lighting, sound, location [23,25]), economic factors (e.g., desired price, budget [26,27,28,29]) |
| Physiological Factors | Subjective | Basic user profile (e.g., age, gender, education level [30,34]), physical state (e.g., vision, hearing, sense of smell [31,32,33]) |
| Psychological Factors | Subjective | Physical/mental status (e.g., user preferences, mood [35,36]), background (e.g., educational background, occupational background [41]), hobbies/interests [37,38,39,40] |
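The taxonomy in the table above can be sketched as a small lookup structure, which may be convenient when tagging measurements or questionnaire items by factor category. This is only an illustrative sketch: the names `FactorType`, `InfluencingFactor`, `QOE_FACTORS`, `categories_of`, and `find_category` are hypothetical and do not come from the survey, and only a subset of the table's examples is included.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class FactorType(Enum):
    """Whether a factor category is objective or subjective (as in the table)."""
    OBJECTIVE = "objective"
    SUBJECTIVE = "subjective"


@dataclass(frozen=True)
class InfluencingFactor:
    """One row of the taxonomy: a category, its type, and example factors."""
    category: str
    factor_type: FactorType
    examples: Tuple[str, ...]


# Hypothetical encoding of the table; examples are a subset, in lowercase.
QOE_FACTORS: Tuple[InfluencingFactor, ...] = (
    InfluencingFactor("System", FactorType.OBJECTIVE,
                      ("latency", "throughput", "packet loss",
                       "resolution", "frame rate")),
    InfluencingFactor("Context", FactorType.OBJECTIVE,
                      ("lighting", "sound", "location",
                       "desired price", "budget")),
    InfluencingFactor("Physiological", FactorType.SUBJECTIVE,
                      ("age", "gender", "vision", "hearing",
                       "sense of smell")),
    InfluencingFactor("Psychological", FactorType.SUBJECTIVE,
                      ("user preferences", "mood",
                       "educational background", "interests")),
)


def categories_of(factor_type: FactorType) -> List[str]:
    """Return the category names of the given type, in table order."""
    return [f.category for f in QOE_FACTORS if f.factor_type is factor_type]


def find_category(example: str) -> Optional[str]:
    """Return the category listing this example factor, or None if unlisted."""
    key = example.strip().lower()
    for factor in QOE_FACTORS:
        if key in factor.examples:
            return factor.category
    return None
```

For instance, `find_category("Latency")` resolves to the system category, while a factor not listed in the table yields `None` rather than a guess.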

2.2. VR Streaming System

2.2.1. Full-View Streaming

2.2.2. Viewport-Based Streaming

2.2.3. Tile-Based Streaming

3. State of the Art of VR QoE

3.1. Subjective Quality Assessment

3.2. Objective Quality Assessment

3.3. VR-Related QoE Evaluation Test Platform

4. QoE-Oriented Optimization for Virtual-Reality Video

4.1. VR QoE Model

4.2. QoE-Oriented VR Optimization Problem

4.3. ML-Based Approaches to Improve QoE

5. Discussion: Challenges, Issues, and Future Directions

5.1. Challenges and Impacts

5.2. Issues for Future Directions

5.2.1. Research on User Personalization Modeling for VR Video Streaming QoE

5.2.2. Trade-Off of QoE Based on MEC Solutions

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACR | Absolute category rating |
| BP | Backpropagation |
| CMP | Cube map |
| CNN | Convolutional neural networks |
| CPP-PSNR | Craster’s parabolic projection peak signal-to-noise ratio |
| CSV | Comma-separated values |
| DASH | Dynamic adaptive streaming over HTTP |
| DMOS | Difference mean opinion scores |
| EM | Eye movement |
| ERP | Equirectangular projection |
| FoV | Field of view |
| HD | High-definition video |
| HEVC | High-efficiency video coding |
| HM | Head movement |
| HMD | Head-mounted display |
| ITU | International Telecommunication Union |
| MEC | Mobile edge computing |
| ML | Machine learning |
| M-ACR | Modified absolute category rating |
| MOS | Mean opinion score |
| M-SSIM | Mean structural similarity |
| PSNR | Peak signal-to-noise ratio |
| QEC | Quality emphasis center |
| QP | Quantizer parameter |
| S-PSNR | Spherical PSNR |
| SRD | Spatial relationship description |
| SSIM | Structural similarity index |
| VIFP | Visual information fidelity in pixel domain |
| VQA | Visual-quality assessment |
| VQM | Video-quality metric |
| VR | Virtual reality |
| WS-PSNR | Weighted-to-spherically-uniform PSNR |
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| 4K | 4K resolution |

References

- Du, J.; Yu, F.R.; Lu, G.; Wang, J.; Jiang, J.; Chu, X. MEC-assisted immersive VR video streaming over terahertz wireless networks: A deep reinforcement learning approach. IEEE Internet Things J. 2020, 7, 9517–9529.

- Sukhmani, S.; Sadeghi, M.; Erol-Kantarci, M.; Saddik, A.E. Edge caching and computing in 5G for mobile AR/VR and tactile internet. IEEE Multimed. 2018, 26, 21–30.

- ITU-T Recommendation P.10/G.100 (11/2017). Vocabulary for Performance, Quality of Service and Quality of Experience. 2017. Available online: https://www.itu.int/rec/T-REC-P.10 (accessed on 2 September 2021).

- Avcibas, I.; Sankur, B.; Sayood, K. Statistical evaluation of image quality measures. J. Electron. Imaging 2002, 11, 206–223.

- Wang, Z.; Lu, L.; Bovik, A.C. Video quality assessment based on structural distortion measurement. Signal Process. Image Commun. 2004, 19, 121–132.

- Pinson, M.H.; Wolf, S. A new standardized method for objectively measuring video quality. IEEE Trans. Broadcast. 2004, 50, 312–322.

- ITU Telecommunication Standardization Sector. Objective perceptual multimedia video quality measurement in the presence of a full reference. ITU-T Recomm. J 2008, 247, 18.

- Sousa, I.; Queluz, M.P.; Rodrigues, A. A survey on QoE-oriented wireless resources scheduling. J. Netw. Comput. Appl. 2020, 158, 102594.

- Zhao, T.; Liu, Q.; Chen, C.W. QoE in video transmission: A user experience-driven strategy. IEEE Commun. Surv. Tutor. 2016, 19, 285–302.

- Juluri, P.; Tamarapalli, V.; Medhi, D. Measurement of quality of experience of video-on-demand services: A survey. IEEE Commun. Surv. Tutor. 2015, 18, 401–418.

- Yuan, H.; Hu, X.; Hou, J.; Wei, X.; Kwong, S. An ensemble rate adaptation framework for dynamic adaptive streaming over HTTP. IEEE Trans. Broadcast. 2019, 66, 251–263.

- Barman, N.; Martini, M.G. QoE modeling for HTTP adaptive video streaming—A survey and open challenges. IEEE Access 2019, 7, 30831–30859.

- Chen, Y.; Wu, K.; Zhang, Q. From QoS to QoE: A tutorial on video quality assessment. IEEE Commun. Surv. Tutor. 2014, 17, 1126–1165.

- Husić, J.B.; Baraković, S.; Cero, E.; Slamnik, N.; Oćuz, M.; Dedović, A.; Zupčić, O. Quality of experience for unified communications: A survey. Int. J. Netw. Manag. 2020, 30, e2083.

- Wang, Y.; Zhou, W.; Zhang, P. QoE Management in Wireless Networks; Springer: Berlin/Heidelberg, Germany, 2017.

- Brunnström, K.; Beker, S.A.; Moor, K.D.; Dooms, A.; Egger, S.; Garcia, M.; Hossfeld, T.; Jumisko-Pyykkö, S.; Keimel, C.; Larabi, M.; et al. Qualinet White Paper on Definitions of Quality of Experience. 2013. Available online: https://hal.archives-ouvertes.fr/hal-00977812/document (accessed on 2 September 2021).

- ITU-T Recommendation. Vocabulary for Performance and Quality of Service; International Telecommunications Union—Radiocommunication (ITU-T), RITP: Geneva, Switzerland, 2006.

- Dobrian, F.; Sekar, V.; Awan, A.; Stoica, I.; Joseph, D.; Ganjam, A.; Zhan, J.; Zhang, H. Understanding the impact of video quality on user engagement. ACM SIGCOMM Comput. Commun. Rev. 2011, 41, 362–373.

- Ghinea, G.; Thomas, J.P. Quality of perception: User quality of service in multimedia presentations. IEEE Trans. Multimed. 2005, 7, 786–789.

- Ickin, S.; Wac, K.; Fiedler, M.; Janowski, L.; Hong, J.H.; Dey, A.K. Factors influencing quality of experience of commonly used mobile applications. IEEE Commun. Mag. 2012, 50, 48–56.

- Balachandran, A.; Sekar, V.; Akella, A.; Seshan, S.; Stoica, I.; Zhang, H. A quest for an internet video quality-of-experience metric. In Proceedings of the 11th ACM Workshop on Hot Topics in Networks, Washington, WA, USA, 29–30 October 2012; pp. 97–102.

- Huynh-Thu, Q.; Ghanbari, M. Temporal aspect of perceived quality in mobile video broadcasting. IEEE Trans. Broadcast. 2008, 54, 641–651.

- Han, B.; Zhang, X.; Qi, Y.; Gao, Y.; Yang, D. QoE model based optimization for streaming media service considering equipment and environment factors. Wirel. Pers. Commun. 2012, 66, 595–612.

- Westerink, J.H.D.M.; Roufs, J.A.J. Subjective image quality as a function of viewing distance, resolution, and picture size. SMPTE J. 1989, 98, 113–119.

- Staelens, N.; Moens, S.; den Broeck, W.V.; Marien, I.; Vermeulen, B.; Lambert, P.; de Walle, R.V.; Demeester, P. Assessing quality of experience of IPTV and video on demand services in real-life environments. IEEE Trans. Broadcast. 2010, 56, 458–466.

- Yamori, K.; Tanaka, Y. Relation between willingness to pay and guaranteed minimum bandwidth in multiple-priority service. In APCC/MDMC’04, Proceedings of the 2004 Joint Conference of the 10th Asia-Pacific Conference on Communications and the 5th International Symposium on Multi-Dimensional Mobile Communications, Beijing, China, 29 August–1 September 2004; IEEE: Washington, DC, USA, 2004; Volume 1, pp. 113–117.

- Sackl, A.; Schatz, R.; Raake, A. More than I ever wanted or just good enough? User expectations and subjective quality perception in the context of networked multimedia services. Qual. User Exp. 2017, 2, 3.

- Sackl, A.; Schatz, R. Got what you want? Modeling expectations to enhance web QoE prediction. In Proceedings of the 2014 Sixth International Workshop on Quality of Multimedia Experience (QoMEX), Singapore, 18–20 September 2014; pp. 57–58.

- Sackl, A.; Schatz, R. Evaluating the influence of expectations, price and content selection on video quality perception. In Proceedings of the 2014 Sixth International Workshop on Quality of Multimedia Experience (QoMEX), Singapore, 18–20 September 2014; pp. 93–98.

- Laghari, K.U.R.; Connelly, K. Toward total quality of experience: A QoE model in a communication ecosystem. IEEE Commun. Mag. 2012, 50, 58–65.

- Owsley, C.; Sekuler, R.; Siemsen, D. Contrast sensitivity throughout adulthood. Vis. Res. 1983, 23, 689–699.

- El-Nasr, M.S.; Yan, S. Visual attention in 3D video games. In Proceedings of the 2006 ACM SIGCHI International Conference on Advances in Computer Entertainment Technology, Hollywood, CA, USA, 14–16 June 2006; p. 22.

- Saleme, E.B.; Covaci, A.; Assres, G.; Comsa, I.; Trestian, R.; Santos, C.A.S.; Ghinea, G. The influence of human factors on 360° mulsemedia QoE. Int. J. Hum.-Comput. Stud. 2021, 146, 102550.

- Orero, P.; Remael, A.; Cintas, J.D. Media for All: Subtitling for the Deaf, Audio Description, and Sign Language; Rodopi: Amsterdam, The Netherlands, 2007.

- Wechsung, I.; Schulz, M.; Engelbrecht, K.P.; Niemann, J.; Möller, S. All users are (not) equal-the influence of user characteristics on perceived quality, modality choice and performance. In Proceedings of the Paralinguistic Information and Its Integration in Spoken Dialogue Systems Workshop; Springer: Berlin/Heidelberg, Germany, 2011; pp. 175–186.

- Möller, S.; Raake, A. Quality of Experience: Advanced Concepts, Applications and Methods; Springer: Berlin/Heidelberg, Germany, 2014.

- Silvia, P.J. Interest—The curious emotion. Curr. Dir. Psychol. Sci. 2008, 17, 57–60.

- O’Brien, H.L.; Toms, E.G. What is user engagement? A conceptual framework for defining user engagement with technology. J. Am. Soc. Inf. Sci. Technol. 2008, 59, 938–955.

- Kortum, P.; Sullivan, M. The effect of content desirability on subjective video quality ratings. Hum. Factors 2010, 52, 105–118.

- Palhais, J.; Cruz, R.S.; Nunes, M.S. Quality of experience assessment in internet TV. In International Conference on Mobile Networks and Management; Springer: Berlin/Heidelberg, Germany, 2011; pp. 261–274.

- Zhu, Y.; Heynderickx, I.; Redi, J.A. Understanding the role of social context and user factors in video quality of experience. Comput. Hum. Behav. 2015, 49, 412–426.

- Afzal, S.; Chen, J.; Ramakrishnan, K.K. Characterization of 360-degree videos. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017; pp. 1–6.

- Corbillon, X.; Simon, G.; Devlic, A.; Chakareski, J. Viewport-adaptive navigable 360-degree video delivery. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–7.

- Sreedhar, K.K.; Aminlou, A.; Hannuksela, M.M.; Gabbouj, M. Viewport-adaptive encoding and streaming of 360-degree video for virtual reality applications. In Proceedings of the 2016 IEEE International Symposium on Multimedia (ISM), San Jose, CA, USA, 11–13 December 2016; pp. 583–586.

- Nguyen, D.V.; Tran, H.T.T.; Thang, T.C. Impact of delays on 360-degree video communications. In Proceedings of the 2017 TRON Symposium (TRONSHOW), Tokyo, Japan, 13–15 December 2017; pp. 1–6.

- He, D.; Westphal, C.; Garcia-Luna-Aceves, J.J. Joint rate and FoV adaptation in immersive video streaming. In Proceedings of the 2018 Morning Workshop on Virtual Reality and Augmented Reality Network, Budapest, Hungary, 24 August 2018; pp. 27–32.

- Zhou, C.; Li, Z.; Liu, Y. A measurement study of Oculus 360 degree video streaming. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 27–37.

- Skupin, R.; Sanchez, Y.; Hellge, C.; Schierl, T. Tile based HEVC video for head mounted displays. In Proceedings of the 2016 IEEE International Symposium on Multimedia (ISM), San Jose, CA, USA, 11–13 December 2016; pp. 399–400.

- Graf, M.; Timmerer, C.; Mueller, C. Towards bandwidth efficient adaptive streaming of omnidirectional video over HTTP: Design, implementation, and evaluation. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 261–271.

- Yu, M.; Lakshman, H.; Girod, B. Content adaptive representations of omnidirectional videos for cinematic virtual reality. In Proceedings of the 3rd International Workshop on Immersive Media Experiences, Brisbane, Australia, 30 October 2015; pp. 1–6.

- Ozcinar, C.; Cabrera, J.; Smolic, A. Visual attention-aware omnidirectional video streaming using optimal tiles for virtual reality. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 217–230.

- Nguyen, D.V.; Tran, H.T.T.; Pham, A.T.; Thang, T.C. An optimal tile-based approach for viewport-adaptive 360-degree video streaming. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 29–42.

- Hosseini, M.; Swaminathan, V. Adaptive 360 VR video streaming: Divide and conquer. In Proceedings of the 2016 IEEE International Symposium on Multimedia (ISM), San Jose, CA, USA, 11–13 December 2016; pp. 107–110.

- Xie, L.; Xu, Z.; Ban, Y.; Zhang, X.; Guo, Z. 360ProbDASH: Improving QoE of 360 video streaming using tile-based HTTP adaptive streaming. In Proceedings of the 25th ACM International Conference on Multimedia, New York, NY, USA, 23–27 October 2017; pp. 315–323.

- Zare, A.; Aminlou, A.; Hannuksela, M.M.; Gabbouj, M. HEVC-compliant tile-based streaming of panoramic video for virtual reality applications. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 601–605.

- Tan, T.K.; Weerakkody, R.; Mrak, M.; Ramzan, N.; Baroncini, V.; Ohm, J.; Sullivan, G.J. Video quality evaluation methodology and verification testing of HEVC compression performance. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 76–90.

- Seshadrinathan, K.; Soundararajan, R.; Bovik, A.C.; Cormack, L.K. Study of subjective and objective quality assessment of video. IEEE Trans. Image Process. 2010, 19, 1427–1441.

- Huang, M.; Shen, Q.; Ma, Z.; Bovik, A.C.; Gupta, P.; Zhou, R.; Cao, X. Modeling the perceptual quality of immersive images rendered on head mounted displays: Resolution and compression. IEEE Trans. Image Process. 2018, 27, 6039–6050.

- Perrin, A.F.; Bist, C.; Cozot, R.; Ebrahimi, T. Measuring quality of omnidirectional high dynamic range content. In Applications of Digital Image Processing XL; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10396, p. 1039613.

- Anwar, M.S.; Wang, J.; Ahmad, S.; Ullah, A.; Khan, W.; Fei, Z. Evaluating the factors affecting QoE of 360-degree videos and cybersickness levels predictions in virtual reality. Electronics 2020, 9, 1530.

- de A Azevedo, R.G.; Birkbeck, N.; De Simone, F.; Janatra, I.; Adsumilli, B.; Frossard, P. Visual distortions in 360-degree videos. arXiv 2019, arXiv:1901.01848.

- Van Kasteren, A.; Brunnström, K.; Hedlund, J.; Snijders, C. Quality of experience assessment of 360-degree video. Electron. Imaging 2020, 2020, 91.

- Anwar, M.S.; Wang, J.; Khan, W.; Ullah, A.; Ahmad, S.; Fei, Z. Subjective QoE of 360-degree virtual reality videos and machine learning predictions. IEEE Access 2020, 8, 148084–148099.

- Kono, T.; Hayashi, T. Subjective QoE assessment method for 360° videos. IEICE Commun. Express 2020, 10, 174–178.

- Tran, H.T.T.; Ngoc, N.P.; Pham, C.T.; Jung, Y.J.; Thang, T.C. A subjective study on QoE of 360 video for VR communication. In Proceedings of the 2017 IEEE 19th International Workshop on Multimedia Signal Processing (MMSP), London-Luton, UK, 16–18 October 2017; pp. 1–6.

- Younus, M.U. Analysis of the impact of different parameter settings on wireless sensor network lifetime. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 16–21.

- Schatz, R.; Sackl, A.; Timmerer, C.; Gardlo, B. Towards subjective quality of experience assessment for omnidirectional video streaming. In Proceedings of the 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX), Erfurt, Germany, 29 May–2 June 2017; pp. 1–6.

- Singla, A.; Göring, S.; Raake, A.; Meixner, B.; Koenen, R.; Buchholz, T. Subjective quality evaluation of tile-based streaming for omnidirectional videos. In Proceedings of the 10th ACM Multimedia Systems Conference, Amherst, MA, USA, 18–21 June 2019; pp. 232–242.

- Singla, A.; Fremerey, S.; Robitza, W.; Raake, A. Measuring and comparing QoE and simulator sickness of omnidirectional videos in different head mounted displays. In Proceedings of the 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX), Erfurt, Germany, 29 May–2 June 2017; pp. 1–6.

- Singla, A.; Fremerey, S.; Robitza, W.; Lebreton, P.; Raake, A. Comparison of subjective quality evaluation for HEVC encoded omnidirectional videos at different bit-rates for UHD and FHD resolution. In Proceedings of the Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017; pp. 511–519.

- Albert, R.; Patney, A.; Luebke, D.; Kim, J. Latency requirements for foveated rendering in virtual reality. ACM Trans. Appl. Percept. (TAP) 2017, 14, 1–13.

- Fernandes, A.S.; Feiner, S.K. Combating VR sickness through subtle dynamic field-of-view modification. In Proceedings of the 2016 IEEE Symposium on 3D User Interfaces (3DUI), Greenville, SC, USA, 19–20 March 2016; pp. 201–210.

- Steed, A.; Friston, S.; Lopez, M.M.; Drummond, J.; Pan, Y.; Swapp, D. An ‘in the wild’ experiment on presence and embodiment using consumer virtual reality equipment. IEEE Trans. Vis. Comput. Graph. 2016, 22, 1406–1414.

- Tran, H.T.T.; Ngoc, N.P.; Pham, A.T.; Thang, T.C. A multi-factor QoE model for adaptive streaming over mobile networks. In Proceedings of the 2016 IEEE Globecom Workshops (GC Wkshps), Washington, WA, USA, 4–8 December 2016; pp. 1–6.

- Yang, S.; Zhao, J.; Jiang, T.; Wang, J.; Rahim, T.; Zhang, B.; Xu, Z.; Fei, Z. An objective assessment method based on multi-level factors for panoramic videos. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Yang, M.; Zou, W.; Song, J.; Yang, F. Enhancing QoE for viewport-adaptive 360-degree video streaming: Perception analysis and implementation. IEEE Multimed. 2020.

- Tran, H.T.T.; Ngoc, N.P.; Bui, C.M.; Pham, M.H.; Thang, T.C. An evaluation of quality metrics for 360 videos. In Proceedings of the 2017 Ninth International Conference on Ubiquitous and Future Networks (ICUFN), Milan, Italy, 4–7 July 2017; pp. 7–11.

- Liu, Y.; Yang, L.; Xu, M.; Wang, Z. Rate control schemes for panoramic video coding. J. Vis. Commun. Image Represent. 2018, 53, 76–85.

- Zakharchenko, V.; Choi, K.P.; Park, J.H. Quality metric for spherical panoramic video. In Optics and Photonics for Information Processing X; International Society for Optics and Photonics: Bellingham, WA, USA, 2016; Volume 9970, p. 99700C.

- Chen, S.; Zhang, Y.; Li, Y.; Chen, Z.; Wang, Z. Spherical structural similarity index for objective omnidirectional video quality assessment. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

- Upenik, E.; Rerabek, M.; Ebrahimi, T. On the performance of objective metrics for omnidirectional visual content. In Proceedings of the 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX), Erfurt, Germany, 29 May–2 June 2017; pp. 1–6.

- Egan, D.; Brennan, S.; Barrett, J.; Qiao, Y.; Timmerer, C.; Murray, N. An evaluation of heart rate and electrodermal activity as an objective QoE evaluation method for immersive virtual reality environments. In Proceedings of the 2016 Eighth International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016; pp. 1–6.

- Ahmadi, H.; Eltobgy, O.; Hefeeda, M. Adaptive multicast streaming of virtual reality content to mobile users. In Proceedings of the Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017; pp. 170–178.

- Upenik, E.; Řeřábek, M.; Ebrahimi, T. Testbed for subjective evaluation of omnidirectional visual content. In Proceedings of the 2016 Picture Coding Symposium (PCS), Nuremberg, Germany, 4–7 December 2016; pp. 1–5.

- Regal, G.; Schatz, R.; Schrammel, J.; Suette, S. VRate: A Unity3D asset for integrating subjective assessment questionnaires in virtual environments. In Proceedings of the 2018 Tenth International Conference on Quality of Multimedia Experience (QoMEX), Sardinia, Italy, 29–31 May 2018; pp. 1–3.

- Bessa, M.; Melo, M.; Narciso, D.; Barbosa, L.; Vasconcelos-Raposo, J. Does 3D 360 video enhance user’s VR experience? An evaluation study. In Proceedings of the XVII International Conference on Human Computer Interaction, Salamanca, Spain, 13–16 September 2016; pp. 1–4.

- Schatz, R.; Regal, G.; Schwarz, S.; Suette, S.; Kempf, M. Assessing the QoE impact of 3D rendering style in the context of VR-based training. In Proceedings of the 2018 Tenth International Conference on Quality of Multimedia Experience (QoMEX), Sardinia, Italy, 29–31 May 2018; pp. 1–6.

- Hupont, I.; Gracia, J.; Sanagustin, L.; Gracia, M.A. How do new visual immersive systems influence gaming QoE? A use case of serious gaming with Oculus Rift. In Proceedings of the 2015 Seventh International Workshop on Quality of Multimedia Experience (QoMEX), Messinia, Greece, 26–29 May 2015; pp. 1–6.

- Han, Y.; Yu, C.; Li, D.; Zhang, J.; Lai, Y. Accuracy analysis on 360° virtual reality video quality assessment methods. In Proceedings of the 2020 IEEE/ACM 13th International Conference on Utility and Cloud Computing (UCC), Leicester, UK, 7–10 December 2020; pp. 414–419.

- Gomes, G.D.; Flynn, R.; Murray, N. A crowdsourcing-based QoE evaluation of an immersive VR autonomous driving experience. In Proceedings of the 2021 13th International Conference on Quality of Multimedia Experience (QoMEX), Montreal, QC, Canada, 14–17 June 2021; pp. 25–30.

- Midoglu, C.; Klausen, M.; Alay, Ö.; Yazidi, A.; Haugerud, H.; Griwodz, C. Poster: QoE-based analysis of real-time adaptive 360-degree video streaming. In Proceedings of the 2019 on Wireless of the Students, by the Students, and for the Students Workshop, Los Cabos, Mexico, 21 October 2019; p. 13.

- Simone, F.D.; Li, J.; Debarba, H.G.; Ali, A.E.; Gunkel, S.N.B.; Cesar, P. Watching videos together in social virtual reality: An experimental study on user’s QoE. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 890–891.

- Mao, H.; Netravali, R.; Alizadeh, M. Neural adaptive video streaming with Pensieve. In Proceedings of the Conference of the ACM Special Interest Group on Data Communication, Los Angeles, CA, USA, 21–25 August 2017; pp. 197–210.

- Petrangeli, S.; Famaey, J.; Claeys, M.; Latré, S.; Turck, F.D. QoE-driven rate adaptation heuristic for fair adaptive video streaming. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2015, 12, 1–24.

- Yin, X.; Jindal, A.; Sekar, V.; Sinopoli, B. A control-theoretic approach for dynamic adaptive video streaming over HTTP. In Proceedings of the 2015 ACM Conference on Special Interest Group on Data Communication, London, UK, 17–21 August 2015; pp. 325–338.

- Kim, J.; Kim, W.; Ahn, S.; Kim, J.; Lee, S. Virtual reality sickness predictor: Analysis of visual-vestibular conflict and VR contents. In Proceedings of the 2018 Tenth International Conference on Quality of Multimedia Experience (QoMEX), Sardinia, Italy, 29 May–1 June 2018; pp. 1–6.

- Yao, S.; Fan, C.; Hsu, C. Towards quality-of-experience models for watching 360 videos in head-mounted virtual reality. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–3.

- Xie, S.; Xu, Y.; Qian, Q.; Shen, Q.; Ma, Z.; Zhang, W. Modeling the perceptual impact of viewport adaptation for immersive video. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5.

- Yu, M.; Lakshman, H.; Girod, B. A framework to evaluate omnidirectional video coding schemes. In Proceedings of the 2015 IEEE International Symposium on Mixed and Augmented Reality, Fukuoka, Japan, 29 September–3 October 2015; pp. 31–36.

- Fiedler, M.; Hossfeld, T.; Tran-Gia, P. A generic quantitative relationship between quality of experience and quality of service. IEEE Netw. 2010, 24, 36–41.

- Reichl, P.; Egger, S.; Schatz, R.; D’Alconzo, A. The logarithmic nature of qoe and the role of the weber-fechner law in qoe assessment. In Proceedings of the 2010 IEEE International Conference on Communications, Enschede, The Netherlands, 7–9 July 2010; pp. 1–5. [Google Scholar]

- Cermak, G.; Pinson, M.; Wolf, S. The relationship among video quality, screen resolution, and bit rate. IEEE Trans. Broadcast. 2011, 57, 258–262. [Google Scholar] [CrossRef]

- Belmudez, B.; Moller, S. An approach for modeling the effects of video resolution and size on the perceived visual quality. In Proceedings of the 2011 IEEE International Symposium on Multimedia, Dana Point, CA, USA, 5–7 December 2011; pp. 464–469. [Google Scholar]

- Han, Y.; Ma, Y.; Liao, Y.; Muntean, G. Qoe oriented adaptive streaming method for 360° virtual reality videos. In Proceedings of the 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Leicester, UK, 19–23 August 2019; pp. 1655–1659. [Google Scholar]

- Piamrat, K.; Viho, C.; Bonnin, J.M.; Ksentini, A. Quality of experience measurements for video streaming over wireless networks. In Proceedings of the 2009 Sixth International Conference on Information Technology: New Generations, Las Vegas, NV, USA, 27–29 April 2009; pp. 1184–1189. [Google Scholar]

- Saxena, A.; Subramanyam, S.; Cesar, P.; van der Mei, R.; van den Berg, J. Efficient, QoE aware delivery of 360° videos on VR headsets over mobile links. In Proceedings of the 13th EAI International Conference on Performance Evaluation Methodologies and Tools, Tsukuba, Japan, 18–20 May 2020; pp. 156–163.

- Azevedo, R.G.d.A.; Birkbeck, N.; Janatra, I.; Adsumilli, B.; Frossard, P. Multi-feature 360 video quality estimation. IEEE Open J. Circuits Syst. 2021, 2, 338–349.

- Hu, Y.; Liu, Y.; Wang, Y. VAS360: QoE-driven viewport adaptive streaming for 360 video. In Proceedings of the 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 324–329.

- Huang, W.; Ding, L.; Wei, H.Y.; Hwang, J.; Xu, Y.; Zhang, W. QoE-oriented resource allocation for 360-degree video transmission over heterogeneous networks. arXiv 2018, arXiv:1803.07789.

- Hu, M.; Chen, J.; Wu, D.; Zhou, Y.; Wang, Y.; Dai, H. TVG-Streaming: Learning user behaviors for QoE-optimized 360-degree video streaming. IEEE Trans. Circuits Syst. Video Technol. 2020.

- Xie, L.; Zhang, X.; Guo, Z. CLS: A cross-user learning based system for improving QoE in 360-degree video adaptive streaming. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 564–572.

- Wang, S.; Tan, X.; Li, S.; Xu, X.; Yang, J.; Zheng, Q. A QoE-based 360° video adaptive bitrate delivery and caching scheme for C-RAN. In Proceedings of the 2020 16th International Conference on Mobility, Sensing and Networking (MSN), Tokyo, Japan, 17–19 December 2020; pp. 49–56.

- Zhang, Y.; Guan, Y.; Bian, K.; Liu, Y.; Tuo, H.; Song, L.; Li, X. EPASS360: QoE-aware 360-degree video streaming over mobile devices. IEEE Trans. Mob. Comput. 2020, 20, 2338–2353.

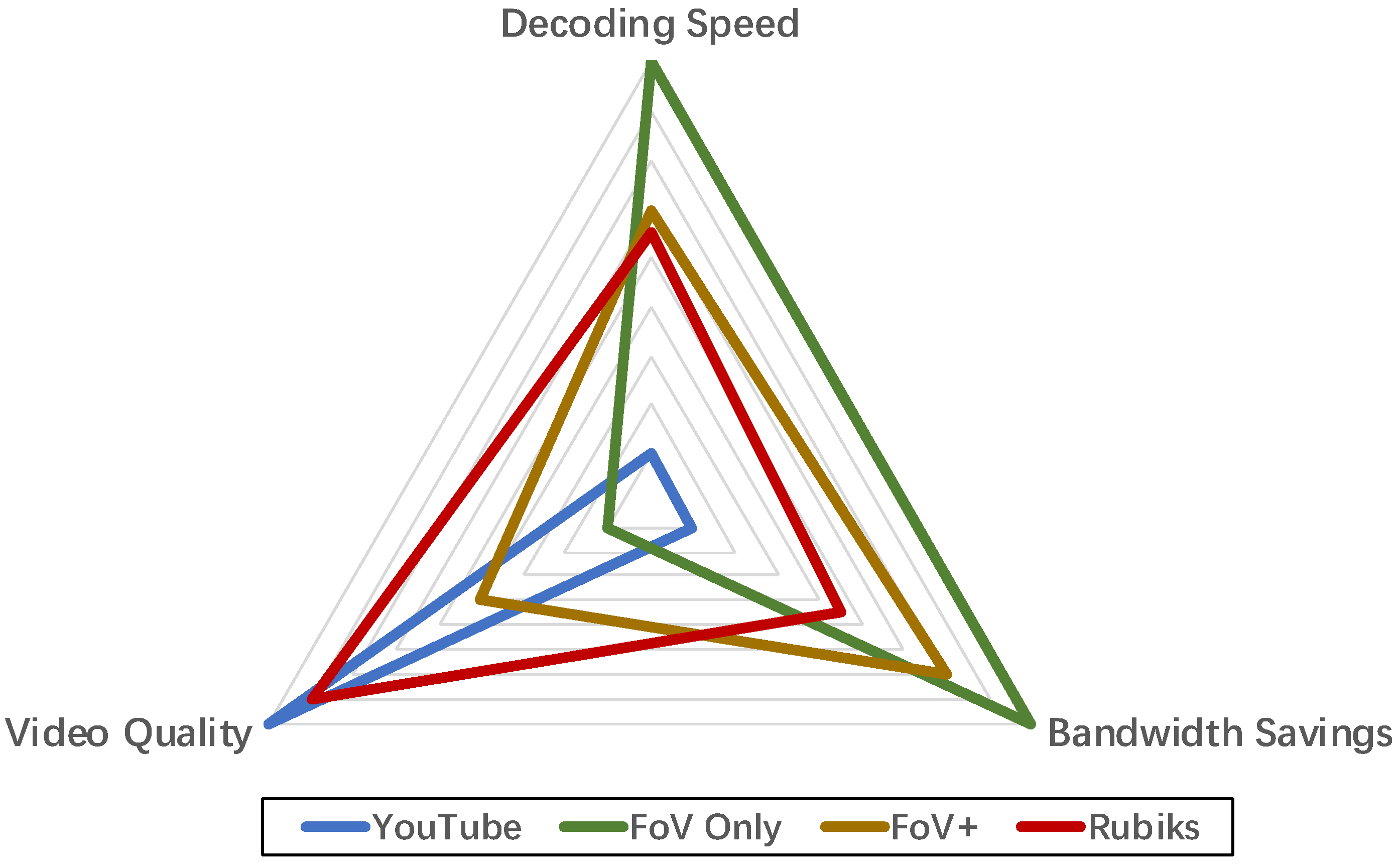

- He, J.; Qureshi, M.A.; Qiu, L.; Li, J.; Li, F.; Han, L. Rubiks: Practical 360-degree streaming for smartphones. In Proceedings of the 16th Annual International Conference on Mobile Systems, Applications, and Services, Munich, Germany, 10–15 June 2018; pp. 482–494.

- Qian, F.; Ji, L.; Han, B.; Gopalakrishnan, V. Optimizing 360 video delivery over cellular networks. In Proceedings of the 5th Workshop on All Things Cellular: Operations, Applications and Challenges, New York City, NY, USA, 3–7 October 2016; pp. 1–6.

- Bao, Y.; Wu, H.; Zhang, T.; Ramli, A.A.; Liu, X. Shooting a moving target: Motion-prediction-based transmission for 360-degree videos. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; pp. 1161–1170.

- Yu, L.; Tillo, T.; Xiao, J. QoE-driven dynamic adaptive video streaming strategy with future information. IEEE Trans. Broadcast. 2017, 63, 523–534.

- Perfecto, C.; Elbamby, M.S.; Ser, J.D.; Bennis, M. Taming the latency in multi-user VR 360°: A QoE-aware deep learning-aided multicast framework. IEEE Trans. Commun. 2020, 68, 2491–2508.

- Qian, L.; Cheng, Z.; Fang, Z.; Ding, L.; Yang, F.; Huang, W. A QoE-driven encoder adaptation scheme for multi-user video streaming in wireless networks. IEEE Trans. Broadcast. 2016, 63, 20–31.

- Yang, J.; Luo, J.; Meng, D.; Hwang, J.N. QoE-driven resource allocation optimized for uplink delivery of delay-sensitive VR video over cellular network. IEEE Access 2019, 7, 60672–60683.

- Guan, Y.; Zheng, C.; Zhang, X.; Guo, Z.; Jiang, J. Pano: Optimizing 360 video streaming with a better understanding of quality perception. In Proceedings of the ACM Special Interest Group on Data Communication, Beijing, China, 19–23 August 2019; pp. 394–407.

- da Costa Filho, R.I.T.; Luizelli, M.C.; Vega, M.T.; van der Hooft, J.; Petrangeli, S.; Wauters, T.; Turck, F.D.; Gaspary, L.P. Predicting the performance of virtual reality video streaming in mobile networks. In Proceedings of the 9th ACM Multimedia Systems Conference, Amsterdam, The Netherlands, 12–15 June 2018; pp. 270–283.

- Li, C.; Xu, M.; Du, X.; Wang, Z. Bridge the gap between VQA and human behavior on omnidirectional video: A large-scale dataset and a deep learning model. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 932–940.

- Li, C.; Xu, M.; Jiang, L.; Zhang, S.; Tao, X. Viewport proposal CNN for 360° video quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10177–10186.

- Wu, C.; Wang, Z.; Sun, L. PAAS: A preference-aware deep reinforcement learning approach for 360° video streaming. In Proceedings of the 31st ACM Workshop on Network and Operating Systems Support for Digital Audio and Video, Istanbul, Turkey, 28 September–1 October 2021; pp. 34–41.

- Ban, Y.; Zhang, Y.; Zhang, H.; Zhang, X.; Guo, Z. MA360: Multi-agent deep reinforcement learning based live 360-degree video streaming on edge. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6.

- Gaddam, V.R.; Riegler, M.; Eg, R.; Griwodz, C.; Halvorsen, P. Tiling in interactive panoramic video: Approaches and evaluation. IEEE Trans. Multimed. 2016, 18, 1819–1831.

- Zhang, B.; Zhao, J.; Yang, S.; Zhang, Y.; Wang, J.; Fei, Z. Subjective and objective quality assessment of panoramic videos in virtual reality environments. In Proceedings of the 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, China, 10–14 July 2017; pp. 163–168.

- Mangiante, S.; Klas, G.; Navon, A.; GuanHua, Z.; Ran, J.; Silva, M.D. VR is on the edge: How to deliver 360 videos in mobile networks. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017; pp. 30–35.

- Cicco, L.D.; Mascolo, S. An adaptive video streaming control system: Modeling, validation, and performance evaluation. IEEE/ACM Trans. Netw. 2013, 22, 526–539.

- Xu, Y.; Zhou, Y.; Chiu, D.M. Analytical QoE models for bit-rate switching in dynamic adaptive streaming systems. IEEE Trans. Mob. Comput. 2014, 13, 2734–2748.

- Hsu, C. MEC-assisted FoV-aware and QoE-driven adaptive 360° video streaming for virtual reality. In Proceedings of the 2020 16th International Conference on Mobility, Sensing and Networking (MSN), Tokyo, Japan, 17–19 December 2020; pp. 291–298.

| | Full View Streaming | Viewport-Based Streaming | Tile-Based Streaming |
|---|---|---|---|
| 360 video features | Whole data frame in same quality | Viewport area in high quality | Tiles in same or different quality |
| Projection | CMP, ERP | TSP, Pyramid, Offset cubemap | CMP, ERP, TSP, Pyramid, Offset cubemap |
| Encoding pressure | High | Medium | Low |
| Cache pressure | High | Medium | Medium |
| Bandwidth pressure | High | Medium | Medium |
| Adaptive influencing factors | Network | Network/Viewport, Viewport size | Network/Viewport, Viewport/Tiles size |

| Works | Important Influencing Factors | QoE Aspects | Years |
|---|---|---|---|
| [65,66] | resolution, bit rate, quantization parameter (QP), content characteristics | 360-degree video perception quality | 2017 and 2018 |
| [67] | stalling patterns and variations in encoding quality | low perceived resolution, low wearing comfort, signs of cybersickness, etc. | 2017 |
| [68,69,70] | video sequences, resolution, bandwidth, and network round-trip delay | simulator sickness | 2017, 2018 and 2019 |
| [71] | eye-tracking latency | amount of foveation that users | 2017 |
| [72] | field-of-view modification | VR sickness | 2016 |
| [73] | different types of system | perception devices track the hands and body | 2016 |
| [74] | initial delay, quality variations and interruptions | 360-degree video perception quality | 2016 |
| [76] | saccadic suppression, foveal vision, and contrast sensitivity of the human visual system | 360-degree video perception quality | 2020 |

| Works | Metrics/Methods | Contribution | Years |
|---|---|---|---|
| [77] | PSNR | Evaluation based on the correlation between objective quality indicators and subjective quality. | 2017 |
| [75] | S-PSNR | The objective scores correlate with subjective quality more closely than those of existing methods. | 2017 |
| [78] | S-PSNR and P-PSNR | The rate control scheme is effective in improving the S-PSNR and P-PSNR of panoramic video coding. | 2018 |
| [79] | weighted PSNR | The accuracy and reliability of the proposed objective quality estimation method have been verified, and it correlates well with subjective quality estimation. | 2016 |
| [80] | S-SSIM | S-SSIM outperforms state-of-the-art objective quality assessment metrics in omnidirectional video quality assessment. | 2018 |
| [81] | S-PSNR/WS-PSNR/CPP-PSNR/VIFP | Among the compared objective metrics, VIFP performs best. New algorithms are still needed to better predict the perceived quality of omnidirectional content. | 2017 |
| [82] | Heart rate (HR) and electrodermal activity (EDA) | The first work to show the real relationship between the EDA/HR combination and the QoE of users in an immersive VR environment. | 2016 |
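The PSNR variants listed in the table above differ mainly in how they weight pixel errors on the sphere. As a rough illustration only (not the implementation used in any of the cited works), the sketch below compares plain PSNR with WS-PSNR on an equirectangular (ERP) luma frame. It assumes the commonly used cosine-of-latitude row weight; the function names `erp_weights`, `psnr`, and `ws_psnr` are hypothetical and were chosen for this example.

```python
import numpy as np


def erp_weights(height: int, width: int) -> np.ndarray:
    """Per-pixel weights for an ERP frame (assumed cosine-of-latitude form)."""
    rows = np.arange(height)
    # Close to 1 at the equator, approaching 0 at the poles, where ERP
    # oversamples the sphere.
    w = np.cos((rows + 0.5 - height / 2.0) * np.pi / height)
    return np.repeat(w[:, None], width, axis=1)


def psnr(ref: np.ndarray, dist: np.ndarray, peak: float = 255.0) -> float:
    """Plain PSNR: every pixel error counts equally."""
    err = ref.astype(np.float64) - dist.astype(np.float64)
    mse = np.mean(err ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def ws_psnr(ref: np.ndarray, dist: np.ndarray, peak: float = 255.0) -> float:
    """WS-PSNR: squared errors are weighted by the spherical area they cover."""
    err = ref.astype(np.float64) - dist.astype(np.float64)
    w = erp_weights(*err.shape)
    wmse = np.sum(w * err ** 2) / np.sum(w)
    return float("inf") if wmse == 0 else float(10.0 * np.log10(peak ** 2 / wmse))


if __name__ == "__main__":
    ref = np.zeros((64, 128), dtype=np.uint8)
    pole_err = ref.copy()
    pole_err[:4, :] = 10      # distortion near the top pole
    equator_err = ref.copy()
    equator_err[30:34, :] = 10  # same distortion near the equator
    print("pole   :", psnr(ref, pole_err), ws_psnr(ref, pole_err))
    print("equator:", psnr(ref, equator_err), ws_psnr(ref, equator_err))
```

Plain PSNR scores the two distorted frames identically, whereas the weighted variant penalizes the equatorial distortion more, which is the intuition behind using sphere-aware metrics for omnidirectional video.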

| Strategy | Major Contributions | Objectives/Functionality |
|---|---|---|
| Application-level optimization | Flexible, adjustable QoE parameters; increased client QoE-awareness [54,117]. | Use contextual information to improve the client’s bit rate selection strategy. |
| QoE-aware/driven adaptive streaming based on user data | Set QoE targets based on forecast results and allocation strategies [110,111,113]. | Reduce content delivery latency and improve network resource utilization. |
| Video encoder with appropriate settings to improve user QoE | Tile-based layered coding provides a balance between quality and video coding efficiency [49,114,120,121]. | Improve the tile coding method, optimize resource allocation (RA), and increase the efficiency of QoE and bandwidth usage. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ruan, J.; Xie, D. A Survey on QoE-Oriented VR Video Streaming: Some Research Issues and Challenges. Electronics 2021, 10, 2155. https://doi.org/10.3390/electronics10172155
