Energy-Efficient Virtual Network Function Reconfiguration Strategy Based on Short-Term Resources Requirement Prediction

Abstract

1. Introduction

- We include the boot-up energy cost of infrastructure devices to build a more accurate energy consumption model;

- To reflect real SFC request scenarios, we use a time-varying traffic dataset. We adopt long short-term memory (LSTM) models to predict the short-term resource demand of VNFs and use the prediction results to migrate VNFs proactively, in advance, at appropriate times;

- We propose the RP-EDM algorithm, which aims to minimize the energy consumption of the network while respecting the service-level agreement (SLA). Migration is executed aperiodically and handles overloaded and low-load servers simultaneously. In addition, we simulate the algorithm with different prediction models to demonstrate the advantage of the proposed strategy.
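The paper's own energy formula is not reproduced in this section. As a hedged illustration of why boot-up energy matters, the sketch below assumes the widely used linear server power model (idle power plus a term proportional to CPU utilization, as in Beloglazov et al.) and charges a fixed boot-up energy whenever a server goes from off to on. `ServerPowerProfile`, `interval_energy`, and all parameter values are hypothetical, not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ServerPowerProfile:
    """Hypothetical per-server power parameters (not taken from the paper)."""
    p_idle: float  # W drawn by an active server at 0% CPU
    p_max: float   # W drawn at 100% CPU
    e_boot: float  # J consumed once when a switched-off server is booted


def interval_energy(profile: ServerPowerProfile,
                    utilizations: Sequence[Optional[float]],
                    was_on: Sequence[bool],
                    duration_s: float) -> float:
    """Total energy (J) of all servers over one interval of duration_s seconds.

    utilizations[i] is None if server i is off during the interval, otherwise
    its CPU utilization in [0, 1]; was_on[i] is its state in the previous interval.
    """
    if len(utilizations) != len(was_on):
        raise ValueError("utilizations and was_on must have the same length")
    total = 0.0
    for u, prev_on in zip(utilizations, was_on):
        if u is None:
            continue  # an off server draws no power in this simplified model
        if not 0.0 <= u <= 1.0:
            raise ValueError("utilization must lie in [0, 1]")
        # Linear power model: idle power plus a term proportional to CPU load.
        power = profile.p_idle + (profile.p_max - profile.p_idle) * u
        total += power * duration_s
        if not prev_on:
            total += profile.e_boot  # off -> on transition pays the boot-up cost
    return total


profile = ServerPowerProfile(p_idle=100.0, p_max=200.0, e_boot=5000.0)
# Server 0 stays on at 50% load, server 1 is off, server 2 is booted and idles.
print(interval_energy(profile, [0.5, None, 0.0], [True, True, False], 60.0))
```

With these made-up numbers the boot cost equals 50 s of idle power, so switching a server on for a load spike shorter than that costs more than it saves; this is the kind of trade-off that predicting demand before migrating helps avoid.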

2. Related Work

3. System Model and Problem Description

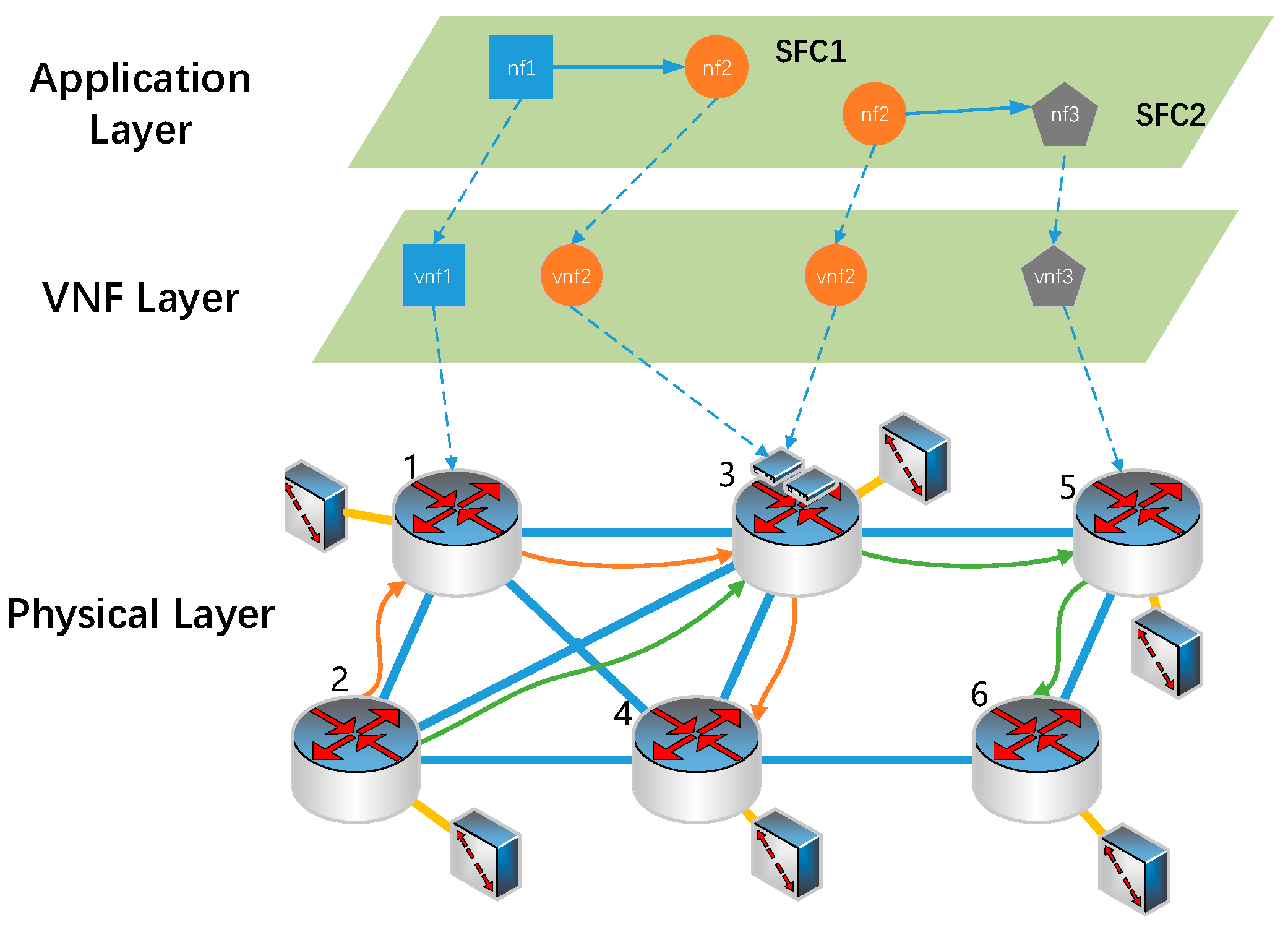

3.1. Network Model

3.2. SFC and VNF

3.3. NF Energy-Efficient Migration Problem

4. Algorithm Design

4.1. RP-EDM Algorithm

| Algorithm 1 RP-EDM |
|---|
| Input: the switch on/off policy of the network in the current period; the predicted CPU requirement of all nodes; |
| Output: the new mapping strategy after reconfiguration; |
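Based only on the contributions listed in the introduction (aperiodic migration, driven by predicted demand, that handles overloaded and low-load servers together), a minimal sketch of the triggering step might look as follows. The two utilization thresholds, the node names, and the functions `classify_nodes` and `plan_reconfiguration` are hypothetical; the paper's actual decision rules are not shown here.

```python
from typing import Dict, List, Tuple

# Hypothetical thresholds on predicted CPU utilization (fraction of capacity).
UPPER = 0.8
LOWER = 0.2


def classify_nodes(predicted_util: Dict[str, float], active: Dict[str, bool],
                   upper: float = UPPER,
                   lower: float = LOWER) -> Tuple[List[str], List[str]]:
    """Split active nodes into predicted-overloaded and predicted-low-load lists."""
    overloaded: List[str] = []
    low_load: List[str] = []
    for node in sorted(predicted_util):
        if not active.get(node, False):
            continue  # switched-off nodes host no VNFs and need no action
        u = predicted_util[node]
        if u > upper:
            overloaded.append(node)
        elif u < lower:
            low_load.append(node)
    # Try to empty the lightest nodes first: they are the cheapest to consolidate.
    low_load.sort(key=lambda n: (predicted_util[n], n))
    return overloaded, low_load


def plan_reconfiguration(predicted_util: Dict[str, float], active: Dict[str, bool],
                         upper: float = UPPER,
                         lower: float = LOWER) -> List[Tuple[str, str]]:
    """Ordered (action, node) list; an empty list means no migration this period."""
    overloaded, low_load = classify_nodes(predicted_util, active, upper, lower)
    # Relieve predicted overloads (SLA risk) before consolidating for energy.
    return ([("separate", n) for n in overloaded]
            + [("consolidate", n) for n in low_load])


pred = {"n1": 0.9, "n2": 0.5, "n3": 0.15, "n4": 0.05, "n5": 0.1}
on = {"n1": True, "n2": True, "n3": True, "n4": False, "n5": True}
print(plan_reconfiguration(pred, on))
```

Because an action is produced only when a prediction crosses a threshold, the reconfiguration runs aperiodically rather than on a fixed schedule; the "separate" and "consolidate" actions would be handed to steps like Algorithms 2 and 3.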

4.2. Resource Prediction Based Network Function Separation Algorithm

| Algorithm 2 Resource Prediction Based Network Function Separation (PNFS) |
|---|
| Input: the switch on/off policy of the network in the current period; the overloaded node; the predicted CPU requirement of all nodes; |
| Output: the separation strategy; |
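Algorithm 2 takes an overloaded node and the predicted CPU requirements and returns a separation strategy. One plausible way to realize such a step, sketched here under assumptions: treat "which VNFs stay" as a 0/1 knapsack (the reference list cites Kolesar's branch-and-bound knapsack algorithm, though how the paper applies it is not shown in this section), keeping as much predicted demand as fits under a threshold so that the least demand has to migrate, then place each evicted VNF on the active node whose predicted residual capacity fits it most tightly. Integer CPU units, the threshold-derived `budget`, and the names `knapsack_keep` and `separate` are hypothetical, and a dynamic-programming knapsack stands in for branch and bound for brevity.

```python
from typing import Dict, List, Tuple


def knapsack_keep(demands: Dict[str, int], budget: int) -> List[str]:
    """0/1 knapsack: VNFs to keep, maximizing kept demand subject to <= budget."""
    # best[c] = (largest total demand with total <= c, the VNFs achieving it)
    best: List[Tuple[int, Tuple[str, ...]]] = [(0, ())] * (budget + 1)
    for name in sorted(demands):
        w = demands[name]
        # Iterate capacities downward so each VNF is counted at most once.
        for c in range(budget, w - 1, -1):
            candidate = best[c - w][0] + w
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - w][1] + (name,))
    return list(best[budget][1])


def separate(node_vnfs: Dict[str, int], budget: int,
             residual: Dict[str, int]) -> Tuple[Dict[str, str], List[str]]:
    """Return (VNF -> target node moves, VNFs that fit nowhere).

    node_vnfs: predicted CPU demand of each VNF on the overloaded node.
    budget:    demand the node may keep (e.g. upper threshold x capacity).
    residual:  predicted free CPU on the other active nodes.
    """
    keep = set(knapsack_keep(node_vnfs, budget))
    free = dict(residual)
    moves: Dict[str, str] = {}
    unplaced: List[str] = []
    evicted = [v for v in node_vnfs if v not in keep]
    # Place larger VNFs first; choose the target with the tightest fit.
    for vnf in sorted(evicted, key=lambda v: (-node_vnfs[v], v)):
        d = node_vnfs[vnf]
        fits = [n for n, r in free.items() if r >= d]
        if not fits:
            unplaced.append(vnf)  # would require booting an additional node
            continue
        target = min(fits, key=lambda n: (free[n] - d, n))
        moves[vnf] = target
        free[target] -= d
    return moves, unplaced


print(separate({"a": 40, "b": 30, "c": 25, "d": 10}, 80, {"n2": 30, "n3": 50}))
```

In the usage line, the node keeps `a`, `b`, and `d` (exactly 80 units), and only `c` migrates, to `n2`, where it fits most tightly. Any VNF returned in the second list signals that an extra node must be booted, which is where a boot-up energy term would enter the decision.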

4.3. Resource Prediction Based Network Function Consolidation Algorithm

| Algorithm 3 Resource Prediction Based Network Function Consolidation (PNFC) |
|---|
| Input: the switch on/off policy of the network in the current period; the low-load node; the predicted CPU requirement of all nodes; |
| Output: the consolidation strategy; |
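Algorithm 3 returns a consolidation strategy. A hedged sketch of one common rule, assuming (as the contribution list suggests) that the goal is to empty a low-load node so it can be switched off: migrate its VNFs only if all of them fit on other active nodes within their predicted residual capacity, and otherwise migrate nothing, because a partially emptied node still draws idle power. The largest-first ordering, best-fit target choice, and the function name `consolidate` are assumptions, not the paper's procedure.

```python
from typing import Dict, Optional


def consolidate(node_vnfs: Dict[str, int],
                residual: Dict[str, int]) -> Optional[Dict[str, str]]:
    """Moves that empty a low-load node, or None if it cannot be fully emptied.

    node_vnfs: predicted CPU demand of each VNF on the low-load node.
    residual:  predicted free CPU on the *other* active nodes (exclude the
               node being emptied).
    """
    free = dict(residual)
    moves: Dict[str, str] = {}
    # Largest VNFs first: they are the hardest to place.
    for vnf in sorted(node_vnfs, key=lambda v: (-node_vnfs[v], v)):
        d = node_vnfs[vnf]
        fits = [n for n, r in free.items() if r >= d]
        if not fits:
            return None  # keep the node on and migrate nothing
        target = min(fits, key=lambda n: (free[n] - d, n))  # tightest fit
        moves[vnf] = target
        free[target] -= d
    return moves


print(consolidate({"a": 10, "b": 5}, {"n1": 12, "n2": 6}))
print(consolidate({"a": 10, "b": 8}, {"n1": 12}))
```

The all-or-nothing rule is a design choice: a fuller version would also compare the idle power saved by switching the node off with the energy and SLA cost of the migrations before committing.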

5. Performance Evaluation

5.1. Simulation Setup

5.2. Simulation Result and Analysis

6. Conclusions

6.1. Summary of the Performance

6.2. Limitation of This Study

6.3. Potential Future Research Directions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Kaur, K.; Mangat, V.; Kumar, K. A comprehensive survey of service function chain provisioning approaches in SDN and NFV architecture. Comput. Sci. Rev. 2020, 38, 100298.

2. Eramo, V.; Miucci, E.; Ammar, M.; Lavacca, F.G. An Approach for Service Function Chain Routing and Virtual Function Network Instance Migration in Network Function Virtualization Architectures. IEEE/ACM Trans. Netw. 2017, 25, 2008–2025.

3. Yang, S.; Li, F.; Trajanovski, S.; Yahyapour, R.; Fu, X. Recent Advances of Resource Allocation in Network Function Virtualization. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 295–314.

4. Qu, K.; Zhuang, W.; Shen, X.; Li, X.; Rao, J. Dynamic Resource Scaling for VNF Over Nonstationary Traffic: A Learning Approach. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 648–662.

5. Beloglazov, A.; Abawajy, J.; Buyya, R. Energy-Aware Resource Allocation Heuristics for Efficient Management of Data Centers for Cloud Computing. Future Gen. Comput. Syst. 2012, 28, 755–768.

6. Wen, T.; Yu, H.; Sun, G.; Liu, L. Network function consolidation in service function chaining orchestration. In Proceedings of the IEEE International Conference on Communications (ICC), Kuala Lumpur, Malaysia, 22–27 May 2016; pp. 1–6.

7. Qi, D.; Shen, S.; Wang, G. Virtualized Network Function Consolidation Based on Multiple Status Characteristics. IEEE Access 2019, 7, 59665–59679.

8. Eramo, V.; Ammar, M.; Lavacca, F.G. Migration Energy Aware Reconfigurations of Virtual Network Function Instances in NFV Architectures. IEEE Access 2017, 5, 4927–4938.

9. Rais, I.; Orgerie, A.-C.; Quinson, M.; Lefevre, L. Quantifying the impact of shutdown techniques for energy-efficient data centers. Concurr. Comput. Pract. Exp. 2018, 30, e4471.

10. Sun, G.; Zhou, R.; Sun, J.; Yu, H.; Vasilakos, A.V. Energy-Efficient Provisioning for Service Function Chains to Support Delay-Sensitive Applications in Network Function Virtualization. IEEE Internet Things J. 2020, 7, 6116–6131.

11. Tang, L.; He, X.; Zhao, P.; Zhao, G.; Zhou, Y.; Chen, Q. Virtual Network Function Migration Based on Dynamic Resource Requirements Prediction. IEEE Access 2019, 7, 112348–112362.

12. Kim, H.-G.; Lee, D.-Y.; Jeong, S.-Y.; Choi, H.; Yoo, J.-H.; Hong, J.W.-K. Machine Learning-Based Method for Prediction of Virtual Network Function Resource Demands. In Proceedings of the 2019 IEEE Conference on Network Softwarization (NetSoft), Paris, France, 24–28 June 2019; pp. 405–413.

13. Strunk, A. Costs of Virtual Machine Live Migration: A Survey. In Proceedings of the 2012 IEEE Eighth World Congress on Services, Honolulu, HI, USA, 24–29 June 2012; pp. 323–329.

14. Chaurasia, N.; Kumar, M.; Chaudhry, R.; Verma, O.P. Comprehensive survey on energy-aware server consolidation techniques in cloud computing. J. Supercomput. 2021, 77, 11682–11737.

15. Kolesar, P.J. A Branch and Bound Algorithm for the Knapsack Problem. Manag. Sci. 1967, 13, 723–735.

16. SFCSim Simulation Platform. Available online: https://pypi.org/project/sfcsim/ (accessed on 5 January 2021).

17. Tang, L.; He, L.; Tan, Q.; Chen, Q. Virtual Network Function Migration Optimization Algorithm Based on Deep Deterministic Policy Gradient. J. Electron. Inf. Technol. 2021, 43, 404–411.

18. Tang, L. Multi-priority Based Joint Optimization Algorithm of Virtual Network Function Migration Cost and Network Energy Consumption. J. Electron. Inf. Technol. 2019, 41, 2079–2086.

19. VNFDataset: Virtual IP Multimedia IP System. Available online: https://www.kaggle.com/imenbenyahia/clearwatervnf-virtual-ip-multimedia-ip-system (accessed on 30 January 2021).

| Ref. | Time-Varying Traffic | Aperiodic Execution | Consolidation | Separation | Boot-Up Energy | Proactive Migration | Use Prediction Results |
|---|---|---|---|---|---|---|---|
| [6] | ✓ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ |
| [7] | ✓ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ |
| [8] | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ |
| [10] | ✓ | ✕ | ✓ | ✓ | ✓ | ✕ | ✕ |
| [11] | ✓ | ✕ | ✕ | ✓ | ✕ | ✓ | ✓ |
| [12] | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |
| Our approach | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Metric | Basic-LSTM | CAT-LSTM |
|---|---|---|
| Loss | 0.3316 | 0.0345 |
| MSE | 30.4405 | 13.9401 |
| RMSE | 5.5172 | 3.7336 |
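The table reports MSE and RMSE for the two LSTM variants. RMSE is the square root of MSE (√30.4405 ≈ 5.517 and √13.9401 ≈ 3.734, matching the table to rounding), so the two rows rank the models identically, with RMSE expressed in the same unit as the predicted demand. The sketch below shows how such metrics are computed for one-step-ahead demand prediction; the trace, the window length, and the naive last-value predictor are hypothetical stand-ins for the LSTM models, not the paper's setup.

```python
import math
from typing import List, Sequence, Tuple


def sliding_windows(series: Sequence[float],
                    window: int) -> List[Tuple[List[float], float]]:
    """Split a demand trace into (last `window` values, next value) pairs."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [(list(series[i:i + window]), series[i + window])
            for i in range(len(series) - window)]


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error between observed and predicted values."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, in the same unit as the predicted demand."""
    return math.sqrt(mse(y_true, y_pred))


# Hypothetical CPU trace scored with a naive last-value predictor.
trace = [10.0, 12.0, 11.0, 15.0, 14.0]
pairs = sliding_windows(trace, window=2)
targets = [target for _, target in pairs]
naive = [history[-1] for history, _ in pairs]
print(mse(targets, naive), rmse(targets, naive))
```

A learned predictor such as an LSTM would replace the `naive` list; comparing its RMSE against this baseline is a quick sanity check that the model adds value.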

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Ran, J.; Hu, H.; Tang, B. Energy-Efficient Virtual Network Function Reconfiguration Strategy Based on Short-Term Resources Requirement Prediction. Electronics 2021, 10, 2287. https://doi.org/10.3390/electronics10182287


Chicago/Turabian style: Liu, Yanyang, Jing Ran, Hefei Hu, and Bihua Tang. 2021. "Energy-Efficient Virtual Network Function Reconfiguration Strategy Based on Short-Term Resources Requirement Prediction" Electronics 10, no. 18: 2287. https://doi.org/10.3390/electronics10182287