Networked VR: State of the Art, Solutions, and Challenges

Abstract

1. Introduction

- This paper discusses the architecture of VR video streaming. The VR content preprocessing stages, such as content acquisition, projection, and encoding, are organized and discussed. Subsequently, the transmission and consumption of 360-degree video are described in detail.

- The proven streaming technologies for 360-degree video are presented and discussed in detail, including viewport-based, tile-based, and viewport-tracking delivery solutions. We describe how high-resolution content can be delivered to single or multiple users. Technical and design-related challenges, and their implications for an interactive, immersive, and engaging VR video experience, are presented.

- We describe recent research that optimizes VR transmission by leveraging wireless communication, computing, and caching resources at the network edge in order to improve the performance of VR networking.

- We outline open research questions in the field of VR and promising research directions in order to stimulate future research in related areas.

2. Background: VR Representation Principles and Typical Transmission Mechanism

2.1. Capture and Representation of VR

2.1.1. Projection Conversion

2.1.2. Video Encoding

2.2. Typical VR Transmission Mechanisms

2.2.1. VR Video Transmission Based on DASH

2.2.2. Transmission Scheme Based on Tile and View Switching

2.2.3. The Progress of Viewport-Tracking Optimization

2.3. The Main Challenges Facing VR Networking

2.3.1. VR Network Computing Power Challenges

2.3.2. The Challenge of VR Network Communication Efficiency

2.3.3. The Challenge of VR Network Service Latency

2.4. Enabling Technologies for VR

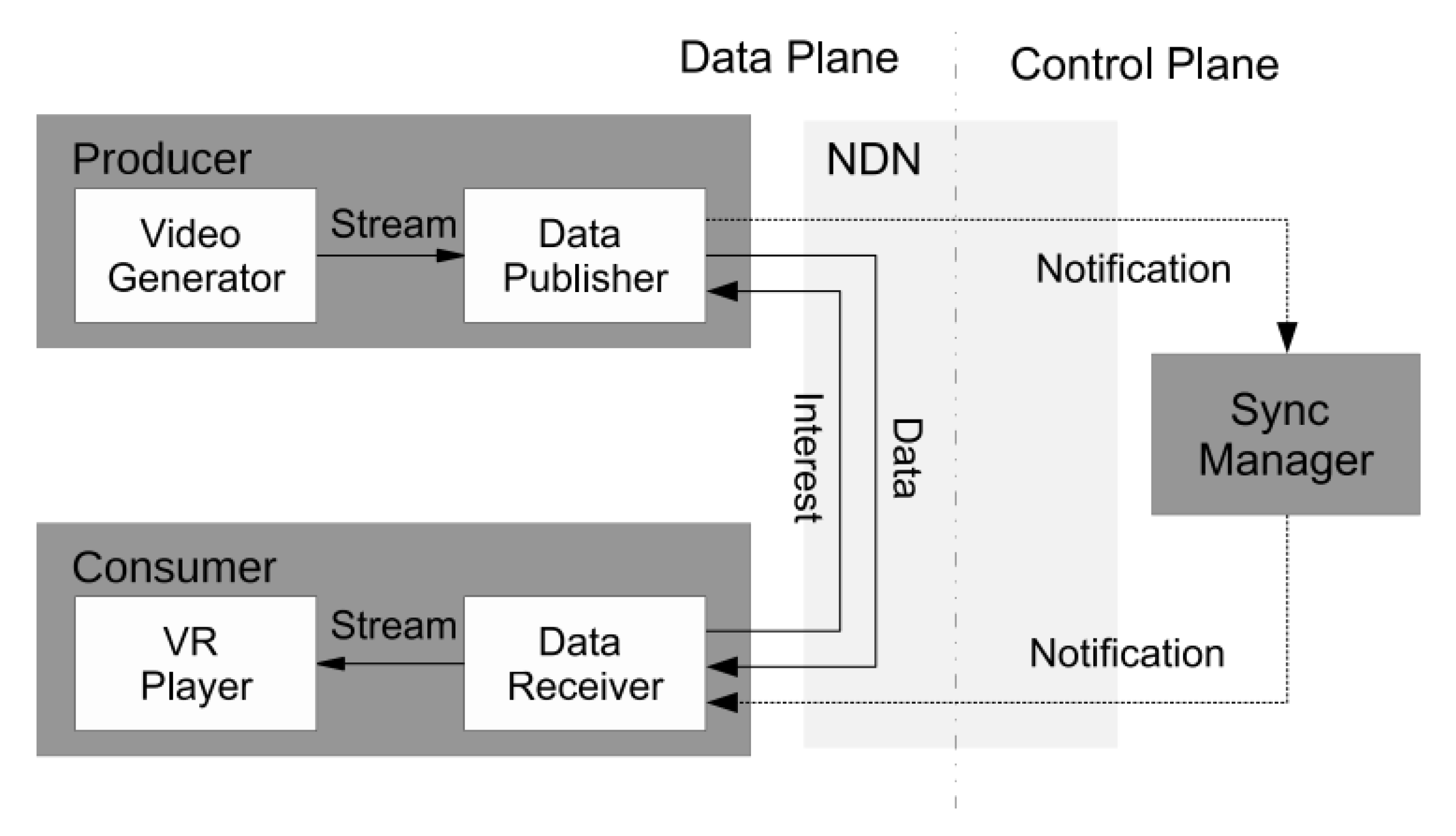

2.4.1. Future Network System Architecture

2.4.2. VR System Design

- FlashBack [53]: Boos et al. proposed FlashBack to address the problem that products such as Google Cardboard and Samsung Gear VR have limited GPU power and therefore cannot deliver acceptable frame rates and latency. FlashBack proactively pre-computes and caches all possible images that a VR user may encounter. The rendering is done in an offline step that builds a cache full of panoramic images. At runtime, FlashBack constructs and maintains a tiered-storage cache index in order to quickly find the images that a user should view. On a cache miss, a fast approximation of the correct image is used, while more closely matching entries are fetched from the cache for future requests. FlashBack is suitable not only for static scenes but also for dynamic scenes with moving and animated objects.

- Furion [54]: To enable high-quality VR applications on untethered mobile devices such as smartphones, Lai et al. introduced Furion, a framework for high-quality, immersive mobile VR on today’s mobile devices and wireless networks. Furion exploits a key insight into the VR workload, namely that foreground interactions and the background environment differ in predictability and rendering workload, and uses a split renderer architecture that runs on both the phone and the server. This is complemented by video compression, the use of panoramic frames, and parallel decoding on multiple cores of the phone.

- LTE-VR [55]: Tan et al. designed LTE-VR, a device-side solution for mobile VR that requires no changes to device hardware or LTE infrastructure. LTE-VR adapts the signaling operations involved so that they are latency-friendly. It relies on two designs: (1) a cross-layer design that ensures rapid loss detection and (2) the use of rich side-channel information that is available only on the device to reduce the latency perceived in VR.

- Flare [37]: Qian et al. designed Flare, a practical VR video streaming system for commodity mobile devices. Flare uses a viewport-adaptive approach: instead of downloading the entire panoramic scene, it predicts the user’s future viewport and fetches only the part that the viewer will consume. Compared with prior methods, Flare reduces bandwidth usage, or improves the quality of the VR content obtained at the same bandwidth. In addition, Flare is a general 360-degree video streaming framework that does not rely on a specific video encoding technology.
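The cache lookup described for FlashBack in the list above can be illustrated with a small sketch. This is a toy model under stated assumptions, not the FlashBack implementation: the class name `PoseFrameCache`, the grid quantization of head positions, the `cell_size` value, and the nearest-cell fallback are all hypothetical choices made for illustration. It shows the two phases the text mentions: an offline step that fills the cache, and a runtime lookup that returns an approximate entry on a miss.

```python
import math


class PoseFrameCache:
    """Toy memoization cache: pre-rendered frames keyed by a quantized head position."""

    def __init__(self, cell_size=0.5):
        self.cell_size = cell_size  # grid spacing in metres (assumed value)
        self.frames = {}            # quantized (x, y, z) cell -> frame payload

    def _key(self, position):
        # Map a continuous position to the index of its grid cell.
        return tuple(round(c / self.cell_size) for c in position)

    def precompute(self, positions, render):
        """Offline step: render once per position and store the result."""
        for p in positions:
            self.frames[self._key(p)] = render(p)

    def lookup(self, position):
        """Return (frame, exact_hit). On a miss, fall back to the nearest cached cell."""
        key = self._key(position)
        if key in self.frames:
            return self.frames[key], True
        if not self.frames:
            raise LookupError("cache is empty")
        # Approximation on a miss: reuse the frame of the closest cached cell.
        nearest = min(self.frames, key=lambda k: math.dist(k, key))
        return self.frames[nearest], False


if __name__ == "__main__":
    cache = PoseFrameCache(cell_size=0.5)
    cache.precompute([(0, 0, 0), (1, 0, 0), (0, 0, 1)], lambda p: f"frame@{p}")
    print(cache.lookup((0.1, 0, 0)))  # exact hit in the cell around the origin
    print(cache.lookup((3, 0, 0)))    # miss: nearest cached cell is used instead
```

A linear scan over all cached cells is used here only to keep the sketch short; a real system would need an index that scales to a large number of cached frames.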

3. Different VR Networking Optimization Approaches

3.1. User-Centric Design for Edge Computing

3.2. Optimization of Node-Related Associations

3.2.1. Optimization Based on Caching

3.2.2. Optimization Based on Access Control (AC) Scheduling

3.2.3. Optimization Based on Content Awareness

3.3. VR Implementation Driven by QoE

4. Open Research Challenges

4.1. Construction of Mapping the Relationship between QoE and QoS

4.2. Unified Data Set

- Viewer information: sex, age, experience with the device, and visual health status.

- Video features: capturing projection models, encoding bit rate, resolution, etc.

- Viewing behavior: content type, visual track, experience rating, etc.
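One possible way to organize the three groups of fields listed above is a single record per viewing session. The sketch below is only an assumption about how such a unified data set could be structured; the class names, field names, units, and example values are hypothetical and are not taken from any existing data set.

```python
from dataclasses import dataclass, asdict
from typing import List, Tuple


@dataclass
class ViewerInfo:
    sex: str
    age: int
    device_experience: str   # e.g. "novice" or "experienced" (assumed labels)
    visual_health: str


@dataclass
class VideoFeatures:
    projection: str              # capture/projection model, e.g. "equirectangular"
    bitrate_kbps: int            # encoding bit rate (unit is an assumption)
    resolution: Tuple[int, int]  # (width, height) in pixels


@dataclass
class ViewingBehavior:
    content_type: str
    visual_track: List[Tuple[float, float, float]]  # (time_s, yaw_deg, pitch_deg) samples
    experience_rating: int                          # e.g. a 1-5 opinion score


@dataclass
class SessionRecord:
    viewer: ViewerInfo
    video: VideoFeatures
    behavior: ViewingBehavior


if __name__ == "__main__":
    record = SessionRecord(
        viewer=ViewerInfo("F", 30, "novice", "normal"),
        video=VideoFeatures("equirectangular", 20000, (3840, 1920)),
        behavior=ViewingBehavior("sports", [(0.0, 10.0, -5.0)], 4),
    )
    print(asdict(record))  # nested dict, ready to serialize
```

Keeping viewer, video, and behavior in separate sub-records makes it easier to merge data sets that cover only some of the three groups.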

4.3. The Evolution of 6-DoF VR Applications

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D | 2-dimensional |
| 3D | 3-dimensional |
| 3C | Communication, computation, and caching |
| 3G | The 3rd generation of mobile communication technology standards |
| 4G | The 4th generation of mobile communication technology standards |
| 4K | 4K resolution |
| 5G | 5th generation mobile networks or 5th generation wireless systems |
| 6G | 6th generation mobile networks or 6th generation wireless systems |
| AC | Access control |
| AP | Access point |
| AR | Augmented reality |
| CBR | Constant bit rate |
| DASH | Dynamic adaptive streaming over HTTP |
| D2D | Device-to-device |
| DRL | Deep reinforcement learning |
| DoF | Degree of Freedom |
| GPU | Graphics Processing Unit |
| HEVC | High-Efficiency Video Coding |
| HMD | Head-Mounted Display |
| HTTP | HyperText Transfer Protocol |
| JVET | Joint Video Experts Team |
| ICN | Information-Centric Networking |
| ISP | Internet Service Provider |
| LR | Logistic Regression |
| LSTM | Long Short-Term Memory |
| LTE | Long-Term Evolution |
| MAC | Medium Access Control |
| MEC | Mobile Edge Computing |
| ML | Machine Learning |
| MPEG | Moving Picture Experts Group |
| MTP | Motion-To-Photons |
| NDN | Named Data Networking |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| QVGA | Quarter VGA |
| OHP | OctaHedral mapping Projection |
| OMAF | Omnidirectional Media Application Format |
| RNN | Recurrent Neural Network |
| ROI | Region of Interest |
| RR | Ridge Regression |
| SSP | Segmented Sphere Projection |
| TSP | Truncated Square Pyramid projection |
| VBR | Variable Bit Rate |
| VOR | Vestibulo-ocular reflex |
| VP | Viewport Prediction |
| VR | Virtual Reality |
| VVC | Versatile Video Coding |

References

- Peltonen, E.; Bennis, M.; Capobianco, M.; Debbah, M.; Ding, A.; Gil-Castineira, F.; Jurmu, M.; Karvonen, T.; Kelanti, M.; Kliks, A.; et al. 6g white paper on edge intelligence. arXiv 2020, arXiv:2004.14850.

- Knightly, E. Scaling WI-FI for next generation transformative applications. In Proceedings of the Keynote Presentation, IEEE INFOCOM, Atlanta, GA, USA, 1–4 May 2017.

- Begole, B. Why the Internet Pipes Will Burst When Virtual Reality Takes off. 2016. Available online: https://www.forbes.com/sites/valleyvoices/2016/02/09/why-the-internet-pipes-will-burst-if-virtual-reality-takes-off/?sh=5ae310d63858 (accessed on 12 January 2021).

- Sharma, S.K.; Woungang, I.; Anpalagan, A.; Chatzinotas, S. Toward tactile internet in beyond 5g era: Recent advances, current issues, and future directions. IEEE Access 2020, 8, 56948–56991.

- 3GPP. System Architecture for the 5G System, version 1.2.0:TS23.501[S]; Sophia Antipolis: Valbonne, France, 2017.

- Xu, M.; Li, C.; Zhang, S.; Callet, P.L. State-of-the-art in 360 video/image processing: Perception, assessment and compression. IEEE J. Sel. Top. Signal Process. 2020, 14, 5–26.

- Hu, F.; Deng, Y.; Saad, W.; Bennis, M.; Aghvami, A.H. Cellular-connected wireless virtual reality: Requirements, challenges, and solutions. IEEE Commun. Mag. 2020, 58, 105–111.

- Fan, C.; Lo, W.; Pai, Y.; Hsu, C. A survey on 360 video streaming: Acquisition, transmission, and display. ACM Comput. Surv. (CSUR) 2019, 52, 1–36.

- He, D.; Westphal, C.; Garcia-Luna-Aceves, J.J. Network support for ar/vr and immersive video application: A survey. ICETE 2018, 1, 525–535.

- Duanmu, F.; He, Y.; Xiu, X.; Hanhart, P.; Ye, Y.; Wang, Y. Hybrid cubemap projection format for 360-degree video coding. In Proceedings of the 2018 Data Compression Conference, Snowbird, UT, USA, 27–30 March 2018; p. 404.

- Lin, H.C.; Li, C.Y.; Lin, J.L.; Chang, S.K.; Ju, C.C. An Efficient Compact Layout for Octahedron Format. 2016. Available online: http://phenix.it-sudparis.eu/jvet/doc_end_user/current_document.php?id=2943 (accessed on 12 January 2021).

- der Auwera, G.V.; Coban, H.M.; Karczewicz, M. Truncated Square Pyramid Projection (tsp) for 360 Video. 2016. Available online: http://phenix.it-sudparis.eu/jvet/doc_end_user/current_document.php?id=2767 (accessed on 12 January 2021).

- Zhou, M. A Study on Compression Efficiency of Icosahedral Projection. 2016. Available online: http://phenix.it-sudparis.eu/jvet/doc_end_user/current_document.php?id=2719 (accessed on 12 January 2021).

- Zhang, C.; Lu, Y.; Li, J.; Wen, Z. Segmented Sphere Projection (ssp) for 360-Degree Video Content. 2016. Available online: http://phenix.it-sudparis.eu/jvet/doc_end_user/current_document.php?id=2726 (accessed on 12 January 2021).

- Kuzyakov, E.; Pio, D. Under the Hood: Building 360 Video; Facebook Engineering: Menlo Park, CA, USA, 2015.

- Kuzyakov, E.; Pio, D. Next-Generation Video Encoding Techniques for 360 Video and vr. Facebook. 2016. Available online: https://engineering.fb.com/2016/01/21/virtual-reality/next-generation-video-encoding-techniques-for-360-video-and-vr/ (accessed on 12 January 2021).

- Domanski, M.; Stankiewicz, O.; Wegner, K.; Grajek, T. Immersive visual media—mpeg-i: 360 video, virtual navigation and beyond. In Proceedings of the 2017 International Conference on Systems, Signals and Image Processing (IWSSIP), Poznan, Poland, 22–24 May 2017; pp. 1–9.

- Sullivan, G.; Ohm, J. Meeting report of the 13th meeting of the joint collaborative team on video coding (jct-vc). In ITU-T/ISO/IEC Joint Collaborative Team on Video Coding; ITU: Incheon, Korea, 2013.

- MPEG. MPEG Strategic Standardisation Roadmap. In Proceedings of the 119th MPEG Meeting, Turin, Italy, 17–21 July 2017.

- Monnier, R.; van Brandenburg, R.; Koenen, R. Streaming uhd-quality vr at realistic bitrates: Mission impossible. In Proceedings of the 2017 NAB Broadcast Engineering and Information Technology Conference (BEITC), Las Vegas, NV, USA, 22–27 April 2017.

- Nguyen, D.V.; Tran, H.T.T.; Thang, T.C. A client-based adaptation framework for 360-degree video streaming. J. Vis. Commun. Image Represent. 2019, 59, 231–243.

- Xie, L.; Xu, Z.; Ban, Y.; Zhang, X.; Guo, Z. 360probdash: Improving qoe of 360 video streaming using tile-based http adaptive streaming. In Proceedings of the 25th ACM international conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 315–323.

- Huang, W.; Ding, L.; Wei, H.; Hwang, J.; Xu, Y.; Zhang, W. Qoe-oriented resource allocation for 360-degree video transmission over heterogeneous networks. arXiv 2018, arXiv:1803.07789.

- Sreedhar, K.K.; Aminlou, A.; Hannuksela, M.M.; Gabbouj, M. Viewport-adaptive encoding and streaming of 360-degree video for virtual reality applications. In Proceedings of the 2016 IEEE International Symposium on Multimedia (ISM), San Jose, CA, USA, 11–13 December 2016; pp. 583–586.

- Corbillon, X.; Simon, G.; Devlic, A.; Chakareski, J. Viewport-adaptive navigable 360-degree video delivery. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–7.

- Gaddam, V.R.; Riegler, M.; Eg, R.; Griwodz, C.; Halvorsen, P. Tiling in interactive panoramic video: Approaches and evaluation. IEEE Trans. Multimed. 2016, 18, 1819–1831.

- Graf, M.; Timmerer, C.; Mueller, C. Towards bandwidth efficient adaptive streaming of omnidirectional video over http: Design, implementation, and evaluation. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 261–271.

- Brandenburg, R.; van Koenen, R.; Sztykman, D. CDN Optimization for vr Streaming. 2017. Available online: https://www.ibc.org/cdn-optimisation-for-vr-streaming-/2457.article (accessed on 12 January 2021).

- Zare, A.; Aminlou, A.; Hannuksela, M.M.; Gabbouj, M. Hevc-compliant tile-based streaming of panoramic video for virtual reality applications. In Proceedings of the 24th ACM international conference on Multimedia, Amsterdam, The Netherlands, 23–27 October 2016; pp. 601–605.

- Petrangeli, S.; Swaminathan, V.; Hosseini, M.; Turck, F.D. An http/2-based adaptive streaming framework for 360 virtual reality videos. In Proceedings of the 25th ACM international conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 306–314.

- Hosseini, M.; Swaminathan, V. Adaptive 360 vr video streaming: Divide and conquer. In Proceedings of the 2016 IEEE International Symposium on Multimedia (ISM), San Jose, CA, USA, 11–13 December 2016; pp. 107–110.

- Corbillon, X.; Devlic, A.; Simon, G.; Chakareski, J. Optimal set of 360-degree videos for viewport-adaptive streaming. In Proceedings of the 25th ACM international conference on Multimedia, New York, NY, USA, 23–27 October 2017; pp. 943–951.

- Duanmu, F.; Kurdoglu, E.; Hosseini, S.A.; Liu, Y.; Wang, Y. Prioritized buffer control in two-tier 360 video streaming. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017; pp. 13–18.

- Xie, X.; Zhang, X. Poi360: Panoramic mobile video telephony over lte cellular networks. In Proceedings of the 13th International Conference on emerging Networking Experiments and Technologies, Incheon, Korea, 12–15 December 2017; pp. 336–349.

- Qian, F.; Ji, L.; Han, B.; Gopalakrishnan, V. Optimizing 360 video delivery over cellular networks. In Proceedings of the 5th Workshop on All Things Cellular: Operations, Applications and Challenges, New York, NY, USA, 3–7 October 2016; pp. 1–6.

- Nasrabadi, A.T.; Mahzari, A.; Beshay, J.D.; Prakash, R. Adaptive 360-degree video streaming using scalable video coding. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1689–1697.

- Qian, F.; Han, B.; Xiao, Q.; Gopalakrishnan, V. Flare: Practical viewport-adaptive 360-degree video streaming for mobile devices. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018; pp. 99–114.

- Xie, L.; Zhang, X.; Guo, Z. Cls: A cross-user learning based system for improving qoe in 360-degree video adaptive streaming. In Proceedings of the 26th ACM international conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 564–572.

- Ban, Y.; Xie, L.; Xu, Z.; Zhang, X.; Guo, Z.; Wang, Y. Cub360: Exploiting cross-users behaviors for viewport prediction in 360 video adaptive streaming. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

- Fan, C.-L.; Lee, J.; Lo, W.-C.; Huang, C.-Y.; Chen, K.-T.; Hsu, C.-H. Fixation prediction for 360 video streaming in head-mounted virtual reality. In Proceedings of the 27th Workshop on Network and Operating Systems Support for Digital Audio and Video, Taipei, Taiwan, 20–23 June 2017; pp. 67–72.

- Guan, Y.; Zheng, C.; Zhang, X.; Guo, Z.; Jiang, J. Pano: Optimizing 360 video streaming with a better understanding of quality perception. In Proceedings of the ACM Special Interest Group on Data Communication, Beijing, China, 19–23 August 2019; pp. 394–407.

- Zhou, Y.; Sun, B.; Qi, Y.; Peng, Y.; Liu, L.; Zhang, Z.; Liu, Y.; Liu, D.; Li, Z.; Tian, L. Mobile AR/VR in 5G based on convergence of communication and computing. Telecommun. Sci. 2018, 34, 19–33.

- Yanli, Q.; Yiqing, Z.; Ling, L.; Lin, T.; Jinglin, S. Mec coordinated future 5g mobile wireless networks. J. Comput. Res. Dev. 2018, 55, 478.

- Dai, J.; Liu, D. An mec-enabled wireless vr transmission system with view synthesis-based caching. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference Workshop (WCNCW), Marrakech, Morocco, 15–18 April 2019; pp. 1–7.

- Dai, J.; Zhang, Z.; Mao, S.; Liu, D. A view synthesis-based 360° vr caching system over mec-enabled c-ran. IEEE Trans. Circuits Syst. Video Technol. 2019, 10, 3843–3855.

- Dang, T.; Peng, M. Joint radio communication, caching, and computing design for mobile virtual reality delivery in fog radio access networks. IEEE J. Sel. Areas Commun. 2019, 37, 1594–1607.

- Chen, M.; Saad, W.; Yin, C. Virtual reality over wireless networks: Quality-of-service model and learning-based resource management. IEEE Trans. Commun. 2018, 66, 5621–5635.

- Doppler, K.; Torkildson, E.; Bouwen, J. On wireless networks for the era of mixed reality. In Proceedings of the 2017 European Conference on Networks and Communications (EuCNC), Oulu, Finland, 12–15 June 2017; pp. 1–5.

- Ju, R.; He, J.; Sun, F.; Li, J.; Li, F.; Zhu, J.; Han, L. Ultra wide view based panoramic vr streaming. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017; pp. 19–23.

- Xylomenos, G.; Ververidis, C.N.; Siris, V.A.; Fotiou, N.; Tsilopoulos, C.; Vasilakos, X.; Katsaros, K.V.; Polyzos, G.C. A survey of information-centric networking research. IEEE Commun. Surv. Tutor. 2013, 16, 1024–1049.

- Zhang, L.; Afanasyev, A.; Burke, J.; Jacobson, V.; Claffy, K.C.; Crowley, P.; Papadopoulos, C.; Wang, L.; Zhang, B. Named data networking. ACM SIGCOMM Comput. Commun. Rev. 2014, 44, 66–73.

- Zhang, L.; Amin, S.O.; Westphal, C. Vr video conferencing over named data networks. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017; pp. 7–12.

- Boos, K.; Chu, D.; Cuervo, E. Flashback: Immersive virtual reality on mobile devices via rendering memoization. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services, Singapore, 26–30 June 2016; pp. 291–304.

- Lai, Z.; Hu, Y.C.; Cui, Y.; Sun, L.; Dai, N.; Lee, H.S. Furion: Engineering high-quality immersive virtual reality on today’s mobile devices. IEEE Trans. Mob. Comput. 2020, 7, 1586–1602.

- Tan, Z.; Li, Y.; Li, Q.; Zhang, Z.; Li, Z.; Lu, S. Supporting mobile vr in lte networks: How close are we? Proc. ACM Meas. Anal. Comput. Syst. 2018, 2, 1–31.

- Juniper. Virtual Reality Markets: Hardware, Content & Accessories 2017–2022.[J/OL]. 2017. Available online: https://www.juniperresearch.com/researchstore/enabling-technologies/virtual-reality/hardware-content-accessories (accessed on 12 January 2021).

- Liu, H.; Chen, Z.; Qian, L. The three primary colors of mobile systems. IEEE Commun. Mag. 2016, 54, 15–21.

- Liu, Y.; Liu, J.; Argyriou, A.; Ci, S. Mec-assisted panoramic vr video streaming over millimeter wave mobile networks. IEEE Trans. Multimed. 2018, 21, 1302–1316.

- Yang, X.; Chen, Z.; Li, K.; Sun, Y.; Liu, N.; Xie, W.; Zhao, Y. Communication-constrained mobile edge computing systems for wireless virtual reality: Scheduling and tradeoff. IEEE Access 2018, 6, 16665–16677.

- Perfecto, C.; Elbamby, M.S.; Ser, J.D.; Bennis, M. Taming the latency in multi-user vr 360°: A qoe-aware deep learning-aided multicast framework. IEEE Trans. Commun. 2020, 68, 2491–2508.

- Elbamby, M.S.; Perfecto, C.; Bennis, M.; Doppler, K. Edge computing meets millimeter-wave enabled vr: Paving the way to cutting the cord. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018; pp. 1–6.

- Bastug, E.; Bennis, M.; Kountouris, M.; Debbah, M. Cache-enabled small cell networks: Modeling and tradeoffs. EURASIP J. Wirel. Commun. Netw. 2015, 1, 1–11.

- Erman, J.; Gerber, A.; Hajiaghayi, M.; Pei, D.; Sen, S.; Spatscheck, O. To cache or not to cache: The 3g case. IEEE Internet Comput. 2011, 15, 27–34.

- Ahlehagh, H.; Dey, S. Video caching in radio access network: Impact on delay and capacity. In Proceedings of the 2012 IEEE Wireless Communications and Networking Conference (WCNC), Paris, France, 1–4 April 2012; pp. 2276–2281.

- Karamchandani, N.; Niesen, U.; Maddah-Ali, M.A.; Diggavi, S.N. Hierarchical coded caching. IEEE Trans. Inf. Theory 2016, 62, 3212–3229.

- Maddah-Ali, M.A.; Niesen, U. Fundamental limits of caching. IEEE Trans. Inf. Theory 2014, 60, 2856–2867.

- Chakareski, J. Vr/ar immersive communication: Caching, edge computing, and transmission trade-offs. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017; pp. 36–41.

- Chen, M.; Saad, W.; Yin, C. Echo-liquid state deep learning for 360 content transmission and caching in wireless vr networks with cellular-connected uavs. IEEE Trans. Commun. 2019, 67, 6386–6400.

- Sukhmani, S.; Sadeghi, M.; Erol-Kantarci, M.; Saddik, A.E. Edge caching and computing in 5g for mobile ar/vr and tactile internet. IEEE Multimed. 2018, 26, 21–30.

- Chakareski, J. Uplink scheduling of visual sensors: When view popularity matters. IEEE Trans. Commun. 2014, 63, 510–519.

- Vasudevan, R.; Zhou, Z.; Kurillo, G.; Lobaton, E.; Bajcsy, R.; Nahrstedt, K. Real-time stereo-vision system for 3d teleimmersive collaboration. In Proceedings of the 2010 IEEE International Conference on Multimedia and Expo, Singapore, 19–23 July 2010; pp. 1208–1213.

- Hosseini, M.; Kurillo, G. Coordinated bandwidth adaptations for distributed 3d tele-immersive systems. In Proceedings of the 7th ACM International Workshop on Massively Multiuser Virtual Environments, Portland, OR, USA, 18–20 March 2015; pp. 13–18.

- Cheung, G.; Ortega, A.; Cheung, N. Interactive streaming of stored multiview video using redundant frame structures. IEEE Trans. Image Process. 2010, 20, 744–761.

- Chakareski, J.; Velisavljevic, V.; Stankovic, V. User-action-driven view and rate scalable multiview video coding. IEEE Trans. Image Process. 2013, 22, 3473–3484.

- Chakareski, J. Wireless streaming of interactive multi-view video via network compression and path diversity. IEEE Trans. Commun. 2014, 62, 1350–1357.

- Blasco, P.; Gunduz, D. Learning-based optimization of cache content in a small cell base station. In Proceedings of the 2014 IEEE International Conference on Communications (ICC), Sydney, Australia, 10–14 June 2014; pp. 1897–1903.

- Martello, S. Knapsack problems: Algorithms and computer implementations. Wiley-Intersci. Ser. Discret. Math. Optim. 1990, 6, 513.

- Shanmugam, K.; Golrezaei, N.; Dimakis, A.G.; Molisch, A.F.; Caire, G. Femtocaching: Wireless video content delivery through distributed caching helpers. arXiv 2011, arXiv:1109.4179.

- Borji, A.; Cheng, M.M.; Hou, Q.; Jiang, H.; Li, J. Salient object detection: A survey. Comput. Vis. Media 2019, 2, 117–150.

- Alshawi, T.; Long, Z.; AlRegib, G. Understanding spatial correlation in eye-fixation maps for visual attention in videos. In Proceedings of the 2016 IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 July 2016; pp. 1–6.

- Chaabouni, S.; Benois-Pineau, J.; Amar, C.B. Transfer learning with deep networks for saliency prediction in natural video. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 1604–1608.

- Nguyen, T.V.; Xu, M.; Gao, G.; Kankanhalli, M.; Tian, Q.; Yan, S. Static saliency vs. dynamic saliency: A comparative study. In Proceedings of the 21st ACM international conference on Multimedia, Barcelona, Spain, 21–25 October 2013; pp. 987–996.

- Hashim, F.; Nasimi, M. Qoe-oriented cross-layer downlink scheduling for heterogeneous traffics in lte networks. In Proceedings of the IEEE Malaysia International Conference on Communications, Kuala Lumpur, Malaysia, 26–28 November 2013.

- Rubin, I.; Colonnese, S.; Cuomo, F.; Calanca, F.; Melodia, T. Mobile http-Based Streaming Using Flexible Lte Base Station Control. In Proceedings of the 16th IEEE International Symposium on A World of Wireless, Mobile and Multimedia Networks, Boston, MA, USA, 14–17 June 2015.

- Zhang, Y.; Guan, Y.; Bian, K.; Liu, Y.; Tuo, H.; Song, L.; Li, X. Epass360: Qoe-aware 360-degree video streaming over mobile devices. IEEE Trans. Mob. Comput. (Early Access) 2020, 1, 1.

- Filho, R.I.T.D.C.; Luizelli, M.C.; Petrangeli, S.; Vega, M.T.; der Hooft, J.V.; Wauters, T.; Turck, F.D.; Gaspary, L.P. Dissecting the performance of vr video streaming through the vr-exp experimentation platform. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2019, 15, 1–23.

- Yu, L.; Tillo, T.; Xiao, J. Qoe-driven dynamic adaptive video streaming strategy with future information. IEEE Trans. Broadcast. 2017, 63, 523–534.

- Chiariotti, F.; D’Aronco, S.; Toni, L.; Frossard, P. Online learning adaptation strategy for dash clients. In Proceedings of the 7th International Conference on Multimedia Systems, Klagenfurt, Austria, 10–13 May 2016; pp. 1–12.

- Li, C.; Xu, M.; Du, X.; Wang, Z. Bridge the gap between vqa and human behavior on omnidirectional video: A large-scale dataset and a deep learning model. In Proceedings of the 26th ACM international conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 932–940.

- Vega, M.T.; Mocanu, D.C.; Barresi, R.; Fortino, G.; Liotta, A. Cognitive streaming on android devices. In Proceedings of the 2015 IFIP/IEEE International Symposium on Integrated Network Management (IM), Ottawa, ON, Canada, 11–15 May 2015; pp. 1316–1321.

- Wang, Y.; Xu, J.; Jiang, L. Challenges of system-level simulations and performance evaluation for 5g wireless networks. IEEE Access 2014, 2, 1553–1561.

- Fei, Z.; Xing, C.; Li, N. Qoe-driven resource allocation for mobile ip services in wireless network. Sci. China Inf. Sci. 2015, 58, 1–10.

- Agiwal, M.; Roy, A.; Saxena, N. Next generation 5g wireless networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2016, 18, 1617–1655.

- Wang, F.; Fei, Z.; Wang, J.; Liu, Y.; Wu, Z. Has qoe prediction based on dynamic video features with data mining in lte network. Sci. China Inf. Sci. 2017, 60, 042404.

- Wu, C.; Tan, Z.; Wang, Z.; Yang, S. A dataset for exploring user behaviors in vr spherical video streaming. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 193–198.

- Lo, W.C.; Fan, C.L.; Lee, J.; Huang, C.Y.; Chen, K.T.; Hsu, C.H. 360 video viewing dataset in head-mounted virtual reality. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 211–216.

- Corbillon, X.; Simone, F.D.; Simon, G.; Frossard, P. Dynamic adaptive streaming for multi-viewpoint omnidirectional videos. In Proceedings of the 9th ACM Multimedia Systems Conference, Amsterdam, The Netherlands, 12–15 June 2018; pp. 237–249.

- Upenik, E.; Řeřábek, M.; Ebrahimi, T. Testbed for subjective evaluation of omnidirectional visual content. In Proceedings of the 2016 Picture Coding Symposium (PCS), Nuremberg, Germany, 4–7 December 2016; pp. 1–5.

- Abreu, A.D.; Ozcinar, C.; Smolic, A. Look around you: Saliency maps for omnidirectional images in vr applications. In Proceedings of the 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX), Erfurt, Germany, 31 May–2 June 2017; pp. 1–6.

- Oculus Rift VR. Available online: https://www.oculus.com/rift/ (accessed on 12 January 2021).

- Google Daydream. Available online: https://vr.google.com/ (accessed on 12 January 2021).

- Samsung Gear VR. Available online: http://www.samsung.com/global/galaxy/gear-vr (accessed on 12 January 2021).

- HTC Vive VR. Available online: https://www.vive.com/ (accessed on 12 January 2021).

- Jeong, J.; Jang, D.; Son, J.; Ryu, E. Bitrate efficient 3dof+ 360 video view synthesis for immersive vr video streaming. In Proceedings of the 2018 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea, 17–19 October 2018; pp. 581–586.

- Jeong, J.; Jang, D.; Son, J.; Ryu, E. 3dof+ 360 video location-based asymmetric down-sampling for view synthesis to immersive vr video streaming. Sensors 2018, 18, 3148.

| Reference | Method | Scheme |
|---|---|---|
| [85] | RNN-LSTM | Predicted Viewpoint/Predicted Bandwidth |
| [37] | LR-RR | Predicted Viewpoint/Predicted Bandwidth |
| [86] | RL model | Improved Adaptive VR Streaming |
| [87] | MDP-RL | Improved Variable bitrate (VBR) |
| [88] | Post-decision state | Improved constant bitrate (CBR) |
| [89] | DRL model | Improved video quality |
| [90] | Q-Learning RL | Improved CBR |
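To make the regression-based viewpoint prediction listed in the table more concrete, the following is a minimal sketch under stated assumptions. It fits a straight line to recent head-angle samples and extrapolates it a short time ahead, with an optional ridge penalty on the slope. The function name `predict_angle`, its parameters, and the sample values are hypothetical; this is not the predictor used in any of the cited systems, and angle wraparound at ±180 degrees is ignored.

```python
def predict_angle(times, angles, horizon, ridge=0.0):
    """Fit angle ~ a + b*t on recent samples and extrapolate `horizon` seconds ahead.

    times   -- sample timestamps in seconds, oldest first
    angles  -- head angle (e.g. yaw in degrees) at each timestamp
    horizon -- how far beyond the last sample to predict, in seconds
    ridge   -- penalty on the slope; 0.0 gives ordinary least squares
    """
    n = len(times)
    if n < 2 or n != len(angles):
        raise ValueError("need at least two (time, angle) samples")
    t_mean = sum(times) / n
    a_mean = sum(angles) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (a - a_mean) for t, a in zip(times, angles))
    if sxx + ridge == 0:
        raise ValueError("timestamps must not all be identical")
    slope = sxy / (sxx + ridge)      # ridge > 0 shrinks the slope toward zero
    intercept = a_mean - slope * t_mean
    return intercept + slope * (times[-1] + horizon)


if __name__ == "__main__":
    ts = [0.0, 0.1, 0.2, 0.3]
    yaw = [10.0, 12.0, 14.0, 16.0]   # head turning at a steady rate
    print(predict_angle(ts, yaw, horizon=0.5))              # plain linear fit
    print(predict_angle(ts, yaw, horizon=0.5, ridge=0.05))  # more conservative estimate
```

The ridge term trades responsiveness for stability: with noisy head-motion samples, a shrunken slope avoids overshooting the predicted viewport, at the cost of lagging behind fast head turns.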

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ruan, J.; Xie, D. Networked VR: State of the Art, Solutions, and Challenges. Electronics 2021, 10, 166. https://doi.org/10.3390/electronics10020166
