Localization of Multi-Class On-Road and Aerial Targets Using mmWave FMCW Radar

Abstract

1. Introduction

- We propose angle-of-arrival (AoA) estimation of multi-class targets from mmWave FMCW radar measurements in a practical outdoor setting.

- The proposed method requires only 1 Tx antenna and 1 Rx antenna to localize multi-class targets.

- The proposed localization method using mmWave FMCW radar achieves a large field of view (FoV) in both the azimuth and elevation directions.

- The proposed method estimates the AoA of both on-road and aerial targets, using morphological operators on range-angle maps.

- The proposed method improves the visual representation of multi-class targets, using range–angle images.

2. System Description

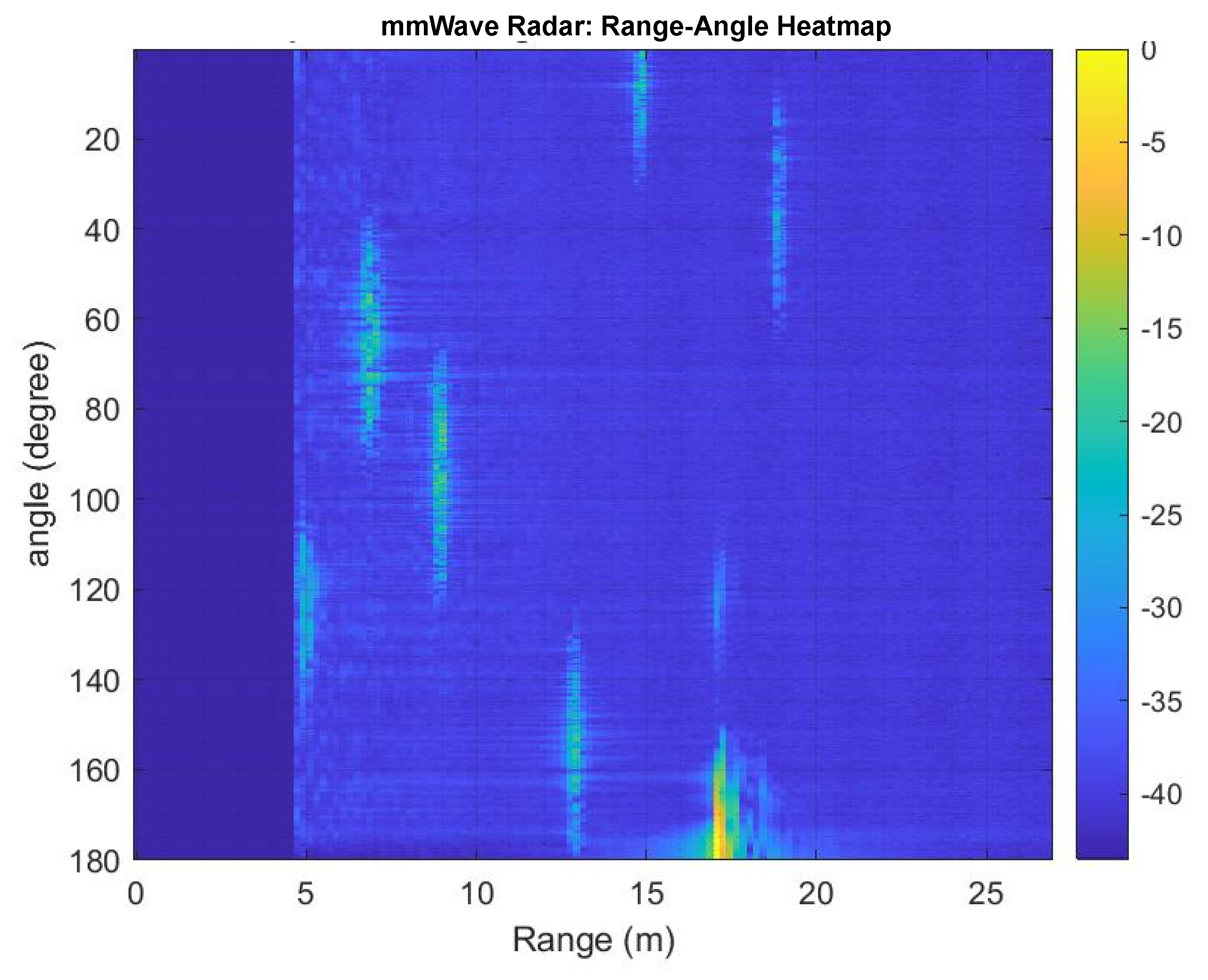

3. Outdoor Measurements and Pre-Processing

4. Range and Angle Estimation Using Morphological Operators on Range–Angle Maps

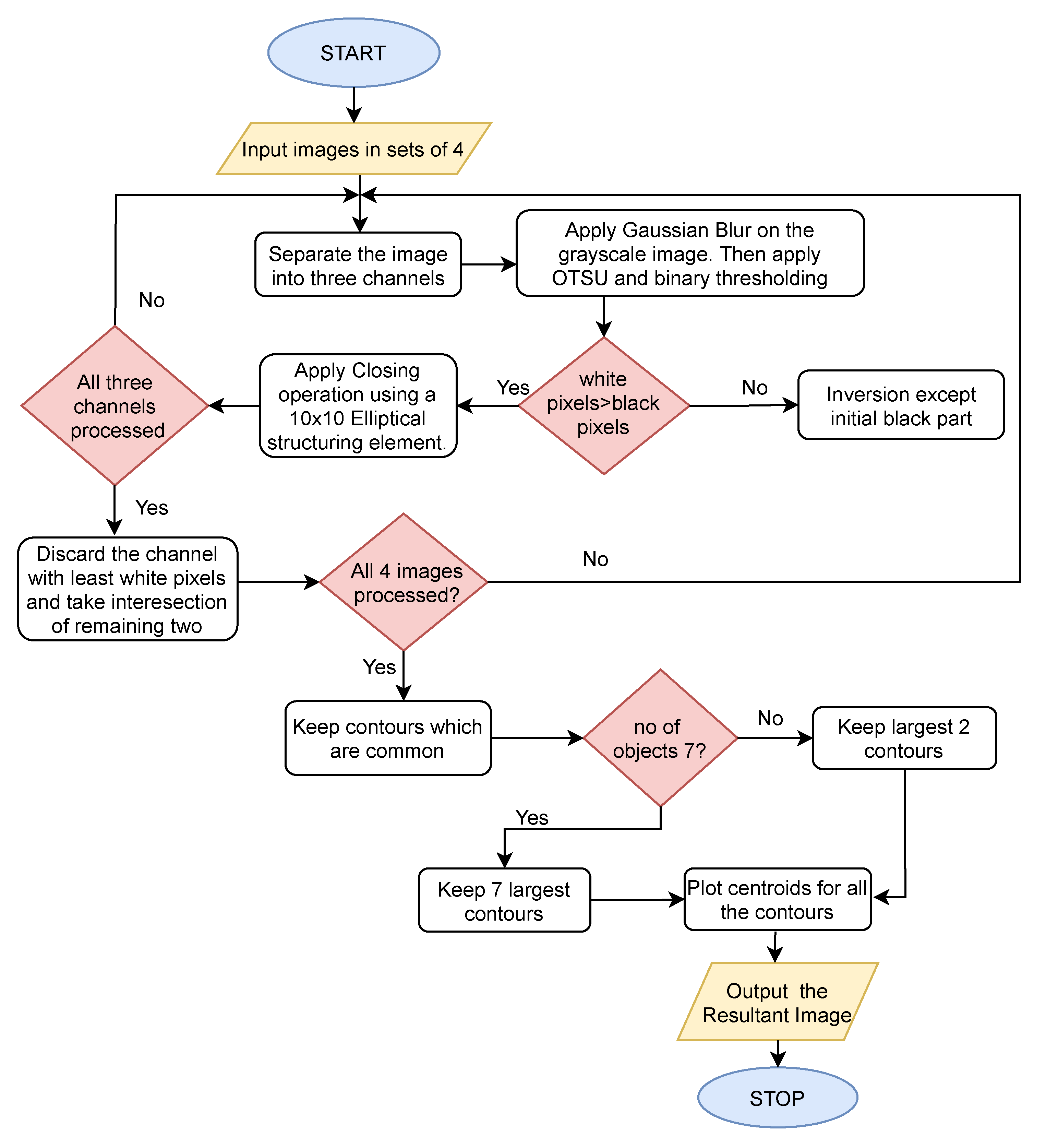

- Because four receivers were used here, the data set for each case was divided into four sets, one per receiver. The resulting images were then processed one by one. However, only 1 Tx and 1 Rx are required for angle estimation using the proposed method.

- The scale was cropped from the image using Otsu thresholding, and objects were displayed based on the most definite contour, i.e., the one with the largest area.

- Each image was then split into its three channels (B, G, and R), which were stored in a list and converted into grayscale images. The individual channels were then processed separately.

- Gaussian blurring was applied to smooth the image and remove noise, followed by Otsu thresholding to binarize it.

- After obtaining the binary image, it was inverted where needed, based on the number of white and black pixels. Morphological closing with a 10 × 10 elliptical structuring element was then applied to obtain proper contours. Any regions with an area smaller than 150 px² were removed.

- The best two of the three channels were then chosen, and their intersection was used to generate the final processed image. Later, only contours with a common area in at least three of the four images (one per receiver) were kept, and the best contours based on the number of objects were chosen.

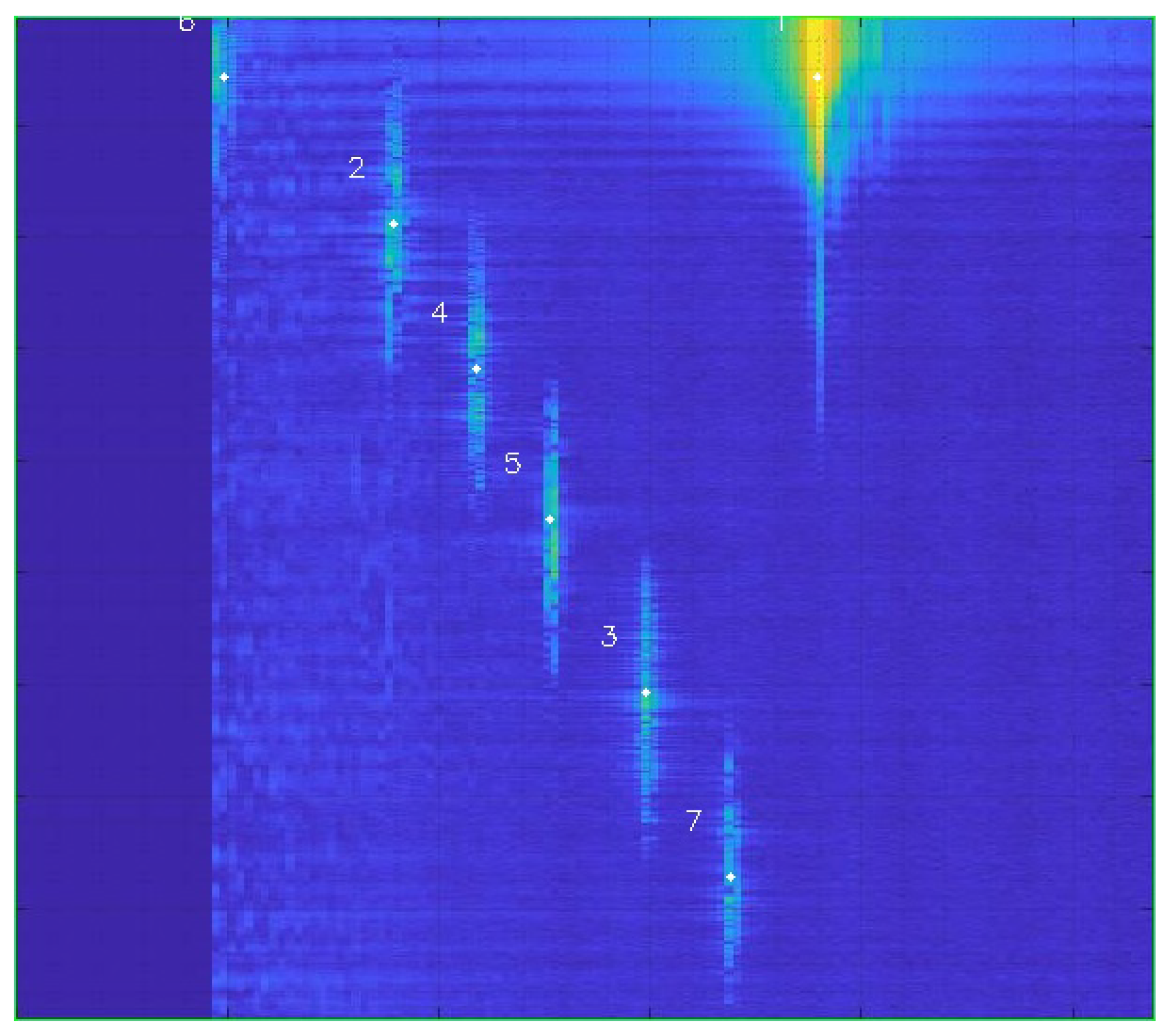

- Finally, the centroids of the contours were computed using the concept of moments and plotted.
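The mask bookkeeping in the steps above (conditional inversion, small-region removal, channel intersection, and the three-of-four receiver vote) can be sketched in plain Python. This is a simplified illustration, not the authors' implementation: it works pixel-wise on binary masks stored as nested lists rather than on contours, all function names are hypothetical, and two choices are assumptions not stated in the text: that a mask is inverted when white pixels are the majority, and that regions are 4-connected.

```python
def invert_if_mostly_white(mask):
    """Invert a 0/1 mask when white pixels dominate (assumed rule)."""
    flat = [p for row in mask for p in row]
    if sum(flat) * 2 > len(flat):
        return [[1 - p for p in row] for row in mask]
    return mask


def remove_small_regions(mask, min_area):
    """Keep only 4-connected foreground regions with at least min_area pixels."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    seen = [[False] * w for _ in range(h)]
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Flood fill one region with an explicit stack.
            stack, region = [(y0, x0)], []
            seen[y0][x0] = True
            while stack:
                y, x = stack.pop()
                region.append((y, x))
                for yy, xx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= yy < h and 0 <= xx < w and mask[yy][xx] and not seen[yy][xx]:
                        seen[yy][xx] = True
                        stack.append((yy, xx))
            if len(region) >= min_area:
                for y, x in region:
                    out[y][x] = 1
    return out


def intersect(mask_a, mask_b):
    """Pixel-wise AND of two masks, as used for the two best channels."""
    return [[a & b for a, b in zip(ra, rb)] for ra, rb in zip(mask_a, mask_b)]


def vote(masks, min_votes):
    """Keep pixels that are foreground in at least min_votes of the masks."""
    h, w = len(masks[0]), len(masks[0][0])
    return [[int(sum(m[y][x] for m in masks) >= min_votes) for x in range(w)]
            for y in range(h)]
```

With four per-receiver masks, `vote(masks, 3)` approximates the "at least three of the four images" rule, and `remove_small_regions(mask, 150)` corresponds to the 150 px² area filter.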

4.1. Otsu Thresholding
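Otsu thresholding, as used in the binarization step of the pipeline, picks the gray level that maximizes the between-class variance of the histogram. The following is a minimal dependency-free sketch for 8-bit pixel values given as a flat list; the function names are hypothetical and the code is an illustration of the technique, not the authors' implementation.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximizes between-class variance.

    Pixels with value <= t form one class, pixels > t the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in the lower class
    sum0 = 0    # sum of gray levels in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance up to the constant factor 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(pixels, threshold):
    """Map pixels above the threshold to 1 and the rest to 0."""
    return [1 if p > threshold else 0 for p in pixels]
```

For a clearly bimodal input such as `[10, 12, 11, 200, 205, 198]`, the threshold falls between the two clusters, so `binarize` separates them cleanly.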

4.2. Gaussian Blurring
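Gaussian blurring, the smoothing step applied before thresholding, convolves the image with a normalized Gaussian kernel. Because the 2D Gaussian is separable, it can be applied as a horizontal pass followed by a vertical pass. The sketch below is a plain-Python illustration under assumptions that are not from the paper: the kernel radius, the value of sigma, and edge replication at the borders are all chosen for the example, and the function names are hypothetical.

```python
import math


def gaussian_kernel(radius, sigma):
    """Return a normalized 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image, kernel):
    """Convolve every row with the kernel, replicating edge pixels."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - radius, 0), width - 1)
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(image):
    """Swap rows and columns of a nested-list image."""
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, radius=2, sigma=1.0):
    """Separable Gaussian blur: horizontal pass, then vertical pass."""
    kernel = gaussian_kernel(radius, sigma)
    horizontal = blur_rows(image, kernel)
    vertical = blur_rows(transpose(horizontal), kernel)
    return transpose(vertical)
```

A constant image is left unchanged by this filter, while an isolated bright pixel is spread symmetrically over its neighbourhood, which is what suppresses speckle-like noise before binarization.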

4.3. Morphological Operation-Closing
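Morphological closing is a dilation followed by an erosion with the same structuring element; it fills small holes and gaps without growing the overall shape. The sketch below illustrates this on a 0/1 mask stored as nested lists. It is not the authors' implementation: the pipeline uses a 10 × 10 elliptical element, whereas this example assumes a small odd-sized disk for symmetry, ignores out-of-bounds pixels at the borders, and uses hypothetical function names.

```python
def disk_offsets(radius):
    """Offsets (dy, dx) of a discrete disk-shaped structuring element."""
    return [(dy, dx)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)
            if dy * dy + dx * dx <= radius * radius]


def dilate(mask, offsets):
    """Set every pixel reachable from a foreground pixel via an offset."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for dy, dx in offsets:
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        out[yy][xx] = 1
    return out


def erode(mask, offsets):
    """Keep a pixel only if all in-bounds neighbours under the element are set."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            keep = True
            for dy, dx in offsets:
                yy, xx = y + dy, x + dx
                # Out-of-bounds neighbours are ignored (assumed border rule).
                if 0 <= yy < h and 0 <= xx < w and not mask[yy][xx]:
                    keep = False
                    break
            out[y][x] = int(keep)
    return out


def close(mask, offsets):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(mask, offsets), offsets)
```

Applied to a 3 × 3 blob with a one-pixel hole in its centre, `close(mask, disk_offsets(1))` returns the filled 3 × 3 blob, which is the behaviour that yields cleaner contours in the pipeline.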

4.4. Concept of Moments
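The centroid of a binary region follows from its raw image moments: with $m_{00} = \sum I(x, y)$, $m_{10} = \sum x\,I(x, y)$ and $m_{01} = \sum y\,I(x, y)$, the centroid is $(m_{10}/m_{00},\ m_{01}/m_{00})$. A minimal plain-Python sketch, with hypothetical function names and pixel coordinates measured from the top-left corner, might look like this:

```python
def image_moments(mask):
    """Return the raw moments (m00, m10, m01) of a 2D intensity mask."""
    m00 = m10 = m01 = 0.0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            m00 += value
            m10 += x * value
            m01 += y * value
    return m00, m10, m01


def centroid(mask):
    """Return the centroid (x, y) in pixel coordinates, or None if the mask is empty."""
    m00, m10, m01 = image_moments(mask)
    if m00 == 0:
        return None
    return m10 / m00, m01 / m00
```

The resulting pixel coordinates still have to be mapped to range and angle through the axis scaling of the range-angle map, which this sketch does not attempt.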

5. Results and Discussion

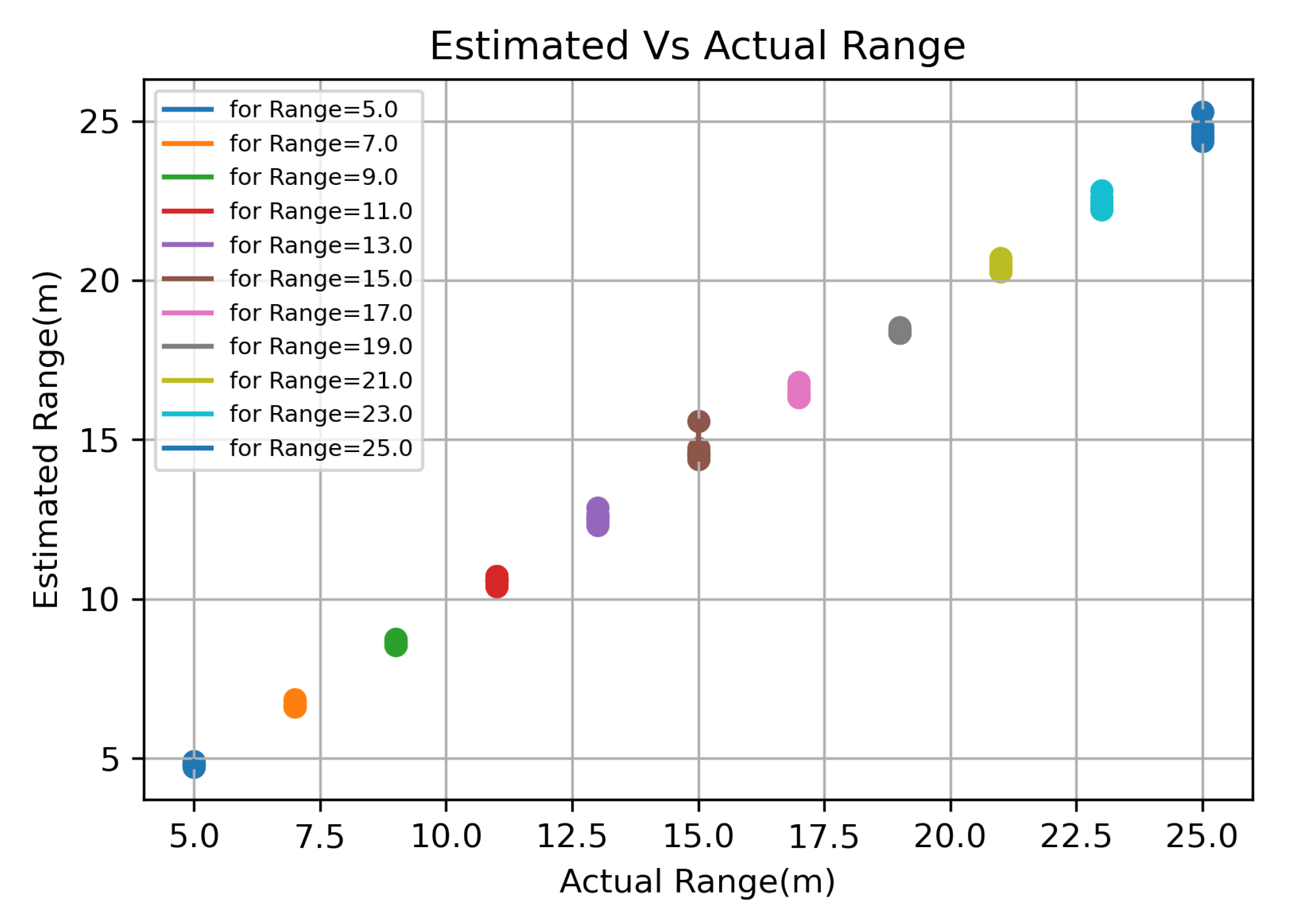

Statistical Analysis of Measurements

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gamba, J. Automotive Radar Applications. In Radar Signal Processing for Autonomous Driving; Springer: Singapore, 2020; pp. 123–142.

2. Cardillo, E.; Caddemi, A. A Review on Biomedical MIMO Radars for Vital Sign Detection and Human Localization. Electronics 2020, 9, 1497.

3. Cardillo, E.; Li, C.; Caddemi, A. Embedded heating, ventilation, and air-conditioning control systems: From traditional technologies toward radar advanced sensing. Rev. Sci. Instruments 2021, 92, 061501.

4. Pisa, S.; Pittella, E.; Piuzzi, E. A survey of radar systems for medical applications. IEEE Aerosp. Electron. Syst. Mag. 2016, 31, 64–81.

5. Yang, D.; Zhu, Z.; Zhang, J.; Liang, B. The Overview of Human Localization and Vital Sign Signal Measurement Using Handheld IR-UWB Through-Wall Radar. Sensors 2021, 21, 402.

6. Cardillo, E.; Caddemi, A. Radar Range-Breathing Separation for the Automatic Detection of Humans in Cluttered Environments. IEEE Sens. J. 2021, 21, 14043–14050.

7. Wang, G.; Gu, C.; Inoue, T.; Li, C. A Hybrid FMCW-Interferometry Radar for Indoor Precise Positioning and Versatile Life Activity Monitoring. IEEE Trans. Microw. Theory Tech. 2014, 62, 2812–2822.

8. Dogru, S.; Marques, L. Pursuing Drones with Drones Using Millimeter Wave Radar. IEEE Robot. Autom. Lett. 2020, 5, 4156–4163.

9. Rai, P.K.; Idsøe, H.; Yakkati, R.R.; Kumar, A.; Ali Khan, M.Z.; Yalavarthy, P.K.; Cenkeramaddi, L.R. Localization and Activity Classification of Unmanned Aerial Vehicle Using mmWave FMCW Radars. IEEE Sens. J. 2021, 21, 16043–16053.

10. Morris, P.J.B.; Hari, K.V.S. Detection and Localization of Unmanned Aircraft Systems Using Millimeter-Wave Automotive Radar Sensors. IEEE Sens. Lett. 2021, 5, 1–4.

11. Cidronali, A.; Passafiume, M.; Colantonio, P.; Collodi, G.; Florian, C.; Leuzzi, G.; Pirola, M.; Ramella, C.; Santarelli, A.; Traverso, P. System Level Analysis of Millimetre-wave GaN-based MIMO Radar for Detection of Micro Unmanned Aerial Vehicles. In 2019 PhotonIcs Electromagnetics Research Symposium - Spring (PIERS-Spring); Springer: Singapore, 2019; pp. 438–450.

12. Rojhani, N.; Passafiume, M.; Lucarelli, M.; Collodi, G.; Cidronali, A. Assessment of Compressive Sensing 2 × 2 MIMO Antenna Design for Millimeter-Wave Radar Image Enhancement. Electronics 2020, 9, 624.

13. Bhatia, J.; Dayal, A.; Jha, A.; Vishvakarma, S.K.; Joshi, S.; Srinivas, M.B.; Yalavarthy, P.K.; Kumar, A.; Lalitha, V.; Koorapati, S.; et al. Classification of Targets Using Statistical Features from Range FFT of mmWave FMCW Radars. Electronics 2021, 10, 1965.

14. Rai, P.K.; Kumar, A.; Khan, M.Z.A.; Soumya, J.; Cenkeramaddi, L.R. Angle and Height Estimation Technique for Aerial Vehicles using mmWave FMCW Radar. In Proceedings of the 2021 International Conference on COMmunication Systems NETworkS (COMSNETS), Bengaluru, India, 5–9 January 2021; pp. 104–108.

15. Bhatia, J.; Dayal, A.; Jha, A.; Vishvakarma, S.K.; Soumya, J.; Srinivas, M.B.; Yalavarthy, P.K.; Kumar, A.; Lalitha, V.; Koorapati, S.; et al. Object Classification Technique for mmWave FMCW Radars using Range-FFT Features. In Proceedings of the 2021 International Conference on COMmunication Systems NETworkS (COMSNETS), Bengaluru, India, 5–9 January 2021; pp. 111–115.

16. Advanced Driver Assistance Systems (ADAS). Available online: https://www.ti.com/applications/automotive/adas/overview.html (accessed on 12 October 2021).

17. Huang, Y.; Brennan, P.; Patrick, D.; Weller, I.; Roberts, P.; Hughes, K. FMCW based MIMO imaging radar for maritime navigation. Prog. Electromagn. Res. 2011, 115, 327–342.

18. Oh, D.; Lee, J. Low-Complexity Range-Azimuth FMCW Radar Sensor Using Joint Angle and Delay Estimation without SVD and EVD. IEEE Sens. J. 2015, 15, 4799–4811.

19. Kim, S.; Lee, K. Low-Complexity Joint Extrapolation-MUSIC-Based 2-D Parameter Estimator for Vital FMCW Radar. IEEE Sens. J. 2019, 19, 2205–2216.

20. Fang, W.; Fang, L. Joint Angle and Range Estimation With Signal Clustering in FMCW Radar. IEEE Sens. J. 2020, 20, 1882–1892.

21. Yanik, M.E.; Torlak, M. Near-Field 2-D SAR Imaging by Millimeter-Wave Radar for Concealed Item Detection. In Proceedings of the 2019 IEEE Radio and Wireless Symposium (RWS), Orlando, FL, USA, 20–23 January 2019; pp. 1–4.

22. Rouveure, R.; Faure, P.; Monod, M. PELICAN: Panoramic millimeter-wave radar for perception in mobile robotics applications. Part 1: Principles of FMCW radar and of 2D image construction. Robot. Auton. Syst. 2016, 81, 1–16.

23. Nowok, S.; Kueppers, S.; Cetinkaya, H.; Schroeder, M.; Herschel, R. Millimeter wave radar for high resolution 3D near field imaging for robotics and security scans. In Proceedings of the 2017 18th International Radar Symposium (IRS), Prague, Czech Republic, 28–30 June 2017; pp. 1–10.

24. Aulenbacher, U.; Rech, K.; Sedlmeier, J.; Pratisto, H.; Wellig, P. Millimeter wave radar system on a rotating platform for combined search and track functionality with SAR imaging. In Millimetre Wave and Terahertz Sensors and Technology VII; Salmon, N.A., Jacobs, E.L., Eds.; International Society for Optics and Photonics; SPIE: Bellingham, WA, USA, 2014; Volume 9252, pp. 1–11.

25. Sagala, T.B.V.; Suryana, J. Implementation of mechanical scanning and signal processing for FMCW radar. In Proceedings of the 2016 International Symposium on Electronics and Smart Devices (ISESD), Bandung, Indonesia, 29–30 November 2016; pp. 46–50.

26. Nowok, S.; Briese, G.; Kueppers, S.; Herschel, R. 3D Mechanically Pivoting Radar System using FMCW Approach. In Proceedings of the 2018 19th International Radar Symposium (IRS), Bonn, Germany, 20–22 June 2018; pp. 1–10.

27. Ayhan, S.; Thomas, S.; Kong, N.; Scherr, S.; Pauli, M.; Jaeschke, T.; Wulfsberg, J.; Pohl, N.; Zwick, T. Millimeter-wave radar distance measurements in micro machining. In Proceedings of the 2015 IEEE Topical Conference on Wireless Sensors and Sensor Networks (WiSNet), San Diego, CA, USA, 25–28 January 2015; pp. 65–68.

28. Ikram, M.Z.; Ahmad, A.; Wang, D. High-accuracy distance measurement using millimeter-wave radar. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 1296–1300.

29. AWR2243 Single-Chip 76- to 81-GHz FMCW Transceiver. Available online: https://www.ti.com/lit/ds/symlink/awr2243.pdf?ts=1637652814176&ref_url=https%253A%252F%252Fwww.ti.com%252Fproduct%252FAWR2243 (accessed on 12 October 2021).

30. Gupta, S.; Rai, P.K.; Kumar, A.; Yalavarthy, P.K.; Cenkeramaddi, L.R. Target Classification by mmWave FMCW Radars Using Machine Learning on Range-Angle Images. IEEE Sens. J. 2021, 21, 19993–20001.

31. The Math Works, Inc. MATLAB; Version 2019a; Computer Software; The Math Works, Inc.: Natick, MA, USA, 2019.

32. The Fundamentals of Millimeter Wave Sensors. Available online: https://www.ti.com/lit/spyy005 (accessed on 12 October 2021).

33. Rai, P.K. Targets Classification mmWave FMCW Radar. Available online: https://github.com/prabhatrai111/Targets-Classification-mmWave-FMCW-Radar (accessed on 12 October 2021).

34. Vijay, P.P.; Patil, P.N.C. Gray Scale Image Segmentation using OTSU Thresholding Optimal Approach. J. Res. 2016, 2, 20–24.

35. Pathmanabhan, A.; Dinesh, S. The Effect of Gaussian Blurring on the Extraction of Peaks and Pits from Digital Elevation Models. Discret. Dyn. Nat. Soc. 2007, 2007, 1–12.

36. Gedraite, E.; Hadad, M. Investigation on the effect of a Gaussian Blur in image filtering and segmentation. In Proceedings of the International Symposium on Electronics in Marine (ELMAR), Zadar, Croatia, 14–16 September 2011; pp. 393–396.

37. Gonzalez, R.; Woods, R. Morphological Image Processing. In Digital Image Processing, 4th ed.; Pearson: London, UK, 2017; pp. 649–698.

38. Structural Analysis and Shape Descriptors - OpenCV 2.4.13.7 Documentation. Available online: https://opencv.org/ (accessed on 12 October 2021).

39. Leu, J.G. Computing a shape’s moments from its boundary. Pattern Recognit. 1991, 24, 949–957.

40. Bland, J.M.; Altman, D. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986, 327, 307–310.

41. Kim, S.; Oh, D.; Lee, J. Joint DFT-ESPRIT Estimation for TOA and DOA in Vehicle FMCW Radars. IEEE Antennas Wirel. Propag. Lett. 2015, 14, 1710–1713.

42. Oh, D.G.; Ju, Y.H.; Lee, J.H. Subspace-based auto-paired range and DOA estimation of dual-channel FMCW radar without joint diagonalisation. Electron. Lett. 2014, 50, 1320–1322.

43. Oh, D.; Ju, Y.; Nam, H.; Lee, J. Dual smoothing DOA estimation of two-channel FMCW radar. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 904–917.

44. Cenkeramaddi, L.R.; Rai, P.K.; Dayal, A.; Bhatia, J.; Pandya, A.; Soumya, J.; Kumar, A.; Jha, A. A Novel Angle Estimation for mmWave FMCW Radars Using Machine Learning. IEEE Sens. J. 2021, 21, 9833–9843.

| S. No. | Parameter | Value |
|---|---|---|
| 1 | RF Frequency Range | 77–81 GHz |
| 2 | Chirp Slope | 29.982 MHz/μs |
| 3 | Number of Transmitters | 3 |
| 4 | Number of Receivers | 4 |
| 5 | Number of ADC samples | 256 |
| 6 | Number of frames | 800 |
| 7 | Number of Chirps | 128 |
| 8 | RF Bandwidth | 1798.92 MHz |
| 9 | Frame periodicity | 40 ms |
| 10 | Sampling Rate | 10 MSPS |
| 11 | Drone Size | 322 × 242 × 84 mm |
| 12 | Human Height | 172 cm |
| 13 | Car Size | 4315 × 1780 × 1605 mm |
| 14 | Measurement Range | up to 26 m |
| 15 | Transmission Power | 12 dBm |
| 16 | Rx Noise Figure | 14 dB (76 GHz to 77 GHz); 15 dB (77 GHz to 81 GHz) |
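The chirp parameters in the table above can be cross-checked with the standard FMCW relations: sweep duration = bandwidth / slope, range resolution = c / (2B), and maximum beat-limited range = f_s·c / (2S). The sketch below applies them to the listed values. It is an illustrative calculation, not part of the paper: the function names are hypothetical, the maximum-range formula assumes complex (I/Q) sampling, and the "sampled bandwidth" line assumes that only the 256 ADC samples taken at 10 MSPS contribute to the range FFT.

```python
C = 299_792_458.0  # speed of light in m/s


def ramp_duration_us(bandwidth_mhz, slope_mhz_per_us):
    """Sweep duration in microseconds for a given bandwidth and slope."""
    return bandwidth_mhz / slope_mhz_per_us


def range_resolution_m(bandwidth_mhz):
    """Range resolution c / (2B) in metres."""
    return C / (2.0 * bandwidth_mhz * 1e6)


def sampled_bandwidth_mhz(slope_mhz_per_us, adc_samples, sample_rate_msps):
    """Bandwidth swept while the ADC is sampling (assumed effective bandwidth)."""
    sampling_time_us = adc_samples / sample_rate_msps
    return slope_mhz_per_us * sampling_time_us


def max_range_m(sample_rate_msps, slope_mhz_per_us):
    """Beat-frequency-limited range f_s * c / (2S), assuming complex sampling."""
    slope_hz_per_s = slope_mhz_per_us * 1e12
    return sample_rate_msps * 1e6 * C / (2.0 * slope_hz_per_s)


if __name__ == "__main__":
    slope, bandwidth, samples, rate = 29.982, 1798.92, 256, 10.0
    print(ramp_duration_us(bandwidth, slope))                  # sweep time in us
    print(range_resolution_m(bandwidth))                       # full-sweep resolution in m
    print(range_resolution_m(sampled_bandwidth_mhz(slope, samples, rate)))
    print(max_range_m(rate, slope))                            # beat-limited range in m
```

Under these assumptions the full sweep lasts about 60 μs, the full-bandwidth resolution is roughly 8 cm, the resolution over the sampled portion is roughly 20 cm, and the beat-limited range of about 50 m comfortably covers the stated measurement range of up to 26 m.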

| Target | Quantity | a | b | c | d | e | f | g | h | i | j | k | l | m | n |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Human-1 | Range (m) | 9 | 13 | 11 | 13 | 7 | 17 | 5 | 19 | 5 | 9 | 21 | 7 | 15 | 7 |
| Human-1 | Angle (°) | 30 | 60 | 0 | 0 | 60 | 30 | 60 | 90 | 90 | 120 | 180 | 120 | 180 | 30 |
| Human-2 | Range (m) | 11 | 15 | 13 | 15 | 9 | 19 | 7 | 11 | 7 | 21 | 23 | 5 | 7 | 17 |
| Human-2 | Angle (°) | 60 | 90 | 30 | 30 | 90 | 60 | 90 | 120 | 120 | 60 | 0 | 180 | 0 | 0 |
| Human-3 | Range (m) | 13 | 17 | 17 | 17 | 13 | 21 | 11 | 9 | 13 | 7 | 5 | 11 | 11 | 9 |
| Human-3 | Angle (°) | 90 | 120 | 90 | 60 | 150 | 90 | 150 | 150 | 180 | 180 | 150 | 60 | 30 | 60 |
| Human-4 | Range (m) | 15 | 19 | 19 | 21 | 15 | 23 | 21 | 7 | 15 | 23 | 13 | 15 | 13 | 11 |
| Human-4 | Angle (°) | 120 | 150 | 120 | 120 | 0 | 120 | 0 | 0 | 60 | 90 | 90 | 90 | 120 | 120 |
| Human-5 | Range (m) | 17 | 11 | 21 | 23 | 19 | 9 | 23 | 25 | 17 | 17 | 19 | 17 | 13 | |
| Human-5 | Angle (°) | 150 | 180 | 150 | 150 | 30 | 0 | 30 | 60 | 0 | 30 | 150 | 150 | 150 | |
| Drone | Range (m) | 5 | 7 | 9 | 11 | 5 | 7 | 9 | 5 | 11 | 5 | 7 | 9 | 9 | 5 |
| Drone | Angle (°) | 0 | 30 | 60 | 90 | 120 | 150 | 180 | 30 | 30 | 150 | 60 | 30 | 90 | 90 |

| Target | Quantity | aa | bb | cc | dd | ee | ff | gg | hh | ii | jj | kk | ll | mm | nn | oo |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Drone | Range (m) | 7 | 9 | 11 | 5 | 9 | 11 | 7 | 7 | 9 | 11 | 9 | 11 | 5 | 7 | 11 |
| Drone | Angle (°) | 0 | 0 | 0 | 60 | 60 | 60 | 90 | 120 | 120 | 120 | 150 | 150 | 180 | 180 | 180 |
| Car | Range (m) | 25 | 19 | 17 | 15 | 17 | 17 | 21 | 23 | 15 | 21 | 17 | 23 | 15 | 21 | 25 |
| Car | Angle (°) | 60 | 60 | 90 | 120 | 150 | 180 | 180 | 90 | 0 | 0 | 0 | 90 | 60 | 120 | 30 |

| Measurement | PC | Bias | SD | LOA upper (Bias + 1.96·SD) | LOA lower (Bias − 1.96·SD) | AL | BAR |
|---|---|---|---|---|---|---|---|
| Range | 0.9996 | −0.4143 | 0.1941 | −0.0339 | −0.7946 | 13.7595 | 0.0276 |
| Angle | 0.9954 | 3.6176 | 6.4824 | 16.3232 | −9.0879 | 89.3088 | 0.1423 |
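The limits of agreement in the table above follow directly from the bias and standard deviation, and the tabulated BAR values are numerically consistent with 1.96·SD / AL. The sketch below reproduces both from the summary values and also shows how the same quantities could be computed from paired measurements. The following are assumptions rather than statements from the paper: the function names, the sign convention (estimate minus reference), the use of the sample standard deviation, and the reading of AL as the mean of the pairwise averages.

```python
import statistics


def limits_of_agreement(bias, sd, z=1.96):
    """Return (upper, lower) limits of agreement: bias +/- z * sd."""
    return bias + z * sd, bias - z * sd


def bland_altman_ratio(sd, average_level, z=1.96):
    """Ratio of the half-width of the agreement interval to the average level."""
    return z * sd / average_level


def bland_altman(reference, estimate):
    """Compute Bland-Altman summary statistics from paired measurements."""
    diffs = [e - r for r, e in zip(reference, estimate)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (assumed)
    upper, lower = limits_of_agreement(bias, sd)
    average_level = statistics.mean(
        [(r + e) / 2.0 for r, e in zip(reference, estimate)]
    )
    return {
        "bias": bias,
        "sd": sd,
        "loa_upper": upper,
        "loa_lower": lower,
        "average_level": average_level,
        "bar": bland_altman_ratio(sd, average_level),
    }
```

For example, `limits_of_agreement(-0.4143, 0.1941)` gives limits close to the tabulated −0.0339 and −0.7946 for range; small differences in the last digit come from the rounding of the tabulated bias and SD.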

| S. No. | Algorithm | Complexity | Targets at Same Range/Angle | Detection Limitation of Targets | Required Number of Antennas for AoA Estimation | Reference |
|---|---|---|---|---|---|---|
| 1 | DFT-ESPRIT | | No | Multiple targets | 1 TX and 2 RX | [41] |
| 2 | 2D-ESPRIT | | No | Multiple targets | 1 TX and 2 RX | [42] |
| 3 | Dual-Smoothing | | No | Multiple targets | 1 TX and 2 RX | [43] |
| 4 | Clustered ESPRIT | | Yes | RX antennas could be smaller than number of targets | RX antennas could be smaller than number of targets | [20] |
| 5 | Range-Angle map based | | Yes | No limitation | 1 TX and 1 RX | [44] |
| 6 | Proposed work | | Yes | No limitation | 1 TX and 1 RX | This work |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gupta, K.; Joshi, S.; Srinivas, M.B.; Boppu, S.; Manikandan, M.S.; Cenkeramaddi, L.R. Localization of Multi-Class On-Road and Aerial Targets Using mmWave FMCW Radar. Electronics 2021, 10, 2905. https://doi.org/10.3390/electronics10232905
