1. Introduction

Information compressors allow the reduction of bandwidth requirements and, given that data transmission systems tend to demand much more power than computing systems, they are useful as well when energy or dissipation is limited. For the case of images or videos, apart from lossless compression, we may also introduce errors in a controlled manner in order to improve the compressibility of the data. A particularly convenient way to perform this is to use near-lossless compression, which ensures that these errors are bounded by a limit set by the user. When this limit is set to zero, lossless compression is obtained.

These codecs are particularly useful when the data to compress contains very valuable information and/or when, given the nature of the application, a minimum quality must be ensured. Satellite image acquisition is a prominent application of these systems, and it has driven the development of many algorithms and hardware implementations [1,2]. Additionally, we can find medical applications such as capsule endoscopy [3,4,5,6,7] or portable image devices [8].

New applications emerge in scenarios where raw (uncompressed) data was traditionally transmitted. Given the rapid increase in the volume of data generated, image codecs can reduce costs and development time by leveraging already available transmission infrastructure and standards. An example of this in the video broadcasting industry is the use of intermediate codecs (mezzanine codecs) between initial acquisition and final distribution [9]. In addition, in the manufacturing industry, we can find infrared cameras with a high frame rate (frames per second, FPS) [10] producing information that is subsequently processed by an algorithm that may require limits on the quantization errors to ensure proper operation. Sometimes these cameras are part of closed-loop control systems, which additionally impose latency limits to ensure control loop stability.

Particularly for the more demanding applications (low energy, high throughput, low latency), hardware implementations may be needed in order to better compete with other products in the market or just to meet requirements while achieving real-time compression of the data stream [11,12,13,14,15]. This tends to be particularly true for the encoder side, as in the case of remote sensing (for example, satellite applications) or portable devices.

A codec well suited for these applications is JPEG-LS [16], based on the LOCO-I (Low Complexity Lossless Compression for Images) algorithm, which is known for its favorable trade-off between complexity and coding efficiency and for being amenable to hardware implementation [17,18]. This led to the development of multiple hardware architectures [2,6,19,20,21,22,23,24] and the utilization of an adapted version in the Mars Rover mission (NASA) [1]. An extension of the standard was later presented [25], mainly to improve the compression rate when coding lower entropy distributions, like those that arise when the error tolerance is greater than zero. However, this came at the expense of increased complexity, among other reasons because it uses an arithmetic coder.

After the development of the JPEG-LS standard extension, a new coding scheme was developed, Asymmetric Numeral Systems (ANS) [26], which presents a better trade-off between coding efficiency and speed than the arithmetic coder or the Huffman coder [27,28]. Given this new coding technology, plus the observation of an increasing need for more efficient codecs, LOCO-ANS (Low Complexity Lossless Compression with Asymmetric Numeral Systems) [29] was developed. It is based on JPEG-LS and aims to improve its coding efficiency at a lower cost than the standard's extension. Targeting photographic images, LOCO-ANS can achieve, on average, up to 1.6% and 6% higher compression than the standard for an error tolerance set to 0 (lossless) and 1, respectively. This improvement keeps increasing with the error tolerance. Although in the software case LOCO-ANS comes with a speed penalty, it compares favorably against state-of-the-art lossless and near-lossless codecs, since several of its configurations appear on the speed-compression Pareto frontier.

This work addresses the aspects not covered previously in [29]. Its objectives are to:

Design a hardware implementation for the LOCO-ANS encoder.

Determine the performance of this encoder in hardware for several of its configurations, and what limits this performance.

Compare the obtained LOCO-ANS hardware encoder with other JPEG-LS hardware implementations.

Given these objectives, where we aimed to achieve a first architecture rather than a fine-tuned one, the encoder was implemented entirely using High-Level Synthesis (HLS) to allow faster development. Thanks to a careful design and advances in HLS compilers, the resulting system achieves high performance and a reasonably small footprint. The complete set of sources required to reproduce the systems presented here is open to the community through a publicly available repository (https://github.com/hpcn-uam/LOCO-ANS-HW-coder, accessed on 23 October 2021).

The rest of this paper is structured as follows: first, Section 2 reviews the ideas on which this paper is grounded. Next, Section 3 describes the architecture of the implemented system. Then, Section 4 provides and evaluates the implementation results obtained when deploying the system on different FPGA platforms. After this, Section 5 further discusses the achieved results in light of the related work. Finally, Section 6 concludes the paper by summarizing its main contributions.

2. Background

Before describing the proposed system, this section introduces ANS, the LOCO-ANS algorithm, and HLS, which are the fundamental ideas this work is based on.

2.1. ANS

The ANS coding system [26], similarly to the arithmetic coder, codes a stream of symbols into a single output bitstream, where whole bits cannot be assigned to a particular input symbol. That is, it codes an alphabet extension whose order equals the number of symbols. However, instead of storing the information in a range (as the arithmetic coder does), it encodes it in a single natural number, the state. In order to limit the size of this state, a re-normalization is performed when it is out of bounds, and new output bits are generated. Furthermore, to be able to decode the resulting bitstream, the last ANS coder state must be sent to the decoder.

The ANS logic can be encoded in a ROM that stores, for each current state (ROM address), the next state and the number of bits to take from the current state. This is one of the ways of implementing tANS, one of the ANS variants. Therefore, although the ideas behind ANS are somewhat complex, its operation can be very simple. Each ROM, or table, codes for a specific symbol source distribution, so to perform adaptive coding, several tables need to be available, choosing the one that best fits the currently estimated symbol probabilities.

When designing a system using tANS, it is important to take into account that the Kullback–Leibler divergence depends on the size of the state and on the cardinality of the symbol source the table codes for. In addition, the output bitstream acts as a Last In First Out (LIFO) memory, i.e., a stack. Thus, decoding is performed in reverse order, starting the process with the last bits generated and recovering the last symbols first.

For more about ANS, see [26,28], and about tANS in hardware, see [30,31].

2.2. LOCO-ANS Algorithm

Figure 1 shows the LOCO-ANS algorithm block diagram, where two main subsystems can be distinguished: the Pixel Decorrelator and the TSG Coder. The former processes the input pixels with the aim of turning them into a stream of statistically independent symbols with their estimated distribution parameters, which the latter will code. These symbols are the errors made by the adaptive predictor, which are then quantized according to the error tolerance (NEAR parameter), as shown by Equation (1). This quantization ensures that the absolute difference between the original value of a pixel and the decoded one is less than or equal to NEAR. Note that if NEAR is zero, then lossless compression is obtained. Other reversible operations are then applied to the quantized error to improve compression.

The adaptive predictor is composed of a fixed predictor plus an adaptive bias correction. The adaptive correction is computed for each context, which is a function of the gradients surrounding the pixel currently processed. The prediction errors are modeled using the Two-Sided Geometric (TSG) distribution, that is, an error $\epsilon$ is assumed to have the following probabilities:

$$P_{(\theta,s)}(\epsilon) = C(\theta,s)\,\theta^{|\epsilon+s|} \qquad (2)$$

where $\theta$ and $s$ are the distribution parameters and $C(\theta,s)$ is a normalization factor.

However, to simplify the modeling and coding of this error, a re-parametrization is used [32]: the error is represented by two variables, y and z, where the parameter governing z is the same parameter as in Equation (2). These distribution parameters are estimated by the Context Modeler for each context, which generates their estimated quantized versions.

As seen in the block diagram, the TSG coder uses two different coders to handle y and z, both based on tANS. As mentioned, the ANS output bitstream acts as a LIFO, but the decoder needs to obtain the errors in the same order in which the decorrelator processed them, so that it can mimic the model adaptations. For this reason, the Block Buffer groups symbols in blocks and inverts their order. The output bits of a block are packed in the Binary Stack and stored in inverse order, so the decoder can recover pixels in the same order the encoder processed them without additional metadata.
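The LIFO behavior and the block inversion described above can be illustrated with a small software sketch. Note that it uses the range variant of ANS (rANS) rather than the table-based tANS of the paper, only because it fits in a few lines; the three-symbol model, its frequencies, the state bounds, and all names (`Coded`, `encode_block`, `decode_block`) are assumptions made for this example and are not taken from the LOCO-ANS sources.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 3-symbol model; frequencies sum to 2^kScaleBits.
constexpr uint32_t kScaleBits = 4;
constexpr uint32_t kFreq[3] = {10, 4, 2};
constexpr uint32_t kCum[3] = {0, 10, 14};
constexpr uint32_t kLow = 1u << 16;  // state is kept in [kLow, kLow * 256)

struct Coded {
    uint32_t final_state;        // last ANS state, sent to the decoder
    std::vector<uint8_t> bytes;  // output bytes, used as a stack (LIFO)
};

// The block is consumed in reverse order, so decoding yields forward order.
Coded encode_block(const std::vector<int>& symbols) {
    Coded out{kLow, {}};
    uint32_t x = kLow;
    for (auto it = symbols.rbegin(); it != symbols.rend(); ++it) {
        const uint32_t f = kFreq[*it];
        const uint32_t x_max = ((kLow >> kScaleBits) << 8) * f;
        while (x >= x_max) {  // re-normalization: push the low byte
            out.bytes.push_back(static_cast<uint8_t>(x & 0xFF));
            x >>= 8;
        }
        x = ((x / f) << kScaleBits) + (x % f) + kCum[*it];
    }
    out.final_state = x;
    return out;
}

std::vector<int> decode_block(Coded in, std::size_t count) {
    std::vector<int> symbols;
    uint32_t x = in.final_state;
    for (std::size_t i = 0; i < count; ++i) {
        const uint32_t slot = x & ((1u << kScaleBits) - 1);
        int s = 0;
        while (slot >= kCum[s] + kFreq[s]) ++s;  // find the symbol of the slot
        symbols.push_back(s);
        x = kFreq[s] * (x >> kScaleBits) + slot - kCum[s];
        while (x < kLow && !in.bytes.empty()) {  // pop bytes, last written first
            x = (x << 8) | in.bytes.back();
            in.bytes.pop_back();
        }
    }
    return symbols;
}
```

Because the encoder walks the block backwards, the first symbol the decoder recovers is the first symbol of the block, which is the role the Block Buffer plays in the architecture.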

The Bernoulli coder requires a single access to the tANS ROM to code the input y, whereas the Geometric coder may need several accesses. This is because z is decomposed into a sequence of subsymbols, whose length depends on the cardinality of the tANS symbol source used for a given distribution parameter and is bounded by a coder parameter that sets the maximum number of ROM accesses for each z symbol. However, as shown in [29], for 8-bit gray images and the configuration used there, the coder only requires 1.3 accesses on average.

These coders may or may not use the same ANS state. If they do, at the cost of losing the ability to run in parallel, only one ANS state is sent at the end of the block. If they do not, larger symbol blocks can be used to compensate for the additional bits required to send the second ANS state. Then, this option establishes a memory-speed trade-off.

For a more in-depth explanation of how the codec works and its design, refer to [29]. Additionally, Appendix A provides some examples of images compressed with LOCO-ANS, setting NEAR to 0 and 3.

2.3. High-Level Synthesis

There are currently several compilers on the market that translate C/C++ code into a Register Transfer Level (RTL) description in a language such as VHDL or Verilog. Examples of these compilers are Vitis HLS (Xilinx), Intel HLS, and Catapult (Mentor). Apart from the C/C++ code, directives (sometimes included in the code as #pragmas) are used to guide the compiler towards the desired architecture. These directives can, for example, establish the number of clock cycles a module needs before it is ready to consume a new input, that is, set the Initiation Interval (II). Additionally, they can shape memories and select a specific resource for their implementation.

HLS compilers allow faster development of hardware modules [33]. The main reasons are:

The code describes the algorithm, whereas the compiler is in charge of scheduling operations to clock cycles and assigning operators/memory to the target technology resources.

Code can be validated much faster using a C/C++ program instead of an RTL simulator.

Directives allow a wide design space exploration. Moving from a low footprint to a heavily pipelined, high-performance architecture is possible just by changing a single line of code.

After code verification and RTL generation, the output system can be automatically validated using the C/C++ code to perform an RTL simulation.

The source code is less technology-dependent.

However, even though compilers have been improving, the use of HLS tends to establish a trade-off between design time and quality of results (performance and/or footprint). Furthermore, except for trivial applications, being aware of the underlying architecture and resources used is still necessary to obtain good implementations.
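As a minimal illustration of how directives are attached to ordinary C++ code, the sketch below pipelines a small loop with a target II of 1. The function, its name, and the array size are hypothetical and chosen only for this example; a standard C++ compiler ignores the pragma (possibly with a warning), so the same source can be validated in software before synthesis.

```cpp
#include <cstdint>

constexpr int kTaps = 8;  // hypothetical size, for illustration only

// Sum of absolute differences between two small vectors.
// The HLS directive asks the tool to start one loop iteration per clock cycle.
uint32_t sum_abs_diff(const uint8_t a[kTaps], const uint8_t b[kTaps]) {
    uint32_t acc = 0;
    for (int i = 0; i < kTaps; ++i) {
#pragma HLS PIPELINE II=1
        const int d = static_cast<int>(a[i]) - static_cast<int>(b[i]);
        acc += static_cast<uint32_t>(d < 0 ? -d : d);
    }
    return acc;
}
```

Changing only the II value in the directive asks the tool for a different schedule without touching the algorithm description.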

3. Encoder Architecture

In this section, the LOCO-ANS encoder architecture is presented. The block diagram in Figure 2 shows the main modules composing the system: the Pixel Decorrelator, the St Quantizer, and the TSG coder. Each of these modules is implemented in C/C++ with compiler pragmas and transformed to RTL code using Vitis HLS.

The pixel decorrelator takes pixels as input and outputs a stream of y, its estimated distribution parameter, z, t, and St. The last two variables are further processed by the quantizer to generate the geometric distribution parameter. The TSG coder uses a tANS coder to transform the y and z streams into blocks of bits and, finally, the File Writer sends these streams and header information, issuing the appropriate DMA commands.

The TSG coder may need several cycles to code a symbol, but it is much faster than the Pixel Decorrelator, so in order to increase the encoder throughput, the former module runs at a higher clock frequency. FIFOs are inserted between these modules to move data from one clock domain to the other.

The subsections below explain each module in more detail.

3.1. Pixel Decorrelation

Given the sequential nature of the pixel decorrelation algorithm, it is mainly implemented by a single pipelined module, including a single-line row buffer. It consists of an initialization phase and the pixel loop. In the initialization phase, the first pixel is read (it is not coded but included in the bitstream directly), and the context memories and tables used in the pixel loop are initialized according to the NEAR parameter setting. This operation takes about 512 clock cycles to complete. It could be optimized in many ways, such as computing and storing several memory entries in a single cycle, or avoiding the re-computation of tables when NEAR does not change. Additionally, ping-pong memories could be used to achieve zero throughput penalty, initializing these memories in a previous pipeline stage, as done in [19]. However, the HLS compiler did not support some constructions required to create that architecture. Although workarounds exist, the potential benefit for HD and higher resolution images is negligible, even in the best case and assuming the same clock frequency is achieved. What is more, particularly in high congestion implementations (i.e., FPGAs with a high usage ratio), this could even reduce the actual throughput, given that the extra logic and the use of additional memory ports can imply frequency penalties. For these reasons, and given that other works have presented optimized architectures for this part of the algorithm (the changes made to the JPEG-LS algorithm do not have important architectural implications), these initialization time optimizations were not implemented.

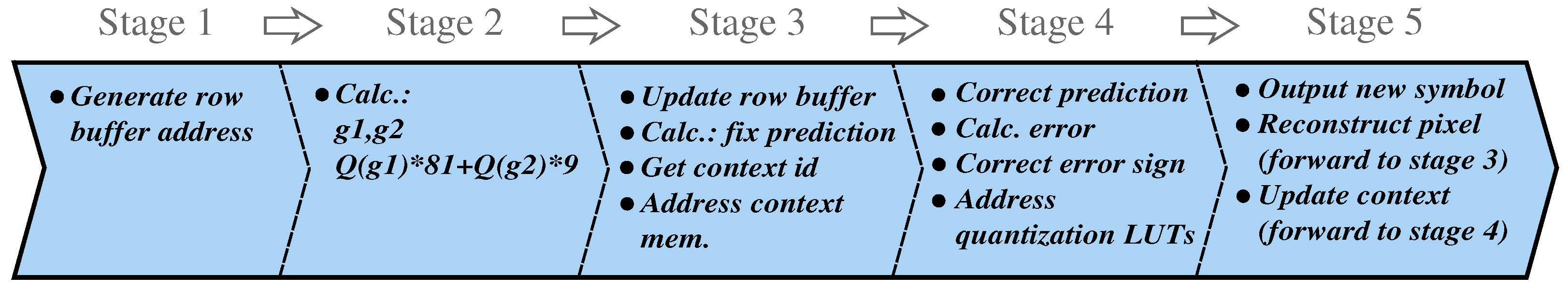

Algorithm 1 describes the pixel loop. This code structure allowed a deep pipeline (shown in Figure 3), which reads the row buffer, computes the two quantized gradients that do not depend on the previous pixel (after quantization), and starts to compute the context id before the previous pixel quantization is finished. To obtain the context id and sign, a weighted combination of the quantized gradients is computed, in which only one gradient uses the previous pixel. The contribution of the other gradients can therefore be computed in an earlier stage, which is what the pipeline does. Observe that the order of the gradients in the equation was chosen so that the dependency between loop iterations is eased, as the component requiring the previous pixel (which cannot be computed earlier) is not multiplied by any factor.
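The split between an early and a late stage can be sketched as follows. This is only an illustration under assumptions: the quantized gradients are taken to lie in [−4, 4] as in JPEG-LS, the weights 81 and 9 are a hypothetical base-9 positional mapping, and the names `partial_context`, `finish_context`, and `q_prev` are invented for the example.

```cpp
struct Context {
    int id;         // 0..364 for gradients in [-4, 4]
    bool negative;  // true when the sign of the error must be inverted
};

// Early stage: uses only the gradients that do not depend on the previous pixel.
inline int partial_context(int q_a, int q_b) { return 81 * q_a + 9 * q_b; }

// Late stage: adds the gradient that needs the previous pixel. It carries no
// multiplication, so only an addition and a sign check remain on the
// loop-carried path.
inline Context finish_context(int partial, int q_prev) {
    const int v = partial + q_prev;
    return Context{v < 0 ? -v : v, v < 0};
}
```

With this ordering, the multiplications belong to the part of the computation that can be scheduled before the previous pixel is available.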

| Algorithm 1 Pixel loop algorithm structure |

- 1: …
- 2: for … do
- 3: #pragma HLS PIPELINE II = 2 ▹ The lossless optimized version uses II = 1
- ▹ Data stored in the row buffer does not establish dependencies
- 4: #pragma HLS DEPENDENCE variable=row_buffer intra false
- 5: #pragma HLS DEPENDENCE variable=row_buffer inter false
- 6–14: …
- 15: end for

Additionally, to improve the performance (reducing the II), the updated context data is forwarded to previous stages when two consecutive pixels have the same context (something that happens in most cases according to [23], although this depends on the nature of the images). Originally, this optimization was done explicitly in the code and using pragmas (to inform the compiler of the false dependency), but newer versions of the HLS compiler perform this optimization automatically.

Since the HLS compiler handles the scheduling of the operations, the number of pipeline stages may change depending on the target frequency and FPGA. For the tested technologies, aiming at the maximum performance, the pixel loop operations were scheduled in five stages.

3.1.1. Obtaining the Distribution Parameter

The decorrelator keeps, for each context, a register St. The register and the context counter t are then processed by the downstream module St Quantizer (Figure 2) to obtain the quantized distribution parameter. The implemented quantization procedure is a generalization of the iterative method used in LOCO-I to obtain the k parameter of the Golomb-power-of-2 coder [34], and it is described in detail in [29]. Algorithm 2 shows the coarse-grained configuration of this quantization function.

| Algorithm 2 Coarse-grained quantization function |

- Require: St
- Require: t
- Ensure: quantized distribution parameter
- 1: #pragma HLS PIPELINE
- 2: …
- 3: for … do
- 4: if … then
- 5: …
- 6: end if
- 7: end for

Although this procedure could have been done within the decorrelator, it was decided to keep it separated, to ease the scheduler job and ensure this operation extended the pipeline without affecting the pixel loop performance. This operation can be compute-intensive, but as there are no dependencies among consecutive symbols, the module can be deeply pipelined, achieving high throughput.

3.1.2. Near-Lossless Quantization and Error Reduction

To handle the quantization processes, a set of tables was designed to increase the system performance, taking into account that even small FPGAs have plenty of memory blocks to implement them. (The term look-up table (LUT) is usually used to refer to these tables, but it is avoided here so as not to confuse it with the FPGA resource of the same name.) Algorithm 3 describes the error quantization (lines 1–5), modulo reduction (lines 6–10), and re-scale (line 11) processes.

| Algorithm 3 Error quantization and modulo reduction |

- Require: … ▹ Input error
- Ensure: … ▹ Output symbol
- Ensure: … ▹ Re-scaled error, used to update context bias
- ▹ Uniform quantization
- 1: if … then
- 2: …
- 3: else
- 4: …
- 5: end if
- ▹ Reduction modulo = f(NEAR, pixel depth)
- 6: if … then
- 7: …
- 8: else if … then
- 9: …
- 10: end if
- 11: …

As suggested in [34], the error quantization can be easily implemented using a table. Here, however, the table stores the result after the modulo reduction logic, since this reduces the memory resources and helps to speed up the context update, which is one of the logical paths that limit the maximum frequency. In addition, a second table contains the re-scaled error, to avoid the general integer multiplication logic and also to ease the sequential context dependency.
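The three steps of Algorithm 3 can be sketched in software as below. The formulas are assumptions: they follow the uniform quantization and modulo reduction as commonly described for JPEG-LS near-lossless coding, not the expressions of the paper's own listing, and the names `QuantResult`, `quantize_error`, `tolerance` (standing for NEAR), and `max_val` are chosen for this example.

```cpp
struct QuantResult {
    int symbol;    // quantized, modulo-reduced error
    int rescaled;  // symbol scaled back, as used for the context bias update
};

QuantResult quantize_error(int err, int tolerance, int max_val = 255) {
    const int step = 2 * tolerance + 1;
    const int range = (max_val + 2 * tolerance) / step + 1;
    // Uniform quantization (rounds towards the nearest multiple of step)
    int q = (err > 0) ? (err + tolerance) / step : -((tolerance - err) / step);
    // Modulo reduction into an interval of `range` consecutive values
    if (q < -(range / 2)) {
        q += range;
    } else if (q >= (range + 1) / 2) {
        q -= range;
    }
    // Re-scale
    return QuantResult{q, q * step};
}
```

In the hardware described above, both outputs come from tables addressed by the prediction error, so neither the division nor the multiplication appears as logic in the pixel loop.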

Additionally, a third table is used, in this case to speed up the pixel reconstruction process, which is the other important logical path that could limit the maximum frequency. There are several ways to perform this, as shown in Figure 4. To our knowledge, previous implementations of the LOCO/JPEG-LS encoder reconstruct the pixel starting from the quantized prediction error (as indicated in the ITU recommendation [16]) or from the re-scaled error (e.g., [35]). Instead, we use the value of the exact prediction error (only available in the encoder) to get the reconstructed pixel. Given a NEAR value, each integer has a quantization error, which can be pre-computed and stored in a table. Then, the exact prediction error (before the sign correction) addresses the table that provides the quantization error, which is then added to the original value of the pixel. As can be seen in Figure 4, this method greatly simplifies the computation and eases the path. This is one of the key ideas that enabled our high-throughput implementation.

These tables could be implemented as ROMs, supporting a small set of NEAR values, or as RAMs, which are filled depending on the NEAR value currently needed. In the presented design, the latter option was chosen, giving the system the flexibility to use any practical NEAR value, using three tables with the same number of entries each. The time required to fill these memories can be masked, as stated before. Although the uniform quantization would require general integer division, the tables are filled with simpler logic. It is easy to see that, if the error range is swept sequentially (either increasing or decreasing by 1) starting from zero, almost trivial logic is required to keep track of the quotient and remainder.
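A sketch of this sweep is shown below. It fills a quantization table and a reconstruction table (the value added to the original pixel) using only counters. The struct and function names, the table range, and the omission of the modulo reduction step are simplifications assumed for this example, and `tolerance` stands for NEAR.

```cpp
#include <vector>

struct ErrorTables {
    int max_err;                   // tables cover errors in [-max_err, max_err]
    std::vector<int> quantized;    // uniformly quantized error
    std::vector<int> recon_delta;  // quantization error added to the original pixel
    int index(int err) const { return err + max_err; }
};

ErrorTables fill_tables(int tolerance, int max_err = 255) {
    ErrorTables t{max_err, std::vector<int>(2 * max_err + 1),
                  std::vector<int>(2 * max_err + 1)};
    const int step = 2 * tolerance + 1;
    int quotient = 0;           // tracks (err + tolerance) / step
    int remainder = tolerance;  // tracks (err + tolerance) % step
    for (int err = 0; err <= max_err; ++err) {
        t.quantized[t.index(err)] = quotient;
        t.quantized[t.index(-err)] = -quotient;
        t.recon_delta[t.index(err)] = tolerance - remainder;
        t.recon_delta[t.index(-err)] = remainder - tolerance;
        if (++remainder == step) {  // counter wrap-around replaces the division
            remainder = 0;
            ++quotient;
        }
    }
    return t;
}
```

Each reconstruction entry equals the re-scaled quantized error minus the exact error, so its magnitude never exceeds the tolerance, which is the bound near-lossless coding guarantees.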

If a single clock and a single clock edge are used, the minimum II for the system is 2. To compute the prediction, the context memory is read (incurring the memory latency); then the prediction error is obtained, which is needed to address the quantization tables (also implemented with memories, and thus adding their latency). The result of the quantization process is used to address the next pixel context, producing a minimum II = 2.

Within a module, Vitis HLS does not allow designs with multiple clocks or the use of different clock edges. In this case, however, a great improvement is not expected from these techniques; they would imply a much greater development time, and the result would tend to be more technology-dependent (given that the FPGA fabric architecture and relative propagation times vary, affecting the pipeline tuning).

3.1.3. Decorrelator Optimized for Lossless Compression

A decorrelator optimized just for lossless operation was also implemented. The removal of the quantization logic, plus the logic simplification that arises from using a fixed NEAR, allows going from II = 2 to II = 1 with approximately a 25% frequency penalty in the tested technologies. That is about a 50% throughput increase (see Section 4). In this case, the pixel loop is implemented with a 4-stage pipeline and the frequency bottleneck is established by the context update.
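The 50% figure follows from the pixel throughput being proportional to the clock frequency divided by the II. As a short check, writing $f$ for the near-lossless decorrelator clock frequency and $T$ for throughput (symbols introduced here only for illustration) and using the approximate 25% penalty stated above:

```latex
\frac{T_{\text{lossless}}}{T_{\text{near-lossless}}}
  = \frac{0.75\,f / 1}{f / 2}
  = 1.5
```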

An interesting fact about this optimization is that going from the general decorrelator to testing a first lossless-only version on hardware took less than one hour. Such fast development was possible because just a few lines of C++ code needed to be modified. These simple modifications led to significant changes in the scheduling of the pipeline, resulting in the stated performance, which would have been much more time-consuming to obtain using an HDL.

3.2. TSG Coder

Figure 5 shows the block diagram of the double lane TSG coder, which allows sharing the tANS ROMs without clock cycle penalties, as dual-port memories are used and each lane requires one port. This module can receive the output of two independent Pixel Decorrelators and process them in parallel. In this way, it allows the compression of images in vertical tiles, which was shown to improve compression for HD and higher resolution images [29].

The system was designed in a 2-level hierarchy because, as we go downstream, the basic data elements each module processes change. The input buffer works with blocks of symbols, while the subsymbol generator works at the symbol level, the ANS coder at the subsymbol level, and the output stack with blocks of packed bits. This modularization makes it easy to choose the coding technique best suited for each module. The modules shown in Figure 5 are instantiated in a dataflow region synchronized only by the input and output interfaces, such that each module can run independently. In Vitis HLS, this is accomplished with the following pragma:

Figure 5.

High-level block diagram of the double lane TSG coder.


3.2.1. Stages of the TSG Coder

Subsymbol Generator

Figure 7 depicts the Subsymbol Generator and how data is transformed as it goes downstream. Its main function is to decompose z into a variable-length sequence of subsymbols, which is one of the main processes of the Geometric coder.

For coding efficiency reasons, the cardinality of the symbol source modeled by the z ANS ROM varies with the distribution parameter, so for a given parameter value tANS models the distribution of a limited set of symbols. For this reason, z needs to be represented in terms of these symbols, so it is decomposed into a sequence of subsymbols whose sum is z: the first subsymbol carries the remainder, and all the rest are set to one fixed value. In this way, to retrieve z, the decoder just needs to sum subsymbols until it finds one (first encoded, but last decoded) that differs from that fixed value. As this value is always an integer power of 2, this process is simple. Finally, if it is detected that the length of this sequence is going to be greater than a design parameter (which determines the maximum number of geometric coder iterations), the subsymbol sequence represents an escape symbol. Following this sequence, the original z is inserted in the bitstream.
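A software sketch of this decomposition and of the decoder-side sum is given below. It assumes that the repeated subsymbol is a power of two, here called `chunk`, and that the first subsymbol is the remainder of z modulo `chunk`; the function names, the `max_iters` parameter name, and the use of an empty result to signal the escape case are choices made for this example only.

```cpp
#include <vector>

// Returns the subsymbols in encoding order (the terminating one first), or an
// empty vector when the sequence would exceed max_iters and an escape is needed.
// chunk must be a power of two.
std::vector<unsigned> decompose_z(unsigned z, unsigned chunk, unsigned max_iters) {
    const unsigned full_chunks = z / chunk;  // a shift, since chunk is 2^k
    if (full_chunks + 1 > max_iters) return {};
    std::vector<unsigned> subsymbols;
    subsymbols.push_back(z & (chunk - 1));   // remainder, always below chunk
    for (unsigned i = 0; i < full_chunks; ++i) subsymbols.push_back(chunk);
    return subsymbols;
}

// Decoder side: subsymbols arrive in reverse (LIFO) order and are summed until
// one differs from chunk.
unsigned recompose_z(const std::vector<unsigned>& encoded, unsigned chunk) {
    unsigned z = 0;
    for (auto it = encoded.rbegin(); it != encoded.rend(); ++it) {
        z += *it;
        if (*it != chunk) break;  // terminating subsymbol found
    }
    return z;
}
```

For instance, with `chunk` set to 4, z = 13 becomes the sequence 1, 4, 4, 4, and the decoder reads 4, 4, 4, 1 and stops at the 1.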

As described in [29], this process is used to reduce the cardinality tANS needs to handle, which translates into significantly lower memory requirements and higher coding efficiency while keeping the operation simple.

As it decomposes z and serializes the result with y (in the coupled coders version), this module establishes the TSG coder bottleneck in terms of symbols per clock cycle (as opposed to the frequency bottleneck, i.e., the module containing the critical path). Due to this, it was fundamental to optimize this module to be able to output a new subsymbol every clock cycle. Pipelining the modules was not sufficient to accomplish this goal. As shown in Figure 7, the z subsymbol generation process was split into two modules, one to get the required metadata and another one to decompose the symbol. Furthermore, the Z Decompose module was not described as a loop, as one would normally specify this procedure; instead, it was coded as a pipelined state machine, which allowed reaching the desired performance. Finally, all these modules are instantiated in a dataflow region synchronized only by the input and output interfaces.

ANS Coder

As shown in Figure 8, the ANS coder is composed of three modules. For each sub-symbol, the first one chooses the tANS table according to the symbol type (z or y) and the distribution parameter. This table is then used to obtain the variable-length code for the sub-symbol. Thus, the module implements the Bernoulli Coder and the remaining process of the Geometric Coder. However, these could easily be split, which would result in simpler modules and weaker placement and routing constraints for the ROM memories. The module also accepts bypass symbols, which are used to insert z after the escape symbol. After the last sub-symbol is coded, the second module inserts the last ANS state as a new code. The last module packs these codes into compact bytes.

The ANS coder can accept a new input in every clock cycle. This was accomplished by instantiating the modules in a dataflow region synchronized only by the input and output interfaces and pipelining each of them with an II=1. This II was achieved by the modularization of the process and by describing all three modules as state machines.

Output Stack

Finally, the Output Stack is in charge of reversing the order of the byte stream of each block of symbols. For this, it uses a structure similar to the one employed in the Input Buffer.

4. Results

This section presents how the designs were tested as well as the achieved frequencies and resource footprints. Finally, throughput and latency analyses are provided.

4.1. Test Platform and Encoder Configurations Description

In order to conduct the hardware verification, the system depicted in Figure 9 was implemented in two different Xilinx FPGA technologies, described in Table 1: Zynq 7 (cost-optimized, Artix 7 based FPGA fabric) and Zynq UltraScale+ MPSoC. For all implementations, although not optimal in terms of resources, two input and output DMAs were used to simplify the hardware, as the objective was to verify the encoders by building a demonstrator, not a fully optimized system. Images were sent from the Zynq µP running Linux to the FPGA fabric using the input DMAs, which accessed the main memory and fed the encoder using an AXI4-Stream interface. As the encoder generates the compressed binary, the output DMA stores it in the main memory. The evaluation of the coding system was carried out for the configurations in Table 2.

4.2. Implementation Results

For the tested implementations and both technologies, the critical path of the low-frequency clock domain is, in general, in the pixel reconstruction loop for the near-lossless encoders and within the update logic of the adaptive bias correction for the lossless version.

In the case of the high-frequency clock domain, the slowest paths of these implementations tend to be in the TSG coder and the output DMA for the Zynq 7020 implementation. Within the TSG coder, the critical path is, in general, either in the tANS logic (from the tANS ROM new state data output to the tANS ROM address, the new state) or in the Z Decompose module. In the case of the Zynq MPSoC, the slowest paths tend all to be in the tANS logic.

4.3. Results Evaluation

Results are analyzed in terms of throughput and latency, which are of paramount importance for real-time image and video applications.

4.3.1. Throughput

The near-lossless decorrelator critical path is in the pixel reconstruction loop, which is the same procedure used in the standard. This fact supports that the changes introduced by LOCO-ANS in the decorrelator do not limit the system performance. In the case of the lossless decorrelator, the bias context update logic limits the frequency. This procedure is the same as in the JPEG-LS standard extension, which requires an additional conditional sign inversion compared to the baseline. This tends to worsen the critical path, but it is a minor operation compared to the complete logical path. Although it achieves a slower clock, the lossless decorrelator throughput is about 50% higher than the near-lossless decorrelator, given that it achieves an II = 1 instead of II = 2.

The presented implementations represent a wide range of trade-offs between performance, compression, and resources (also cost, considering technology dimension). All of them have the Bernoulli and Geometric coders coupled, so their mean throughput (in MPixels/s) for photographic images is determined by the clock shown in Table 3. In this way, for a given configuration and target, the TSG coder will have, on average, between 83% and 98% higher throughput than the near-lossless decorrelators for the Zynq 7020 implementations and between 47% and 76% for the Zynq MPSoC. In the case of the lossless optimized decorrelators, this performance gap is reduced to (15%, 26%) and (−10%, 16%) for the Zynq 7020 and Zynq MPSoC, respectively. Of the presented implementations, just one shows a lower TSG coder throughput. In this case, the increased compression ratio comes at the cost of not only higher memory utilization but also a throughput penalty.

However, it is observed that many possible optimizations of the TSG coder exist, particularly of the tANS procedures. The Z ROM memory layout can be enhanced to significantly reduce the memory usage, which could have a positive impact on the maximum frequency, as Table 3 suggests. Furthermore, alternative hardware tANS implementations exist [30], which may allow a wider range of performance/resources trade-offs.

The obtained results support the hypothesis that the use of the proposed TSG coder, which has a higher compression efficiency than the methods used in JPEG-LS, will not reduce the encoder throughput. This is observed in the hardware tests, where the encoder pixel rate is determined by the decorrelators when photographic images are compressed, except in the single case where the TSG coder throughput is lower (LOCO-ANS7-LS in the Zynq MPSoC). As expected, this is not the case for randomly generated images: the coder requires larger code words for them, so the TSG coder is what limits throughput, particularly for small images and lossless compression.

4.3.2. Latency

The implemented decorrelator latency is determined by the initialization time plus the pixel loop pipeline depth, which results in cycles. For the lossless optimized version, this is reduced to cycles. In the case of the low-end device implementation (Zynq 7020), this results in 6.3 µs and 5.8 µs latency, respectively. As mentioned before, if required, the initialization time could be reduced or even completely masked, but these optimizations were not implemented due to compiler limitations and because the potential benefits were considered low.

The TSG coder latency is harder to obtain, as it is data-dependent and the coder works with blocks of symbols. To determine the marginal latency (the delay added by the coder), we consider the time from the moment the last symbol of the block is provided to the coder until the moment the coded block is completely out of the module. Then, neglecting the smaller pipeline delay terms, the TSG coder latency can be computed as:

Here, is the block size, is the mean subsymbols z is decomposed into, is the mean bits per pixel within the block and is the size (in bits) of each element of the output stack. The latency is dominated by two modules: the Subsymbol Generator (first term of the equation) and the Output Stack (second term). This is because, as mentioned before, the former creates a bottleneck given that for each input it consumes it outputs several through a single port and the latter buffers the whole block of output bytes and outputs it in the inverse order.

To obtain a pessimistic mean latency, we assume a low compression rate of 2 (

). The block size is set to 2048, the output stack word size to 8, and

(as determined in [

29]). Then, for the Zynq 7020 implementation, the mean TSG coder latency is 31.9 µs.
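The two dominant latency terms described above can be illustrated with a short sketch. Since the equation itself is not reproduced here, the form below is an assumption: the Subsymbol Generator term is taken as one cycle per emitted subsymbol over the block, and the Output Stack term as one cycle per stack word written out. The names are hypothetical, the pixels are assumed to be 8-bit (so a compression rate of 2 gives 4 bpp), the 180 MHz clock is the one quoted for the Zynq 7020 implementation, and the mean subsymbol count of 2.3 is an illustrative placeholder, not the value determined in [29].

```python
def tsg_latency_cycles(block_size: int, mean_subsymbols: float,
                       bpp: float, stack_word_bits: int) -> float:
    """Approximate marginal TSG coder latency in clock cycles.

    Assumed form (not taken from the source equation):
      subsymbol generator term: block_size * mean_subsymbols
      output stack term:        block_size * bpp / stack_word_bits
    Smaller pipeline delays are ignored.
    """
    subsymbol_term = block_size * mean_subsymbols
    stack_term = block_size * bpp / stack_word_bits
    return subsymbol_term + stack_term


def cycles_to_us(cycles: float, clock_mhz: float) -> float:
    """Convert a cycle count to microseconds for a clock given in MHz."""
    return cycles / clock_mhz


# Pessimistic mean case: block of 2048 symbols, 8-bit stack words,
# 4 bpp (assumed 8-bit pixels at compression rate 2), and an
# illustrative mean of 2.3 subsymbols per symbol (assumption).
cycles = tsg_latency_cycles(2048, 2.3, 4.0, 8)
latency_us = cycles_to_us(cycles, clock_mhz=180.0)
```

With these assumed inputs the sketch gives roughly 31.9 µs, in line with the figure quoted above. This agreement does not by itself confirm the assumed form or the placeholder value.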

To estimate a practical upper bound to this latency, the following image compression case was analyzed:

Image pixels equal to . In this way, we maximize the block used while keeping the pixel count low, so the decorrelator’s capability to learn the statistics of the image is reduced.

Pixels independently generated using a uniform distribution (worst-case scenario), so the error model hurts compression (the prior knowledge is wrong).

Image shape: 64 × 32 (cols × rows). This shape allows visiting many different contexts, and then, the adaptation of the distribution parameter will be slower, thus increasing the resulting bpp.

(lossless compression): which maximizes the error range and bpp.

From a set of 100 images generated in this way, we took the instance with the lowest compression, where and . This code expansion occurs because the prior knowledge embedded in the algorithm (coming from the feature analysis of photographic images, such as the correlation between pixels) is wrong in this case and, as the image is small, it does not have enough samples to correct this. Moreover, given that the range of the distribution parameter was determined with photographic images, additional tables may be needed for these abnormally high entropies. Then, using the presented formulas, we obtain 97.2 µs as a practical upper bound on the encoder latency for the Zynq 7020 implementation running at 180 MHz.
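The worst-case test set described above can be generated along the following lines. This is a minimal sketch using only the Python standard library; the 8-bit pixel depth, the fixed seed, and the function names are assumptions made for illustration. Selecting the instance with the lowest compression additionally requires running the encoder on each image, which is not shown.

```python
import random


def make_uniform_image(cols=64, rows=32, bit_depth=8, rng=None):
    """Return a rows x cols image (list of rows) whose pixels are drawn
    independently from a uniform distribution over the full pixel range."""
    rng = rng or random.Random()
    max_value = (1 << bit_depth) - 1
    return [[rng.randint(0, max_value) for _ in range(cols)]
            for _ in range(rows)]


def make_test_set(count=100, seed=0):
    """Generate `count` independent random images from one seeded generator
    so that the set is reproducible."""
    rng = random.Random(seed)
    return [make_uniform_image(rng=rng) for _ in range(count)]


images = make_test_set()
pixels_per_image = len(images[0]) * len(images[0][0])  # 32 rows * 64 cols
```

Each 64 × 32 image holds 2048 pixels, which matches the block size of 2048 used in the mean latency estimate.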

Although the presented system establishes a trade-off between latency and compression, the achieved latency is remarkably low and suitable for many real-time systems. Moreover, it is possible to tune this trade-off by modifying the implementation parameters.

6. Conclusions

In this work, a hardware architecture of LOCO-ANS was described, and implementation results were presented, analyzed, and compared against prior works in the area of near-lossless real-time hardware image compression.

The presented encoder excels in near-lossless compression, achieving the fastest pixel rate so far with up to 40.5 MPixels/s/lane for a low-end Zynq 7020 device and 124.15 MPixels/s/lane for Zynq Ultrascale+ MPSOC. At the same time, a balanced configuration of the presented encoder can achieve 7.4%, 16.7%, 25.1%, and 33.0% better compression than the previous fastest JPEG-LS near-lossless implementation (for an error tolerance in , respectively).

In this way, the presented encoder is able to cope with higher image resolutions or FPS than previous near-lossless encoders while achieving higher compression and keeping encoding latency below 100 µs. Thus, it is well suited to real-time video compression and, in general, to highly constrained scenarios such as many remote sensing applications.

These results are possible in part thanks to a new method to perform the pixel reconstruction in the pixel decorrelator and to the high-performance Two-Sided coder, based on tANS, which increases the coding efficiency. Moreover, as mentioned throughout the article, further optimizations of the presented system are possible. Finally, the experimental results indicate that, if used with the fastest lossless optimized JPEG-LS decorrelators in the state of the art, this coder will improve compression without limiting the encoder throughput.