The Contribution of Machine Learning and Eye-Tracking Technology in Autism Spectrum Disorder Research: A Systematic Review

Abstract

1. Introduction

Our Contribution

2. Method

Search Strategy

3. Results

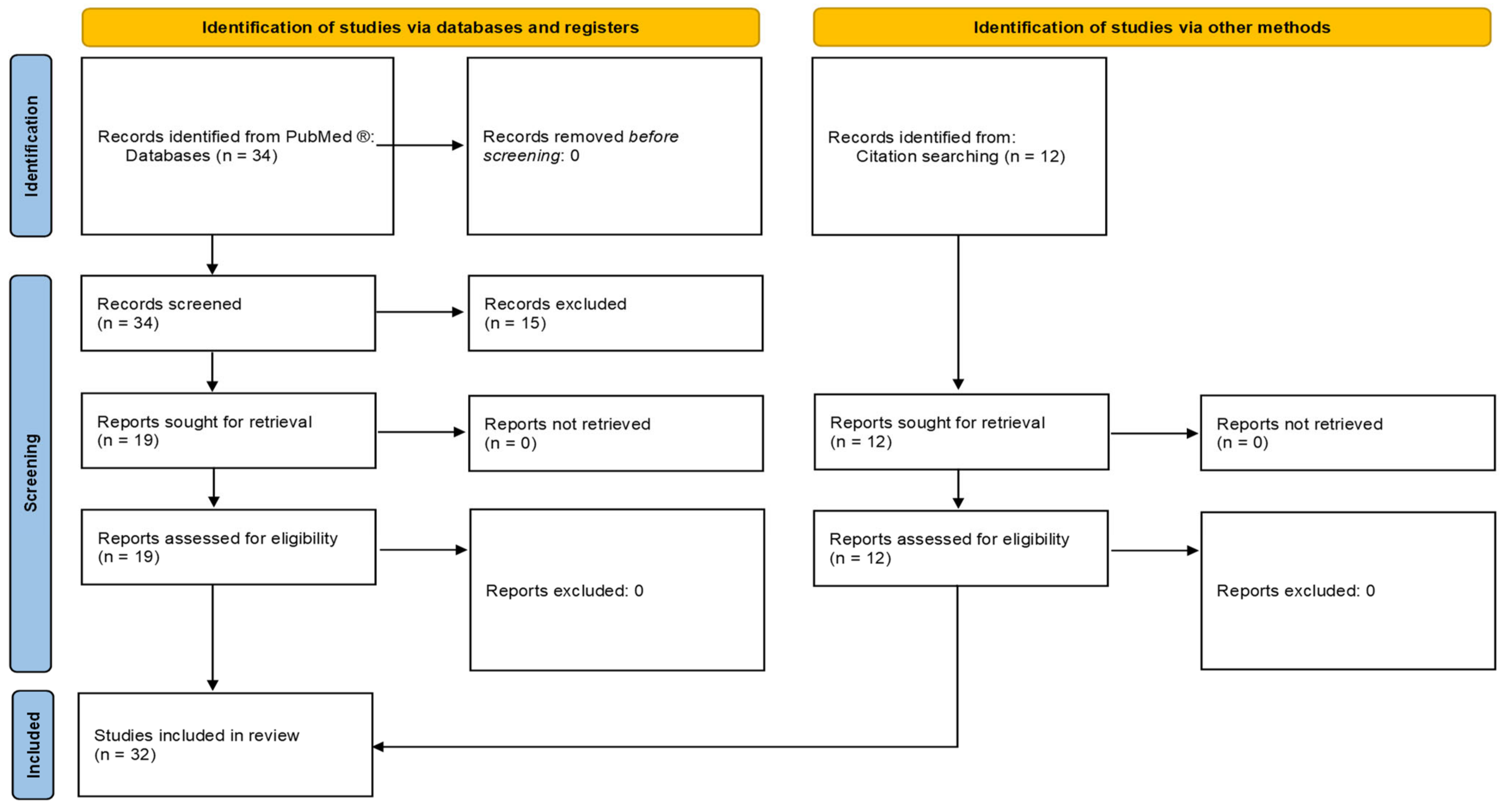

3.1. Review Flow

3.2. Selected Studies of the Systematic Review

4. Discussion

4.1. Emotion Recognition Studies

4.2. Video-Based Studies

4.3. Image-Based Studies

4.4. Web-Browsing Studies

4.5. Gaze and Demographic Features Study

4.6. Movement Imitation Study

4.7. Virtual Reality (VR) Interaction Study

4.8. Social Interaction Task (SIT) Study

4.9. Face-to-Face Conversation Study

5. Limitations

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Casanova, M.F. The Neuropathology of Autism. Brain Pathol. 2007, 17, 422–433.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Washington, DC, USA, 2013.

- Carette, R.; Cilia, F.; Dequen, G.; Bosche, J.; Guerin, J.-L.; Vandromme, L. Automatic Autism Spectrum Disorder Detection Thanks to Eye-Tracking and Neural Network-Based Approach. In Proceedings of the International Conference on IoT Technologies for Healthcare, Angers, France, 24–25 October 2017; Springer: Cham, Switzerland; pp. 75–81.

- Kanner, L. Autistic Disturbances of Affective Contact. Nerv. Child 1943, 2, 217–250.

- Asperger, H. Die “autistischen Psychopathen” Im Kindesalter. Eur. Arch. Psychiatry Clin. Neurosci. 1944, 117, 76–136.

- Fombonne, E. Epidemiology of Pervasive Developmental Disorders. Pediatr. Res. 2009, 65, 591–598.

- Lord, C.; Rutter, M.; DiLavore, P.C.; Risi, S. ADOS. Autism Diagnostic Observation Schedule. Manual; Western Psychological Services: Los Angeles, CA, USA, 2001.

- Klin, A.; Shultz, S.; Jones, W. Social Visual Engagement in Infants and Toddlers with Autism: Early Developmental Transitions and a Model of Pathogenesis. Neurosci. Biobehav. Rev. 2015, 50, 189–203.

- Nayar, K.; Voyles, A.C.; Kiorpes, L.; Di Martino, A. Global and Local Visual Processing in Autism: An Objective Assessment Approach. Autism Res. 2017, 10, 1392–1404.

- Tsuchiya, K.J.; Hakoshima, S.; Hara, T.; Ninomiya, M.; Saito, M.; Fujioka, T.; Kosaka, H.; Hirano, Y.; Matsuo, M.; Kikuchi, M. Diagnosing Autism Spectrum Disorder without Expertise: A Pilot Study of 5- to 17-Year-Old Individuals Using Gazefinder. Front. Neurol. 2021, 11, 1963.

- Vu, T.; Tran, H.; Cho, K.W.; Song, C.; Lin, F.; Chen, C.W.; Hartley-McAndrew, M.; Doody, K.R.; Xu, W. Effective and Efficient Visual Stimuli Design for Quantitative Autism Screening: An Exploratory Study. In Proceedings of the 2017 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), Orlando, FL, USA, 16–19 February 2017; pp. 297–300.

- Lord, C.; Rutter, M.; Le Couteur, A. Autism Diagnostic Interview-Revised: A Revised Version of a Diagnostic Interview for Caregivers of Individuals with Possible Pervasive Developmental Disorders. J. Autism Dev. Disord. 1994, 24, 659–685.

- Goldstein, S.; Ozonoff, S. Assessment of Autism Spectrum Disorder; Guilford Publications: New York, NY, USA, 2018.

- Kamp-Becker, I.; Albertowski, K.; Becker, J.; Ghahreman, M.; Langmann, A.; Mingebach, T.; Poustka, L.; Weber, L.; Schmidt, H.; Smidt, J. Diagnostic Accuracy of the ADOS and ADOS-2 in Clinical Practice. Eur. Child Adolesc. Psychiatry 2018, 27, 1193–1207.

- He, Q.; Wang, Q.; Wu, Y.; Yi, L.; Wei, K. Automatic Classification of Children with Autism Spectrum Disorder by Using a Computerized Visual-Orienting Task. PsyCh J. 2021, 10, 550–565.

- Fenske, E.C.; Zalenski, S.; Krantz, P.J.; McClannahan, L.E. Age at Intervention and Treatment Outcome for Autistic Children in a Comprehensive Intervention Program. Anal. Interv. Dev. Disabil. 1985, 5, 49–58.

- Frank, M.C.; Vul, E.; Saxe, R. Measuring the Development of Social Attention Using Free-Viewing. Infancy 2012, 17, 355–375.

- Yaneva, V.; Eraslan, S.; Yesilada, Y.; Mitkov, R. Detecting High-Functioning Autism in Adults Using Eye Tracking and Machine Learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1254–1261.

- Sasson, N.J.; Elison, J.T. Eye Tracking Young Children with Autism. J. Vis. Exp. 2012, 61, 3675.

- Bone, D.; Goodwin, M.S.; Black, M.P.; Lee, C.-C.; Audhkhasi, K.; Narayanan, S. Applying Machine Learning to Facilitate Autism Diagnostics: Pitfalls and Promises. J. Autism Dev. Disord. 2015, 45, 1121–1136.

- Liu, W.; Li, M.; Yi, L. Identifying Children with Autism Spectrum Disorder Based on Their Face Processing Abnormality: A Machine Learning Framework. Autism Res. 2016, 9, 888–898.

- Carette, R.; Elbattah, M.; Cilia, F.; Dequen, G.; Guérin, J.-L.; Bosche, J. Learning to Predict Autism Spectrum Disorder Based on the Visual Patterns of Eye-Tracking Scanpaths. In Proceedings of the HEALTHINF, Prague, Czech Republic, 22–24 February 2019; pp. 103–112.

- Peral, J.; Gil, D.; Rotbei, S.; Amador, S.; Guerrero, M.; Moradi, H. A Machine Learning and Integration Based Architecture for Cognitive Disorder Detection Used for Early Autism Screening. Electronics 2020, 9, 516.

- Crippa, A.; Salvatore, C.; Perego, P.; Forti, S.; Nobile, M.; Molteni, M.; Castiglioni, I. Use of Machine Learning to Identify Children with Autism and Their Motor Abnormalities. J. Autism Dev. Disord. 2015, 45, 2146–2156.

- Zhou, Y.; Yu, F.; Duong, T. Multiparametric MRI Characterization and Prediction in Autism Spectrum Disorder Using Graph Theory and Machine Learning. PLoS ONE 2014, 9, e90405.

- Minissi, M.E.; Giglioli, I.A.C.; Mantovani, F.; Raya, M.A. Assessment of the Autism Spectrum Disorder Based on Machine Learning and Social Visual Attention: A Systematic Review. J. Autism Dev. Disord. 2021, 1–16.

- Alam, M.E.; Kaiser, M.S.; Hossain, M.S.; Andersson, K. An IoT-Belief Rule Base Smart System to Assess Autism. In Proceedings of the 2018 4th International Conference on Electrical Engineering and Information & Communication Technology (iCEEiCT), Dhaka, Bangladesh, 13–15 September 2018; pp. 672–676.

- Hosseinzadeh, M.; Koohpayehzadeh, J.; Bali, A.O.; Rad, F.A.; Souri, A.; Mazaherinezhad, A.; Rezapour, A.; Bohlouli, M. A Review on Diagnostic Autism Spectrum Disorder Approaches Based on the Internet of Things and Machine Learning. J. Supercomput. 2020, 77, 2590–2608.

- Syriopoulou-Delli, C.K.; Gkiolnta, E. Review of Assistive Technology in the Training of Children with Autism Spectrum Disorders. Int. J. Dev. Disabil. 2020, 1–13.

- Jiang, M.; Francis, S.M.; Tseng, A.; Srishyla, D.; DuBois, M.; Beard, K.; Conelea, C.; Zhao, Q.; Jacob, S. Predicting Core Characteristics of ASD Through Facial Emotion Recognition and Eye Tracking in Youth. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 871–875.

- Canavan, S.; Chen, M.; Chen, S.; Valdez, R.; Yaeger, M.; Lin, H.; Yin, L. Combining Gaze and Demographic Feature Descriptors for Autism Classification. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3750–3754.

- Jiang, M.; Francis, S.M.; Srishyla, D.; Conelea, C.; Zhao, Q.; Jacob, S. Classifying Individuals with ASD through Facial Emotion Recognition and Eye-Tracking. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6063–6068.

- Bruni, T.P. Test Review: Social Responsiveness Scale–Second Edition (SRS-2). J. Psychoeduc. Assess. 2014, 32, 365–369.

- Bodfish, J.W.; Symons, F.J.; Lewis, M.H. The Repetitive Behavior Scale (Western Carolina Center Research Reports). Morganton NC West. Carol. Cent. 1999.

- Nag, A.; Haber, N.; Voss, C.; Tamura, S.; Daniels, J.; Ma, J.; Chiang, B.; Ramachandran, S.; Schwartz, J.; Winograd, T. Toward Continuous Social Phenotyping: Analyzing Gaze Patterns in an Emotion Recognition Task for Children with Autism through Wearable Smart Glasses. J. Med. Internet Res. 2020, 22, e13810.

- Król, M.E.; Król, M. A Novel Machine Learning Analysis of Eye-Tracking Data Reveals Suboptimal Visual Information Extraction from Facial Stimuli in Individuals with Autism. Neuropsychologia 2019, 129, 397–406.

- Bulat, A.; Tzimiropoulos, G. How Far Are We from Solving the 2D & 3D Face Alignment Problem? (And a Dataset of 230,000 3D Facial Landmarks). In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1021–1030.

- Ahuja, K.; Bose, A.; Jain, M.; Dey, K.; Joshi, A.; Achary, K.; Varkey, B.; Harrison, C.; Goel, M. Gaze-Based Screening of Autistic Traits for Adolescents and Young Adults Using Prosaic Videos. In Proceedings of the 3rd ACM SIGCAS Conference on Computing and Sustainable Societies, Guayaquil, Ecuador, 15–17 June 2020; p. 324.

- Wan, G.; Kong, X.; Sun, B.; Yu, S.; Tu, Y.; Park, J.; Lang, C.; Koh, M.; Wei, Z.; Feng, Z. Applying Eye Tracking to Identify Autism Spectrum Disorder in Children. J. Autism Dev. Disord. 2019, 49, 209–215.

- Elbattah, M.; Carette, R.; Dequen, G.; Guérin, J.-L.; Cilia, F. Learning Clusters in Autism Spectrum Disorder: Image-Based Clustering of Eye-Tracking Scanpaths with Deep Autoencoder. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 1417–1420.

- Van der Maaten, L.; Hinton, G. Visualizing Data Using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Reimann, G.E.; Walsh, C.; Csumitta, K.D.; McClure, P.; Pereira, F.; Martin, A.; Ramot, M. Gauging Facial Feature Viewing Preference as a Stable Individual Trait in Autism Spectrum Disorder. Autism Res. 2021, 14, 1670–1683.

- Liu, W.; Yu, X.; Raj, B.; Yi, L.; Zou, X.; Li, M. Efficient Autism Spectrum Disorder Prediction with Eye Movement: A Machine Learning Framework. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 649–655.

- Kang, J.; Han, X.; Hu, J.-F.; Feng, H.; Li, X. The Study of the Differences between Low-Functioning Autistic Children and Typically Developing Children in the Processing of the Own-Race and Other-Race Faces by the Machine Learning Approach. J. Clin. Neurosci. 2020, 81, 54–60.

- Kang, J.; Han, X.; Song, J.; Niu, Z.; Li, X. The Identification of Children with Autism Spectrum Disorder by SVM Approach on EEG and Eye-Tracking Data. Comput. Biol. Med. 2020, 120, 103722.

- Peng, H.; Long, F.; Ding, C. Feature Selection Based on Mutual Information Criteria of Max-Dependency, Max-Relevance, and Min-Redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- Wang, S.; Jiang, M.; Duchesne, X.M.; Laugeson, E.A.; Kennedy, D.P.; Adolphs, R.; Zhao, Q. Atypical Visual Saliency in Autism Spectrum Disorder Quantified through Model-Based Eye Tracking. Neuron 2015, 88, 604–616.

- Jiang, M.; Zhao, Q. Learning Visual Attention to Identify People with Autism Spectrum Disorder. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3267–3276.

- Hart, P.E.; Stork, D.G.; Duda, R.O. Pattern Classification; Wiley: Hoboken, NJ, USA, 2000.

- Tao, Y.; Shyu, M.-L. SP-ASDNet: CNN-LSTM Based ASD Classification Model Using Observer Scanpaths. In Proceedings of the 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 641–646.

- Duan, H.; Zhai, G.; Min, X.; Che, Z.; Fang, Y.; Yang, X.; Gutiérrez, J.; Callet, P.L. A Dataset of Eye Movements for the Children with Autism Spectrum Disorder. In Proceedings of the 10th ACM Multimedia Systems Conference, Amherst, MA, USA, 18–21 June 2019; pp. 255–260.

- Pan, J.; Ferrer, C.C.; McGuinness, K.; O’Connor, N.E.; Torres, J.; Sayrol, E.; Giro-i-Nieto, X. SalGAN: Visual Saliency Prediction with Generative Adversarial Networks. arXiv 2017, arXiv:1701.01081.

- Liaqat, S.; Wu, C.; Duggirala, P.R.; Cheung, S.S.; Chuah, C.-N.; Ozonoff, S.; Young, G. Predicting ASD Diagnosis in Children with Synthetic and Image-Based Eye Gaze Data. Signal Process. Image Commun. 2021, 94, 116198.

- Li, J.; Zhong, Y.; Ouyang, G. Identification of ASD Children Based on Video Data. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 367–372.

- Li, J.; Zhong, Y.; Han, J.; Ouyang, G.; Li, X.; Liu, H. Classifying ASD Children with LSTM Based on Raw Videos. Neurocomputing 2020, 390, 226–238.

- Yaneva, V.; Ha, L.A.; Eraslan, S.; Yesilada, Y.; Mitkov, R. Detecting Autism Based on Eye-Tracking Data from Web Searching Tasks. In Proceedings of the 15th International Web for All Conference, Lyon, France, 23–25 April 2018; pp. 1–10.

- Vabalas, A.; Gowen, E.; Poliakoff, E.; Casson, A.J. Applying Machine Learning to Kinematic and Eye Movement Features of a Movement Imitation Task to Predict Autism Diagnosis. Sci. Rep. 2020, 10, 8346.

- Lin, Y.; Gu, Y.; Xu, Y.; Hou, S.; Ding, R.; Ni, S. Autistic Spectrum Traits Detection and Early Screening: A Machine Learning Based Eye Movement Study. J. Child Adolesc. Psychiatr. Nurs. 2021.

- Drimalla, H.; Scheffer, T.; Landwehr, N.; Baskow, I.; Roepke, S.; Behnia, B.; Dziobek, I. Towards the Automatic Detection of Social Biomarkers in Autism Spectrum Disorder: Introducing the Simulated Interaction Task (SIT). NPJ Digit. Med. 2020, 3, 25.

- Zhao, Z.; Tang, H.; Zhang, X.; Qu, X.; Hu, X.; Lu, J. Classification of Children with Autism and Typical Development Using Eye-Tracking Data From Face-to-Face Conversations: Machine Learning Model Development and Performance Evaluation. J. Med. Internet Res. 2021, 23, e29328.

| Authors | Groups | N | Age | Machine Learning Models | Type of Study/Stimuli | Main Results |
|---|---|---|---|---|---|---|
| Jiang et al. (2019) | ASD | 23 | 8–17 years | RF | Emotion recognition study | Differences in response time and eye movements, but no difference in emotion recognition accuracy. High classification accuracy (86%). |
| | TD | 35 | 8–34 years | | | |
| Jiang et al. (2020) | ASD | 13 | M = 12.13 years | RF Regressor | Emotion recognition study | High prediction accuracy on the SRS-2 total score (R² = 0.325) and its subscales. RBS-R was predicted with high accuracy (R² = 0.302) for the total score but not for its subscales. |
| | ASD + ADHD | 8 | | | | |
| | ADHD | 3 | | | | |
| | TD | 36 | M = 12.50 years | | | |
| Nag et al. (2020) | ASD | 16 | 6–17 years | Elastic Net/Standard Logistic Regression Classifiers | Emotion recognition study | The model achieved a classification accuracy of 0.71 across all trials. |
| | TD | 17 | 8–17 years | | | |
| Król and Król (2019) | ASD | 21 | 11–29 years | Facial features landmark detection algorithm/DNN | Emotion recognition study | The emotion recognition task played the most important role in discriminating ASD from TD participants. |
| | TD | 23 | 10–21 years | | | |
| Ahuja et al. (2020) | ASD | 35 | 15–29 years | SVM/Multi-layer Perceptron Regressor | Video-based study: watching some ordinary videos | Individuals with an autism diagnosis were classified with high accuracy (92.5%). |
| | TD | 25 | 19–30 years | | | |
| Carette et al. (2017) | ASD | 17 | 8–10 years | LSTM | Video-based study: eye-tracking while watching a joint-attention video | The status of 83% of ASD patients was validated using a neural network. |
| | TD | 15 | | | | |
| Carette et al. (2019) | ASD | 29 | M = 8 years | NB, Logistic Regression, SVM, RF, ANN | Video-based study: watching stimulating videos for eye gaze | The single-hidden-layer model with 200 neurons performed best in discerning ASD participants (accuracy = 92%). |
| | TD | 30 | | | | |
| Wan et al. (2019) | ASD | 37 | 4–6 years | SVM | Video-based study: watching a muted video in which a woman was mouthing the alphabet | Only the difference in time spent fixating on the moving mouth and body was significant enough to differentiate ASD from TD children with high accuracy (85.1%). |
| | TD | 37 | | | | |
| Elbattah et al. (2019) | ASD | 29 | M = 7.88 years | k-means | Video-based study: stimuli from Carette et al. (2019) | The autoencoder provided better cluster separation, showing a relationship between faster eye movement and higher ASD symptom severity. |
| | TD | 30 | | | | |
| Reimann et al. (2021) | HFA | 33 | M = 20.25 years | ANN | Video-based study: two or more characters engaged in conversation | ASD participants spent less time looking at the center of the face, i.e., the nose. There were no significant differences from TD participants in eye and mouth looking time. |
| | TD | 36 | M = 20.83 years | | | |
| Tsuchiya et al. (2021) | ASD | 39 | M = 10.3 years | LOO method | Video-based study: social and preferential paradigms | An accuracy of 78% was achieved, and the area under the curve (AUC) of the best-fit algorithm was 0.84. Cross-validation showed an AUC of 0.74, and validation in the second control group yielded 0.91. |
| | TD | 102 | M = 9.5 years | | | |
| | Second control group | 24 | M = 10.4 years | | | |
| Liu et al. (2015) | ASD | 21 | M = 7.85 years | K-means, SVM | Image-based study: pictures of Chinese female faces | The SVM based on BoW histogram features, which combined variables obtained from k-means clustering, performed better in ASD discrimination (AUC = 0.92, accuracy = 86.89%). |
| | TD-age matched | 21 | M = 7.73 years | | | |
| | TD-IQ matched | 20 | M = 5.69 years | | | |
| Liu et al. (2016) | ASD | 29 | 4–11 years | K-means, RBF kernel SVM | Image-based study: memorising a number of faces (Caucasian and Chinese) and recognising them among additional Caucasian and Chinese faces | The RBF kernel SVM applied to all faces performed better than the SVMs applied to other-race faces or same-race faces (accuracy = 88.51%). |
| | TD-age matched | 29 | | | | |
| | TD-IQ matched | 29 | M = 5.74 years | | | |
| Vu et al. (2017) | ASD | 16 | 2–10 years | kNN | Image-based study: different types of content (social scene, human face and object) and time of exposure (1 s, 3 s, 5 s) | The “social scene” stimulus combined with a 5 s exposure time reached the highest classification accuracy (98.24%). |
| | TD | 16 | | | | |
| Kang et al. (2020a) | LFA | 77 | 3–6 years | K-means, SVM | Image-based study: watching images in random order, i.e., other-race faces, own-race strange faces and own-race familiar faces | Combining the three types of faces gave the highest classification accuracy, 84.17% (AUC = 0.89), with 120 features selected. |
| | TD | 80 | | | | |
| Kang et al. (2020b) | ASD | 49 | 3–6 years | SVM | Image-based/Electroencephalography (EEG) study: watching photos of an own-race and another-race young girl | The combination of eye-tracking data with EEG data reached the highest classification accuracy (85.44%) and AUC (0.93) with 32 features selected. |
| | TD | 48 | | | | |
| Wang et al. (2015) | HFA | 20 | M = 30.8 years | SVM | Image-based study: passively viewing natural scene images | People with ASD show atypical visual attention across multiple levels and categories of objects compared with TD people. |
| | TD | 19 | M = 32.3 years | | | |
| Jiang and Zhao (2017) | HFA | 20 | M = 30.8 years | Deep Neural Network (DNN), SVM | Image-based study: use of eye-tracking data from Wang et al. (2015). The Fisher score method was used to find the most discriminative images | An automatic approach contributed to a better understanding of the attention traits of people with ASD, without depending on any previously acquired knowledge of the disorder, with high accuracy (92%). |
| | TD | 19 | M = 32.3 years | | | |
| Tao and Shyu (2019) | ASD | 14 | M = 8 years | CNN, LSTM | Image-based study: Saliency4ASD grand challenge eye movement dataset (social and non-social images depicting either people, or objects, or naturalistic scenes) | The six-layer CNN-LSTM architecture with batch normalization achieved the best ASD discrimination performance (accuracy = 74.22%). |
| | TD | 14 | | | | |
| Liaqat et al. (2021) | ASD | 14 | M = 8 years | A synthetic saccade approach (STAR-FC) whose output was fed to a deep learning classifier; an image-based approach in which a sequence of fixation maps was fed into a CNN or an RNN | Image-based study: Saliency4ASD grand challenge eye movement dataset (social and non-social images depicting either people, or objects, or naturalistic scenes) | Image-based approaches performed slightly better than synthetic saccade approaches in both accuracy and AUC. A relatively high prediction accuracy was reached on the validation dataset (67.23%) and on the test dataset (62.13%). |
| | TD | 14 | | | | |
| He et al. (2021) | HFA | 26 | M = 5.08 years | kNN | Image-based study: computerised visual-orienting task with gaze and non-gaze directional cues | Performance: TD > HFA > LFA. Non-gaze directional cues also contributed to group distinction, suggesting that people with ASD show domain-general visual-orienting deficits. Classification accuracy of 81.08%. |
| | LFA | 24 | M = 4.98 years | | | |
| | TD | 24 | M = 5.24 years | | | |
| Li et al. (2018) | ASD | 53 | 4–7 years | SVM | Image-based study: images of children’s mothers | SVM on 40 video frames was the best model (accuracy = 93.7%). |
| | TD | 136 | 6–8 years | | | |
| Li et al. (2019) | ASD Dataset 1 | 53 | 4–7 years | SVM, LSTM | Image-based study: images of children’s mothers | LSTM in combination with accumulative histograms on dataset 2 achieved the best ASD discrimination performance (accuracy = 92.60%). |
| | ASD Dataset 2 | 83 | | | | |
| | TD | 136 | 6–8 years | | | |
| Yaneva et al. (2018) | HFA | 18 | M = 37 years | Logistic Regression | Web-browsing study: eye-movement observation while browsing and searching on specific web pages | The Search task achieved a best performance of 0.75 and the Browse task a best performance of 0.71 when training on selected media took place. |
| | TD | 18 | M = 33.6 years | | | |
| Yaneva et al. (2020) | HFA | 19 | M = 41 years | Logistic Regression | Web-browsing study: eye-movement observation while browsing (30 s) and synthesizing information (<120 s) on specific web pages | The Search task gave the best results (0.75); the first Browse task and the Synthesis task scored slightly lower (0.74 and 0.73, respectively), whereas the Browse task of the present study scored lower still (0.65). |
| | TD | 25 | M = 32.2 years | | | |
| Canavan et al. (2017) | Low/High ASD risk | 94 | 2–10 years | Random Regression Forests, C4.5 DT, PART | Gaze and demographic features study | High classification accuracy was reached with PART (96.2%), C4.5 (94.94%) and Random Regression Forest (93.25%) when outliers were removed. |
| | Low/Medium/High ASD risk | 71 | 11–20 years | | | |
| | Medium ASD risk | 68 | 21–30 years | | | |
| | Medium ASD risk | 4 | 31–40 years | | | |
| | ASD | 20 | 60+ years | | | |
| Vabalas et al. (2020) | Training ASD | 15 | M = 33.1 years | SVM | Movement imitation study | ASD and non-ASD participants were classified with 73% accuracy using kinematic measures and with 70% accuracy using eye movement. Combining eye-tracking and kinematic measures increased accuracy to 78%. |
| | Training TD | 15 | M = 32.2 years | | | |
| | Test ASD | 7 | M = 28.16 years | | | |
| | Test TD | 7 | M = 27.90 years | | | |
| Lin et al. (2021) | TD (preliminary experiment) | 107 | M = 24.84 years | Logistic regression, NB, kNN, SVM, DT, RF, GBDT and an Ensemble Model | VR interaction study: a scenario with real-life objects depicting a couple in the garden with their dog | The Ensemble Model achieved the best performance in the preliminary experiment (accuracy = 0.73). 77% of ASD participants in the test experiment were effectively verified as showing high levels of autistic traits. |
| | ASD (test experiment) | 22 | M = 12.68 years | | | |
| Drimalla et al. (2020) | ASD | 37 | 22–62 years | RF | Social Interaction Task (SIT) study: sitting in front of a computer screen and taking part in a short conversation with a woman whose part had been recorded beforehand | Facial expressions and vocal characteristics provided the best accuracy (73%), sensitivity (67%), and specificity (79%). Gaze behavior did not provide evidence of significant group differences. |
| | TD | 43 | 18–49 years | | | |
| Zhao et al. (2021) | ASD | 20 | M = 99.6 months | SVM, LDA, DT, RF | Face-to-face conversation study: a structured interview with a female interviewer | The SVM classifier achieved the highest accuracy (92.31%) using only three features, i.e., length of total session, mouth in the first session, whole body in the third session. |
| | TD | 23 | M = 108.8 months | | | |
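
Several rows above report accuracy, sensitivity, and specificity for a two-class (ASD vs. TD) classifier. As a reminder of how these three figures relate to one another, here is a minimal sketch that treats ASD as the positive class. The `ConfusionCounts` type, the function names, and the counts are all hypothetical and chosen only for illustration; none of them is taken from any of the reviewed studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion counts, with ASD treated as the positive class."""
    tp: int  # ASD participants classified as ASD
    fn: int  # ASD participants classified as TD
    tn: int  # TD participants classified as TD
    fp: int  # TD participants classified as ASD


def accuracy(c: ConfusionCounts) -> float:
    """Share of all participants that were classified correctly."""
    return (c.tp + c.tn) / (c.tp + c.fn + c.tn + c.fp)


def sensitivity(c: ConfusionCounts) -> float:
    """Share of ASD participants that were classified as ASD."""
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Share of TD participants that were classified as TD."""
    return c.tn / (c.tn + c.fp)


# Hypothetical counts for illustration only (not data from any study).
example = ConfusionCounts(tp=8, fn=2, tn=7, fp=3)

if __name__ == "__main__":
    print(f"accuracy    = {accuracy(example):.2f}")
    print(f"sensitivity = {sensitivity(example):.2f}")
    print(f"specificity = {specificity(example):.2f}")
```

Because accuracy pools both groups, it can look high even when sensitivity or specificity is poor in studies with unbalanced group sizes, which is one reason to read the three figures together where a study reports them.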

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kollias, K.-F.; Syriopoulou-Delli, C.K.; Sarigiannidis, P.; Fragulis, G.F. The Contribution of Machine Learning and Eye-Tracking Technology in Autism Spectrum Disorder Research: A Systematic Review. Electronics 2021, 10, 2982. https://doi.org/10.3390/electronics10232982
