Research on Target Localization Method of CRTS-III Slab Ballastless Track Plate Based on Machine Vision

Abstract

1. Introduction

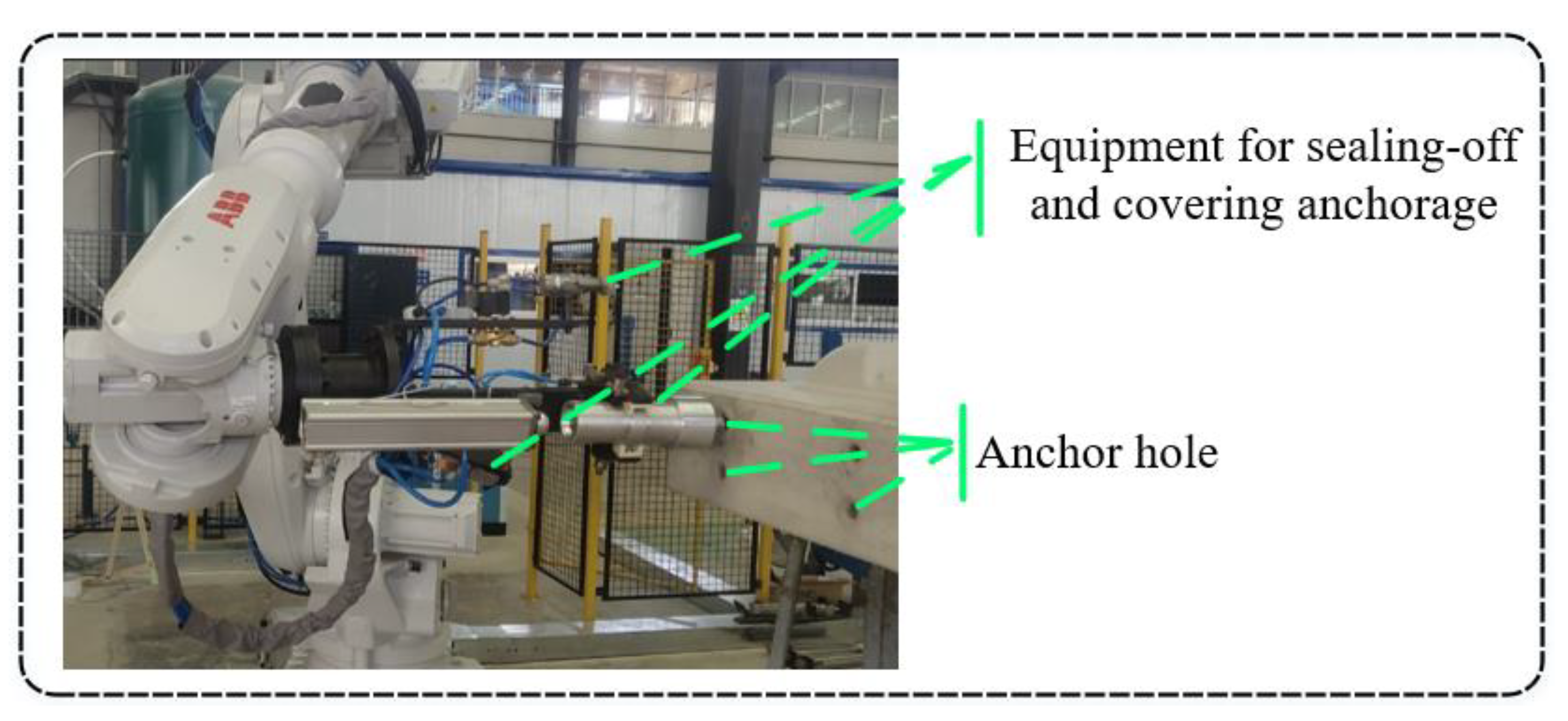

- We design a novel automated anchor sealing platform based on vision guidance to reduce labor costs and improve product quality (Section 2.1);

- We establish an efficient, accurate, and simple method for locating the CRTS-III slab ballastless track plate based on edge feature points (Section 2.2);

- We design and implement an affordable visual localization system, based on a monocular camera and machine vision software, in the anchor sealing platform to correct the robot's end-effector coordinate system. Furthermore, we evaluate the system’s effectiveness in a production environment (Section 3).
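To illustrate how edge feature points can yield a planar plate pose (x, y, θ), here is a minimal Python sketch. It assumes, without confirmation from the text, that feature points have already been extracted from two adjacent, roughly perpendicular plate edges and expressed in one planar frame. The sketch fits a straight line to each edge by total least squares, takes the intersection as the reference corner (x, y), and uses the direction of the first edge as θ. The names `fit_line`, `intersect`, `locate_corner` and `pose_offset` are hypothetical, and the authors' actual algorithm may differ.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, theta in degrees)


def fit_line(points: List[Point]) -> Tuple[Point, Point]:
    """Total-least-squares line fit; returns (centroid, unit direction)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two edge points")
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    # Angle of the major principal axis of the 2x2 scatter matrix, in
    # (-90, 90] degrees; this line minimizes perpendicular distances.
    phi = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return (cx, cy), (math.cos(phi), math.sin(phi))


def intersect(p1: Point, d1: Point, p2: Point, d2: Point) -> Point:
    """Intersection of the lines p1 + t*d1 and p2 + s*d2."""
    cross = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(cross) < 1e-12:
        raise ValueError("edges are (nearly) parallel")
    wx, wy = p2[0] - p1[0], p2[1] - p1[1]
    t = (wx * d2[1] - wy * d2[0]) / cross
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])


def locate_corner(edge_a: List[Point], edge_b: List[Point]) -> Pose:
    """Hypothetical pose: corner of the two fitted edges plus edge_a's angle."""
    pa, da = fit_line(edge_a)
    pb, db = fit_line(edge_b)
    x, y = intersect(pa, da, pb, db)
    theta = math.degrees(math.atan2(da[1], da[0]))
    return (x, y, theta)


def pose_offset(measured: Pose, reference: Pose) -> Pose:
    """(dx, dy, dtheta) of a measured pose relative to a taught reference."""
    return tuple(m - r for m, r in zip(measured, reference))


if __name__ == "__main__":
    # Hypothetical edge points: one horizontal edge and one vertical edge.
    edge_a = [(10.0, 5.0), (20.0, 5.0), (30.0, 5.0), (40.0, 5.0)]
    edge_b = [(5.0, 10.0), (5.0, 20.0), (5.0, 30.0)]
    pose = locate_corner(edge_a, edge_b)  # approximately (5.0, 5.0, 0.0)
    print(pose, pose_offset(pose, (5.0, 5.0, 0.0)))
```

The offset (Δx, Δy, Δθ) between a measured pose and a taught reference pose is the kind of quantity a controller could use to shift the robot's working coordinate system; the sketch stops short of that robot-side correction and of the hand–eye transform from image to robot coordinates.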

2. Materials and Methods

2.1. Platform Overview

2.2. Visual Localization Method Design

2.2.1. Region of Interest

2.2.2. Image Preprocessing

2.2.3. Feature Extraction and Target Localization

2.2.4. Hand–Eye Calibration

3. Results and Discussion

3.1. Evaluation of Repeatable Positioning Accuracy

3.2. Evaluation of Temporal Performance

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Evaluation Items | Δx (mm) | Δy (mm) | Δθ (°) |
|---|---|---|---|
| Data Range | −0.137 to 0.108 | −0.129 to 0.116 | −0.091 to 0.115 |
| AM | −0.004 | 0.002 | 0.010 |
| MAD | 0.046 | 0.038 | 0.030 |
| SD | 0.055 | 0.048 | 0.038 |
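The table summarizes the spread of repeated localization results. The minimal Python sketch below shows how such summary statistics could be computed from a list of per-trial deviations. It assumes that AM is the arithmetic mean, MAD the mean absolute deviation about that mean, and SD the sample standard deviation; the table does not define these abbreviations, and the sample values in the sketch are hypothetical, not the measured data.

```python
import statistics
from typing import Dict, Sequence


def summarize(deviations: Sequence[float]) -> Dict[str, float]:
    """Range, AM, MAD and SD of repeated positioning deviations.

    Assumed definitions: AM = arithmetic mean, MAD = mean absolute
    deviation about AM, SD = sample standard deviation (n - 1).
    """
    if len(deviations) < 2:
        raise ValueError("need at least two trials")
    am = statistics.fmean(deviations)
    mad = statistics.fmean([abs(d - am) for d in deviations])
    sd = statistics.stdev(deviations)
    return {"min": min(deviations), "max": max(deviations),
            "AM": am, "MAD": mad, "SD": sd}


if __name__ == "__main__":
    # Hypothetical Δx deviations in mm (illustrative only, not measured data).
    dx = [-0.1, 0.0, 0.1, 0.2]
    print(summarize(dx))
```

The same function applies unchanged to the Δy and Δθ columns, and to per-cycle processing times such as those in the timing table.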

| Data Range (ms) | AM (ms) | MAD (ms) | SD (ms) |
|---|---|---|---|
| 563.45 to 583.15 | 571.27 | 3.27 | 4.21 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Wu, W.; Zheng, L.; Wang, S.; Zhang, Q.; Wang, Q. Research on Target Localization Method of CRTS-III Slab Ballastless Track Plate Based on Machine Vision. Electronics 2021, 10, 3033. https://doi.org/10.3390/electronics10233033
