Multi-Language Spam/Phishing Classification by Email Body Text: Toward Automated Security Incident Investigation

Abstract

1. Introduction

2. Related Work

2.1. Email Preprocessing

2.2. Email Classification Solutions

3. Research on Text-Based Spam/Phishing Email Classification Solution

3.1. Email Dataset Preparation

3.2. Research Methodology and Results

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Spam and Phishing in Q3 2020. Available online: https://securelist.com/spam-and-phishing-in-q3-2020/99325/ (accessed on 15 November 2020).

- 2020 Cyber Security Statistics. Available online: https://purplesec.us/resources/cyber-security-statistics/ (accessed on 15 November 2020).

- Social Engineering & Email Phishing–The 21st Century’s #1 Attack? Available online: https://www.wizlynxgroup.com/news/2020/08/27/social-engineering-email-phishing-21st-century-n1-cyber-attack/ (accessed on 15 November 2020).

- Carmona-Cejudo, J.M.; Baena-García, M.; del Campo-Avila, J.; Morales-Bueno, R. Feature extraction for multi-label learning in the domain of email classification. In Proceedings of the 2011 IEEE Symposium on Computational Intelligence and Data Mining (CIDM), Paris, France, 11–15 April 2011; pp. 30–36.

- Goel, S.; Williams, K.; Dincelli, E. Got phished? Internet security and human vulnerability. J. Assoc. Inf. Syst. 2017, 18, 22–44.

- Aassal, A.E.; Moraes, L.; Baki, S.; Das, A.; Verma, R. Anti-phishing pilot at ACM IWSPA 2018: Evaluating performance with new metrics for unbalanced datasets. In Proceedings of the IWSPA-AP Anti Phishing Shared Task Pilot 4th ACM IWSPA, Tempe, AZ, USA, 21 March 2018; pp. 2–10.

- El Aassal, A.; Baki, S.; Das, A.; Verma, R.M. An In-Depth Benchmarking and Evaluation of Phishing Detection Research for Security Needs. IEEE Access 2020, 8, 22170–22192.

- Abu-Nimeh, S.; Nappa, D.; Wang, X.; Nair, S. A comparison of machine learning techniques for phishing detection. In Proceedings of the Anti-Phishing Working Groups 2nd Annual eCrime Researchers Summit, Pittsburgh, PA, USA, 4–5 October 2007; pp. 60–69.

- L’Huillier, G.; Weber, R.; Figueroa, N. Online phishing classification using adversarial data mining and signaling games. In Proceedings of the ACM SIGKDD Workshop on CyberSecurity and Intelligence Informatics, Paris, France, 28 June–1 July 2009; pp. 33–42.

- Peng, T.; Harris, I.; Sawa, Y. Detecting phishing attacks using natural language processing and machine learning. In Proceedings of the 2018 IEEE 12th International Conference on Semantic Computing (ICSC), Laguna Hills, CA, USA, 31 January–2 February 2018; IEEE: New York, NY, USA, 2018; pp. 300–301.

- Weinberger, K.; Dasgupta, A.; Langford, J.; Smola, A.; Attenberg, J. Feature hashing for large scale multitask learning. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 1113–1120.

- Zareapoor, M.; Seeja, K.R. Feature extraction or feature selection for text classification: A case study on phishing email detection. Int. J. Inf. Eng. Electron. Bus. 2015, 7, 60.

- Smadi, S.; Aslam, N.; Zhang, L. Detection of online phishing email using dynamic evolving neural network based on reinforcement learning. Decis. Support Syst. 2018, 107, 88–102.

- Toolan, F.; Carthy, J. Feature selection for spam and phishing detection. In Proceedings of the 2010 eCrime Researchers Summit, Dallas, TX, USA, 18–20 October 2010; IEEE: New York, NY, USA, 2010; pp. 1–12.

- Verma, R.M.; Zeng, V.; Faridi, H. Data Quality for Security Challenges: Case Studies of Phishing, Malware and Intrusion Detection Datasets. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 2605–2607.

- Smadi, S.; Aslam, N.; Zhang, L.; Alasem, R.; Hossain, M.A. Detection of phishing emails using data mining algorithms. In Proceedings of the 2015 9th International Conference on Software, Knowledge, Information Management and Applications (SKIMA), Kathmandu, Nepal, 15–17 December 2015; IEEE: New York, NY, USA, 2015; pp. 1–8.

- Akinyelu, A.A.; Adewumi, A.O. Classification of phishing email using random forest machine learning technique. J. Appl. Math. 2014, 2014.

- Gangavarapu, T.; Jaidhar, C.D.; Chanduka, B. Applicability of machine learning in spam and phishing email filtering: Review and approaches. Artif. Intell. Rev. 2020, 53, 5019–5081.

- Li, X.; Zhang, D.; Wu, B. Detection method of phishing email based on persuasion principle. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020; Volume 1, pp. 571–574.

- Verma, P.; Goyal, A.; Gigras, Y. Email phishing: Text classification using natural language processing. Comput. Sci. Inf. Technol. 2020, 1, 1–12.

- Sonowal, G. Phishing Email Detection Based on Binary Search Feature Selection. SN Comput. Sci. 2020, 1.

- Ablel-Rheem, D.M.; Ibrahim, A.O.; Kasim, S.; Almazroi, A.A.; Ismail, M.A. Hybrid Feature Selection and Ensemble Learning Method for Spam Email Classification. Int. J. 2020, 9, 217–223.

- Zamir, A.; Khan, H.U.; Mehmood, W.; Iqbal, T.; Akram, A.U. A feature-centric spam email detection model using diverse supervised machine learning algorithms. Electron. Libr. 2020, 38, 633–657.

- Gaurav, D.; Tiwari, S.M.; Goyal, A.; Gandhi, N.; Abraham, A. Machine intelligence-based algorithms for spam filtering on document labeling. Soft Comput. 2020, 24, 9625–9638.

- Saidani, N.; Adi, K.; Allili, M.S. A Semantic-Based Classification Approach for an Enhanced Spam Detection. Comput. Secur. 2020, 94, 101716.

- Jáñez-Martino, F.; Fidalgo, E.; González-Martínez, S.; Velasco-Mata, J. Classification of Spam Emails through Hierarchical Clustering and Supervised Learning. arXiv 2020, arXiv:2005.08773.

- Dada, E.G.; Bassi, J.S.; Chiroma, H.; Adetunmbi, A.O.; Ajibuwa, O.E. Machine learning for email spam filtering: Review, approaches and open research problems. Heliyon 2019, 5, e01802.

- Pérez-Díaz, N.; Ruano-Ordas, D.; Fdez-Riverola, F.; Méndez, J.R. Wirebrush4SPAM: A novel framework for improving efficiency on spam filtering services. Softw. Pract. Exp. 2013, 43, 1299–1318.

- Wu, C.H. Behavior-based spam detection using a hybrid method of rule-based techniques and neural networks. Expert Syst. Appl. 2009, 36, 4321–4330.

- Enron Email Dataset. Available online: https://www.cs.cmu.edu/~enron/ (accessed on 22 October 2020).

- SpamAssassin Dataset. Available online: https://spamassassin.apache.org/ (accessed on 22 October 2020).

- Nazario Dataset. Available online: https://www.monkey.org/~jose/phishing/ (accessed on 23 October 2020).

- UCI Machine Learning Repository. Available online: https://archive.ics.uci.edu/ml/datasets.php (accessed on 28 October 2020).

- Asquith, A.; Horsman, G. Let the robots do it!–Taking a look at Robotic Process Automation and its potential application in digital forensics. Forensic Sci. Int. Rep. 2019, 1, 100007.

- Hayes, D.; Kyobe, M. The Adoption of Automation in Cyber Forensics. In Proceedings of the 2020 Conference on Information Communications Technology and Society (ICTAS), Durban, South Africa, 11–12 March 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

- Syarif, I.; Prugel-Bennett, A.; Wills, G. SVM parameter optimization using grid search and genetic algorithm to improve classification performance. Telkomnika 2016, 14, 1502.

- Vinitha, V.S.; Renuka, D.K. Feature Selection Techniques for Email Spam Classification: A Survey. In Proceedings of the International Conference on Artificial Intelligence, Smart Grid and Smart City Applications (AISGSC), Coimbatore, India, 3–5 January 2019; Springer: Cham, Switzerland, 2020; pp. 925–935.

- Mendez, J.R.; Cotos-Yanez, T.R.; Ruano-Ordas, D. A new semantic-based feature selection method for spam filtering. Appl. Soft Comput. 2019, 76, 89–104.

| Paper | Classification Categories | Classification Method | Dataset | Max. F-Score, % |
|---|---|---|---|---|
| El Aassal et al. [9] | phishing, ham | SVM, RF, DT, NB, LR, kNN, other | Enron [28], SpamAssassin [29], Nazario [30] | 99.95 |
| Li et al. [31] | phishing, ham | DT, NB, kNN | SpamAssassin, Nazario | 97.30 |
| Verma et al. [32,33] | phishing, ham | SVM, RF, DT, NB, LR, kNN | SpamAssassin, Nazario | 99.00 |
| Sonowal et al. [6] | phishing, ham | RF, other | Nazario | 97.78 |
| Gangavarapu et al. [34] | spam + phishing, ham | SVM, RF, NB, other | SpamAssassin, Nazario | 99.40 |
| Gaurav et al. [21] | spam, ham | RF, DT, NB | Enron, UCI Machine Learning Repository [35] | 87.00 |
| Ablel-Rheem et al. [36] | spam, ham | DT, NB, other | UCI Machine Learning Repository | 94.40 |
| Saidani et al. [24] | spam, ham | SVM, RF, DT, NB, kNN, other | Enron | 98.90 |
| Jáñez-Martino et al. [37] | spam, ham | SVM, NB, LR | SpamAssassin | 95.40 |
| Zamir et al. [23] | spam, ham | SVM, RF, DT, other | SpamAssassin | 97.20 |

| Initial Dataset | Language | Spam Emails (Before Balancing) | Phishing Emails (Before Balancing) | Total (Before Balancing) | Spam Emails (After Balancing) | Phishing Emails (After Balancing) | Total (After Balancing) |
|---|---|---|---|---|---|---|---|
| SpamAssassin + Nazario | English | 692 | 182 | 874 | 150 | 150 | 300 |
| SpamAssassin + Nazario | Lithuanian (translated) | 692 | 182 | 874 | 150 | 150 | 300 |
| SpamAssassin + Nazario | Russian (translated) | 692 | 182 | 874 | 150 | 150 | 300 |
| VilniusTech | English | 559 | 205 | 864 | 200 | 200 | 400 |
| VilniusTech | Lithuanian | 40 | 38 | 78 | 35 | 35 | 70 |
| VilniusTech | Russian | 18 | 19 | 37 | 15 | 15 | 30 |
| Total | | 2693 | 808 | 3601 | 700 | 700 | 1400 |
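The table reports how many spam and phishing emails each subset held before and after balancing it to equal class sizes, but not how the retained emails were chosen. The sketch below assumes plain random undersampling without replacement; the function name `undersample`, the `(text, label)` record layout, the placeholder email texts and the fixed seed are all illustrative assumptions, not the authors' procedure.

```python
import random
from collections import defaultdict


def undersample(records, per_class, seed=0):
    """Randomly keep exactly `per_class` records of every label.

    `records` is a list of (text, label) tuples. Random undersampling,
    the tuple layout and the fixed seed are illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded so the selection is reproducible
    by_label = defaultdict(list)
    for text, label in records:
        by_label[label].append((text, label))

    balanced = []
    for label, items in sorted(by_label.items()):
        if len(items) < per_class:
            raise ValueError(f"only {len(items)} '{label}' emails, need {per_class}")
        balanced.extend(rng.sample(items, per_class))  # sample without replacement
    rng.shuffle(balanced)  # mix the classes instead of returning one block per label
    return balanced


# Placeholder texts with the VilniusTech Lithuanian counts (40 spam, 38 phishing)
subset = [(f"spam email {i}", "spam") for i in range(40)]
subset += [(f"phishing email {i}", "phishing") for i in range(38)]
balanced = undersample(subset, per_class=35)
print(len(balanced))  # 70
```

Calling it with `per_class=150` for each SpamAssassin + Nazario language variant and with 200, 35 and 15 for the VilniusTech subsets would yield the after-balancing counts in the table; raising an error when a class is too small keeps the function from silently returning an unbalanced subset.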

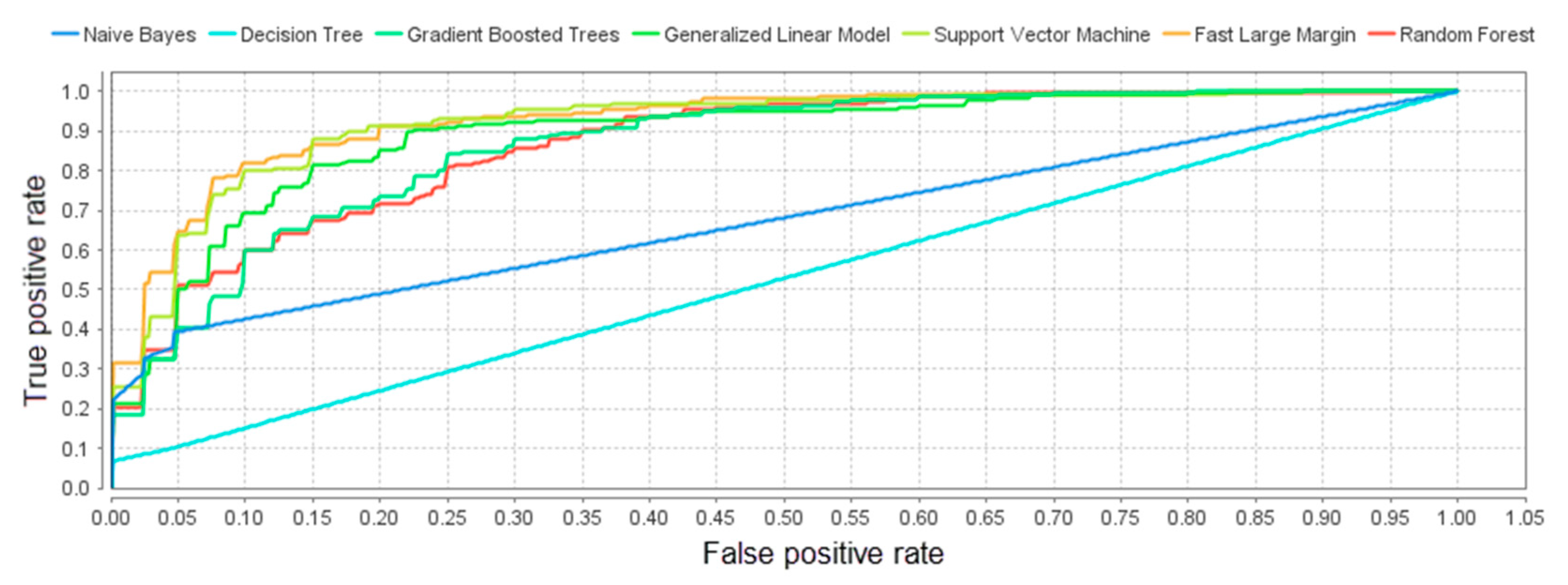

| Method | Accuracy, % | Precision, % | Recall, % | F-Score, % | AUC, % | Training Time (1000 Rows), s | Scoring Time (1000 Rows), s |
|---|---|---|---|---|---|---|---|
| Naïve Bayes | 59.8 | 93.0 | 21.5 | 34.7 | 67.7 | 0.269 | 14 |
| Generalized Linear Model | 82.8 | 79.6 | 88.7 | 83.9 | 88.9 | 0.831 | 10 |
| Fast Large Margin | 83.2 | 79.1 | 90.7 | 84.4 | 92.5 | 0.157 | 15 |
| Decision Tree | 54.0 | 100.0 | 6.1 | 11.5 | 52.9 | 0.419 | 9 |
| Random Forest | 57.2 | 100.0 | 12.7 | 22.4 | 86.4 | 5.000 | 28 |
| Gradient Boost Trees | 57.0 | 93.0 | 13.7 | 23.5 | 98.2 | 15.000 | 9 |
| Support Vector Machine | 84.0 | 78.0 | 95.2 | 85.6 | 91.8 | 2.000 | 19 |
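The F-score column combines precision and recall, which is why the Decision Tree, despite 100% precision, scores only 11.5%. Assuming the column is the standard F1 score (the harmonic mean of precision and recall), it can be recomputed as sketched below; the helper name `f_score` and its `beta` parameter are illustrative. Recomputing from the rounded table values can differ from a reported F-score by a few tenths of a point.

```python
def f_score(precision, recall, beta=1.0):
    """F-beta score of precision and recall (both in %).

    beta=1 gives the F1 score, the harmonic mean of the two values.
    """
    b2 = beta * beta
    denominator = b2 * precision + recall
    if denominator == 0:
        return 0.0  # precision and recall are both zero
    return (1 + b2) * precision * recall / denominator


# Precision and recall (%) taken from the results table
glm = f_score(79.6, 88.7)   # Generalized Linear Model
tree = f_score(100.0, 6.1)  # Decision Tree
print(f"GLM: {glm:.1f}%, Decision Tree: {tree:.1f}%")  # GLM: 83.9%, Decision Tree: 11.5%
```

Because the harmonic mean is dominated by the smaller of the two values, a classifier that labels almost everything as spam can reach perfect precision on phishing while its F-score stays close to its recall.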

| | True Spam | True Phishing | Class Precision |
|---|---|---|---|
| Predicted Spam | 662 | 101 | 86.76% |
| Predicted Phishing | 38 | 599 | 94.03% |
| Class Recall | 94.57% | 85.57% | |
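The percentages in the confusion matrix follow directly from its counts: each row percentage is the precision of the predicted class, and each bottom-row percentage is the recall of the true class. The sketch below recomputes them from the 1400 balanced emails; the nested-dictionary layout and the helper names are illustrative choices.

```python
# confusion[predicted][true], counts copied from the confusion matrix above
confusion = {
    "spam": {"spam": 662, "phishing": 101},
    "phishing": {"spam": 38, "phishing": 599},
}
labels = ["spam", "phishing"]


def class_precision(cm, label):
    """Share of emails predicted as `label` that truly belong to it."""
    return cm[label][label] / sum(cm[label].values())


def class_recall(cm, label):
    """Share of emails truly in `label` that were predicted as `label`."""
    return cm[label][label] / sum(cm[pred][label] for pred in cm)


def accuracy(cm):
    """Share of all emails on the diagonal (correct predictions)."""
    correct = sum(cm[label][label] for label in cm)
    total = sum(sum(row.values()) for row in cm.values())
    return correct / total


for label in labels:
    print(f"{label}: precision {class_precision(confusion, label):.2%}, "
          f"recall {class_recall(confusion, label):.2%}")
print(f"accuracy {accuracy(confusion):.2%}")
```

Running it prints 86.76% precision and 94.57% recall for spam, 94.03% precision and 85.57% recall for phishing, and an overall accuracy of 90.07% (1261 of 1400 emails classified correctly).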

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rastenis, J.; Ramanauskaitė, S.; Suzdalev, I.; Tunaitytė, K.; Janulevičius, J.; Čenys, A. Multi-Language Spam/Phishing Classification by Email Body Text: Toward Automated Security Incident Investigation. Electronics 2021, 10, 668. https://doi.org/10.3390/electronics10060668

Rastenis J, Ramanauskaitė S, Suzdalev I, Tunaitytė K, Janulevičius J, Čenys A. Multi-Language Spam/Phishing Classification by Email Body Text: Toward Automated Security Incident Investigation. Electronics. 2021; 10(6):668. https://doi.org/10.3390/electronics10060668

Chicago/Turabian Style: Rastenis, Justinas, Simona Ramanauskaitė, Ivan Suzdalev, Kornelija Tunaitytė, Justinas Janulevičius, and Antanas Čenys. 2021. "Multi-Language Spam/Phishing Classification by Email Body Text: Toward Automated Security Incident Investigation" Electronics 10, no. 6: 668. https://doi.org/10.3390/electronics10060668

APA Style: Rastenis, J., Ramanauskaitė, S., Suzdalev, I., Tunaitytė, K., Janulevičius, J., & Čenys, A. (2021). Multi-Language Spam/Phishing Classification by Email Body Text: Toward Automated Security Incident Investigation. Electronics, 10(6), 668. https://doi.org/10.3390/electronics10060668