Pixel Pair-Wise Fragile Image Watermarking Based on HC-Based Absolute Moment Block Truncation Coding

Abstract

1. Introduction

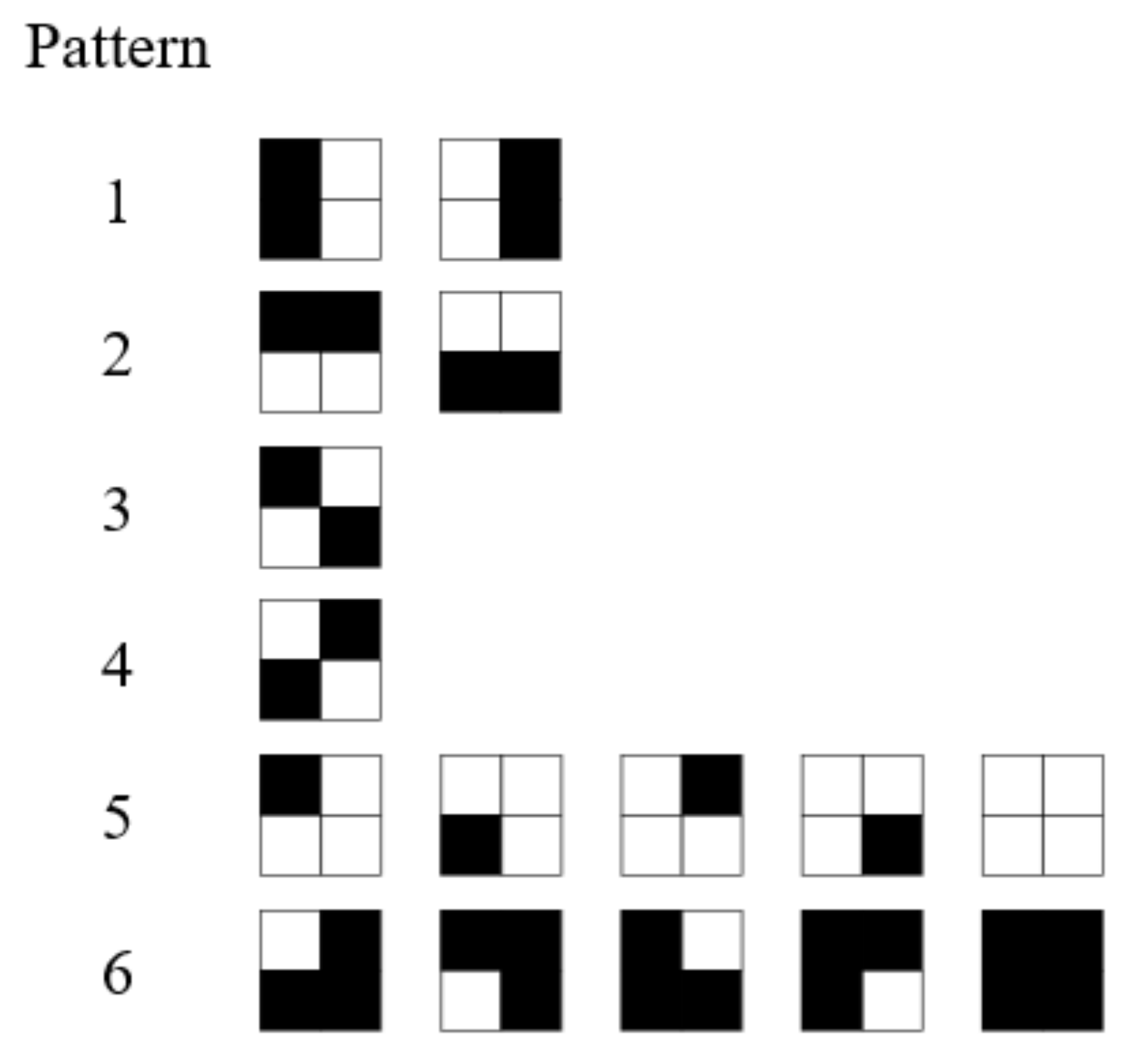

2. HC-Based Absolute Moment Block Truncation Coding
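As background, the classic AMBTC encoder of Lema and Mitchell represents each image block by a bitmap and two quantization levels. Pixels at or above the block mean are marked in the bitmap and reconstructed with the mean of that "high" group, and the remaining pixels are reconstructed with the mean of the "low" group. The Python sketch below shows only this classic step. It is not the HC-based variant proposed in this paper, and the helper names `ambtc_encode` and `ambtc_decode` are hypothetical.

```python
import numpy as np

def ambtc_encode(block):
    """Classic AMBTC: return (low level, high level, bitmap) for one image block."""
    block = np.asarray(block, dtype=np.float64)
    mean = block.mean()
    bitmap = block >= mean                  # True marks pixels at or above the block mean
    if bitmap.all():                        # flat block: a single level represents it
        level = int(round(mean))
        return level, level, bitmap
    high = int(round(block[bitmap].mean()))     # mean of the "high" group
    low = int(round(block[~bitmap].mean()))     # mean of the "low" group
    return low, high, bitmap

def ambtc_decode(low, high, bitmap):
    """Rebuild the block: high level where the bitmap is True, low level elsewhere."""
    return np.where(bitmap, high, low).astype(np.uint8)

# Example on a 2 x 2 block
low, high, bitmap = ambtc_encode([[10, 200], [12, 198]])
print(low, high)                                  # 11 199
print(ambtc_decode(low, high, bitmap).tolist())   # [[11, 199], [11, 199]]
```

The two group means are equivalent to the textbook AMBTC formulas based on the block mean and the first absolute central moment, so each block costs only two levels plus one bit per pixel.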

3. Proposed Pixel Pair-Wise Fragile Watermarking Scheme

3.1. Watermark Embedding

3.1.1. Recovery Information Generation

3.1.2. Embedding Strategy

- i. To embed (0111)₂ into pixel pair (4, 4): first convert (0111)₂ into its decimal value, 7, and then search for the coordinate that is closest to (4, 4) and whose V value equals 7. Coordinate (5, 5) is found, since it is the closest coordinate to (4, 4) that maps to 7.

- ii. To embed (1011)₂ into pixel pair (5, 6): first convert (1011)₂ into its decimal value, 11, and then search for the coordinate that is closest to (5, 6) and whose V value equals 11. Since the original coordinate already satisfies V(5, 6) = 11, the pixel pair remains unchanged.

- iii. To embed (0011)₂ into pixel pair (6, 2): first convert (0011)₂ into its decimal value, 3, and then search for the coordinate that is closest to (6, 2) and whose V value equals 3. In this case, two candidates map to 3, namely (5, 4) and (8, 3). The coordinate (8, 3) is selected according to the search path shown in Figure 8.
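The embedding search in the examples above can be sketched in Python as follows. The paper's reference matrix V and the search path of Figure 8 are not given in this section, so the sketch assumes a hypothetical V(x, y) = (x + 4y) mod 16, which places every value 0–15 in each 4 × 4 window, and breaks ties by squared Euclidean distance and then by coordinate order. The helpers `v_value`, `embed_nibble`, and `extract_nibble` are hypothetical names.

```python
from itertools import product

def v_value(x: int, y: int) -> int:
    # HYPOTHETICAL reference matrix V; the paper's actual matrix is not reproduced here.
    # Any 4 x 4 window of this function contains all 16 values 0..15.
    return (x + 4 * y) % 16

def embed_nibble(pair, bits, radius=3, lo=0, hi=255):
    """Move a pixel pair to the nearest (x', y') whose V value equals the 4 secret bits."""
    target = int(bits, 2)                      # e.g. "0111" -> 7
    x, y = pair
    best = None
    for dx, dy in product(range(-radius, radius + 1), repeat=2):
        nx, ny = x + dx, y + dy
        if not (lo <= nx <= hi and lo <= ny <= hi):
            continue                           # keep both pixels in the 8-bit range
        if v_value(nx, ny) != target:
            continue
        # ASSUMED tie-break: smaller squared distance first, then smaller coordinates.
        # (The paper resolves ties with the search path of its Figure 8.)
        key = (dx * dx + dy * dy, nx, ny)
        if best is None or key < best:
            best = key
    if best is None:
        raise ValueError("no coordinate with the target V value inside the search window")
    return best[1], best[2]

def extract_nibble(pair) -> str:
    """Recover the 4 embedded bits by reading V at the (possibly modified) pixel pair."""
    return format(v_value(*pair), "04b")

print(embed_nibble((4, 4), "0111"))            # (3, 5) under this hypothetical V
```

Because the coordinates depend entirely on the reference matrix, this sketch does not reproduce the (5, 5) of example i; it only illustrates the mechanism. Extraction needs no side information: the receiver reads V at the received pixel pair, and a mismatch with the expected bits is what signals tampering.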

3.2. Detection and Recovery of Tampered Area

3.2.1. Tampered Pixel Detection

3.2.2. Tampered Pixel Recovery

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Fridrich, J.; Goljan, M. Images with self-correcting capabilities. In Proceedings of the 1999 International Conference on Image Processing (Cat. 99CH36348), Kobe, Japan, 24–28 October 1999; pp. 792–796.

- Zhu, X.Z.; Ho, T.S.; Marziliano, P. A New Semi-fragile Image Watermarking with Robust Tampering Restoration Using Irregular Sampling. Signal Process. Image Commun. 2007, 22, 515–528.

- Zhang, X.; Wang, S. Fragile Watermarking with Error-Free Restoration Capability. IEEE Trans. Multimed. 2008, 10, 1490–1499.

- Zhang, X.; Wang, S.; Feng, G. Fragile watermarking scheme with extensive content restoration capability. In International Workshop on Digital Watermarking; Springer: Berlin/Heidelberg, Germany, 2009; pp. 268–278.

- Lee, T.-Y.; Lin, S.D. Dual watermark for image tamper detection and recovery. Pattern Recognit. 2008, 41, 3497–3506.

- Yang, C.-W.; Shen, J.-J. Recover the tampered image based on VQ indexing. Signal Process. 2010, 90, 331–343.

- Zhang, X.; Wang, S.; Qian, Z.; Feng, G. Reference Sharing Mechanism for Watermark Self-Embedding. IEEE Trans. Image Process. 2011, 20, 485–495.

- Raj, I.K. Image Data Hiding in Images Based on Interpolative Absolute Moment Block Truncation Coding. Math. Model. Sci. Comput. 2012, 283, 456–463.

- Singh, D.; Shivani, S.; Agarwal, S. Self-embedding pixel wise fragile watermarking scheme for image authentication. In International Conference on Intelligent Interactive Technologies and Multimedia; Springer: Berlin/Heidelberg, Germany, 2013; Volume 10, pp. 111–122.

- Yang, S.; Qin, C.; Qian, Z.; Xu, B. Tampering detection and content recovery for digital images using halftone mechanism. In Proceedings of the 2014 Tenth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kitakyushu, Japan, 27–29 August 2014; pp. 130–133.

- Chang, C.C.; Liu, Y.; Nguyen, T.S. A novel turtle shell based scheme for data hiding. In Proceedings of the 2014 Tenth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kitakyushu, Japan, 27–29 August 2014; pp. 89–93.

- Dhole, V.S.; Patil, N.N. Self embedding fragile watermarking for image tampering detection and image recovery using self recovery blocks. In Proceedings of the 2015 International Conference on Computing Communication Control and Automation, Pune, India, 26–27 February 2015; pp. 752–757.

- Qin, C.; Wang, H.; Zhang, X.; Sun, X. Self-embedding fragile watermarking based on reference-data interleaving and adaptive selection of embedding mode. Inf. Sci. 2016, 373, 233–250.

- Manikandan, V.M.; Masilamani, V. A context dependent fragile watermarking scheme for tamper detection from de-mosaicked color images. In Proceedings of the ICVGIP ’16: Tenth Indian Conference on Computer Vision, Graphics and Image Processing, Madurai, India, 18–22 December 2016; pp. 1–8.

- Qin, C.; Ji, P.; Wang, J.; Chang, C.-C. Fragile image watermarking scheme based on VQ index sharing and self-embedding. Multimed. Tools Appl. 2016, 76, 2267–2287.

- Qin, C.; Ji, P.; Zhang, X.; Dong, J.; Wang, J. Fragile image watermarking with pixel-wise recovery based on overlapping embedding strategy. Signal Process. 2017, 138, 280–293.

- Bravo-Solorio, S.; Calderon, F.; Li, C.-T.; Nandi, A.K. Fast fragile watermark embedding and iterative mechanism with high self-restoration performance. Digit. Signal Process. 2018, 73, 83–92.

- Lin, C.C.; Huang, Y.H.; Tai, W.L. A Novel Hybrid Image Authentication Scheme Based on Absolute Moment Block Truncation Coding. Multimed. Tools Appl. 2017, 76, 463–488.

- Li, W.; Lin, C.-C.; Pan, J.-S. Novel image authentication scheme with fine image quality for BTC-based compressed images. Multimed. Tools Appl. 2015, 75, 4771–4793.

- Liu, X.-L.; Lin, C.-C.; Yuan, S.-M. Blind Dual Watermarking for Color Images’ Authentication and Copyright Protection. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 1047–1055.

- Huang, R.; Liu, H.; Liao, X.; Sun, S. A divide-and-conquer fragile self-embedding watermarking with adaptive payload. Multimed. Tools Appl. 2019, 78, 26701–26727.

- Wang, X.; Li, X.; Pei, Q. Independent Embedding Domain Based Two-stage Robust Reversible Watermarking. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 2406–2417.

- Roy, S.S.; Basu, A.; Chattopadhyay, A. On the Implementation of A Copyright Protection Scheme Using Digital Image Watermarking. Multimed. Tools Appl. 2020, 79, 13125–13138.

- Su, G.D.; Chang, C.C.; Chen, C.C. A hybrid-Sudoku based fragile watermarking scheme for image tampering detection. Multimed. Tools Appl. 2021, 1–23.

- Huy, P.Q.; Anh, D.N. Saliency guided image watermarking for anti-forgery. In Soft Computing for Biomedical Applications and Related Topic; Springer: Cham, Switzerland, 2021; pp. 183–195.

- Chang, C.-C.; Lin, C.-C.; Su, G.-D. An effective image self-recovery based fragile watermarking using self-adaptive weight-based compressed AMBTC. Multimed. Tools Appl. 2020, 79, 24795–24824.

- Gola, K.K.; Gupta, B.; Iqbal, Z. Modified RSA Digital Signature Scheme for Data Confidentiality. Int. J. Comput. Appl. 2014, 106, 13–16.

- National Institute of Standards and Technology. Secure Hash Standard (SHS). In Federal Information Processing Standards; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2015.

- Stallings, W. Cryptography and Network Security Principles and Practices, 7th ed.; Pearson Education: London, UK, 2016.

- Delp, E.; Mitchell, O. Image Compression Using Block Truncation Coding. IEEE Trans. Commun. 1979, 27, 1335–1342.

- Lema, M.; Mitchell, O. Absolute Moment Block Truncation Coding and Its Application to Color Images. IEEE Trans. Commun. 1984, 32, 1148–1157.

- Huh, J.-H.; Seo, Y.-S. Understanding Edge Computing: Engineering Evolution with Artificial Intelligence. IEEE Access 2019, 7, 164229–164245.

- Lee, H.; Park, S.-H.; Yoo, J.-H.; Jung, S.-H.; Huh, J.-H. Face Recognition at a Distance for a Stand-Alone Access Control System. Sensors 2020, 20, 785.

| Images | TP | TN | FP | FN | TPR | FPR |
|---|---|---|---|---|---|---|
| Lena | 4950 | 256882 | 110 | 202 | 0.9608 | 0.0004 |
| Elaine | 1447 | 260183 | 121 | 393 | 0.7864 | 0.0004 |
| Baboon | 8740 | 251707 | 287 | 1410 | 0.8611 | 0.0011 |
| Airplane | 3229 | 257870 | 459 | 586 | 0.8464 | 0.0018 |
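Assuming the usual definitions TPR = TP / (TP + FN) and FPR = FP / (FP + TN), the two rate columns can be recomputed from the raw counts. The Python sketch below reproduces the TPR column to four decimal places and the FPR column to within 0.0001, and it checks that the four counts in every row sum to 262,144 = 512 × 512. The helper name `detection_rates` is hypothetical.

```python
# Detection counts copied from the table above: (TP, TN, FP, FN) per image.
TABLE = {
    "Lena": (4950, 256882, 110, 202),
    "Elaine": (1447, 260183, 121, 393),
    "Baboon": (8740, 251707, 287, 1410),
    "Airplane": (3229, 257870, 459, 586),
}

def detection_rates(tp, tn, fp, fn):
    """Return (TPR, FPR) under the standard confusion-matrix definitions."""
    tpr = tp / (tp + fn)   # share of tampered pixels that were flagged
    fpr = fp / (fp + tn)   # share of untouched pixels that were wrongly flagged
    return tpr, fpr

for name, (tp, tn, fp, fn) in TABLE.items():
    assert tp + tn + fp + fn == 512 * 512   # every row accounts for 262,144 pixels
    tpr, fpr = detection_rates(tp, tn, fp, fn)
    print(f"{name:8s} TPR={tpr:.4f} FPR={fpr:.6f}")
```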

| Schemes | PSNR of Watermarked Image | PSNR of Recovered Image | Condition of Recovery |
|---|---|---|---|
| Scheme in [4] | 37.9 dB | [26, 29] dB | α < 59% |
| Scheme in [7] | 37.9 dB | 40.7 dB | α < 24% |
| Scheme in [10] | 51.3 dB | [24, 36] dB | α < 50% |
| Scheme in [18] | 37.9 dB | +∞ | α < 26% |
| Scheme in [23] | [37.92, 54.13] dB | [28.63, 46.98] dB | α < 50% |
| Scheme in [28] | 49.76 dB | 34.65 dB | α < 50% |
| Proposed Scheme | 46.8 dB | [32, 42] dB | α < 50% |
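Both quality columns above are PSNR values. Assuming 8-bit grayscale images (peak value 255), PSNR = 10 log10(255² / MSE), so an exact recovery has MSE = 0 and therefore infinite PSNR, which is how the +∞ entry reads. A minimal Python sketch, with the hypothetical helper name `psnr`:

```python
import numpy as np

def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    if ref.shape != tst.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")                     # identical images: error-free recovery
    return float(10.0 * np.log10(peak ** 2 / mse))

# Example: an image compared with itself and with a copy that is off by 1 everywhere
original = np.zeros((4, 4), dtype=np.uint8)
print(psnr(original, original))                  # inf
print(round(psnr(original, original + 1), 2))    # 48.13
```

Converting to float64 before subtracting avoids the wrap-around that uint8 arithmetic would otherwise produce for negative differences.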

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lin, C.-C.; He, S.-L.; Chang, C.-C. Pixel P Air-Wise Fragile Image Watermarking Based on HC-Based Absolute Moment Block Truncation Coding. Electronics 2021, 10, 690. https://doi.org/10.3390/electronics10060690
