Improving Model Capacity of Quantized Networks with Conditional Computation

Abstract

:1. Introduction

2. Related Works

2.1. Model Binarization and Quantization

2.2. Conditional Computations

3. Background

3.1. Learnable Step Size Quantization

3.2. Dynamic Convolution

4. Dynamic Quantized Convolution

5. Experiment Results

6. Analysis

6.1. Memory Usage and Run-Time Analysis

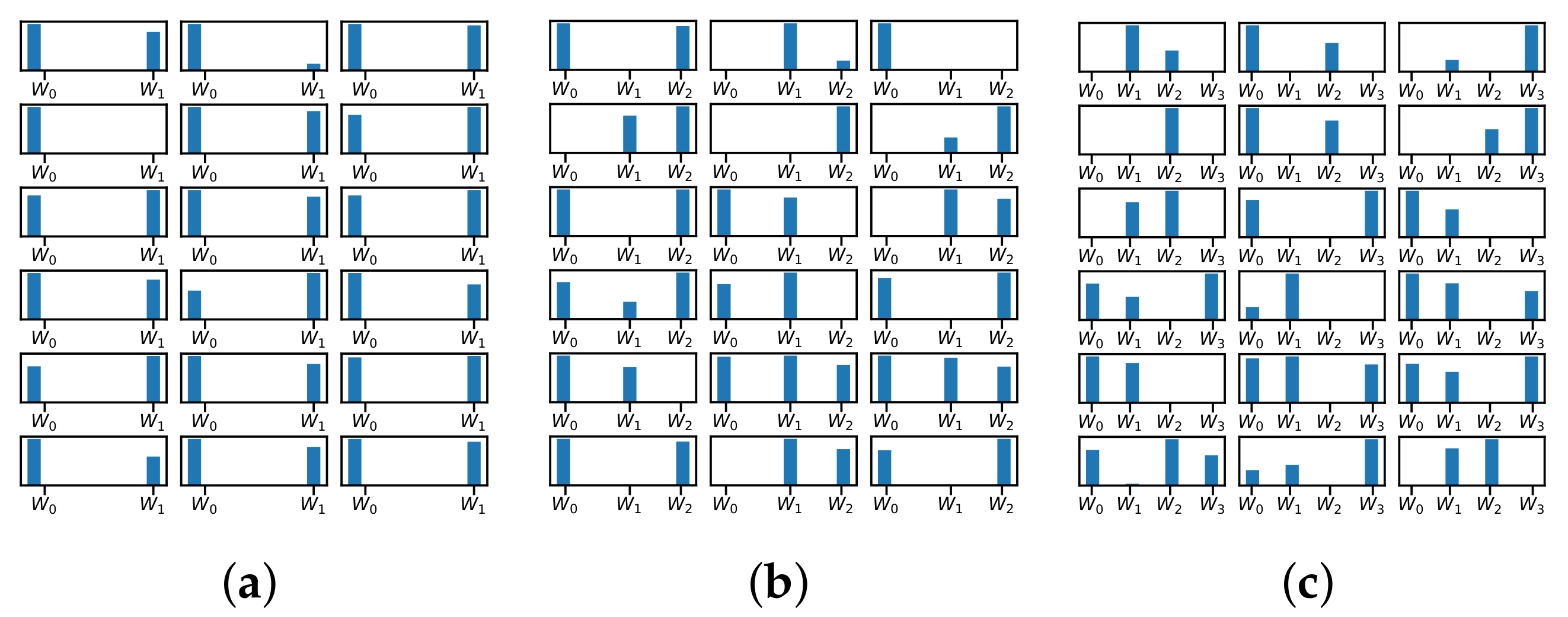

6.2. Attention Visualization

6.3. Training Complexity

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| CondConv | Conditionally Parameterized Convolutions [40] |

| DNN | Deep Neural Network |

| DCNNs | Deep Convolutional Neural Networks |

| DY-CNNs | Dynamic Convolutional Neural Networks [39] |

| DQConv | Dynamic Quantized Convolution |

| GPU | Graphics Processing Unit |

| LSQ | Learned Step Size Quantization [12] |

| WTA | Winners-Take-All function |

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105. [Google Scholar] [CrossRef]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602. [Google Scholar]

- Senior, A.W.; Evans, R.; Jumper, J.; Kirkpatrick, J.; Sifre, L.; Green, T.; Qin, C.; Žídek, A.; Nelson, A.W.; Bridgland, A.; et al. Improved protein structure prediction using potentials from deep learning. Nature 2020, 577, 706–710. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. Tensorflow: A system for large-scale machine learning. In Proceedings of the 12th {USENIX} Symposium on Operating Systems Design and Implementation ({OSDI} 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Zhou, S.; Wu, Y.; Ni, Z.; Zhou, X.; Wen, H.; Zou, Y. Dorefa-net: Training low bitwidth convolutional neural networks with low bitwidth gradients. arXiv 2016, arXiv:1606.06160. [Google Scholar]

- Choi, J.; Wang, Z.; Venkataramani, S.; Chuang, P.I.J.; Srinivasan, V.; Gopalakrishnan, K. Pact: Parameterized clipping activation for quantized neural networks. arXiv 2018, arXiv:1805.06085. [Google Scholar]

- Wang, K.; Liu, Z.; Lin, Y.; Lin, J.; Han, S. Haq: Hardware-aware automated quantization with mixed precision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8612–8620. [Google Scholar]

- Esser, S.K.; McKinstry, J.L.; Bablani, D.; Appuswamy, R.; Modha, D.S. Learned step size quantization. arXiv 2019, arXiv:1902.08153. [Google Scholar]

- Gong, R.; Liu, X.; Jiang, S.; Li, T.; Hu, P.; Lin, J.; Yu, F.; Yan, J. Differentiable soft quantization: Bridging full-precision and low-bit neural networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 4852–4861. [Google Scholar]

- Courbariaux, M.; Bengio, Y.; David, J.P. Binaryconnect: Training deep neural networks with binary weights during propagations. arXiv 2015, arXiv:1511.00363. [Google Scholar]

- Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. Xnor-net: Imagenet classification using binary convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 525–542. [Google Scholar]

- Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv 2015, arXiv:1510.00149. [Google Scholar]

- He, Y.; Zhang, X.; Sun, J. Channel pruning for accelerating very deep neural networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1389–1397. [Google Scholar]

- Luo, J.H.; Wu, J.; Lin, W. Thinet: A filter level pruning method for deep neural network compression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5058–5066. [Google Scholar]

- Lee, D.; Kwon, S.J.; Kim, B.; Wei, G.Y. Learning low-rank approximation for cnns. arXiv 2019, arXiv:1905.10145. [Google Scholar]

- Long, X.; Ben, Z.; Zeng, X.; Liu, Y.; Zhang, M.; Zhou, D. Learning sparse convolutional neural network via quantization with low rank regularization. IEEE Access 2019, 7, 51866–51876. [Google Scholar] [CrossRef]

- Chen, W.; Wilson, J.; Tyree, S.; Weinberger, K.; Chen, Y. Compressing neural networks with the hashing trick. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2285–2294. [Google Scholar]

- Zoph, B.; Le, Q.V. Neural architecture search with reinforcement learning. arXiv 2016, arXiv:1611.01578. [Google Scholar]

- Wu, B.; Wang, Y.; Zhang, P.; Tian, Y.; Vajda, P.; Keutzer, K. Mixed precision quantization of convnets via differentiable neural architecture search. arXiv 2018, arXiv:1812.00090. [Google Scholar]

- Jin, Q.; Yang, L.; Liao, Z. Towards efficient training for neural network quantization. arXiv 2019, arXiv:1912.10207. [Google Scholar]

- Ding, R.; Chin, T.W.; Liu, Z.; Marculescu, D. Regularizing activation distribution for training binarized deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11408–11417. [Google Scholar]

- Lin, J.; Gan, C.; Han, S. Defensive quantization: When efficiency meets robustness. arXiv 2019, arXiv:1904.08444. [Google Scholar]

- Yang, J.; Shen, X.; Xing, J.; Tian, X.; Li, H.; Deng, B.; Huang, J.; Hua, X.S. Quantization networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7308–7316. [Google Scholar]

- Zhang, D.; Yang, J.; Ye, D.; Hua, G. Lq-nets: Learned quantization for highly accurate and compact deep neural networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 365–382. [Google Scholar]

- Lin, X.; Zhao, C.; Pan, W. Towards Accurate Binary Convolutional Neural Network. In Proceedings of the NIPS, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Zhuang, B.; Shen, C.; Tan, M.; Liu, L.; Reid, I. Structured binary neural networks for accurate image classification and semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 413–422. [Google Scholar]

- Wang, X.; Yu, F.; Dou, Z.Y.; Darrell, T.; Gonzalez, J.E. Skipnet: Learning dynamic routing in convolutional networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 409–424. [Google Scholar]

- Wu, Z.; Nagarajan, T.; Kumar, A.; Rennie, S.; Davis, L.S.; Grauman, K.; Feris, R. Blockdrop: Dynamic inference paths in residual networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8817–8826. [Google Scholar]

- Shazeer, N.; Mirhoseini, A.; Maziarz, K.; Davis, A.; Le, Q.; Hinton, G.; Dean, J. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. arXiv 2017, arXiv:1701.06538. [Google Scholar]

- Mullapudi, R.T.; Mark, W.R.; Shazeer, N.; Fatahalian, K. Hydranets: Specialized dynamic architectures for efficient inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8080–8089. [Google Scholar]

- Chen, Z.; Li, Y.; Bengio, S.; Si, S. You look twice: Gaternet for dynamic filter selection in cnns. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9172–9180. [Google Scholar]

- Guerra, L.; Zhuang, B.; Reid, I.; Drummond, T. Switchable Precision Neural Networks. arXiv 2020, arXiv:2002.02815. [Google Scholar]

- Jin, Q.; Yang, L.; Liao, Z. Adabits: Neural network quantization with adaptive bit-widths. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2146–2156. [Google Scholar]

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic relu. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 351–367. [Google Scholar]

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039. [Google Scholar]

- Yang, B.; Bender, G.; Le, Q.V.; Ngiam, J. Condconv: Conditionally parameterized convolutions for efficient inference. arXiv 2019, arXiv:1904.04971. [Google Scholar]

- Bulat, A.; Martinez, B.; Tzimiropoulos, G. High-Capacity Expert Binary Networks. arXiv 2020, arXiv:2010.03558. [Google Scholar]

- Kim, J.; Bhalgat, Y.; Lee, J.; Patel, C.; Kwak, N. Qkd: Quantization-aware knowledge distillation. arXiv 2019, arXiv:1911.12491. [Google Scholar]

- Bulat, A.; Tzimiropoulos, G. Xnor-net++: Improved binary neural networks. arXiv 2019, arXiv:1909.13863. [Google Scholar]

- Martinez, B.; Yang, J.; Bulat, A.; Tzimiropoulos, G. Training binary neural networks with real-to-binary convolutions. arXiv 2020, arXiv:2003.11535. [Google Scholar]

- Kim, H.; Kim, K.; Kim, J.; Kim, J. BinaryDuo: Reducing Gradient Mismatch in Binary Activation Network by Coupling Binary Activations. In Proceedings of the 8th International Conference on Learning Representations (ICLR 2020), Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

- He, X.; Mo, Z.; Cheng, K.; Xu, W.; Hu, Q.; Wang, P.; Liu, Q.; Cheng, J. Proxybnn: Learning binarized neural networks via proxy matrices. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Volume 2, pp. 7531–7542. [Google Scholar]

- Li, F.; Zhang, B.; Liu, B. Ternary weight networks. arXiv 2016, arXiv:1605.04711. [Google Scholar]

- Alemdar, H.; Leroy, V.; Prost-Boucle, A.; Pétrot, F. Ternary neural networks for resource-efficient AI applications. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2547–2554. [Google Scholar]

- Li, Y.; Dong, X.; Wang, W. Additive Powers-of-Two Quantization: A Non-uniform Discretization for Neural Networks. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

- Zhuang, B.; Liu, L.; Tan, M.; Shen, C.; Reid, I. Training quantized neural networks with a full-precision auxiliary module. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1488–1497. [Google Scholar]

- Zhuang, B.; Liu, L.; Tan, M.; Shen, C.; Reid, I. Training quantized network with auxiliary gradient module. arXiv 2019, arXiv:1903.11236. [Google Scholar]

- Jung, S.; Son, C.; Lee, S.; Son, J.; Han, J.J.; Kwak, Y.; Hwang, S.J.; Choi, C. Learning to quantize deep networks by optimizing quantization intervals with task loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4350–4359. [Google Scholar]

- Chen, Y.; Meng, G.; Zhang, Q.; Zhang, X.; Song, L.; Xiang, S.; Pan, C. Joint neural architecture search and quantization. arXiv 2018, arXiv:1811.09426. [Google Scholar]

- Zhuang, B.; Shen, C.; Tan, M.; Liu, L.; Reid, I. Towards effective low-bitwidth convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7920–7928. [Google Scholar]

- Dou, H.; Deng, Y.; Yan, T.; Wu, H.; Lin, X.; Dai, Q. Residual D2NN: Training diffractive deep neural networks via learnable light shortcuts. Opt. Lett. 2020, 45, 2688–2691. [Google Scholar] [CrossRef]

- Yu, J.; Yang, L.; Xu, N.; Yang, J.; Huang, T. Slimmable neural networks. arXiv 2018, arXiv:1812.08928. [Google Scholar]

- Yu, J.; Huang, T.S. Universally slimmable networks and improved training techniques. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 1803–1811. [Google Scholar]

- Du, K.; Zhang, Y.; Guan, H. From Quantized DNNs to Quantizable DNNs. arXiv 2020, arXiv:2004.05284. [Google Scholar]

- Ha, D.; Dai, A.; Le, Q.V. Hypernetworks. arXiv 2016, arXiv:1609.09106. [Google Scholar]

- Cai, H.; Gan, C.; Wang, T.; Zhang, Z.; Han, S. Once for All: Train One Network and Specialize it for Efficient Deployment. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

- Luo, P.; Zhanglin, P.; Wenqi, S.; Ruimao, Z.; Jiamin, R.; Lingyun, W. Differentiable dynamic normalization for learning deep representation. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 4203–4211. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical Report TR-2009; University of Toronto: Toronto, ON, Canada, 2009. [Google Scholar]

| Methods | Bit-Width (W/A) | |||

|---|---|---|---|---|

| 2/2 | 3/3 | 4/4 | ||

| CIFAR10 (FP: 92.86) | LSQ | 90.83 | 92.54 | 92.85 |

| DQConv (K = 3) | 91.82 | 92.89 | 93.07 | |

| DQConv (K = 4) | 91.76 | 92.96 | 93.03 | |

| CIFAR-100 (FP: 71.35) | LSQ | 68.26 | 71.32 | 71.49 |

| DQConv (K = 3) | 70.41 | 71.98 | 72.68 | |

| DQConv (K = 4) | 70.56 | 71.65 | 72.38 | |

| Dataset | Attention Structure | Bit-Width (W/A) | ||

|---|---|---|---|---|

| 2/2 | 3/3 | 4/4 | ||

| CIFAR-10 | Equation (6) | 91.88 | 92.72 | 92.94 |

| Equation (7) | 91.82 | 92.89 | 93.07 | |

| CIFAR-100 | Equation (6) | 69.79 | 71.49 | 72.05 |

| Equation (7) | 70.41 | 71.98 | 72.68 | |

| Bit-Width (W/A) | |||

|---|---|---|---|

| 2/2 | 3/3 | 4/4 | |

| LSQ (Baseline) | 68.26 | 71.32 | 71.49 |

| DQConv (K = 2) | 69.92 | 71.21 | 72.04 |

| DQConv (K = 3) | 70.41 | 71.98 | 72.68 |

| DQConv (K = 4) | 70.56 | 71.65 | 72.38 |

| DQConv (K = 5) | 70.21 | 71.5 | 71.89 |

| Configurations | Bit-Width (W/A) | ||

|---|---|---|---|

| 2/2 | 3/3 | 4/4 | |

| DQConv () + Full-Precision Attention | 70.41 | 71.98 | 72.68 |

| DQConv () + Quantized Attention | 70.14 | 71.64 | 71.89 |

| Configurations | Bit-Width (W/A) | ||

|---|---|---|---|

| 2/2 | 3/3 | 4/4 | |

| DQConv ( = 0.02) | 68.77 | 70.93 | 71.6 |

| DQConv ( = 0.5) | 70.41 | 71.98 | 72.68 |

| DQConv ( = 1.0) | 70.18 | 71.75 | 71.97 |

| DQConv ( = 5.0) | 69.83 | 71.56 | 72.07 |

| DQConv ( = 30) | 69.71 | 71.54 | 72.15 |

| DQConv ( = 30 → 1) | 70.27 | 71.76 | 72.33 |

| DQConv ( = 30 → 0.5) | 69.64 | 71.41 | 72.07 |

| Method | Bit-Width (W/A) | Attention Structure | # Params | Model Size (KB) | CR | MACs (M) |

|---|---|---|---|---|---|---|

| FP32 | 32/32 | - | 470,292 | 1790 | 1× | 69.13 |

| LSQ | 2/2 | - | 470,356 | 137 | 13.06× | 69.13 |

| 3/3 | - | 470,356 | 192 | 9.32× | 69.13 | |

| 4/4 | - | 470,356 | 252 | 7.10× | 69.13 | |

| DQConv (K = 2) | 2/2 | Equation (6) | 936,056 | 253.5 | 7.00× | 69.13 |

| 2/2 | Equation (7) | 948,360 | 256.5 | 6.97× | 69.14 | |

| 3/3 | Equation (6) | 936,056 | 367.2 | 4.87× | 69.13 | |

| 3/3 | Equation (7) | 948,360 | 371.8 | 4.81× | 69.14 | |

| 4/4 | Equation (6) | 936,056 | 481.0 | 3.72× | 69.13 | |

| 4/4 | Equation (7) | 948,360 | 487.1 | 3.61× | 69.14 | |

| DQConv (K = 3) | 2/2 | Equation (6) | 1,400,600 | 369.6 | 4.84× | 69.13 |

| 2/2 | Equation (7) | 1,412,096 | 372.5 | 4.80× | 69.14 | |

| 3/3 | Equation (6) | 1,400,600 | 541.4 | 3.30× | 69.13 | |

| 3/3 | Equation (7) | 1,412,096 | 545.7 | 3.28× | 69.14 | |

| 4/4 | Equation (6) | 1,400,600 | 713.2 | 2.50× | 69.13 | |

| 4/4 | Equation (7) | 1,412,096 | 719.0 | 2.48× | 69.14 | |

| DQConv (K = 4) | 2/2 | Equation (6) | 1,865,144 | 485.7 | 3.68× | 69.13 |

| 2/2 | Equation (7) | 1,875,832 | 488.45 | 3.66× | 69.14 | |

| 3/3 | Equation (6) | 1,865,144 | 715.6 | 2.50× | 69.13 | |

| 3/3 | Equation (7) | 1,875,832 | 719.6 | 2.48× | 69.14 | |

| 4/4 | Equation (6) | 1,865,144 | 945.5 | 1.89× | 69.13 | |

| 4/4 | Equation (7) | 1,875,832 | 950.9 | 1.88× | 69.14 | |

| DQConv (K = 5) | 2/2 | Equation (6) | 2,329,688 | 601.9 | 2.97× | 69.13 |

| 2/2 | Equation (7) | 2,339,568 | 604.3 | 2.96× | 69.14 | |

| 3/3 | Equation (6) | 2,329,688 | 889.8 | 2.01× | 69.13 | |

| 3/3 | Equation (7) | 2,339,568 | 893.5 | 2.00× | 69.14 | |

| 4/4 | Equation (6) | 2,329,688 | 1177.0 | 1.52× | 69.13 | |

| 4/4 | Equation (7) | 2,339,568 | 1182.7 | 1.51× | 69.14 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pham, P.; Chung, J. Improving Model Capacity of Quantized Networks with Conditional Computation. Electronics 2021, 10, 886. https://doi.org/10.3390/electronics10080886

Pham P, Chung J. Improving Model Capacity of Quantized Networks with Conditional Computation. Electronics. 2021; 10(8):886. https://doi.org/10.3390/electronics10080886

Chicago/Turabian StylePham, Phuoc, and Jaeyong Chung. 2021. "Improving Model Capacity of Quantized Networks with Conditional Computation" Electronics 10, no. 8: 886. https://doi.org/10.3390/electronics10080886