1. Introduction

Deep Learning requires Big Data to learn behaviors from infrequent edge cases or anomalies. One application making use of Big Data is vehicle automation, where information representing diverse scenarios is necessary to train algorithms resilient to infrequent and high-impact events.

While manufacturers log fleet data (e.g., Tesla captures customer telemetry [1,2]), it is difficult to validate that these data are “clean” (e.g., the common cases of drunk, drowsy, drugged, or distracted drivers might negatively impact a lane-holding algorithm). Even sober drivers with a poor understanding of the centerline of their vehicle may taint data. Further, the perception of “appropriate” morals and ethics is a driver of automated vehicle growth [3]. To meet the need for robust, diverse, and high-quality data reflecting safe and ethical driving, the capture of known-good, large-scale data is necessary. “Wisdom of the crowd” requires massive scale, particularly in critical systems [4], so companies may instead capture data from costly trained drivers to maximize quality at the expense of quantity.

There is a need to capture large volumes of high-quality data with minimal supervision, and for inexpensive physical test platforms to validate real-world edge-case performance. This manuscript proposes gamified simulation as a means of collecting bulk data for self-driving and a platform based on commodity hardware for real-world algorithm validation. Such a simulator could capture human- and AI-enabled data to train vision-based self-driving models and validate trained models’ domain adaptation from simulator to real-world without explicit transfer learning.

Simulation is well-established [1], and small-scale hardware already tests algorithms in lieu of full-scale vehicles [5,6], particularly for algorithms that may be dangerous or costly to test on a full-scale physical platform, such as collision avoidance or high-speed and inclement-weather operation. Our approach extends proven techniques to allow the generation of data from unskilled drivers and the validation of resulting algorithms on lower-cost and more widely accessible hardware than is used today. This enables large-scale, rapid data capture and real-world model validation that may then be translated to costlier, higher-fidelity test platforms, including full-scale vehicles.

We develop such a system and demonstrate its ability to generate data from untrained human drivers as well as AI-controlled agents. The simulated environment mimics a real-world laboratory, and game mechanics implicitly motivate quality data capture: players compete for high scores or the best time while collecting line-following training data. The result is a human-in-the-loop simulation enabling inexpensive (<$500 platform + <$150 game asset costs), rapid (fewer administrative hurdles to clear for test approval), and high-quality (crowdsourced, configurable, and supervised by game mechanics) data collection for certain use cases, relative to conventional simulated and full-scale data capture environments and trained safety drivers. Our holistic solution integrates domain randomization, automated data generation, and thoughtful game mechanics to facilitate the bulk capture of useful data and transferability to a near-disposable platform.

Our solution is unique in enforcing overt and latent rules in data collection: we learn from humans who have adapted to drive well in complex scenarios, while ensuring the data collected are of high quality by encouraging capture of useful data, automatically discarding low-quality data, disallowing specific actions, and enabling increased scale. Scoring mechanics encourage users to collect information for both common and edge-case scenarios, yielding valuable insight into common and low-frequency, high-risk events while the physical platform democratizes access to hardware-in-the-loop validation for budget-constrained developers.

This manuscript’s primary contribution is the toolchain comprising the simulator and physical validation hardware. Together these support a data-generating framework capable of simulating scenarios infeasible to capture in reality, with the benefit of semi-supervised (enforced by game mechanics) and crowdsourceable (from untrained and unskilled users) human-provided data that can be tested on real images. Indeed, crowdsourcing has demonstrated success within transportation: for mode identification, parking location, and more [7]. The unique combination of implicitly supervisory game mechanics and crowdsourcing enables an end-to-end software and hardware training and testing platform unlike those used in contemporary research. Proof-of-concept Convolutional Neural Networks, while effective demonstrators of the simulator’s ability to generate data that transfer to real hardware without explicit transfer learning, are peripheral contributions validating our claims of effective simulator and platform design.

2. Prior Art

2.1. Simulation and Gamification

One challenge in training AI systems is data availability, whether limited by the scale of instrumented systems or by the infrequency of low-likelihood events. To capture unpredictable driving events, Waymo, Tesla, and others collect data from costly, highly instrumented fleets [1,2,8]. To generate data at a lower cost, Waymo uses simulation to increase training data diversity [1], including not-yet-encountered scenarios. Simulation has been used to generate valuable data, particularly for deep learning [9,10]. However, simulated data abide by implicit and explicit rules, meaning algorithms may learn latent features that do not accurately mirror reality and may miss “long tail” events [11]. Though Waymo’s vehicles have reduced crash rates relative to those of human drivers [12], there is room for improvement, particularly in coping with chaotic real-world systems operated by irrational agents. Thoughtfully designed games lower data capture cost relative to physical driving, with game mechanics, increased data volume, and scenario generation supporting the observation of infrequent events.

Researchers modified Grand Theft Auto V (GTA V) [13] to capture vehicle speed, steering angle, and synthetic camera data [14], and Franke used game data to train radio-controlled vehicles to drive [15]. However, GTA V is inflexible, e.g., with respect to customizing the vehicle or sensors, and its data require manual capture and labeling.

Fridman’s DeepTraffic supports self-driving algorithm development [16]. However, DeepTraffic generates 2D information, and while DeepTraffic crowdsources data, it does not crowdsource human-operated training data.

The open-source VDrift [17] was used to create an optical flow dataset used to train a pairwise CRF model for image segmentation [18]. However, the tool was not designed with reconfigurability or data output in mind.

Another gamified simulation is SdSandbox and its derivatives [19,20,21], which generate steering/image pairs from virtual vehicles and environments. These tools generate unrealistic images, may or may not be human-in-the-loop, and are not designed to crowdsource training data.

CARLA [22] is a cross-platform game-based simulator emulating self-driving vehicles and offering programmable traffic and pedestrian scenarios. While CARLA is a flexible research tool, users are unmotivated to collect targeted data, limiting the ability to trust data “cleanliness”. Further, there is no physical analog to the in-game vehicle. A solution with game mechanics motivating user performance and a low-cost physical test platform would add research value.

Other task-specific elements of automated driving have been demonstrated in games and simulation, e.g., pedestrian detection [23,24] and stop sign detection [25]. Some simulators test the transferability of driving skills across varied virtual environments [26]. These approaches have not been integrated with physical testbeds, which could yield insight into real-world operations and prove that simulated data may be used to effectively train self-driving algorithms.

There is an opportunity to create an easy-to-use gamified simulator and associated low-cost physical platform for collecting self-driving data and validating model performance. A purpose-built, human-in-the-loop, customizable simulator capable of generating training data for different environments and crowdsourcing multiple drivers’ information would accelerate research, tapping into a large userbase capable of generating training data for both mundane and long-tail events.

Such a simulator could create a virtualized vehicle and synthetic sensor data, and present objectives encouraging “good behavior” or desirable (for training) “bad behavior”. For example, a user could gain points by staying within lane markers, by collecting coins placed along a trajectory, or by completing laps as quickly as possible, with penalties for collisions. Collected data would aid behavior-cloning self-driving models, in which input-output relationships are learned without explicit controller modeling. The simulator could be made variable through randomized starting locations, dynamic lighting conditions, and noisy surface textures, compared with more deterministic traditional simulators.
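The scoring incentives listed above can be combined into a single per-lap score. The sketch below is a minimal illustration, assuming hypothetical point values (`COIN_POINTS`, `COLLISION_PENALTY`, and so on) and a hypothetical `LapEvents` record; the simulator's actual scoring rules are not specified here.

```python
from dataclasses import dataclass

# Hypothetical point values chosen for illustration only.
COIN_POINTS = 10             # per coin collected along the trajectory
IN_LANE_POINTS_PER_S = 1.0   # per second spent inside the lane markers
COLLISION_PENALTY = 50       # per collision
LAP_TIME_TARGET_S = 120.0    # laps faster than this earn the difference as a bonus


@dataclass
class LapEvents:
    """Hypothetical summary of what happened during one lap."""
    coins_collected: int
    seconds_in_lane: float
    collisions: int
    lap_time_s: float


def score_lap(ev: LapEvents) -> float:
    """Reward coins, lane-keeping, and speed; penalize collisions."""
    score = ev.coins_collected * COIN_POINTS
    score += ev.seconds_in_lane * IN_LANE_POINTS_PER_S
    score -= ev.collisions * COLLISION_PENALTY
    score += max(0.0, LAP_TIME_TARGET_S - ev.lap_time_s)  # never a negative time bonus
    return score


# 5 coins, 40 s in lane, 1 collision, 60 s lap -> 50 + 40 - 50 + 60
print(score_lap(LapEvents(coins_collected=5, seconds_in_lane=40.0, collisions=1, lap_time_s=60.0)))  # 100.0
```

Because one collision costs more than several coins are worth, a score-maximizing player is nudged toward staying on the track rather than cutting corners.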

Algorithms trained on the resulting synthetic data will be more likely to learn invariant features, rather than features latent to the simulator design. The result will be improved algorithms capable of responding well to infrequent but impactful edge cases missed by other tools, while a physical test platform will validate model performance in the real-world and capture data for transfer learning (if necessary).

2.2. Training Deep Networks with Synthetic Data

To prove the simulator’s utility, we generate synthetic images and train deep learning behavior cloning models, then test those models on a low-cost hardware platform. This section explores training models using synthetic data and porting models to the real world.

Neural network training is data-intensive and typically involves manually collecting and annotating input. This process is time-consuming [27] and requires expert knowledge for some labels [28,29]. Generating high-quality, automatically labeled synthetic data can overcome these limitations. Techniques include Domain Randomization (DR) and Domain Adaptation (DA).

DR hypothesizes that a model trained on synthetic views augmented with random lighting conditions, backgrounds, and minor perturbations will generalize to real-world conditions. DR’s potential has been demonstrated in image-based tasks, including object detection [30], segmentation [31], and object pose estimation [32,33]. These methods render textured models onto synthetic or real image backgrounds (e.g., MS COCO [34]) with varying brightness and noise. The domain gap between synthetic and real images is reduced by increasing the generalizability of the trained model (small perturbations increase the likelihood of the model converging on invariant features).

Synthetic data also facilitate 3D vision tasks. For example, FlowNet3D [35] is trained on a synthetic dataset (FlyingThings3D [36]) to learn scene flow from point clouds, and generalizes to real LiDAR scans captured in the KITTI dataset [37]. Im2avatar [38] reconstructs voxelized 3D models from synthetic 2D views from ShapeNet [39], and the trained model produces convincing 3D models from realistic images of the PASCAL3D+ dataset [40].

Domain Adaptation (DA) uses a model trained on one source data distribution and applies that model to a different, related target distribution: for example, applying a lane-keeping model trained on synthetic images to real-world images for the same problem type. In some cases, models may be retrained on some data from the new domain using explicit transfer learning. In our case, we use DA to learn a model from a simulated distribution of driving data and adapt that model to a real-world context without explicit retraining.

For generating realistic data to support transfer learning without explicit capture of real-world data, Generative Adversarial Networks (GANs) [41] have been used to generate realistic data [42] and to adapt 3D pose estimators [43] and grasping algorithms [44]. This work shows promising results, but the adapted images present unrealistic details and noise artifacts.

We aim to develop a simulator, based on a game engine, capable of generating meaningful data to inform Deep Learning self-driving behavior cloning models capable of real-world operation, with the benefit of being able to crowdsource human control and trust the resulting input data as “clean”. These models clone human behavior to learn steering control visually from optical environmental input, such as road lines seen in RGB images. Such a system may learn the relationship between inputs, such as monocular RGB camera images, and outputs, such as steering angles. In this respect, both a vision model and an output process controller are implicitly learned.
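Behavior cloning reduces to supervised regression from observations to the actions a demonstrator took. As a deliberately tiny stand-in for the CNNs used here, the sketch below fits steering = w · x + b by ordinary least squares, assuming a single hypothetical scalar feature x (for example, the normalized horizontal offset of a lane line in the image) in place of raw RGB pixels.

```python
def fit_linear_policy(observations, steering):
    """Fit steering = w * x + b to demonstration pairs by ordinary least squares."""
    n = len(observations)
    if n < 2:
        raise ValueError("need at least two demonstrations")
    mean_x = sum(observations) / n
    mean_y = sum(steering) / n
    sxx = sum((x - mean_x) ** 2 for x in observations)
    if sxx == 0:
        raise ValueError("observations must vary")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(observations, steering))
    w = sxy / sxx
    b = mean_y - w * mean_x

    def policy(x):
        """Cloned controller: predict the demonstrator's steering for feature x."""
        return w * x + b

    return policy


# Hypothetical demonstrations: lane-line offset (-1..1) -> human steering command.
offsets = [-0.8, -0.4, 0.0, 0.4, 0.8]
steers = [-0.6, -0.3, 0.0, 0.3, 0.6]
policy = fit_linear_policy(offsets, steers)
```

A CNN replaces the hand-made feature with learned image features, but the training signal (the human's recorded steering) is the same, which is why the vision model and the controller end up learned together.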

3. Why Another Simulator?

While there are existing simulators [45], our Gamified Digital Simulator (GDS) has three key advantages.

GDS is designed for ease of use and self-supervision. A user can collect data (images and corresponding steering angles) without technical knowledge of the vehicle, simulator, or data capture needs. This expands GDS’s potential userbase over traditional simulators.

GDS provides an in-game AI training solution that can, without track knowledge, collect data independently. This allows some data to be generated automatically and with near-perfect routing, as described in Section 4.10.

GDS offers a resource-efficient simulation, adapting the graphical quality level of scene elements to the importance of each element in training a model. Elements such as the track and its texture (Section 4.1) are high quality, whereas the background (Section 4.3) uses low-polygon models and low-resolution textures. This reduces the processing power needed to run the GDS, expanding its applicability to compute-constrained devices.

4. The Gamified Digital Simulator: Development Methodology

This section details GDS’s development. Figure 1 overviews the GDS toolchain. The virtual car can be driven either by a human (using a gamepad) or by a rule-based AI. The simulator generates two types of data: (a) images from a virtual camera simulating the physical vehicle’s camera and (b) a CSV file with speed and steering angle details. To test that these virtual data are effective for training behavior cloning algorithms, we capture data and train models to operate a virtual vehicle agent in the GDS environment, then test these models on the physical platform to determine whether the learned models are effective in a different (physical) environment.

The GDS is built upon the cross-platform Unity3D game engine. The game consists of four scenes: (a) Main Menu, (b) Track Selection, (c) User Input Mode, and (d) AI Mode. The Main Menu and Track Selection scene canvases and UI elements help the user transition between modes. From the Track Selection scene, a user selects one of two identical playable scenes (tracks/environments), one with a human-operated vehicle and the other with an AI-operated vehicle. The AI Scene utilizes an in-game AI or an external script, e.g., running TensorFlow [46] or Keras [47] AI models, as described in Section 4.10 and Section 7.

We first explore the application design, and then describe the methodology for transforming the game into a tool for generating synthetic data for training a line following model. Gamification allows non-experts to provide high-volume semi-supervised training data. This approach is unique relative to conventional simulation in that it provides a means of crowdsourcing data from goal-driven humans, expediting behavior cloning from the “wisdom of the crowd”.

While designing the GDS, we considered it both a research tool and a game with a purpose [48,49], and therefore included elements to enhance the user experience (UX). The fun is “serious fun” (Figure 2), as defined by UX expert Nicole Lazzaro [50,51], where users play (or do boring tasks) to make a difference in the real world.

While the simulator cannot be released as open source due to license restrictions on some constituent assets, it is being used to capture data for academic studies related to training AVs and human-AV interaction, and these data will be made available to researchers. The detail provided in this section should allow experienced game developers to recreate a similar tool without extensive research. For researchers interested in implementing a similar software solution, the authors are happy to share source code with individuals or groups who have purchased the appropriate licenses for the required assets; please contact the authors for more information.

4.1. Road Surface

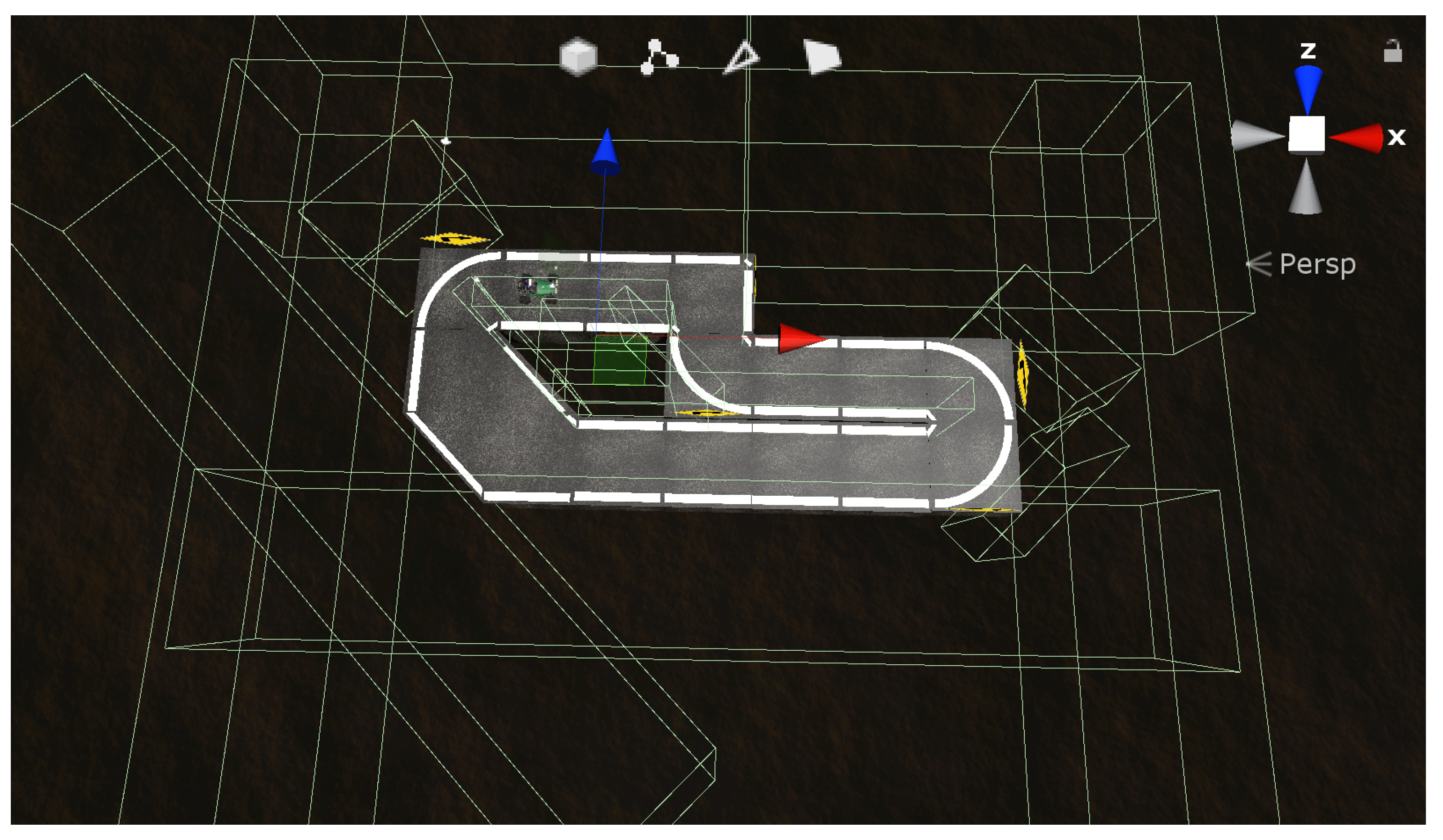

A road surface GameObject simulates a test track (Figure 3). We developed modular track segments using Unity’s cuboid elements and ProBuilder (Figure 4). Modules were given a realistic texture (Figure 5) that changes based upon ambient lighting and camera angles, minimizing the likelihood that the neural network learns behaviors based on tessellated edges.

To reduce overfitting of trained models, we implemented a form of “data augmentation” that uses Unity’s lerp function to blend in Gaussian noise, creating speckles that appear over time. A second script randomly manipulates the in-game light sources so that vehicles repeating trajectories capture varied training data. Both techniques support Domain Randomization and improve generalizability for domain adaptation (e.g., sim2real).
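A minimal Python sketch of the two randomization scripts follows. It assumes the road texture can be treated as a grid of grayscale texel values in [0, 1]; `lerp` mirrors the formula of Unity's `Mathf.Lerp` (which clamps `t` to [0, 1]), while `speckle_step`, `random_light`, and all default ranges are hypothetical names and values chosen for illustration, not the GDS scripts themselves.

```python
import random


def lerp(a, b, t):
    """Linear interpolation with t clamped to [0, 1], as in Unity's Mathf.Lerp."""
    t = min(1.0, max(0.0, t))
    return a + (b - a) * t


def speckle_step(texture, rng, rate=0.1, noise_std=0.2):
    """One frame of speckling: move each texel a fraction `rate` of the way
    toward a Gaussian-perturbed copy of itself, so noise accumulates over time."""
    return [
        [min(1.0, max(0.0, lerp(px, px + rng.gauss(0.0, noise_std), rate)))
         for px in row]
        for row in texture
    ]


def random_light(rng, intensity=(0.5, 1.5), max_tilt_deg=30.0):
    """Draw a new light intensity and tilt so repeated laps see different lighting."""
    return rng.uniform(*intensity), rng.uniform(-max_tilt_deg, max_tilt_deg)


rng = random.Random(42)
tex = [[0.5] * 4 for _ in range(4)]   # a flat gray 4x4 patch of road
for _ in range(30):                    # 30 frames of gradual speckling
    tex = speckle_step(tex, rng)
intensity, tilt = random_light(rng)
```

Applying a small `rate` every frame makes the speckles build up gradually rather than flickering, so consecutive training images stay temporally consistent while the texture still drifts across a session.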

4.2. Game Mechanics

Gamification encourages players to abide by latent and overt rules to yield higher-quality, crowdsourceable data.

On the rectangular track, we placed GameObject coins within the lane markers. Players were intrinsically motivated to collect coins for a chance to “win” (Figure 6), though this approach allowed non-sequential collection, with users exiting the track boundaries and reentering without penalty. Non-consecutive pickups could be disregarded, but failing to do so would contaminate the data.

We subsequently developed a system of colliders (green in Figure 7), invisible to the camera, that prevent the buggy from traveling outside the white lane markers, ensuring that collected data are always within the lane and reducing contamination. Crash data and preceding/trailing frames were also eliminated from training data.

4.3. Environment

The game has two purposes: to create a compelling user experience (UX) conducive to long play, and to create dynamic conditions that enhance domain randomization and prevent the neural network model from fitting to environmental features.

The game environment is a series of GameObjects and rendering lighting parameters. Objects include rocky surfaces and canyon models (Figure 8a) scaled and arranged to create a rocky mountain (Figure 8b). We included assets from the Unity Asset Store to excite players and create dynamic background images. These include a lava stream particle effect (Figure 8c,d) and animated earthquake models (Figure 8e,f). Combined, these assets create the experience of driving near a volcano. The dynamic background provides “noise” in training images, ensuring the neural network fits to only the most invariant features. Finally, a night Skybox is applied (Figure 8g). Game environment elements deemed non-critical to the simulation are low-polygon for efficiency.

The perceived hostility of the environment implicitly conveys the game mechanics: the buggy must not exit the track boundaries, or it will fall to its doom. This mechanic allows users to pick up and play the game without instruction.

4.4. Representative Virtual Vehicle

The virtual vehicle is a multi-part 3D model purchased from the Unity Asset Store and customized with CAD of the physical vehicle’s camera mount.

To make the buggy drivable, we created independent wheel controllers (Figure 9) using a plugin that simulates vehicle physics. Using this plugin, we set parameters including steering angles, crossover speed, steering coefficient, Ackermann percentage, flipover behavior, forward and side slip thresholds, and speed limiters. These parameters allowed us to tailor the in-game model to mimic the physical vehicle.

4.5. Simulated Cameras

The GDS uses a multi-camera system to simultaneously render the user view and export training data. The user camera provides a third-person perspective, while a second camera placed at the front of the buggy simulates the RC vehicle’s real camera in terms of location, resolution, and field of view (FoV). This camera generates the synthetic images used for training the neural network model and is calibrated in software. A third camera is placed above the vehicle, facing downwards. This camera provides an orthographic projection and, along with a RenderTexture, creates a minimap.
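Unity cameras are configured with a vertical field of view, whereas lens specifications are often quoted as horizontal (or diagonal) angles. Under an ideal pinhole (rectilinear) model, the conversion depends only on the image aspect ratio, as in the sketch below. This is an approximation: a 130-degree lens has substantial distortion, and whether that figure is horizontal or diagonal is an assumption to check against the camera datasheet.

```python
import math


def horizontal_to_vertical_fov(hfov_deg, width, height):
    """Vertical FoV (degrees) of a pinhole camera with the given horizontal FoV."""
    if not 0 < hfov_deg < 180:
        raise ValueError("pinhole model needs 0 < FoV < 180 degrees")
    aspect = width / height
    half_h = math.radians(hfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_h) / aspect))


# 160 x 120 images, as captured on the physical vehicle.
vfov = horizontal_to_vertical_fov(130.0, 160, 120)
```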

Two additional cameras behave akin to SONAR or LiDAR and calculate the distance to nearby objects using Unity’s raycasting functionality. These cameras support the in-game AI used to independently generate synthetic training data (Section 4.10).

The location of the cameras is linked to the buggy’s body. Each camera has differing abilities to “see” certain GameObjects as determined using Unity Layers. For example, the primary camera sees turn indicators directing the user, but the synthetic forward imaging camera ignores these signs when generating training data. In the coin demo, the user could see the coins, but these were invisible to the data-generating camera.

4.6. Exported Data

We attached a script to the buggy to generate sample data at regular intervals, including a timestamp, the buggy’s speed, and the local rotation angle of the front wheels. This information is logged to a CSV in the same format used by the physical platform. Another script captures images from the synthetic front-facing camera at each timestep to correlate the steering angle and velocity with a particular image. The CSV file and the images are stored within the runtime-accessible StreamingAssets folder.
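The logging scheme pairs one CSV row with one camera frame per timestep. The Python sketch below illustrates that pairing and how a training script might read it back; the column names, the `frame_<timestamp>.jpg` naming pattern, and the helper names are hypothetical, since the exact CSV layout shared by the simulator and physical platform is not given here.

```python
import csv
import io

FIELDS = ["timestamp", "speed", "steering_angle", "image"]  # hypothetical columns


def log_sample(writer, t, speed, steering_angle):
    """Write one row and return the filename the matching frame should be saved under."""
    image_name = f"frame_{t:010.3f}.jpg"
    writer.writerow({
        "timestamp": f"{t:.3f}",
        "speed": f"{speed:.3f}",
        "steering_angle": f"{steering_angle:.3f}",
        "image": image_name,
    })
    return image_name


def load_pairs(csv_text):
    """Read rows back as (image filename, steering angle, speed) training tuples."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(r["image"], float(r["steering_angle"]), float(r["speed"])) for r in reader]


buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=FIELDS)
writer.writeheader()
rate_hz = 10.0  # sampling rate; one row and one image per sample
for k in range(3):
    log_sample(writer, k / rate_hz, speed=1.2, steering_angle=-5.0 + k)
pairs = load_pairs(buf.getvalue())
```

Embedding the timestamp in the image filename keeps each frame recoverable from its CSV row even if files are later copied or reordered.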

4.7. Simulator Reconfigurability

In order to simulate the configuration of the physical vehicle, we needed to iterate tests to converge on parameters approximating the physical vehicle. To speed the process of tuning the simulator for other vehicles, options can be changed by editing an external file. Multi-display users can configure options on a second monitor (Figure 10). Configuration includes:

Low-speed steering angle: the maximum absolute steering angle, measured at the center of the steered wheels, when the vehicle is in low-speed mode

High-speed steering angle: same as above, but in high-speed mode

Crossover speed: the speed at which the vehicle changes from low- to high-speed mode

Steer coefficient (front wheels): the multiplier between the steering angle and the actual wheel movement

Steer coefficient (back wheels): same as above; can be negative for high-speed lane changes (translation without rotation)

Forward slip threshold: slip limit for the transition from static to sliding friction when accelerating/braking

Side slip threshold: same as above, but for steering

Speed limiter: maximum allowable vehicle speed (also reduces available power to accelerate)

Vertical field of view: in degrees, to match the physical vehicle camera

Sampling camera width: ratio of width to height of the captured image

Sampling rate: rate, in Hz, of capture of JPG images and logging to the CSV file
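The external configuration file can be as simple as `key = value` lines. The parser below is a sketch under that assumption: the key names and default values are hypothetical (only the 10 Hz sampling default echoes the physical platform's logging rate), and the GDS's real file format is not described here.

```python
DEFAULTS = {
    "low_speed_steer_angle": 30.0,   # degrees
    "high_speed_steer_angle": 15.0,  # degrees
    "crossover_speed": 2.0,          # m/s
    "steer_coef_front": 1.0,
    "steer_coef_back": 0.0,          # may be negative
    "forward_slip_threshold": 0.5,
    "side_slip_threshold": 0.5,
    "speed_limiter": 5.0,            # m/s
    "vertical_fov": 90.0,            # degrees
    "sampling_aspect": 4 / 3,        # width / height
    "sampling_rate_hz": 10.0,
}


def parse_config(text):
    """Overlay `key = value` lines (with # comments) on top of DEFAULTS."""
    cfg = dict(DEFAULTS)
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        key, sep, value = line.partition("=")
        key = key.strip()
        if not sep or key not in DEFAULTS:
            raise ValueError(f"line {line_no}: unrecognized entry {raw!r}")
        cfg[key] = float(value)
    if cfg["sampling_rate_hz"] <= 0 or not 0 < cfg["vertical_fov"] < 180:
        raise ValueError("sampling rate must be positive and FoV in (0, 180) degrees")
    return cfg


cfg = parse_config("""
crossover_speed = 3.5   # switch to high-speed steering earlier
steer_coef_back = -0.2
""")
```

Rejecting unknown keys, rather than silently ignoring them, catches typos that would otherwise leave a parameter at its default during a tuning session.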

We use another external file to set the starting coordinates of the buggy (Figure 11), helping test humans and AI alike under complicated scenarios (e.g., starting immediately in front of a right turn where the horizontal line is exactly perpendicular to the vehicle, or starting perpendicular to the lane markers).

4.8. 2D Canvas Elements

2D GameObjects show the buggy’s real-time speed and wheel angle. There are also two RenderTexture elements, one displaying a preview of the synthetic image being captured (the “real cam” view) and one showing the track on a minimap. Throttle and brake status indicators turn green when a user presses the associated controls (Figure 12). This feature makes it easier for newcomers to quickly capture useful training data.

4.9. Unity C# and Python Bridge

We test models in the virtual environment before porting them to a physical vehicle. Deep learning frameworks commonly run in Python, whereas Unity supports C# and JavaScript.

We developed a bridge between Unity and Python by allowing GDS to read an external file containing the commanded steering angle, commanded velocity, and AI mode: either in-game AI, which uses the cameras described in Section 4.10, or external AI from Section 6, in which case the commanded steering and velocity values are read from the file at every loop.

This approach allows near-realtime control from an external model. Python scripts monitor the StreamingAssets folder for a new image, process this image to determine a steering angle and velocity, and update the file with these new values for controlling steering and velocity at Hz.
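The file-based bridge can be sketched as a polling loop. In this sketch the command file holds a single hypothetical `steering,velocity,mode` line, the `model` argument is any callable mapping an image path to a `(steering, velocity)` pair, and the command file is replaced atomically (written to a temporary file, then moved with `os.replace`) so the simulator never reads a half-written line. File names, format, and polling interval are assumptions.

```python
import os
import tempfile
import time


def newest_image(folder, last_seen=None):
    """Most recently modified .jpg in folder, or None if there is nothing new."""
    jpgs = [os.path.join(folder, f) for f in os.listdir(folder)
            if f.lower().endswith(".jpg")]
    if not jpgs:
        return None
    latest = max(jpgs, key=os.path.getmtime)
    return None if latest == last_seen else latest


def write_command(path, steering, velocity, mode="external"):
    """Atomically replace the command file that the simulator reads each loop."""
    folder = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=folder, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        f.write(f"{steering:.4f},{velocity:.4f},{mode}\n")
    os.replace(tmp, path)


def read_command(path):
    """Parse the command file back into (steering, velocity, mode)."""
    with open(path) as f:
        steering, velocity, mode = f.read().strip().split(",")
    return float(steering), float(velocity), mode


def run_bridge(folder, command_path, model, poll_s=0.02, max_steps=None):
    """Each time a new frame appears, run the model and publish its command."""
    last, steps = None, 0
    while max_steps is None or steps < max_steps:
        img = newest_image(folder, last)
        if img is None:
            time.sleep(poll_s)  # no new frame yet
            continue
        steering, velocity = model(img)
        write_command(command_path, steering, velocity)
        last, steps = img, steps + 1
```

Polling keeps the sketch dependency-free; a real deployment might also need to confirm that Unity has finished writing each JPG before the model reads it.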

Using the PyGame library [52], we also “pass through” non-zero gamepad values, allowing for semi-supervised vehicle control (AI with human overrides). When the controller is released, the external AI resumes control. This allows us to test models in-game and helps us “unstick” vehicles to observe a model after it encounters a complex scenario.

4.10. User Controlled Input and In-Game AI Modes

There are two game modes: User Control and AI Control.

In User Control, users interface with gamepads’ analog joysticks to control the vehicle.

Under AI Control, the buggy is controlled by in-game AI or an external model (Section 4.9).

In-game AI captures data without user input. This AI uses a three-camera system (the front camera [the same used to capture the synthetic view image] and two side cameras rotated about the y-axis) as input. Each camera calculates the distance between its position and the invisible track-border colliders.

The camera orientations and “invisible” distance measurements are represented in Figure 13 (green represents the longest clear distance, and red lines indicate more-obstructed pathways). The car moves in the direction of the longest free distance. If the longest distance comes from the front camera, the buggy moves straight. If it comes from a side camera, the buggy centers itself in the available space. When the front distance falls under a threshold, the buggy brakes in advance of a turn.

As Unity cannot emulate joystick input, we use the bridge from Section 4.9 both to write and to read output for controlling the buggy. The in-game AI model knows ground truth, such as the positions of the track’s invisible colliders, to effectively measure relative positioning. This allows the virtual buggy to drive predictably and create valuable samples without human involvement. This method generates trustworthy, unsupervised training data for the deep learning network described in Section 6.

5. Integrating GDS with an End-to-End Training Platform

The GDS is part of an end-to-end training system for self-driving that also comprises a physical platform (used for model validation) and a physical training environment (duplicated in the simulated world). Each element is detailed in the following subsections.

5.1. A Physical Self-Driving Test Platform

Self-driving models used to automate vehicle control are ultimately designed for use in physical vehicles. Full-size vehicles, however, are costly: a self-driving test platform can cost in excess of $250,000 and requires paid, skilled operators for data capture. We therefore created a lower-cost, easy-to-operate, smaller-scale physical vehicle platform and environment mirroring the GDS world to validate model performance.

We considered the TurtleBot [53] and DonkeyCar [54] platforms; both are low-cost systems. The TurtleBot natively supports the ROS middleware and the Gazebo simulation tool; however, the kinematics of the differential-drive TurtleBot do not mirror those of conventional cars. The DonkeyCar offers realistic Ackermann steering and a more powerful powertrain with a higher top speed, better mirroring passenger vehicles. While the DonkeyCar platform is compelling, it suffers from some limitations: it is primarily a modularized compute module featuring one RGB camera, and this module may be installed onto a range of hardware that may introduce undesirable variability. Integrating the robotics, software, and mobility platform in a tightly coupled package eliminates variability introduced by changing mobility platforms. The DonkeyCar also does not natively run ROS, limiting research extensibility.

We therefore developed a self-driving platform using hardware similar to the DonkeyCar and a software framework similar to that of the TurtleBot: a 1/10-scale radio-controlled car chassis with Ackermann steering, running ROS. Our hardware platform is a HobbyKing Short Course Truck, which limits variability in experimental design, is suitable for carrying a larger and more complex payload, and is capable of operating robustly in off-road environments. The large base, for example, has room for GPS receivers, can carry a larger battery (for longer runtimes or for powering compute/sensing equipment), and can easily support higher speeds, so that future enhancements such as LiDAR can be tested in representative environments. The total cost of the platform is approximately $400 including batteries, or $500 for a version with large storage, a long-range gamepad controller, and an onboard tensor accelerator.

Unlike other large-scale platforms such as F1/10th [55], AutoRally [56], or the QCar [5], which cost more than our proposed platform, we offer low-cost extensibility, off-road capability, and ruggedness.

Computing is provided by a Raspberry Pi 3B+ (we have also tested with a Raspberry Pi 4 and a Google Coral tensor accelerator), while a Navio2 [57] provides an Inertial Measurement Unit (IMU) and I/O for RC radios, servos, and motor controllers. Input is provided by a Raspberry Pi camera with a 130-degree field of view and an IR filter (to improve daytime performance), and optionally a 360-degree planar YDLIDAR X4 [58] to measure radial distances. The platform receives human input from a Logitech F710 dual analog USB joystick.

The HobbyKing platform is four-wheel drive and has a brushless motor capable of over 20 km/h. The Pi is vibrationally isolated on an acrylic plate, reducing mechanical noise and providing crash protection. The camera is mounted atop the same acrylic plate and protected by an aluminum enclosure to minimize damage during collisions. The camera is mounted to a 3D-printed bracket, the angle of which was set to provide an appropriate field of view for line detection. A LiDAR, if used, is mounted to this same plate using standoffs to raise it above the camera enclosure. The vehicle platform is shown in Figure 14.

The Raspberry Pi runs Raspbian Stretch with a real-time kernel provided by Emlid. At boot, the OS launches the ArduPilot [59] service, mavros [60], and the joy node. If LiDAR is used, the rplidar node is loaded. The user launches one of two Python nodes via SSH: a teleop node, which uses the joystick to control the car and logs telemetry to an onboard SD card at 10 Hz, or a model node, which uses one or more camera images and a pretrained neural network to command the steering servo to follow line markers. In this mode, the user manually controls the throttle using the F710. Motor and servo commands take the form of a pulse width ranging from 1000–2000 µs, published to the /mavros/rc/override topic.

When teleop mode is started, IMU and controller data are captured by ROS subscribers and written to a .CSV file, along with the 160 × 120 RGB .JPG image captured by the camera at the same time step. An overview of the ROS architecture, including nodes and topics, appears in Figure 15.
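As a minimal sketch of the per-time-step logging described above, the snippet below appends one CSV row per logging tick, pairing IMU and controller readings with the filename of the JPEG captured at that step. The column names, the `TelemetryLogger` class, and the `frame_000000.jpg` naming scheme are hypothetical; the study's actual ROS subscribers and CSV layout are not specified here, and the 10 Hz timing (e.g., a ROS rate object) and JPEG encoding are left out.

```python
import csv
import os

# Hypothetical column layout; the paper does not list its exact CSV fields.
FIELDS = ["stamp", "image", "accel_x", "accel_y", "accel_z",
          "gyro_x", "gyro_y", "gyro_z", "steering", "throttle"]


class TelemetryLogger:
    """Append one row per logging tick, linking telemetry to the saved camera frame."""

    def __init__(self, csv_path):
        write_header = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
        self._fh = open(csv_path, "a", newline="")
        self._writer = csv.DictWriter(self._fh, fieldnames=FIELDS)
        if write_header:
            self._writer.writeheader()
        self.count = 0

    def log(self, stamp, imu, steering, throttle):
        """imu = (accel_x, accel_y, accel_z, gyro_x, gyro_y, gyro_z); returns the image filename."""
        image_name = f"frame_{self.count:06d}.jpg"  # hypothetical naming scheme
        row = dict(zip(FIELDS[2:8], imu))
        row.update(stamp=f"{stamp:.3f}", image=image_name,
                   steering=steering, throttle=throttle)
        self._writer.writerow(row)
        self.count += 1
        return image_name

    def close(self):
        self._fh.close()
```

Keeping the image filename in the same row as the telemetry is what lets a training script later pair each frame with its steering label.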

5.2. Modular Training Environment

We designed a test track using reconfigurable “monomers” to create repeatable environments. We created track elements using low-cost EVA foam tiles. Components included tight turns, squared and rounded turns, sweeping turns, straightaways, and lane changes. Tiles are shown in Figure 16.

Straightaways and rounded (fixed-width) corners provide the simplest features for classification; wide, sweeping and right-angle corners provide challenging markings.

The reconfigurable track is quicker to set up and more modular than placing tape on the ground. It also helps to standardize visual indicators, similar to how lane dividers have fixed dimensions depending on local laws. Inexpensive track components can be stored easily, making this approach suitable for budget-constrained organizations. Sample track layouts appear in Figure 17.

5.3. Integration with Gamified Digital Simulator

Simulation affords researchers low-cost, high-speed data collection across environments. We use the GDS to parallelize and crowdsource data collection without the space, cost, or setup requirements associated with capturing data from conventional vehicles. We aim for a physical vehicle to “learn” to drive visually from GDS inputs.

In Section 4, we describe the creation of an in-game proxy for the physical platform. The virtual car mirrors the real vehicle’s physics and is driven using the same F710 joystick. The simulator roughly matches the friction coefficient, speed, and steering sensitivity of the two vehicles, with numeric calibration where data were readily quantifiable (e.g., the camera’s field of view).

The virtual car saves images to disk in the same format as the physical vehicle to maximize data interoperability, and exports throttle position, brake position, and steering angle to a CSV.

6. Data Collection, Algorithm Implementation and Preliminary Results

For our purposes, a line-following algorithm that functions both in simulation and on the physical platform serves as a representative “minimum viable test case.” Successful performance in both the simulated and real environments would indicate that crowd-generated data are suitable for training certain self-driving algorithms, and that the hardware and software elements we designed are relevant and tightly coupled.

To prove the viability of the simulator as a training tool, we designed an experiment to collect line-following data in the simulated environment for training a behavior-cloning, lane-following Convolutional Neural Network (CNN). This approach is not designed for state-of-the-art performance; rather, it is intended to demonstrate that the tool can generate suitable training data, and that the resulting trained models can operate on the test platform without explicit domain adaptation.

We generated training data both from “wisdom of the crowd” (multiple human drivers) and from “optimal AI” (steering and velocity based on logical rules and perfect situational information). We then attempted to repurpose the resulting model, without retraining, to the physical domain.

We first collected data in the simulated environment by manually driving laps in 10-min batches. As described in Section 5.3, the simulated camera images, steering angles, and throttle positions were written to file at 30 Hz. In addition to relying on “wisdom of the crowd” and on implicit game mechanics that encourage effective data generation, we discarded samples with zero or near-zero velocity, negative throttle (braking/reverse), or steering outside the control limits (indicating a collision) to prevent the algorithm from learning “near crash” situations. Such data can later be used for specialized training.
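The exclusion rules above can be expressed as a simple row filter. This sketch assumes each logged sample is a dict with `speed`, `throttle`, and `steering` fields, a near-zero speed threshold of 0.1, and a normalized steering limit of ±1.0; all of these names and values are hypothetical placeholders, not the thresholds used in the study.

```python
# Hypothetical thresholds; the study does not report its exact values.
MIN_SPEED = 0.1     # samples at or below this speed count as "near zero"
STEER_LIMIT = 1.0   # normalized steering limit; values beyond it indicate a collision


def keep_sample(sample, min_speed=MIN_SPEED, steer_limit=STEER_LIMIT):
    """Return True if a logged sample is suitable for behavior-cloning training."""
    if abs(sample["speed"]) <= min_speed:
        return False  # stationary or nearly stationary
    if sample["throttle"] < 0:
        return False  # braking or reversing
    if abs(sample["steering"]) > steer_limit:
        return False  # outside control limits (collision)
    return True


def filter_samples(samples, **kwargs):
    """Split samples into (kept, excluded); excluded ones can be reused for specialized training."""
    kept, excluded = [], []
    for s in samples:
        (kept if keep_sample(s, **kwargs) else excluded).append(s)
    return kept, excluded
```

Returning the excluded samples rather than dropping them reflects the note that such data may later serve specialized training.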

Rather than using image augmentation, we used the in-game “optimal AI” (AI with complete, noiseless sensor measurements and well-characterized rules, as described in Section 4.10) to generate additional ground-truth data from the simulator, and ultimately applied only symmetric augmentation (mirroring images and steering angles to ensure left/right turn balance).
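Symmetric augmentation amounts to flipping each frame left to right and negating its steering label. The sketch below assumes images are NumPy arrays laid out as height × width (× channels) and that steering angles are signed symmetrically about zero; both are assumptions about the data format rather than details from the study.

```python
import numpy as np


def mirror(image, steering):
    """Flip an H x W (x C) image left-right and negate the steering angle."""
    return image[:, ::-1].copy(), -steering


def augment_symmetric(images, angles):
    """Return the original samples plus their mirrored copies (doubling the dataset)."""
    flipped = [mirror(img, a) for img, a in zip(images, angles)]
    out_images = list(images) + [f[0] for f in flipped]
    out_angles = list(angles) + [f[1] for f in flipped]
    return out_images, out_angles
```

Applied to the 224,293 collected images reported below, this doubling yields 448,586 samples, consistent with the "almost 450,000" figure.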

When training CNNs on a fixed image budget of 50 K or 100 K images, neither the human-only nor the AI-only training approach individually yielded models capable of completing laps, either in-game or in the real world. With larger numbers of images from a single training source, an effective neural network might be trained. Instead, blending training images from human and AI sources within the same image budget (50 K and 100 K) yielded models capable of completing real-world laps (occasionally and repeatably, respectively). Our results indicate that the joint-training approach converges more quickly than either source alone, and we posit that the combination of repeatability (assisted exploration via in-game AI) and variability (human operation) yields a more effective training set.
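A fixed-budget blend can be drawn by sampling a set share of the budget from each source and shuffling the result. This sketch assumes an even split (matching the "approximately half" mix reported below) and uniform random sampling without replacement; the function name and `seed` parameter are hypothetical, and the study's exact sampling procedure is not described.

```python
import random


def blend_budget(human, ai, budget, human_fraction=0.5, seed=0):
    """Draw a fixed-size training set mixing human-driven and AI-driven samples."""
    n_human = round(budget * human_fraction)
    n_ai = budget - n_human
    if n_human > len(human) or n_ai > len(ai):
        raise ValueError("not enough samples in one source for the requested budget")
    rng = random.Random(seed)  # seeded for reproducible training sets
    mixed = rng.sample(human, n_human) + rng.sample(ai, n_ai)
    rng.shuffle(mixed)  # interleave sources so batches see both
    return mixed
```

Holding `budget` fixed while varying `human_fraction` (1.0, 0.0, 0.5) would reproduce the human-only, AI-only, and blended comparison at equal data cost.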

Final training data were approximately half human-driven and half controlled by AI with noiseless information. We collected 224,293 images, almost 450,000 after symmetry augmentation. Data capture was rapid: beyond being faster than physical testing on a full-scale vehicle, capturing all necessary data, training, and validating the model took less time than a typical review cycle for experiments involving human subjects (approximately one week). Each image was then post-processed to extract informative features.

We developed an image preprocessing pipeline in OpenCV [61], similar to that proposed in the Udacity “Intro to Self Driving” Nanodegree program, capable of:

Correcting the image for camera lens properties

Conducting a perspective transform to convert to a top-down view

Converting the image from RGB to HSL color space

Gaussian blurring the image

Masking the image to particular ranges of white and yellow

Greyscaling the image

Conducting Canny edge detection

Masking the image to a polygonal region of interest

Fitting lines using a Hough transform

Filtering out lines with slopes outside a particular range

Grouping lines by slope (left or right lines)

Fitting a best-fit line to the left and/or right side using linear regression

Creating an image of the best-fit lines

Blending the (best-fit) line(s) with the edge image, greyscale image, or RGB image
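The line-handling steps near the end of this candidate list (filtering by slope, grouping into left and right lines, and fitting a best-fit line per side) operate on the segments a probabilistic Hough transform returns; OpenCV's `cv2.HoughLinesP`, for example, yields segments as `(x1, y1, x2, y2)`. The NumPy-only sketch below assumes that segment format and an illustrative slope range of 0.3 to 3.0. It is not the study's implementation, and these steps were among the candidates later dropped from the final pipeline.

```python
import numpy as np


def fit_lane_lines(segments, min_abs_slope=0.3, max_abs_slope=3.0):
    """Filter Hough segments by slope, group them into left/right, and fit one line per side.

    segments: (x1, y1, x2, y2) tuples in image coordinates (y grows downward),
    e.g. cv2.HoughLinesP output reshaped to (N, 4).
    Returns {"left": (m, b) or None, "right": (m, b) or None} for the line x = m * y + b.
    """
    segs = np.asarray(segments, dtype=float).reshape(-1, 4)
    groups = {"left": [], "right": []}
    for x1, y1, x2, y2 in segs:
        if x2 == x1:
            continue  # vertical segment: slope undefined
        slope = (y2 - y1) / (x2 - x1)
        if not (min_abs_slope <= abs(slope) <= max_abs_slope):
            continue  # discard near-horizontal or near-vertical clutter
        # With y pointing down, left lane lines slope negative and right ones positive.
        side = "left" if slope < 0 else "right"
        groups[side].extend([(x1, y1), (x2, y2)])
    fits = {}
    for side, pts in groups.items():
        if len(pts) < 2:
            fits[side] = None
            continue
        pts = np.array(pts)
        # Regress x on y so steep lane lines remain well conditioned.
        m, b = np.polyfit(pts[:, 1], pts[:, 0], 1)
        fits[side] = (m, b)
    return fits
```

Fitting x as a function of y is a design choice here: lane lines seen from a forward camera are often steep, where a y-on-x fit becomes ill conditioned.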

Using a sample CNN to estimate the significance of each preprocessing step, we conducted a grid search and identified a subset as being performance-critical. Ultimately, preprocessing comprised:

Conversion from RGB to HSL color space

HSL masking to allow only white and yellow regions to pass through into a binary (black/white) image

Gaussian blurring

Masking the image to a region including only the road surface and none of the vehicle or environs

This pipeline improved predictor robustness under varied lighting conditions without increasing per-frame processing time compared with raw RGB images. Example input and output from the real and simulated cameras appear in Figure 18.

We additionally tested camera calibration and perspective transformation, but found that they added little performance relative to their computational cost. The predicted steering angles and the control loop update quickly enough that over- or under-steering is easily corrected, and a maximum steering slew rate prevents the vehicle from changing steering direction abruptly, minimizing oscillation.
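A steering slew-rate limit can be implemented by clamping how far the command may move per update. This is a minimal sketch, assuming a limit expressed in command units per second and an update interval `dt`; the class name and the limit value are hypothetical, as the study does not report its maximum slew rate.

```python
class SlewRateLimiter:
    """Limit how far the steering command may move in one control update."""

    def __init__(self, max_rate, initial=0.0):
        self.max_rate = max_rate  # hypothetical limit, command units per second
        self.value = initial

    def update(self, target, dt):
        """Move toward target by at most max_rate * dt and return the new command."""
        max_step = self.max_rate * dt
        delta = max(-max_step, min(max_step, target - self.value))
        self.value += delta
        return self.value
```

Because the step is scaled by `dt`, the same limit behaves consistently whether the loop runs at 8 Hz on the buggy or 30 Hz in the simulator.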

We trained CNN variants in Keras [47] using simulated data. For each model, we recorded out-of-sample mean-squared error (MSE). We saved the best model and stopped training when the validation loss had not decreased by more than

across the previous 200 epochs. In practice, training stopped after approximately 400 epochs. In all cases, training and validation loss decreased together throughout training, indicating that the models did not overfit.
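The stopping rule above is a patience criterion. The sketch below replays a validation-loss history under that rule, assuming a placeholder `min_delta` since the study's threshold is not given. Keras's built-in `keras.callbacks.EarlyStopping(monitor="val_loss", min_delta=..., patience=200)` combined with `keras.callbacks.ModelCheckpoint(..., save_best_only=True)` behaves similarly during training; the standalone function here is only an illustration of the logic.

```python
def early_stopping(val_losses, min_delta, patience):
    """Replay a validation-loss history under a patience rule.

    Returns (best_epoch, stop_epoch): the epoch whose weights would be kept and the
    epoch at which training halts (the last epoch if the rule never fires).
    """
    best, best_epoch, wait = float("inf"), None, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0  # improvement: checkpoint here
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1
```

With `patience=200`, a run whose last meaningful improvement came near epoch 200 would halt near epoch 400, which is one way to read the reported training length.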

From these results, we selected the two CNNs with the best out-of-sample performance: one using a single input image, and one using a three-image sequence (the current frame and the two preceding frames) to provide time history and context. The trained convolution layers were 2D for single images and 3D for image sequences, which provided the sequence model with temporal context and improved velocity independence. The representative models chosen to demonstrate the simulator’s ability to generate data suitable for effective sim2real domain transfer are shown in Figure 19a,b.
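Feeding a 3D-convolution model requires buffering the current frame with its two predecessors. Keras `Conv3D` layers (channels-last) expect input shaped (batch, depth, rows, cols, channels), so the sketch below stacks the three preprocessed single-channel frames along the depth axis, oldest first. The `FrameSequence` class and the scaling to [0, 1] are hypothetical choices, not the study's code.

```python
from collections import deque

import numpy as np


class FrameSequence:
    """Keep the current frame and the preceding frames for a 3D-convolution model."""

    def __init__(self, length=3):
        self.frames = deque(maxlen=length)  # oldest frame drops out automatically

    def push(self, frame):
        """Add a (rows, cols) frame; return True once the buffer is full."""
        self.frames.append(frame)
        return len(self.frames) == self.frames.maxlen

    def as_batch(self):
        """Return a (1, depth, rows, cols, 1) float32 array, oldest frame first."""
        if len(self.frames) < self.frames.maxlen:
            raise ValueError("buffer not yet full")
        seq = np.stack(list(self.frames), axis=0)[..., np.newaxis]
        return seq[np.newaxis].astype(np.float32) / 255.0
```

A single-image 2D model would skip the buffer and take a (1, rows, cols, 1) array instead.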

A comparison of the predictive performance of the single-image and multi-image models on the (simulated) validation set appears in Figure 20. These plots compare the predicted steering angle to the ground-truth steering angle, with a 1:1 slope indicating a perfect fit.

7. Model Testing and Cross-Domain Transferability

We tested the trained model in both virtual and physical environments to establish qualitative performance metrics related to domain adaptation.

7.1. Model Validation (Simulated)

We first tested the model in the simulator, using Keras to process output images from the virtual camera. The neural network monitored the image output directory, running each new frame through a pretrained model to predict the steering angle. This steering angle and a constant throttle value were converted to simulated joystick values and written to an input file monitored by the simulator. The simulator updated the vehicle control with these inputs at 30 Hz, so the delay between image creation, processing, and input was approximately 1/30 s (≈0.03 s) or better. This process is described in Section 4.9.
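The file-based loop can be sketched as a single polling step: find the newest unprocessed frame, predict a steering angle, and write a joystick command. Everything specific here is an assumption: the `.jpg` filter, the comma-separated "axis,throttle" line format, the ±25° full-scale mapping, and the injected `predict` callable (which would wrap the preprocessing and Keras model). The simulator's actual file format is described in Section 4.9, not here.

```python
import os


def newest_unprocessed(image_dir, seen):
    """Return (newest unseen .jpg name or None, list of all unseen .jpg names)."""
    fresh = [n for n in os.listdir(image_dir)
             if n.lower().endswith(".jpg") and n not in seen]
    if not fresh:
        return None, []
    newest = max(fresh, key=lambda n: os.path.getmtime(os.path.join(image_dir, n)))
    return newest, fresh


def steering_to_axis(angle_deg, max_angle_deg=25.0):
    """Map a steering angle to a joystick axis value clamped to [-1, 1] (hypothetical scaling)."""
    return max(-1.0, min(1.0, angle_deg / max_angle_deg))


def control_step(image_dir, input_file, predict, seen, throttle=0.3):
    """Process the newest unseen frame and write one command; return the axis value or None."""
    name, fresh = newest_unprocessed(image_dir, seen)
    if name is None:
        return None
    seen.update(fresh)  # skip stale frames rather than processing them late
    axis = steering_to_axis(predict(os.path.join(image_dir, name)))
    # Write to a temporary file and rename so the simulator never reads a partial line.
    tmp_path = input_file + ".tmp"
    with open(tmp_path, "w") as fh:
        fh.write(f"{axis:.4f},{throttle:.4f}\n")
    os.replace(tmp_path, input_file)
    return axis
```

In use, `control_step` would be called in a loop with a short sleep (roughly 1/30 s) between polls.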

The simulated vehicle was able to reliably navigate straightaways and fixed-radius turns. It also correctly identified the direction of tight and sweeping corners with high accuracy, though it struggled with hard right-angle turns, suggesting that the model identifies turns by looking for curved segments rather than by corner geometry. For some starting positions, the simulated vehicle could complete more than 20 laps without incident. For other seed values, the vehicle would contact the invisible colliders and “ping-pong” against the walls (directional trends were correct, but narrow lanes left little room for error). In these cases, a human intervened to free the vehicle, after which it resumed self-driving.

We next transferred the most-effective model to the physical vehicle without explicit transfer learning.

7.2. Model Transferability to Physical Platform

We ported the pretrained model to the physical vehicle, changing only the HSL lightness range for OpenCV’s white mask, adjusting the polygonal mask region to block out the physical buggy’s suspension, and scaling the predicted output angle from degrees to microsecond servo pulses. We applied neither camera calibration nor perspective transformation.
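The degree-to-pulse scaling is a linear map into the 1000 to 2000 µs command range given in Section 5.1. The sketch below assumes a 1500 µs center, a ±30° full-scale steering angle, and that positive angles map to longer pulses; these are illustrative assumptions, and a real servo may need trim or a reversed sign. The resulting integer would be placed in the steering channel of a `mavros_msgs/OverrideRCIn` message on `/mavros/rc/override`. Which channel that is depends on the ArduPilot configuration.

```python
def angle_to_pulse(angle_deg, max_angle_deg=30.0, center_us=1500, half_range_us=500,
                   min_us=1000, max_us=2000):
    """Scale a predicted steering angle (degrees) to a servo pulse width (microseconds)."""
    pulse = center_us + (angle_deg / max_angle_deg) * half_range_us
    # Clamp to the valid RC range so an out-of-range prediction cannot over-drive the servo.
    return int(round(min(max_us, max(min_us, pulse))))
```

Keeping the model's output in degrees and converting only at the actuator boundary is what allows the same trained network to drive both the simulator and the buggy.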

As with the simulated vehicle, the buggy was able to follow straight lines and sweeping corners using the unaltered single- and multi-image models, repeatedly completing several laps; it was not necessary to retrain the model. Both the real car and the simulator control loops operate at low loop rates (8–30 Hz) and speeds (≈10 kph), so the classifiers’ predicted steering angles cause each vehicle to behave as though operated by a “bang-bang” (“left–right”) controller rather than a nuanced PID controller (see video (accessed on 2 October 2020) running a representative image sequence model at low speeds). Although line following appears “jerky,” small disparities between the calibration and sensitivity of the virtual and physical vehicles’ steering responses have less impact on lane keeping as frame rates increase (enabled by more capable compute).

We also qualitatively evaluated the single- and multi-image models relative to their performance in the simulated environment. In practice, the single-image model worked most robustly within the simulated environment. The training images were captured at a constant 30 Hz, but the vehicle speed varied across these frames; because the 3D convolution considers multiple frames at fixed time intervals, the distance traveled between frames, and therefore the sequence model’s input, depends significantly on velocity. The desktop was able to run preprocessing, classification, polling for joystick override events, and output-file writing at a consistent 30 Hz. At that rate, the single-image model performed well, and its reduced angular accuracy relative to the image-sequence model was insignificant because the time between control inputs was only 0.03 s.
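To make the velocity dependence concrete, the distance traveled between consecutive frames is the speed divided by the frame rate. Using the ≈10 kph speed and the 8–30 Hz loop rates quoted above as illustrative inputs (not per-run measurements):

$$
\Delta s = \frac{v}{f}, \qquad v = 10\ \text{km/h} \approx 2.78\ \text{m/s}
$$

$$
f = 30\ \text{Hz}: \ \Delta s \approx 0.093\ \text{m}, \qquad f = 8\ \text{Hz}: \ \Delta s \approx 0.35\ \text{m}
$$

A three-frame sequence spans two intervals, so $2\Delta s \approx 0.19$ m at 30 Hz and $\approx 0.69$ m at 8 Hz. Because $\Delta s$ is proportional to $v$ at a fixed $f$, a given fractional change in speed changes the spatial span of the sequence by the same fraction. A 3D convolution trained on fixed-rate frames therefore sees speed-dependent inputs.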

In the physical world, where the buggy’s speed, frame capture, and processing are all slower, the vehicle’s velocity variation is a smaller percentage of the mean velocity, and computational complexity matters more. As a result, the image-sequence model performs better, anticipating upcoming turns without the complication of high inter-frame velocity variation. The physical vehicle completed laps consistently with the image-sequence model, though it still struggled with the same right-angle turns as in the simulator.

Other models (e.g., LSTMs) may offer improved performance over those demonstrated; a comparative study could validate this. However, the chosen models effectively demonstrate the desired goal of model transferability and the function of the end-to-end simulator and physical platform toolchain.

These results show successful model transferability from the simulated to the physical domain without retraining. The gamified driving simulator and low-cost physical platform provide an effective end-to-end solution for crowdsourced data collection, algorithm training, and model validation suitable for resource-sensitive research and development environments.

8. Conclusions, Discussion, and Future Work

Our toolchain uniquely combines simulation, gamification, and extensibility with a low-cost physical test platform. This combination supports semi-supervised, crowdsourced data collection, rapid algorithm development cycles, and inexpensive model validation relative to contemporary solutions.

There are opportunities for future improvement. For example, adding a calibration pattern would allow us to evaluate the impact of camera calibration on model performance. We plan to add simulated LiDAR to improve the simulator’s utility for collision avoidance, and to create a track-builder utility or procedural track generator. Incorporating multiplayer, simulated traffic, and/or unpredictable events (“moose crossing” or passing cyclists [62]) would help train models for more complex scenarios. Alternatively, an extension of the simulator may be used to train adversarial networks for self-driving to support automated defensive driving techniques [63] or high-performance vehicles [64].

Because the simulator is based on a multi-platform game engine, broader distribution, combined with improved scoring mechanisms and game modes, will give players an incentive to contribute informative supervised data, supporting rapid behavior cloning for long-tail events. These same robust scoring metrics would allow us to weight the highest-performing drivers’ training data more heavily than that of lower-scoring drivers when training the neural network. Some of these metrics can be visible to the user (a “disqualification” notice), while others may be invisible (a hidden collider object could disable image and CSV capture and deduct from the user’s score when the vehicle leaves a “safe” region). Other changes to game mechanics might support the capture of necessary edge-case data that are impractical to generate by other means, particularly given typical research labs’ economic or computational constraints. These adaptations might, for example, contribute to the capture of data from incident-avoidance scenarios, inclement weather, or particular vehicle failures (such as a tire blowout). Multiplayer driving, with other vehicles operated by AI or humans, would generate further kinds of edge-case data, enhancing data capture via naturalistic ablation. This work will require developing a network backend for data storage and retrieval from diverse devices. In some respects, changing game mechanics is simpler than explicitly codifying desirable and undesirable data capture, and it addresses evolving data-capture needs more comprehensively.
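One way to weight higher-scoring drivers' data more heavily is to give each sample a weight proportional to its driver's score, normalized to a mean of 1. Keras's `Model.fit` accepts a per-sample `sample_weight` array of this kind. This is a hypothetical sketch of the proposed future work: the function name, the proportional weighting, and the optional score floor are all assumptions.

```python
def score_weights(sample_drivers, driver_scores, floor=0.0):
    """Per-sample weights proportional to each driver's score, normalized to mean 1."""
    raw = [max(driver_scores[d], floor) for d in sample_drivers]
    if not raw:
        return []
    mean = sum(raw) / len(raw)
    if mean == 0:
        raise ValueError("all scores are zero; cannot normalize weights")
    return [r / mean for r in raw]
```

Normalizing to a mean of 1 keeps the overall loss scale comparable to unweighted training, so learning-rate settings need not change.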

There may also be opportunities to integrate the simulator and physical vehicles into an IoT framework [65] or with AR/VR tools [66], such that physical vehicles inform the simulation in real time and vice versa.

Finally, crowdsourcing only works if tools are widely available, and we plan to refine and release the compiled simulator, test platform details, and resulting datasets to the public.