Evaluation of Feature Selection Methods on Psychosocial Education Data Using Additive Ratio Assessment

Abstract

1. Introduction

2. Related Work

Research Contribution

- This paper provides a systematic model for determining the best feature selection method using an adapted additive ratio assessment (ARAS) model [24]. Specifically, the model is applied to choose a feature selection method for the psychosocial education dataset.

- This paper offers a comprehensive evaluation that compares machine learning performance under each feature selection method. ARAS then uses these machine learning performance metrics as the criteria for determining the best feature selection method.

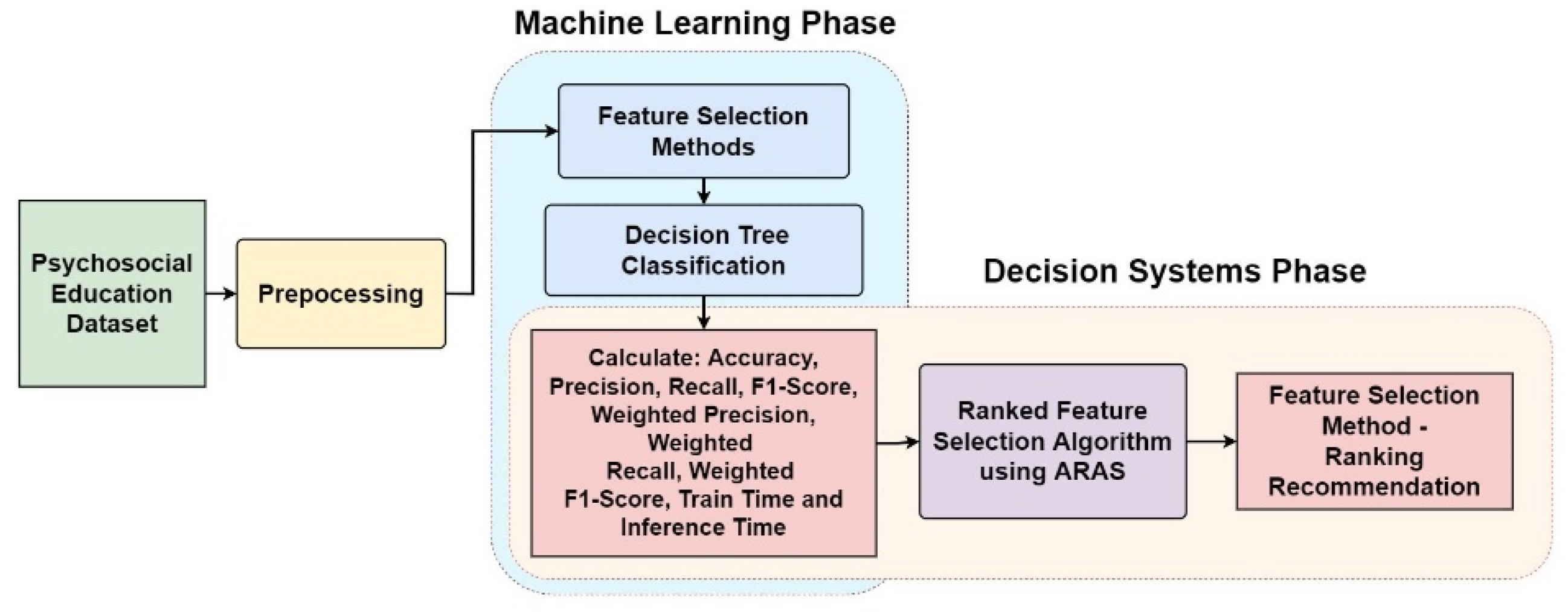

3. Methodology

3.1. Theoretical Overview

3.1.1. Artificial Intelligence Research on Psychosocial Education

3.1.2. Feature Selection Methods

3.1.3. ANOVA

3.1.4. Chi-Square

3.1.5. Mutual Information (MI)

3.1.6. Exhaustive Search Feature (EFS)

3.1.7. Embedding Random Forest (ERF)

3.1.8. Lasso

3.1.9. Recursive Feature Elimination (RFE)

3.1.10. ARAS: Decision System Approach for the Feature Evaluation Method

3.2. Experimental Design

3.3. Dataset Description

3.4. Dataset Preprocessing

3.5. Evaluation of Performance Metrics for Feature Selection Methods

4. Results and Discussion

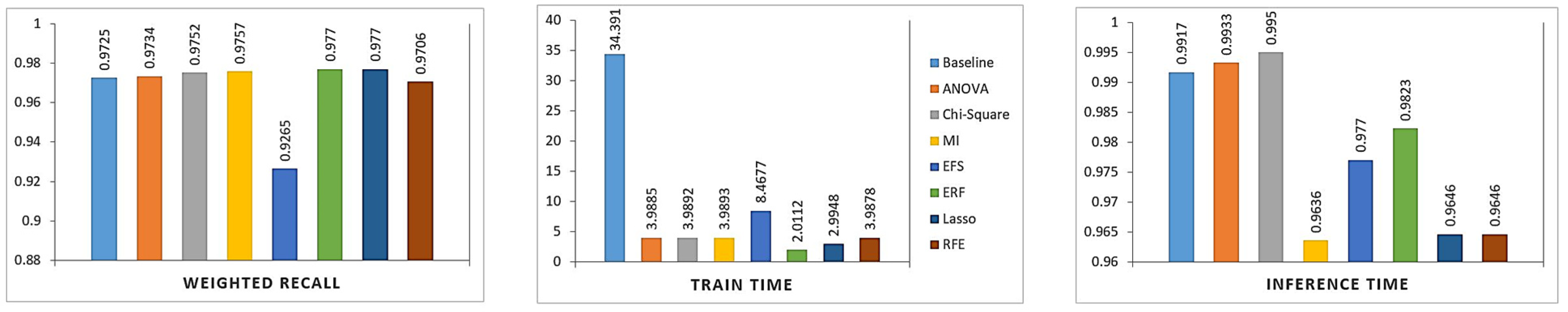

4.1. Performance Analysis of the Feature Selection Method

4.2. Evaluation of Feature Selection Methods Using ARAS

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hoti, A.H.; Heinzmann, S.; Müller, M.; Buholzer, A. Psychosocial Adaptation and School Success of Italian, Portuguese and Albanian Students in Switzerland: Disentangling Migration Background, Acculturation and the School Context. J. Int. Migr. Integr. 2015, 18, 85–106.

2. Wong, R.S.M.; Ho, F.; Wong, W.H.S.; Tung, K.T.S.; Chow, C.B.; Rao, N.; Chan, K.L.; Ip, P. Parental Involvement in Primary School Education: Its Relationship with Children’s Academic Performance and Psychosocial Competence through Engaging Children with School. J. Child Fam. Stud. 2018, 27, 1544–1555.

3. Raskind, I.G.; Haardörfer, R.; Berg, C.J. Food insecurity, psychosocial health and academic performance among college and university students in Georgia, USA. Public Health Nutr. 2019, 22, 476–485.

4. Sierra-Díaz, M.J.; González-Víllora, S.; Pastor-Vicedo, J.C.; Sánchez, G.F.L. Can We Motivate Students to Practice Physical Activities and Sports Through Models-Based Practice? A Systematic Review and Meta-Analysis of Psychosocial Factors Related to Physical Education. Front. Psychol. 2019, 10, 2115.

5. Souravlas, S.; Anastasiadou, S. Pipelined Dynamic Scheduling of Big Data Streams. Appl. Sci. 2020, 10, 4796.

6. López-Belmonte, J.; Segura-Robles, A.; Moreno-Guerrero, A.-J.; Parra-González, M.E. Machine Learning and Big Data in the Impact Literature. A Bibliometric Review with Scientific Mapping in Web of Science. Symmetry 2020, 12, 495.

7. Al-Jarrah, O.Y.; Yoo, P.; Muhaidat, S.; Karagiannidis, G.K.; Taha, K. Efficient Machine Learning for Big Data: A Review. Big Data Res. 2015, 2, 87–93.

8. Altman, N.; Krzywinski, M. The curse(s) of dimensionality. Nat. Methods 2018, 15, 399–400.

9. Köppen, M. The curse of dimensionality. In Proceedings of the 5th Online World Conference on Soft Computing in Industrial Applications (WSC5), Online, 4–8 September 2000; Volume 1, pp. 4–8.

10. Khaire, U.M.; Dhanalakshmi, R. Stability of feature selection algorithm: A review. J. King Saud Univ. Comput. Inf. Sci. 2019, 34.

11. Jović, A.; Brkić, K.; Bogunović, N. A review of feature selection methods with applications. In Proceedings of the 2015 38th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 25–29 May 2015; pp. 1200–1205.

12. Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

13. Cai, J.; Luo, J.; Wang, S.; Yang, S. Feature selection in machine learning: A new perspective. Neurocomputing 2018, 300, 70–79.

14. Moorthy, U.; Gandhi, U.D. A novel optimal feature selection technique for medical data classification using ANOVA based whale optimization. J. Ambient. Intell. Humaniz. Comput. 2020, 12, 3527–3538.

15. Ding, H.; Li, D. Identification of mitochondrial proteins of malaria parasite using analysis of variance. Amino Acids 2015, 47, 329–333.

16. Utama, H. Sentiment analysis in airline tweets using mutual information for feature selection. In Proceedings of the 2019 4th International Conference on Information Technology, Information Systems and Electrical Engineering (ICITISEE), Yogyakarta, Indonesia, 20–21 November 2019; pp. 295–300.

17. Richhariya, B.; Tanveer, M.; Rashid, A. Diagnosis of Alzheimer’s disease using universum support vector machine based recursive feature elimination (USVM-RFE). Biomed. Signal Process. Control. 2020, 59, 101903.

18. Park, D.; Lee, M.; Park, S.E.; Seong, J.-K.; Youn, I. Determination of Optimal Heart Rate Variability Features Based on SVM-Recursive Feature Elimination for Cumulative Stress Monitoring Using ECG Sensor. Sensors 2018, 18, 2387.

19. Liu, Z.; Thapa, N.; Shaver, A.; Roy, K.; Siddula, M.; Yuan, X.; Yu, A. Using Embedded Feature Selection and CNN for Classification on CCD-INID-V1—A New IoT Dataset. Sensors 2021, 21, 4834.

20. Loscalzo, S.; Wright, R.; Acunto, K.; Yu, L. Sample aware embedded feature selection for reinforcement learning. In Proceedings of the 14th Annual Conference on Genetic and Evolutionary Computation, Philadelphia, PA, USA, 7–11 July 2012; pp. 887–894.

21. Liu, H.; Zhou, M.; Liu, Q. An embedded feature selection method for imbalanced data classification. IEEE/CAA J. Autom. Sin. 2019, 6, 703–715.

22. Kou, G.; Yang, P.; Peng, Y.; Xiao, F.; Chen, Y.; Alsaadi, F.E. Evaluation of feature selection methods for text classification with small datasets using multiple criteria decision-making methods. Appl. Soft Comput. 2020, 86, 10583.

23. Hashemi, A.; Dowlatshahi, M.B.; Nezamabadi-Pour, H. Ensemble of feature selection algorithms: A multi-criteria decision-making approach. Int. J. Mach. Learn. Cybern. 2021, 1–21.

24. Singh, R.; Kumar, H.; Singla, R.K. TOPSIS based multi-criteria decision making of feature selection techniques for network traffic dataset. Int. J. Eng. Technol. 2014, 5, 4598–4604.

25. Souravlas, S.; Anastasiadou, S.; Katsavounis, S. A Survey on the Recent Advances of Deep Community Detection. Appl. Sci. 2021, 11, 7179.

26. Acosta, D.; Fujii, Y.; Joyce-Beaulieu, D.; Jacobs, K.D.; Maurelli, A.T.; Nelson, E.J.; McKune, S.L. Psychosocial Health of K-12 Students Engaged in Emergency Remote Education and In-Person Schooling: A Cross-Sectional Study. Int. J. Environ. Res. Public Health 2021, 18, 8564.

27. Carreon, A.D.V.; Manansala, M.M. Addressing the psychosocial needs of students attending online classes during this COVID-19 pandemic. J. Public Health 2021, 43, e385–e386.

28. Mahapatra, A.; Sharma, P. Education in times of COVID-19 pandemic: Academic stress and its psychosocial impact on children and adolescents in India. Int. J. Soc. Psychiatry 2021, 67, 397–399.

29. Navarro, R.M.; Castrillón, O.D.; Osorio, L.P.; Oliveira, T.; Novais, P.; Valencia, J.F. Improving classification based on physical surface tension-neural net for the prediction of psychosocial-risk level in public school teachers. PeerJ. Comput. Sci. 2021, 7, e511.

30. Kira, K.; Rendell, L.A. A practical approach to feature selection. In Machine Learning Proceedings 1992; Sleeman, D., Edwards, P., Eds.; Morgan Kaufmann: San Francisco, CA, USA, 1992; pp. 249–256.

31. Dash, M.; Liu, H. Feature selection for classification. Intell. Data Anal. 1997, 1, 131–156.

32. Urbanowicz, R.J.; Meeker, M.; La Cava, W.; Olson, R.S.; Moore, J.H. Relief-based feature selection: Introduction and review. J. Biomed. Inform. 2018, 85, 189–203.

33. Bommert, A.; Sun, X.; Bischl, B.; Rahnenführer, J.; Lang, M. Benchmark for filter methods for feature selection in high-dimensional classification data. Comput. Stat. Data Anal. 2020, 143, 106839.

34. Ashik, M.; Jyothish, A.; Anandaram, S.; Vinod, P.; Mercaldo, F.; Martinelli, F.; Santone, A. Detection of Malicious Software by Analyzing Distinct Artifacts Using Machine Learning and Deep Learning Algorithms. Electronics 2021, 10, 1694.

35. Johnson, K.J.; Synovec, E.R. Pattern recognition of jet fuels: Comprehensive GC×GC with ANOVA-based feature selection and principal component analysis. Chemom. Intell. Lab. Syst. 2002, 60, 225–237.

36. Vora, S.; Yang, H. A comprehensive study of eleven feature selection algorithms and their impact on text classification. In Proceedings of the 2017 Computing Conference, London, UK, 18–20 July 2017; pp. 440–449.

37. Ghosh, M.; Sanyal, G. Performance Assessment of Multiple Classifiers Based on Ensemble Feature Selection Scheme for Sentiment Analysis. Appl. Comput. Intell. Soft Comput. 2018, 2018, 8909357.

38. Alazab, M. Automated Malware Detection in Mobile App Stores Based on Robust Feature Generation. Electronics 2020, 9, 435.

39. Cilia, N.D.; De Stefano, C.; Fontanella, F.; di Freca, A.S. A ranking-based feature selection approach for handwritten character recognition. Pattern Recognit. Lett. 2019, 121, 77–86.

40. Bahassine, S.; Madani, A.; Al-Sarem, M.; Kissi, M. Feature selection using an improved Chi-square for Arabic text classification. J. King Saud Univ. Comput. Inf. Sci. 2020, 32, 225–231.

41. Thejas, G.S.; Joshi, S.R.; Iyengar, S.S.; Sunitha, N.R.; Badrinath, P. Mini-Batch Normalized Mutual Information: A Hybrid Feature Selection Method. IEEE Access 2019, 7, 116875–116885.

42. Macedo, F.; Oliveira, M.R.; Pacheco, A.; Valadas, R. Theoretical foundations of forward feature selection methods based on mutual information. Neurocomputing 2019, 325, 67–89.

43. Guyon, I.; Elisseeff, A. An Introduction to Variable and Feature Selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

44. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

45. Gonzalez-Lopez, J.; Ventura, S.; Cano, A. Distributed multi-label feature selection using individual mutual information measures. Knowl.-Based Syst. 2020, 188, 105052.

46. Zhou, H.; Zhang, Y.; Zhang, Y.; Liu, H. Feature selection based on conditional mutual information: Minimum conditional relevance and minimum conditional redundancy. Appl. Intell. 2019, 49, 883–896.

47. Ruggieri, S. Complete Search for Feature Selection in Decision Trees. J. Mach. Learn. Res. 2019, 20, 1–34.

48. Igarashi, Y.; Ichikawa, H.; Nakanishi-Ohno, Y.; Takenaka, H.; Kawabata, D.; Eifuku, S.; Tamura, R.; Nagata, K.; Okada, M. ES-DoS: Exhaustive search and density-of-states estimation as a general framework for sparse variable selection. J. Phys. Conf. Ser. 2018, 1036, 012001.

49. Lee, C.-Y.; Chen, B.-S. Mutually-exclusive-and-collectively-exhaustive feature selection scheme. Appl. Soft Comput. 2018, 68, 961–971.

50. Granitto, P.; Furlanello, C.; Biasioli, F.; Gasperi, F. Recursive feature elimination with random forest for PTR-MS analysis of agroindustrial products. Chemom. Intell. Lab. Syst. 2006, 83, 83–90.

51. Tibshirani, R. Regression Shrinkage and Selection Via the Lasso. J. R. Stat. Soc. Ser. B Methodol. 1996, 58, 267–288.

52. Hesterberg, T.; Choi, N.H.; Meier, L.; Fraley, C. Least angle and ℓ1 penalized regression: A review. Stat. Surv. 2008, 2, 61–93.

53. Abdulsalam, S.O.; Mohammed, A.A.; Ajao, J.F.; Babatunde, R.S.; Ogundokun, R.O.; Nnodim, C.T.; Arowolo, M.O. Performance Evaluation of ANOVA and RFE Algorithms for Classifying Microarray Dataset Using SVM. Lect. Notes Bus. Inf. Process. 2020, 480–492.

54. Guyon, I.; Weston, J.; Barnhill, S.; Vapnik, V. Gene Selection for Cancer Classification using Support Vector Machines. Mach. Learn. 2002, 46, 389–422.

55. Zavadskas, E.K.; Turskis, Z. A new additive ratio assessment (ARAS) method in multi-criteria decision-making. Technol. Econ. Dev. Econ. 2010, 16, 159–172.

56. Radović, D.; Stević, Ž.; Pamučar, D.; Zavadskas, E.K.; Badi, I.; Antuchevičiene, J.; Turskis, Z. Measuring Performance in Transportation Companies in Developing Countries: A Novel Rough ARAS Model. Symmetry 2018, 10, 434.

57. Maulana, C.; Hendrawan, A.; Pinem, A.P.R. Pemodelan Penentuan Kredit Simpan Pinjam Menggunakan Metode Additive Ratio Assessment (Aras) [Modeling Savings and Loan Credit Determination Using the Additive Ratio Assessment (ARAS) Method]. J. Pengemb. Rekayasa Teknol. 2019, 15, 7–11.

58. García, S.; Luengo, J.; Herrera, F. Data preparation basic models. In Data Preprocessing in Data Mining; Springer International Publishing: Cham, Switzerland, 2015; pp. 39–57.

59. Kotsiantis, S.B.; Kanellopoulos, D.; Pintelas, P.E. Data preprocessing for supervised leaning. Int. J. Comput. Sci. 2006, 1, 111–117.

60. Mosquera, R.; Castrillón, O.D.; Parra, L. Prediction of Psychosocial Risks in Colombian Teachers of Public Schools using Machine Learning Techniques. Inf. Tecnol. 2018, 29, 267–280.

61. Newman, M.E.J. Power laws, Pareto distributions and Zipf’s law. Contemp. Phys. 2005, 46, 323–351.

62. Luque, A.; Carrasco, A.; Martín, A.; de las Heras, A. The impact of class imbalance in classification performance metrics based on the binary confusion matrix. Pattern Recognit. 2019, 91, 216–231.

63. Takahashi, K.; Yamamoto, K.; Kuchiba, A.; Koyama, T. Confidence interval for micro-averaged F1 and macro-averaged F1 scores. Appl. Intell. 2021, 1–12.

64. Pillai, I.; Fumera, G.; Roli, F. F-measure optimisation in multi-label classifiers. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 2424–2427.

65. Van Asch, V. Macro- and Micro-Averaged Evaluation Measures. 2013, pp. 1–27. Available online: https://www.semanticscholar.org/paper/Macro-and-micro-averaged-evaluation-measures-%5B-%5B-%5D-Asch/1d106a2730801b6210a67f7622e4d192bb309303 (accessed on 14 November 2021).

66. Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond accuracy, F-score and ROC: A family of discriminant measures for performance evaluation. In AI 2006: Advances in Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1015–1021.

67. Yin, M.; Vaughan, J.W.; Wallach, H. Understanding the effect of accuracy on trust in machine learning models. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

| No | Detail | Specification |
|---|---|---|
| 1 | Number of Features | 118 |
| 2 | Number of Classes | 4 |
| 3 | Number of Instances | 4890 |
| 4 | Class Names | Low, Medium, High, Very High |
| 5 | Feature Domains | Sociodemographic (S), Demands of the Job (D), Control over Work (C), Leadership and Social Relations at Work (L), Rewards (R) |

| No | Model | No. of Features | Features Selected |
|---|---|---|---|
| 1 | Baseline [29,60] | 118 | All Features |
| 2 | ANOVA [35] | 11 | S2, S3, S4, S5, S7, S8, S10, D3, D28, L1, R8 |
| 3 | Chi-Square [39,40] | 11 | S2, S4, S5, S10, D3, D6, D28, C16, L1, R5, R8 |
| 4 | MI [45,46] | 11 | S2, S3, S7, S8, S10, D9, D27, D37, C21, L1, L4 |
| 5 | EFS [47,48] | 24 | S1, S2, S3, D2, D5, D8, D14, D17, D19, D25, D27, D31, D34, D38, C2, C9, L3, L9, L10, L12, L30, R3, R4, M1 |
| 6 | ERF [50] | 9 | S2, S3, S4, S5, S7, S8, S10, D3, L1 |
| 7 | Lasso [52] | 10 | S1, S3, S4, S5, S7, S8, S10, M1, M2, M3 |
| 8 | RFE [54] | 11 | S2, S3, S8, D2, D18, D21, D26, D28, D38, L17, R5 |
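
ANOVA and Chi-Square (like MI) are filter methods: each feature is scored independently against the class label and only the k highest-scoring features are kept (k = 11 for these methods in the table above). The pure-Python sketch below illustrates two such scores, the one-way ANOVA F statistic and the count-based chi-square score (the formulation scikit-learn's `chi2` uses, which assumes non-negative feature values). It is not the authors' implementation; the functions `anova_f`, `chi2_score`, and `select_top_k` and the toy data are hypothetical.

```python
"""Filter-style feature scoring: one-way ANOVA F and count-based chi-square.

Illustrative sketch only; the toy data below is hypothetical, not the
psychosocial dataset, and at least two classes are assumed.
"""
from collections import defaultdict
from typing import Callable, Dict, List, Sequence


def _group_by_class(column: Sequence[float], y: Sequence[int]) -> Dict[int, List[float]]:
    """Split one feature column into per-class lists of values."""
    groups: Dict[int, List[float]] = defaultdict(list)
    for value, label in zip(column, y):
        groups[label].append(value)
    return groups


def anova_f(column: Sequence[float], y: Sequence[int]) -> float:
    """One-way ANOVA F statistic of a single feature against the class labels."""
    groups = _group_by_class(column, y)
    n, k = len(column), len(groups)
    grand_mean = sum(column) / n
    means = {label: sum(g) / len(g) for label, g in groups.items()}
    # Between-class and within-class sums of squares.
    ss_between = sum(len(g) * (means[label] - grand_mean) ** 2 for label, g in groups.items())
    ss_within = sum((v - means[label]) ** 2 for label, g in groups.items() for v in g)
    if ss_within == 0:
        # No within-class spread: a perfectly separating feature, or a constant one.
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def chi2_score(column: Sequence[float], y: Sequence[int]) -> float:
    """Chi-square score that treats non-negative feature values as counts."""
    groups = _group_by_class(column, y)
    n, total = len(column), sum(column)
    score = 0.0
    for g in groups.values():
        observed = sum(g)              # feature mass observed in this class
        expected = total * len(g) / n  # mass expected if feature and class were independent
        if expected > 0:
            score += (observed - expected) ** 2 / expected
    return score


def select_top_k(X: Sequence[Sequence[float]], y: Sequence[int], k: int,
                 score_fn: Callable[[Sequence[float], Sequence[int]], float]) -> List[int]:
    """Return the indices of the k highest-scoring feature columns."""
    columns = list(zip(*X))
    scores = [score_fn(col, y) for col in columns]
    return sorted(range(len(columns)), key=lambda j: scores[j], reverse=True)[:k]


# Hypothetical toy data: feature 0 separates the two classes, feature 1 does not.
X = [[1, 3], [2, 1], [1, 2], [5, 2], [6, 3], [5, 1]]
y = [0, 0, 0, 1, 1, 1]
print("ANOVA F:", [anova_f(col, y) for col in zip(*X)])
print("chi-square:", [chi2_score(col, y) for col in zip(*X)])
print("top-1 by ANOVA:", select_top_k(X, y, k=1, score_fn=anova_f))
```

On real data, scikit-learn's `SelectKBest` with `f_classif`, `chi2`, or `mutual_info_classif` as the scoring function performs the same score-and-keep-top-k step; this section does not state which implementation the authors used.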

| Model | Accuracy | Precision | Recall | F1-Score | Weighted Precision | Weighted Recall | Weighted F1-Score | Train Time (s) | Inference Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| Baseline | 0.9725 | 0.9581 | 0.9427 | 0.9501 | 0.9721 | 0.9725 | 0.9722 | 34.3910 | 0.9917 |
| ANOVA | 0.9734 | 0.9585 | 0.9453 | 0.9517 | 0.9730 | 0.9734 | 0.9731 | 3.9885 | 0.9933 |
| Chi-Square | 0.9752 | 0.9614 | 0.9484 | 0.9547 | 0.9749 | 0.9752 | 0.9750 | 3.9892 | 0.9950 |
| MI | 0.9757 | 0.9647 | 0.9479 | 0.9569 | 0.9754 | 0.9757 | 0.9753 | 3.9893 | 0.9636 |
| EFS | 0.9265 | 0.9324 | 0.9257 | 0.9267 | 0.9344 | 0.9265 | 0.9273 | 8.4677 | 0.9770 |
| ERF | 0.9770 | 0.9719 | 0.9478 | 0.9591 | 0.9769 | 0.9770 | 0.9766 | 2.0112 | 0.9823 |
| Lasso | 0.9770 | 0.9684 | 0.9513 | 0.9597 | 0.9768 | 0.9770 | 0.9768 | 2.9948 | 0.9646 |
| RFE | 0.9706 | 0.9537 | 0.9400 | 0.9470 | 0.9703 | 0.9706 | 0.9704 | 3.9878 | 0.9646 |
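
The table above reports both unweighted (presumably macro-averaged) and support-weighted precision, recall, and F1-score. The sketch below computes both kinds of average from a hypothetical 4-class confusion matrix (the counts are made up, not the paper's results; the function name `classification_summary` is illustrative). It also shows why the Weighted Recall column equals Accuracy for every model: weighting each class's recall by its support reduces to the number of correct predictions divided by the number of samples.

```python
"""Macro- vs support-weighted precision/recall/F1 from a confusion matrix.

The confusion matrix is hypothetical: rows are true classes, columns are
predicted classes (e.g. Low, Medium, High, Very High).
"""
from typing import Dict, List


def classification_summary(cm: List[List[int]]) -> Dict[str, float]:
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []  # (precision, recall, f1, support) for each class
    for c in range(n_classes):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(n_classes))  # column sum
        support = sum(cm[c])                                 # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append((precision, recall, f1, support))

    summary = {"accuracy": sum(cm[c][c] for c in range(n_classes)) / total}
    for i, name in enumerate(("precision", "recall", "f1")):
        # Macro: plain mean over classes. Weighted: mean weighted by class support.
        summary[f"macro_{name}"] = sum(s[i] for s in per_class) / n_classes
        summary[f"weighted_{name}"] = sum(s[i] * s[3] for s in per_class) / total
    return summary


cm = [
    [50, 2, 0, 0],
    [3, 40, 2, 0],
    [0, 4, 30, 1],
    [0, 0, 2, 10],
]
m = classification_summary(cm)
for key, value in m.items():
    print(f"{key:>18}: {value:.4f}")
```

Macro averages give every class the same influence, so they drop when small classes (here the fourth row) are harder to predict; weighted averages track the majority classes instead.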

| Alternative | A | P | R | FS | WP | WR | WFS | TT | IT |
|---|---|---|---|---|---|---|---|---|---|
| Optimization | Benefit | Benefit | Benefit | Benefit | Benefit | Benefit | Benefit | Cost | Cost |
| Weight (w) | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |
| Baseline | 0.9725 | 0.9581 | 0.9427 | 0.9501 | 0.9721 | 0.9725 | 0.9722 | 34.3910 | 0.9917 |
| ANOVA | 0.9734 | 0.9585 | 0.9453 | 0.9517 | 0.9730 | 0.9734 | 0.9731 | 3.9885 | 0.9933 |
| Chi-Square | 0.9752 | 0.9614 | 0.9484 | 0.9547 | 0.9749 | 0.9752 | 0.9750 | 3.9892 | 0.9950 |
| MI | 0.9757 | 0.9647 | 0.9479 | 0.9569 | 0.9754 | 0.9757 | 0.9753 | 3.9893 | 0.9636 |
| EFS | 0.9265 | 0.9324 | 0.9257 | 0.9267 | 0.9344 | 0.9265 | 0.9273 | 8.4677 | 0.9770 |
| ERF | 0.9770 | 0.9719 | 0.9478 | 0.9591 | 0.9769 | 0.9770 | 0.9766 | 2.0112 | 0.9823 |
| Lasso | 0.9770 | 0.9684 | 0.9513 | 0.9597 | 0.9768 | 0.9770 | 0.9768 | 2.9948 | 0.9646 |
| RFE | 0.9706 | 0.9537 | 0.9400 | 0.9470 | 0.9703 | 0.9706 | 0.9704 | 3.9878 | 0.9646 |

A = accuracy; P = precision; R = recall; FS = F1-score; WP = weighted precision; WR = weighted recall; WFS = weighted F1-score; TT = training time (s); IT = inference time (s).
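
The ARAS steps that turn this decision matrix into the normalized, weighted, and ranked tables that follow can be written as below. This is the standard formulation of Zavadskas and Turskis, restated with assumed notation: $x_{ij}$ is the value of alternative $i$ on criterion $j$, $i = 0$ denotes the optimal alternative A0, $i = 1, \dots, m$ are the $m = 8$ feature selection alternatives, $j = 1, \dots, n$ are the $n = 9$ criteria, and $w_j$ are the weights listed above. That the paper uses exactly this variant is an assumption.

```latex
% Step 1: optimal alternative A_0
x_{0j} =
\begin{cases}
  \max_{i} x_{ij}, & j \text{ is a benefit criterion},\\
  \min_{i} x_{ij}, & j \text{ is a cost criterion}.
\end{cases}

% Step 2: normalization over all alternatives k = 0, \dots, m
% (cost criteria are inverted before normalizing)
\bar{x}_{ij} =
\begin{cases}
  \dfrac{x_{ij}}{\sum_{k=0}^{m} x_{kj}}, & \text{benefit},\\[2ex]
  \dfrac{1/x_{ij}}{\sum_{k=0}^{m} 1/x_{kj}}, & \text{cost}.
\end{cases}

% Step 3: weighted normalized matrix, with \sum_{j=1}^{n} w_j = 1
\hat{x}_{ij} = w_j \, \bar{x}_{ij}

% Step 4: optimality function of alternative i
S_i = \sum_{j=1}^{n} \hat{x}_{ij}

% Step 5: utility degree relative to the optimal alternative
K_i = \frac{S_i}{S_0}, \qquad 0 < K_i \le 1
```

Because A0 holds the best value of every criterion and each column shares one denominator, $S_0 \ge S_i$ for every alternative, which is why $K_i$ never exceeds 1 and the alternative with the largest $K_i$ ranks first.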

| Alternative | A | P | R | FS | WP | WR | WFS | TT | IT |
|---|---|---|---|---|---|---|---|---|---|
| Weight (w) | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |
| A0 | 0.1120 | 0.1125 | 0.1119 | 0.1120 | 0.1119 | 0.1120 | 0.1120 | 0.2007 | 0.1124 |
| Baseline | 0.1115 | 0.1109 | 0.1109 | 0.1109 | 0.1113 | 0.1115 | 0.1114 | 0.0112 | 0.1120 |
| ANOVA | 0.1116 | 0.1109 | 0.1112 | 0.1111 | 0.1114 | 0.1116 | 0.1116 | 0.1012 | 0.1090 |
| Chi-Square | 0.1118 | 0.1113 | 0.1116 | 0.1115 | 0.1117 | 0.1118 | 0.1118 | 0.1012 | 0.1088 |
| MI | 0.1118 | 0.1116 | 0.1115 | 0.1117 | 0.1117 | 0.1118 | 0.1118 | 0.1012 | 0.1124 |
| EFS | 0.1062 | 0.1079 | 0.1089 | 0.1082 | 0.1070 | 0.1062 | 0.1063 | 0.0477 | 0.1108 |
| ERF | 0.1120 | 0.1125 | 0.1115 | 0.1120 | 0.1119 | 0.1120 | 0.1120 | 0.2007 | 0.1102 |
| Lasso | 0.1120 | 0.1121 | 0.1119 | 0.1120 | 0.1119 | 0.1120 | 0.1120 | 0.1348 | 0.1122 |
| RFE | 0.1112 | 0.1104 | 0.1106 | 0.1106 | 0.1111 | 0.1112 | 0.1112 | 0.1012 | 0.1122 |

| Alternative | A | P | R | FS | WP | WR | WFS | TT | IT |
|---|---|---|---|---|---|---|---|---|---|
| A0 | 0.0224 | 0.0113 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0201 | 0.0112 |
| Baseline | 0.0223 | 0.0111 | 0.0111 | 0.0111 | 0.0111 | 0.0112 | 0.0111 | 0.0011 | 0.0112 |
| ANOVA | 0.0223 | 0.0111 | 0.0111 | 0.0111 | 0.0111 | 0.0112 | 0.0112 | 0.0101 | 0.0109 |
| Chi-Square | 0.0224 | 0.0111 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0101 | 0.0109 |
| MI | 0.0224 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0101 | 0.0112 |
| EFS | 0.0212 | 0.0108 | 0.0109 | 0.0108 | 0.0107 | 0.0106 | 0.0106 | 0.0048 | 0.0111 |
| ERF | 0.0224 | 0.0113 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0201 | 0.0110 |
| Lasso | 0.0224 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0112 | 0.0135 | 0.0112 |
| RFE | 0.0223 | 0.0110 | 0.0111 | 0.0111 | 0.0111 | 0.0111 | 0.0111 | 0.0101 | 0.0112 |
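
As a worked check for the optimal alternative A0 on the accuracy criterion (a benefit criterion with w = 0.2), the decision-matrix values normalize and weight as follows, and summing A0's row of the weighted normalized matrix gives its optimality function:

```latex
\bar{x}_{0,A} = \frac{0.9770}{0.9770 + 0.9725 + 0.9734 + 0.9752 + 0.9757 + 0.9265 + 0.9770 + 0.9770 + 0.9706}
             = \frac{0.9770}{8.7249} \approx 0.1120

\hat{x}_{0,A} = 0.2 \times 0.1120 \approx 0.0224

S_0 = 0.0224 + 0.0113 + 5 \times 0.0112 + 0.0201 + 0.0112 = 0.1210
```

The last line reproduces the S value reported for A0 in the optimality-function table, which serves as the denominator of every utility degree K.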

| Alternative | i | Optimality Function (S) | Utility Degree (K) | Rank |
|---|---|---|---|---|
| A0 | 0 | 0.1210 | - | - |
| Baseline | 1 | 0.1013 | 0.8372 | 8 |
| ANOVA | 2 | 0.1101 | 0.9099 | 5 |
| Chi-Square | 3 | 0.1105 | 0.9132 | 4 |
| MI | 4 | 0.1109 | 0.9165 | 3 |
| EFS | 5 | 0.1015 | 0.8388 | 7 |
| ERF | 6 | 0.1208 | 0.9983 | 1 |
| Lasso | 7 | 0.1143 | 0.9446 | 2 |
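
The full ARAS pipeline can be sketched in Python using the decision matrix (criterion values, benefit/cost types, and weights) from the tables above. This is an illustrative reimplementation of the standard method, not the authors' code; the function name `aras` and the constants are hypothetical. Because it starts from the rounded table values and normalizes without intermediate rounding, its S and K values may differ slightly from the reported ones, although the resulting order (ERF, Lasso, MI, Chi-Square, ANOVA, RFE, EFS, Baseline) agrees with the final ranking. It also produces S and K for RFE, which the optimality-function table omits.

```python
"""Additive Ratio Assessment (ARAS) applied to the feature-selection decision matrix.

Illustrative reimplementation of the standard ARAS steps; the values are copied
from the decision-matrix table, not recomputed from the dataset.
"""
from typing import Dict, List, Tuple

# Column order: A, P, R, FS, WP, WR, WFS, TT, IT
WEIGHTS = [0.2, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]
IS_BENEFIT = [True] * 7 + [False, False]  # training and inference time are costs

DECISION_MATRIX: Dict[str, List[float]] = {
    "Baseline":   [0.9725, 0.9581, 0.9427, 0.9501, 0.9721, 0.9725, 0.9722, 34.3910, 0.9917],
    "ANOVA":      [0.9734, 0.9585, 0.9453, 0.9517, 0.9730, 0.9734, 0.9731, 3.9885, 0.9933],
    "Chi-Square": [0.9752, 0.9614, 0.9484, 0.9547, 0.9749, 0.9752, 0.9750, 3.9892, 0.9950],
    "MI":         [0.9757, 0.9647, 0.9479, 0.9569, 0.9754, 0.9757, 0.9753, 3.9893, 0.9636],
    "EFS":        [0.9265, 0.9324, 0.9257, 0.9267, 0.9344, 0.9265, 0.9273, 8.4677, 0.9770],
    "ERF":        [0.9770, 0.9719, 0.9478, 0.9591, 0.9769, 0.9770, 0.9766, 2.0112, 0.9823],
    "Lasso":      [0.9770, 0.9684, 0.9513, 0.9597, 0.9768, 0.9770, 0.9768, 2.9948, 0.9646],
    "RFE":        [0.9706, 0.9537, 0.9400, 0.9470, 0.9703, 0.9706, 0.9704, 3.9878, 0.9646],
}


def aras(matrix: Dict[str, List[float]], weights: List[float],
         is_benefit: List[bool]) -> Tuple[float, Dict[str, float], Dict[str, float]]:
    """Return (S_0, S_i per alternative, K_i per alternative)."""
    names = list(matrix)
    rows = [matrix[name] for name in names]
    n = len(weights)
    # Step 1: optimal alternative A0 takes the best value of every criterion.
    a0 = [max(r[j] for r in rows) if is_benefit[j] else min(r[j] for r in rows)
          for j in range(n)]
    # Step 2: invert cost criteria, then divide each column by its sum (A0 included).
    transformed = [[x if is_benefit[j] else 1.0 / x for j, x in enumerate(r)]
                   for r in [a0] + rows]
    col_sums = [sum(r[j] for r in transformed) for j in range(n)]
    # Steps 3-4: weight the normalized values and add them up (optimality function S).
    s_values = [sum(weights[j] * r[j] / col_sums[j] for j in range(n)) for r in transformed]
    s0 = s_values[0]
    s = dict(zip(names, s_values[1:]))
    # Step 5: utility degree K_i = S_i / S_0.
    k = {name: value / s0 for name, value in s.items()}
    return s0, s, k


s0, s, k = aras(DECISION_MATRIX, WEIGHTS, IS_BENEFIT)
ranking = sorted(k, key=k.get, reverse=True)
for position, name in enumerate(ranking, start=1):
    print(f"{position}. {name:<10} S = {s[name]:.4f}  K = {k[name]:.4f}")
```

Adding the optimal alternative A0 before normalizing is what makes K an absolute score in (0, 1] rather than a purely relative one, so the weights can be changed (for example, to emphasize training time) and the alternatives re-ranked by calling `aras` again with a different `WEIGHTS` list.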

| Model | Rank |
|---|---|
| ERF | 1 |
| Lasso | 2 |
| MI | 3 |
| Chi-Square | 4 |
| ANOVA | 5 |
| RFE | 6 |
| EFS | 7 |
| Baseline | 8 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Muttakin, F.; Wang, J.-T.; Mulyanto, M.; Leu, J.-S. Evaluation of Feature Selection Methods on Psychosocial Education Data Using Additive Ratio Assessment. Electronics 2022, 11, 114. https://doi.org/10.3390/electronics11010114
