1. Introduction

The software-defined networking (SDN) architecture is a network model that separates network functions into a control plane and a data plane to allow easier implementation of new protocols and applications [1]. The control plane manages the network by transmitting commands to the switches residing in the data plane [2]. The separation of responsibilities between the two planes is realized through a programming interface between the data plane and the control plane components [3]. The most important benefits of the SDN paradigm are that it introduces network programmability, improves network scalability, allows functions above the control layer to be integrated in software, and allows functions to be implemented independently of data consumption [4]. Alongside these benefits, the introduction of multiple layers also increases the attack surface of the environment, i.e., the total sum of points of possible unauthorized entry into the network [5,6]. SDN security is the topic discussed throughout this paper.

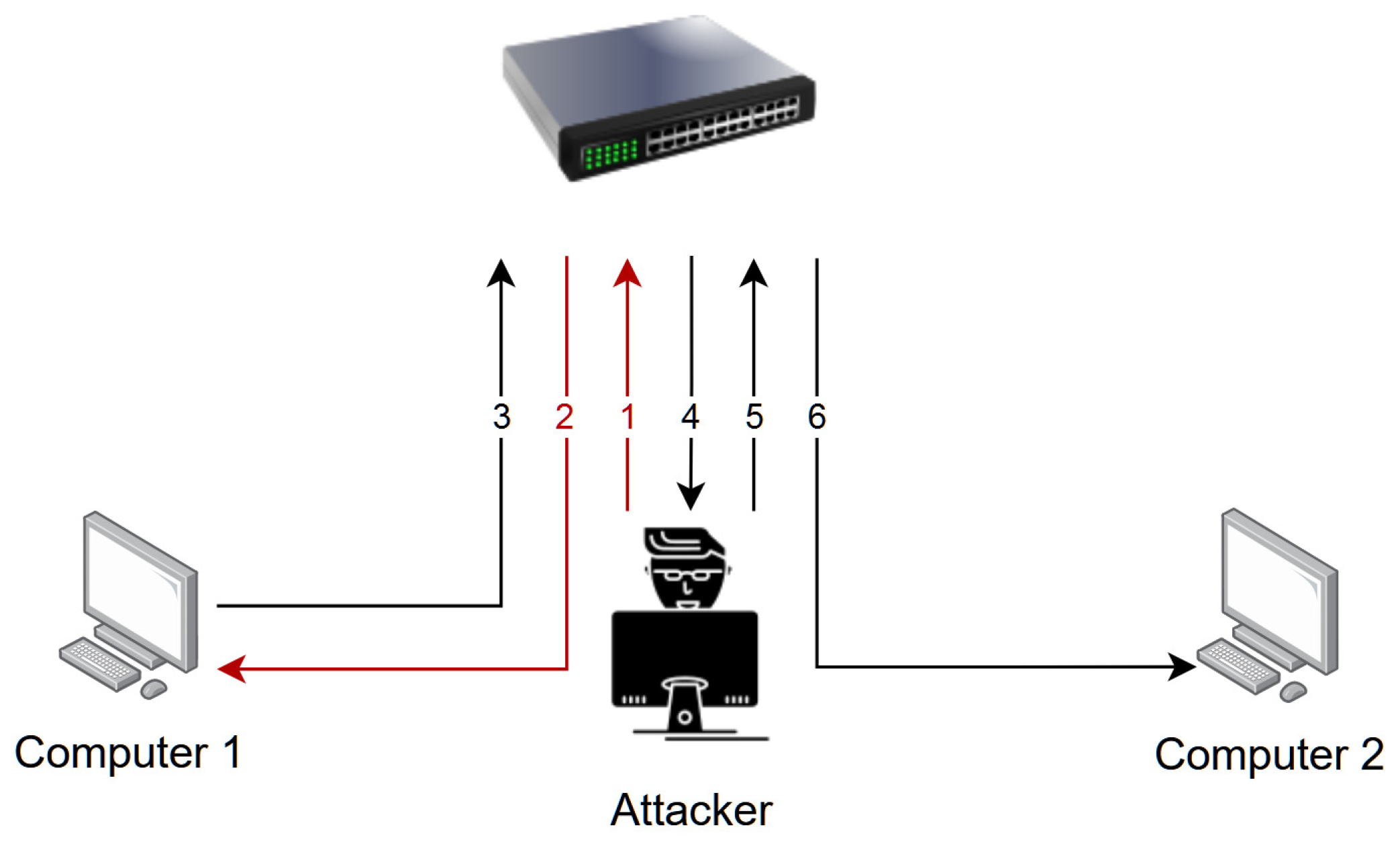

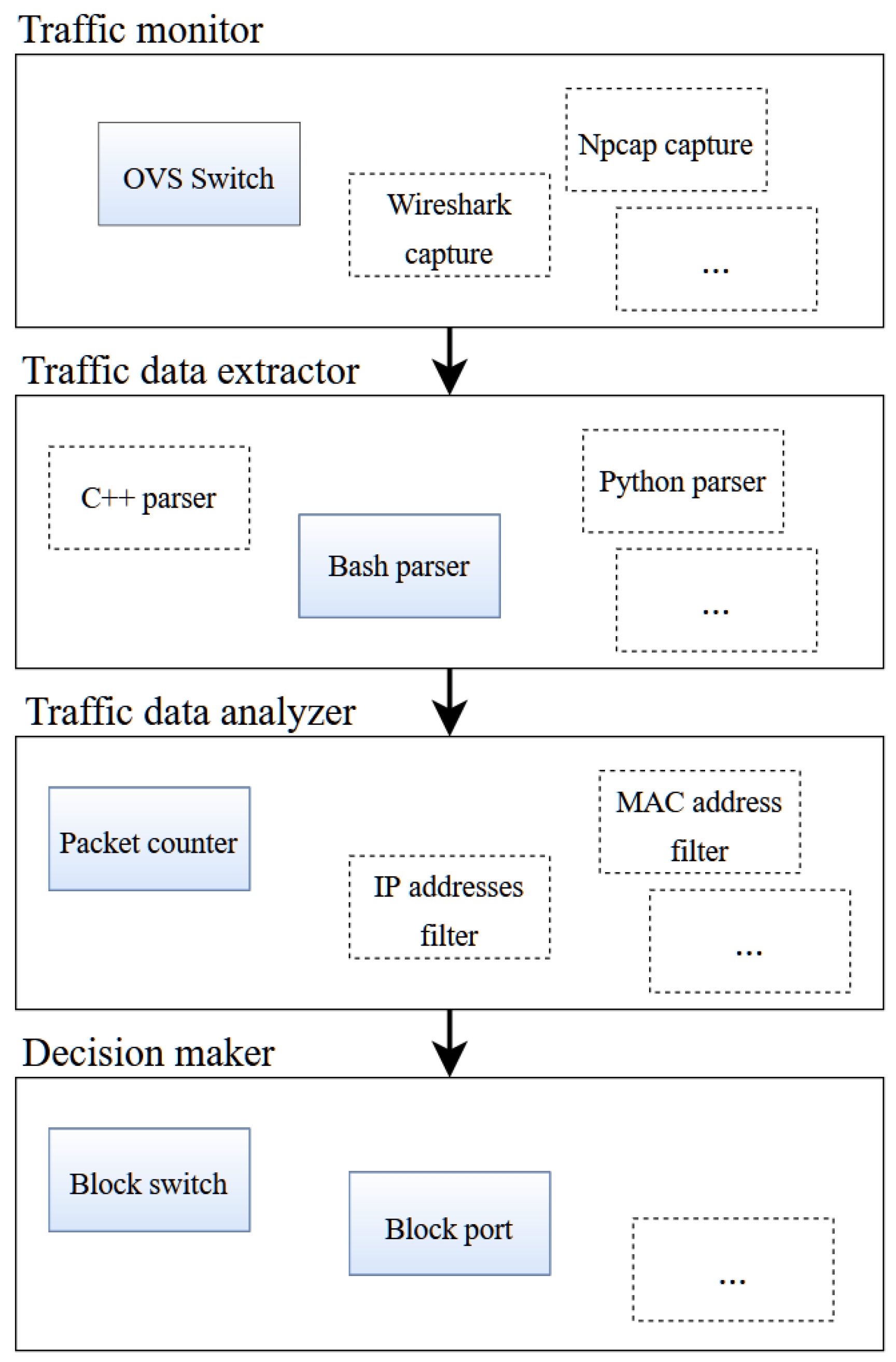

Figure 1 shows the architecture of the traditional SDN and the locations where security solutions are deployed to address ARP spoofing-based attacks. What is important to note is that existing solutions are deployed at every architectural layer. SDNs are heterogeneous by nature; therefore, they must support a wide range of technologies implemented at various layers of the SDN architecture. Thus, customizable SDN solutions should be introduced to accommodate different running environments and programming environments (e.g., OpenVSwitch-based, npcap-based, etc.).

Among the most common types of attacks in Ethernet-based networks are attacks based on the ARP Spoofing technique [7]. This technique allows an attacker to assume the identity of another network device at the Data Link Layer of the ISO/OSI reference model before the data is processed by higher-level protocols, such as IP, TCP, or HTTP.

The reason this attack is very common nowadays is that a multitude of automated tools can be used to perform it (e.g., Ettercap [8], Arpspoof [9], and Arpoison [10]) without the attackers having to understand all the technical details of the operation. This is also facilitated by the information widely available on the internet [11]. As a result of the flexibility offered by the SDN environment, various security functions can be implemented at different layers of the SDN architecture to help detect and prevent these attacks [12].

The current work presents the implementation of an extendable and configurable software solution for mitigating ARP spoofing-based attacks that is applied in the data plane layer of the SDN. The solution aims to improve the response time and effectiveness in mitigating the attack. The complexity of the proposed approach also stems from integrating a wide range of tools and programming languages to create test networks, generate attacks, and provide a detection and mitigation solution. Mininet [13,14] was used as a network emulator to generate various network topologies for testing. Kali Linux and the Ettercap tool were used to perform the MitM attack in the test networks. A new type of distributed MitM attack has also been introduced to study the behavior of different attackers. The detection and mitigation solution is implemented as an OpenVSwitch [15] extension, which analyzes the switch flow tables for data traffic generated by the hosts connected to the network. Linux bash and Python were used to implement the solution, with a focus on keeping it extendable and customizable for future development.

The current work is original because it offers novel approaches to software architectural decisions, testing scenarios, and attacker behavior analysis and mitigation possibilities. The proposed architecture aims to create an extendable and customizable SDN platform that accommodates different running environments (e.g., OpenVSwitch-based, npcap-based, etc.) and programming environments. By implementing several specific customizations, the proposed solution allows an SDN provider to integrate various technologies for different components in the architecture (traffic monitoring, data extraction, data analysis, and decision making) based on the available expertise. This is necessary because there is a wide technological variety in the networking world and technical integrability is of paramount importance. Because the solution is implemented under the Linux operating system, it can be used across the industry, as most network devices are Linux-based [16]. To improve the response time in detecting and mitigating the attack, the current solution is implemented strictly in the data plane [17]. Other studies, although focusing on the data plane, still require a degree of interaction with the control plane [12,18,19], and, to the best of our knowledge, no other research proposes such an isolated solution. The test networks are created with different congestion levels and different numbers of devices to offer a comprehensive understanding of the computational impact and to clearly identify the feasibility of deploying the solution in certain environments depending on the criticality level. This provides a more detailed overview of the attacks in more than one network type, a scaling benefit offered by Mininet. The current research also introduces a new distributed ARP spoofing-based MitM attack that has not been formalized before and performs an in-depth attack behavior analysis. In the proposed distributed MitM attack, a malicious actor splits its spoofing traffic across two or more devices at a reduced rate so as not to trigger the detection threshold for a single device, thereby reducing the likelihood of detection.

The main original contributions presented in the current work are summarized below:

Implementation of a solution that detects and mitigates ARP spoofing-based attacks in the data plane layer of an SDN

Customizability in the general architecture of the SDN solution capable of accommodating different running environments (e.g., OpenVSwitch-based, npcap-based, etc.) and integrating several technologies in the software layers.

Implementation strictly in the SDN data plane.

More comprehensive performance evaluation in different size networks with various congestion levels. The evaluation also presents the impact that the solution has on the network throughput, whether the MitM attack is taking place or not.

The conceptualization of a distributed ARP spoofing-based attack and a behavioral analysis of the attacker’s actions. This also brings a novelty in attack handling compared to related research as a solution is provided for this distributed attack.

New benchmark proposal providing new testing scenarios for future research comparisons, contributing to the general body of knowledge.

The paper is structured as follows:

Section 2 provides state-of-the-art information on ARP spoofing-based attacks and mitigation techniques at different SDN layers and introduces prerequisite theoretical technical information.

Section 3 presents the system architecture and the implementation of the proposed solution.

Section 4 presents and discusses the testing environment and the obtained experimental results.

Section 5 discusses further development ideas that highlight the relevance of our work in a future context.

Section 6 concludes the paper by adding ideas for further research.

2. Related Research

With the increased number of SDN deployments in recent years [20], together with the development of tools that facilitate the installation and configuration of SDNs, attention has turned not only to the gains in performance and scalability but also to the security risks to which this architecture is susceptible. The layered separation of the SDN planes increases the attack surface by allowing multiple entry points for attackers; therefore, various techniques can be employed to mitigate the security threats at different layers [4]. The topics covered in this chapter are ARP spoofing-based attacks, various techniques that can be used to mitigate ARP spoofing-based threats, and SDN approaches for threat detection and mitigation. Each topic is related to the current research by comparing the contributions and approaches of the current work with the state-of-the-art.

ARP spoofing-based attacks allow a malicious actor to falsely assume the identity of a specific network device by generating requests which appear to originate from a valid host. The network requests generated by the attacker are ARP request messages which announce that a specific IP address corresponds to a false MAC address, i.e., the MAC address of the attacker [12]. This way, whenever a network device intends to communicate with the advertised IP address, it will add the wrong Layer 2 information to the network packet, causing the other network devices (e.g., switches, bridges) to deliver the packet to the wrong destination (the attacker’s machine). It is important to note that the ARP spoofing attack is the enabler of other attacks such as MitM; therefore, this is the first attack that must be mitigated, not the future attacks it facilitates. In an ARP poisoning-based MitM attack, the attacker can go unnoticed because they are placed between two communicating parties and relay or modify the data exchanged between them [21]. Our research simulates such ARP spoofing attacks by performing the MitM attack with Ettercap, and also with a custom script that generates a parameterizable number of ARP requests, thus allowing the detection and mitigation techniques to be tested more thoroughly.
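The effect of a spoofed ARP announcement on a host can be illustrated with a minimal sketch. It models the ARP cache as a plain dictionary that accepts the newest announcement without validation; the function names and the example addresses are hypothetical and serve only to show why frames end up at the attacker's MAC address.

```python
# Minimal model of an ARP cache on a victim host (illustrative only).

def apply_arp_message(cache, sender_ip, sender_mac):
    """Record the IP-to-MAC binding announced by an ARP message.

    Like a host without ARP validation, the cache accepts the newest
    announcement and overwrites any earlier binding for the same IP.
    """
    cache[sender_ip] = sender_mac
    return cache


def next_hop_mac(cache, dst_ip):
    """Return the Layer 2 address a frame for dst_ip would be sent to."""
    return cache.get(dst_ip)


# Legitimate state: the IP address is bound to the real host's MAC.
victim_cache = {}
apply_arp_message(victim_cache, "192.168.0.4", "00:00:00:00:00:02")
before = next_hop_mac(victim_cache, "192.168.0.4")

# Spoofed announcement: the attacker claims the same IP with its own MAC.
apply_arp_message(victim_cache, "192.168.0.4", "00:00:00:00:00:03")
after = next_hop_mac(victim_cache, "192.168.0.4")

print(before, "->", after)
```

After the second call, every frame the victim addresses to 192.168.0.4 carries the attacker's MAC address, which is the precondition for the MitM attack.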

The survey presented in [12] highlights three major categories of solutions for ARP spoofing: traffic pattern-based, flow graphs, and IP-MAC address binding analysis. The solution presented in [22] is a traffic analysis application that monitors and analyzes all ARP request packets in the network and blocks any packet that does not match what is stored in its database. The paper [23] presents an architecture improvement that helps prevent an ARP MitM (man-in-the-middle) attack. This solution is based on the translation and segmentation of IP addresses so that an attacker cannot detect them. The solution presented in [24] is based on the IP-MAC address bindings on the other network devices. This solution seeks to ease the work of the controller by adding new tasks to the switches. IP-MAC mappings are maintained as OpenFlow flows in the switches; when a new packet is sent, it is checked, and if it does not match the existing mappings, the switch sends a request to the controller to learn how to process the packet. With our proposed solution, the aim is that the switch no longer sends that request to the controller and makes the decision itself. Many SDNs use the OpenFlow protocol because it defines the requirements and standards for communication between the control plane and the data plane. It aims to develop solutions for accelerating services in SDN. The mechanism of operation on which it is based consists of several steps, as reported in the article [25].
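The IP-MAC binding category described above can be sketched as a lookup that the switch resolves locally instead of forwarding the decision to a controller. This is an illustration of the binding idea only, not the detection logic of the current work (which is based on traffic volume); the function name and the three outcome labels are assumptions.

```python
def check_binding(bindings, src_ip, src_mac):
    """Classify an ARP announcement against known IP-MAC bindings.

    Returns "learn" for an IP seen for the first time, "accept" when the
    announcement matches the stored binding, and "reject" when the IP is
    already bound to a different MAC address.
    """
    src_mac = src_mac.lower()
    known_mac = bindings.get(src_ip)
    if known_mac is None:
        bindings[src_ip] = src_mac  # first sighting: store the binding
        return "learn"
    if known_mac == src_mac:
        return "accept"
    return "reject"  # decided locally, no controller round trip


bindings = {}
first = check_binding(bindings, "192.168.0.4", "00:00:00:00:00:02")
repeat = check_binding(bindings, "192.168.0.4", "00:00:00:00:00:02")
spoofed = check_binding(bindings, "192.168.0.4", "00:00:00:00:00:03")
print(first, repeat, spoofed)
```

A rejected announcement leaves the stored binding untouched, so the decision does not depend on any component outside the switch.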

Another technique for detecting ARP spoofing attacks is based on flow graphs. Flow graphs are built by intercepting OpenFlow packets and, through their structured interpretation, are used to validate the data of each flow. Ref. [26] generates dynamic flow graphs based on OpenFlow packets exchanged between the controller and switch components. The advantage of the solution is that it does not require controller updates for it to work, but it does require the presence of a controller sending or receiving OpenFlow packets so that the flow graphs can be generated.

The solution presented in [27] does not operate at the SDN level; however, it uses JPCAP as a traffic monitor component, which could replace the current article’s use of OpenVSwitch. The solution presented in [28] uses OpenVSwitch, but only for IP-MAC bindings. The current solution is based on traffic volume and uses OpenVSwitch for a different purpose; the current solution is itself an OpenVSwitch extension. In addition, the referenced solution requires an SDN controller to implement the functionality, whereas the solution in the current research is implemented solely at the data plane level. A similar situation can be found in [29], which offers a method implemented in the control plane’s Pox controller that contains a list of blacklisted MAC addresses. This list is forwarded to the OpenVSwitch via the OpenFlow protocol. The solution in the current article differs from the referenced study in that there is no interaction with the control plane: the solution is implemented at the switch level as an OpenVSwitch extension and does not rely on a predefined list of MAC addresses, but is capable of detecting any MAC address that starts to behave as an attacker. The solution presented in [30] designs a multistage security algorithm to detect whether an attack is in progress. It uses the SDN architecture and specific SDN layer locations for the different stages, including the existence of an SDN controller. In contrast, the solution implemented in the current paper can detect the attack on a single level (the switch level) with the implemented OpenVSwitch extension, thereby reducing SDN complexity and localizing the implementation on a single network node. With respect to the previous related articles, arguments can be made for the increased performance obtained without controller interaction [17].

In [18], a study is presented that implements several methods for denying ARP spoofing attacks. The D-ARPSpoof solution is implemented on the Open Floodlight controller [31] in the control plane. The current research differs in the approach by implementing the solution directly in the data plane without needing controller interaction. In addition, the current work performs a more thorough analysis of different-sized networks. The study presented in [19], although it presents a data plane solution, requires a Ryu SDN controller [32] to define the rules, which are, in turn, followed by the data plane switch. An important factor where the current work differs is that the current work integrates the concept of edge computing to a limited degree, i.e., delegating some responsibility to the outer layers of the network and not keeping all the logic at the controller level. This is an important difference in what concerns the security of the system: as security threats have to be handled with a very short response time, eliminating the control component improves the reaction time of the solution. Briefly, giving a degree of authority to the data plane switch components allows the system to perform better for some local operations.

The study [19] also uses Mininet; however, it lacks concrete specifications on how the attacks were launched or simulated. The current work attempts to fill these gaps. From a technical perspective, the paper [33] proposes a client-side detection solution that is also implemented in Linux bash. However, it only detects attacks on the client side and not on a centralized device, as the current work does at the switch level.

The measure of success of this solution is given by the response time in mitigating the attack depending on the network size and by avoiding permanent actions being taken based on false-positive alerts. With these criteria in mind, several testing scenarios are performed with various attacks occurring in networks of different sizes, and the results obtained in the Mininet simulated environment are presented. The testing of the current solution uses computational overhead and general performance as measurement criteria, which are also used as a benchmark for testing in [34]. By including these concepts in the testing scenarios, the current work also addresses the impact of the solution on network performance and throughput. Possible optimization solutions are also discussed, together with the extension of the architecture with a self-healing mechanism for wrong actions taken based on false-positive alerts.

4. Testing Environment and Results

As previously described, the testing environment uses a Mininet-constructed network in a Kali Linux virtual machine. The simulations were created to trigger the ARP spoofing-based attacks using Ettercap and manual ARP request triggering. The attacks were performed in networks with different levels of congestion and different total numbers of transmitted packets. The network traffic between the Mininet devices was generated using the iPerf tool [35]. In addition, multiple test networks were created with different numbers of hosts connected to the switch to measure the impact of the network size on the time it takes the solution to detect and mitigate the attack. The different levels of congestion, namely low traffic, medium traffic, and high traffic, correspond to different percentages of the available network bandwidth. Low traffic signifies the usage of below 10% of the total network bandwidth, medium traffic signifies the usage of approximately 50% of the total network bandwidth, and high traffic signifies the usage of the total available network bandwidth. It is important to note that depending on the computer running these experiments, the actual bandwidth values may differ. In the current work, the Mininet network allowed for a total bandwidth of approximately 32 Gb/s, and traffic was generated with respect to this value.

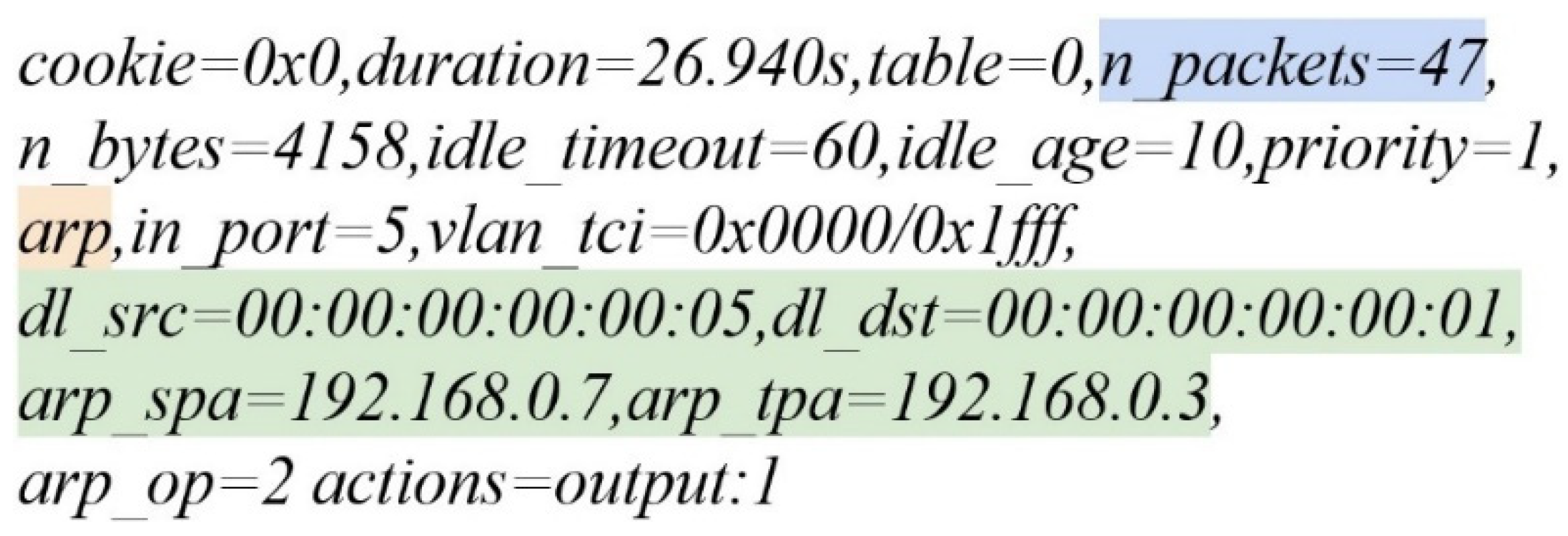

Figure 8 shows a Wireshark capture of the MitM attack in progress performed in a Mininet network using the Ettercap tool. The packet with the number 440 in the capture shows traffic generated from the IP address 192.168.0.3 to IP address 192.168.0.4. This is the selected packet which is shown in the bottom part, and it shows the following IP-MAC addresses correspondences: 192.168.0.3-00:00:00:00:00:01 and 192.168.0.4-00:00:00:00:00:02. Packet number 445 shows an ARP request triggered from MAC address 00:00:00:00:00:03 (the attacker) to the 192.168.0.3 host, informing that the IP address 192.168.0.4 is now found at the attacker’s MAC address. This is the first ARP spoofing attack in the sequence. Starting with packet number 446, the traffic will now flow to the attacker’s MAC address, so the attack was performed. Continuing with the capture, looking at packets with numbers: 449, 452, 454, and 456, these are continuous ARP spoofing requests which ensure that the attacked host continuously registers the attacker at the given MAC address, thus maintaining the attack in progress.

For a more descriptive explanation of how the testing is performed, consider Figure 9, which shows a sample of commands run in the Mininet environment to perform a testing scenario. Although this might appear trivial for more experienced engineers, the current article aims to provide clear and straightforward instructions on how to run the Mininet simulations, especially as many articles do not provide comprehensive simulation details. Mininet emulates multiple network devices, so any normal command must be run on the desired device. The current network contains ten hosts, ranging from h1 to h10, and a switch, named s1.

The following information is contained in Figure 9:

Host h3 starts the EtterCap software, meaning h3 is the attacker in this setup.

Hosts h1, h5, h7, and h9 are starting TCP servers using the iperf tool, which expect incoming traffic from other devices in the network.

Hosts h2, h4, h6, h8, and h10 use the iperf tool to create TCP clients which connect to the IP addresses of the previously mentioned servers. The “-t 600” parameter specifies that the clients will generate traffic for 600 s (i.e., 10 min). The “-l 64000” parameter specifies the packet length generated by the clients. The “-b 3G” argument specifies the traffic bandwidth, in this case 3 Gb/s per client, summing up to a total of 15 Gb/s in this simulation scenario.

Switch s1 is running the solution.py program, which is the actual implementation of the detection and mitigation solution.
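The aggregate load of this scenario can be checked with a short calculation. The sketch below multiplies the per-client rate by the number of clients listed above and relates the result to the approximately 32 Gb/s capacity reported for the test machine; the variable names are hypothetical.

```python
# Client hosts and per-client rate as described for Figure 9.
clients = ["h2", "h4", "h6", "h8", "h10"]
per_client_gbps = 3.0          # from the "-b 3G" argument

# Approximate total Mininet bandwidth reported in the text.
link_capacity_gbps = 32.0

total_gbps = per_client_gbps * len(clients)
utilization = total_gbps / link_capacity_gbps

print(f"offered load: {total_gbps:.0f} Gb/s")
print(f"share of capacity: {utilization:.1%}")
```

The offered load of 15 Gb/s is a little under half of the reported capacity, which lies close to the "approximately 50%" used to define the medium traffic level.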

It is important to clarify certain details that were taken into consideration in the current testing environment. As can be seen in Figure 8 above, the ARP spoofing requests are delivered by the Ettercap tool with an approximate frequency of 1 per second. The loop interval and the threshold value are chosen in accordance with this frequency. The tools used in the testing scenarios were Ettercap and a custom script generating a custom number of ARP requests to a specific IP address. This custom script was helpful in testing deterministic attack detection scenarios, where the threshold values could be tested with precision, whereas Ettercap does not send a fixed number of packets in each period of time. Another advantage of the custom script is that it can be executed automatically from the command line, whereas Ettercap relies on a user interface to generate the attack, which consumes more time.
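The interplay between the loop interval and the threshold can be sketched as follows. The sample text imitates the general style of `ovs-ofctl dump-flows` output, but the exact match fields used by the actual extension are not given in the text, so the lines, the regular expression, and the function names are assumptions. The sketch also treats the packet counters as counts for one loop interval, whereas a real deployment would have to difference cumulative counters between iterations.

```python
import re

# Illustrative flow entries for one 3 s loop interval (assumed format).
SAMPLE_DUMP = """\
 cookie=0x0, duration=2.9s, table=0, n_packets=3, n_bytes=126, arp,dl_src=00:00:00:00:00:03 actions=NORMAL
 cookie=0x0, duration=2.9s, table=0, n_packets=1, n_bytes=42, arp,dl_src=00:00:00:00:00:01 actions=NORMAL
 cookie=0x0, duration=2.9s, table=0, n_packets=950, n_bytes=1406000, tcp,dl_src=00:00:00:00:00:02 actions=NORMAL
"""

# Capture the packet counter and source MAC of ARP flow entries only.
FLOW_RE = re.compile(r"n_packets=(\d+).*?\barp\b.*?dl_src=([0-9a-fA-F:]{17})")


def arp_counts(dump_text):
    """Sum ARP packet counts per source MAC address."""
    counts = {}
    for line in dump_text.splitlines():
        match = FLOW_RE.search(line)
        if match:
            mac = match.group(2).lower()
            counts[mac] = counts.get(mac, 0) + int(match.group(1))
    return counts


def suspects(counts, threshold):
    """Return the MAC addresses whose ARP count exceeds the threshold."""
    return sorted(mac for mac, n in counts.items() if n > threshold)


counts = arp_counts(SAMPLE_DUMP)
flagged = suspects(counts, threshold=2)
print(flagged)
```

With roughly one spoofed ARP request per second, a 3 s interval yields about three packets from the attacker, which exceeds a threshold of 2 packets (the values reported for the small-network scenario), while a host issuing a single legitimate ARP request stays below it.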

In the following subchapters, the results of several testing scenarios with different network sizes and congestion levels are presented. In these scenarios, the loop interval and the threshold value are chosen differently depending on the network size, testing tools, and congestion parameters. Additional test scenarios are also performed to measure the impact that an attack has on the network throughput in different traffic pattern use cases. Conclusions are also drawn from the results.

4.1. Small Network, Single Attacker

Figure 10 shows the network topology where three end devices are connected to a switch and one of them is the attacker performing the MitM attack between the other end devices.

Figure 11 shows the attack detection duration in ten iterations where different levels of traffic were generated inside the network. For low traffic, the average detection duration was 398.8 ms, for medium traffic, the average detection duration was 921.8 ms, and for high traffic, the average duration was 1975.6 ms. In this scenario, running the EtterCap tool, the loop interval value was 3 s, and the threshold was considered to be 2 packets.

4.2. Medium Network, Single Attacker

Figure 12 shows the topology where an attacker is performing the MitM attack in a network of ten end devices.

Figure 13 shows the attack detection duration in ten iterations where different levels of traffic were generated inside the network. For low traffic, the average detection duration was 402.7 ms, for medium traffic, the average detection duration was 1086.8 ms, and for high traffic, the average duration was 3239.5 ms. In this scenario, again running Ettercap, the high-traffic detection duration exceeds 3 s; therefore, the loop interval value was raised to 4 s, and the threshold value was raised to 3 packets.

4.3. Large Network, Single Attacker

Figure 14 shows the network topology where an attacker is performing the MitM attack in a network containing twenty end devices.

Figure 15 shows the attack detection duration in ten iterations where different levels of traffic were generated inside the network. For low traffic, the average detection duration was 415.6 ms, for medium traffic, the average detection duration was 1503.2 ms, and for high traffic, the average duration was 5615 ms. Increasing the network size and congestion level has a high computational impact, with the high-traffic detection duration exceeding 5 s; therefore, the loop interval value was raised to 6 s and the threshold value was raised to 5 packets in the Ettercap test.

4.4. Overhead of Extracting OVS Flow Rules

The current subchapter presents the overhead of extracting the OVS flow rules to be processed in a different process on the operating system.

Figure 16 shows the duration of the rules being exported in each simulation scenario, considering the previously presented number of devices in the different traffic volume scenarios. As it can be seen, the duration increases exponentially as the number of devices and traffic volumes increase which is useful information for network design and performance decisions.

Figure 17 shows the overhead duration compared to the total simulation time for scenarios containing the small size network containing three devices. In the case of low traffic volume, the overhead represents 6.4% of the total simulation time. In the case of medium traffic volume, the overhead represents 7.4% of the total simulation time. In the case of high traffic volume, the overhead represents 10.5% of the total simulation time.

Figure 18 shows the overhead duration compared to the total simulation time for scenarios containing the medium-size network containing ten devices. In the case of low traffic volume, the overhead represents 7.3% of the total simulation time. In the case of medium traffic volume, the overhead represents 9.6% of the total simulation time. In the case of high traffic volume, the overhead represents 14% of the total simulation time.

Figure 19 shows the overhead duration compared to the total simulation time for scenarios containing the large size network containing twenty devices. In case of low traffic volume, the overhead represents 10% of the total simulation time. In the case of medium traffic volume, the overhead represents 11.5% of the total simulation time. In the case of high traffic volume, the overhead represents 15% of the total simulation time.

4.5. Impact over Network Throughput

This subchapter presents the impact that the solution has on the network throughput of data transmission between two hosts. More precisely, these testing scenarios measure the transmission speed between two end devices while the MitM attack is taking place and when it is not taking place. The testing scenarios were made using different network traffic patterns between the two end devices. Identical traffic patterns were generated in MitM and non-MitM scenarios to compare the end-to-end effect of the solution.

Two testing setups were created using the ping and iperf tools provided by the running operating system:

4.5.1. Transfer of Fixed Size Packets between the Two Network Devices

This subchapter presents the results of acknowledged traffic being transferred between two end devices while the MitM attack is taking place and when it is not taking place. The measurements include the roundtrip time that is necessary to confirm that the payload transfer took place.

Figure 20 shows the duration of fixed packet sizes being delivered between the two end devices while the MitM attack is not active. As it can be seen, the larger the packet payload is, the longer the duration is for the packet to be transferred and acknowledged.

Figure 21 shows the duration of fixed packet sizes being delivered between the two end devices while the MitM attack is taking place. As it can be seen, the larger the packet payload is, the longer the duration is for the packet to be transferred and acknowledged.

Comparing the results obtained in Figure 20 and Figure 21, the payload transfer duration is significantly higher when the MitM attack is taking place. The fact that data transmission takes place in both directions (data transfer one way and acknowledgment packet the other way) increases the total time for the sending device to receive the confirmation that the data has been delivered. Computing the averages while the MitM attack is not active, the 8 KB packets are transferred on average in 0.05 milliseconds, the 32 KB packets are transferred on average in 0.12 milliseconds, and the 64 KB packets are transferred on average in 0.21 milliseconds. When the MitM attack is active, the 8 KB packets are transferred on average in 11.35 milliseconds, the 32 KB packets are transferred on average in 13.39 milliseconds, and the 64 KB packets are transferred on average in 16.61 milliseconds. Therefore, the attack introduces a very significant increase in transfer duration.
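The size of this increase can be quantified directly from the averages quoted above. The following sketch computes, for each payload size, the slowdown factor and the absolute delay added while the attack is active; only the six reported averages are used and the variable names are hypothetical.

```python
# Average transfer times in milliseconds: (without MitM, with MitM).
rtt_ms = {
    "8 KB": (0.05, 11.35),
    "32 KB": (0.12, 13.39),
    "64 KB": (0.21, 16.61),
}

# Ratio of attack to normal time, and the extra delay in milliseconds.
slowdown = {size: attack / normal for size, (normal, attack) in rtt_ms.items()}
added_ms = {size: attack - normal for size, (normal, attack) in rtt_ms.items()}

for size in rtt_ms:
    print(f"{size}: x{slowdown[size]:.0f} slower, +{added_ms[size]:.2f} ms")
```

The added delay stays in the range of roughly 11 to 16 ms for all three payload sizes, so the relative slowdown is largest for the smallest packets.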

4.5.2. Transfer of Continuous Fixed Bandwidth Traffic between the Two Network Devices

The current subchapter shows a test scenario with UDP traffic between two end devices. The UDP traffic is not acknowledged; therefore, there is less throughput penalty, and the sending device is not aware of any delays that occur due to packet redirection through the MitM. Simulations were performed with constant traffic generated for 5, 10, and 20 s, with and without the MitM attack taking place. The test scenario was performed twice, once in a network configured with a bandwidth of 1 Mbps per link and once with a bandwidth of 100 Mbps per link. Both simulation scenarios show a similar behavior, where the throughput penalty introduced by the MitM attack remains approximately constant regardless of the bandwidth allocation per link. The reason for this is that the traffic is not acknowledged and the only factor introducing the penalty is the retransmission of the packets, which is performed by the attacker. This test was made using iperf, creating an iperf server on one device and an iperf client on the second device to generate traffic. At the end of the iperf transfer session, statistical information is presented in the command output, and that information constitutes the results presented in this subchapter. The UDP datagrams generated with iperf had a length of 1480 bytes.

Figure 22 shows the throughput of UDP traffic between the two end devices on a fixed bandwidth of 1 Mbps. The throughput decreased in every transfer session when the MitM was active. For a transfer session of 5 s, the throughput decreased by 13%; for a transfer session of 10 s, the throughput decreased by 8%; for a transfer session of 20 s, the throughput decreased by 7%.

Figure 23 shows the throughput of UDP traffic between the two end devices on a fixed bandwidth of 100 Mbps. As in the previously presented results, the throughput decreased in every transfer session when the MitM was active. For a transfer session of 5 s, the throughput decreased by 14%; for a transfer session of 10 s, the throughput decreased by 8%; for a transfer session of 20 s, the throughput decreased by 5%.

4.6. General Findings after Testing

One important thing to clarify first is that the detection and mitigation time is, in fact, the time it takes the solution to execute the entire loop. Consequently, the detection and mitigation duration cannot be shorter than the solution execution; the minimum time to detect and mitigate the attack is given by the solution execution time. As the experiments show, the duration varies depending on network size and congestion level. For low traffic scenarios, the average detection and mitigation duration is approximately identical across network sizes. However, as the number of network devices and the traffic increase, the computation time increases exponentially, as shown in Figure 24.

This behavior is caused by the fact that low traffic generates a lower number of flow entries on the switch, meaning that the next steps in the pipeline receive less processing input and do not add any significant processing time; as more traffic is generated, the memory and computation needs increase. For medium traffic scenarios, an increase in detection and mitigation duration is more visible as the network topology gains complexity. Comparing the network with ten devices to the network with three devices, the processing time increases by 17.8%. Comparing the network with twenty devices to the network with ten devices, the processing time increases by 38.3%. Therefore, an important finding is that as more devices are in the network, the processing time grows in an exponential manner. For high traffic scenarios, the duration increase is again visible, as in the previous case of medium traffic. Comparing the network with ten devices to the network with three devices, the processing time increases by 63.9%. Comparing the network with twenty devices to the network with ten devices, the processing time increases by 73.3%. Again, a non-linear increase is visible, showing that the larger the network becomes, the slower the solution becomes.

Another useful insight comes from comparing low traffic against high traffic in identical networks. More precisely, in the network with three devices, the high traffic detection duration is approximately five times greater than the low traffic duration. In the network with ten devices, the high traffic detection duration is approximately eight times greater than the low traffic duration. Finally, in the network with twenty devices, the high traffic detection duration is approximately 13.5 times greater than the low traffic duration. This again supports the finding of exponential growth in processing time, not only as the network scales up but also as the traffic scales up inside a given network.

Regarding the proposed distributed MitM attack, its detection and mitigation require significantly more computation resources. The presented details show the case where the ARP spoofing is split between only two devices; however, this can scale to multiple coordinated attackers. Because the detection must inspect all the available network devices in tuples of K devices, the solution complexity increases to O(n^K), where n is the number of devices in the network and K is the number of coordinated attackers. Several optimizations could be performed, such as marking some devices as trustworthy so that they are not inspected, thus reducing the complexity, but this falls outside the scope of the current research.
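The growth described above can be illustrated with a minimal Python sketch that enumerates the device groups a detector would have to inspect. It assumes the groups are unordered, so their number is C(n, K), which is bounded by n^K for a fixed K; the host names, the `trusted` parameter, and the function names are hypothetical and only illustrate the idea, not the implemented decision-maker.

```python
from itertools import combinations
from math import comb


def candidate_groups(devices, k, trusted=frozenset()):
    """Return an iterator over unordered groups of k untrusted devices."""
    inspectable = [d for d in devices if d not in trusted]
    return combinations(inspectable, k)


def group_count(n, k):
    """Closed-form number of groups, C(n, k), which is O(n^k) for fixed k."""
    return comb(n, k)


# Twenty hosts with hypothetical names, matching the largest tested network size.
devices = [f"h{i}" for i in range(1, 21)]

for k in (1, 2, 3):
    total = sum(1 for _ in candidate_groups(devices, k))
    print(f"K={k}: {total} groups to inspect")

# Marking five devices as trustworthy shrinks the search space for K=2.
trusted = {"h1", "h2", "h3", "h4", "h5"}
reduced = sum(1 for _ in candidate_groups(devices, 2, trusted))
print(f"K=2 with 5 trusted devices: {reduced} groups to inspect")
```

With twenty devices, the number of groups rises from 20 for a single attacker to 190 for two and 1140 for three coordinated attackers, while excluding five trusted devices reduces the two-attacker case to 105 groups.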

5. Discussions

The main limitation of the current work is that the environment was simulated rather than deployed on an actual SDN. However, although the network was simulated with Mininet, the testing scenarios used a real software tool (Ettercap) to perform the attack, which validates the approach. The attack was first studied and its behavior reverse engineered with Wireshark; only after it was understood was Mininet employed to address scalability. This was the only feasible option, since performing the same test scenarios in a real network would have been more time-consuming and prone to setup and configuration errors. Another limitation of the current approach is that the chosen implementation technologies (such as the Bash parser and Python) do not offer the highest performance. Nevertheless, they are sufficient for generating a proof of concept, demonstrating the proposed architecture, and generating results for analysis. Furthermore, the current solution is designed to allow flexibility and extensibility.

As further development steps, several experiments can be derived by replacing the plugin components and studying which combination performs best. The performance of the system can be improved by monitoring only certain devices based on their addresses, thus reducing the computation time because fewer components are inspected. Another development idea, concerning the physical layer of networking, is to perform tests in heterogeneous SDNs where WiFi devices connect to the switch through wireless access points. The proposed solution already supports this setup because WiFi networks are processed identically to cabled Ethernet networks (they have the same Layer 2 header), but a more thorough study could be made regarding the latency of physical layer signal conversions (from radio waves to electrical signals), and an access point would allow more devices to connect in a given location. This study would provide more insight into the deployment capabilities of this solution in a real hardware environment. A further idea is to extend this concept to a distributed system: a collaborative environment can be created in which different switches share the MAC addresses of known attackers so that an attacker can be found more easily in various network locations. Such a collaborative environment can integrate artificial intelligence techniques, such as federated learning, to detect attacks based on more complex traffic patterns.
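The address-based monitoring idea mentioned above could be sketched in Python as a filter placed in front of the analysis stage. This is only an illustration under stated assumptions: the record layout (a dictionary with a `src_mac` key), the function names, and the sample addresses are hypothetical and do not reflect the data format used by the presented solution.

```python
def normalize_mac(mac):
    """Lower-case a MAC address and use ':' as the separator."""
    return mac.strip().lower().replace("-", ":")


def select_monitored(entries, monitored_macs):
    """Keep only the entries whose source MAC is on the monitoring list."""
    watch = {normalize_mac(m) for m in monitored_macs}
    return [e for e in entries if normalize_mac(e["src_mac"]) in watch]


# Hypothetical extracted records; a real extractor would produce these.
entries = [
    {"src_mac": "00:00:00:00:00:01", "src_ip": "10.0.0.1"},
    {"src_mac": "00-00-00-00-00-02", "src_ip": "10.0.0.2"},
    {"src_mac": "00:00:00:00:00:03", "src_ip": "10.0.0.3"},
]
monitored = ["00:00:00:00:00:02", "00:00:00:00:00:03"]

to_inspect = select_monitored(entries, monitored)
print(f"{len(to_inspect)} of {len(entries)} entries passed to the analysis stage")
```

The trade-off of such a filter is that devices outside the monitoring list are never inspected, so the list itself becomes a security-relevant configuration item.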

Further development of the current research can extend beyond local software complexity and attempts to increase the performance of a single network device. Future research can therefore target the layers of the SDN architecture by using network function virtualization through network hypervisors. The study in [36] presents CoVisor, a network hypervisor that allows multiple controllers to co-exist on the same machine and share their best features for optimizing network functionality. Libera is also a network hypervisor [37]; it can create a dedicated network infrastructure for handling specific network traffic. Hypervisors such as CoVisor and Libera could be used together to create a virtualized ecosystem that allows different policies and features to be applied in different network infrastructures. A more concrete security use case related to the research presented in this article would be the deployment of different attack mitigation strategies in different network infrastructures. Testing and performance measurement can then take place simultaneously in the separately created network infrastructures, which leads to a faster way of deciding which method handles a specific attack best. However, one downside of hypervisors in the security context is that functionality is moved into the middle layers of the network, whereas attacks are preferably identified as close as possible to the attack source. So, for critical use cases, it is better to operate at the network edge and not in the upper SDN layers, after information has already passed through many intermediate devices. In such a scenario, the use of a network hypervisor is debatable, and proper benchmarking and testing must be performed before deploying a network infrastructure solution.

An additional concept that can be used in conjunction with virtualized and programmable ecosystems is that of programmable Application-Specific Integrated Circuits (ASICs), where user-level functions are moved to the circuit level. So, in theory, use cases that are currently implemented in the upper operating system layers can be moved closer to the circuit level. Combined with the benefits offered by a network hypervisor, dynamic programmability can be configured at different network locations. However, hardware programmability raises the question of whether customizability and easy extensibility can still characterize a device that belongs to such a complicated ecosystem. Efforts will have to continue in the direction of hiding physical layer complexity, but for the moment, software configuration is more tangible.

6. Conclusions

SDNs have the benefit of being programmable, and they allow the development of many customizations for specific network use cases. In this regard, the current research focused on creating an extendable architecture that can detect and mitigate ARP spoofing-based attacks in Ethernet networks simulated in Mininet. The solution is built on the Linux pipeline architecture and a plugin system, in which a mandatory pipeline of operations contains different modules for data acquisition, relevant data extraction, data analysis, and decision making. Each of the components is replaceable using a configuration file, and, depending on the network use cases and desired severity levels, different actions can be taken upon attack detection. The fact that the components are replaceable makes the system easy to extend and configure.
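As an illustration of how a configuration file can select replaceable stages, the following Python sketch wires four hypothetical plugins into a pipeline. It is not the implementation described in this article: the plugin names, the JSON configuration format, the sample data, and the heuristic (treating a MAC address that answers for several IP addresses as suspicious) are all assumptions made for the example.

```python
import json


def acquire_static(_):
    """Stand-in for data acquisition: (IP, MAC) pairs seen in the network."""
    return [("10.0.0.1", "00:00:00:00:00:01"),
            ("10.0.0.2", "00:00:00:00:00:02"),
            ("10.0.0.3", "00:00:00:00:00:02")]


def extract_mac_to_ips(pairs):
    """Relevant data extraction: group IP addresses by claimed MAC address."""
    table = {}
    for ip, mac in pairs:
        table.setdefault(mac, set()).add(ip)
    return table


def analyze_duplicates(table):
    """Data analysis (assumed heuristic): MACs that answer for several IPs."""
    return sorted(mac for mac, ips in table.items() if len(ips) > 1)


def decide_log(suspects):
    """Low-severity decision: only report the suspects."""
    return [f"ALERT {mac}" for mac in suspects]


def decide_block(suspects):
    """High-severity decision: request blocking of the suspects."""
    return [f"BLOCK {mac}" for mac in suspects]


# Registry mapping configuration names to plugin functions.
PLUGINS = {
    "acquire_static": acquire_static,
    "extract_mac_to_ips": extract_mac_to_ips,
    "analyze_duplicates": analyze_duplicates,
    "decide_log": decide_log,
    "decide_block": decide_block,
}


def build_pipeline(config_text):
    """Resolve the stage names listed in a JSON configuration."""
    config = json.loads(config_text)
    return [PLUGINS[name] for name in config["pipeline"]]


def run_pipeline(stages):
    """Feed each stage the output of the previous one."""
    data = None
    for stage in stages:
        data = stage(data)
    return data


CONFIG = """{"pipeline": ["acquire_static", "extract_mac_to_ips",
                          "analyze_duplicates", "decide_block"]}"""
print(run_pipeline(build_pipeline(CONFIG)))
```

Replacing `decide_block` with `decide_log` in the configuration changes the action taken upon detection without touching the other stages, which is the property the plugin system is meant to provide.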

The testing environment was constructed using a toolchain consisting of Kali Linux, Mininet, Ettercap, and various helper scripts written in Bash and Python that generate ARP requests and traffic inside the networks. Several simulations were performed to detect and mitigate the ARP spoofing attack with different network sizes and different congestion levels for each network. The main result shown in each simulation is the exponential increase in the time that the solution needs to detect and mitigate the attack as the network size and traffic increase. The reason is that as traffic increases, so does the number of flow entries on the OpenVSwitch switch component, thereby causing the data extractor to perform more operations. A useful consequence of this finding is that system administrators can base network design decisions on it, such as limiting the number of devices connected per switch to satisfy the required network functional constraints. In addition, a network throughput analysis was made after simulating different data transfers with acknowledged and unacknowledged traffic. The conclusion was that the network throughput always decreases while the MitM attack is active, but acknowledged traffic suffers a significantly higher throughput drop due to the round-trip time implied by the acknowledgment packets. Note that twenty end devices was chosen as the largest network size because many industrial network switches have twenty-four ports. Some of the ports are used for linking to other switches, thus allowing approximately twenty end devices to connect to a single switch. In addition, with the introduction of the distributed MitM attack, an analysis was also made concerning the time needed by the decision-maker component to detect the attack in progress. This shows that the decision-maker component can also be slowed down by heavier processing needs. One important thing to note here is that although the work took place in a simulated environment using Mininet, the attack behavior was real because Mininet traffic is not simulated; it is real network traffic generated on a single machine.