1. Introduction

Like most everyday machines, vehicles were once solely the domain of mechanical engineering. However, rapid advances in the Internet of Things (IoT) and embedded systems have turned many of them into intelligent, connected devices. These advances have transformed the traditional automobile from a simple means of transport into a fully functional intelligent machine that also makes travel easier. Progress in automation and the opportunities offered by cutting-edge technology form the basis of intelligent vehicles, which are in high demand as we focus on safety and on making daily life more convenient. Such vehicles can sense their environment, connect to the internet, obey traffic rules, navigate by themselves, make quick decisions, protect pedestrians and passengers, and park, among other functions. Vehicles with these capabilities are called autonomous vehicles, and they are currently regarded as the highest level of intelligent-vehicle development. The main motivations behind the research and development of autonomous vehicles are the need for greater driving safety; a growing population, which increases the number of vehicles on the road; expanding infrastructure; the comfort of delegating tasks such as driving to machines; and the demand for better use of resources and time. As the population grows, it places heavy stress on our roads, infrastructure, open spaces, fuel stations, and other resources.

In the past few decades, electric batteries have been considered a promising alternative that could relieve the demand on fuel stations and help keep carbon emissions in check. Lithium-sulfur batteries (LSBs) are a promising next-generation energy storage system, offering high energy density and high capacity at low cost. One study examined the effect of catalysts on LSBs and offers insight into their design and future prospects [1]. Metal-organic frameworks (MOFs) are compounds in which metal ions or clusters are linked together by organic molecules. Their catalytic properties have attracted interest because of their size, shape, and crystallinity. A recent study examines the use of MOFs as sulfur hosts in the cathodes of Li-S batteries [2]. Another study reports that different crystal planes also affect adsorption ability and performance [3], which indicates that particle shape and size should also be considered when choosing a sulfur host. Durability is a significant concern for MOFs, as these materials rarely retain their chemical and physical properties after prolonged exposure to alkaline solutions. The study in [4] presents a stable MOF design that retains its original structure for 15 days. Such a material has good prospects in flexible, lightweight, and portable electric batteries.

In the past few decades, governments across the globe have also taken strict measures to improve road safety, such as introducing surveillance technologies like CCTV cameras to catch lawbreakers and road sensors to enforce speed limits. In the same spirit, unconventional technologies such as autonomous and connected cars are being researched to reduce life-threatening situations caused by human error, including driving under the influence, distraction, and inability to drive. Autonomous cars are the result of remarkable research progress in IoT and embedded systems, sensors and ad hoc networks, data acquisition and analysis, wireless communication, and artificial intelligence. A list of key acronyms used throughout the paper is given in Table 1.

1.1. Motivation

According to the World Health Organization (WHO), about 1.3 million people die in road accidents every year, and road traffic injuries are the leading cause of death among children and young people aged 5–29. The leading risk factors are speeding, driving under the influence of alcohol, distracted driving, unsafe vehicles, and unsafe infrastructure [5]. AVs can perform driving tasks such as sensing the surrounding environment, planning the shortest and safest path, controlling speed, navigating, and parking without human input, thereby reducing accidents caused by human error. This has attracted the interest of many researchers and manufacturers worldwide. Even though AVs are not yet widespread, their potential social and economic benefits are already foreseeable. They could play a vital role in reducing road accidents, fuel consumption, and congestion. In addition to saving time and space, they can improve the mobility of elderly and disabled people [6]. Low-income households and people with disabilities that affect movement and motor skills can benefit from AVs through lower transportation costs and better accessibility, which could significantly improve many people's productivity and quality of life. These potential benefits are a major motivation for this study. We wish to examine the ongoing developments in more depth and to assess whether autonomous machines can genuinely replace human-operated ones in many sectors.

1.2. Contribution

This study attempts to provide an organized and comprehensive overview of advanced software practices in automated driving, filling a gap in the existing literature. Review papers covering specific functionalities and challenges of AVs are available. However, to the best of our knowledge, no single study covers, together, a review of current research on the topic, emerging and available technologies, present challenges, and individual functions such as perception, planning, vehicle control, and detection. In addition, we discuss people's psychology regarding AVs and emerging trends. Finally, we outline open research challenges and future research directions.

1.3. Comparison

This paper aims to provide an overall picture of recent advances in autonomous driving and of the problems this technology can solve. We therefore present an extensive survey of topics such as Vehicle Cybersecurity, Psychology, Pedestrian Detection, Motion Control, Path Planning, and Environment Perception. Our literature survey found that several reviews have already covered AVs and their various aspects; the comparison of these related works with our paper is summarized in Table 2.

1.4. Organization

Section 2 presents the research methodology used to prepare this review and to classify the papers used. Section 3 presents the background of autonomous driving and the progress in its technologies. Next, in Section 4, we review environment perception, pedestrian detection, path planning, vehicle cybersecurity, and motion control. Section 5 summarizes people's psychology towards autonomous cars. Section 6 provides an overview of the paper, and Section 7 presents the conclusion and future scope of self-driving cars and related technologies.

2. Methods and Materials

In this section, we present the research questions this paper addresses. We also explain the methodology we used to build the database of papers for the literature survey, and we describe our screening and mapping processes.

2.1. Research Questions

This study aims to provide an overview of autonomous driving technologies. The research questions we defined for this purpose are listed in Table 3.

2.2. Conducting the Research

We conducted a systematic keyword search on the topic fields of literature databases such as IEEE, ScienceDirect, EBSCO, and Emerald. Papers were retrieved if terms such as “Autonomous driving,” “Autonomous car,” “Autonomous vehicle,” “Self driving vehicle,” or “Self driving car” appeared in their title, abstract, or keywords. We included only papers published in the academic journals indexed by these databases. Consequently, we did not attempt to find all literature on autonomous driving, which would have produced an overwhelming amount of information given the extensive applications, testing, and research in other fields and domains. Instead, we aimed to obtain a comprehensive overview and classification that would reveal scientific gaps in the body of literature. We considered only publications related to relevant topics such as roads, traffic, crossroads, commuting, production, algorithms, detection, path planning, and cybersecurity. We also included a few publications whose topic concerned the automotive industry in general or whose application would be relevant to AVs. For the psychology aspect of the study, we considered papers whose core topic was not necessarily vehicle automation but which acknowledged AVs and asked how public perception and daily tasks would change with the introduction of autonomous technology. Our initial search returned 80 papers. We then removed duplicates by comparing titles, finding three papers that appeared in more than one database. A final filtering step excluded publications that did not meet scientific standards or had not undergone peer review. This excluded three further publications, leaving a final set of 74 papers.

2.3. Screening of Papers

After searching the scientific databases, we compiled our paper database. Not every paper in it was relevant to our research questions, so we screened the titles and journals of the papers to determine their actual relevance and excluded studies that were irrelevant to the research topic. Because a paper's title did not always reveal its relevance, such papers were passed to a second screening phase, in which we read their abstracts. Furthermore, we screened each paper against specific criteria and excluded the following types of papers:

- (1) Papers that were only posters.
- (2) Papers that did not have full text available.
- (3) Papers where English was not the primary language.
- (4) Papers where AVs were taken in a different context.
- (5) Papers that were entirely out of scope for our research questions.

A paper was included in our final database for the literature review only if none of these five exclusion criteria applied to it and its abstract was judged relevant.
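The inclusion rule above can be written as a small predicate. This is a minimal sketch assuming a hypothetical `Paper` record whose boolean fields stand in for the manual judgments described in this section; the survey's screening was done by hand, and no such code or data format was part of it.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    # Hypothetical fields; each one records a manual screening judgment.
    title: str
    is_poster: bool
    has_full_text: bool
    primary_language: str
    av_context_matches: bool  # AVs used in the sense this survey studies
    in_scope: bool            # relevant to at least one research question
    abstract_relevant: bool   # outcome of the abstract-reading phase


def exclusion_reasons(p: Paper) -> list[str]:
    """Return the exclusion criteria (1)-(5) that a paper triggers."""
    reasons = []
    if p.is_poster:
        reasons.append("(1) poster only")
    if not p.has_full_text:
        reasons.append("(2) no full text")
    if p.primary_language != "English":
        reasons.append("(3) not primarily in English")
    if not p.av_context_matches:
        reasons.append("(4) AVs in a different context")
    if not p.in_scope:
        reasons.append("(5) out of scope")
    return reasons


def include(p: Paper) -> bool:
    # Included only if no exclusion criterion applies AND the abstract is relevant.
    return not exclusion_reasons(p) and p.abstract_relevant
```

Keeping the reasons as a list, rather than returning a single boolean, makes it easy to report why each paper was dropped.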

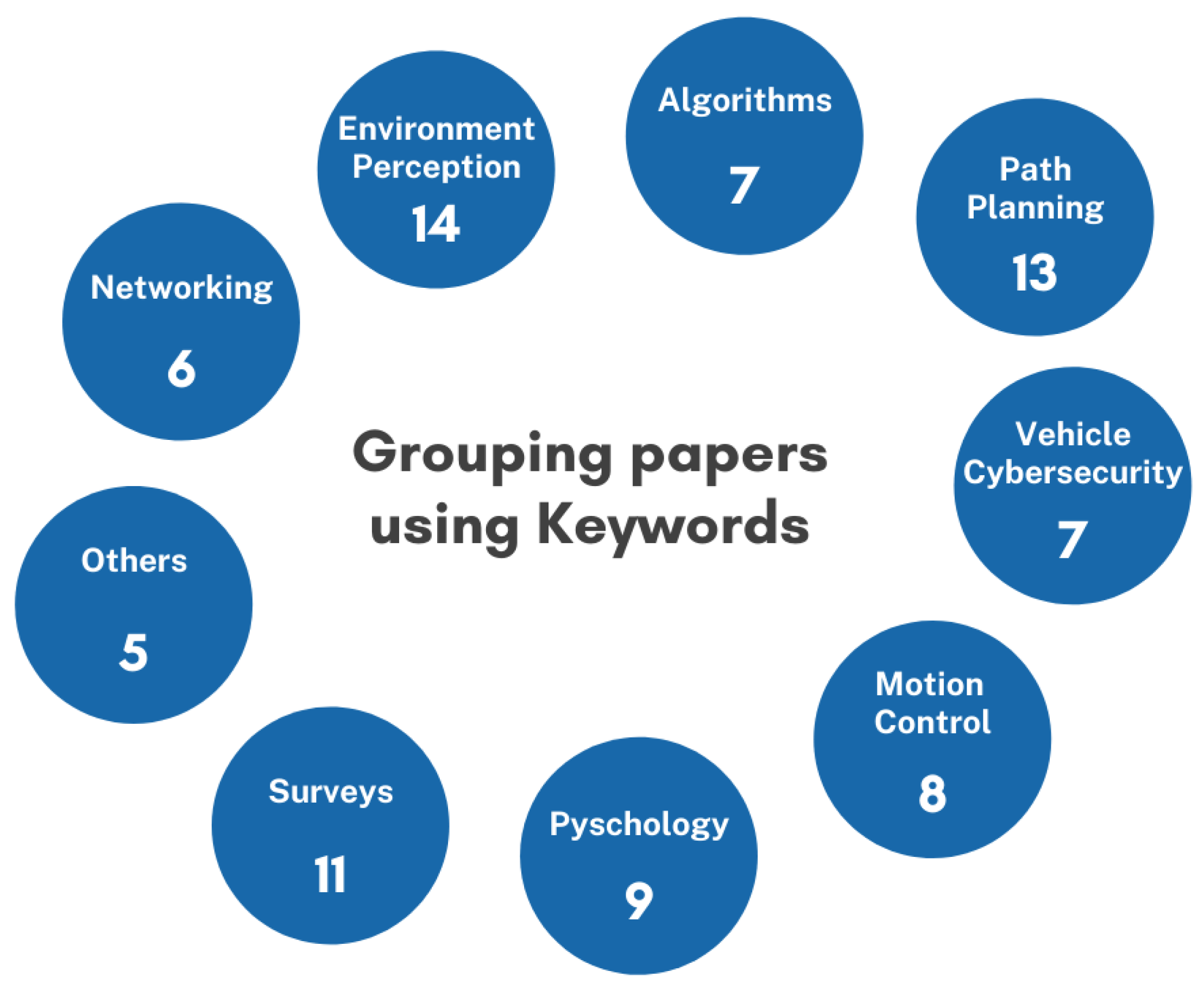

2.4. Grouping Using Keywords

After screening the papers, the next step was to examine the keywords of each paper and group the papers by domain and specialization, which gave us a better understanding of them. We classified them into the following categories:

- (1) Review/survey papers on AVs.
- (2) Papers focusing on AI/ML algorithms in AVs.
- (3) Papers focusing on environment perception and pedestrian detection.
- (4) Papers focusing on motion control.
- (5) Papers focusing on path planning.
- (6) Papers focusing on vehicle cybersecurity.
- (7) Papers focusing on networking and communication.
- (8) Papers focusing on psychology towards AVs.
- (9) General papers that do not have a specific focus.

Figure 1 is a visual representation of classification according to domains.

2.5. Mapping the Process

The mapping process was based on two parameters:

(1) Year of Publication: we analyzed the number of papers in our final database published each year. Three papers were from 2014, the earliest year in our collection; 2015 and 2016 had three papers each, and 2017 had four. Most of the database consists of papers from 2018, 2019, 2020, and 2021, with thirteen, fifteen, nineteen, and fourteen papers, respectively. This analysis shows that research on AVs has been under way for a long time and has generally increased over the years, as Figure 2 demonstrates.

(2) Topic of Research: grouping the papers by keyword revealed the main areas in which research has spearheaded AV technologies. We chose five of the nine categories for an in-depth review of current research and development and split category (3) into two topics, giving six topics in total: Environment Perception, Pedestrian Detection, Path Planning, Vehicle Cybersecurity, Motion Control, and Psychology towards AVs. Environment Perception and Pedestrian Detection are treated separately because a large body of research focuses on pedestrians alone and because the algorithms required for pedestrian detection differ from those used for general object detection. The remaining survey and review papers were used as references while writing this paper, and a comparison between the five review papers is also provided in the Introduction.
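The paper counts reported in Sections 2.2 and 2.5 can be cross-checked with simple arithmetic. This sketch assumes that "2015 and 2016 had three papers" means three papers in each year, which is the only reading under which the yearly counts add up to the final 74.

```python
# Screening counts as reported in Section 2.2.
initial_hits = 80
duplicates_removed = 3         # papers found in more than one database
non_peer_reviewed_removed = 3  # failed the scientific-standard / peer-review filter
final_count = initial_hits - duplicates_removed - non_peer_reviewed_removed

# Yearly counts as reported in Section 2.5 (2015 and 2016 read as three each).
papers_per_year = {
    2014: 3, 2015: 3, 2016: 3, 2017: 4,
    2018: 13, 2019: 15, 2020: 19, 2021: 14,
}
yearly_total = sum(papers_per_year.values())
peak_year = max(papers_per_year, key=papers_per_year.get)

print(final_count)   # 74
print(yearly_total)  # 74
print(peak_year)     # 2020
```

The two totals agree, and the peak in 2020 followed by a smaller 2021 count is why the trend is described as a general increase rather than a strictly yearly one.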

3. Background of Autonomous Vehicles

In the past, vehicles were very simple; their main goal was transportation. As the world grew and technology advanced, a vehicle's purpose expanded beyond transportation to include comfort, safety, and convenience. This led to extensive research on improving vehicles by incorporating technological breakthroughs, and the idea of making vehicles autonomous was soon conceived. Autonomous driving is arguably the next significant disruptive innovation. Like any other technology, however, research and development for AVs began by laying a foundation and defining a few basic terms. These terms, listed in Section 3.1, later served as a starting point when branching out into different aspects of AVs. As research on AVs grew, researchers began experimenting with autonomy, and different technologies were proposed and added to vehicles to make them autonomous.

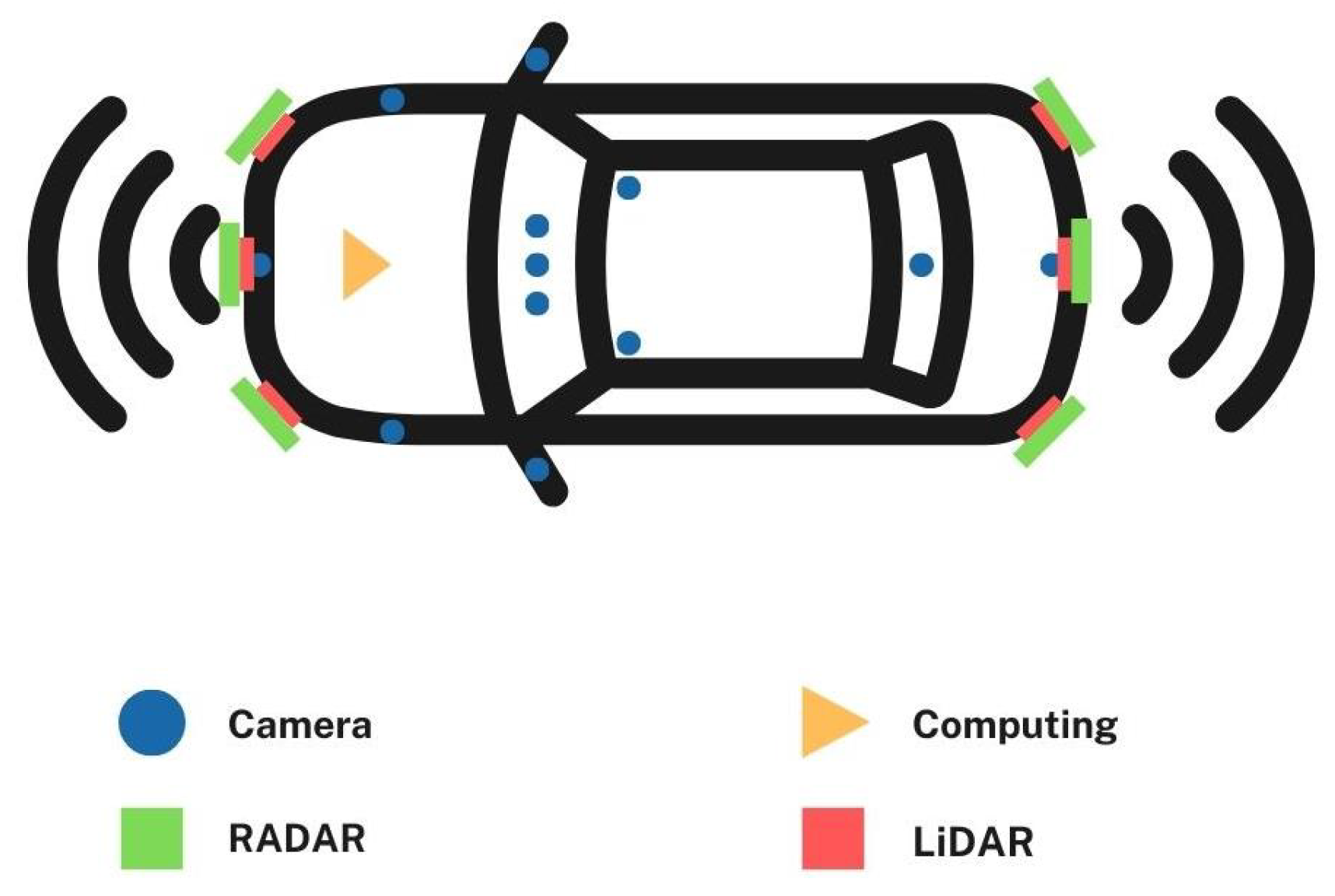

Section 3.2 discusses the different levels of autonomy and the technologies present at each level. Today, AVs are equipped with various sensors, such as cameras, RADAR, LiDAR, and ultrasonic sensors, to achieve safety and automation. These sensors provide the vehicle with information about objects, lane occupancy, traffic flow, and more. Cameras provide a view of the surroundings, whereas RADAR and LiDAR sensors are used for object detection and collision avoidance. Section 3.3 details some standard sensors present in modern vehicles. The data gathered from all these sensors serve as input to multiple algorithms that extract useful information for the vehicle to interpret and act on. The algorithms discussed in Section 3.4 combine AI, ML, and DL with image processing to help an AV perform autonomy-related tasks.

3.1. Common Terms Related to Autonomous Vehicles

DDT (dynamic driving task): All of the real-time operational and tactical functions required to operate a vehicle in on-road traffic, excluding strategic functions such as trip planning and the selection of destinations. Lateral vehicle control (steering left and right), longitudinal vehicle control (acceleration and deceleration), environment monitoring, and maneuver planning are all part of the DDT.

ADS (automated driving system): The hardware and software that are collectively capable of performing the entire DDT on a sustained basis, regardless of whether the DDT is restricted to a specific ODD. The term is used specifically to describe Level 3, 4, and 5 driving automation.

ODD (operational design domain): The specific operating conditions under which a driving automation system or feature is designed to function, determined by geographical, environmental, and time-of-day conditions, as well as by the presence or absence of certain traffic or road characteristics. For example, if a self-driving vehicle can drive both on the highway and in an urban environment, its ODD could be described by road category, geographical region, and weather conditions, which together characterize the capabilities and restrictions of its ADS.

OEDR (object and event detection and response): A subtask of the DDT that covers monitoring the environment, that is, detecting, recognizing, and classifying objects, and responding appropriately to events. OEDR works within an ODD and allows the vehicle's ADS to distinguish between vehicles, pedestrians, and other objects and to respond to any event that could influence driving or the vehicle's safety.

Active safety systems: Systems that monitor conditions both outside and inside the vehicle to identify potential and present dangers to passengers, pedestrians, other vehicles, and so on. They intervene automatically to reduce the probability of a collision by alerting the driver, adjusting vehicle systems, and controlling subsystems such as the throttle, brakes, and suspension [11].
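To make the ODD concept concrete, the sketch below checks whether current driving conditions lie inside a declared ODD, which is the kind of decision an ADS needs before, for example, notifying a Level 3 driver that the ODD's limits are about to be exceeded. The attributes chosen (road category, weather, daylight, speed) and all names are hypothetical illustrations; real ODD specifications are far richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    # Hypothetical, heavily simplified ODD description.
    road_categories: frozenset[str]
    weather: frozenset[str]
    daylight_only: bool
    max_speed_kph: float


@dataclass(frozen=True)
class Conditions:
    # Current operating conditions as perceived by the vehicle.
    road_category: str
    weather: str
    is_daylight: bool
    speed_kph: float


def odd_violations(odd: OperationalDesignDomain, c: Conditions) -> list[str]:
    """List every ODD limit the current conditions exceed (empty = inside the ODD)."""
    problems = []
    if c.road_category not in odd.road_categories:
        problems.append(f"road category '{c.road_category}' not supported")
    if c.weather not in odd.weather:
        problems.append(f"weather '{c.weather}' not supported")
    if odd.daylight_only and not c.is_daylight:
        problems.append("operation outside daylight")
    if c.speed_kph > odd.max_speed_kph:
        problems.append(f"speed {c.speed_kph} km/h exceeds {odd.max_speed_kph} km/h")
    return problems
```

For a highway-and-urban ODD limited to clear or rainy weather, driving on a highway in fog returns `["weather 'fog' not supported"]`; a non-empty result is the trigger for a takeover request or a minimal-risk maneuver, depending on the automation level.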

3.2. Levels of Autonomy

The Society of Automotive Engineers (SAE) defines six levels of driving automation [11].

Level Zero indicates no driving automation: the driver performs the entire DDT, possibly supported by active safety systems such as Electronic Stability Control, Anti-lock Braking Systems, and Automatic Emergency Braking, which intervene only momentarily.

At Level One, a driver assistance system performs either longitudinal or lateral control of the vehicle, but not both, and not the complete OEDR. The driver is the DDT fallback, so the driver must be present in the vehicle at all times and able to take control at any moment. Adaptive Cruise Control is a typical Level One feature.

At Level Two, the system performs both longitudinal and lateral control of the vehicle but, as in Level One, cannot perform the complete OEDR. The driver is again the DDT fallback and must always be present and able to take control at any instant. Adaptive Cruise Control combined with lane centering is a typical Level Two configuration.

At Level Three, the ADS performs the entire DDT within its ODD. The driver remains the DDT fallback and is notified if the ODD's limits are about to be exceeded or if a performance-relevant system fails.

Level Four does not require any human intervention. The ADS performs the entire DDT within restricted ODDs, such as places with proper maps and road structures. The driver can intervene if they want to, but the ADS itself performs the DDT fallback and achieves a minimal risk condition.

Level Five is the final level of automation. The automation is complete: the ADS performs the entire DDT in an unlimited ODD and also handles the DDT fallback, and a driver takes control of the vehicle only on request.

Figure 3 is a representation of all of the different Levels of Autonomy.
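The level descriptions above can be summarized as a small table of who does what. This is a minimal sketch of that summary, not an implementation of SAE's standard; the field names are hypothetical, Level One is shown with longitudinal control (as with Adaptive Cruise Control), and "fallback-ready user" is the SAE term for the Level 3 driver who must respond to takeover requests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SaeLevel:
    level: int
    sustained_lateral: bool       # system steers on a sustained basis
    sustained_longitudinal: bool  # system accelerates/brakes on a sustained basis
    complete_oedr: bool           # system performs the complete OEDR
    fallback: str                 # who performs the DDT fallback
    odd: str                      # "n/a", "limited", or "unlimited"


# Level 1 could equally be lateral-only (e.g. lane centering alone).
SAE_LEVELS = [
    SaeLevel(0, False, False, False, "driver", "n/a"),
    SaeLevel(1, False, True, False, "driver", "limited"),
    SaeLevel(2, True, True, False, "driver", "limited"),
    SaeLevel(3, True, True, True, "fallback-ready user", "limited"),
    SaeLevel(4, True, True, True, "system", "limited"),
    SaeLevel(5, True, True, True, "system", "unlimited"),
]


def driver_must_supervise(level: SaeLevel) -> bool:
    # Without the complete OEDR, the human must monitor the environment continuously.
    return not level.complete_oedr


def human_fallback_required(level: SaeLevel) -> bool:
    # A human must be ready to take over unless the system handles the fallback.
    return level.fallback != "system"
```

Querying the table reproduces the key boundaries: continuous supervision is needed at Levels 0 to 2, a human fallback at Levels 0 to 3, and only Level 5 has an unlimited ODD.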

3.3. Sensors Used in Autonomous Vehicles

Cameras: Autonomous vehicles use visible-light cameras to provide a 360-degree view of their surroundings. Cameras are well suited to object detection and recognition, and their data are passed to AI-based algorithms for further use. However, they perform poorly in dark conditions and generate large amounts of data to process, so infrared cameras are also used to improve performance in low-visibility conditions.

RADAR: A sensor that uses radio waves to measure quantities such as distance, velocity, and angle. An AV emits radio waves with a radar transmitter and receives the reflected waves with a radar receiver. Radar works well in most weather conditions and over long distances, but it may misidentify objects [12].

LiDAR: A sensor that uses light to measure distance by timing how long an emitted laser pulse takes to be reflected back to the receiver. The system emits laser beams that hit the environment and are reflected to a photodetector. The measured returns are combined into a point cloud, which forms a 3D image of the environment. Although LiDAR is a powerful and accurate sensor, it is expensive.

GNSS: Global navigation satellite systems use constellations of satellites to provide positioning, navigation, and timing information. A GNSS receiver estimates its position by trilateration: it derives its distance to at least four satellites, most of which are in medium Earth orbit, from the signal travel times and solves for its position and clock offset. These signals are weak, however, and can be disrupted or manipulated by interference.

Ultrasonic sensors: Inexpensive short-range sensors, commonly used as parking sensors. Their range is limited, but they are ideal for low-speed situations. They measure the distance to a target object by emitting ultrasonic waves and converting the reflected signals into electrical signals.

GPS: The U.S. GNSS; a GPS receiver provides users with spatial information for navigation, positioning, and timing. The service is free for civilian use and is a vital element of path planning in autonomous vehicles.
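The LiDAR time-of-flight principle reduces to range = c · Δt / 2, because the pulse travels to the reflector and back. The sketch below applies it and converts a handful of returns into Cartesian points, the raw material of a point cloud. The sensor frame (x forward, y left, z up) and the function names are assumptions for illustration; real sensors have their own conventions and calibration offsets.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_range(round_trip_s: float) -> float:
    """Distance to a reflector from a pulse's round-trip time (out and back)."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def scan_to_points(azimuths_deg, elevations_deg, round_trip_times_s):
    """Convert LiDAR returns (beam angles + timings) into (x, y, z) points.

    Assumed sensor frame: x forward, y left, z up, angles in degrees.
    """
    points = []
    for az, el, t in zip(azimuths_deg, elevations_deg, round_trip_times_s):
        r = tof_range(t)
        az_r, el_r = math.radians(az), math.radians(el)
        horizontal = r * math.cos(el_r)  # projection onto the ground plane
        points.append((horizontal * math.cos(az_r),
                       horizontal * math.sin(az_r),
                       r * math.sin(el_r)))
    return points
```

For example, a return arriving after roughly 66.7 ns corresponds to a reflector about 10 m away, which illustrates why LiDAR timing electronics must resolve sub-nanosecond intervals for centimetre-level accuracy.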

Figure 4 represents the positioning of different sensors on a vehicle.

3.4. Architectures and Algorithms

CNN: Convolutional neural networks are widely used for image processing because the convolution operation lets them extract distinctive features from images with high accuracy. A CNN takes a 2D input and uses multiple hidden layers to extract progressively higher-level features, identifying useful patterns based on the spatial organization of the input pixels. Because CNNs learn features directly from raw pixels, they require little hand-crafted pre-processing, which makes them easy to deploy. In AVs, they are used for path planning and pedestrian detection [12].

RCNN: Region-based CNNs are used for object detection. They are preferred over a conventional CNN because a standard CNN classifier produces a fixed-length output, whereas the number of objects in an image varies, so detecting objects with a plain CNN would require classifying a very large number of image regions. RCNN uses a selective search process to propose candidate regions, labels the object in each region, and draws a bounding box around it. Each bounding box is finally refined with a linear regression model to obtain more accurate coordinates. In AVs, RCNN is used for pedestrian, object, and traffic sign detection [12].

SLAM: Simultaneous localization and mapping builds a map of the environment, including the relative positions of static objects, while estimating the vehicle's position within it, based on measurements from sensors such as LiDAR, RADAR, or cameras. RADAR-SLAM can provide velocity information by acting as an odometer and can even perform localization based on map data. LiDAR-SLAM matches consecutive LiDAR scans, while visual SLAM uses a monocular or stereo camera to track features across consecutive images; both estimate the relative orientation and translation between frames. In AVs, SLAM is used for motion control, path planning, and sometimes even pedestrian detection [13].

K-Means: An unsupervised algorithm that groups unlabeled data into a predefined number of clusters. Each cluster is associated with a centroid, and the algorithm iteratively minimizes the total sum of squared distances between the data points and the centroids of their clusters [14].

YOLO: A CNN-based algorithm for real-time object detection, originally developed by Joseph Redmon and colleagues in the Darknet framework. It was followed by a succession of YOLO-based object detectors, including YOLOv2, YOLOv3, and YOLOv4. YOLO is a single-stage detector that performs object localization and classification in a single pass of the network. YOLO-based models are efficient and easy to deploy [15].
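The K-means description above corresponds to Lloyd's algorithm, which alternates an assignment step and a centroid-update step until the assignments stop changing. The sketch below is a minimal pure-Python version for illustration; it uses random initialization rather than the k-means++ seeding common in libraries, and the function names are our own.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm for k-means clustering.

    points: list of equal-length tuples of floats.
    Returns (centroids, labels), where labels[i] is the cluster of points[i].
    """
    rng = random.Random(seed)
    # Initialise centroids with k distinct data points chosen at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        if new_labels == labels:
            break  # assignments no longer change: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(coord) / len(members) for coord in zip(*members)]
    return centroids, labels


def within_cluster_sse(points, centroids, labels):
    """The objective k-means minimises: sum of squared point-to-centroid distances."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))
```

Each step can only lower (or keep) the within-cluster sum of squares, which is why the loop terminates; the result is a local optimum, so in practice the algorithm is often restarted from several initializations.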

4. Autonomous Driving: Key Technologies

AVs relieve humans of many tasks by performing intelligent operations such as obstacle avoidance, traffic sign detection, and computing the most efficient path [6]. To do so, however, these vehicles require state-of-the-art solutions for perception, control, and planning. This section explores these functions and the research progress in their underlying technologies.

4.1. Environment Perception

An autonomous vehicle must perceive its environment independently to gain the information it needs and make control decisions accordingly. Environment perception can be performed using visual navigation, laser navigation, or radar navigation. Laser and radar sensors are deployed to gather extensive information about the environment: laser sensors act as a bridge between the real world and the data world, radar sensors are used to measure distance, and visual sensors are used to detect traffic signs [16]. AVs need to classify all the different objects present in their surroundings. Object detection is broadly divided into the following three stages [17]:

Region selection;

Feature extraction;

Classification.

Real-world imaging conditions introduce several constraints and added complexities, such as partial, side, or angled views; multiple objects in one area; similar-looking objects at different distances, which appear to be of different sizes even though they are the same size; occluded objects; variations in illumination with the time of day; slippery roads; unclear road markings; hazy weather conditions such as rain, fog, and snow; and changing traffic lights. One of the most challenging tasks in environment perception for automated driving is simultaneously processing the large, noisy, unclassified, and unstructured point clouds obtained from 3D LiDAR [18]. DL addresses this by segmenting and classifying LiDAR point clouds and measuring distances in real time to detect objects in a dynamic environment. YOLOv3 is a widely used DL-based object detection technique. It divides an image into a grid of cells, each of which predicts bounding boxes; K-means clustering is applied to the bounding boxes of the training data to choose the prior (anchor) box sizes. The grid cell into which an object's center falls is responsible for detecting that object. Each bounding box prediction describes the coordinates of the box center, the height and width of the box, and a confidence score reflecting whether the box contains an object [19]. Images and scans are captured with RGB cameras, LiDAR, and RADAR. DL is applied to these data for environment perception and object detection, mainly for differentiating between individual objects, vehicle tracking, self-localization, pedestrian detection, predicting trajectories for unknown paths by generalization, and understanding traffic patterns.
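The grid-cell responsibility rule and the box description above can be illustrated with a simplified, YOLOv1-style training target. This sketch assumes an S × S grid over the image and encodes the center as an offset inside its cell with width and height relative to the whole image; YOLOv3 instead predicts offsets relative to anchor priors at several scales, so this is not its exact encoding. The `iou_wh` helper shows the 1 − IoU distance that YOLOv2/v3 use when clustering training-box sizes with K-means.

```python
def responsible_cell(cx, cy, image_w, image_h, grid_size):
    """(row, col) of the grid_size x grid_size cell that contains point (cx, cy)."""
    cell_w, cell_h = image_w / grid_size, image_h / grid_size
    col = min(int(cx // cell_w), grid_size - 1)  # clamp points on the far edge
    row = min(int(cy // cell_h), grid_size - 1)
    return row, col


def encode_box(x_min, y_min, x_max, y_max, image_w, image_h, grid_size):
    """Simplified YOLO-style training target for one ground-truth box (pixels)."""
    cx, cy = (x_min + x_max) / 2, (y_min + y_max) / 2
    row, col = responsible_cell(cx, cy, image_w, image_h, grid_size)
    cell_w, cell_h = image_w / grid_size, image_h / grid_size
    return {
        "cell": (row, col),
        "x": cx / cell_w - col,          # centre offset within the cell, in [0, 1)
        "y": cy / cell_h - row,
        "w": (x_max - x_min) / image_w,  # box size relative to the whole image
        "h": (y_max - y_min) / image_h,
        "confidence": 1.0,               # target: this cell's box contains an object
    }


def iou_wh(box_a, box_b):
    """IoU of two (width, height) boxes aligned at the same centre.

    K-means on box sizes with distance 1 - iou_wh(...) yields anchor priors.
    """
    inter = min(box_a[0], box_b[0]) * min(box_a[1], box_b[1])
    union = box_a[0] * box_a[1] + box_b[0] * box_b[1] - inter
    return inter / union
```

For a 416 × 416 image and a 13 × 13 grid, a pedestrian box spanning pixels (100, 100) to (164, 196) has its center at (132, 148), so cell (4, 4) is responsible for it, with in-cell offsets of 0.125 and 0.625.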

4.2. Pedestrian Detection

Pedestrian recognition is the use of sensors to detect pedestrians in or around the path of an AV. It comprises four components: segmentation, feature extraction, segment categorization, and track categorization [20]. Existing pedestrian detection methods are limited by adverse weather, which reduces visibility and blurs outlines in camera images [21]. A predominant challenge for these methods is detecting pedestrians in misty weather, where low visibility, color cast, and unclear outlines make it difficult to distinguish pedestrians from the background. Chen et al. propose a pedestrian detection method based on 3D LiDAR data. The pipeline of their algorithm is as follows:

Transforming the 3D LiDAR data into a 2D image, so that detection accuracy is not affected by variations in illumination;

Building a new dataset for accurately detecting pedestrians outside the camera's field of view, which improves safety;

Clustering and filtering the data to make pedestrians more prominent and to separate objects from the background;

Proposing a CNN based on PVANET to increase pedestrian detection accuracy.

This method has been shown to be faster than the original PVANET and RCNN models [21]. A cheaper approach, PPLP Net, based on 2D LiDAR and monocular images, is proposed in [22]. It consists of three sub-networks: OrientNet, RPN, and PredictorNet. OrientNet builds on powerful neural-network-based 2D pedestrian detection algorithms. The RPN converts 2D LiDAR point clouds into occupancy grid maps to estimate non-oriented pedestrian bounding boxes. The outputs of these two sub-networks are passed to PredictorNet for a final regression, and the system outputs 3D bounding boxes and orientations for all pedestrians present. Zhang et al. show that combining information from tracking with segment categorization can resolve the occlusion problem. Their method divides the point cloud into mutually independent segments, and three kinds of features are designed to obtain complementary cues. A univariate feature selection and feature-linking algorithm is then used to synthesize a set of 18 features. Track categorization over subsequent frames is then performed based on the segment categorization of each individual frame, using a probabilistic data association filter implemented with a particle filter. For pedestrians occluded from the camera's view, track categorization can thus improve recognition [23].

Table 4 presents the summary of different pedestrian detection algorithms.
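The conversion of a 2D LiDAR scan into an occupancy grid, as used by the RPN of PPLP Net described above, can be sketched as a simple rasterization. This is a generic binary grid under assumed parameters (cell size and metric extent), not the exact input representation of [22]; it marks cells hit by at least one return and does not distinguish free from unknown space.

```python
import math


def occupancy_grid(points_xy, cell_size, x_range, y_range):
    """Rasterize 2D LiDAR points into a binary occupancy grid.

    points_xy: iterable of (x, y) positions in metres in the sensor frame.
    x_range, y_range: (min, max) extent of the grid in metres.
    Returns a list of rows (row index follows y, column index follows x);
    1 marks a cell hit by at least one return.
    """
    n_cols = math.ceil((x_range[1] - x_range[0]) / cell_size)
    n_rows = math.ceil((y_range[1] - y_range[0]) / cell_size)
    grid = [[0] * n_cols for _ in range(n_rows)]
    for x, y in points_xy:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # ignore returns outside the mapped area
        # Clamp guards against floating-point rounding at the far edge.
        col = min(int((x - x_range[0]) // cell_size), n_cols - 1)
        row = min(int((y - y_range[0]) // cell_size), n_rows - 1)
        grid[row][col] = 1
    return grid
```

Because the grid is a fixed-size 2D array, it can be fed to a convolutional region-proposal network in the same way as an image, which is the main appeal of this representation for cheap 2D LiDAR.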

4.3. Path Planning

Path planning is the challenging task of finding an optimal path between two points. It is closely related to trajectory planning, which additionally specifies how the vehicle moves along the path over time. AVs use it to compute an optimal path in real time from onboard sensors such as LiDAR, cameras, GPS, millimeter-wave radar, and inertial sensors. A good self-driving car should analyze and judge all the acquired information about roads, traffic, and weather and accurately predict a path for the vehicle. In this section, we look at several path planning algorithms developed over time and the approaches they use.

The paper “Perception, Planning and Control for Self-Driving System Based on On-board Sensors” proposes a real-time lane detection system built primarily on a vision system [13]. It uses a CNN-based deep learning algorithm that improves on traditional lane detection by detecting lane markings and lane edges on curved lanes. An advantage of CNNs is that essential features are learned automatically, without human supervision, and the authors' network is computationally efficient enough to run on a wide range of devices. Paths are calculated with a discrete optimization approach in the Frenet coordinate system: a finite number of candidate paths is generated, a cost is computed for each, and the path with the lowest cost is chosen. The approach also uses cubic spline interpolation to generate a path from GPS data; compared with the traditional cubic polynomial fitting algorithm, the spline-fitted path is much smoother and better suited to the vehicle's motion. The authors simulate their path planning and lane detection models in LabVIEW, using images of curved and straight lanes in which the proposed path is highlighted in green. They compare pure pursuit trajectory planning with Frenet-optimized trajectory planning in the simulator and also test their approach on a small-scale model.

The paper “Navigation Engine Design for Automated Driving Using INS/GNSS/3D LiDAR-SLAM and Integrity Assessment” proposes a multisensor fusion system that combines an INS, a GNSS receiver, and a LiDAR system to implement 3D SLAM [24]. It is proposed as an improvement over the conventional INS/GNSS/odometer system because it compensates for that system's drawbacks. A highly integrated, INS-aided LiDAR-SLAM removes INS drift and thereby improves performance, and using the INS's initial values makes the system more robust across different environments. The proposed FDE (fault detection and exclusion) scheme further supports the SLAM by eliminating failed solutions such as algorithm divergence and local solutions, and the SLAM model handles highly dynamic movement. A central fusion filter uses integrity assessment to keep failed measurements out of the INS-aided SLAM update, increasing reliability and accuracy. As a result, the multisensor design can handle a variety of situations, including long-term GNSS outages, deep urban areas, and highways, and achieves an accuracy of under 1 m in challenging scenarios. The authors argue that automated driving systems will soon require such multisensor fusion to meet their high accuracy requirements.
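The basic idea behind INS/GNSS fusion, in which the INS predicts motion and GNSS fixes correct the accumulated drift, can be shown with a one-dimensional Kalman filter. This is a minimal loosely coupled sketch with assumed noise values (`accel_var`, `gnss_var`); it is far simpler than the LiDAR-SLAM design with integrity assessment in [24] and is not the authors' filter. During a GNSS outage (a `None` fix) it simply dead-reckons on the INS.

```python
def fuse_ins_gnss(accels, gnss_fixes, dt, accel_var=0.5, gnss_var=4.0):
    """1D Kalman filter: INS acceleration predicts, GNSS position corrects.

    accels: INS accelerations (m/s^2), one per time step.
    gnss_fixes: GNSS positions (m) per step, or None during an outage.
    Returns the fused position estimate after each step.
    """
    x = [0.0, 0.0]               # state: [position, velocity]
    P = [[1.0, 0.0], [0.0, 1.0]] # state covariance
    estimates = []
    for a, z in zip(accels, gnss_fixes):
        # Predict with the INS acceleration as control input.
        x = [x[0] + x[1] * dt + 0.5 * a * dt * dt, x[1] + a * dt]
        # P = F P F^T + Q with F = [[1, dt], [0, 1]], Q from acceleration noise.
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        q = accel_var
        P = [[p00 + q * dt**4 / 4, p01 + q * dt**3 / 2],
             [p10 + q * dt**3 / 2, p11 + q * dt**2]]
        if z is not None:
            # Update with the GNSS position measurement (H = [1, 0]).
            s = P[0][0] + gnss_var
            k0, k1 = P[0][0] / s, P[1][0] / s
            residual = z - x[0]
            x = [x[0] + k0 * residual, x[1] + k1 * residual]
            P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                 [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
        estimates.append(x[0])
    return estimates
```

With no GNSS fixes, the estimate follows pure dead reckoning (constant 1 m/s² for 10 s gives 50 m) while the covariance keeps growing; this unbounded uncertainty during long outages is what motivates adding LiDAR-SLAM as a further aiding source.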

Figure 5 demonstrates overtaking a moving vehicle using an optimal path.
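The "finite set of candidate paths, keep the cheapest" step described for the Frenet-based planner of [13] also underlies optimal overtaking paths like the one in Figure 5. The sketch below illustrates that step under stated assumptions, none of which come from [13]: candidates are lateral offsets `d` from the lane center reached by a quintic profile over a duration `T`, the vehicle ahead moves at constant speed in its lane, and the cost weights `w_jerk`, `w_time`, and `w_offset` are hypothetical. The smoothness term uses the integrated squared jerk of the quintic, 720 d²/T⁵.

```python
import math

def quintic_offset(d_target, duration, t):
    """Lateral offset d(t) for a quintic move from d=0 to d_target over `duration`
    seconds, with zero lateral velocity and acceleration at both ends."""
    s = min(max(t / duration, 0.0), 1.0)
    return d_target * (10 * s**3 - 15 * s**4 + 6 * s**5)

def select_frenet_path(offsets, durations, v_ego, obstacle, horizon=5.0, dt=0.1,
                       safe_radius=2.0, w_jerk=1.0, w_time=0.1, w_offset=0.5):
    """Enumerate candidate (offset, duration) paths in Frenet coordinates and
    return (offset, duration, cost) of the cheapest collision-free one, or None.

    obstacle: (s0, d, v) of a vehicle ahead moving along the lane at speed v.
    """
    s_obs0, d_obs, v_obs = obstacle
    steps = int(round(horizon / dt))
    best = None
    for d in offsets:
        for T in durations:
            # Collision check: sample ego and obstacle positions over the horizon.
            collides = any(
                math.hypot(v_ego * i * dt - (s_obs0 + v_obs * i * dt),
                           quintic_offset(d, T, i * dt) - d_obs) < safe_radius
                for i in range(steps + 1)
            )
            if collides:
                continue
            # Cost: integrated squared lateral jerk (720 d^2 / T^5),
            # maneuver time, and distance from the lane center.
            cost = w_jerk * 720.0 * d**2 / T**5 + w_time * T + w_offset * d**2
            if best is None or cost < best[2]:
                best = (d, T, cost)
    return best
```

In the hypothetical scenario `select_frenet_path([0.0, 3.5], [2.0, 3.0, 4.0], v_ego=15.0, obstacle=(20.0, 0.0, 10.0))`, staying in the lane collides with the slower vehicle, so the cheapest safe candidate is the 3.5 m offset completed over 4 s. Exhaustive enumeration keeps the idea visible; practical planners also sample longitudinal profiles and check curvature and acceleration limits.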

4.4. Vehicle Cyber Security

The automotive sector is undergoing significant development toward autonomous driving. As a result, sensors, communication systems, actuators, and similar components are becoming increasingly common in vehicles. This added complexity has increased the number of ways in which cyber-attacks can occur [25], and it can give attackers access to vehicles from the outside. These concerns have prompted several security-related projects, and technology companies, automobile manufacturers, and governments worldwide are taking bold initiatives to build safer, more affordable AVs and bring them to market quickly. Cooperation among these parties is essential for tackling cybersecurity. Collections of published cyber attacks have been compiled [26], and Sommer et al. classified and presented these attacks at different levels of detail [25]. Because attacks are categorized at varying levels of detail, the taxonomy can be used in security testing and threat analysis and risk assessment (TARA) to give testers and security developers a uniform description. The classification covers 162 published security attacks, and another version contains 413 multi-stage attacks on AVs; both are available online to collect knowledge for further research. Remote control of an AV is another area where more research is required. When an autonomous vehicle is under attack, all vehicle control operations need to be handed over to the driver [27], but this is not possible at Level 5 automation, where no human driver is required. Velocity and position are essential parameters for controlling a vehicle remotely, and one possible solution is to deploy IoT sensors in ITS [28,29]. If data communications over wireless channels are under attack, the attack signals must be isolated from all decision-making processes. Once the attack signals are detected, a jammer could be configured to block only the attackers without interfering with legitimate communication.
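One common way to keep attack signals out of decision-making is a residual gate: each received measurement is compared with the value predicted from the vehicle's own recent state, and readings whose residual exceeds a threshold are flagged and excluded. The sketch below is a generic residual gate, not the method of [28] or [29]. It assumes a constant-velocity prediction, a trusted first reading, and a hypothetical fixed `threshold`, and it does not model jamming.

```python
def isolate_attacks(readings, dt=0.1, threshold=1.0):
    """Residual gate: reject position readings that disagree with a
    constant-velocity prediction by more than `threshold` metres.

    readings: received position measurements (m), one every dt seconds;
    the first reading is assumed trustworthy.
    Returns (trusted_positions, flagged_indices).
    """
    pos = readings[0]
    vel = 0.0
    trusted = [pos]
    flagged = []
    for i, z in enumerate(readings[1:], start=1):
        predicted = pos + vel * dt
        if abs(z - predicted) > threshold:
            # Suspected attack: keep the reading out of the decision loop
            # and coast on the prediction instead.
            flagged.append(i)
            pos = predicted
        else:
            vel = (z - pos) / dt
            pos = z
        trusted.append(pos)
    return trusted, flagged
```

A fixed threshold catches abrupt spoofing, such as a sudden 50 m jump, but a slowly ramping spoof can stay under it; that is one reason detectors in practice use statistical tests on the innovation and fuse several sources.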

Figure 6 shows the potential attack surfaces that are vulnerable to cyber attacks.

4.5. Motion Control

Motion planning algorithms decide paths for given scenarios, and it is crucial that they also learn the driving styles of surrounding vehicles [30]. The planned motion is executed through suitable steering and acceleration control so that the AV moves safely. Dang et al. identify three main categories of algorithms for developing motion control: artificial potential fields, sampling-based techniques, and optimal control techniques [31]. The artificial potential field method constructs a field over the space of motion in which the target exerts an attractive pull and obstacles exert a repulsive push. The vehicle then follows the gradient of this field toward the target, which keeps the computational cost small. The downside is that this algorithm does not account for future driving actions.
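A minimal 2D sketch of the artificial potential field idea follows, assuming the classic quadratic attractive potential and the repulsive potential 0.5·k_rep·(1/ρ − 1/ρ0)² that acts only within an influence radius ρ0. The gains, radius, and step size are hypothetical. Each step moves a fixed distance along the net force (the negative potential gradient) and looks only at the current field, which reflects the drawback noted above: there is no notion of future actions.

```python
import math

def apf_step(pos, goal, obstacles, k_att=1.0, k_rep=5.0, rho0=3.0, step=0.1):
    """One fixed-length step along the net artificial-potential-field force."""
    # Attractive force: negative gradient of 0.5 * k_att * |pos - goal|^2.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        rho = math.hypot(dx, dy)
        if 0 < rho < rho0:
            # Repulsive force: negative gradient of 0.5 * k_rep * (1/rho - 1/rho0)^2.
            mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho**2
            fx += mag * dx / rho
            fy += mag * dy / rho
    norm = math.hypot(fx, fy)
    if norm < 1e-9:
        return pos  # zero net force: goal reached or a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)

def apf_path(start, goal, obstacles, max_steps=500, tol=0.1):
    """Follow the field from start until within tol of the goal or stuck."""
    path = [start]
    pos = start
    for _ in range(max_steps):
        if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) < tol:
            break
        nxt = apf_step(pos, goal, obstacles)
        if nxt == pos:
            break
        pos = nxt
        path.append(pos)
    return path
```

With the obstacle slightly off the straight line, as in `apf_path((0.0, 0.0), (10.0, 0.0), [(5.0, 0.5)])`, the path bends around it and reaches the goal. Placing the obstacle exactly on the line makes the attractive and repulsive forces oppose each other head-on, so the vehicle stalls in front of it, illustrating the local-minimum weakness of the method.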

For safety, the intentions of neighboring vehicles must be estimated reliably. For such traffic situations, Liu et al. propose a driving intention prediction method based on a hidden Markov model (HMM) [32]. Cuenca et al. propose a Q-learning algorithm for navigating roundabouts correctly, a complicated driving task that requires knowledge of entry and exit lanes, priority rules, and the intentions of other vehicles in traffic [33].
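To show how an HMM can turn noisy motion cues into an intention estimate, the sketch below uses hidden states for intentions (lane keeping, left lane change, right lane change) and discretized lateral-motion observations, and runs the forward algorithm with normalization at each step to obtain the posterior over intentions. All state names and probabilities are hypothetical placeholders, not parameters from [32], which learns its model from data.

```python
STATES = ("keep", "left", "right")

# Hypothetical model parameters (not taken from [32]).
START = {"keep": 0.8, "left": 0.1, "right": 0.1}
TRANS = {  # TRANS[previous][next]
    "keep":  {"keep": 0.90, "left": 0.05, "right": 0.05},
    "left":  {"keep": 0.10, "left": 0.90, "right": 0.00},
    "right": {"keep": 0.10, "left": 0.00, "right": 0.90},
}
EMIT = {  # EMIT[state][observation]
    "keep":  {"drift_left": 0.10, "straight": 0.80, "drift_right": 0.10},
    "left":  {"drift_left": 0.70, "straight": 0.25, "drift_right": 0.05},
    "right": {"drift_left": 0.05, "straight": 0.25, "drift_right": 0.70},
}

def predict_intention(observations):
    """Forward algorithm with per-step normalization.

    Returns P(intention | observations so far) as a dict over STATES.
    """
    belief = None
    for obs in observations:
        if belief is None:
            # Initialization: prior times emission likelihood.
            new = {s: START[s] * EMIT[s][obs] for s in STATES}
        else:
            # Propagate through the transition model, then weight by the emission.
            new = {
                s: EMIT[s][obs] * sum(belief[p] * TRANS[p][s] for p in STATES)
                for s in STATES
            }
        total = sum(new.values())
        belief = {s: v / total for s, v in new.items()}
    return belief if belief is not None else dict(START)
```

After one "straight" observation followed by three "drift_left" observations, the posterior shifts from lane keeping to a left lane change, while a run of "straight" observations keeps lane keeping most likely.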

Wu et al. use a grey prediction model to estimate gaps and find the right moment to change lanes, and they show that continuous path planning is safe and efficient for lane changing [34]. Overtaking is an essential element of motion control because, if not done correctly, it can lead to accidents. Model predictive control is a technique used for such maneuvers, and the probability that the vehicle can overtake the vehicle in front is determined from their relative speeds [35].
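Grey prediction is commonly done with the GM(1,1) model. GM(1,1) accumulates a short series of recent observations, fits the first-order grey equation x⁽⁰⁾(k) + a·z⁽¹⁾(k) = b by least squares, and forecasts with the resulting exponential time response. The sketch below applies the standard GM(1,1) to a hypothetical series of gap measurements to forecast the next value. Whether Wu et al. [34] use exactly this variant, and how the forecast feeds their lane-change timing, are assumptions not covered here.

```python
import math

def gm11_forecast(series):
    """One-step-ahead forecast with the standard GM(1,1) grey model."""
    n = len(series)
    if n < 4:
        raise ValueError("GM(1,1) needs at least 4 observations")
    # 1-AGO: accumulated generating operation.
    x1 = []
    running = 0.0
    for v in series:
        running += v
        x1.append(running)
    # Background values z(k) = mean of consecutive accumulated points, k = 2..n.
    z = [0.5 * (x1[k] + x1[k - 1]) for k in range(1, n)]
    y = series[1:]
    m = n - 1
    sz, sy = sum(z), sum(y)
    szz = sum(v * v for v in z)
    szy = sum(zi * yi for zi, yi in zip(z, y))
    # Least squares for x0(k) = -a * z(k) + b via the 2x2 normal equations.
    det = szz * m - sz * sz
    a = (sz * sy - m * szy) / det
    b = (szz * sy - sz * szy) / det
    if abs(a) < 1e-12:
        return b  # degenerate case: the series is (nearly) constant
    # Time response of the accumulated series, then inverse AGO for the next value.
    c = series[0] - b / a
    x1_next = c * math.exp(-a * n) + b / a
    x1_last = c * math.exp(-a * (n - 1)) + b / a
    return x1_next - x1_last
```

For a shrinking gap series 10, 9, 8.1, 7.29, 6.561 (m), the forecast is about 5.90, close to the next geometric term 5.9049. GM(1,1) is popular for this kind of use because it needs only a handful of recent samples.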

For lateral motion control, deep reinforcement learning methods can be used, including techniques that control the steering angle, braking, and acceleration [36].
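Deep reinforcement learning methods such as deep Q-networks replace a lookup table with a neural network, but the underlying update is the Q-learning rule, the same algorithm Cuenca et al. apply to roundabouts [33]. The tabular sketch below is not the method of [36]. It uses a hypothetical toy lateral task: the state is a discretized offset from the lane center (-2 to 2), the actions steer one cell left, straight, or right, and the reward penalizes distance from the center. The hyperparameters `alpha`, `gamma`, and `epsilon` are illustrative.

```python
import random

OFFSETS = range(-2, 3)   # discretized lateral offset, lane center = 0
ACTIONS = (-1, 0, 1)     # steer left, go straight, steer right

def step(offset, action):
    """Toy lateral dynamics: steering shifts the offset by one cell (clipped)."""
    new = max(-2, min(2, offset + action))
    reward = -abs(new)   # reward staying near the lane center
    return new, reward

def train_q(episodes=500, steps=20, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in OFFSETS for a in ACTIONS}
    for _ in range(episodes):
        s = rng.choice(list(OFFSETS))
        for _ in range(steps):
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: q[(s, act)])
            s2, r = step(s, a)
            best_next = max(q[(s2, act)] for act in ACTIONS)
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
            s = s2
    return q

def greedy_policy(q):
    """Best action per state according to the learned Q-table."""
    return {s: max(ACTIONS, key=lambda act: q[(s, act)]) for s in OFFSETS}
```

After training, `greedy_policy(train_q())` steers back toward the center from either edge and goes straight at the center. A DRL controller keeps the same update but generalizes over continuous states such as lateral error and heading.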

5. Psychology

Customers’ lack of trust and acceptance is a major roadblock to autonomous driving and vehicles, and greater involvement from governments and automobile manufacturers is needed to increase consumer trust. Transparency plays a key role here: to build consumer trust, it must be modeled as a non-functional requirement for producing self-driving cars. Transparency would also inform consumers about the potentially significant risks of this still-unproven technology and its potential large-scale consequences. Understanding what makes autonomous cars more acceptable is key to making changes going forward. A study examined the key predictors of acceptance of self-driving cars [37]. Four hundred participants were surveyed to assess the factors leading to acceptance, and “posthuman ability” was the strongest factor, suggesting that people are more receptive to technology that can exceed human capabilities. The study also revealed several other key factors in the acceptance of AVs, which can help vehicle manufacturers adapt upcoming models so that consumers widely accept them. People often identify causal links when judging an event, and for AVs this means that AI and human drivers may be assigned different levels of blame. Based on attribution theory, a study examined how the level of blame differs between an AI driver and a human driver [38]. The experiment used a story about a car driving in the dark and hitting a jaywalker, based on the Uber self-driving car accident in Arizona (Wakabayashi, 2018). The story was varied into eight scenarios following a 2 (victim survived vs. victim died) × 2 (human vs. AI driver) × 2 (female vs. male driver) experimental design. The study concluded that participants blamed AI drivers more than human drivers. Automakers and governments must also consider how consumer attitudes have changed over the years and whether the change is positive or negative; this shift shows our progress and helps create a road map for continuing to change public perspectives in the coming years. Using text analysis, a study examined attitudes toward self-driving vehicles and the factors that motivated them [39], adding to a previous analysis based on 2016 and 2017 data [39]. Associating the topics with the survey year revealed changes in people’s attitudes toward AVs: a Bayes factor of 6.3, representing “positive” evidence, was obtained for the effect of the survey year. The study found a change in perception in responses to the survey questions compared with the previous year’s results. A related study examined, under expectancy violation theory, how individuals attribute responsibility to an AI agent or a human agent for a positive or negative event [40]. Participants read one of two fictitious news articles as stimuli, one featuring an AI agent and the other a human agent, and were then asked about their perceptions of the driver. The findings were similar: when an adverse event occurred, the AI agent was deemed more responsible than a human. However, when the AI agent produced a positive outcome, it received more praise than a human agent.

6. Challenges

In this section, we discuss the issues prevalent in research on AI in AVs. Research on this topic highlights the numerous advantages AVs bring; we studied the literature on this topic and reviewed it in this paper. A common thread across the challenges is that proposed algorithms are not implemented, owing to a scarcity of data in this domain. In pedestrian detection, the proposed methods were not tested in real-world experiments for their ability to classify objects in real time. Under heavy occlusion, a pedestrian may occasionally be matched to another pedestrian's image mask, resulting in errors in orientation estimation. No algorithm is therefore both completely accurate and fast, and there is a trade-off between speed and accuracy when detecting pedestrians in the dark. Prediction of pedestrians’ behavior is also often overlooked. In trajectory planning, most of the reviewed papers had no real-world demonstration and relied solely on simulation to validate their approach, or only proposed solutions based on deep learning algorithms; the papers that did include real-world experiments are now outdated. In motion control, the model predictive control algorithm is the main algorithm used for lateral motion control, but it has limited fault detection and does not eliminate uncertainties that do not match the given conditions [20]. In psychology research, transparency as a non-functional requirement for self-driving cars has had no real-life implementation and has received little study, which limited our literature search. Finally, with technology transforming so dramatically, availability bias makes it likely that consumers will be influenced more by the crashes that have occurred with various types of automated vehicles than by the new experiences self-driving vehicles might offer.

7. Future Scope

Vehicles and other transportation systems are being researched and developed to usher in an autonomous future, and the world is heading toward a technologically advanced, driverless future. These developments in AVs will in turn drive further technological advances. Various applications based on IoV and VANETs have already been proposed. Advances in IoV have made it possible to build powerful next-generation infrastructures that act as an interface connecting objects such as vehicular sensors, actuators, and entire vehicles to the Internet. Technologies such as Bayesian coalition games (BCG) are being researched to distribute content with cloud support in IoT-based smart cities [41]. VANETs are a technology based on IoV whose primary role is to ensure uninterrupted access to resources such as the Internet [42]. The rapid growth of the Internet has increased the number of users, and VANETs can meet their needs by letting them access resources and stay connected to the Internet while on the move. VANETs also have potential uses in other fields, such as health and safety, intelligent transportation systems, and military systems. With VANETs, vehicles can be clustered according to routing, mobility, and on-road behavior, enabling an adaptive approach to monitoring traffic and pollution density. This information will also help AVs make adaptive route-selection decisions. The advantages of VANETs for tracking, navigation, routing, and communication will become more apparent once vehicle-to-vehicle communication is approved by governments and adopted by all cars on the road [43]. The study in [44] proposes using radio frequency identification (RFID) for vehicle-to-infrastructure communication between moving vehicles and roadside units, while efficient cellular or LTE channels link the roadside units to the cloud platform. This provides a secure and safe channel for exchanging information with the vehicular cloud. According to the study, the scheme can also be used for vehicle-to-vehicle communication and offers many benefits in the healthcare sector. The study in [45] proposes a new routing algorithm based on collaborative learning that delivers information to its destination while maximizing throughput and minimizing delay. This technology would help vehicular sensor networks (VSNs) cope with increasing vehicle density on the road and route jamming in the network. Using nearby access points, the learning automata learn from experience and make routing decisions quickly.
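The learning-automata idea can be illustrated with the classic linear reward-inaction (L_R-I) scheme. An automaton keeps a probability for each action, here each candidate next-hop access point, and samples an action from that distribution. When the environment signals success (for example, timely delivery), it shifts probability toward the chosen action; on failure it leaves the probabilities unchanged. The per-hop success rates, learning rate, and function name below are hypothetical, and the collaborative scheme of [45] is considerably more elaborate and is not reproduced here.

```python
import random

def lri_choose_next_hop(success_rates, rounds=3000, lr=0.02, seed=1):
    """Linear reward-inaction learning automaton over candidate next hops.

    success_rates: hypothetical delivery-success probability of each next hop;
    unknown to the automaton and used only to simulate environment feedback.
    Returns the final action-probability vector.
    """
    rng = random.Random(seed)
    k = len(success_rates)
    probs = [1.0 / k] * k
    for _ in range(rounds):
        i = rng.choices(range(k), weights=probs)[0]
        if rng.random() < success_rates[i]:
            # Reward: move probability mass toward the chosen hop (sum stays 1).
            probs = [p + lr * (1 - p) if j == i else p * (1 - lr)
                     for j, p in enumerate(probs)]
        # Penalty: inaction, so the probabilities are left unchanged.
    return probs
```

With hypothetical success rates `[0.1, 0.9, 0.3]`, probability mass typically concentrates on the second hop. A smaller learning rate makes convergence to the best hop more reliable, at the cost of slower adaptation when traffic conditions change.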

8. Conclusions

In this survey, we have outlined, highlighted, and investigated the technological advancements in autonomous driving, and we have examined recent advances and case studies. While conducting this review, we identified and discussed the future research challenges in this domain, most of which relate to implementing the proposed technologies in the real world. Aspects of safety, path planning algorithms, security, and privacy have also been reviewed in this paper. Non-technical challenges such as consumer trust, governance, and human behavior toward autonomous driving play a major role in bringing AVs and related technologies into widespread use. This review may contribute useful insights into the emerging domains of autonomous driving. We conclude that autonomous vehicles are the way of the future and that vehicles with Level 3 automation are ready for commercialization. Despite the enormous advances in autonomous technology in recent years, we think it is still too early to speculate about the commercialization of AVs above Level 3 automation. With efforts toward robustness at all levels of automation, we believe that automated vehicles running on efficient and safe roads are just around the corner.

Author Contributions

Conceptualization, D.P., N.P., A.R. and M.C.; data curation, D.P., N.P., A.R.; formal analysis, D.P.; funding acquisition, W.C.; investigation, N.P., N.K. and G.P.J.; methodology, N.P., A.R. and M.C.; project administration, W.C.; resources, M.C. and G.P.J.; supervision, M.C. and W.C.; validation, M.C., N.K. and W.C.; visualization, A.R.; writing—original draft, D.P., N.P. and A.R.; writing—review and editing, G.P.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Korea Agency for Infrastructure Technology Advancement (KAIA) grant funded by the Ministry of Land, Infrastructure and Transport (Grant 21AMDP-C161756-01).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, T.; Zhang, L.; Zhao, L.; Huang, X.; Hou, Y. Catalytic Effects in the Cathode of Li-S Batteries: Accelerating polysulfides redox conversion. EnergyChem 2020, 2, 100036.

- Li, W.; Guo, X.; Geng, P.; Du, M.; Jing, Q.; Chen, X.; Zhang, G.; Li, H.; Xu, Q.; Braunstein, P.; et al. Rational Design and General Synthesis of Multimetallic Metal–Organic Framework Nano-Octahedra for Enhanced Li–S Battery. Adv. Mater. 2021, 33, 2105163.

- Geng, P.; Wang, L.; Du, M.; Bai, Y.; Li, W.; Liu, Y.; Chen, S.; Braunstein, P.; Xu, Q.; Pang, H. MIL-96-Al for Li–S Batteries: Shape or Size? Adv. Mater. 2021, 34, 2107836.

- Zheng, S.; Li, Q.; Xue, H.; Pang, H.; Xu, Q. A highly alkaline-stable metal oxide@metal–organic framework composite for high-performance electrochemical energy storage. Natl. Sci. Rev. 2019, 7, 305–314.

- WHO. Global Status Report on Road Safety 2018; World Health Organization: Geneva, Switzerland, 2018.

- Yaqoob, I.; Khan, L.U.; Kazmi, S.M.A.; Imran, M.; Guizani, N.; Hong, C.S. Autonomous Driving Cars in Smart Cities: Recent Advances, Requirements, and Challenges. IEEE Netw. 2019, 34, 174–181.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469.

- Gandia, R.M.; Antonialli, F.; Cavazza, B.H.; Neto, A.M.; de Lima, D.A.; Sugano, J.Y.; Nicolai, I.; Zambalde, A.L. Autonomous vehicles: Scientometric and bibliometric review. Transp. Rev. 2018, 39, 9–28.

- Hussain, R.; Zeadally, S. Autonomous Cars: Research Results, Issues, and Future Challenges. IEEE Commun. Surv. Tutorials 2018, 21, 1275–1313.

- Faisal, A.; Kamruzzaman, M.; Yigitcanlar, T.; Currie, G. Understanding autonomous vehicles: A systematic literature review on capability, impact, planning and policy. J. Transp. Land Use 2019, 12, 45–72.

- SAE International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for on-Road Motor Vehicles J3016; SAE International: Warrendale, PA, USA, 2018; Volume J3016, p. 35.

- Miglani, A.; Kumar, N. Deep learning models for traffic flow prediction in autonomous vehicles: A review, solutions, and challenges. Veh. Commun. 2019, 20, 100184.

- Dai, Y.; Lee, S.-G. Perception, Planning and Control for Self-Driving System Based on On-board Sensors. Adv. Mech. Eng. 2020, 12, 1687814020956494.

- Ahmed, M.; Seraj, R.; Islam, S.M.S. The k-means Algorithm: A Comprehensive Survey and Performance Evaluation. Electronics 2020, 9, 1295.

- Li, C.; Wang, R.; Li, J.; Fei, L. Face Detection Based on YOLOv3. In Recent Trends in Intelligent Computing, Communication and Devices; Advances in Intelligent Systems and Computing; Jain, V., Patnaik, S., Popențiu Vlădicescu, F., Sethi, I., Eds.; Springer: Singapore, 2020; Volume 1006.

- Zhao, J.; Liang, B.; Chen, Q. The key technology toward the self-driving car. Int. J. Intell. Unmanned Syst. 2018, 6, 2–20.

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057.

- Jung, Y.; Seo, S.-W.; Kim, S.-W. Curb Detection and Tracking in Low-Resolution 3D Point Clouds Based on Optimization Framework. IEEE Trans. Intell. Transp. Syst. 2019, 21, 3893–3908.

- Li, G.; Yang, Y.; Qu, X. Deep Learning Approaches on Pedestrian Detection in Hazy Weather. IEEE Trans. Ind. Electron. 2019, 67, 8889–8899.

- Bachute, M.R.; Subhedar, J.M. Autonomous Driving Architectures: Insights of Machine Learning and Deep Learning Algorithms. Mach. Learn. Appl. 2021, 6, 100164.

- Chen, G.; Mao, Z.; Yi, H.; Li, X.; Bai, B.; Liu, M.; Zhou, H. Pedestrian detection based on panoramic depth map transformed from 3d-lidar data. Period. Polytech. Electr. Eng. Comput. Sci. B 2020, 64, 274–285.

- Bu, F.; Le, T.; Du, X.; Vasudevan, R.; Johnson-Roberson, M. Pedestrian Planar LiDAR Pose (PPLP) Network for Oriented Pedestrian Detection Based on Planar LiDAR and Monocular Images. IEEE Robot. Autom. Lett. 2019, 5, 1626–1633.

- Zhang, S.; Yang, J.; Schiele, B. Occluded pedestrian detection through guided attention in cnns. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6995–7003.

- Chiang, K.-W.; Tsai, G.-J.; Li, Y.-H.; Li, Y.; El-Sheimy, N. Navigation Engine Design for Automated Driving Using INS/GNSS/3D LiDAR-SLAM and Integrity Assessment. Remote Sens. 2020, 12, 1564.

- Sommer, F.; Dürrwang, J.; Kriesten, R. Survey and Classification of Automotive Security Attacks. Information 2019, 10, 148.

- Ring, M.; Dürrwang, J.; Sommer, F.; Kriesten, R. Survey on vehicular attacks-building a vulnerability database. In Proceedings of the 2015 IEEE International Conference on Vehicular Electronics and Safety (ICVES), Yokohama, Japan, 5–7 November 2015; pp. 208–212.

- Chowdhury, A.; Karmakar, G.; Kamruzzaman, J.; Jolfaei, A.; Das, R. Attacks on Self-Driving Cars and Their Countermeasures: A Survey. IEEE Access 2020, 8, 207308–207342.

- Rana, M.M. IoT-based electric vehicle state estimation and control algorithms under cyber attacks. IEEE Internet Things J. 2019, 7, 874–881.

- Liu, Q.; Mo, Y.; Mo, X.; Lv, C.; Mihankhah, E.; Wang, D. Secure pose estimation for autonomous vehicles under cyber attacks. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1583–1588.

- Yin, G.; Li, J.; Jin, X.; Bian, C.; Chen, N. Integration of Motion Planning and Model-Predictive-Control-Based Control System for Autonomous Electric Vehicles. Transport 2015, 30, 353–360.

- Dang, D.; Gao, F.; Hu, Q. Motion Planning for Autonomous Vehicles Considering Longitudinal and Lateral Dynamics Coupling. Appl. Sci. 2020, 10, 3180.

- Liu, S.; Zheng, K.; Zhao, L.; Fan, P. A driving intention prediction method based on hidden Markov model for autonomous driving. Comput. Commun. 2020, 157, 143–149.

- García Cuenca, L.; Puertas, E.; Fernandez Andrés, J.; Aliane, N. Autonomous driving in roundabout maneuvers using reinforcement learning with Q-learning. Electronics 2019, 8, 1536.

- Wu, X.; Qiao, B.; Su, C. Trajectory Planning with Time-Variant Safety Margin for Autonomous Vehicle Lane Change. Appl. Sci. 2020, 10, 1626.

- Ortega, J.; Lengyel, H.; Szalay, Z. Overtaking maneuver scenario building for autonomous vehicles with PreScan software. Transp. Eng. 2020, 2, 100029.

- Wasala, A.; Byrne, D.; Miesbauer, P.; O’Hanlon, J.; Heraty, P.; Barry, P. Trajectory based lateral control: A Reinforcement Learning case study. Eng. Appl. Artif. Intell. 2020, 94, 103799.

- Gambino, A.; Sundar, S.S. Acceptance of self-driving cars: Does their posthuman ability make them more eerie or more desirable? In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–6.

- Hong, J.W.; Wang, Y.; Lanz, P. Why is artificial intelligence blamed more? Analysis of faulting artificial intelligence for self-driving car accidents in experimental settings. Int. J. Hum. Comput. Interact. 2020, 36, 1768–1774.

- Lee, J.D.; Kolodge, K. Exploring Trust in Self-Driving Vehicles Through Text Analysis. Hum. Factors J. Hum. Factors Ergon. Soc. 2019, 62, 260–277.

- Hong, J.W.; Cruz, I.; Williams, D. AI, you can drive my car: How we evaluate human drivers vs. self-driving cars. Comput. Hum. Behav. 2021, 125, 106944.

- Kumar, N.; Rodrigues, J.J.P.C.; Chilamkurti, N. Bayesian Coalition Game as-a-Service for Content Distribution in Internet of Vehicles. IEEE Internet Things J. 2014, 1, 544–555.

- Kumar, N.; Chilamkurti, N.; Park, J.H. ALCA: Agent learning–based clustering algorithm in vehicular ad hoc networks. Pers. Ubiquitous Comput. 2012, 17, 1683–1692.

- Lee, M.; Atkison, T. VANET applications: Past, present, and future. Veh. Commun. 2020, 28, 100310.

- Kumar, N.; Kaur, K.; Misra, S.C.; Iqbal, R. An intelligent RFID-enabled authentication scheme for healthcare applications in vehicular mobile cloud. Peer-to-Peer Netw. Appl. 2015, 9, 824–840.

- Kumar, N.; Misra, S.; Obaidat, M.S. Collaborative Learning Automata-Based Routing for Rescue Operations in Dense Urban Regions Using Vehicular Sensor Networks. IEEE Syst. J. 2014, 9, 1081–1090.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).