Development of a Virtual Object Weight Recognition Algorithm Based on Pseudo-Haptics and the Development of Immersion Evaluation Technology

Abstract

1. Introduction

- Development of an algorithm that decouples the user's hand position in the VR environment from the hand position in real space;

- A software-based weight recognition approach that takes into account the volume, density, and speed of a virtual object, using hand tracking from the camera in a VR headset;

- Development of a customized virtual object weight algorithm based on the speed of lifting standard objects in real space;

- An experiment measuring the speed of lifting objects of different weights in real space, with the resulting values treated as weights;

- Because each user perceives weight differently depending on experience and degree of immersion in VR, the user lifts a golf ball in real space, and the measured speed is defined as that user's customized standard speed;

- A study of the subjects' perceived weight using the proposed approach;

- Development of qualitative evaluation technology based on an immersive experience questionnaire (IEQ) within a VR environment;

- Qualitative user experience evaluation based on developed pseudo-haptics technology and analysis of the results.
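The decoupling of real and virtual hand positions listed above can be illustrated with a control/display (C/D) ratio, a common pseudo-haptic technique in which the virtual hand moves less than the real hand when the held object is heavier. The following is a minimal sketch under stated assumptions, not the authors' algorithm: the function names, the 1 kg reference mass, and the lower clamp of 0.2 are all hypothetical.

```python
def cd_ratio(mass_kg: float, reference_mass_kg: float = 1.0,
             min_ratio: float = 0.2) -> float:
    """Hypothetical mapping from object mass to a C/D ratio.

    Objects at or below the reference mass track the real hand 1:1;
    heavier objects move proportionally less, never below min_ratio.
    """
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    return max(min_ratio, min(1.0, reference_mass_kg / mass_kg))


def virtual_hand_y(grab_y: float, real_hand_y: float, mass_kg: float) -> float:
    """Virtual hand height: the real displacement since the grab,
    scaled by the C/D ratio of the held object."""
    displacement = real_hand_y - grab_y
    return grab_y + cd_ratio(mass_kg) * displacement
```

With these assumed values, a real lift of 0.4 m while holding a 2 kg object would raise the virtual hand by 0.2 m, so the user must move farther to achieve the same visual result.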

2. Related Work

2.1. Pseudo-Haptics

2.2. Muscle Strength for One Hand

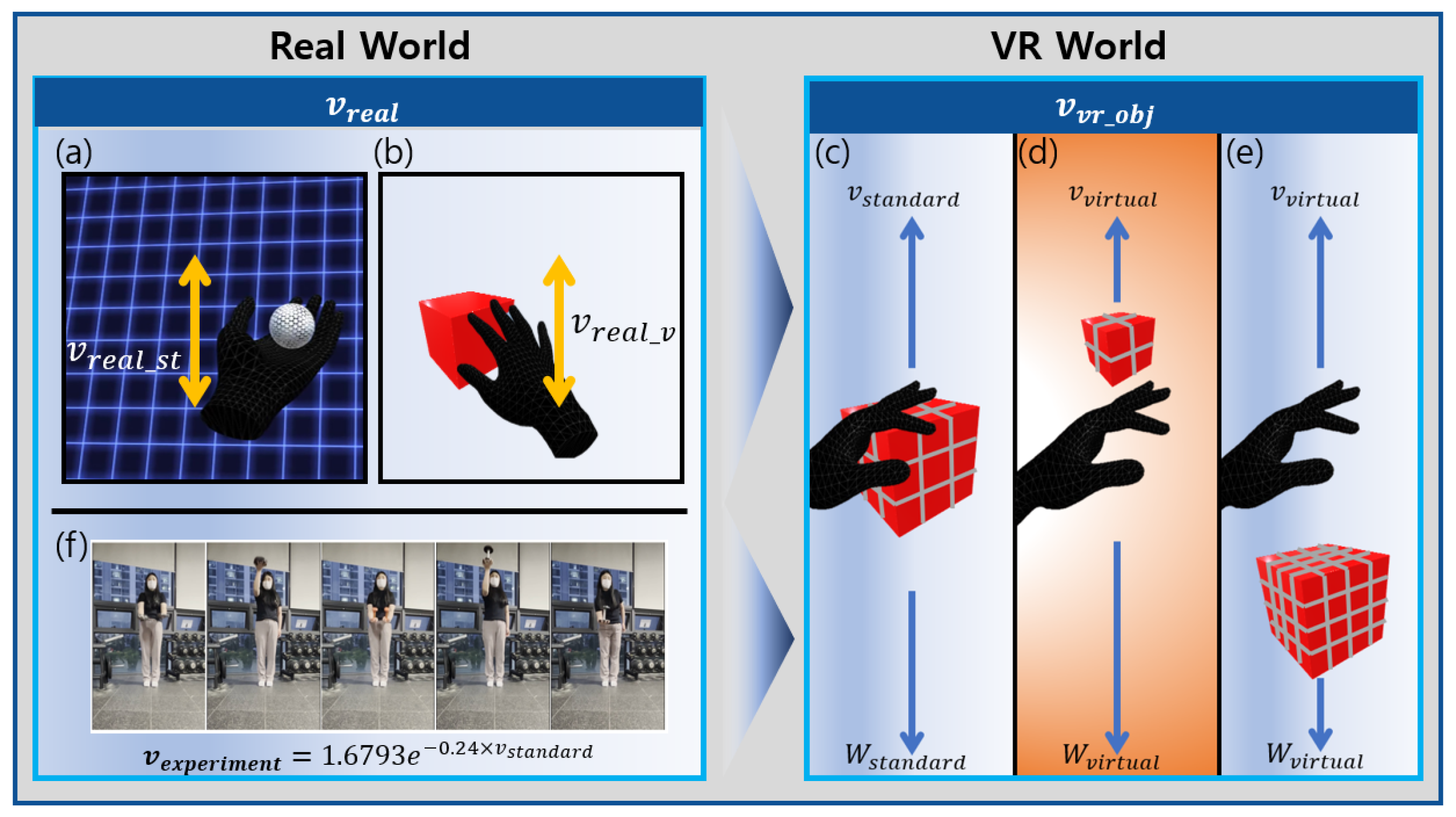

3. Designing a Virtual Object Weight Algorithm

3.1. Ideas and Algorithms

- The virtual object can be lifted only on the y-axis in a three-dimensional VR environment;

- The location of each virtual object is represented by local coordinates and moves in a positive space greater than or equal to 0 (the y-axis coordinates of a virtual object cannot be negative);

- The unit time for measuring the average speed of a virtual object is defined as 1 s;

- The densities of the virtual objects compared are the same.

- If the weight is lighter and the speed is faster;

- If the weight is lighter and the speed is slower;

- If the weight is heavier and the speed is the same;

- If the weight is lighter and the speed is the same;

- If the weight is heavier and the speed is slower.
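The assumptions and comparison cases above can be sketched in a few lines of code. This is an illustrative sketch only: the class and function names are hypothetical, mass is assumed to be density multiplied by volume, and the comparison tolerance is an arbitrary choice.

```python
from dataclasses import dataclass

UNIT_TIME_S = 1.0  # unit time for the average speed, per the assumptions above


@dataclass
class VirtualObject:
    volume_m3: float
    density_kg_m3: float
    y: float = 0.0  # local y coordinate; never negative

    @property
    def mass_kg(self) -> float:
        # Assumption: mass = density * volume
        return self.density_kg_m3 * self.volume_m3

    def lift(self, dy: float) -> None:
        """Move along the y-axis only, clamping at y = 0."""
        self.y = max(0.0, self.y + dy)


def average_speed(y_start: float, y_end: float,
                  elapsed_s: float = UNIT_TIME_S) -> float:
    """Average speed along the y-axis over the elapsed time."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return abs(y_end - y_start) / elapsed_s


def relation(value: float, reference: float, lower: str, higher: str,
             tol: float = 1e-9) -> str:
    """Label value relative to reference as lower, higher, or 'same'."""
    if abs(value - reference) <= tol:
        return "same"
    return lower if value < reference else higher


def classify(mass: float, speed: float,
             ref_mass: float, ref_speed: float) -> tuple[str, str]:
    """Return the (weight, speed) relation of an object to a reference."""
    return (relation(mass, ref_mass, "lighter", "heavier"),
            relation(speed, ref_speed, "slower", "faster"))
```

For example, `classify(0.5, 0.8, 1.0, 0.5)` labels an object as lighter and faster than the reference, which corresponds to the first case in the list.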

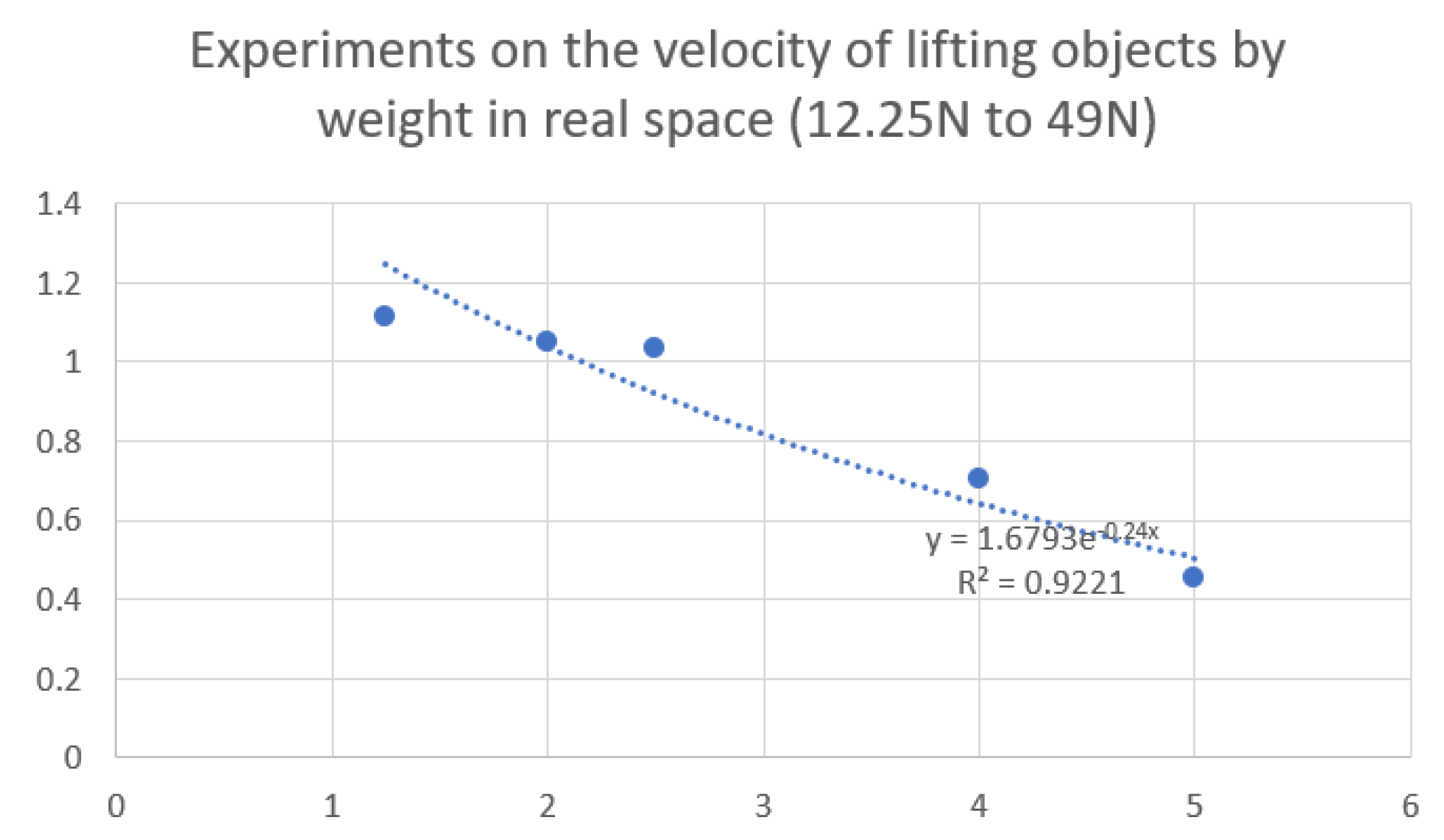

3.2. Experiment to Measure the Speed of a One-Handed Lift According to the Weight in Real Space

3.2.1. Participants and Experimental Tools

3.2.2. Experimental Procedures and Methods

1. The experimenter set the camera position and angle according to the participant's height;

2. The position and angle of the camera filming the participant were fixed;

3. Participants lifted an object weighing 12.25 N using one hand;

4. The lifting of an object of the same weight was repeated six times;

5. The experimenter measured the time taken for the object to move from the center of the participant’s palm to the top of the head;

6. Steps 2–5 were repeated with objects weighing 19.60 N, 24.50 N, 39.20 N, and 49.00 N;

7. The experimenter used the measured data to calculate the lifting speed for each weight;

8. A formula relating lifting speed to object weight was derived from these results.
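The calculation behind the procedure above can be sketched as follows. This is a minimal sketch under stated assumptions: the helper names are hypothetical, g is taken as 9.8 m/s², the average speed is defined here as lift distance divided by the mean of the repeated lift times, and a straight-line least-squares fit stands in for whatever formula was actually derived. No measured times are included because the section does not report them.

```python
from statistics import mean

G = 9.8  # m/s^2, assumed gravitational acceleration

# Object weights in newtons, taken from the procedure above.
WEIGHTS_N = [12.25, 19.60, 24.50, 39.20, 49.00]


def mass_kg(weight_n: float) -> float:
    """Convert a weight in newtons to a mass in kilograms."""
    return weight_n / G


def lift_speed(distance_m: float, times_s: list[float]) -> float:
    """Average lifting speed: distance divided by the mean lift time."""
    return distance_m / mean(times_s)


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


masses = [round(mass_kg(w), 2) for w in WEIGHTS_N]
```

Under the assumed value of g, the five weights correspond to masses of 1.25, 2.0, 2.5, 4.0, and 5.0 kg. Given six measured times per weight, `lift_speed` would yield one speed per weight, and `fit_line(WEIGHTS_N, speeds)` would return the slope and intercept of a linear speed-weight relation.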

3.2.3. Speed Measurement Experiment Results

3.3. Exclude Inertia

3.4. Hand Tracking

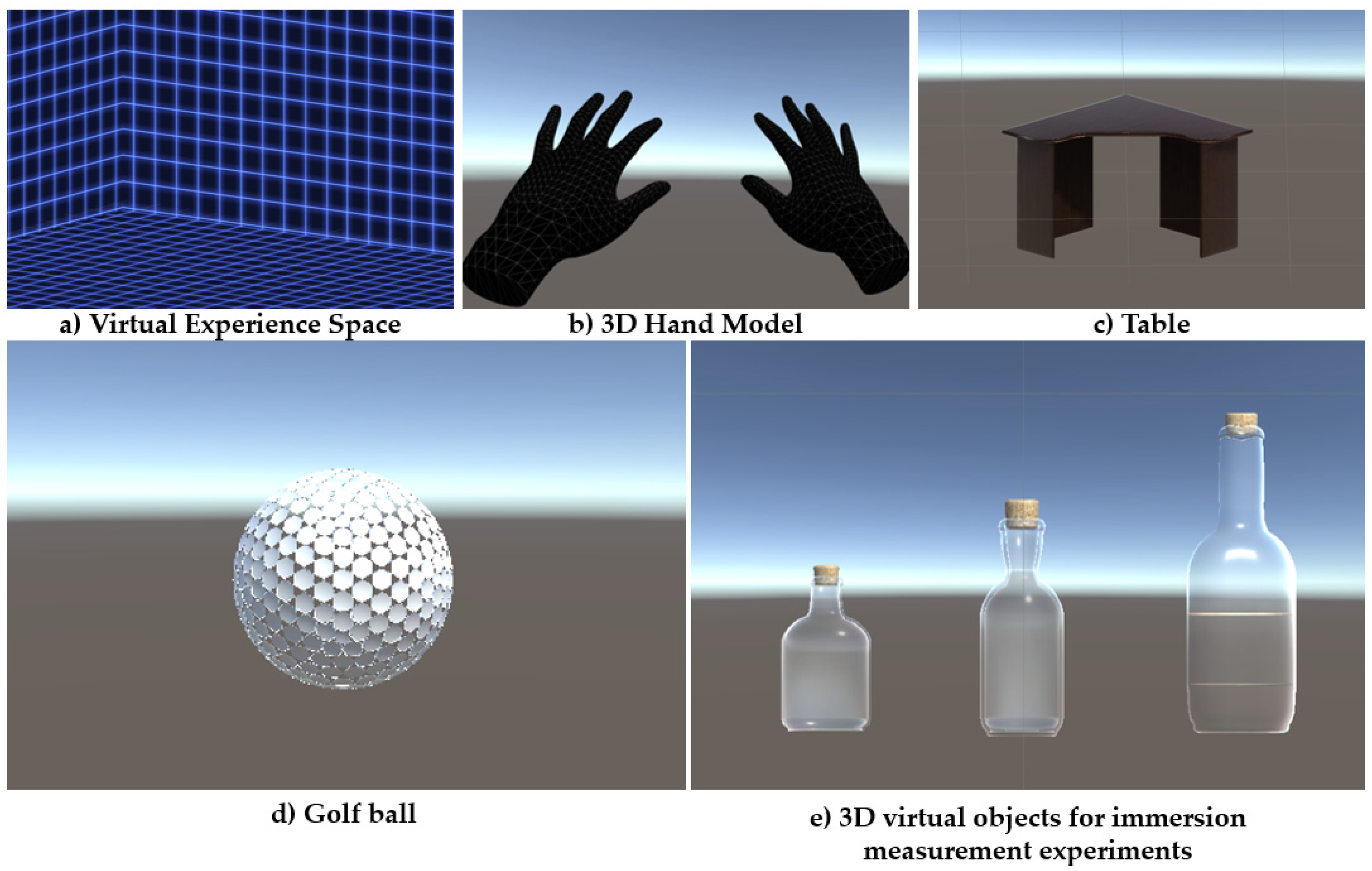

4. Design and System Implementation of Simulated Content

4.1. Building a Virtual Environment

4.2. User and 3D Virtual Object Interaction

4.3. System Implementation and Enforcement

5. Questionnaire Development

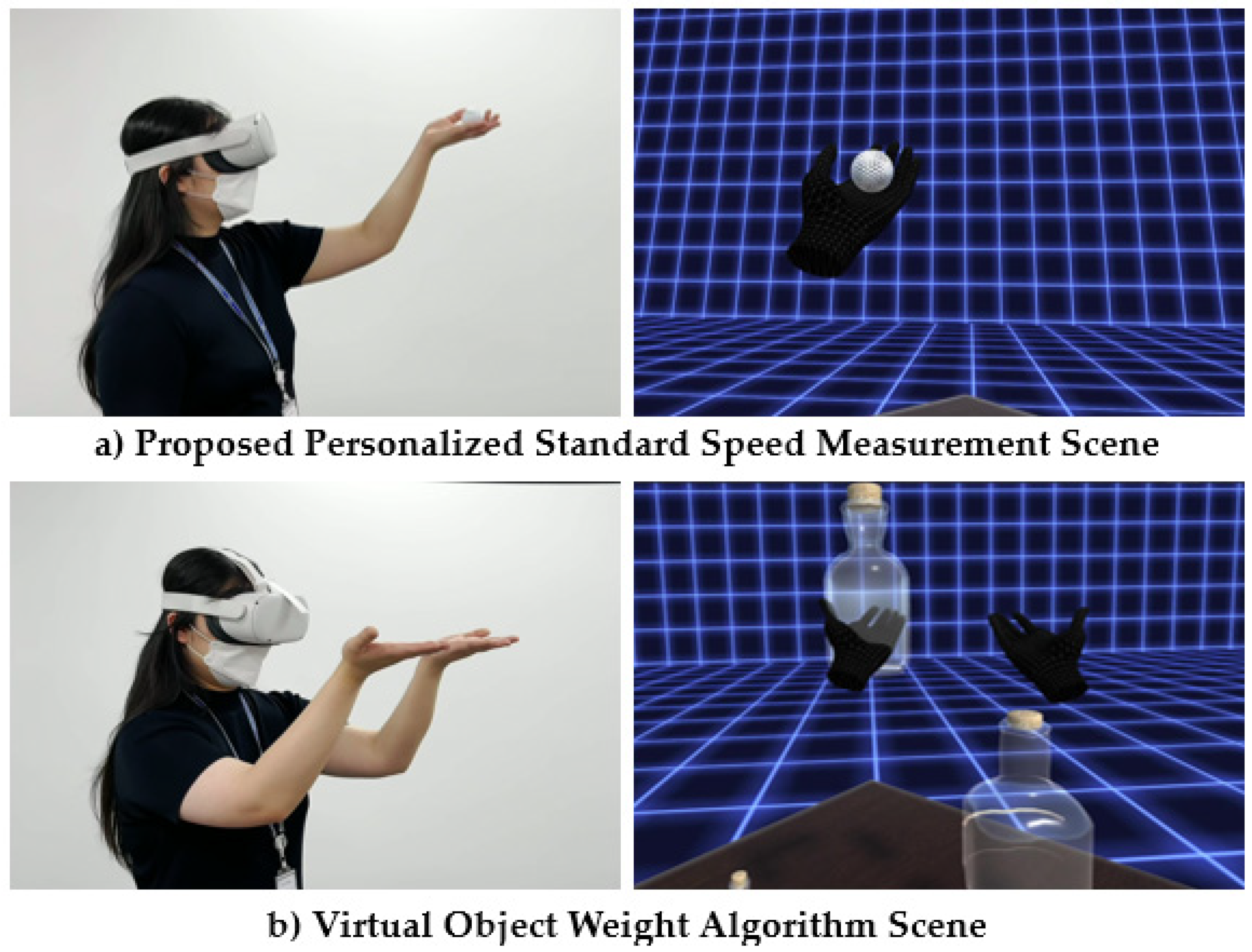

6. Experiment 1

6.1. Procedure

6.2. Results

7. Experiment 2

7.1. Procedure

7.2. Results

8. Discussion

9. Conclusions

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A. Immersion Questionnaire Used in Experiment 1

Appendix B. Immersion Questionnaire Used in Experiment 2

| P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | P11 | P12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4.6 | 5.0 | 3.8 | 3.2 | 4.3 | 4.8 | 5.0 | 4.7 | 2.9 | 3.7 | 4.5 | 4.9 |

| P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | P11 | P12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 3.8 | 4.2 | 3.1 | 4.6 | 4.7 | 2.1 | 3.5 | 4.8 | 4.7 | 4.2 | 4.3 | 4.6 |

| P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | P11 | P12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 7 | 10 | 8 | 10 | 10 | 5 | 8 | 9 | 8 | 8 | 9 | 9 |
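The per-participant scores in the three tables above can be summarized with a short script. This is only a sketch: the section does not state what each table measures, so the keys `table_1` to `table_3` are neutral placeholder names, and the choice of mean, minimum, and maximum as summary statistics is an assumption.

```python
from statistics import mean

# Scores for participants P1..P12, copied from the three tables above.
tables = {
    "table_1": [4.6, 5.0, 3.8, 3.2, 4.3, 4.8, 5.0, 4.7, 2.9, 3.7, 4.5, 4.9],
    "table_2": [3.8, 4.2, 3.1, 4.6, 4.7, 2.1, 3.5, 4.8, 4.7, 4.2, 4.3, 4.6],
    "table_3": [7, 10, 8, 10, 10, 5, 8, 9, 8, 8, 9, 9],
}


def summarize(scores):
    """Return the mean, minimum, and maximum of a list of scores."""
    return {"mean": mean(scores), "min": min(scores), "max": max(scores)}


summary = {name: summarize(scores) for name, scores in tables.items()}

for name, stats in summary.items():
    print(f"{name}: mean={stats['mean']:.2f}, "
          f"min={stats['min']}, max={stats['max']}")
```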

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Son, E.; Song, H.; Nam, S.; Kim, Y. Development of a Virtual Object Weight Recognition Algorithm Based on Pseudo-Haptics and the Development of Immersion Evaluation Technology. Electronics 2022, 11, 2274. https://doi.org/10.3390/electronics11142274
