3.1. The Poisson Equation in One Dimension

Considering only one space variable, $x$, Equation (1) can be written as:

$$\frac{d^2 u(x)}{dx^2} = f(x), \quad x \in \Omega, \tag{4}$$

where $\Omega$ is the solution domain defining the possible $x$ values. A set of $N$ discrete values $x_1, x_2, \ldots, x_N$ of $x$ is selected where the equation is to be solved. Then, the domain is $\Omega = \{x_1, x_2, \ldots, x_N\}$. The boundary of the domain is assumed to be the outer edges: $\partial\Omega = \{x_1, x_N\}$. Some texts use the notation $x_0$ and $x_{N+1}$ to indicate the boundary points and exclude them from the definition of the solution domain. However, in this paper, the edge points of the solution domain are taken as boundary points unless otherwise stated.

The domain points are referred to as $x_i$, $i = 1, 2, \ldots, N$. For simplicity, it is assumed that these points are separated by a constant spacing (i.e., $x_{i+1} - x_i = \Delta x$). The corresponding values of $f$ and $u$ are denoted $f_i$ and $u_i$, respectively (i.e., $f_i = f(x_i)$, $u_i = u(x_i)$). It is convenient to express these sets of discrete points as vectors $\mathbf{x} = [x_1\; x_2\; \cdots\; x_N]^T$, $\mathbf{f} = [f_1\; f_2\; \cdots\; f_N]^T$, and $\mathbf{u} = [u_1\; u_2\; \cdots\; u_N]^T$. Note that transpose notation is used to write the column vectors in a compact manner. With vector notation, the differential equation can be viewed as a linear algebra problem in which the vector $\mathbf{u}$ is to be solved for a given $\mathbf{f}$. The next step is to convert the second-derivative operator into a matrix operator so that a complete linear algebraic system can be formed.

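To convert a continuous derivative operator to a discrete matrix operator, the starting point is the finite difference formulas for calculating the derivatives. The vectors $\mathbf{u}'$ and $\mathbf{u}''$ are defined to denote the first and second derivatives, respectively, corresponding to the points $x_i$ (i.e., $u'_i = du/dx|_{x = x_i}$, and $u''_i = d^2u/dx^2|_{x = x_i}$). Using the second-order center-difference formulas, the first and second derivatives can be expressed as [16]:

$$u'_i = \frac{u_{i+1} - u_{i-1}}{2\Delta x}, \tag{5}$$

$$u''_i = \frac{u_{i+1} - 2u_i + u_{i-1}}{\Delta x^2}. \tag{6}$$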

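Note that there are other higher-order finite difference formulas for the above derivatives. The widely used second-order center-difference formulas are selected for the current analysis. Now, from Equations (5) and (6), focusing only on three consecutive quantities $u_{i-1}$, $u_i$, $u_{i+1}$, the following matrix equations can be written:

$$u'_i = \frac{1}{2\Delta x}\begin{bmatrix} -1 & 0 & 1 \end{bmatrix}\begin{bmatrix} u_{i-1} \\ u_i \\ u_{i+1} \end{bmatrix}, \tag{7}$$

$$u''_i = \frac{1}{\Delta x^2}\begin{bmatrix} 1 & -2 & 1 \end{bmatrix}\begin{bmatrix} u_{i-1} \\ u_i \\ u_{i+1} \end{bmatrix}. \tag{8}$$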

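Equations (7) and (8) work fine for $i = 2$ to $i = N-1$. However, for the boundary points $i = 1$ and $i = N$, the subscripts overflow the bounds, requiring values of $u_0$ and $u_{N+1}$, respectively. Thus, the equations are not valid for those two cases (note that the outer boundary points are $x_1$ and $x_N$). We resort to using forward (for $i = 1$) and backward (for $i = N$) finite difference formulas for these two cases [16]:

$$u'_1 = \frac{-3u_1 + 4u_2 - u_3}{2\Delta x}, \tag{9}$$

$$u''_1 = \frac{2u_1 - 5u_2 + 4u_3 - u_4}{\Delta x^2}, \tag{10}$$

$$u'_N = \frac{3u_N - 4u_{N-1} + u_{N-2}}{2\Delta x}, \tag{11}$$

$$u''_N = \frac{2u_N - 5u_{N-1} + 4u_{N-2} - u_{N-3}}{\Delta x^2}. \tag{12}$$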

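Now, using Equations (7) to (12), the first and second derivatives at all points in the solution space can be calculated. These equations for all $i$ values can be compiled to form the following matrix equations:

$$\mathbf{u}' = \frac{1}{2\Delta x}\begin{bmatrix}
-3 & 4 & -1 & 0 & \cdots & 0 \\
-1 & 0 & 1 & 0 & \cdots & 0 \\
0 & -1 & 0 & 1 & \cdots & 0 \\
\vdots & & \ddots & \ddots & \ddots & \vdots \\
0 & \cdots & 0 & -1 & 0 & 1 \\
0 & \cdots & 0 & 1 & -4 & 3
\end{bmatrix}\mathbf{u}, \tag{13}$$

$$\mathbf{u}'' = \frac{1}{\Delta x^2}\begin{bmatrix}
2 & -5 & 4 & -1 & \cdots & 0 \\
1 & -2 & 1 & 0 & \cdots & 0 \\
0 & 1 & -2 & 1 & \cdots & 0 \\
\vdots & & \ddots & \ddots & \ddots & \vdots \\
0 & \cdots & 0 & 1 & -2 & 1 \\
0 & \cdots & -1 & 4 & -5 & 2
\end{bmatrix}\mathbf{u}. \tag{14}$$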

It should be noted that for nonuniform grids, the term $\Delta x$ would no longer be constant. As a result, in place of the common $\Delta x$ factor in the matrices, every matrix element would have a denominator corresponding to its own $\Delta x$ value. Every other step discussed in this manuscript would remain unchanged. With the help of these matrices, the derivative operations can be expressed in linear algebra form as:

$$\mathbf{u}' = \mathbf{D}_x \mathbf{u}, \tag{15}$$

$$\mathbf{u}'' = \mathbf{D}_{xx} \mathbf{u}. \tag{16}$$

Here $\mathbf{D}_x$ and $\mathbf{D}_{xx}$ are $N \times N$ matrices, as shown in Equations (13) and (14). These are referred to as matrix operators for the first and second derivatives, respectively, as they operate on a vector to calculate its derivatives. Equation (4) can be converted to a discrete linear algebraic system using these matrix operators:

$$\mathbf{D}_{xx}\mathbf{u} = \mathbf{f}. \tag{17}$$

Now the boundary conditions must be implemented in such a way that they can be easily integrated with Equation (17).
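As an illustration of the derivative matrix operators described above, the following minimal sketch builds dense versions of both operators with NumPy and applies them to a test function. The function name `derivative_operators` is hypothetical, and the use of second-order one-sided stencils in the first and last rows is an assumption of this sketch rather than something stated in the text.

```python
import numpy as np


def derivative_operators(n, dx):
    """Dense first- and second-derivative operators on n equally spaced points.

    Interior rows use second-order center differences. The first and last
    rows use second-order one-sided stencils (an assumption of this sketch).
    """
    if n < 4:
        raise ValueError("need at least 4 points for the one-sided stencils")
    d1 = np.zeros((n, n))
    d2 = np.zeros((n, n))
    for i in range(1, n - 1):
        d1[i, [i - 1, i + 1]] = [-1.0, 1.0]
        d2[i, [i - 1, i, i + 1]] = [1.0, -2.0, 1.0]
    d1[0, :3] = [-3.0, 4.0, -1.0]         # forward difference at the first point
    d1[-1, -3:] = [1.0, -4.0, 3.0]        # backward difference at the last point
    d2[0, :4] = [2.0, -5.0, 4.0, -1.0]    # forward difference at the first point
    d2[-1, -4:] = [-1.0, 4.0, -5.0, 2.0]  # backward difference at the last point
    return d1 / (2.0 * dx), d2 / dx**2


x = np.linspace(0.0, 1.0, 11)
dx = x[1] - x[0]
D1, D2 = derivative_operators(x.size, dx)

u = x**2
du = D1 @ u    # approximates du/dx = 2x
d2u = D2 @ u   # approximates d2u/dx2 = 2
```

Because every stencil used here is exact for quadratic polynomials, the test function $u = x^2$ is differentiated to within rounding error, which makes it a convenient sanity check before moving on to the boundary conditions.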

Let us consider a general implementation of boundary conditions in matrix form:

$$\mathbf{B}\mathbf{u} = \mathbf{g}.$$

Here $\mathbf{B}$ is referred to as the boundary operator matrix, and $\mathbf{g}$ is the known/given boundary function vector. For a given problem, the boundary operator matrix is determined by the type of the boundary conditions. For Dirichlet boundary conditions, the boundary function values are directly assigned to the boundary points. In such a case, $\mathbf{B}$ would be an identity matrix, and $\mathbf{g}$ would list the values of $u$ at the boundary. For Neumann boundary conditions, the value of the first derivative is assigned at the boundary points. For this case, $\mathbf{B}$ would be the first-derivative matrix operator $\mathbf{D}_x$, and $\mathbf{g}$ would list the values of $du/dx$ at the boundary. Thus,

$$\mathbf{B} = \begin{cases} \mathbf{I}, & \text{for Dirichlet boundary conditions}, \\ \mathbf{D}_x, & \text{for Neumann boundary conditions}. \end{cases}$$

Here $\mathbf{I}$ is the $N \times N$ identity matrix, and $\mathbf{D}_x$ is expressed in Equation (13). Note that although Equation (19) relates the quantities in such a way that $\mathbf{B}$ operates on the entire $\mathbf{u}$, the equation is valid only at the boundary $\partial\Omega$. In fact, the elements of $\mathbf{g}$ at non-boundary points are unknown. In other words, the locations of the boundaries are not defined explicitly within $\mathbf{B}$. Consequently, deciding which rows of $\mathbf{B}$ come from $\mathbf{I}$ and which come from $\mathbf{D}_x$ requires information about the geometry of the system. In the following paragraphs, we will discuss how to compile the linear algebraic system so that only the relevant rows of $\mathbf{B}$ are used. This is where the remaining geometry information will be encoded. Before that, it is important to discuss how a multi-boundary system would be treated. As previously mentioned, $\partial\Omega$ can be subdivided into smaller regions $\partial\Omega_i$, each with its own boundary conditions. Each $\partial\Omega_i$ corresponds to a set of specific rows in the boundary operator matrix. For such cases, $\mathbf{g}$ would be defined appropriately in a piecewise manner. For mixed boundary conditions, the rows of $\mathbf{B}$ would be a compilation of rows taken from either $\mathbf{I}$ or $\mathbf{D}_x$, depending on which boundary regime the corresponding equation falls under. It should also be noted that for Neumann boundary conditions, an additional constraint such as Equation (3) would be needed to obtain a unique solution.
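The mixed-boundary case described above can be sketched as follows: a Dirichlet condition at the first point and a Neumann condition at the last point, with the boundary operator compiled from an identity row and a first-derivative row. The test problem ($u'' = 2$, $u(0) = 0$, $u'(1) = 2$, exact solution $u = x^2$) and the second-order backward stencil in the Neumann row are assumptions chosen for illustration. Because one end is Dirichlet, no additional constraint is needed for uniqueness here.

```python
import numpy as np

n = 21
x = np.linspace(0.0, 1.0, n)
dx = x[1] - x[0]

# Second-derivative operator: center differences on the interior rows.
# The first and last rows are left empty because they are replaced below.
D2 = np.zeros((n, n))
for i in range(1, n - 1):
    D2[i, i - 1:i + 2] = [1.0, -2.0, 1.0]
D2 /= dx**2

# Boundary operator: identity row (Dirichlet) at the first point,
# backward-difference first-derivative row (Neumann) at the last point.
B = np.zeros((n, n))
B[0, 0] = 1.0
B[-1, -3:] = np.array([1.0, -4.0, 3.0]) / (2.0 * dx)

f = np.full(n, 2.0)   # right-hand side of u'' = 2
g = np.zeros(n)       # only the boundary entries of g are meaningful
g[0] = 0.0            # Dirichlet value u(0) = 0
g[-1] = 2.0           # Neumann value u'(1) = 2

# Swap in the boundary rows to obtain the system matrix and right-hand vector.
boundary = [0, n - 1]
L = D2.copy()
b = f.copy()
L[boundary, :] = B[boundary, :]
b[boundary] = g[boundary]

u = np.linalg.solve(L, b)   # expected to match u = x**2
```

Only the two boundary rows of `B` are ever read, which mirrors the point made in the text that the entries of the boundary function vector at non-boundary points play no role.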

After defining the matrix forms for the PDE and the boundary conditions, the process of integrating those into a single linear algebraic equation can be started. It is possible to simply solve Equation (17) under the constraint defined in Equation (19). However, an alternative and somewhat more elegant approach would be to form a single system matrix that incorporates both the PDE and the boundary conditions in a compact form. By observing the full form of the matrix and vectors in Equation (17), it can be noted that each row of the matrix defines an equation containing a few elements of $\mathbf{u}$. Since some of the elements of $\mathbf{u}$ are on the boundary and follow Equation (19), replacing the corresponding rows of $\mathbf{D}_{xx}$ with those of $\mathbf{B}$ can lead to the formation of a system matrix, $\mathbf{L}$. This can be mathematically described as:

$$\mathbf{L}_{k,:} = \begin{cases} \mathbf{B}_{k,:}, & x_k \in \partial\Omega, \\ (\mathbf{D}_{xx})_{k,:}, & \text{otherwise}, \end{cases} \tag{20}$$

where the subscript $k,:$ denotes the $k$-th row of a matrix.

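Of course, similar changes to the right-hand vector would need to be made as well. The system right-hand vector, $\mathbf{b}$, can be defined as:

$$b_k = \begin{cases} g_k, & x_k \in \partial\Omega, \\ f_k, & \text{otherwise}. \end{cases} \tag{21}$$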

According to this definition, if the $k$-th element of $\mathbf{u}$, $u_k$, corresponds to a Dirichlet boundary point, then the $k$-th row of $\mathbf{L}$ would be the $k$-th row of $\mathbf{I}$. Further, the $k$-th element of $\mathbf{b}$ would be the $k$-th element of $\mathbf{g}$. All rows corresponding to non-boundary points will be identical to the rows of $\mathbf{D}_{xx}$. Now, the system equation can finally be written as:

$$\mathbf{L}\mathbf{u} = \mathbf{b}. \tag{22}$$

Dimension-wise, $\mathbf{L}$ is an $N \times N$ matrix, and $\mathbf{b}$ is an $N \times 1$ vector. This is a fully defined linear system from which $\mathbf{u}$ can be easily solved. Note that the derivative operator, the boundary conditions, and the problem geometry are all encoded within $\mathbf{L}$ and $\mathbf{b}$. Let us consider the 1D case with $x_1$ and $x_N$ being the boundary points. For Dirichlet boundary conditions, this corresponds to $u_1 = g_1$ and $u_N = g_N$, where $g_1$ and $g_N$ are given. Using Equations (20) and (21), the matrix system is constructed and solved. Note that $g_2, \ldots, g_{N-1}$ are not defined and are not needed to form $\mathbf{b}$.
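The row-replacement procedure for the 1D Dirichlet case can be sketched as follows. The helper name `assemble_system` and the test problem ($u'' = -\pi^2 \sin(\pi x)$ on $[0, 1]$ with $u(0) = u(1) = 0$, exact solution $u = \sin(\pi x)$) are assumptions made for illustration. Dense NumPy arrays are used only to keep the sketch short.

```python
import numpy as np


def assemble_system(D2, B, f, g, boundary_idx):
    """Swap the boundary rows of D2 and f for the matching rows of B and g."""
    L = D2.copy()
    b = f.copy()
    L[boundary_idx, :] = B[boundary_idx, :]
    b[boundary_idx] = g[boundary_idx]
    return L, b


n = 101
x = np.linspace(0.0, 1.0, n)
dx = x[1] - x[0]

# Center-difference second-derivative operator. Its first and last rows are
# overwritten by the boundary rows, so their exact form does not matter here.
D2 = (np.diag(np.full(n - 1, 1.0), -1)
      + np.diag(np.full(n, -2.0))
      + np.diag(np.full(n - 1, 1.0), 1)) / dx**2

B = np.eye(n)                        # Dirichlet: boundary operator is the identity
f = -np.pi**2 * np.sin(np.pi * x)    # source term
g = np.zeros(n)                      # only g[0] and g[-1] are used
boundary_idx = np.array([0, n - 1])

L, b = assemble_system(D2, B, f, g, boundary_idx)
u = np.linalg.solve(L, b)
max_error = np.max(np.abs(u - np.sin(np.pi * x)))
```

Changing the boundary condition type or the set of boundary points only changes `B`, `g`, and `boundary_idx`, while `D2` and `assemble_system` stay the same.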

One notable feature of the proposed system matrix construction procedure is that part of it is problem independent. Note that only the $\mathbf{B}$ matrix and the $\mathbf{g}$ vector depend on the boundary condition types at different boundaries. The $\mathbf{D}_{xx}$ matrix is the same for all problems. The construction of $\mathbf{L}$ involves swapping out certain rows of $\mathbf{D}_{xx}$ with those of $\mathbf{B}$. This part depends on the problem geometry (as points on $\partial\Omega$ need to be identified). Thus, modifying the solver for different geometries and boundary conditions involves only selecting the appropriate form of $\mathbf{B}$ and modifying the row-swapping operation applied to $\mathbf{D}_{xx}$ (the same operation must be done for the right-hand vector as well). This is a major benefit over conventional procedures for which the system matrix is constructed from scratch for every geometry/boundary condition.

The matrix operators, having size $N \times N$, can be very large even for moderately large values of $N$. As will be discussed later, the sizes of the matrices are even larger for cases with higher dimensions. Working with such large matrices in full form can be computationally demanding. Fortunately, these matrices are sparse in nature (i.e., the number of non-zero elements is small compared to the full size of the matrix), as can be seen from the forms of $\mathbf{D}_x$ and $\mathbf{D}_{xx}$. As such, when solving numerically, they should be defined as sparse matrices, which significantly reduces memory requirements and speeds up the operations. Almost all programming languages commonly used for numerical analysis have sparse-matrix libraries that can be used for this (e.g., Python has the scipy.sparse package).
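To make the sparsity argument concrete, the following sketch assembles the same kind of 1D Dirichlet system with `scipy.sparse` and compares the number of stored entries with the size of the full matrix. The grid size and the test problem ($u'' = -\pi^2 \sin(\pi x)$, $u(0) = u(1) = 0$) are assumptions chosen for illustration.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve

n = 2001
x = np.linspace(0.0, 1.0, n)
dx = x[1] - x[0]

# Tridiagonal center-difference operator stored in sparse form.
D2 = sp.diags([1.0, -2.0, 1.0], offsets=[-1, 0, 1],
              shape=(n, n), format="csr") / dx**2

# Replace the two boundary rows by identity rows (Dirichlet conditions).
# LIL format is used because it supports efficient element assignment.
L = D2.tolil()
for row, neighbor in ((0, 1), (n - 1, n - 2)):
    L[row, neighbor] = 0.0
    L[row, row] = 1.0
L = L.tocsr()
L.eliminate_zeros()

b = -np.pi**2 * np.sin(np.pi * x)
b[0] = 0.0    # u(0) = 0
b[-1] = 0.0   # u(1) = 0

u = spsolve(L, b)
max_error = np.max(np.abs(u - np.sin(np.pi * x)))

stored_entries = L.nnz   # grows linearly with n
full_entries = n * n     # grows quadratically with n
```

For this grid the sparse matrix stores fewer than $3N$ values, whereas the full matrix would hold $N^2$ values, most of them zero.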

3.2. The Poisson Equation in Two Dimensions

The 2D Poisson equation in a Cartesian

plane is given by:

Here the solution

spans a 2D space. The matrix analysis for the 1D Poisson equation discussed in

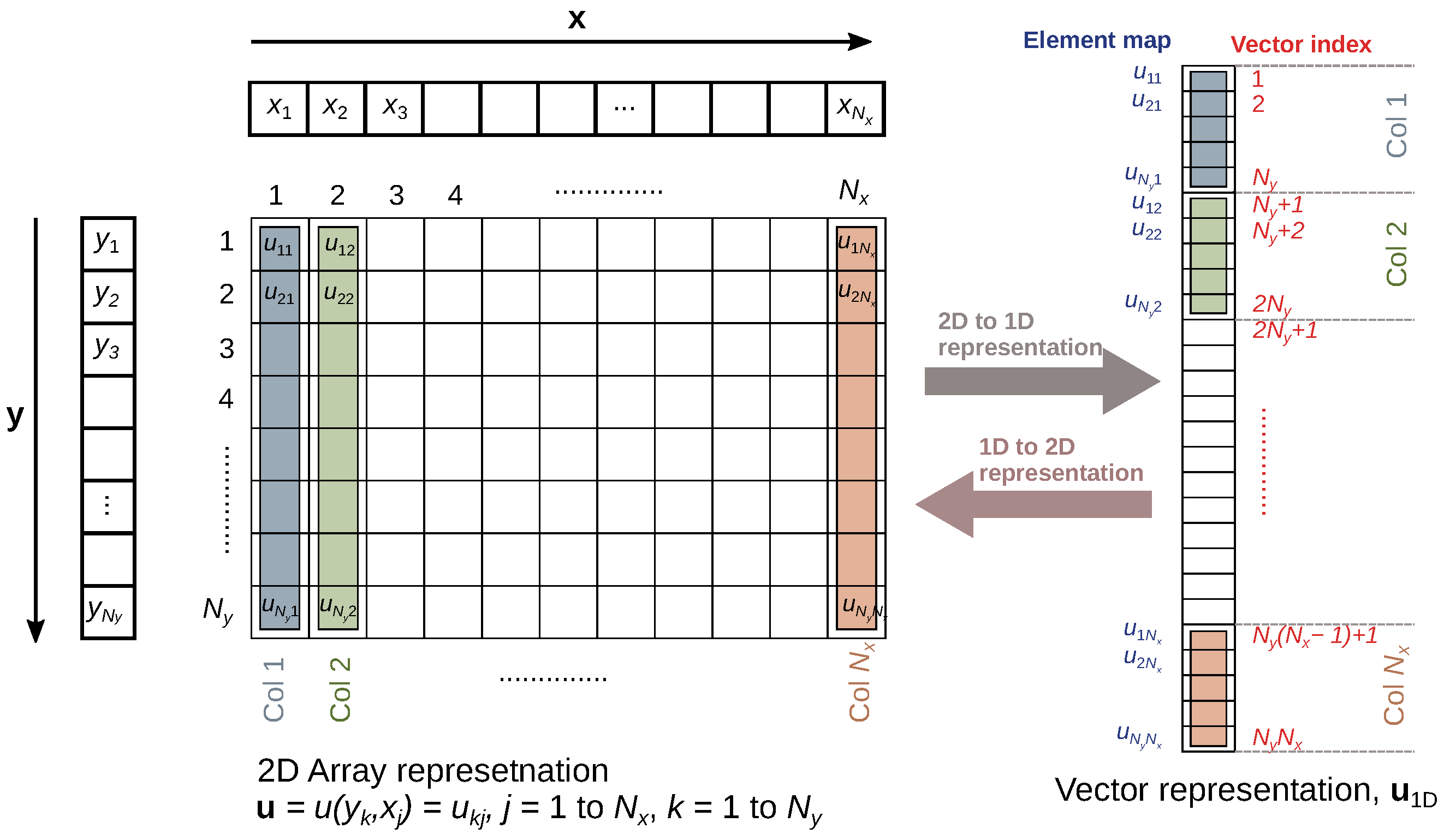

Section 3.1 can be extended for this 2D case. The matrix operators developed for the 1D case can be used as the building blocks. The key idea is to convert the 2D problem into an equivalent 1D problem. This is done by mapping the 2D solution grid into a 1D vector, solving the 1D vector, and then remapping it to the original 2D space. The main tasks are developing the mapping algorithm, figuring out how the boundary conditions are mapped from 2D to 1D space, and assembling the corresponding matrix operators.

The 2D solution space in the $xy$-Cartesian plane is defined as $\Omega = [x_{\min}, x_{\max}] \times [y_{\min}, y_{\max}]$. This denotes a continuous rectangular region with $x$ values spanning from $x_{\min}$ to $x_{\max}$ and $y$ values spanning from $y_{\min}$ to $y_{\max}$. Note that the analysis would hold for any shape of solution space. In discrete space, a grid of equally spaced $N_x \times N_y$ points $(x_j, y_k)$ is considered, where $j = 1, 2, \ldots, N_x$ and $k = 1, 2, \ldots, N_y$. The $x$ and $y$ spacings are denoted $\Delta x$ and $\Delta y$, respectively. These points can be imagined as elements of a matrix with $N_y$ rows and $N_x$ columns, as shown in Figure 1. Any point can be addressed by the matrix coordinate $(k, j)$, where $k$ refers to the row number and $j$ refers to the column number. The solution $u$ at point $(x_j, y_k)$ is denoted as $u_{k,j}$. The order of the indices $j$ and $k$ is selected in this way to be consistent with the syntax of common numerical coding languages (e.g., Python and MATLAB).

Now, $u_{k,j}$, being 2D, is not an ideal input for a matrix operator. The elements of the 2D grid are rearranged by stacking the columns on top of each other to form one large $N_x N_y \times 1$ vector, referred to as $\mathbf{u}$. This is shown in Figure 1 [17]. Each element of this $\mathbf{u}$ corresponds to a specific grid point $(x_j, y_k)$ and the corresponding solution $u_{k,j}$. The elements of $\mathbf{u}$ can be addressed by a single index $i = 1, 2, \ldots, N_x N_y$. Note that there are many other valid approaches to mapping a 2D matrix into a 1D vector. This discussion is limited to the column-stacking method, which is relatively simple and intuitive. For this mapping, the 2D and 1D indices are related as:

$$i = (j - 1) N_y + k. \tag{24}$$

The opposite transformation is:

$$k = \left[(i - 1) \bmod N_y\right] + 1, \tag{25}$$

$$j = \frac{i - k}{N_y} + 1. \tag{26}$$

Here mod refers to the modulo operation. Using these relationships, it is possible to transfer back and forth between the 2D representation and the equivalent 1D representation. This process is referred to as mapping. The mapping process is shown in Figure 1.
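The column-stacking map and its inverse can be sketched in a few lines. The function names `to_linear` and `to_grid` are hypothetical, and the 1-based index formulas are the ones assumed in this sketch for a grid with `n_y` rows. With NumPy, the same column stacking is obtained by flattening and reshaping in column-major (`order="F"`) order.

```python
import numpy as np


def to_linear(k, j, n_y):
    """Map 1-based (row k, column j) to the 1-based index of the stacked vector."""
    return (j - 1) * n_y + k


def to_grid(i, n_y):
    """Map a 1-based stacked-vector index back to 1-based (row k, column j)."""
    k = (i - 1) % n_y + 1
    j = (i - k) // n_y + 1
    return k, j


n_y, n_x = 3, 4
U = np.arange(1, n_y * n_x + 1).reshape(n_y, n_x)   # arbitrary 2D data

u_vec = U.flatten(order="F")                    # stack the columns
U_back = u_vec.reshape((n_y, n_x), order="F")   # undo the stacking

# Check that the index formulas agree with the NumPy column stacking.
consistent = all(
    u_vec[to_linear(k, j, n_y) - 1] == U[k - 1, j - 1]
    and to_grid(to_linear(k, j, n_y), n_y) == (k, j)
    for k in range(1, n_y + 1)
    for j in range(1, n_x + 1)
)
```

In practice the explicit index functions are mostly useful for locating individual grid points (for example, boundary points) inside the stacked vector, while whole arrays are converted with `flatten` and `reshape`.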

After obtaining $\mathbf{u}$ from the mapping process, the corresponding derivative matrix operators need to be formulated. The matrix operators for the first and second partial derivatives with respect to $x$ are expressed as $\mathbf{D}_x^{\mathrm{2D}}$ and $\mathbf{D}_{xx}^{\mathrm{2D}}$, respectively. Similar quantities with respect to $y$ are expressed as $\mathbf{D}_y^{\mathrm{2D}}$ and $\mathbf{D}_{yy}^{\mathrm{2D}}$, respectively. The superscripts denote that these are 2D matrix operators. For the 1D case discussed in Section 3.1, the consecutive elements of the vector $\mathbf{u}$ corresponded to consecutive points along a 1D spatial axis. For the 2D case, however, the relation between the elements of $\mathbf{u}$ and the corresponding spatial points is more complex. Every block of $N_y$ consecutive elements in $\mathbf{u}$ (shown in different colors in Figure 1) represents a column in the 2D representation. They have consecutive $y$ values for a given $x$ value. Within this block, matrix operators of similar form as Equations (13) and (14) would work for the $y$ derivative. As one moves from one of these blocks to the next (moving from one color to another in Figure 1), the $y$ values restart from the beginning and the $x$ values increment by one step. The $x$ derivative operator must operate on this interleaved space and accurately pick out the correct elements for a differentiation operation.

First, the $y$ derivatives are considered, as they are simpler to understand than the $x$ derivatives for this mapping process. The $y$ partial derivatives in vector form are denoted $\mathbf{u}_y$ and $\mathbf{u}_{yy}$. The 2D matrix operator for the $y$ partial derivative must be such that it sifts each block of $N_y$ elements and multiplies it by the 1D-derivative matrix operator. As it goes through each of the columns, the results should be stacked in a column to maintain the same spatial mapping as $\mathbf{u}$. This can be done by forming an $N_x \times N_x$ block diagonal matrix whose diagonal blocks are copies of the 1D $\mathbf{D}_y$ matrix operator [8,17] ($\mathbf{D}_y$ has the same form as $\mathbf{D}_x$ from Equation (13), with $\Delta x$ replaced by $\Delta y$). The process is shown graphically in Figure 2. Each rectangular block in $\mathbf{D}_y^{\mathrm{2D}}$ is an $N_y \times N_y$ matrix. The zero blocks are zero matrices of the same size. Each block operates on an $N_y$-sized block of $\mathbf{u}$ (represented as different-colored columns in the figure). This is shown in the figure by connecting a $\mathbf{D}_y$ matrix block with the corresponding $\mathbf{u}$ column block by curved lines. For a given block-row (the rows drawn in the figure with black lines) of the matrix, all elements of $\mathbf{u}$ except a specific block of $N_y$ consecutive elements are ignored (i.e., multiplied by zeros). Thus, the derivative operation is performed for each of these column blocks as one moves from one block-row of the matrix to another. This matrix multiplication produces a column vector representing the $y$ derivatives mapped in the same format as $\mathbf{u}$. The second-derivative matrix, $\mathbf{D}_{yy}^{\mathrm{2D}}$, can be similarly constructed by replacing $\mathbf{D}_y$ with $\mathbf{D}_{yy}$ in each block.

Let us now consider the matrix operators for the $x$ partial derivatives, $\mathbf{D}_x^{\mathrm{2D}}$ and $\mathbf{D}_{xx}^{\mathrm{2D}}$. When multiplied with $\mathbf{u}$, these matrices produce the partial derivatives in vector form denoted $\mathbf{u}_x$ and $\mathbf{u}_{xx}$, respectively. First, the locations of the elements representing consecutive $x$ values are identified in the 1D-mapped vectors. The $x$ values increase by one step when moving from left to right in a given row (moving by one column) in the 2D representation (Figure 1). Movement of one space along a row translates into movement by $N_y$ elements in the corresponding 1D-mapped vector. A block matrix construction is again used to form $\mathbf{D}_x^{\mathrm{2D}}$ from $\mathbf{D}_x$. This time, however, each row of $\mathbf{D}_x$ must operate on elements of $\mathbf{u}$ that are $N_y$ spaces apart. This can be done by adding $N_y - 1$ zeros after each element in a row of $\mathbf{D}_x$ and then copying and arranging the rows appropriately [17]. The required configuration is shown in Figure 3. The elements of $\mathbf{D}_x$ are repeated in the diagonal of each block in $\mathbf{D}_x^{\mathrm{2D}}$. Thus, each block is a diagonal matrix. Each block sifts out certain elements of $\mathbf{u}$, as shown by the curved lines in the figure. Careful observation shows that these elements are indeed the ones required to calculate the derivatives at that point. A similar block matrix can be constructed for $\mathbf{D}_{xx}^{\mathrm{2D}}$ by replacing $\mathbf{D}_x$ elements with $\mathbf{D}_{xx}$ elements.

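Now that the matrix operators have been defined, their construction using simple mathematical operations is discussed. A powerful tool in constructing block matrices is the Kronecker product [4,18]. The Kronecker product between two matrices $\mathbf{A}$ (size $m \times n$) and $\mathbf{B}$ (size $p \times q$) is defined as:

$$\mathbf{A} \otimes \mathbf{B} = \begin{bmatrix} a_{11}\mathbf{B} & \cdots & a_{1n}\mathbf{B} \\ \vdots & \ddots & \vdots \\ a_{m1}\mathbf{B} & \cdots & a_{mn}\mathbf{B} \end{bmatrix}, \tag{27}$$

where $a_{rs}$ represents the $(r, s)$ element of matrix $\mathbf{A}$, and $\otimes$ represents the Kronecker product operation. Note that each term in Equation (27) is a matrix itself. The $mp \times nq$ block matrix is formed by tiling $\mathbf{B}$ matrices after multiplying them with an element of the $\mathbf{A}$ matrix. It is not difficult to see that $\mathbf{D}_y^{\mathrm{2D}}$ can be constructed from the Kronecker product of $\mathbf{D}_y$ and an identity matrix of size $N_x$ (denoted as $\mathbf{I}_{N_x}$):

$$\mathbf{D}_y^{\mathrm{2D}} = \mathbf{I}_{N_x} \otimes \mathbf{D}_y.$$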

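Careful observation of Figure 3 and Equation (27) suggests that $\mathbf{D}_x^{\mathrm{2D}}$ can also be computed using the Kronecker product of $\mathbf{D}_x$ and an identity matrix of size $N_y$ (denoted $\mathbf{I}_{N_y}$):

$$\mathbf{D}_x^{\mathrm{2D}} = \mathbf{D}_x \otimes \mathbf{I}_{N_y}.$$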

The second-derivative matrices are calculated identically:

$$\mathbf{D}_{xx}^{\mathrm{2D}} = \mathbf{D}_{xx} \otimes \mathbf{I}_{N_y},$$

$$\mathbf{D}_{yy}^{\mathrm{2D}} = \mathbf{I}_{N_x} \otimes \mathbf{D}_{yy}.$$

The overall sizes of these matrices are $N_x N_y \times N_x N_y$. This can be very large even for moderate values of $N_x$ and $N_y$. However, the sparsity of the matrices from the 1D case is maintained in the 2D case as well. Even more so than with the 1D case, any numerical implementation of the 2D Poisson equation should employ sparse-matrix algorithms.
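The Kronecker-product construction can be checked numerically with a short sketch. Here `np.kron` is used on small dense matrices for readability (for realistic grid sizes, `scipy.sparse.kron` provides the sparse equivalent). The helper `d1`, the grid sizes, and the test function $u = x^2 + xy + 3y^2$ are assumptions chosen for illustration; the one-sided end rows are also an assumption of this sketch.

```python
import numpy as np


def d1(n, h):
    """1D first-derivative operator: center differences, one-sided at the ends."""
    d = np.zeros((n, n))
    for i in range(1, n - 1):
        d[i, i - 1], d[i, i + 1] = -1.0, 1.0
    d[0, :3] = [-3.0, 4.0, -1.0]
    d[-1, -3:] = [1.0, -4.0, 3.0]
    return d / (2.0 * h)


n_x, n_y = 6, 5
x = np.linspace(0.0, 1.0, n_x)
y = np.linspace(0.0, 2.0, n_y)
X, Y = np.meshgrid(x, y)   # arrays of shape (n_y, n_x): rows follow y, columns follow x

# 2D operators acting on the column-stacked vector.
Dx_2d = np.kron(d1(n_x, x[1] - x[0]), np.eye(n_y))   # x derivative
Dy_2d = np.kron(np.eye(n_x), d1(n_y, y[1] - y[0]))   # y derivative (block diagonal)

U = X**2 + X * Y + 3.0 * Y**2
u_vec = U.flatten(order="F")   # stack the columns

ux = (Dx_2d @ u_vec).reshape((n_y, n_x), order="F")   # approximates 2x + y
uy = (Dy_2d @ u_vec).reshape((n_y, n_x), order="F")   # approximates x + 6y
```

The order of the two factors in each Kronecker product is tied to the column-stacking map: with a row-stacking map the roles of the identity and the derivative matrix would be exchanged.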

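The step after constructing the differentiation operators is the formulation of the boundary operator matrix. The 2D boundary operator matrix, $\mathbf{B}^{\mathrm{2D}}$, is defined in the same way as the one discussed in the previous section for the one-dimensional case. Equation (19) would be slightly modified to accommodate the larger two-dimensional matrices:

$$\mathbf{B}^{\mathrm{2D}} = \begin{cases} \mathbf{I}_{N_x N_y}, & \text{for Dirichlet boundary conditions}, \\ \mathbf{D}_x^{\mathrm{2D}} \text{ or } \mathbf{D}_y^{\mathrm{2D}}, & \text{for Neumann boundary conditions}, \end{cases}$$

where $\mathbf{I}_{N_x N_y}$ is an identity matrix of size $N_x N_y$. As there are two independent variables, there are two possible Neumann boundary conditions that can be applied. Which derivative to use for the Neumann boundary is determined by the problem statement. Having defined the boundary operator, construction of the system matrix operator is straightforward:

$$\mathbf{L}^{\mathrm{2D}}_{i,:} = \begin{cases} \mathbf{B}^{\mathrm{2D}}_{i,:}, & (x_j, y_k) \in \partial\Omega, \\ \left(\mathbf{D}_{xx}^{\mathrm{2D}} + \mathbf{D}_{yy}^{\mathrm{2D}}\right)_{i,:}, & \text{otherwise}. \end{cases}$$

Here the subscript $i,:$ denotes the $i$-th row of a matrix, and $i$ is the 1D-mapped index of the grid point $(x_j, y_k)$.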

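The right-hand vector follows a definition similar to that of the 1D case:

$$b^{\mathrm{2D}}_i = \begin{cases} g_i, & (x_j, y_k) \in \partial\Omega, \\ f_i, & \text{otherwise}, \end{cases}$$

where $\mathbf{f}$ and $\mathbf{g}$ are 1D-mapped versions of the corresponding two-dimensional quantities, $f_{k,j}$ and $g_{k,j}$, respectively. The algorithm used for the 1D mapping here must be identical to the one used for calculating $\mathbf{u}$. Finally, the system equation is constructed as:

$$\mathbf{L}^{\mathrm{2D}} \mathbf{u} = \mathbf{b}^{\mathrm{2D}}.$$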

Just like the one-dimensional case, this is a simple linear algebraic equation that can be solved easily. It should be noted that the solution is in a 1D-mapped vector form. Thus, an additional step of converting it back to the 2D form is necessary. This can be done using Equations (25) and (26).
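The complete 2D procedure (operator construction, boundary row replacement, solution, and remapping) can be sketched with `scipy.sparse` as follows. The test problem is an assumption chosen for illustration: $\nabla^2 u = -2\pi^2 \sin(\pi x)\sin(\pi y)$ on the unit square with $u = 0$ on all four edges, whose exact solution is $u = \sin(\pi x)\sin(\pi y)$. The row replacement is written here as a product with diagonal selector matrices, which is one possible way (not necessarily the authors') of swapping rows in sparse form.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve


def d2_central(n, h):
    """Sparse 1D second-derivative operator (end rows are replaced later)."""
    return sp.diags([1.0, -2.0, 1.0], offsets=[-1, 0, 1],
                    shape=(n, n), format="csr") / h**2


n_x, n_y = 41, 31
x = np.linspace(0.0, 1.0, n_x)
y = np.linspace(0.0, 1.0, n_y)
X, Y = np.meshgrid(x, y)   # shape (n_y, n_x)

# 2D Laplacian acting on the column-stacked vector.
laplacian = (sp.kron(d2_central(n_x, x[1] - x[0]), sp.identity(n_y), format="csr")
             + sp.kron(sp.identity(n_x), d2_central(n_y, y[1] - y[0]), format="csr"))

# Geometry: mark the four edges of the rectangle as boundary points.
on_boundary = np.zeros((n_y, n_x), dtype=bool)
on_boundary[[0, -1], :] = True
on_boundary[:, [0, -1]] = True
mask = on_boundary.flatten(order="F").astype(float)

# Dirichlet boundary operator and row replacement via diagonal selectors.
B_2d = sp.identity(n_x * n_y, format="csr")
L_2d = sp.diags([1.0 - mask], [0]) @ laplacian + sp.diags([mask], [0]) @ B_2d

exact = np.sin(np.pi * X) * np.sin(np.pi * Y)
f_vec = (-2.0 * np.pi**2 * exact).flatten(order="F")
g_vec = np.zeros(n_x * n_y)                # u = 0 on the boundary
b_2d = np.where(mask > 0, g_vec, f_vec)    # boundary entries from g, others from f

u_vec = spsolve(L_2d.tocsc(), b_2d)
U = u_vec.reshape((n_y, n_x), order="F")   # map back to the 2D grid
max_error = np.max(np.abs(U - exact))
```

Changing the geometry or the boundary condition type only affects `on_boundary`, `B_2d`, and `g_vec`; the Laplacian and the mapping steps are reused unchanged.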

It should be noted that only the $\mathbf{B}^{\mathrm{2D}}$ matrix, the $\mathbf{g}$ vector, and the construction of $\mathbf{L}^{\mathrm{2D}}$ and $\mathbf{b}^{\mathrm{2D}}$ are geometry and boundary condition dependent. The rest of the matrices and vectors are problem independent. Thus, configuring the solver for different geometries and boundary conditions is straightforward.