Framework for Improved Sentiment Analysis via Random Minority Oversampling for User Tweet Review Classification

Abstract

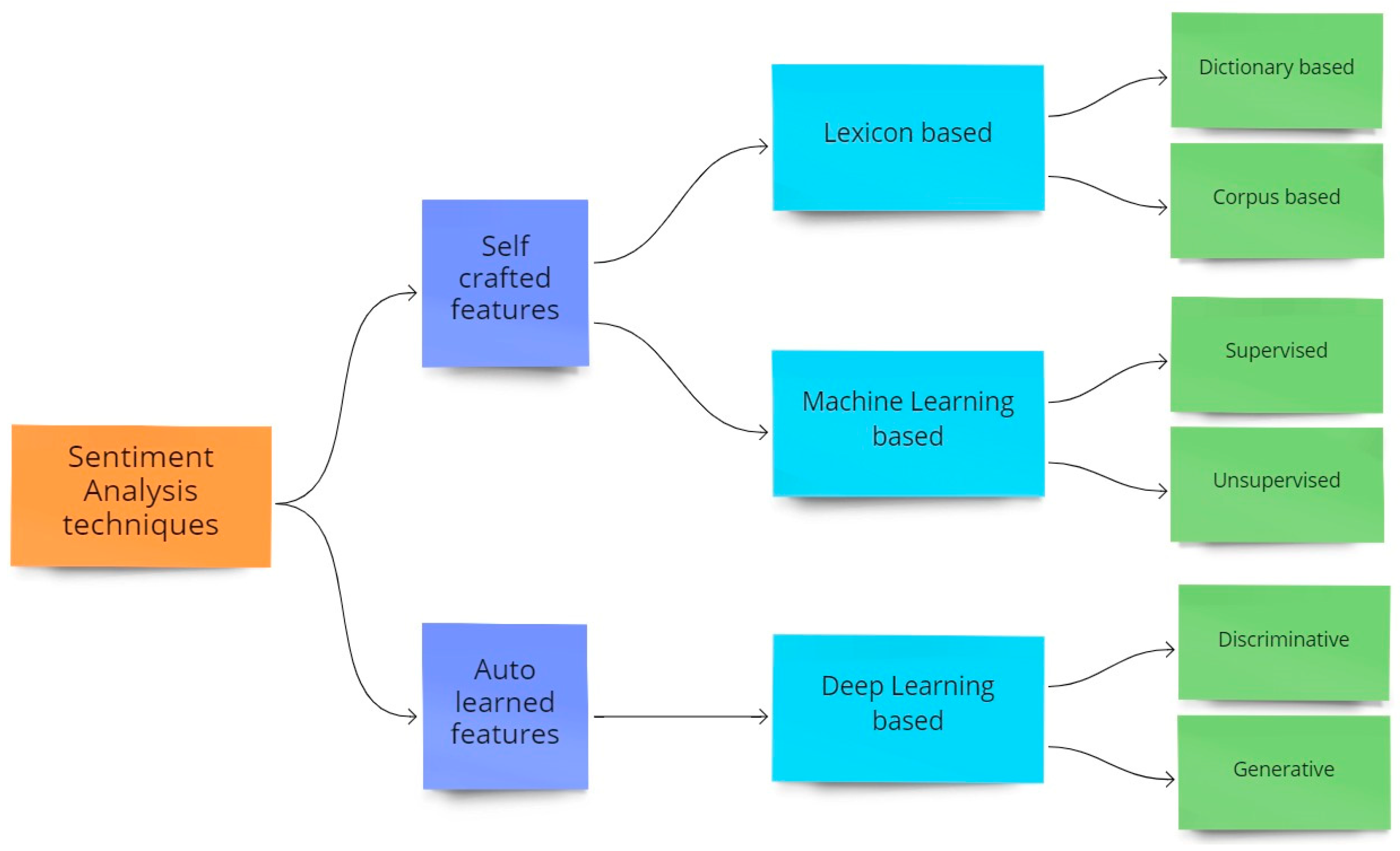

1. Introduction

- Presenting a comprehensive framework for improved sentiment analysis that is specifically designed for imbalanced datasets;

- Handling imbalanced data in a multiclass classification problem through random oversampling;

- Selecting the best features for sentiment analysis. This task must be performed manually, as it is highly dependent on the dataset;

- Finding the best preprocessing order for tweet text so that oversampling can be performed accurately without causing overfitting;

- Measuring the actual impact of oversampling by comparing its results with those obtained on non-oversampled data.

2. Literature Review

3. Methodology

3.1. Proposed Framework

3.1.1. Feature Selection and Combination

3.1.2. Text Preprocessing

- Converting all characters to lowercase

- Expanding contractions

- Tokenization

- Removing words shorter than two characters

- Removing repeating words

- Removing punctuation

- Removing digits

- Slang correction

- Removing stop-words

- Spell correction
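The preprocessing order above can be sketched as a single Python function. This is a minimal illustration under stated assumptions, not the authors' implementation: the contraction, slang, stop-word, and spelling dictionaries are tiny hypothetical stand-ins for real resources, tokenization is a plain whitespace split, and "removing repeating words" is assumed to mean collapsing consecutive duplicates.

```python
import re
import string

# Hypothetical, deliberately tiny lookup tables used only for illustration.
CONTRACTIONS = {"you're": "you are", "it's": "it is", "don't": "do not", "can't": "cannot"}
SLANG = {"nyc": "new york city", "min": "minutes", "thx": "thanks"}
STOP_WORDS = {"it", "was", "and", "we", "an", "you", "are", "is", "the", "on", "for", "to"}
SPELLING = {"expirince": "experience"}
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def preprocess(tweet):
    """Apply the listed preprocessing steps, in order, and return a token list."""
    # 1. Lowercase, and map the typographic apostrophe to ASCII so contractions match.
    text = tweet.lower().replace("\u2019", "'")
    # 2. Expand contractions (whole-word matches only).
    for short, full in CONTRACTIONS.items():
        text = re.sub(r"\b" + re.escape(short) + r"\b", full, text)
    # 3. Tokenize on whitespace.
    tokens = text.split()
    # 4. Remove words shorter than two characters.
    tokens = [t for t in tokens if len(t) >= 2]
    # 5. Remove repeating words (assumed: consecutive duplicates only).
    deduped = [t for i, t in enumerate(tokens) if i == 0 or t != tokens[i - 1]]
    # 6. Remove punctuation characters from each token.
    tokens = [t.translate(PUNCT_TABLE) for t in deduped]
    # 7. Remove digits, then drop tokens that became empty.
    tokens = [re.sub(r"\d", "", t) for t in tokens]
    tokens = [t for t in tokens if t]
    # 8. Slang correction; one slang term may expand to several words.
    tokens = [w for t in tokens for w in SLANG.get(t, t).split()]
    # 9. Remove stop-words.
    tokens = [t for t in tokens if t not in STOP_WORDS]
    # 10. Spell correction by dictionary lookup.
    return [SPELLING.get(t, t) for t in tokens]


print(preprocess("it was absolutely amazing, and we reached an hour early. You\u2019re great."))
```

Run on the sample tweet used in the preprocessing examples, the sketch returns `['absolutely', 'amazing', 'reached', 'hour', 'early', 'great']`. A production pipeline would swap the dictionaries for full contraction, slang, stop-word, and spell-checking resources while keeping the same step order.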

3.1.3. Text Normalization

3.1.4. Word Representation

3.1.5. Oversampling
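Random minority oversampling, as named in the title, duplicates randomly chosen samples of the under-represented classes until the class counts match. The sketch below assumes the target size is that of the largest class and that sampling is done with replacement; the function name and seed are illustrative choices, not details from the paper. It uses only the standard library; the `RandomOverSampler` class in the imbalanced-learn package implements the same idea.

```python
import random
from collections import Counter, defaultdict


def random_oversample(texts, labels, seed=0):
    """Randomly duplicate minority-class samples until all classes are equal in size."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for text, label in zip(texts, labels):
        by_class[label].append(text)
    target = max(len(items) for items in by_class.values())

    rows = []
    for label, items in by_class.items():
        # Keep every original sample, then draw with replacement to close the gap.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        rows.extend((text, label) for text in items + extra)
    rng.shuffle(rows)  # avoid long runs of a single class
    new_texts = [text for text, _ in rows]
    new_labels = [label for _, label in rows]
    return new_texts, new_labels


texts = ["late again", "lost bag", "rude staff", "what gate?", "great crew"]
labels = ["negative", "negative", "negative", "neutral", "positive"]
new_texts, new_labels = random_oversample(texts, labels)
print(Counter(new_labels))
```

Because the added samples are exact copies, a common precaution is to split the data into training and test sets first and oversample only the training portion; otherwise copies of the same tweet can land on both sides of the split and inflate test scores.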

3.1.6. Sentiment Classification

4. Results and Discussion

4.1. Dataset

- Twitter US Airline Sentiment

Discussion of the Dataset

4.2. Experimental Setup

4.3. Evaluation Metrics

- True Positive (TP): the number of +ve instances correctly predicted as +ve.

- True Negative (TN): the number of −ve instances correctly predicted as −ve.

- False Positive (FP): the number of −ve instances incorrectly predicted as +ve.

- False Negative (FN): the number of +ve instances incorrectly predicted as −ve.
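In a multiclass setting these counts are taken one class at a time: each sentiment class in turn plays the role of the +ve class, and the remaining classes act as −ve. Precision is TP/(TP + FP), recall is TP/(TP + FN), and the F1-score is their harmonic mean; TN is not needed for these three scores. The sketch below computes per-class scores and overall accuracy from label lists using only the standard library. The function name is hypothetical; scikit-learn's `classification_report` produces the same kind of per-class figures.

```python
def per_class_report(y_true, y_pred):
    """Return {class: (precision, recall, f1)} using one-vs-rest counts, plus accuracy."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    report = {}
    for c in classes:
        # Treat class c as the +ve class and every other class as -ve.
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[c] = (precision, recall, f1)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    return report, accuracy


y_true = ["negative", "negative", "negative", "neutral", "neutral", "positive"]
y_pred = ["negative", "negative", "neutral", "neutral", "positive", "positive"]
report, accuracy = per_class_report(y_true, y_pred)
for label, (p, r, f) in report.items():
    print(f"{label:>8}  P={p:.2f}  R={r:.2f}  F1={f:.2f}")
print(f"accuracy = {accuracy:.2%}")
```

For the toy labels above, the negative class gets precision 1.00, recall 0.67, and F1 0.80, and overall accuracy is 4 of 6 predictions.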

4.4. Classification Results

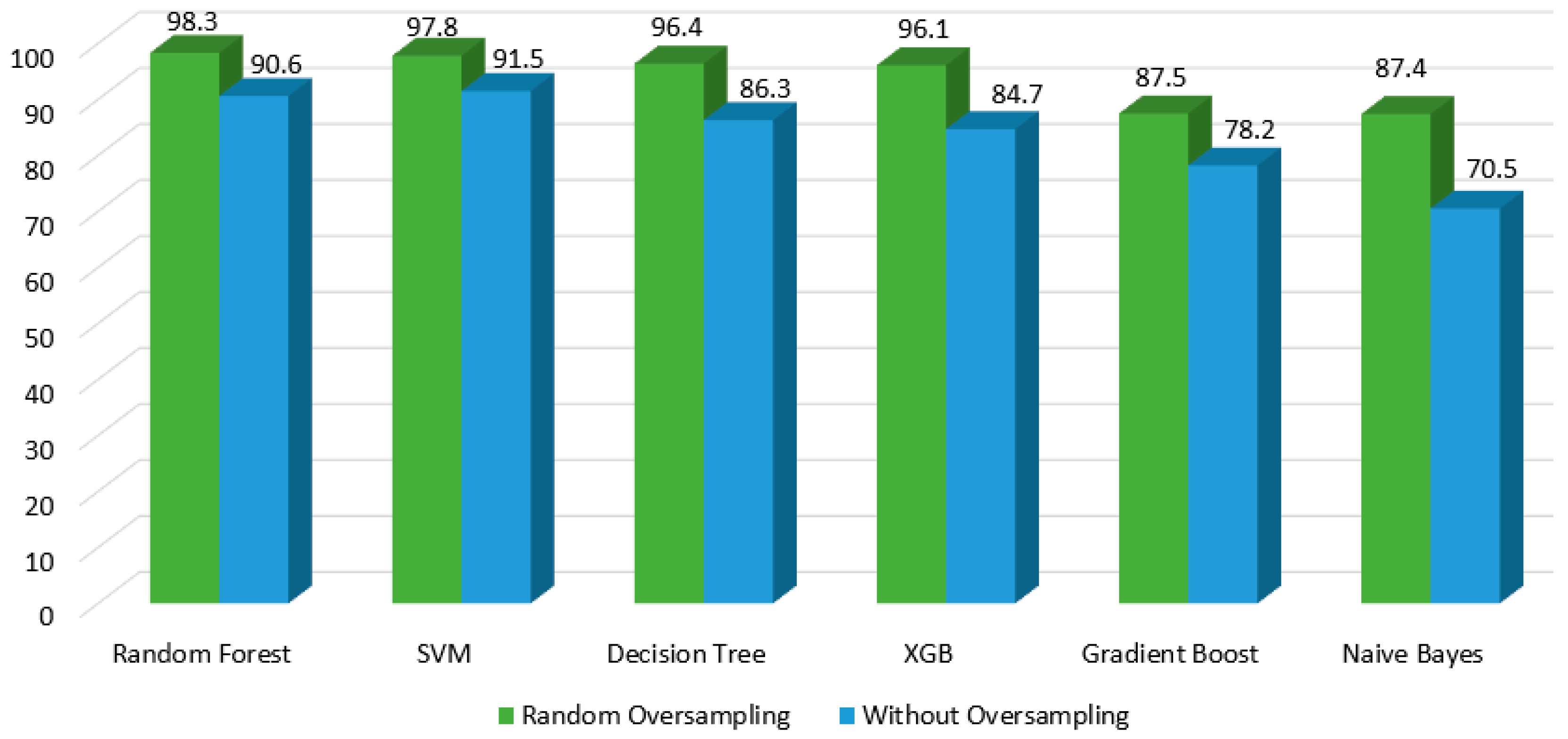

4.5. Results Comparison: Oversampling vs. No Oversampling

5. Result Visualization and Discussion

5.1. Word Cloud of Positive Tweets after Processing

5.2. Word Cloud of Neutral Tweets after Processing

5.3. Word Cloud of Negative Tweets after Processing
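A word cloud sizes each word by how often it occurs, so the word clouds in Sections 5.1 to 5.3 rest on per-class word counts over the processed tweets. The sketch below computes those counts with `collections.Counter`. The token lists are invented examples, and the paper does not state which word-cloud tool was used; the third-party `wordcloud` package, for instance, accepts such a frequency mapping through `WordCloud.generate_from_frequencies`.

```python
from collections import Counter


def word_frequencies(token_lists):
    """Count every token across one class's processed tweets."""
    return Counter(token for tokens in token_lists for token in tokens)


# Invented, already-processed positive tweets for illustration.
positive_tweets = [
    ["great", "crew", "thanks"],
    ["thanks", "great", "flight"],
    ["thanks", "smooth", "landing"],
]
freqs = word_frequencies(positive_tweets)
print(freqs.most_common(2))  # → [('thanks', 3), ('great', 2)]
```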

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, C.; Zhang, P. The Evolution of Social Commerce: The People, Management, Technology, and Information Dimensions. Commun. Assoc. Inf. Syst. 2012, 31, 105–127.

- Davies, A.; Ghahramani, Z. Language-Independent Bayesian Sentiment Mining of Twitter. In Proceedings of the Fifth International Workshop on Social Network Mining and Analysis (SNAKDD 2011), San Diego, CA, USA, 21–24 August 2011; pp. 99–106.

- Pang, B.; Lee, L. Opinion Mining and Sentiment Analysis. Found. Trends Inf. Retr. 2008, 2, 1–135.

- Taboada, M.; Brooke, J.; Tofiloski, M.; Voll, K.; Stede, M. Lexicon-Based Methods for Sentiment Analysis. 2011. Available online: http://direct.mit.edu/coli/article-pdf/37/2/267/1798865/coli_a_00049.pdf (accessed on 25 July 2022).

- Jain, P.K.; Pamula, R.; Srivastava, G. A systematic literature review on machine learning applications for consumer sentiment analysis using online reviews. Comput. Sci. Rev. 2021, 41, 100413.

- Yadav, A.; Vishwakarma, D.K. Sentiment analysis using deep learning architectures: A review. Artif. Intell. Rev. 2020, 53, 4335–4385.

- Arabnia, H.R.; Deligiannidis, L.; Hashemi, R.R.; Tinetti, F.G. Information and Knowledge Engineering; Center for the Study of Race and Ethnicity in America: Providence, RI, USA, 2018.

- Rustam, F.; Khalid, M.; Aslam, W.; Rupapara, V.; Mehmood, A.; Choi, G.S. A performance comparison of supervised machine learning models for COVID-19 tweets sentiment analysis. PLoS ONE 2021, 16, e0245909.

- Vashishtha, S.; Susan, S. Fuzzy rule based unsupervised sentiment analysis from social media posts. Expert Syst. Appl. 2019, 138, 112834.

- Wassan, S.; Chen, X.; Shen, T.; Waqar, M.; Jhanjhi, N.Z. Amazon Product Sentiment Analysis using Machine Learning Techniques. Rev. Argent. Clín. Psicol. 2021, 30, 695–703.

- Korovkinas, K.; Danėnas, P.; Garšva, G. SVM and k-Means Hybrid Method for Textual Data Sentiment Analysis. Balt. J. Mod. Comput. 2019, 7, 47–60.

- Chakraborty, K.; Bhatia, S.; Bhattacharyya, S.; Platos, J.; Bag, R.; Hassanien, A.E. Sentiment Analysis of COVID-19 tweets by Deep Learning Classifiers—A study to show how popularity is affecting accuracy in social media. Appl. Soft Comput. 2020, 97, 106754.

- Dogra, V.; Singh, A.; Verma, S.; Kavita; Jhanjhi, N.Z.; Talib, M.N. Analyzing DistilBERT for Sentiment Classification of Banking Financial News. Lect. Notes Netw. Syst. 2021, 248, 501–510.

- Birjali, M.; Kasri, M.; Beni-Hssane, A. A comprehensive survey on sentiment analysis: Approaches, challenges and trends. Knowl.-Based Syst. 2021, 226, 107134.

- Hussein, D.M.E.-D.M. A survey on sentiment analysis challenges. J. King Saud Univ.-Eng. Sci. 2018, 30, 330–338.

- Yang, L.; Li, Y.; Wang, J.; Sherratt, R.S. Sentiment Analysis for E-Commerce Product Reviews in Chinese Based on Sentiment Lexicon and Deep Learning. IEEE Access 2020, 8, 23522–23530.

- Ghosh, K.; Banerjee, A.; Chatterjee, S.; Sen, S. Imbalanced Twitter Sentiment Analysis using Minority Oversampling. In Proceedings of the 2019 IEEE 10th International Conference on Awareness Science and Technology (iCAST), Morioka, Japan, 23–25 October 2019.

- Rao, K.N.; Reddy, C.S. A novel under sampling strategy for efficient software defect analysis of skewed distributed data. Evol. Syst. 2020, 11, 119–131.

- Zhou, S.; Li, X.; Dong, Y.; Xu, H. A Decoupling and Bidirectional Resampling Method for Multilabel Classification of Imbalanced Data with Label Concurrence. Sci. Program. 2020, 2020, 8829432.

- Aljarah, I.; Al-Shboul, B.; Hakh, H. Online Social Media-Based Sentiment Analysis for US Airline Companies. 2017. Available online: https://www.researchgate.net/publication/315643035 (accessed on 25 July 2022).

- Liu, Y.; Bi, J.-W.; Fan, Z.-P. Multi-class sentiment classification: The experimental comparisons of feature selection and machine learning algorithms. Expert Syst. Appl. 2017, 80, 323–339.

- Hasan, A.; Moin, S.; Karim, A.; Shamshirband, S. Machine Learning-Based Sentiment Analysis for Twitter Accounts. Math. Comput. Appl. 2018, 23, 11.

- Catal, C.; Nangir, M. A sentiment classification model based on multiple classifiers. Appl. Soft Comput. 2017, 50, 135–141.

- Eler, D.M.; Grosa, D.; Pola, I.; Garcia, R.; Correia, R.; Teixeira, J. Analysis of Document Pre-Processing Effects in Text and Opinion Mining. Information 2018, 9, 100.

- Dzisevic, R.; Sesok, D. Text Classification using Different Feature Extraction Approaches. In Proceedings of the 2019 Open Conference of Electrical, Electronic and Information Sciences (eStream), Vilnius, Lithuania, 25 April 2019; pp. 1–4.

- Jing, N.; Wu, Z.; Wang, H. A hybrid model integrating deep learning with investor sentiment analysis for stock price prediction. Expert Syst. Appl. 2021, 178, 115019.

- Obiedat, R.; Qaddoura, R.; Al-Zoubi, A.M.; Al-Qaisi, L.; Harfoushi, O.; Alrefai, M.; Faris, H. Sentiment Analysis of Customers’ Reviews Using a Hybrid Evolutionary SVM-Based Approach in an Imbalanced Data Distribution. IEEE Access 2022, 10, 22260–22273.

- Jiang, W.; Zhou, K.; Xiong, C.; Du, G.; Ou, C.; Zhang, J. KSCB: A Novel Unsupervised Method for Text Sentiment Analysis. Appl. Intell. 2022.

- Dablain, D.; Krawczyk, B.; Chawla, N.V. DeepSMOTE: Fusing Deep Learning and SMOTE for Imbalanced Data. IEEE Trans. Neural Netw. Learn. Syst. 2022, 31, 1–15.

- Rahmanda, R.; Setiawan, E.B. Word2Vec on Sentiment Analysis with Synthetic Minority Oversampling Technique and Boosting Algorithm. J. RESTI 2022, 6, 599–605.

- Bibi, M.; Abbasi, W.A.; Aziz, W.; Khalil, S.; Uddin, M.; Iwendi, C.; Gadekallu, T.R. A novel unsupervised ensemble framework using concept-based linguistic methods and machine learning for twitter sentiment analysis. Pattern Recognit. Lett. 2022, 158, 80–86.

- Atmaja, B.T.; Sasou, A. Sentiment Analysis and Emotion Recognition from Speech Using Universal Speech Representations. Sensors 2022, 22, 6369.

- IEEE Thailand Section and Institute of Electrical and Electronics Engineers. ICEAST 2018. In Proceedings of the 4th International Conference on Engineering, Applied Sciences, and Technology: Exploring Innovative Solutions for Smart Society, Phuket, Thailand, 4–7 July 2018.

- Mukherjee, A.; Mukhopadhyay, S.; Panigrahi, P.K.; Goswami, S. Utilization of Oversampling for multiclass sentiment analysis on Amazon Review Dataset. In Proceedings of the 2019 IEEE 10th International Conference on Awareness Science and Technology (iCAST), Morioka, Japan, 23–25 October 2019.

- Alnatara, W.D.; Khodra, M.L. Imbalanced data handling in multi-label aspect categorization using oversampling and ensemble learning. In Proceedings of the 2020 International Conference on Advanced Computer Science and Information Systems (ICACSIS), Depok, Indonesia, 17–18 October 2020; pp. 165–170.

- Alwakid, G.; Osman, T.; El Haj, M.; Alanazi, S.; Humayun, M.; Sama, N.U. MULDASA: Multifactor Lexical Sentiment Analysis of Social-Media Content in Nonstandard Arabic Social Media. Appl. Sci. 2022, 12, 3806.

- Khalil, M.I.; Tehsin, S.; Humayun, M.; Jhanjhi, N.; AlZain, M.A. Multi-Scale Network for Thoracic Organs Segmentation. Comput. Mater. Contin. 2022, 70, 3251–3265.

- Humayun, M.; Sujatha, R.; Almuayqil, S.N.; Jhanjhi, N.Z. A Transfer Learning Approach with a Convolutional Neural Network for the Classification of Lung Carcinoma. Healthcare 2022, 10, 1058.

- Attaullah, M.; Ali, M.; Almufareh, M.F.; Ahmad, M.; Hussain, L.; Jhanjhi, N.; Humayun, M. Initial Stage COVID-19 Detection System Based on Patients’ Symptoms and Chest X-Ray Images. Appl. Artif. Intell. 2022, 36, 1–20.

| Ref. | Technique | Purpose | Strengths | Gaps |
|---|---|---|---|---|
| [16] | Hybrid lexicon and DL | A sentiment lexicon is used to enhance the sentiment features of the reviews; the weighted sentiment features are then classified with a GRU and a CNN. | The data are on the order of 100,000 samples, and the approach can be widely used in Chinese SA. | The approach classifies sentiment only as +ve or −ve, which is insufficient in domains that require finer-grained sentiment. |
| [17] | ML based | Live tweets from Twitter were used to systematically analyze the influence of class imbalance on SA, and minority up-sampling was applied to address it. | Results show that minority up-sampling handles class imbalance to a great extent. | Not tested for multiclass classification. |
| [18] | ML based | Corrects class imbalance by removing less significant instances from the majority classes. | The most frequently misclassified samples are identified with KNN. | Does not perform well on small datasets. |
| [19] | ML based | Label imbalance is reduced by decoupling highly concurrent data of majority and minority labels and analyzing the influence of the labels during resampling. | The algorithm's usefulness was demonstrated, particularly on datasets with a large disparity between majority and minority instances. | The algorithm's performance depends directly on its parameters. |
| [20] | ML based | SA is performed on customer feedback about various airlines. | Feature engineering is used to select the best features, which both improves the model's overall effectiveness and reduces training time. | The class imbalance present in most large datasets might cause overfitting. |
| [21] | ML based | A feature engineering procedure identifies the major characteristics that are then used to train a machine learning algorithm. | Improved accuracy over the base model through effective feature selection. | May not perform well on imbalanced datasets. |
| [22] | ML based | A hybrid methodology that combines a sentiment analyzer with machine learning algorithms. | A thorough comparison of sentiment lexicons (SentiWordNet, W-WSD, TextBlob) was presented so that the best one could be used for SA. | Only accuracy was used as a performance measure, and the results were not impressive. |
| [23] | ML based | Investigates the effect of multiple-classifier systems on Turkish SA. | Combining multiple classifiers improves on the results of individual classifiers. | Multiple-classifier systems show more promise for sentiment classification, but the approach is not yet mature. |
| [24] | ML based | Adequate preprocessing may improve sentiment classification performance. | Combining multiple preprocessing steps is critical for obtaining the best classification results. | Imbalanced datasets were not investigated, which is why this study focuses on them. |
| [25] | DL based | Three different feature extraction methods are used for text analytics with neural networks. | TF-IDF achieved higher accuracy on a large dataset. | May not work well on a highly imbalanced multiclass dataset. |
| [26] | DL based | A CNN model classifies investor sentiment from a major Chinese stock forum. | The hybrid model with sentiment outperforms the baseline model, and the results support investor sentiment as a driver of stock prices. | The high forecast accuracy was achieved using only data from China. |
| [27] | ML based | Addresses class imbalance with a hybrid strategy combining a Support Vector Machine (SVM), Particle Swarm Optimization (PSO), and several up-sampling approaches. | The proposed PSO/SVM technique is successful and outperforms the alternatives on all metrics tested. | The paper focuses mainly on Arabic; other languages remain to be explored. |
| [28] | Hybrid ML and DL based | KSCB is a novel text SA model that combines the K-means++ algorithm, SMOTE up-sampling, CNN, and Bi-LSTM. | An ablation study on both balanced and imbalanced datasets confirmed KSCB's efficacy for text SA. | KSCB does not take emotional polarity intensity (EPI) into account. |
| [29] | DL based | An oversampling approach for deep learning models that builds on the well-known SMOTE algorithm for class-imbalanced data. | DeepSMOTE requires no discriminator and generates high-quality synthetic images that are both information-rich and suitable for visual inspection. | DeepSMOTE does not address class-level and instance-level difficulties. |
| [30] | ML based | Aspect-based SA was performed on tweets from Telkomsel customers discussing various aspects of Telkomsel's offerings. | Word2Vec, the Synthetic Minority Oversampling Technique, and boosting combined with an LR classifier achieved the best performance. | The dataset was too small to evaluate performance reliably. |
| [31] | ML based | A novel unsupervised machine learning approach for Twitter SA based on concept-based and hierarchical clustering. | Results from this unsupervised method are on par with supervised methods. | Only Boolean and TF-IDF features with unigrams were investigated; bigrams, trigrams, and larger datasets remain to be explored. |
| [32] | DL based | Evaluates SA and emotion recognition from speech using supervised learning, in particular universal speech representations. | Impressive weighted-accuracy results were obtained, and unimodal acoustic analysis achieved results competitive with previous methods. | The model did not perform well on multiclass problems with a large number of classes. |

| Sample Tweet Input | After Lowercasing |
|---|---|
| it was absolutely amazing, and we reached an hour early. You’re great. | it was absolutely amazing, and we reached an hour early. you’re great. |

| Sample Tweet Input | After Expanding Contractions |
|---|---|
| it was absolutely amazing, and we reached an hour early. You’re great. | it was absolutely amazing, and we reached an hour early. you are great. |

| Sample Tweet Input | After Removing Punctuation |
|---|---|
| it was absolutely amazing, and we reached an hour early. you are great. | it was absolutely amazing and we reached an hour early you are great |

| Sample Tweet Input | After Removing Digits |
|---|---|
| is flight 81 on the way should have taken off 30 min ago | is flight on the way should have taken off minutes ago |

| Sample Tweet Input | After Handling Slang and Abbreviations |
|---|---|
| your deals never seem to include NYC | your deals never seem to include new york city |

| Sample Tweet Input | After Removing Stop-Words |
|---|---|
| it was absolutely amazing and we reached an hour early you are great | absolutely amazing reached hour early great |

| Sample Tweet Input | After Spell Correction |
|---|---|
| thanks for the good expirince | thanks for the good experience |

| Feature | Description |
|---|---|
| Text | Original text of the tweet written by the customer. |
| Airline | Name of the US airline company. |
| Airline_Sentiment_Confidence | A numeric value representing the confidence with which the tweet was assigned to one of the three sentiment categories. |
| Airline_Sentiment | Label of each tweet (+ve, neutral, −ve). |
| Negative Reason | The reason given for labeling a tweet as −ve. |
| Negative_Reason_Confidence | The confidence in the −ve reason assigned to a −ve tweet. |
| Retweet Count | Number of retweets of the tweet. |
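Before any balancing, the class distribution can be inspected by reading just the tweet text and its sentiment label. The sketch below assumes the public Kaggle release of the Twitter US Airline Sentiment data (`Tweets.csv`), whose columns are named in lowercase (`text`, `airline_sentiment`) rather than with the capitalized names in the table; the inline rows are invented toy data standing in for that file, and the function name is hypothetical.

```python
import csv
import io
from collections import Counter


def load_text_and_labels(csv_file, text_col="text", label_col="airline_sentiment"):
    """Keep only the tweet text and its sentiment label from each CSV row."""
    reader = csv.DictReader(csv_file)
    return [(row[text_col], row[label_col]) for row in reader]


# Toy stand-in for Tweets.csv; these rows are invented for illustration.
SAMPLE = io.StringIO(
    "airline_sentiment,airline,text\n"
    'negative,United,"@united flight delayed again, 3 hours"\n'
    'negative,Delta,"@delta lost my bag"\n'
    'neutral,Virgin America,"@VirginAmerica what time is boarding?"\n'
    'positive,Southwest,"@SouthwestAir great crew, thanks!"\n'
)

pairs = load_text_and_labels(SAMPLE)
distribution = Counter(label for _, label in pairs)
print(distribution)
```

With the real file, opening it with `open("Tweets.csv", newline="")` and passing the handle in place of `SAMPLE` would give the actual per-class counts that motivate oversampling.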

| Classifier | Sentiment Class | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Random Forest (Accuracy = 98.3%) | negative | 0.99 | 1.00 | 0.99 |
| | neutral | 0.98 | 0.96 | 0.97 |
| | positive | 0.97 | 0.97 | 0.97 |
| SVM (Accuracy = 97.8%) | negative | 0.99 | 1.00 | 0.99 |
| | neutral | 0.98 | 0.96 | 0.97 |
| | positive | 0.97 | 0.97 | 0.97 |
| Naïve Bayes (Accuracy = 87.4%) | negative | 0.85 | 0.94 | 0.89 |
| | neutral | 0.88 | 0.78 | 0.83 |
| | positive | 0.89 | 0.90 | 0.90 |
| Gradient Boosting (Accuracy = 87.5%) | negative | 0.85 | 0.94 | 0.90 |
| | neutral | 0.88 | 0.79 | 0.83 |
| | positive | 0.89 | 0.90 | 0.90 |
| XGB (Accuracy = 96.1%) | negative | 0.98 | 0.98 | 0.98 |
| | neutral | 0.97 | 0.96 | 0.95 |
| | positive | 0.96 | 0.96 | 0.97 |
| Decision Tree (Accuracy = 96.4%) | negative | 0.98 | 0.98 | 0.98 |
| | neutral | 0.97 | 0.97 | 0.95 |
| | positive | 0.96 | 0.96 | 0.97 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Almuayqil, S.N.; Humayun, M.; Jhanjhi, N.Z.; Almufareh, M.F.; Javed, D. Framework for Improved Sentiment Analysis via Random Minority Oversampling for User Tweet Review Classification. Electronics 2022, 11, 3058. https://doi.org/10.3390/electronics11193058


Chicago/Turabian Style: Almuayqil, Saleh Naif, Mamoona Humayun, N. Z. Jhanjhi, Maram Fahaad Almufareh, and Danish Javed. 2022. "Framework for Improved Sentiment Analysis via Random Minority Oversampling for User Tweet Review Classification" Electronics 11, no. 19: 3058. https://doi.org/10.3390/electronics11193058