Empirical Analysis of Data Streaming and Batch Learning Models for Network Intrusion Detection

Abstract

1. Introduction

- i. How effective are the selected BLM implementations at detecting network intrusions?

- ii. How effective are the selected DSM implementations at detecting network intrusions?

- iii. Can DSM achieve performance comparable to that of BLM?

- iv. How does the performance of the evaluated BLM and DSM compare with that of existing methods?

- Extension of the state-of-the-art studies in the domain of network intrusion detection by investigating the performance of BLM and DSM algorithms.

- Investigation of the performance of selected BLM and DSM algorithms based on binary and multi-class classification tasks.

- Extensive analysis to ascertain the effectiveness of the selected BLM and DSM algorithms.

2. Related Work

3. Materials and Methods

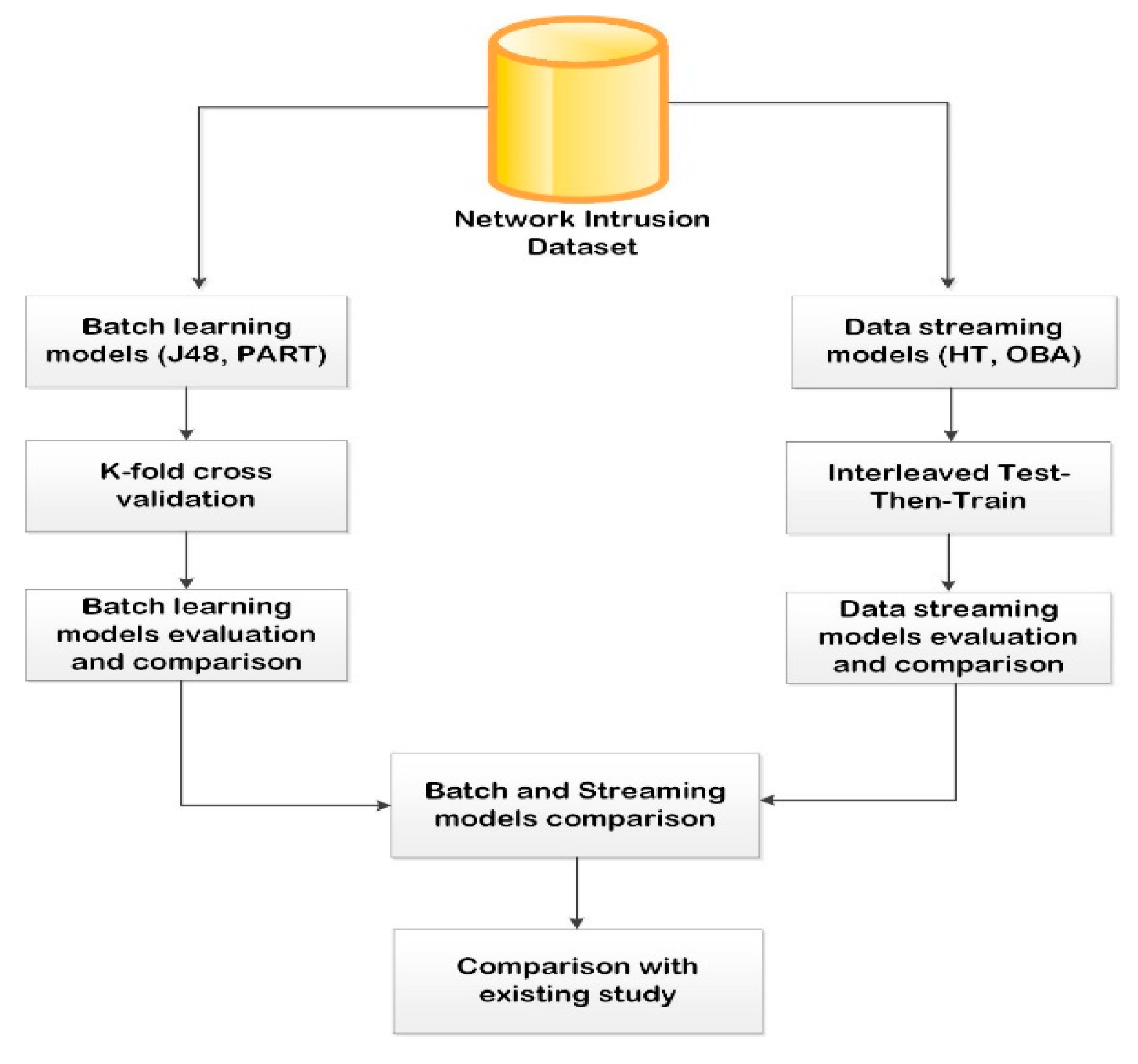

3.1. Proposed Framework

3.2. Batch Learning Models (BLM)

3.2.1. Decision Tree (J48)

| Algorithm 1: J48 Algorithm |
|---|
| 1: Generate a node N as the root node; |
| 2: If (all instances in T belong to the same class C) { leaf node = N; N is marked as class C; return N; } |
| 3: For j = 1 to n { compute information gain(Aj); } |
| 4: Assign ta as the testing attribute; |
| 5: N.ta = attribute with the highest information gain; |
| 6: If (N.ta = continuous) { compute threshold value; } |
| 7: For (each T′ in the split of T) |
| 8: if (empty(T′) = true) { assign child of N as leaf node; } |
| 9: else { child of N = dtree(T′); } |
| 10: Compute the classification error rate of node N; |
| 11: Return N; |
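The attribute selection in steps 3 to 5 of Algorithm 1 can be illustrated with a minimal sketch. It assumes nominal attributes stored as dictionaries; the toy rows, the attribute names `protocol` and `flag`, and the helper names are hypothetical and not taken from the study. Note that C4.5-style learners such as J48 typically rank attributes by gain ratio, whereas this sketch follows the plain information gain named in Algorithm 1.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def information_gain(rows, labels, attribute):
    """Entropy reduction obtained by splitting on one nominal attribute."""
    total = len(labels)
    partitions = {}
    for row, label in zip(rows, labels):
        partitions.setdefault(row[attribute], []).append(label)
    # Weighted entropy of the subsets produced by the split.
    remainder = sum(len(p) / total * entropy(p) for p in partitions.values())
    return entropy(labels) - remainder

def best_attribute(rows, labels, attributes):
    """Attribute with the highest information gain (step 5 of Algorithm 1)."""
    return max(attributes, key=lambda a: information_gain(rows, labels, a))

# Hypothetical toy data: the 'flag' attribute separates the classes perfectly.
rows = [
    {"protocol": "tcp", "flag": "SF"},
    {"protocol": "tcp", "flag": "S0"},
    {"protocol": "udp", "flag": "SF"},
    {"protocol": "udp", "flag": "S0"},
]
labels = ["normal", "attack", "normal", "attack"]

chosen = best_attribute(rows, labels, ["protocol", "flag"])
```

Here `flag` is chosen because splitting on it leaves pure subsets, while splitting on `protocol` leaves the class mix unchanged.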

3.2.2. Projective Adaptive Resonance Theory (PART)

| Algorithm 2: PART Algorithm |
|---|
| Inputs: Dataset S; A1 -> dimensions of input vectors; A2 -> expected maximum clusters allowable at each clustering level; initial parameters ρ0, ρh, σ, α, θ and e. |
| Output: RuleSet -> set of rules |
| 1: Let ρ = ρ0. |
| 2: L1: WHILE (not stopping condition, i.e., stable clusters not yet formed) |
| FOREACH input vector in S do |
| Compute kij for all A1 nodes Vi and committed A2 nodes Vj. |
| If all A2 nodes are non-committed, go to L2. |
| Compute Tj for all committed A2 nodes Vj. |
| L2: Select the best A2 node Vj. |
| If no A2 node can be picked, add the input data into outlier O and then proceed with L1. |
| If the best is a committed node, calculate rj; else go to L3. |
| If rj ≥ ρ, go to L3; else reset the best Vj and go to L2. |
| L3: Set the winner Vj as committed and update the bottom-up and top-down weights for winner node Vj. |
| ENDFOR |
| FOREACH cluster Cj in A2, compute the dimension associated with set Dj. |
| Let S = Cj, ρ = ρ + ρh, then go to L1. |
| For the outlier O, let S = 0, go to L1. |
| ENDWHILE |

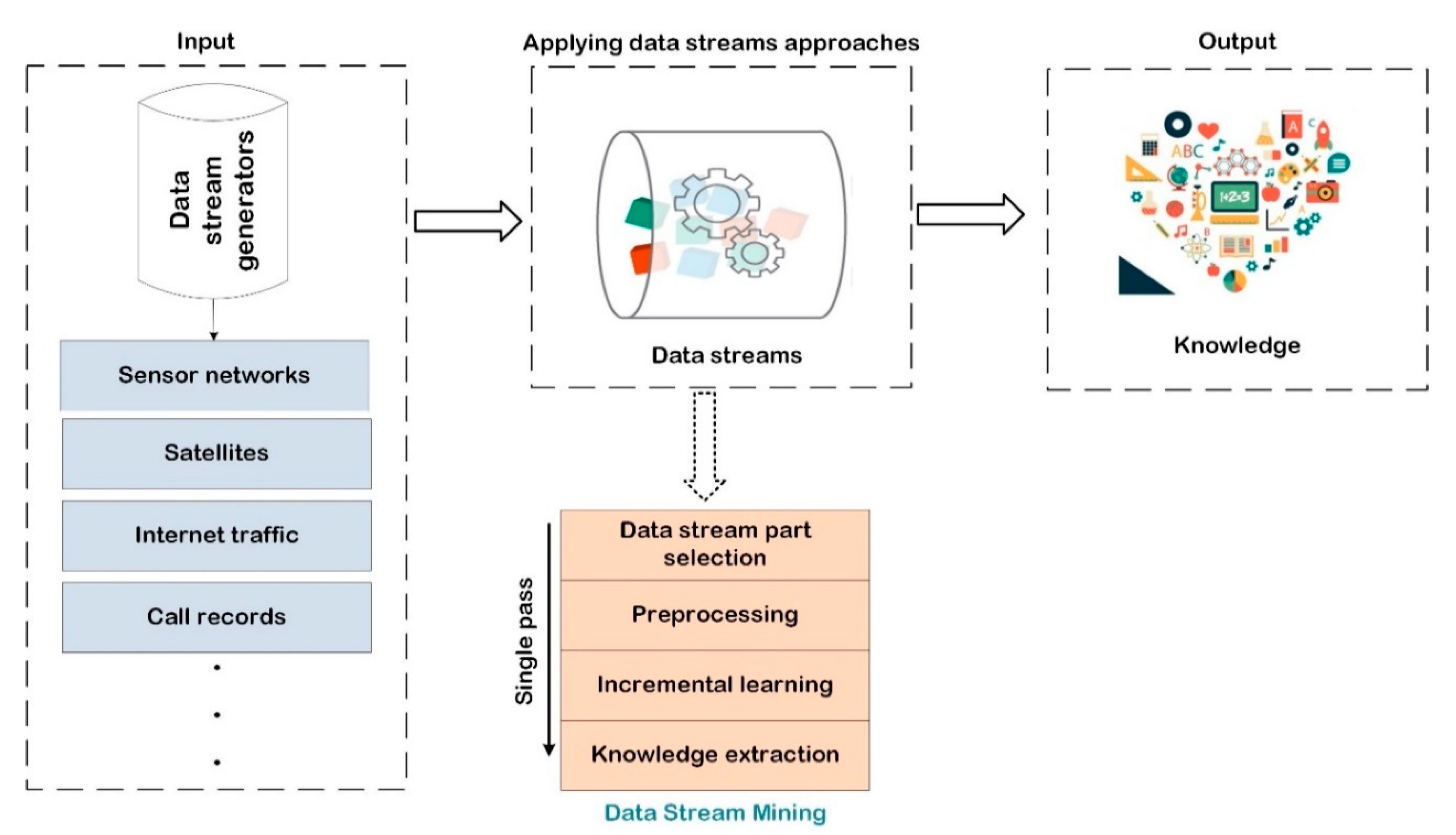

3.3. Data Stream Model (DSM)

3.3.1. Hoeffding Tree (HT)

| Algorithm 3: HT Algorithm |
|---|
| Input: A stream of labeled data, confidence parameter δ |
| Output: Tree model |
| 1: Assign HT as a tree (root) having a single leaf |
| 2: Initialization: initialize counter nijk at the root |
| 3: for each data (a, b) in Stream do |
| 4: HTGrow((a, b), HT, δ) |
| Procedure HTGrow((a, b), HT, δ) |
| 1: sort (a, b) to a leaf l using HT |
| 2: update counter nijk at leaf l |
| 3: if the data seen so far at l do not all belong to the same class |
| 4: then calculate G for every attribute |
| 5: if G(best attribute) − G(second best) > ε |
| 6: then split the leaf on the best attribute |
| 7: for every branch do |
| 8: start a new leaf and initialize counts |
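The threshold used in step 5 of HTGrow is the Hoeffding bound, ε = sqrt(R² ln(1/δ) / (2n)), where n is the number of instances seen at the leaf and R is the range of the split criterion G. The following is a minimal sketch of that split test only, not a full tree learner. It assumes G is information gain, so that R = log2(number of classes); the function names and the example numbers are hypothetical.

```python
import math

def hoeffding_bound(value_range, delta, n):
    """epsilon = sqrt(R^2 * ln(1/delta) / (2 * n))."""
    return math.sqrt(value_range ** 2 * math.log(1.0 / delta) / (2.0 * n))

def should_split(g_best, g_second, n_classes, delta, n):
    """Step 5 of HTGrow: split only if the gap between the two best
    attributes exceeds the Hoeffding bound."""
    # Assumption: G is information gain, whose range is log2(#classes).
    value_range = math.log2(n_classes)
    return (g_best - g_second) > hoeffding_bound(value_range, delta, n)

# Hypothetical gains for a binary problem with delta = 1e-7.
early = should_split(0.30, 0.15, n_classes=2, delta=1e-7, n=100)
later = should_split(0.30, 0.15, n_classes=2, delta=1e-7, n=1000)
```

With the same observed gap of 0.15, the leaf is not split after 100 instances but is split after 1000, because the bound shrinks as more instances arrive.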

3.3.2. OzaBagAdwin (OBA)

| Algorithm 4: OzaBag Algorithm |
|---|
| Input: S (stream data), M (number of base models), Y (set of class labels), X (feature vector) |
| Output: class label predicted based on majority vote |
| 1: Initialization: set up M base classifiers: h1, h2, …, hM; |
| 2: while CanNext(S) do |
| 3: (x, y) = NextInstance(S); |
| 4: for m = 1, …, M do |
| 5: w ← Poisson(1); |
| 6: train hm with the instance using weight w; |
| 7: return overall class label based on the majority vote. |
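The Poisson(1) weighting in steps 4 to 6 of Algorithm 4 can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in the study: the base learner `CountingNB` is a hypothetical weighted naive Bayes over nominal features standing in for the real base model, the toy stream is invented, and the ADWIN change detector that distinguishes OzaBagAdwin from plain online bagging is omitted.

```python
import math
import random
from collections import Counter

def poisson1(rng):
    """Sample from Poisson(lambda = 1) using Knuth's multiplication method."""
    threshold = math.exp(-1.0)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k

class CountingNB:
    """Hypothetical stand-in base learner: weighted naive Bayes on nominal features."""
    def __init__(self):
        self.class_w = Counter()
        self.feat_w = Counter()

    def learn(self, x, y, weight):
        if weight == 0:          # Poisson(1) yields 0 for roughly 37% of draws
            return
        self.class_w[y] += weight
        for i, v in enumerate(x):
            self.feat_w[(i, v, y)] += weight

    def predict(self, x):
        if not self.class_w:
            return None
        def score(y):
            s = math.log(self.class_w[y])
            for i, v in enumerate(x):
                # Add-one smoothing keeps unseen values from zeroing the score.
                s += math.log((self.feat_w[(i, v, y)] + 1.0) / (self.class_w[y] + 2.0))
            return s
        return max(self.class_w, key=score)

class OnlineBagging:
    def __init__(self, n_models=10, seed=1):
        self.rng = random.Random(seed)
        self.models = [CountingNB() for _ in range(n_models)]

    def learn(self, x, y):
        # Each base model sees the instance with its own Poisson(1) weight.
        for model in self.models:
            model.learn(x, y, poisson1(self.rng))

    def predict(self, x):
        votes = Counter(p for p in (m.predict(x) for m in self.models) if p is not None)
        return votes.most_common(1)[0][0] if votes else None

# Hypothetical toy stream: the second feature determines the class.
ensemble = OnlineBagging(n_models=10, seed=1)
for _ in range(50):
    ensemble.learn(("tcp", "S0"), "attack")
    ensemble.learn(("tcp", "SF"), "normal")

prediction = ensemble.predict(("tcp", "S0"))
```

Drawing the weight from Poisson(1) approximates bootstrap resampling without storing the stream, which is why each instance can be processed once and then discarded.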

4. Experimental Setup

4.1. Dataset Description

4.2. Evaluation Metrics

- Accuracy: This is the percentage of instances that the model classifies correctly. With TP, TN, FP, and FN denoting the numbers of true positives, true negatives, false positives, and false negatives (an intrusion being the positive class), it is computed as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Sensitivity: This is the percentage of actual positive cases that are predicted to be positive (true positives). Sensitivity is also known as recall. In this context, it measures how well the model detects network intrusions. It is calculated as

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$

- Precision: This is the percentage of instances predicted as positive that are truly positive. It is calculated by dividing the number of true positives by the sum of true positives and false positives:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

- F-measure: This is the harmonic mean of precision and sensitivity:

$$F\text{-measure} = \frac{2 \times \mathrm{Precision} \times \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}}$$

- False-positive rate: This is the percentage of legitimate traffic that is incorrectly classified as a network attack. It is determined by

$$\mathrm{FPR} = \frac{FP}{FP + TN}$$

- Kappa statistic: This compares the observed accuracy $p_o$ with the accuracy $p_e$ expected by chance agreement:

$$\kappa = \frac{p_o - p_e}{1 - p_e}$$
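As a worked illustration of the metrics listed above, the following sketch computes them from the four cells of a binary confusion matrix. The function name and the counts are hypothetical and are not results from the study; the sketch assumes all denominators are nonzero.

```python
def binary_metrics(tp, tn, fp, fn):
    """Metrics for a binary confusion matrix (intrusion = positive class)."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)          # also called recall
    precision = tp / (tp + fp)
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    false_positive_rate = fp / (fp + tn)
    # Chance agreement: product of predicted and actual marginals per class.
    expected = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / total ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "precision": precision,
        "f_measure": f_measure,
        "false_positive_rate": false_positive_rate,
        "kappa": kappa,
    }

# Hypothetical counts: 100 attacks and 100 normal flows.
metrics = binary_metrics(tp=90, tn=85, fp=15, fn=10)
```

For these counts the accuracy is 0.875 and the chance agreement is 0.5, so kappa is 0.75, which shows how kappa discounts the agreement a random labeling would already achieve.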

5. Results and Discussion

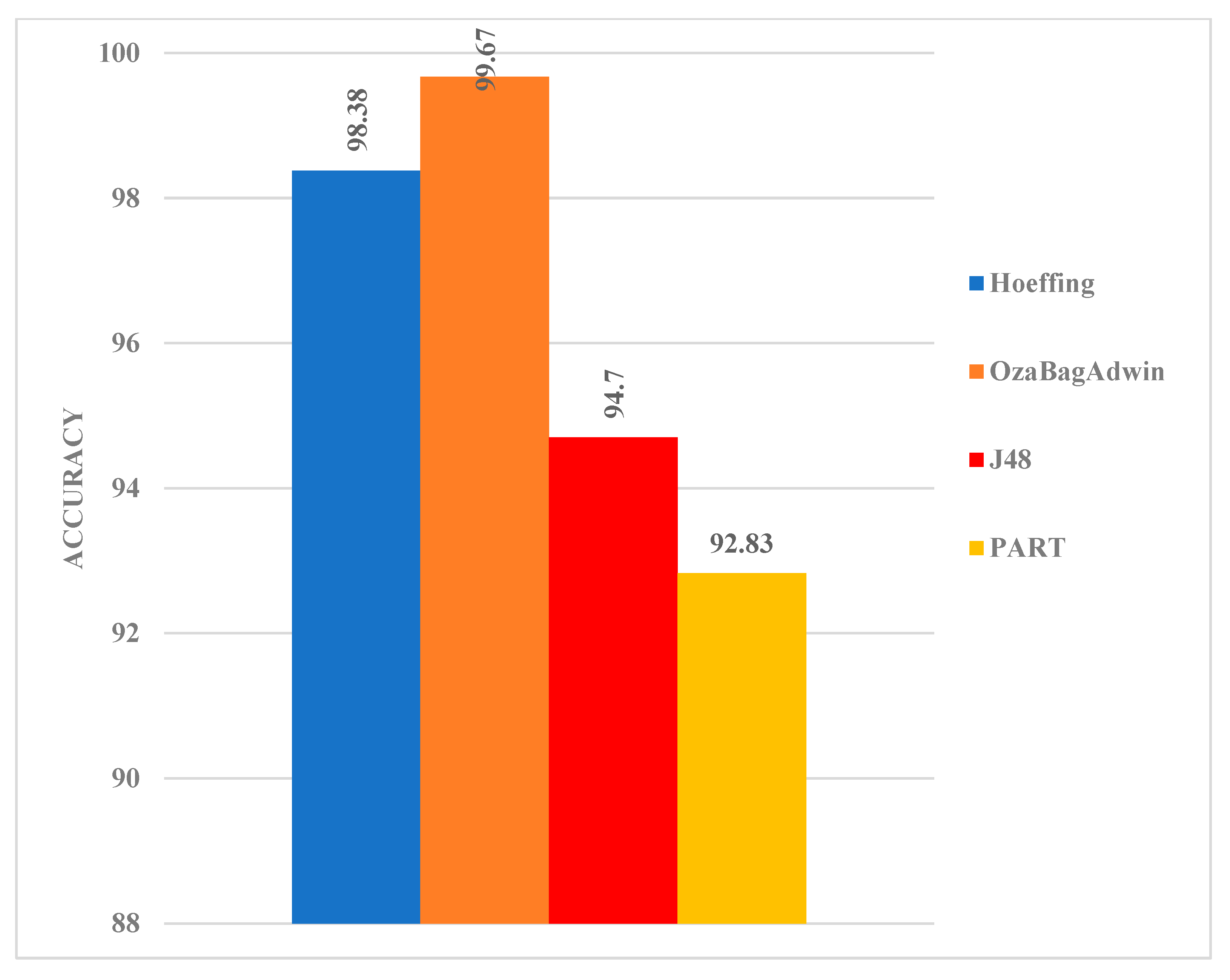

5.1. DSM Experimental Results Based on Binary Class Classification

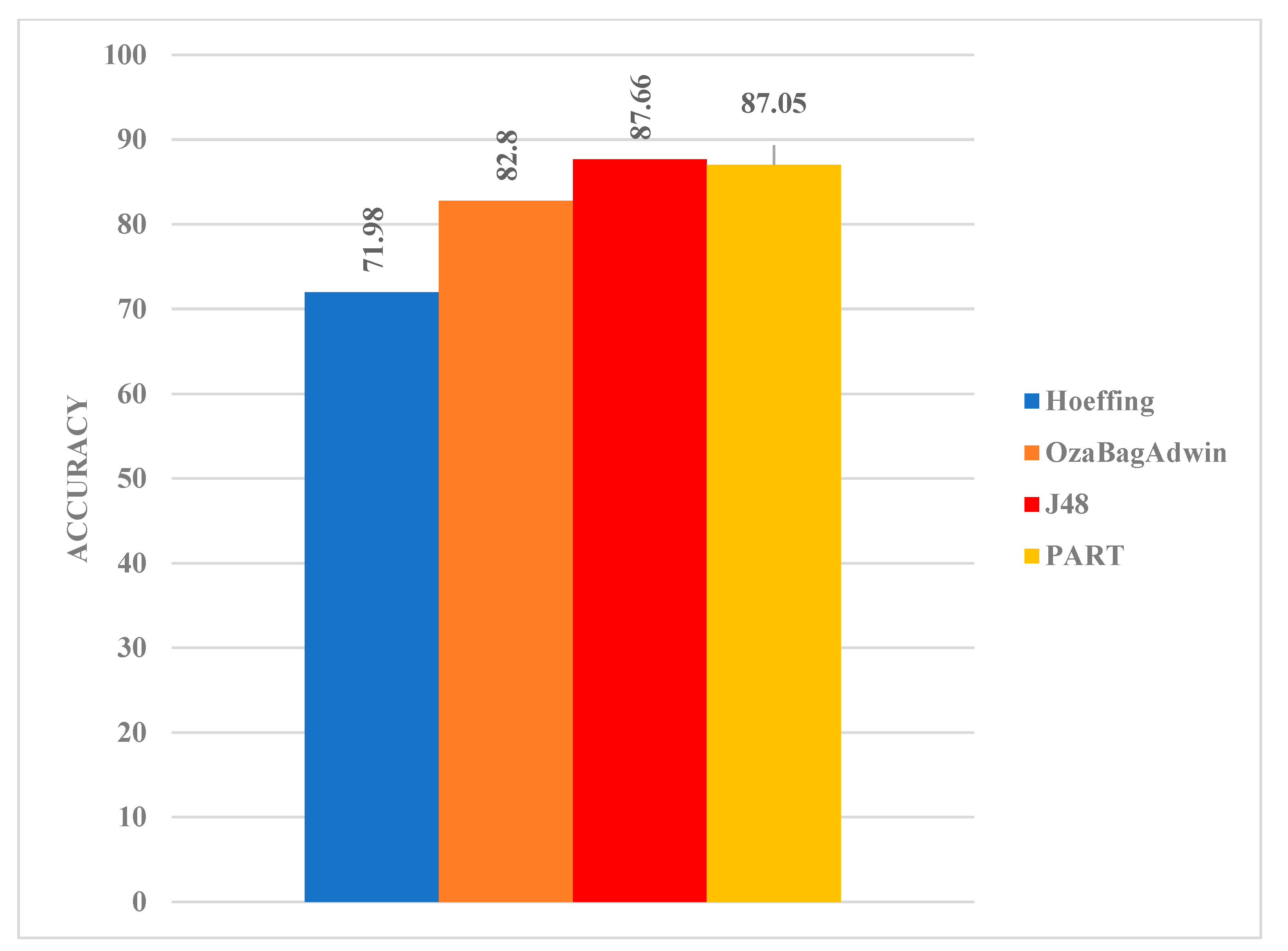

5.2. DSM Experimental Results Based on Multi-Class Classification

5.3. BLM Experimental Results Based on Binary Class Classification

5.4. BLM Experimental Results Based on Multi-Class Classification

5.5. Performance Comparison of DSM and BLM Based on Binary Class Classification

5.6. Performance Comparison of DSM and BLM Based on Multi-Class Classification

5.7. Performance Comparison of DSM and BLM with Existing Studies

5.8. Performance Comparison of DSM and BLM based on Statistical Test

5.9. Findings Based on Research Questions

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Balogun, O.A.; Mojeed, H.A.; Adewole, K.S.; Akintola, A.G.; Salihu, S.A.; Bajeh, A.O.; Jimoh, R.G. Optimized Decision Forest for Website Phishing Detection. In Proceedings of the Computational Methods in Systems and Software, Online, 1 October 2021; Springer: Cham, Switzerland, 2021; pp. 568–582.

2. Balogun, O.A.; Adewole, K.S.; Bajeh, A.O.; Jimoh, R.G. Cascade Generalization Based Functional Tree for Website Phishing Detection. In Proceedings of the International Conference on Advances in Cyber Security, Penang, Malaysia, 24–25 August 2021; Springer: Singapore, 2021; pp. 288–306.

3. Balogun, A.O.; Adewole, K.S.; Raheem, M.O.; Akande, O.N.; Usman-Hamza, F.E.; Mabayoje, M.A.; Akintola, A.G.; Asaju-Gbolagade, A.W.; Jimoh, M.K.; Jimoh, R.G.; et al. Improving the phishing website detection using empirical analysis of Function Tree and its variants. Heliyon 2021, 7, e07437.

4. Awotunde, J.B.; Chakraborty, C.; Adeniyi, A.E. Intrusion Detection in Industrial Internet of Things Network-Based on Deep Learning Model with Rule-Based Feature Selection. Wirel. Commun. Mob. Comput. 2021, 2021, 7154587.

5. Utzerath, J.; Dennis, R. Numbers and statistics: Data and cyber breaches under the General Data Protection Regulation. Int. Cybersecur. Law Rev. 2021, 2, 339–348.

6. Elijah, A.V.; Abdullah, A.; JhanJhi, N.; Supramaniam, M. Ensemble and Deep-Learning Methods for Two-Class and Multi-Attack Anomaly Intrusion Detection: An Empirical Study. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 520–528.

7. Balogun, A.O.; Jimoh, R.G. Anomaly intrusion detection using an hybrid of decision tree and K-nearest neighbor. J. Adv. Sci. Res. Appl. 2015, 2, 67–74.

8. Atli, B.G.; Miche, Y.; Kalliola, A.; Oliver, I.; Holtmanns, S.; Lendasse, A. Anomaly-Based Intrusion Detection Using Extreme Learning Machine and Aggregation of Network Traffic Statistics in Probability Space. Cogn. Comput. 2018, 10, 848–863.

9. Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 20.

10. Salau-Ibrahim, T.T.; Jimoh, R.G. Negative Selection Algorithm Based Intrusion Detection Model. In Proceedings of the 20th IEEE Mediterranean Electrotechnical Conference, MELECON 2020-Proceedings, Palermo, Italy, 16–18 June 2020; pp. 202–206.

11. Adewole, K.S.; Raheem, M.O.; Abdulraheem, M.; Oladipo, I.D.; Balogun, A.O.; Baker, O.F. Malicious URLs Detection Using Data Streaming Algorithms. J. Teknol. Dan Sist. Komput. 2021, 9, 224–229.

12. Kasongo, S.M.; Sun, Y. Performance Analysis of Intrusion Detection Systems Using a Feature Selection Method on the UNSW-NB15 Dataset. J. Big Data 2020, 7, 105.

13. Jiang, K.; Wang, W.; Wang, A.; Wu, H. Network Intrusion Detection Combined Hybrid Sampling with Deep Hierarchical Network. IEEE Access 2020, 8, 32464–32476.

14. Kaja, N.; Shaout, A.; Ma, D. An intelligent intrusion detection system. Appl. Intell. 2019, 49, 3235–3247.

15. Liu, H.; Lang, B. Machine Learning and Deep Learning Methods for Intrusion Detection Systems: A Survey. Appl. Sci. 2019, 9, 4396.

16. Nguyen, M.T.; Kim, K. Genetic convolutional neural network for intrusion detection systems. Future Gener. Comput. Syst. 2020, 113, 418–427.

17. Sinha, U.; Gupta, A.; Sharma, D.K.; Goel, A.; Gupta, D. Network Intrusion Detection Using Genetic Algorithm and Predictive Rule Mining. In Cognitive Informatics and Soft Computing; Springer: Berlin/Heidelberg, Germany, 2021; pp. 143–156.

18. Tao, Z.; Huiling, L.; Wenwen, W.; Xia, Y. GA-SVM based feature selection and parameter optimization in hospitalization expense modeling. Appl. Soft Comput. 2019, 75, 323–332.

19. Gu, J.; Lu, S. An effective intrusion detection approach using SVM with naïve Bayes feature embedding. Comput. Secur. 2021, 103, 102158.

20. Moustafa, N.; Turnbull, B.; Choo, K.-K.R. An Ensemble Intrusion Detection Technique Based on Proposed Statistical Flow Features for Protecting Network Traffic of Internet of Things. IEEE Internet Things J. 2018, 6, 4815–4830.

21. Radiuk, P.M. Impact of Training Set Batch Size on the Performance of Convolutional Neural Networks for Diverse Datasets. Inf. Technol. Manag. Sci. 2018, 20, 20–24.

22. Horchulhack, P.; Viegas, E.K.; Santin, A.O. Toward feasible machine learning model updates in network-based intrusion detection. Comput. Netw. 2022, 202, 108618.

23. Data, M.; Aritsugi, M. AB-HT: An Ensemble Incremental Learning Algorithm for Network Intrusion Detection Systems. In Proceedings of the 2022 International Conference on Data Science and Its Applications (ICoDSA), Bandung, Indonesia, 6–7 July 2022; pp. 47–52.

24. Saeed, M.M. A real-time adaptive network intrusion detection for streaming data: A hybrid approach. Neural Comput. Appl. 2022, 34, 6227–6240.

25. Gong, Q.; DeMar, P.; Altunay, M. ThunderSecure: Deploying real-time intrusion detection for 100G research networks by leveraging stream-based features and one-class classification network. Int. J. Inf. Secur. 2022, 21, 799–812.

26. Fekri, M.N.; Patel, H.; Grolinger, K.; Sharma, V. Deep learning for load forecasting with smart meter data: Online Adaptive Recurrent Neural Network. Appl. Energy 2021, 282, 116177.

27. Wang, K.; Gopaluni, R.B.; Chen, J.; Song, Z. Deep Learning of Complex Batch Process Data and Its Application on Quality Prediction. IEEE Trans. Ind. Inform. 2018, 16, 7233–7242.

28. Sarwar, S.S.; Ankit, A.; Roy, K. Incremental Learning in Deep Convolutional Neural Networks Using Partial Network Sharing. IEEE Access 2019, 8, 4615–4628.

29. Yadav, S.S.; Jadhav, S.M. Deep convolutional neural network based medical image classification for disease diagnosis. J. Big Data 2019, 6, 113.

30. Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual lifelong learning with neural networks: A review. Neural Networks 2019, 113, 54–71.

31. Schelter, S.; Biessmann, F.; Januschowski, T.; Salinas, D.; Seufert, S.; Szarvas, G. On Challenges in Machine Learning Model Management; Bulletin of the IEEE Computer Society Technical Committee on Data Engineering; IEEE Explorer; Pennsylvania State University: State College, PA, USA, 2018.

32. Mnih, V.; Badia, A.P.; Mirza, L.; Graves, A.; Harley, T.; Lillicrap, T.P.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the 33rd International Conference on Machine Learning, PMLR, New York, NY, USA, 19–24 June 2016; pp. 1928–1937.

33. Stan, T.; Thompson, Z.T.; Voorhees, P.W. Optimizing convolutional neural networks to perform semantic segmentation on large materials imaging datasets: X-ray tomography and serial sectioning. Mater. Charact. 2020, 160, 110119.

34. Tran, H.T.N.; Ang, K.S.; Chevrier, M.; Zhang, X.; Lee, N.Y.S.; Goh, M.; Chen, J. A benchmark of batch-effect correction methods for single-cell RNA sequencing data. Genome Biol. 2020, 21, 12.

35. Garbuio, M.; Gheno, G. An Algorithm for Designing Value Propositions in the IoT Space: Addressing the Challenges of Selecting the Initial Class in Reference Class Forecasting. IEEE Trans. Eng. Manag. 2021, 1–12.

36. Montero-Manso, P.; Hyndman, R.J. Principles and algorithms for forecasting groups of time series: Locality and globality. Int. J. Forecast. 2021, 37, 1632–1653.

37. Benzer, S.; Garabaghi, F.H.; Benzer, R.; Mehr, H.D. Investigation of some machine learning algorithms in fish age classification. Fish. Res. 2022, 245, 106151.

38. Adewole, K.S.; Akintola, A.G.; Salihu, S.A.; Faruk, N.; Jimoh, R.G. Hybrid Rule-Based Model for Phishing URLs Detection. Lect. Notes Inst. Comput. Sci. Soc.-Inform. Telecommun. Eng. 2019, 285, 119–135.

39. Rutkowski, L.; Jaworski, M.; Duda, P. Basic Concepts of Data Stream Mining. In Stream Data Mining: Algorithms and Their Probabilistic Properties; Springer: Cham, Switzerland, 2020; pp. 13–33.

40. Kholghi, M.; Keyvanpour, M. An analytical framework for data stream mining techniques based on challenges and requirements. arXiv 2011, arXiv:1105.1950.

41. Gaber, M.M.; Gama, J.; Krishnaswamy, S.; Gomes, J.B.; Stahl, F. Data stream mining in ubiquitous environments: State-of-the-art and current directions. WIREs Data Min. Knowl. Discov. 2014, 4, 116–138.

42. Ramírez-Gallego, S.; Krawczyk, B.; García, S.; Woźniak, M.; Herrera, F. A survey on data preprocessing. Neurocomputing 2017, 239, 39–57.

43. Morales, G.D.F.; Bifet, A.; Khan, L.; Gama, J.; Fan, W. IoT big data stream mining. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Online, 13–17 August 2016; pp. 2119–2120.

44. Adewole, K.S.; Raheem, M.O.; Abikoye, O.C.; Ajiboye, A.R.; Oladele, T.O.; Jimoh, M.K.; Aremu, D.R. Malicious Uniform Resource Locator Detection Using Wolf Optimization Algorithm and Random Forest Classifier. In Machine Learning and Data Mining for Emerging Trend in Cyber Dynamics; Springer: Cham, Switzerland, 2021; pp. 177–196.

45. Balogun, A.O.; Basri, S.; Mahamad, S.; Capretz, L.F.; Imam, A.A.; Almomani, M.A.; Adeyemo, V.E.; Kumar, G. A Novel Rank Aggregation-Based Hybrid Multifilter Wrapper Feature Selection Method in Software Defect Prediction. Comput. Intell. Neurosci. 2021, 2021, 5069016.

46. Alsariera, Y.A.; Balogun, A.O.; Adeyemo, V.E.; Tarawneh, O.H.; Mojeed, H.A. Intelligent Tree-Based Ensemble Approaches for Phishing Website Detection. J. Eng. Sci. Technol. 2022, 17, 0563–0582.

| Feature | Data Stream Mining | Traditional Data Mining |
|---|---|---|
| Processing | Real-time samples | Offline every record |
| Storage | Not feasible | Feasible |
| Volume | Infinite | Finite |
| Data Generation | Dynamic | Static |
| Time | Only one pass | More time to access data |
| Data Type | Heterogeneous | Homogeneous |
| Result | Approximation | Accurate |

| Metrics | HT Mean Value | OBA Mean Value |
|---|---|---|
| Accuracy | 98.38 | 99.67 |
| Kappa | 96.73 | 99.33 |
| Ram Hours | 0.00 | 0.00 |
| Time | 0.91 | 4.08 |
| Memory | 0.00 | 0.00 |

| Metrics | HT Mean Value | OBA Mean Value |
|---|---|---|
| Accuracy | 71.98 | 82.80 |
| Kappa | 62.27 | 76.14 |
| Ram Hours | 0.00 | 0.00 |
| Time | 1.80 | 12.86 |
| Memory | 0.00 | 0.00 |

| Metrics | J48 Mean Value | PART Mean Value |
|---|---|---|
| Accuracy | 94.73 | 92.83 |
| Sensitivity | 94.70 | 92.80 |
| Precision | 94.70 | 0.93 |
| F-measure | 0.947 | 0.93 |
| ROC | 0.982 | 0.98 |
| False Positive Rate | 0.056 | 0.071 |

| Metrics | J48 Mean Value | PART Mean Value |
|---|---|---|
| Accuracy | 87.66 | 87.05 |
| Sensitivity | 87.70 | 87.10 |
| Precision | 0.88 | 0.87 |
| F-measure | 0.87 | 0.87 |
| ROC | 0.97 | 0.97 |
| False Positive Rate | 0.024 | 0.024 |

| Approaches | Classification | Model | Accuracy (%) |
|---|---|---|---|
| BLM | Binary | J48 | 94.73 |
| BLM | Binary | PART | 92.83 |
| BLM | Multiclass | J48 | 87.66 |
| BLM | Multiclass | PART | 87.05 |
| DSM | Binary | HT | 98.38 |
| DSM | Binary | OBA | 99.67 |
| DSM | Multiclass | HT | 71.98 |
| DSM | Multiclass | OBA | 82.80 |
| [20] | Binary | Decision Tree | 95.32 |
| [20] | Binary | Naïve Bayes | 91.17 |
| [20] | Binary | Artificial Neural Network | 92.54 |

| t-Test: Two-Sample Assuming Unequal Variances | DSM | BLM |
|---|---|---|
| Mean | 99.025 | 93.78 |
| Variance | 0.83205 | 1.805 |
| Hypothesized Mean Difference | 0 | |
| df | 2 | |
| t Stat | 4.567739292 | |
| p (T ≤ t) one-tail | 0.02236856 | |
| t Critical one-tail | 2.91998558 | |
| p (T ≤ t) two-tail | 0.044737121 | |
| t Critical two-tail | 4.30265273 | |

| t-Test: Two-Sample Assuming Unequal Variances | DSM | BLM |
|---|---|---|
| Mean | 77.39 | 87.355 |
| Variance | 58.5362 | 0.18605 |
| Hypothesized Mean Difference | 0 | |
| df | 1 | |
| t Stat | −1.839039075 | |
| p (T ≤ t) one-tail | 0.158531543 | |
| t Critical one-tail | 6.313751515 | |
| p (T ≤ t) two-tail | 0.317063086 | |
| t Critical two-tail | 12.70620474 | |
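The t statistic of the unequal-variance (Welch) test reported in the tables above can be sketched with the standard library only. The sketch assumes that each sample consists of the two mean accuracies per approach for the binary task (HT and OBA for DSM, J48 and PART for BLM), which is consistent with the reported means and variances; the function name is hypothetical.

```python
import math
from statistics import mean, variance

def welch_t(sample_a, sample_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    na, nb = len(sample_a), len(sample_b)
    # Sample variance of each group divided by its size.
    va = variance(sample_a) / na
    vb = variance(sample_b) / nb
    t_stat = (mean(sample_a) - mean(sample_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t_stat, df

# Assumed samples: binary-class accuracies from the result tables.
dsm_binary = [98.38, 99.67]   # HT, OBA
blm_binary = [94.73, 92.83]   # J48, PART

t_stat, df = welch_t(dsm_binary, blm_binary)
```

The unrounded degrees of freedom come out slightly below 2; spreadsheet tools commonly round this value to the nearest integer, which would explain the df of 2 shown in the table.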

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Adewole, K.S.; Salau-Ibrahim, T.T.; Imoize, A.L.; Oladipo, I.D.; AbdulRaheem, M.; Awotunde, J.B.; Balogun, A.O.; Isiaka, R.M.; Aro, T.O. Empirical Analysis of Data Streaming and Batch Learning Models for Network Intrusion Detection. Electronics 2022, 11, 3109. https://doi.org/10.3390/electronics11193109
