A Hybrid Asynchronous Brain–Computer Interface Based on SSVEP and Eye-Tracking for Threatening Pedestrian Identification in Driving

Abstract

1. Introduction

2. Materials and Methods

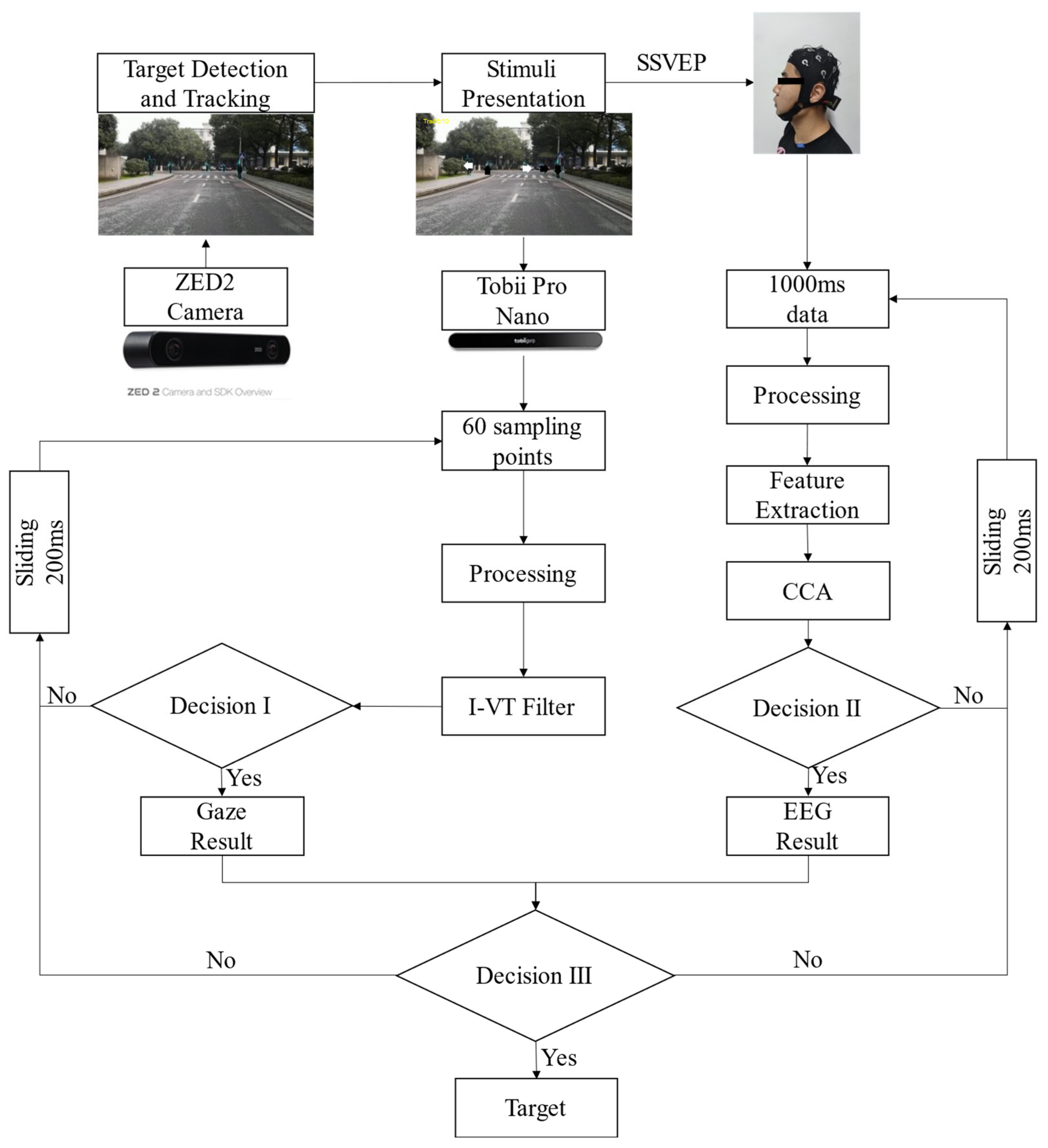

2.1. System Description

2.2. Target Detection and Tracking

2.3. Graphical Stimuli Interface

2.4. Participants

2.5. Signal Acquisition and Processing

3. Results

3.1. Evaluation Metrics

3.2. Performance of the Offline Experiment

3.3. Performance of Asynchronous Online Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Subject | S1 | S2 | S3 | S4 | S5 | S6 |
|---|---|---|---|---|---|---|
| Threshold | 0.56 | 0.62 | 0.51 | 0.47 | 0.45 | 0.39 |

| Subject | SSVEP Mean Time (s) | SSVEP Accuracy (%) | SSVEP ITR (bits/min) | Hybrid Mean Time (s) | Hybrid Accuracy (%) | Hybrid ITR (bits/min) |
|---|---|---|---|---|---|---|
| S1 | 1.9 + 0.5 | 95 | 48.39 | 1.2 + 0.5 | 95 | 68.31 |
| S2 | 1.8 + 0.5 | 100 | 60.57 | 1.0 + 0.5 | 100 | 92.88 |
| S3 | 2.0 + 0.5 | 95 | 46.45 | 1.3 + 0.5 | 100 | 77.39 |
| S4 | 1.9 + 0.5 | 90 | 41.32 | 1.4 + 0.5 | 95 | 61.12 |
| S5 | 2.3 + 0.5 | 80 | 25.71 | 1.6 + 0.5 | 90 | 47.23 |
| S6 | 2.2 + 0.5 | 85 | 31.38 | 1.5 + 0.5 | 95 | 58.07 |
| Mean | 2.02 + 0.5 | 90.83 | 42.30 | 1.33 + 0.5 | 95.83 | 67.50 |
| Std | 0.18 | 6.72 | 11.43 | 0.20 | 3.44 | 14.62 |
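The ITR column above is consistent with the standard Wolpaw information transfer rate, B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)) bits per selection, scaled by 60/T. Two inputs are assumptions inferred by back-calculating from the table rather than stated in this section: N = 5 selectable targets, and a time per selection T equal to the listed mean time plus the 0.5 s term. For example, S2 under SSVEP (100% accuracy, T = 1.8 + 0.5 = 2.3 s) gives log2(5) × 60 / 2.3 ≈ 60.57 bits/min. The minimal sketch below recomputes the ITR column and the Mean/Std rows under those assumptions; the names `N_TARGETS`, `EXTRA_TIME_S`, `wolpaw_itr` and `summarize` are hypothetical.

```python
import math
import statistics

# ASSUMPTIONS (inferred from the table, not stated in the text):
# N_TARGETS = 5 selectable targets, and the time per selection is the
# listed mean time plus the 0.5 s term shown in the "Mean Time" columns.
N_TARGETS = 5
EXTRA_TIME_S = 0.5

# (mean time in s, accuracy in %) per subject, copied from the table
SSVEP = {"S1": (1.9, 95), "S2": (1.8, 100), "S3": (2.0, 95),
         "S4": (1.9, 90), "S5": (2.3, 80), "S6": (2.2, 85)}
HYBRID = {"S1": (1.2, 95), "S2": (1.0, 100), "S3": (1.3, 100),
          "S4": (1.4, 95), "S5": (1.6, 90), "S6": (1.5, 95)}


def wolpaw_itr(n: int, p: float, t_s: float) -> float:
    """Wolpaw information transfer rate in bits/min.

    n: number of possible selections (n >= 2)
    p: classification accuracy in (0, 1]
    t_s: time per selection in seconds
    """
    if n < 2 or not 0.0 < p <= 1.0 or t_s <= 0.0:
        raise ValueError("need n >= 2, 0 < p <= 1 and t_s > 0")
    bits = math.log2(n)
    if p < 1.0:  # at p == 1 both penalty terms vanish
        bits += p * math.log2(p) + (1 - p) * math.log2((1 - p) / (n - 1))
    return bits * 60.0 / t_s


def summarize(results):
    """Per-subject ITR plus mean and population std (divide by n)."""
    itrs = {s: wolpaw_itr(N_TARGETS, acc / 100, t + EXTRA_TIME_S)
            for s, (t, acc) in results.items()}
    values = list(itrs.values())
    return itrs, statistics.mean(values), statistics.pstdev(values)


if __name__ == "__main__":
    for name, table in (("SSVEP", SSVEP), ("Hybrid", HYBRID)):
        itrs, mean, std = summarize(table)
        row = ", ".join(f"{s}={v:.2f}" for s, v in itrs.items())
        print(f"{name}: {row}; mean={mean:.2f}, std={std:.2f}")
```

Under these assumptions the recomputed values agree with the table to within rounding. The Std row is matched by `statistics.pstdev` (population standard deviation) rather than `statistics.stdev` (sample standard deviation), which suggests the table reports population statistics; for SSVEP accuracy, for instance, the sample version would give about 7.36 instead of 6.72.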


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, J.; Liu, Y. A Hybrid Asynchronous Brain–Computer Interface Based on SSVEP and Eye-Tracking for Threatening Pedestrian Identification in Driving. Electronics 2022, 11, 3171. https://doi.org/10.3390/electronics11193171
